Computing Environment

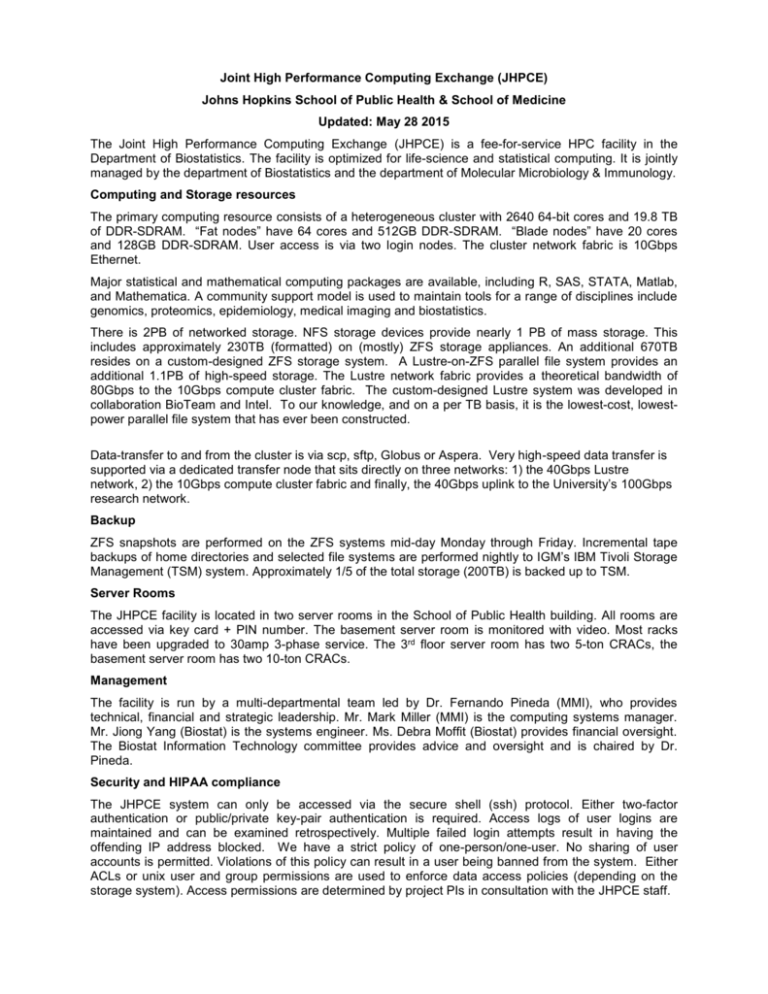

Joint High Performance Computing Exchange (JHPCE)
Johns Hopkins School of Public Health & School of Medicine
Updated: May 28 2015

The Joint High Performance Computing Exchange (JHPCE) is a fee-for-service HPC facility in the Department of Biostatistics. The facility is optimized for life-science and statistical computing. It is jointly managed by the Department of Biostatistics and the Department of Molecular Microbiology & Immunology.

Computing and Storage resources

The primary computing resource consists of a heterogeneous cluster with 2640 64-bit cores and 19.8 TB of DDR-SDRAM. “Fat nodes” have 64 cores and 512GB DDR-SDRAM. “Blade nodes” have 20 cores and 128GB DDR-SDRAM. User access is via two login nodes. The cluster network fabric is 10Gbps Ethernet.

Major statistical and mathematical computing packages are available, including R, SAS, STATA, Matlab, and Mathematica. A community support model is used to maintain tools for a range of disciplines, including genomics, proteomics, epidemiology, medical imaging and biostatistics.

There is 2PB of networked storage. NFS storage devices provide nearly 1 PB of mass storage. This includes approximately 230TB (formatted) on (mostly) ZFS storage appliances. An additional 670TB resides on a custom-designed ZFS storage system. A Lustre-on-ZFS parallel file system provides an additional 1.1PB of high-speed storage. The Lustre network fabric provides a theoretical bandwidth of 80Gbps to the 10Gbps compute cluster fabric. The custom-designed Lustre system was developed in collaboration with BioTeam and Intel. To our knowledge, and on a per TB basis, it is the lowest-cost, lowest-power parallel file system that has ever been constructed.

Data transfer to and from the cluster is via scp, sftp, Globus or Aspera.
Very high-speed data transfer is supported via a dedicated transfer node that sits directly on three networks: 1) the 40Gbps Lustre network, 2) the 10Gbps compute cluster fabric, and 3) the 40Gbps uplink to the University’s 100Gbps research network.

Backup

ZFS snapshots are performed on the ZFS systems mid-day Monday through Friday. Incremental tape backups of home directories and selected file systems are performed nightly to IGM’s IBM Tivoli Storage Management (TSM) system. Approximately 1/5 of the total storage (200TB) is backed up to TSM.

Server Rooms

The JHPCE facility is located in two server rooms in the School of Public Health building. All rooms are accessed via key card and PIN. The basement server room is monitored with video. Most racks have been upgraded to 30amp 3-phase service. The 3rd floor server room has two 5-ton CRACs; the basement server room has two 10-ton CRACs.

Management

The facility is run by a multi-departmental team led by Dr. Fernando Pineda (MMI), who provides technical, financial and strategic leadership. Mr. Mark Miller (MMI) is the computing systems manager. Mr. Jiong Yang (Biostat) is the systems engineer. Ms. Debra Moffit (Biostat) provides financial oversight. The Biostat Information Technology committee provides advice and oversight and is chaired by Dr. Pineda.

Security and HIPAA compliance

The JHPCE system can only be accessed via the secure shell (ssh) protocol. Either two-factor authentication or public/private key-pair authentication is required. Access logs of user logins are maintained and can be examined retrospectively. Multiple failed login attempts result in the offending IP address being blocked.

We have a strict policy of one-person/one-user. No sharing of user accounts is permitted. Violations of this policy can result in a user being banned from the system.

Either ACLs or Unix user and group permissions are used to enforce data access policies (depending on the storage system).
Access permissions are determined by project PIs in consultation with the JHPCE staff.

Policies and Cost recovery

Computing and storage resources are owned by stakeholders (e.g. departments, institutes or individual labs). Job scheduling policies guarantee that stakeholders receive priority access to their nodes. Stakeholders are required to make their excess capacity available to other users via a low-priority queue. Stakeholders receive a reduction in their charges in proportion to the capacity that they share with other users. This system provides a number of advantages: 1) stakeholders see a stable upper limit on the operating cost of their own resources, 2) stakeholders can buy surge capacity on resources owned by other stakeholders, and 3) non-stakeholders obtain access to high-performance computing on a low-priority basis with a pay-as-you-go model.

The SPH Dean and the Department of Biostatistics both provide institutional support to the JHPCE. Unsupported costs are recovered from users and stakeholders in the form of management fees.

Since 2007 we have employed a systematic resource and cost sharing methodology that has largely eliminated the administrative and political complexity associated with sharing complex and dynamic resources. Custom software implements dynamic cost-accounting and chargeback algorithms. Charges are calculated monthly, but billed quarterly. Eight years of operation have demonstrated that this is a powerful approach for fair and efficient allocation of human, computational and storage resources. In addition, the methodology provides strong financial incentives for stakeholders to refresh their hardware.
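
The chargeback idea described above (a charge reduced in proportion to shared capacity, calculated monthly and billed quarterly) can be illustrated with a minimal Python sketch. This is not the JHPCE accounting software, whose algorithms are not given here. The `Stakeholder` fields, the `base_fee` notion, the `max_discount` parameter and all figures are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Stakeholder:
    """Hypothetical monthly record for one stakeholder (illustrative only)."""
    name: str
    base_fee: float           # assumed monthly management fee before any reduction
    owned_core_hours: float   # capacity the stakeholder owned during the month
    shared_core_hours: float  # part of that capacity consumed by other users


def monthly_charge(s: Stakeholder, max_discount: float = 1.0) -> float:
    """Reduce the base fee in proportion to the fraction of capacity shared.

    max_discount is an assumed policy knob: 1.0 means a stakeholder who
    shares all capacity pays nothing; 0.5 would cap the reduction at 50%.
    """
    if s.owned_core_hours <= 0:
        raise ValueError("owned_core_hours must be positive")
    shared_fraction = min(s.shared_core_hours / s.owned_core_hours, 1.0)
    return s.base_fee * (1.0 - max_discount * shared_fraction)


def quarterly_bill(monthly_charges: list[float]) -> float:
    """Charges are computed each month and summed into one quarterly bill."""
    return sum(monthly_charges)


# Three hypothetical months for one lab: 25%, 50% and 0% of capacity shared.
months = [
    Stakeholder("lab_a", 1000.0, 10000.0, 2500.0),
    Stakeholder("lab_a", 1000.0, 10000.0, 5000.0),
    Stakeholder("lab_a", 1000.0, 10000.0, 0.0),
]
charges = [monthly_charge(m) for m in months]
print(charges)                  # [750.0, 500.0, 1000.0]
print(quarterly_bill(charges))  # 2250.0
```

Under these assumptions the month with no sharing is charged the full base fee, which is consistent with the stable upper limit on operating cost mentioned above.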