Evolutionary Strategy MAININI RiDOLFI

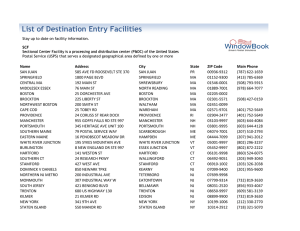


Apprendimento Mimetico: Evolutionary Strategy for the Resolution of the Rosenbrock Equation

Mainini, L.,1 and Ridolfi, G.2
Politecnico di Torino, Torino, Italy, 10100

1 PhD Student, DIASP, Corso Duca degli Abruzzi 24. laura.mainini@polito.it
2 PhD Student, DIASP, Corso Duca degli Abruzzi 24. guido.ridolfi@polito.it

The main objective of this paper is to describe the methodology developed by the authors to solve the Rosenbrock equation with 2, 5, 10, 20, 50, and 100 variables. The authors used an evolutionary strategy with a dynamic adaptation of the degree to which the variables of the problem mutate, i.e., $\sigma$. After a brief description of the problem in Section I, the main assumptions and the overall strategy are described in Section II. Results are presented in Section III; finally, conclusions and recommendations are provided in Section IV.

Nomenclature

$\mu$ = number of individuals of the parents population
$\lambda$ = number of individuals of the offspring population
$\sigma$ = standard deviation
$x_i$ = locus value
$D$ = number of loci

I. Introduction

The main objective is to develop an efficient methodology to solve the Rosenbrock equation using 2, 5, 10, 20, 50, and 100 variables, comparing the results and analyzing the general trends.

$$f_D(X) = \sum_{i=1}^{D-1} \left[ 100 \left( x_i^2 - x_{i+1}^2 \right)^2 + \left( 1 - x_i \right)^2 \right] \qquad (1)$$

In (1), the vector $X$ is $X = (x_1, x_2, \ldots, x_D)$, with $D \in \{2, 5, 10, 20, 50, 100\}$. The solution of the problem is known, and it has been used to test the performance of the methodology developed. The solution vector is $X = (1, 1, \ldots, 1)$, with $f_D(X) = 0$. A solution $X$ such that $f_D(X) \le 10^{-6}$ is considered sufficiently satisfactory.

II. Methodology and trade-offs

A. Main Algorithm

The authors decided to adopt a comma strategy, $(\mu, \lambda)$. The values of $\mu$ and $\lambda$ were selected following the most widely adopted choices found in the literature. In particular, $\mu = 5$ and $\lambda = 30$ were selected as a first attempt, and $\mu = 10$ and $\lambda = 100$ as a second attempt, in order to understand the trends.

The Rosenbrock function (1) is the fitness function used to evaluate and rank the solutions. A tournament selection has been designed to select the parents, one for each element of the offspring population: three individuals are drawn out of $\mu$, and the best one, evaluated via the fitness function, is selected to take part in the parents population. The tournament is repeated $\lambda$ times.

Each individual is made of $D$ loci: real numbers between -100 and 100. The value of $\sigma$ used to mutate each locus of the parents population is generated together with the population; it is the same for each locus and each individual. The value of $\sigma$ is modified at each generation according to the 1/5 success rule:

$$\sigma = \begin{cases} \sigma / c & \text{if } p > 1/5 \\ \sigma \cdot c & \text{if } p < 1/5 \\ \sigma & \text{if } p = 1/5 \end{cases} \qquad (2)$$

where $p$ denotes the success rate of the mutations. The value of $c$ has been set to 0.8. Each locus of the parents is evolved using (3):

$$x_i' = x_i + \sigma \, N(0, 1) \qquad (3)$$

The new children population is made of the best $\mu$ individuals out of $\lambda$. The values of $c$, $\mu$, and $\lambda$ were selected after some experiments, looking at the overall convergence speed of the evolutionary strategy. Those experiments showed a strong sensitivity of the convergence to the value of $\sigma$. In particular, when using the 1/5 success rule alone, a fast reduction of $\sigma$ was observed even when far from the optimum.
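The building blocks of Section II.A can be sketched in a few lines of Python. This is a minimal illustration and not the authors' code. The function names, the use of Python's `random` module, drawing the three tournament contenders without replacement, and counting a mutation as a success when the child beats its own parent are all assumptions. The fitness follows the reading of (1) in which $x_{i+1}$ enters squared (the textbook Rosenbrock function uses $x_{i+1}$ unsquared).

```python
import random


def rosenbrock(x):
    """Fitness (1), read here with x_{i+1} squared (an assumption about the source)."""
    return sum(100.0 * (x[i] ** 2 - x[i + 1] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))


def tournament(fit, rng, k=3):
    """Draw k distinct individuals and return the index of the one with lowest fitness."""
    contenders = rng.sample(range(len(fit)), k)
    return min(contenders, key=lambda j: fit[j])


def mutate(x, sigma, rng):
    """Equation (3): x_i' = x_i + sigma * N(0, 1), with one sigma shared by all loci."""
    return [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]


def one_fifth_rule(sigma, p, c=0.8):
    """Equation (2): enlarge sigma if p > 1/5, shrink it if p < 1/5, else keep it."""
    if p > 0.2:
        return sigma / c
    if p < 0.2:
        return sigma * c
    return sigma


def generation(parents, sigma, lam, rng, fitness=rosenbrock):
    """One (mu, lambda) generation.

    parents is a list of (fitness_value, loci) pairs.
    Returns (new_parents, new_sigma).
    """
    mu = len(parents)
    fit = [f for f, _ in parents]
    offspring, successes = [], 0
    for _ in range(lam):                       # lambda tournaments, one per child
        j = tournament(fit, rng)
        child = mutate(parents[j][1], sigma, rng)
        f_child = fitness(child)               # one fitness call per child
        successes += f_child < fit[j]          # assumed definition of a success
        offspring.append((f_child, child))
    offspring.sort(key=lambda pair: pair[0])   # comma strategy: old parents are discarded
    return offspring[:mu], one_fifth_rule(sigma, successes / lam)
```

A run would start from $\mu$ individuals with loci drawn uniformly in [-100, 100], pair each with its fitness, and call `generation` repeatedly until the best fitness drops below $10^{-6}$. The initial value of $\sigma$ is not stated in the text and has to be chosen by the user.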
[Figure 1: Rosenbrock function for D=2, 5, 10, 20, main algorithm running. Four panels ("1st algorithm Rosenbrock", one per D) plot the fitness value on a logarithmic axis against iterations, with iteration axes extending to 300, 800, 3000, and 9000 respectively.]

[Figure 2: Loci behavior for D=2, 5, 10, 20, main algorithm running. Four panels ("1st algorithm Best value", one per D) plot the loci of the best individual on a logarithmic axis against iterations.]

B. Modified Algorithm

In order to accelerate the convergence, the authors improved the previous algorithm by adding a control on the fitness value and a consequent update of the value of $\sigma$ when the fitness value stagnates. Specifically, $\sigma$ is multiplied by a random value between 2 and 4 when the fitness evaluated on the best individual does not improve beyond a certain threshold, (4):

$$\left| best_i - best_{i-1} \right| < 0.01 \cdot best_{i-3} \qquad (4)$$

where $best_i$ is the fitness of the best individual at generation $i$.

[Figure 3: Rosenbrock function for D=2, 5, 10, 20, modified algorithm running. Four panels ("2nd algorithm Rosenbrock", one per D) plot the fitness value on a logarithmic axis against iterations, with iteration axes extending to 250, 700, 1800, and 6000 respectively.]

[Figure 4: Loci behavior for D=2, 5, 10, 20, modified algorithm running. Four panels ("2nd algorithm Best value", one per D) plot the loci of the best individual on a logarithmic axis against iterations.]

[Figure 5: Number of fitness evaluations for the different quantities of variables (loci D). The plot shows the number of fitness calls (in units of $10^5$) against the number of loci D, from 2 to 20, for the 1st and the 2nd algorithm.]

III. Results

Table 1 collects the number of fitness calls needed to reach convergence and the loci values found at convergence. No results are available for D=50 and D=100: for those numbers of variables neither of the implemented algorithms converges, and both keep evaluating with endless oscillations.
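The fitness-call counts reported in this section can be gathered with a simple counting wrapper, sketched below. The class name, the stand-in fitness function, and the placeholder individual are hypothetical and serve only to exercise the counter. The paper does not state how the calls were counted; the reported totals are all multiples of $\lambda$ (for example, 25100 = 251 × 100), which is consistent with one fitness call per offspring per generation, and the sketch adopts that reading as an assumption.

```python
class CountingFitness:
    """Wrap a fitness function and count how many times it is called."""

    def __init__(self, func):
        self.func = func
        self.calls = 0

    def __call__(self, x):
        self.calls += 1
        return self.func(x)


def sphere(x):
    """Hypothetical stand-in fitness, used only to exercise the counter."""
    return sum(v * v for v in x)


def calls_after(generations, lam):
    """Evaluate lam offspring per generation and report the total call count."""
    counted = CountingFitness(sphere)
    for _ in range(generations):
        for _ in range(lam):
            counted([0.0, 0.0])    # placeholder individual
    return counted.calls
```

Under this assumption, `calls_after(251, 100)` reproduces the order of magnitude of a run that stops after a few hundred generations with $\lambda = 100$.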
Table 1: Loci values when convergence is reached

| D | Quantity | Main Algorithm | Modified Algorithm |
|---|---|---|---|
| 2 | Loci values | 1.0001, -1.0001 | 0.9994, 0.9993 |
| 2 | Fitness calls | 25100 | 23400 |
| 5 | Loci values | 0.9995 (all 5 loci) | 0.9995 (loci 1 to 4), -0.9995 (locus 5) |
| 5 | Fitness calls | 72900 | 69100 |
| 10 | Loci values | 0.9997 (all 10 loci) | 0.9997 (all 10 loci) |
| 10 | Fitness calls | 299400 | 177000 |
| 20 | Loci values | 0.9998 (loci 1 to 19), -0.9998 (locus 20) | 0.9998 (all 20 loci) |
| 20 | Fitness calls | 865500 | 554400 |
| 50 | | - | - |
| 100 | | - | - |

In some runs the last locus converges to a value close to -1 instead of 1; this is consistent with (1), where the last locus $x_D$ enters only through its square.

Table 2 presents the results in terms of number of fitness evaluations. Dynamically adapting the value of $\sigma$ clearly leads to an advantage: faster convergence on a given PC. With $\mu = 10$ and $\lambda = 100$, the number of fitness evaluations drops from 299400 to 177000 for D=10 (about 41%) and from 865500 to 554400 for D=20 (about 36%). Furthermore, for this particular problem, a strategy with $\mu = 5$ and $\lambda = 30$ enables an even faster convergence, i.e., a lower number of fitness evaluations.

Table 2: Strategies comparison (number of fitness evaluations)

| D | Main Algorithm, $(\mu, \lambda)$ = (10,100) | Modified Algorithm, (10,100) | Modified Algorithm, (5,30) |
|---|---|---|---|
| 2 | 25100 | 23400 | 9000 |
| 5 | 72900 | 69100 | 50000 |
| 10 | 299400 | 177000 | 125000 |
| 20 | 865500 | 554400 | 350000 |
| 50 | - | - | - |
| 100 | - | - | - |

IV. Conclusions

A simple comparison between Figures 1 and 3 reveals the considerable number of crests and peaks in the Rosenbrock evaluation for the modified algorithm. Such crests are footprints of the variance updates that follow the fitness stagnations. Figures 2 and 4 show the behavior of the loci, which settle at 1 when convergence is reached. Figure 5 shows that the modified algorithm works better than the main one: it requires noticeably fewer fitness evaluations and converges faster, owing to the more dynamic adaptation of $\sigma$ provided by the update.
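The variance update behind the crests discussed above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation. The function names are hypothetical. Condition (4) is read here as $|best_i - best_{i-1}| < 0.01 \cdot best_{i-3}$; the absolute value and the strict inequality are assumptions. The random factor is drawn uniformly between 2 and 4, whereas the text only says "a certain random value between 2 and 4".

```python
import random


def stagnated(best_history, tol=0.01):
    """Condition (4), as read here: |best_i - best_{i-1}| < tol * best_{i-3}.

    best_history holds the best fitness of each generation, oldest first.
    """
    if len(best_history) < 4:
        return False                 # not enough generations to evaluate (4)
    b_i, b_im1, b_im3 = best_history[-1], best_history[-2], best_history[-4]
    return abs(b_i - b_im1) < tol * b_im3


def boost_sigma(sigma, best_history, rng):
    """Multiply sigma by a random factor in [2, 4] when (4) signals stagnation."""
    if stagnated(best_history):
        return sigma * rng.uniform(2.0, 4.0)   # uniform draw is an assumption
    return sigma
```

In a full run, `boost_sigma` would be applied once per generation, after the 1/5 success rule (2) has updated $\sigma$; the order of the two updates is not specified in the text.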