EAC_paper-ResubmitFinal

advertisement

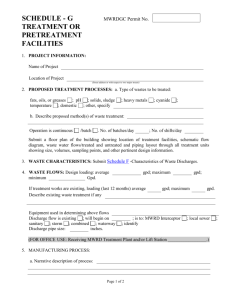

Probability Models for Estimating the Probabilities of

Cascading Outages in High Voltage Transmission Network

Qiming Chen, Member, IEEE, Chuanwen Jiang, Member, IEEE,

Wenzheng Qiu, Member, IEEE, James D. McCalley Fellow, IEEE

Abstract — This paper discusses a number of probability

models for multiple transmission line outages in power systems,

including generalized Poisson model, negative binomial model

and exponentially accelerated model. These models are applied to

the multiple transmission outage data for a 20-year period for

North America.

The probabilities of the propagation of

transmission cascading outage are calculated. These probability

magnitudes can serve as indices for long term planning and can

also be used in short-term operational defense to such events.

Results from our research shows that all three models apparently

explain the occurrence probability of higher order outages very

well. However, the Exponentially Accelerated Model fits the

observed data and predicts the acceleration trends best. Strict

chi-squared fitness tests were done to compare the fitness among

these three models and the test results are consistent with what we

observe.

Index Terms—exponentially accelerated cascading, negative

binomial distribution, generalized Poisson distribution, power law,

high-order contingency, cascading, blackouts, rare events,

multiple transmission outages.

I. INTRODUCTION

T

he high order contingencies in power systems are of great

interest nowadays because of their potential to cause huge

losses and the advances in computing technology make the

on-line analysis of high order contingencies possible[1][2].

The word “high-order” here means loss of multiple elements

during a short time period in power systems. Such events are

usually of lower probability than N-1 events which means loss

of a single element in power systems. If power systems are

weakened due to losses of more than one transmission line,

what would be the probability that another transmission line

trips? It is difficult to calculate or estimate their probability due

to their few occurrences. However, it would be very useful to

predict the likelihood of those events. For example, it would

help engineers in the transmission and generation planning

process, where capital investments in new facilities must be

weighed against the extent to which those facilities reduce risk

associated with contingencies. This could also help system

operators to estimate and evaluate network security in

operations, for control-room decision-making. Here, preventive

actions, which cost money and are routinely taken in

anticipation of N-1 events, are not reasonable for a rare event,

Qiming Chen is a transmission planning engineer with PJM Interconnection

Valley Forge, PA 19403, USA. (e-mail: qmchen@ieee.org ).

Chuanwen Jiang is with the Department of Electrical Engineering, Shanghai

Jiaotong University, Shanghai, 200030, China. (email: jiangcw@sjtu.edu.cn)

Wenzheng Qiu is a generation interconnection planning engineer with PJM

Interconnection Valley Forge, PA 19403, USA. (e-mail: qiuw@pjm.com ).

James D. McCalley is professor of Electrical and Computer Engineering at

Iowa State University, Ames, IA 50011, USA. (e-mail: jdm@iastate.edu).

since the certain cost of the preventive action cannot be

justified for the event that is so unlikely. Given that the number

of rare events is excessively large and it is neither possible nor

necessary to do analysis for all of them, to prioritize the events

becomes crucial for on line analysis. The best way to prioritize

event is by risk, which is the expected impact by definition.

However, considerable computation would be needed to find

out the impact. Another way to prioritize is by event

probability, assuming the impacts of events are of about the

same magnitude. The event with highest possibility will be

“computed” next in developing operational defense procedures.

Ways to estimate the probabilities of power system rare

events include fitting an existing probability model to historical

data, deriving the overall probability by system structure and

individual components; and using Monte Carlo simulation.

Dobson in [3] proposed the use of power law to model the

occurrences of large disturbances recorded in [4]. Later on, a

number of probability distributions, which are variants of

quasi-binomial distribution and generalized Poisson

distribution (GPD) [5], were proposed in [6]-[8]. Reference [9]

presents work done by importance sampling to expose hidden

failure. In this paper, a new model for forecasting the

probability of high order contingencies, exponentially

accelerated cascading (EAC), is proposed and it was compared

with negative binomial model [11] and generalized Poisson

model. There are different metrics in characterizing power

system rare events, including the number of customers

interrupted, power interrupted, energy not served, and the

number of elements lost (i.e. N-1, N-2,…, where N-K means the

lost of K transmission line in power system network). The latter

one is employed in our probability model because it better

conforms to planning and operating reliability criteria used in

the industry. For example, reliability standards performance

criteria are often categorized based on the number of elements

lost.

Although it is difficult to obtain the first hand statistical data

from the power industry, the survey in [12] provides a good

resource for academic research. All the conclusions and

models from this paper are based on the large amount of actual

statistical data gathered in [12]. This paper focuses on

analyzing the fitness of a number of general probability models

for the likelihood of transmission outages. It is not intended for

any specific class of transmission outages, nor does it point out

the methodology to identify and defend those contingencies.

Readers interested in this may refer to [1][2][13][14] for further

information.

Section II of this paper gives a preliminary analysis of the

transmission line outage for the past 20 years from 1965 to

1985. Section III describes three possible probability models:

negative binomial (NB), generalized Poisson distribution

(GPD)[1] and exponentially accelerated cascading (EAC). The

EAC model is developed in this paper specifically for

cascading transmission outages. Unlike the NB and GPD

models, it has not been found in any other statistical text.

Section III uses the maximum likelihood estimation to estimate

parameters for the three models. The tail behaviors of the

models are compared in Section V. Section VI uses a

chi-squared test to compare the fitness among the three models.

Section VII concludes and discusses.

II. TRANSMISSION OUTAGE STATISTICS

Ref. [12] is a detailed resource for power system reliability

investigators considering the scale of the survey and the

difficulty of obtaining statistics on power systems from

different sources. The statistic data analyzed in this paper is the

total number of elements lost in each contingency in North

America from 1965 to 1985 [12], as indicated in TABLE I and

Fig. 1. The last two columns give a summary. According to

[12], the data reported in TABLE I, whenever an event involves

components of different voltage levels, it will be counted as one

instance only with a specific voltage level.

TABLE I

High order transmission outages statistics

An IEEE survey of US and Canadian overhead

transmission outages at 230kv and above, 1965-1985, [12]

Number of Contingences By Line Voltage Levels

Cont.

Type

Total No & Perc

230kv

345kv

500kv

765kv

N-1

3320

5807

721

295

10143

89.85%

N-2

303

577

35

36

951

8.42%

N-3

39

99

3

2

143

1.27%

N-4

18

16

0

2

36

0.32%

N-5

7

1

0

0

8

0.07%

N-6

0

1

0

1

2

0.02%

N-7

3

1

0

0

4

0.04%

N-8

2

0

0

0

2

0.02%

10

100

1

N-1

N-2

1000

10000

TABLE II

Conditional Probability of N-K contingencies

Cont.

Accru. No

Conditional

Prob (%)

Increasing

Rate

K ≥1

11289

K ≥2

1146

C1=10%

-

K ≥3

195

C2=17%

C1/C2=170.0%

K ≥4

52

C3=27%

C2/C3=158.8%

K ≥5

16

C4=31%

C3/C4=114.8%

K ≥6

8

C5=50%

C4/C5=161.3%

K ≥7

6

C6=75%

C5/C6=150.0%

K ≥8

2

C7=33%

-

-

-

The data is rearranged in TABLE II to obtain conditional

probabilities conveniently. The second column of TABLE II

counts the number of events with more than k lines outaged.

For example, there are a total of 11289 events that involve at

least one line outage, and 11289 is just the summation of the

number of all outages listed in TABLE I. 1146 is the total

number of events that involve at least two lines and so on. The

last column of TABLE II lists the estimated conditional

probability of N-K contingencies derived from the sixth column

of TABLE I. They are calculated by the formula as follows:

Pr( K k 1)

Ck Pr( K k 1 K k )

(1)

Pr( K k )

The estimate of Pr(K k 1 K k ) can be found by simply

replacing Pr( K k 1) and Pr( K k 1) with the occurrence

frequencies of the events K ≥ i and K ≥ k+1, respectively. Fig. 2

illustrates the relationship between Ck and k. Fig. 2 shows that

the probability of any transmission outage that involves loss of

more than one line is 10%, a value significantly larger than that

commonly assumed. If North America power system loses 2

transmission lines and there is no other information considering

the timing, causes, location of the event, the probability that it

loses at least an additional line would be 17%, which is almost

doubled compared to 10%. If the system loses 6 lines, the

chance of losing another line is almost certain. This means that

if a system is already in a weakened condition, the probability

that it continues weakening would keep increasing until the

event develops into a system-wide blackout.

N-3

0%

10% 20% 30% 40% 50% 60% 70% 80%

N-4

N-5

N-6

N-7

N-8

Fig. 1. log{Pr(k)} v.s. N-k plot

Pr(K ≥ 2/ K ≥ 1)

Pr(K ≥ 3/ K ≥ 2)

Pr(K ≥ 4/ K ≥ 3)

Pr(K ≥ 5/ K ≥ 4)

Pr(K ≥ 6/ K ≥ 5)

Pr(K ≥ 7/ K ≥ 6)

Pr(K ≥ 8/ K ≥ 7)

Fig. 2. Pr(K ≥ k / K ≥ k) v.s. k

The increasing in conditional probability Ck with k is

understandable. The loss of one element immediately raises the

likelihood of losing another element, which has a similar effect,

and so on. A fault and the follow-up relay trip of the

component(s) cause transient oscillations throughout the power

system and make other protection devices more likely to

operate. The forced outage of one generator or line changes the

power flow pattern, and some circuits, being more loaded, may

trip either by proper or unintended protection operation. The

more severe the previous event is, the more likely an additional

event will follow. This tendency might be modeled statistically

using a number of probability distributions, such as Poisson

model, negative binomial model, power law, and GPD

[2]-[3][6]-[9].

Some caution must be taken when using the data in [12] to

draw a conclusion. First, a few utilities in the survey reported

their transmission contingencies by single line outages [12]. If

an event involved the loss of three lines, it was reported as three

different single outages. In order to prevent a multiple line

outage event from being counted as several single line outage

events, the survey processed the data provided by those

utilities. Outages reported by those utilities with identical

initiating timing, i.e., occurring within one minute, were

considered as one contingency with multiple line outages. All

other utilities reported outages by events, i.e., multiple

transmission outages in a single event were reported as one

instance of outage. Second, it appears that some huge

blackouts that outaged many lines, for example, the

Northeastern US blackout of 1965 [15], were not correctly

registered in the statistics, since the last stage of this event

obviously outaged more than 8 lines. In order to mitigate the

uncertainty in data error, all the outages that involve the loss of

more than 7 lines are grouped into a single category, i.e., K ≥ 7.

The number of events in this category is 4 + 2 = 6 and

constitutes about 0.06% of total observed events. In order to

mitigate the impact that would arise because of the possible

inaccuracy, all the discussion that follows will be based on this

treatment.

III. THREE DISCRETE PROBABILITY MODELS FOR

INTERDEPENDENT EVENTS

We have shown in [11] that both Poisson Model and Power

Law model are not desirable for transmission outages because

the former underestimates the interdependence among

transmission outages and the later overestimates. Generalized

Poisson model (GPD) is first proposed to model

over-dispersion [5] (variance greater than mean - note the

variance and mean are equal for Poisson). There is evidence

supporting the choice of GPD for the distribution of

transmission line outages on small test systems [4][7].

This section introduces a new model: exponentially

accelerated cascading model (EAC), which is specifically

proposed for cascading transmission outages. The cluster

model in [11], which is actually Negative Binomial model (NB)

and gives the best among the three models in [11] fitting for the

transmission outage statistics of TABLE I, will be re-discussed

as well.

A. Negative binomial distribution

The negative binomial distribution (NB) is an established

model for rare events such as car accidents [10][16], where

interdependence between events or over-dispersion in a data set

exists. We have presented in [11] that it is a better model for

transmission line outage than Power Law model and Poisson

model. The pdf (probability density function) of NB is given

by

( 1 k 1)

Pr K k ,

(k )( 1 ) 1

k 1

1

1

1

(2)

where k 1, 2, ...

The mean and variance of NB are given by

E( K ) 1

(3)

Var ( K ) 2

Note the sample space of K is {1, 2, 3,…} instead of {0, 1, 2,

…} here, which is different from the usual way of defining NB.

We do so because we want the sample space to be consistent

with the number of lines outaged in power systems.

B. Generalized Poisson distribution (GPD) model

A generalized Poisson distribution is given by

(k 1)

k 2

Pr( K k , )

(k 1)!

where k 1, 2, 3 ..., and 0

exp (k 1) ,

(4)

where 1 > θ > 0 and λ > 0.

There is also a GPD defined for θ ≥ 1. However, that is not

discussed here in order to limit the scope of this paper. When θ

= 0, the GPD degrades to a regular Poisson distribution with

parameter λ.

The mean and variance of GPD are given by

E( K ) (1 ) 1 1

(5)

Var ( K ) (1 ) 3

(6)

For the same reason as we stated for negative binomial

distribution, we use {1, 2, 3…} instead of {0, 1, 2…} for the

sample space of K.

C. Exponentially Accelerated cascading model (EAC)

This model is based on the observation that there is an

increasing trend of conditional probabilities in TABLE II and

Fig. 2 approximately following a potential exponential

relationship.

Note that the ratios of the conditional

probabilities in the last column of TABLE II vary in the vicinity

of 150%. The exponential accelerated cascading (EAC) model

proposed here assumes that the probability of another or more

transmission outage(s) follows an exponential function of the

number of lines already lost in the system. Denote the

conditional probability Pr(K k 1 K k ) as Ck , then

Ck 1 Ck , where is a constant and 1 (7)

Suppose after N-1 contingency happens, the probability that

one

or

more

line

outage

happens

with

probability p C1 Pr(K 2 K 1) , then

C k p k 1

(8)

Pr( K k ) Pr( K 2 K 1) Pr( K 3 K 2)

Pr( K k ) Pr( K k 1)

C1 C2 Ck 1

p p 1 p 2 p k 1

p k 1

by

differentiation

and

necessary to use the log form to process it in computer. Since

all the N-K contingencies with k≥ 7 in TABLE II are grouped

due to their potential inaccuracy, the log likelihood formula

here for the data set is given by

log L( 1 ,, m k1 ,, k n )

n log Pr( K k ,, ) N log Pr( K 7 ,, )

k

1

m

7

1

m

k{1, 2 ,, 6}

(9)

Pr(K k ) Pr(K k ) Pr(K k K k )

Pr(K k ) 1 Pr(K k 1 K k )

p k 1

L(1 ,, m x1 ,, xn )

of

L(1 ,, m x1 ,, xn ) is sometimes extremely small, it is

( k 1)( k 2 )

2

( k 1)( k 2 )

2

that

1 p

k 1

(10)

(12)

where nk is the number of N-k contingencies and N7 is the

number of N-K contingencies with K ≥ 7.

Equation (12) will be applied to all three models in this paper.

The sample spaces of the NB and GPD models shift from the

usual {0, 1, 2, …} to {1, 2, 3 …}, so N-1 events correspond to

the events K = 1 in NB and GPD models and so on .

TABLE III

A. Estimating the parameters of Negative Binomial model

Conditional Probability of N-K contingencies

Cont.

k

K≥k

Count

Cond.

Prob

(%)

Ck

Pr( K k )

Pr( K k )

1

11289

-

p 0

p0 0 1

p 0 0 (1 p )

2

1146

10%

p 1

p 0

p 1 0 (1 p )

3

195

17%

p 2

p2 1

p 2 (1 p 2 )

4

52

27%

p 3

p3 3

p 3 3 (1 p 3 )

5

16

31%

p 4

p4 6

p 4 6 (1 p 4 )

50%

p

6

k≥7

8

6

75%

5

-

5

p

10

p (1 p )

p

15

-

6

5

10

Substitute the pdf Pr k , of NB in (2) into the log

5

In equation (8), Ck increases with k without bound. It could

go to infinity. However, Ck must be less than or equal to one

because it represents a probability. To solve this apparent

inconsistency, this model assumes that the exponential law only

valid up to k = 6 and p 5 1 . If a system loses 7 lines in a

sequence for one outage, the system is considered collapsed

and there is no need to count further lost lines for statistical

purpose.

Note in the above derivation, the condition K 1 is omitted,

since all the discussion here assumes that there is already a

contingency, that is, Pr( K 1) 1 .

likelihood formula (12) to get

1

k 1

( 1 k 1) 1

Log L N k log

k 1,...,6

(k )( 1 ) 1 1

1

k 1

( 1 k 1) 1

6 log 1

1

1

1

k 1,...,6 (k )( )

(13)

The MLE Estimation for Negative Binomial model is

equivalent to finding the and that maximize (13).

Numerical technique is needed to find the global maximum

(ˆ , ˆ) because there is no close form available for (ˆ , ˆ) .

Fig. 3 plots the contour graph of ( , ) for likelihood. With

the help of this plot, the optimal (ˆ , ˆ) is found out to be

(2.675, 0.1225).

0.128

0.126

(2.675, 0.1225)

0.124

0.122

IV. MAXIMUM LIKELIHOOD ESTIMATION OF PARAMETERS

0.120

The log maximum likelihood estimation (MLE) [13] is given

by

log L(1 , , m k1 , , k n ) log Pr( k i 1 ,, m )

(11)

i{1, 2 ,,n}

= (1, 2, … ,m) and x = (k1, k2, …, kn) are defined as the

distribution parameter vector and variable vector, respectively.

The that maximizes L( | x) is called a MLE of the parameter

, denoted as ˆ . It should be noted that ˆ must be a global

maxima. Because it is easier to find the maxima of (11) than

0.118

0.116

2

2.2

2.4

2.6

2.8

3

Fig. 3. Contour plot of likelihood function (13): NB Model

3.2

B. Estimating the parameters of generalize Poisson model

The log likelihood formula for the GPD model is given by

Log L

(k 1)

k 2

k 1,...,6

N k log

k!

Pr K k 2.675 , 1.1225

exp (k 1)

k 2

(k 1)

6 log 1

exp (k 1)

(k 1)!

k 1,...,6

(14)

The maximum likelihood estimate of θ is given by the

solution of (14), assuming no censored data, according to [1]

(k 2)( k 1)

c

ni

n(k 1) 0

k 1

(k 1) (k k )

(15)

(k 1)(1 )

However, (15) is only correct for uniform sample data with k

known for each of the observed events. Since the sample data

available is heterogeneous because of the incomplete

observablity of N-K events (K ≥ 7), it is not convenient to use

(15) to get the maximum (ˆ, ˆ) . Inspection o f the contour

graph in Fig. 4 yields the MLE of (ˆ, ˆ ) to be (0.108, 0.155).

( 1 k 1)

(k )( 1 )

k 1

1

1

1

1

(17)

(k 1)

k 2

Pr( K k 0.108 , 0.155 )

(k 1)!

exp (k 1)

(18)

Pr( N k p 0.10295 , 1.515 ) p k 1

( k 1)( k 2 )

2

1 p

k 1

(19)

1.56

β

1.55

1.54

0.10295, 1.515

1.53

1.52

1.51

θ

1.5

1.49

0.18

0.17

1.48

0.1

(0.108, 0.155)

0.1005 0.101 0.1015 0.102 0.1025 0.103 0.1035 0.104 0.1045 0.105

p

0.16

Fig. 5. Contour plot of likelihood function (16) (EAC Model)

0.15

The three models above are evaluated for k = {1,2,3,4,5,6}

and k ≥ 7, and the results are shown in TABLE IV. These

results are also plotted in Fig. 6. By inspecting Fig. 6, it can

conclude that all three models reasonably predict the observed

data as all of them fits very well for k=1,…,6. A careful

examination of TABLE IV shows that for the N-K events with k

> 6, the EAC model predicts the occurrence of these extreme

events far more accurate than the other two models. EAC gives

0.00061 for the observed 0.00053, while the other two give

3.53E-05 and 9.16E-05, which are 10 times lower. Fig. 6 uses

log-scale for probabilities, so it shrinks the apparent difference

between three models. The strict statistic index χ2 is employed

in Section VI to do further comparison for the three models.

0.14

0.13

0.12

0.1

0.102

0.104

0.106

0.108

0.11

0.112

λ

Fig. 4. Contour plot of likelihood function

(14): GPD Model

C. Estimating the parameters for exponentially accelerated

cascading model

For the EAC model.

LogL( p, n1 , n2 ,, n6 , N 7 )

nk Log p ( k 1) ( k 1)( k 2 ) 2 1 p k 1 6 log p 71 ( 71)( 72 ) 2

6

TABLE IV

Comparing the fitness of three different probability models for the distribution

of observed multiple line outages

Cont.

k

Count

Observed

NB

GPD

EAC

N-1

1

10143

0.8985

0.8995

0.8976

0.8971

N-2

2

951

0.08424

0.08299

0.0830

0.08689

N-3

3

143

0.01267

0.01407

0.01487

0.01226

N-4

4

36

0.003189

0.00275

0.00334

0.00244

N-5

5

8

0.00071

0.00057

0.000842

0.00062

N-6

6

2

0.00018

0.000124

0.000228

0.00013

6

0.00053

3.52E-05

9.16E-05

0.00061

k 1

6

6 (k 1)( k 2)

nk (k 1) Log( p )

nk Log ( )

k 1

2

k 1

n

6

k 1

k

log(1 p

k 1

) 36 log( p ) 90 log( )

(16)

Inspecting contour graph Fig. 5 yields ( pˆ , ˆ ) = (0.10295,

1.515).

N-K,K > 6

1

Observed

Probability

Exponential Accelerated Cascading

24.7%

1

exp(1 ) 36.1%

(21)

(22)

Negative Binomial

0.1

Generalized Poisson Model

40%

35%

Pr( K ≥ k+1 / K ≥ k )

0.01

0.001

Asymptotic Line P=36.1%

30%

25%

20%

Assuming an N-2 contingency, the probability

that a next contingency happens increased to 19%

15%

10%

Assuming an N-1 contingency, the probability

that a next contingency happens is 10%

5%

0%

0.0001

1

3

2

4

5

6

7

8

9 10

1

i

2

3

4

5

6

7

8

9 10 11 12 13 14 15 16 17 18 19

Number of Line Out (k)

Fig. 6. The log-log plot of PDF for NB, GPD and EAC models

Fig. 8. Propagation of cascading sequence accelerates: GDP Model

V. TAIL BEHAVIORS OF THE MODELS

With the three models discussed in the last section, the

conditional probabilities can be obtained by

1 Pr( K j )

Pr( K k 1)

j k 1

Pr( K k 1 K k )

Pr( K k )

1 Pr( K i)

i k

(20)

Fig. 7 shows that there is an increasing accelerating trend for

the probability of occurrence of next events with the number of

lines already lost. However, the acceleration rate decreases

continuously and stabilizes at 24.7%.

The conditional probability for the GPD model can be

calculated the same way as the NB model. Fig. 8 shows that the

GPD model is similar to NB model in that both of them give the

accelerating trend of cascading transmission line outages and

the acceleration rate converges as k becomes large, except that

the GPD model converges to probability 36.1%, which is much

more larger than the value 24.7% in NB.

Fig. 9 plots the conditional probabilities in (20) for the EAC

model. The values approximate the actually observed values

properly, with the observed values less than the EAC model for

k ≤ 3 and greater for k ≥ 4. When k ≥ 7, it can be judged from

the increasing trend that the subsequent event happens with

certainty, a prediction supported by the statistics in TABLE I.

Fig. 10 plots all the conditional probability from the three

ideal models and the actual data together in one graph. All

three models match the actual data closely when k≤3. When

k≥4, the NB and GPD models start to deviate from the observed

values and the EAC model keeps following.

0.9

Exponential Accelerated Cascading (EAC)

0.8

Observed conditional probability

0.7

0.6

0.5

0.4

30%

P(K ≥ k+1 / K ≥ k)

25%

Asymptotic Line P=24.7%

0.3

0.2

20%

Assuming an N-4 contingency, the probability

0.1

15%

that a next contingency happens is more than 30%

Assuming an N-2 contingency, the probability

that a next contingency happens increased to 17%

10%

0

0

1

2

3

4

5

6

Number Lines in Outage (k)

Assuming an N-1 contingency, the probability

that a next contingency happens is 10%

5%

0%

1

2

3

4

5

6

7

8

9

10 11 12 13 14 15 16

Number of Line Out (k)

Fig. 7. Propagation of cascading sequence accelerates: NB Models

The asymptotic conditional probabilities for the NB and

GPD models are given by (21) and (22) respectively.

Fig. 9. Propagation of cascading sequence accelerates: EAC Model

7

decomposed into 5 exclusive sets. They are S1={1}, S2={2},

S3={3}, S4={4}, S5={5, 6, 7, … }. The reason to group them

this way is that for all Si and all three models tested,

P(XSk)Nk (where Nk is the number of samples that fall in set

Sk) is greater than 5%, which is suggested for the credibility of

the fitness test.

100%

Conditional Probability (K ≥ k+1|K ≥ k)

EAC

90%

Observed

80%

GPD

NB

70%

60%

TABLE V

50%

2-Test results for NB, GPD, and EAC models

40%

NB

30%

k

nk

10%

1

0%

2

2

3

4

5

6

7

8

Number Lines in Outage (k)

9

10

11

Fig. 10. Comparing the propagations of cascading

VI. FITNESS TEST OF THREE DIFFERENT PROBABILITY MODELS

The discussion in Section IV and V provides qualitative

evidence that the EAC model fits the data better than NB and

GPD models. In this section, the chi-squared test is applied to

provide quantitative evidence. The chi-squared test, based on

the Pearson theorem [17], is widely used in statistics to test the

fitness of a probability model to sample data. Suppose a certain

random trial has k possible outcomes, the probability that each

trial results in the kth outcome is pk, k=1, 2, 3, …, m, where

pk=1. If n trials are performed, and the kth outcome results Nk

times, then the multivariate distribution of Nk is

Pr( N1 n1 ,, N m nm p1 ,, pm )

n!

p1n p2n pmn

n1!n2 ! nm !

1

m

2

(23)

n

m

with

p

m

k

n and

k 1

k

1

k 1

Pearson theorem: Suppose the parameters of a polynomial

distribution has the pdf as in (23), and define

N

m

2

npk np k

2

k

(24)

k 1

when n, 2 follows the chi-squared distribution 2 (k-1).

One can see from (24) that the statistic 2 is an index for how

much the samples deviate from the polynomial distribution to

be tested. The larger the statistics 2 is, the larger the deviation

is. In order to apply the Pearson theorem, we need to convert

the distribution we are going to test into a polynomial

distribution. Since the sample space of the three distributions is

{1, 2, 3, …}, and it is an infinite set, it can be grouped into m

exclusive sets denoted as Sk=1, 2, 3, …, m. Suppose K is a

random variable and its pdf is Pr(K=k), k{1, 2, 3, ...}. A total

of n samples of K are drawn from pdf f (k). Count and denote

the number of samples that are members of the set Sk. as Nk.

Denote pk as Pr(KSk). Then the random variables Nk, (k=1, 2,

… , m) follow the polynomial distribution of (23). The

statistics 2 defined in (24) follow 2(m-1) distribution. If 2 is

too large, then it is reasonable to doubt the fitness of the tested

model with respect to the data. In this test the sample space is

EAC

pk

pkn

pk

pkn

pk

pkn

10143

89.946%

10154.93

89.763%

10134.22

89.705%

10127.69

951

8.299%

936.95

8.300%

937.06

8.689%

981.02

3

143

1.407%

158.85

1.487%

167.92

1.226%

138.45

4

36

0.275%

31.02

0.334%

37.71

0.244%

27.50

≥5

17

0.073%

8.25

0.116%

13.10

0.136%

15.33

20%

1

GPD

2

11.88

5.15

3.90

(m, r)

(5, 2)

(5, 2)

(5, 2)

m-r-1

2

2

2

1- quantile

0.066%

13.80%

27.85%

The Pearson theorem assumes all parameters for the

distribution to be tested are known. If there is any unknown

parameter so that pi’s are just estimates, the degrees of freedom

of the chi-squared distribution need to be reduced by one for

each estimated parameter. The rule is if there are a total of r

estimated parameters, the degrees of freedom of the

chi-squared distribution are reduced by r to become m-r-1. For

the GPD model, parameter and θ are estimates, so the

chi-squared distribution has degrees of freedom 5−2−1=2. The

NB and EAC models also have two estimated parameters, so

the degrees of freedom for both of them are 2 too. The test

result is summarized in TABLE V. The last row of the table

shows the probability of getting a sample deviation larger than

observed, assuming the sample comes from the corresponding

probability model. The EAC is far more fit than the other two.

VII. CONCLUSION AND DISCUSSION

A new model (EAC model) was proposed in this paper to

estimate the probabilities of high-order transmission line

contingencies. Two other possible models: NB and GPD were

also discussed in the paper. Comparison has been done among

these three models. All three models show that there is an

accelerating trend in the spreading transmission outages after

the initiating outage. The EAC model provides the best fits

among all three models. This model is simple and easier to

understand than the other two yet gives better prediction for the

observed outage data. Its two parameters p, which represents

the probability of occurrence of N-2 given an N-1 event at the

start of a cascading transmission blackout, and β, which

quantifies the increasing rate of the conditional probability, i.e.,

Pr(N-K-1/N-K), are estimated to be 10.3% and 1.515. That

means that around 10% of all transmission outages involve

more than one line and after the first outage, the cascading

outage happens with probability increasing by a factor 1.515

for each additional line lost.

All three models can be employed to evaluate and compare

large power systems’ resilience to cascading events. For

example, if the survey data is provided with information

regarding the locations of contingencies, we can model eastern

and western interconnection separately and compare the model

parameters for the two systems. On the other hand, if the

survey data is provided with time stamps for each contingency,

we can model the first and the last ten-year period separately.

This way, we can find out if the reliability of power systems in

US and Canada has been improved.

System Protection Schemes (SPS)[20], which involve a wide

range of automatic mitigation actions, such as under frequency

load-shedding, under voltage load-shedding, and controlled

islanding, are installed in a number of large power systems in

the last ten years. They are designed to prevent large blackouts

and should reduce the number of cascading outages. After they

are in operation for many years and with sufficient statistics, the

models in this paper can be applied to the collected statistics of

the system with and without SPS to find out if the tendency of

having a cascading blackout is reduced.

Risk and decision analysis, which is of great interests to

utilities as power industries are moving toward a more

competitive environment, depend on accurate estimate of

failure probabilities of power system components. The results

of this work will enhance decision making at both the planning

[21] and operational [22] level by giving quantitative

probabilities for high order contingencies. In particular,

operational procedures for defending against large outages are

of great interest to authors[2][14], and the proposed models in

this paper are candidates to aid in allocating computational

resources as they are used on-line to develop defense strategies

as real-time conditions change.

[8]

[9]

[10]

[11]

[12]

[13]

[14]

[15]

[16]

[17]

[18]

[19]

[20]

[21]

VIII. ACKNOWLEDGMENT

The authors thank Professor Ian Dobson of University of

Wisconsin, Madison for useful discussions.

[22]

International Conference on System Sciences, Kauai, Hawaii, January

2006

I. Dobson, B.A. Carreras, D.E. Newman, “Branching process models for

the exponentially increasing portions of cascading failure blackouts,”

Thirty-eighth Hawaii International Conference on System Sciences,

Hawaii, January 2005

A. G. Phadke and J. S. Thorp, “Expose hidden failures to prevent

cascading outages in power systems,” IEEE Comput. Appl. Power, vol. 9,

no. 3, pp. 20–23, Jul. 1996

W.A. Thompson, Jr., Point Process Models with Applications to Safety

and Reliability, Chapman and Hall, 1988

Qiming Chen and James D. McCalley, “A Cluster Distribution as a Model

for Estimating High-order Event Probabilities in Power Systems,”

Probability In The Engineering and Information Sciences, Vol. 19, .Issue

04, 2005, pp489 – 505

R. Adler, S. Daniel, C. Heising, M. Lauby, R. Ludorf, T. White, “An

IEEE survey of US and Canadian overhead transmission outages at 230

kV and above”, IEEE Trans. on Power Delivery, Vol. 9, Issue 1, pp 21

-39, Jan. 1994

Mili, L., Qui, Q. and Phadke, A.G. (2004) ‘Risk assessment of

catastrophic failures in electric power systems’, Int. J. Critical

Infrastructures, Vol. 1, No. 1, pp.38–63

Qiming Chen, Kun Zhu James D. McCalley, “Dynamic decision-event

trees for rapid response to unfolding events in bulk transmission

systems,” Power Tech Proceedings, 2001 IEEE Porto Volume 2, 10-13

Sept. 2001

Joseph C. Swidler; David S. Black; Charles, R. Ross; Lawrence J.

O’Connor, Jr., Report to the President By the Federal Power Commission

On the Power Failure in the Northeastern United States and the Province

Of Ontario on November 9-10, 1965

Miaou, S.-P., and Lum, H., "Modeling Vehicle Accidents and Highway

Geometric Design Relationships," Accident Analysis and Prevention,

25(6): 689-709, 1993

J. Neter, M. H. Kutner, C. J. Nachtsheim, W. Wasserman, Applied Linear

Statistical Models (4th edition), R. D. Irwin, 1996

D. C. Elizondo, J. de La Ree, A. G. Phadke, and S. Horowitz, “Hidden

failures in protection systems and their impact on wide-area

disturbances,” in Proc. IEEE Power Eng. Soc. Winter Meeting, vol. 2, 28

Jan.–1 Feb. 2001, pp. 710–714

Jun Zhang; Jian Pu; McCalley, J.D.; Stern, H.; Gallus, W.A., Jr.; “A

Bayesian approach for short-term transmission line thermal overload risk

assessment,” IEEE Transactions on Power Delivery, Vol. 17, Issue 3,

July 2002, pp770 - 778

CIGRE, task Force 38.02.19, “System protection schemes in power

networks,” CIGRE SCTF 38.02.19, 2000

Miranda, V.; Proenca, L.M.; “Why risk analysis outperforms probabilistic

choice as the effective decision support paradigm for power system

planning,” IEEE Transactions on Power Systems, Vol. 13, Issue 2, May

1998 pp:643 - 648

M. Ni; J. McCalley; V. Vittal; and T. Tayyib; “On-line risk-based security

assessment,” IEEE Transactions on Power Systems, Vol. 18., No. 1,

February, 2003, pp 258-265

REFERENCES

[1]

[2]

[3]

[4]

[5]

[6]

[7]

Qiming Chen, James D. McCalley, “Identifying High Risk N-k

Contingencies for Online Security Assessment” IEEE Transactions on

Power Systems, Vol. 20, Issue 2, May 2005, pp 823 – 834

Qiming Chen, "The probability, identification and prevention of rare

events in power systems," Ph.D. dissertation, Dept. Electrical and

Computer. Eng., Iowa State Univ., Ames, 2004

B.A. Carreras, D.E. Newman, I. Dobson, A.B. Poole, “Evidence for

self-organized criticality in a time series of electric power system

blackouts,” IEEE Transactions on Circuits and Systems I, Vol. 51, no 9,

Sept. 2004, pp 1733 - 1740

The Disturbance Analysis Working Group (DAWG), [on-line]

Website:http://www.nerc.com/dawg/welcome.html

Consul, P. C. Generalized Poisson Distributions: Properties and

Applications, Marcel Dekker, New York, 1989

I. Dobson, B.A. Carreras, D.E. Newman, “A branching process

approximation to cascading load-dependent system failure,”

Thirty-seventh Hawaii International Conference on System Sciences,

Hawaii, January 2004

I. Dobson, K.R. Wierzbicki, B.A. Carreras, V.E. Lynch, D.E. Newman,

“An estimator of propagation of cascading failure,” Thirty-ninth Hawaii

Qiming Chen (M’04) received the B.S. and M.S. from

Huazhong University of Science and Technology, Wuhan,

China in 1995 and 1998 respectively. He received the

Ph.D. degree in electrical engineering, Iowa State

University, Ames, in 2004.

He joined PJM

Interconnection, Eagleville, PA, as a Transmission

Planning Engineer in 2003.

Chuanwen Jiang (M’04) is an associate professor of the

School of Electric Power Engineering of Shanghai Jiaotong

University, P.R.China. He got his M.S. and Ph.D. degrees

in Huazhong University of Science and Technology and

accomplished his postdoctoral research in the School of

Electric Power Engineering of Shanghai Jiaotong

University. He is now in the research of reservoir dispatch,

load forecast in power system and electric power market.

Wenzheng Qiu (M’04) received the B.S.(1995) and

M.S.(1998) from Huazhong University of Science and

Technology, Wuhan, China in 1995 and 1998. She worked

for North East Power Research Institute from 1998 to 2000.

She received the Ph.D. degree in electrical engineering,

Iowa State University, Ames, in 2004. Currently, She

works in PJM Interconnection, Eagleville, PA, as a

Transmission Planning Engineer.

James D. McCalley (F’04) received the B.S., M.S., and

Ph.D. degrees in electrical engineering from Georgia

Institute of Technology, Atlanta, in 1982, 1986, and 1992,

respectively. He was with Pacific Gas & Electric

Company, San Francisco, CA, from 1985 to 1990 as a

Transmission Planning Engineer. He is now a Professor of

Electrical and Computer Engineering at Iowa State

University, Ames, where he has been employed since

1992. He is a registered professional engineer in California.