Lecturer's notes on microarray analysis

Microarray Analysis

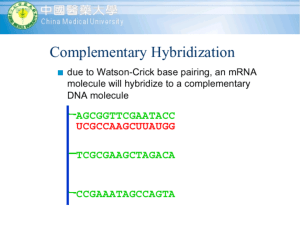

Microarray analysis is a method to measure changes in gene expression (actually RNA

content) over a large number of genes at the same time. DNA representing each gene

(called a "probe") is placed on very small spots on a solid support. The mRNA (called a

"target") is converted to fluorescently labeled cDNA and hybridized to the array.

Analysis then reveals a number of genes that are differentially expressed at a higher or

lower level in two different targets. Although the target is typically mRNA, microarray

experiments are also used to quantify microRNAs, clarify splicing patterns, or directly on

genomic DNA to measure amplification of genes in cancer cells. The underlying motive

when measuring gene expression is that more mRNA usually means that more protein is

made. The mechanism could either be a change in the rate of transcription, or a change

in the rate of mRNA turnover. One always has to keep in mind that translational control,

alteration of protein turnover, or regulation by protein modification may also be

occurring, and will not be reflected by the amount of mRNA present.

The history of microarray analysis is summarized in:

Fodor SP et al., Science 251: 767-73 (1991).

Schena M et al., Science 270: 467-70 (1995).

Producing the targets

As with all RNA work, it is essential to avoid contamination with RNAse when preparing

the mRNA. This requires RNAse inhibitors, specially cleaned reagents, fastidious

technique, and an assay for RNA degradation. The classical assay for RNA degradation

is a Northern blot. More recently, microfluidic electrophoresis instruments have been used.

This image from Agilent's Bioanalyzer web advertisement emphasizes the relative

intensity of the 18S and 26S rRNA bands as an indicator of degradation. Note, however,

that rRNA is much more resistant to RNAse than is mRNA. There would be no mRNA

at all surviving in the sample to the right.

Typically, polyadenylated RNA is subjected to reverse transcription primed with oligo

dT. The label can be produced as dye conjugated dUTP, although each label has a

different efficiency of incorporation. It is also possible to incorporate amino allyl-dUTP,

and conjugate the dye to the amino group after the fact of making the cDNA.

cDNA can be accumulated by linear amplification. For prokaryotic mRNA, random

hexamer primers are used to prime conversion to cDNA. Cycling in this instance risks

generating great variation in the representation of different mRNA species.

Because the method requires reproducible kinetics of hybridization, achieving a

comparable concentration of cDNA in each of the samples is critical for producing usable

results.

Probes

The probes are tightly arrayed on a chip. The different variations can be divided into

short oligos (typically 25 nt), long oligos (typically 60 nt), and full cDNAs.

Different Microarray Instruments.

(Miller and Tang, [2009] Clin. Microbiol Rev. 22:611-633).

In situ synthesis

The best known microarray system is made by Affymetrix (http://www.affymetrix.com). The oligonucleotide probes are synthesized on the solid

support by a photolithographic technique. This makes use of a blocking group on each

added nucleotide that is removed by a photo-induced reaction allowing the next

nucleotide to add. A "mask" is applied so that only the spots scheduled for the next base

to be added at this position (e.g. a T below) are illuminated. Then the chip is washed

with the reagent to add a T (a nucleotidyl phosphoramidite). The mask is then shifted to

deprotect the spots to get another base (e.g. a C) and then that reagent is added. This is

repeated 4 times at each position until an array of 25 nt long oligonucleotides is built up

on the chip. These are typically called "short oligo" arrays.

Affymetrix tries to put ~11 oligos per gene all from the 3' UTR on each chip, and pairs

them with an oligo that has a mismatched base in the center. The idea is that the signal

from the mismatched probe can be used to subtract non-specific hybridization from the

signal from the perfectly matched probe. This is called PM-MM scoring. However, it

has been shown that correcting by the mismatched signal actually produces noisier data

than ignoring the mismatched signal (Millenaar et al., BMC Bioinformatics 7:137 [2006]).

So most users ignore the MM signal. The name of the software to reanalyze the data

without doing PM-MM subtraction is RMA. The average fluorescence intensity after

excluding outliers (probes whose signals do not change in the same pattern as the others)

is called the "expression value".

Short oligo arrays have less sensitivity than long oligo arrays. For low concentration

targets (e.g. microbiological samples) a preliminary PCR step may be required to amplify

the target. Discrimination of a single mismatch becomes better at shorter lengths. The

signal from a single mismatch is ~ 25% at a probe length of 19 nt. The lithographic

method can produce very high density arrays with a million probes per chip.

Another manufacturer of high density in situ synthesized microarrays is Roche NimbleGen. It gets around the Affymetrix patent on photolithographic synthesis by

using micro-mirrors to focus light on the spots to be extended rather than a lithographic

mask. Agilent uses high density in situ synthesized microarrays where the reagents to

add each successive base are supplied in a focused way by an inkjet printing process.

NimbleGen and Agilent equipment supports two color analysis, whereas Affymetrix

equipment supports only one color analysis.

Spotted (printed) arrays

Before microarrays, people made hybridization arrays by simply spotting DNA on a glass

slide. This strategy has been scaled down through the use of robotic spotting machines

that dip an array of needles into a microplate with solutions of different probes and then

touch them to a glass slide or other solid support. Spotted arrays can be made directly

from denatured cDNAs (or more commonly PCR amplicons from cDNAs), in which case

the DNA sticks to the glass support by electrostatic interaction. However, in order to

distinguish between closely related members of gene families, they are usually made

from synthetic oligonucleotides which are typically 60 nt long and correspond to 3'

untranslated regions. Oligos are bound to the support through a modified 5' or 3' end. To

distinguish from the lithographically synthesized arrays, printed oligo arrays are often

called "long oligo" arrays, although they can be made with oligos of any length. Printed

arrays usually have a density of only 10,000 - 30,000 spots, and have less redundancy per

gene.

The most well known vendor of printed microarray equipment is Agilent. UTHSCSA has

Agilent equipment used under the supervision of Dr. Yidong Chen at CCRI. Core

facilities can provide substantial savings by buying a set of oligos, printing the arrays

locally, and distributing the cost over multiple users.

Most spotted array equipment allows two dye measurements. In two dye (or two

channel) measurements, two different targets are labeled with dyes of different colors.

The most commonly used dyes are named cy3 (green) and cy5 (red). Both targets are

hybridized at once to the chip, and the colors are analyzed separately. This has the

advantage of automatically normalizing for variation in the amount of probe from spot to

spot on the array. For two color analysis the raw readout is

M = log2(R/G) = log2(R) - log2(G), where R and G are the red and green intensities. A plot of M for each gene against A = (log2(R) + log2(G))/2 is called an MA plot.

From Wikipedia:

The MA plots will typically be subjected to low level normalization based on the

assumption that the average gene is not differentially expressed.
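
As a minimal sketch of the M and A calculation and of the simplest form of this normalization, the R lines below use made-up red and green intensities for five spots. Median centering is chosen here only for illustration; it is an assumption of this sketch, and real pipelines often use an intensity-dependent fit instead.

```r
# Hypothetical raw intensities for five spots (red = cy5, green = cy3).
red   <- c(1200, 450, 8000, 300, 2500)
green <- c( 600, 400, 4100, 310, 1300)

# M is the log ratio; A is the average log intensity.
M <- log2(red) - log2(green)
A <- (log2(red) + log2(green)) / 2

# Median centering: assume the typical gene is unchanged, so shift
# every M value until the median log ratio is zero.
M_norm <- M - median(M)

print(round(cbind(A, M, M_norm), 2))
```

After centering, a gene is only called induced or repressed relative to the bulk of the genes on the chip, which is exactly the assumption stated above.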

Bead Arrays

Illumina mounts their oligos on 3 micron beads, that fit into wells in a microplate such

that fiber optic sensors attach to each bead. Their two platforms are called Sentrix Array

Matrixes (SAM) or Sentrix BeadChips. The beads have to go through a process called

"decoding" to figure out what oligo sequence became attached to each sensor. Basically,

when the probe oligos are initially synthesized they are concatenated to a 29 nt sequence

designed to be easily identifiable by a series of hybridizations to short oligonucleotides.

Hybridization to these oligos is carried out first, identifying the address attached to each

optical fiber, and hence the associated probe sequence. The Illumina technology is

related to their next generation sequencing technology, and they sell a platform that will

do both kinds of analysis. Bead Arrays are configured for fewer probes and many more

target samples.

Sources of noise in microarray experiments.

Technical noise.

Probe efficiency variation: There can be variation in the amount of probe per spot, or

the efficiency of hybridization due to formation of hairpins within probes, or due to the

Tm being out of range. The first step of analysis is typically an imaging of the hybridized

spots and the exclusion of spots that are misshapen or compromised by dust or other

inappropriate fluorescent signals in the image.

Image of a section of a microarray from Howlader & Chaubey, IEEE Trans Image

Process 19:1953-1967 (2010).

Nonlinear hybridization kinetics:

To understand the capabilities and limitations of a microarray experiment, a comparison

to its forerunner, the Northern Blot, is given below.

Northern Blot

In a Northern Blot, the RNA from a cellular preparation is run on a denaturing agarose

gel and then the pattern is transferred to a filter to which the RNA becomes permanently

affixed. For a given gene, a cDNA is prepared and either radiolabeled or fluorescently

labeled. The cDNA is called the "probe" in this case. The probe is hybridized to the

filter over a long period of time until all complementary RNA on the filter is duplexed.

After a wash to eliminate probe that is not duplexed, the filter is imaged. Since the

hybridization was to completion, the amount of signal is proportional to the amount of

the cognate RNA on the filter.

Image from Wikipedia Commons.

The strengths of the Northern Blot are that quantification is accurate, degradation or lack

of it of the RNA is apparent, and it has a large dynamic range. Its weakness is that for

each gene, one has to prepare a separate labeled probe, strip the filter, and then hybridize

again. Hence Northern blots are not applicable to analyzing any appreciable number of

different genes. For analyzing large numbers of genes (up to 23,000) a microarray

experiment is used. For analyzing smaller numbers (up to 100) qPCR is now the

preferred method.

Kinetics of microarray hybridization

In microarray analysis, a probe for each gene is placed on a spot on a supporting material,

such that thousands of genes may be represented on a small area (called a "chip").

Fluorescently labeled cDNA corresponding to total RNA is prepared and hybridized to

the chip. The RNA is called the "target" or sometimes the "treatment". Typically one

will be comparing the amount of RNA present under two different circumstances (e.g.,

with and without application of a drug to cells in culture), so the usual outcome is to

identify genes which are more or less heavily represented in the RNA of cells for

treatment A vs. treatment B. In this case, if the target cDNA were hybridized to

completion, then all quantitative information would be lost. The signal intensity would

represent the amount of probe on the chip, not the amount of RNA in the target. Instead

of hybridizing to completion, the target is hybridized for a fixed amount of time so that

each spot is partially duplexed. Since the on rate for hybridization is related to the

concentration of the hybridizing species, the signal produced in a fixed hybridization time

reflects the concentration of the complementary cDNA in the target.

The accumulation of target cDNA on the probe is linear up to a point and then the spot

becomes saturated. cDNAs present at low levels may not exceed the amount of probe.

Those (red line in the fig. above) will begin to saturate at lower levels because the off rate

becomes significant as the amount of free cDNA approaches zero. The presence of nonspecific opportunities for low level cDNAs to interact with the chip may differ from spot

to spot on the chip and produce spot to spot variation in the signal due to localized

exhaustion of cDNA. The end result is that the signal tends to have poor linearity,

expression changes may be attenuated by saturation, and random variation can be

introduced into the results. Significant expression differences can be lost in the random

background from nonspecific interaction with the chip. This problem is discussed by

Chudin et al., (2002) Genome Biol. 3(1), RESEARCH0005, and Ono et al.,

Bioinformatics. (2008) 24:1278-85.
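
The attenuation of expression changes by saturation can be illustrated with a very simple binding model. The sketch below assumes a Langmuir-type model in which the fraction of probe duplexed, theta, obeys d(theta)/dt = k_on * c * (1 - theta) - k_off * theta, with the free target concentration c held constant. This model, the function name, and all rate constants are assumptions made for illustration; they are not taken from the papers cited above, and the model ignores depletion of the target.

```r
# Fraction of probe duplexed after hybridizing for t_hyb time units,
# using the closed-form solution of the simple model described above.
# k_on, k_off and the concentrations are arbitrary illustrative values.
fraction_bound <- function(conc, t_hyb, k_on = 1, k_off = 0.01) {
  rate     <- k_on * conc + k_off   # rate of approach to equilibrium
  theta_eq <- k_on * conc / rate    # occupancy at equilibrium
  theta_eq * (1 - exp(-rate * t_hyb))
}

low  <- 0.01   # target concentration in treatment A (arbitrary units)
high <- 0.02   # two-fold higher concentration in treatment B

# Signal ratio B/A after a short and after a very long hybridization.
ratio_short <- fraction_bound(high, 1)   / fraction_bound(low, 1)
ratio_long  <- fraction_bound(high, 500) / fraction_bound(low, 500)

print(c(short = ratio_short, long = ratio_long))
```

Under these assumptions the short hybridization reports a ratio close to the true two-fold difference, while the long hybridization reports a noticeably smaller ratio because both spots are approaching their equilibrium occupancy.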

Noise with bioinformatics sources.

Probes are usually placed in the 3' untranslated regions of genes for two reasons: 1)

Many genes fall in families with sufficiently closely related paralogs that hybridization to

a coding region oligonucleotide would not distinguish between the family members. 2)

The cDNA is usually primed with oligo dT, and the amount of cDNA produced falls off

with distance from the polyA tail. Hence it is necessary to accurately predict the

polyadenylation sites of each gene. Two computer programs are in use for this purpose:

1) polyadq, and 2) Genescan. Neither is 100% reliable, and sometimes gene expression

is missed because the probe was placed downstream of the polyA addition site. Different

placement of the probe from one chip design to the next, coupled to different potentials

for cross hybridization to related sequences and the generally nonlinear response of the

method have historically led to extensive discrepancies in expression profiles from one

experiment to another. These properties are exacerbated when working from a genomic

sequence that is still in early stages of finishing.

Noise due to specifics of different oligos.

Variation in signal intensity among different oligos for the same gene (modified from Li & Wong

(2001) PNAS 98, 31-36). Each point is a different oligo in the same gene. The variation in oligo

response is generally greater than the differences from one treatment to another. An oligo's

response can be low because of hairpin formation or because its Tm is out of range. Oligo

response can be high due to cross hybridization to other species in the cDNA.

Many of the technical sources of noise should not affect a relative change in expression

level, and two color measurements should help.

In principle, replicate microarray hybridizations (for both treatments) could be used to

drive down the noise. However, microarray experiments typically cost $1000 - $3000

per chip, so most experimenters do one chip per treatment, accept that the results are

noisy, and then proceed to use qPCR to filter out some genes with actual expression

changes. Some microarray setups allow measuring two colors. If treatment A is labeled

in one color and treatment B in another, then the ratio of A/B measured over each spot

should be free of variation based on the amount of probe washing over the spot.

Another problem is that the total amount of RNA for treatment A and B may not have

been the same, or the cDNA synthesis may not have occurred comparably. Typically

there will be a normalization intended to enforce the assumption that most RNAs did not

change intensity between the two treatments.

Noise from Biological Variation

Deciding that there is confidence in a finding of differential gene expression requires a

statistical analysis that apportions the variation observed between differences in gene

expression and sources of random variation. Consider for example that RNA

preparations were made from the livers of several mice, some receiving no treatment (A)

and some treated with a drug (B). Consider the following experiment that would require

8 mice, four chips, a two color instrument, and 8 labeling reactions:

Here, if we had only done one chip with one treated and one untreated mouse (replicate

1), we might have concluded that gene 1 expression was suppressed by the drug while

gene 2 expression was unaffected. Upon repeating four replicates, we would realize that

expression of gene 1 was subject to extensive biological variation, whereas gene 2 is

relatively steadily expressed. The statistics to determine if the average change between A

and B for each mouse is significant given the variation from replicate to replicate is

beyond the scope of this course. But clearly without enough replicates there will be

genes identified as being affected by the drug treatment that are, in fact, not.
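
Although the full statistics are out of scope, the basic idea can be sketched with a one-sample t-test on the log ratios from the four replicate chips. All numbers below are invented for illustration, and gene 3 is a hypothetical extra gene added to show what a consistent change looks like.

```r
# Hypothetical log2(B/A) ratios from four replicate chips.
gene1 <- c(-1.8,  0.9, -0.2,  1.3)    # looks suppressed in replicate 1, but erratic
gene2 <- c(0.05, -0.10, 0.08, -0.02)  # steady and close to zero
gene3 <- c(-1.1, -0.9, -1.3, -1.0)    # consistently suppressed (hypothetical)

# One-sample t-test: is the mean log ratio different from zero?
p1 <- t.test(gene1, mu = 0)$p.value
p2 <- t.test(gene2, mu = 0)$p.value
p3 <- t.test(gene3, mu = 0)$p.value

print(signif(c(gene1 = p1, gene2 = p2, gene3 = p3), 2))
```

A single chip would have flagged gene 1 on the strength of replicate 1 alone; with four replicates only the consistently changed gene gives a small p-value.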

At first we may wonder if the "biological" variation was really variation of expression

among the livers of these different mice, or technical variation in how much total mRNA

was recovered from each mouse, or how efficiently each total mRNA sample was

converted to labeled target. However, if there are a large number of genes like gene 2 whose

expression appears very steady across replicates, that would suggest that our procedure

for labeled target production was consistent. More often, the RNA preparations won't be

as consistent as we'd like, but it would be possible to notice that some large majority of

genes varied consistently in expression from replicate to replicate, and then we would

normalize all the signals based on the assumption most genes are expressed the same

from mouse to mouse. Similarly, the steadily expressed set of genes may show a

consistent difference between treatment A and B. Commonly a normalization is imposed

to enforce the assumption that the expression of most genes is not different between

treatment A and B.

Alternatively, two chips can be devoted to the same A and B samples, only with

the dye labeling (red vs. green) reversed (a dye swap).
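
The arithmetic behind reversing the labels can be sketched as follows. The log ratios are invented, and the gene names are placeholders; the sketch assumes that any dye-specific bias adds the same amount to M on both chips.

```r
# Hypothetical log2(red/green) values for three genes.
# Chip 1: treatment B labeled red, treatment A labeled green.
# Chip 2: the same two samples with the dyes reversed (A red, B green).
M_chip1 <- c(geneX =  1.4, geneY = 0.4, geneZ = -0.6)
M_chip2 <- c(geneX = -0.6, geneY = 0.4, geneZ =  1.4)

# A true B/A difference changes sign on the reversed chip, while a
# dye-specific bias does not. Half the difference therefore estimates
# the true log2(B/A), and half the sum estimates the dye bias.
log2_B_over_A <- (M_chip1 - M_chip2) / 2
dye_bias      <- (M_chip1 + M_chip2) / 2

print(rbind(log2_B_over_A, dye_bias))
```

In this made-up example geneY looks induced on chip 1 alone, but the reversed chip shows that its apparent change is entirely dye bias.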

The proper statistics for dealing with data of this type are beyond the scope of this (or any introductory statistics) course, but exist in a number of varieties that go by names like "linear model fitting" and "empirical Bayesian analysis". The mainline software package for finding confidence levels that expression has changed is limma, which is free software that runs within the R programming language and comes as part of the Bioconductor project (http://www.bioconductor.org/).

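An example of R code to produce MA plots before and after normalization:

library(affy)            # provides exprs() and ma.plot()
library(preprocessCore)  # provides normalize.quantiles()

# Load the example Dilution data set (needs the affydata package)
if (require(affydata)) {
  data(Dilution)
}

# Raw probe intensities for the two arrays being compared
y <- exprs(Dilution)[, c("20B", "10A")]

# MA plot before normalization: A on the x axis, M on the y axis
dev.new()
ma.plot(rowMeans(log2(y)), log2(y[, 1]) - log2(y[, 2]), cex = 1)
title("Dilutions Dataset (array 20B v 10A)")

# Quantile normalization: force both arrays to the same intensity distribution
x <- normalize.quantiles(y)

# MA plot after normalization
dev.new()
ma.plot(rowMeans(log2(x)), log2(x[, 1]) - log2(x[, 2]), cex = 1)
title("Post Norm: Dilutions Dataset (array 20B v 10A)")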

Limma will want to fit a "model". The model is the experimental design with respect to

replicate samples, and is best understood by some examples. If one made cDNA from

one untreated mouse and labeled it green, and from one treated mouse and labeled it red

and then did a single dual color hybridization experiment, limma would be given a

"model" that specified that experimental arrangement. One would probably specify some

sort of invariance selection algorithm, in which some of the probes are assumed to detect

RNAs that are expressed the same in both mice, and these are used to normalize for

differences in the amount of cDNA made, the detection efficiency of the two dyes, and

similar systematic differences between the two target cDNAs.
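
To make the idea of a "model" more concrete, here is a minimal sketch in base R of how an experimental arrangement is written down as a design matrix. It deliberately uses a different, simpler arrangement than the one just described: four untreated and four treated mice measured in a single channel, with invented expression values for one gene. limma's lmFit function accepts a design matrix of this kind, but the fit below uses ordinary least squares from base R and is not limma's moderated analysis.

```r
# Hypothetical design: four untreated (A) and four treated (B) mice,
# with one log2 expression value per mouse for a single gene.
treatment <- factor(c("A", "A", "A", "A", "B", "B", "B", "B"))
log_expr  <- c(7.9, 8.1, 8.0, 8.2, 9.0, 9.3, 8.9, 9.2)

# The design matrix encodes the experimental arrangement:
# column 1 is the baseline (A), column 2 flags the treated (B) samples.
design <- model.matrix(~ treatment)
print(design)

# Ordinary least squares fit for this one gene.
fit <- lm(log_expr ~ treatment)
print(coef(fit))
```

The intercept is the mean log2 expression in the untreated mice, and the second coefficient is the estimated log2 change caused by treatment.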

Another program used to determine confidence in expression differences is SAM.

SAM runs as a tool within Microsoft Excel version 2000 or above and only on a

Microsoft Operating System Windows 2000 or above.

It acts as an interface to the R programming system, which must also be installed.

The user must provide for normalization of the data and export to an Excel

spreadsheet format.

As with limma, the user must describe the model of the experiment to SAM.

SAM can be obtained from http://www-stat.stanford.edu/~tibs/SAM/

The literature reference is V. Tusher, R. Tibshirani, and G. Chu. Significance analysis of microarrays applied to transcriptional responses to ionizing radiation. Proc. Natl. Acad. Sci. USA, 98:5116-5121, 2001.

An example of SAM summary output:

From the SAM web site.

Clustering

In considering whether genes are differentially expressed, there is usually an attempt to

consider them in groups that coordinate to carry out some function. Placing genes in

groups is called "classification" or "clustering".

Two different clustering strategies are in use:

1) Unsupervised: Enumerate genes showing a coordinated change between two targets.

Ask what gene ontologies are in common (more than by random chance) among that set

of genes.

This illustrates clustering and the use of a heat map for visualization of a gene's

expression profile. In this case the expression being measured is degree of induction (or

repression) at times after initiation of sporulation in yeast. For easy visualization, the

numeric values are converted to a shade of red or green indicating degree of induction or

repression. See the example on the left. Then the genes are stacked in an order that puts

the most similar expression patterns together. That's at right. Each gene is a thin slice of

this heat map. Genes that don't show much change at all are left out to compress the

display.

For association with function, the genes are broken into groups representing a similarity

of expression pattern. These are compared to groups of genes defined as to commonality of function (Gene ontology groups, or GO groups). Each observed group of genes will overlap each preconceived functionally

defined group by a certain number of genes. A statistic is assigned expressing the

expectation that a randomly picked set of genes of the same size would overlap by the

same number of genes. The GO groups are then sorted with the ones that most

improbably overlapped by chance listed first:

From the DAVID EASE web site: genes with GO terms enriched in a set of 400 genes

whose expression was judged to be changed in peripheral blood mononuclear cells

incubated with HIV envelope protein.
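
One common way to compute an overlap statistic of this kind is the upper tail of the hypergeometric distribution. The sketch below uses invented counts (only the set size of 400 echoes the example above), and it is not claimed that the tool shown uses exactly this test.

```r
# Hypothetical counts, chosen for illustration only.
genome_size  <- 20000  # genes represented on the chip
go_size      <- 200    # genes annotated with the GO term
changed_size <- 400    # genes judged differentially expressed
overlap      <- 15     # changed genes that carry the GO term

# Overlap expected if the 400 genes had been picked at random.
expected <- changed_size * go_size / genome_size

# Probability of an overlap this large or larger by chance.
# phyper() returns P(X <= q), so the upper tail starts at overlap - 1.
p_value <- phyper(overlap - 1, go_size, genome_size - go_size,
                  changed_size, lower.tail = FALSE)

print(c(expected = expected, p_value = p_value))
```

Repeating this for every GO group and sorting by p-value gives a ranked list of the kind described above. Because many GO groups are tested, the p-values would normally also need a multiple-testing correction.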

There are a variety of commercial tools that paint expression changes on maps of specific

pathways.

A non-commercial tool of this kind is GenMapp (http://www.genmapp.org/default.html).

A demonstration image from the GenMapp web site showing in red the proteins in the

yeast galactose metabolism pathway for which gene induction with galactose was

observed in a microarray experiment.

A more complex example showing differential expression among a number of different

human embryonic stem cell lines:

2) Supervised: Evaluate for preconceived sets of genes thought to collaborate on a

function whether their expression has changed in aggregate between the two targets. A

tool for this is GSEA (Gene Set Enrichment Analysis).

In this analysis, every gene in the preconceived GO group (which they prefer to call a

gene set) is marked in the clustered heat map. This is a difference from the unsupervised

method, where genes that didn't change much were not explicitly accounted for. Since

the heat map is ordered so that most strongly induced genes are at the top and the most

strongly repressed ones are at the bottom, a gene set that was coordinately induced or

repressed would have its members clustered near the top or bottom, respectively, of the

heat map. Here we see a partial correlation. More of this gene set's members are

clustered near the top than would be expected by random chance. The analysis then

proceeds by trying to suggest a subset within the gene set that is correlated with the

expression data. Unlike the unsupervised method, this method could detect that a subset

of genes from a group are significantly clustered in the highly induced set even though

the overall set is not significantly correlated. For each subset of the gene set starting with

the most induced and including genes in order of induction strength, a statistic (the

enrichment score) is calculated that the subgroup is clustered more to the top than

expected by random chance. When that statistic reaches its maximum and starts to

decline, a boundary is established for the correlated subset.

Figure 1 from Subramanian et al., PNAS 102:15545 (2005) illustrating the GSEA

supervised classifier. The paper didn't really explain what "phenotype A and B" were,

but I'm thinking of them as replicates of treatment A and B.
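
The running statistic can be sketched in a few lines of R. This is a simplified, unweighted version written for these notes: the function name and the toy gene list are hypothetical, it only looks for enrichment at the top of the list, and the published method additionally weights each step by the gene's correlation with the phenotype and judges significance by permutation.

```r
# Simplified, unweighted running-sum enrichment score.
# ranked_genes: gene names ordered from most induced to most repressed.
# gene_set:     names of the genes in the preconceived set.
enrichment_score <- function(ranked_genes, gene_set) {
  in_set <- ranked_genes %in% gene_set
  n_hit  <- sum(in_set)
  n_miss <- length(ranked_genes) - n_hit
  # Step up at each set member and down at each non-member, scaled so
  # that the walk ends at zero.
  steps   <- ifelse(in_set, 1 / n_hit, -1 / n_miss)
  running <- cumsum(steps)
  peak    <- which.max(running)   # position where the walk is highest
  top     <- seq_len(peak)
  list(score = running[peak],
       subset_at_peak = ranked_genes[top][in_set[top]])
}

ranked <- paste0("g", 1:10)           # hypothetical ranked gene list
set    <- c("g1", "g2", "g4", "g9")   # hypothetical gene set
res    <- enrichment_score(ranked, set)
print(res)
```

In this toy example the walk peaks after the fourth gene, so g1, g2 and g4 form the correlated subset and g9 is left outside the boundary.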

Overall data processing pipeline:

The following is a series of steps that might be used in interpretation of microarray data.

Typically, earlier steps might be done with instrumentation-specific software, whereas

later steps would typically be done with R/Bioconductor, SAM and/or other 3rd party

packages. In some cases, the instructions for the downstream package will specify not to

do one or more of the more primitive corrections, because that has already been written

into the downstream analysis.

Image inspection: removal of spots that have been compromised.

Background subtraction: removal of some amount of intensity from each spot

representing non-specific interaction with the probe.

Signal averaging and outlier exclusion: If there are multiple probes per gene,

probes that give results discordant with the others are excluded, and then the

remaining signals are averaged.

Normalization: Imposing the assumption that the average gene is not

differentially expressed.

Statistical analysis: Estimation of the within group variation and estimation of

confidence in between group variation. This usually involves specifying an

acceptable false discovery rate, and may involve specifying a minimal between

group differential expression.

Clustering/Classification: Identification of groups of genes that collaborate on a

common function that have varied coordinately with the experimental variable

(e.g. drug vs. no drug; normal vs. diseased).
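
The statistical analysis step can be sketched in base R on simulated data. Everything here is an assumption made for illustration: the data are random numbers standing in for normalized log2 expression values, an ordinary t-test stands in for the moderated statistics used by packages such as limma, and the cutoffs (5% false discovery rate, two-fold change) are arbitrary.

```r
set.seed(1)  # make the simulated data reproducible

n_genes <- 1000
n_rep   <- 4

# Simulated normalized log2 expression: rows are genes, columns are replicates.
group_a <- matrix(rnorm(n_genes * n_rep, mean = 8, sd = 0.3), nrow = n_genes)
group_b <- matrix(rnorm(n_genes * n_rep, mean = 8, sd = 0.3), nrow = n_genes)
group_b[1:50, ] <- group_b[1:50, ] + 2   # the first 50 genes are truly induced

# Per-gene two-sample t-test: between-group difference vs. within-group variation.
p_values <- sapply(seq_len(n_genes), function(i) {
  t.test(group_a[i, ], group_b[i, ])$p.value
})

# Benjamini-Hochberg adjustment to control the false discovery rate.
q_values <- p.adjust(p_values, method = "BH")

# Minimal between-group difference: mean log2 change of at least 1 (two-fold).
log_fc   <- rowMeans(group_b) - rowMeans(group_a)
selected <- which(q_values < 0.05 & abs(log_fc) >= 1)

print(length(selected))
```

Because the truth is known in a simulation, the selected genes can be compared with the 50 that were actually changed, which is a useful way to get a feel for how the two cutoffs trade false discoveries against misses.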

Validation

Given the large potential for noise to obscure microarray results, essentially all genes said

to be differentially expressed should be subjected to validation. The most common

method is qPCR. Other possibilities are Northern blots or in situ hybridization. qPCR

can easily handle many more replicates, so a common strategy is to do relatively minimal

replicates by microarray (keeping the cost down), and then do more biological replicates

by qPCR.

Glossary of Microarray Jargon:

Biological variation: The degree of variation in results observed if RNAs from several

individuals thought to be in the same physiological state are examined.

Classifier: An algorithm to group data from genes according to functionally related

clusters of genes.

Unsupervised classification - Without prior information, genes found to have a

similar expression profile in a given data set are grouped together.

Supervised classification - The coordinated changes in expression of

preconceived groups of genes are examined in a given data set.

Dye-swap: Repeating a two color experiment (two targets labeled with different color

dyes and hybridized to one chip) with the dye switched between the two targets.

False Discovery Rate: The fraction of genes above a given expression ratio expected to

have been placed there as a result of noise.

Feature: a spot containing an amount of a specific probe sequence.

GSEA (Gene Set Enrichment Analysis): The practice of targeting measurement of

differential expression of preconceived sets of genes thought to collaborate on a function.

(i.e. a supervised classifier). Also the name of a specific software solution to carrying out this analysis (Subramanian et al., [2005] PNAS 102:15545-15550; http://www.broadinstitute.org/gsea).

Miss rate: The false negative rate. The expected number of genes missing from the set

discovered to meet a threshold of significance.

Model: A description of which dyes were used, which targets were hybridized at the

same time, how experimental variables (e.g. drug vs. no drug; time points; control vs.

diseased), and replicates are represented within the number of targets hybridized.

MSigDB: The database of gene sets used by GSEA. The genes are organized into sets

according to one of the following principles:

Positional gene sets - grouped by human chromosome and cytogenetic band.

Curated gene sets - based on online pathway databases, publications in PubMed,

and knowledge of domain experts.

Motif gene sets - based on conserved cis-regulatory motifs.

Computational gene sets - defined by a tendency for coexpression with one of 803

cancer-associated genes.

GO gene sets - annotated by the same Gene Ontology terms.

Over fitting: Attributing meaning to a pattern of expression that fails to replicate. Too

few biological replicates in an experiment leads to a high risk of over fitting.

Technical variation: The degree of variation in results observed if the same RNA is

reanalyzed.