CPN4-ComputerError - Brigham Young University

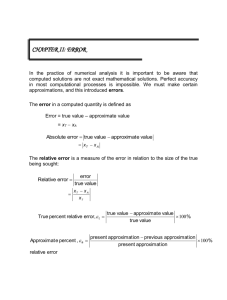

Brigham Young University - Idaho
College of Physical Sciences and Engineering
Department of Mechanical Engineering

Class Prep Notes #4: Computer Precision & Round-Off Error

Introduction

Using computers to solve problems often involves approximation, which introduces error into our solutions. For many engineering problems we cannot obtain analytical solutions, so we use numerical methods to find good approximations. Some questions to ponder concerning error and approximation include the following: How is error introduced into our calculations? How much error is present in our calculations? Is the error present tolerable? Please consider these questions as you review the following material.

Numerical Error

Numerical error in computer calculations can be classified into two major forms: round-off error and truncation error. Round-off error arises because computers can represent quantities with only a finite number of digits. Truncation error is introduced because numerical methods employ approximations to represent exact mathematical operations and quantities.

Significant Figures

Significant figures may be defined as those digits of a number that can be used with confidence. When working problems by calculator, students often jot down intermediate answers to a couple of decimal places and later reenter them manually in an additional calculation to arrive at a final answer. By not including all available significant figures, they introduce small errors. Even if intermediate answers are passed directly to the final calculations with all of their significant digits, small errors may still be introduced because the calculator or spreadsheet carries only a limited number of significant digits. Quantities such as pi, e, and the square roots of many numbers are irrational and cannot be expressed exactly with a finite number of digits. The omission of significant figures is called round-off error.
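The effect of reentering a rounded intermediate answer can be sketched in a few lines of Python. This is only an illustration: the problem (finding a circle's area from its circumference) and all variable names are assumptions chosen for the example, not part of the original notes.

```python
import math

# Hypothetical problem: find the area of a circle from its circumference.
circumference = 10.0

# Pass the intermediate radius along with all available digits.
radius_full = circumference / (2 * math.pi)
area_full = math.pi * radius_full ** 2

# Jot the intermediate radius down to two decimal places, then reenter it.
radius_rounded = round(radius_full, 2)
area_rounded = math.pi * radius_rounded ** 2

# Relative difference between the two final answers.
relative_error = abs(area_rounded - area_full) / area_full

print(f"area with full-precision radius: {area_full:.6f}")
print(f"area with rounded radius:        {area_rounded:.6f}")
print(f"relative error:                  {relative_error:.2e}")
```

Note that even `area_full` is not exact, since `math.pi` is itself a double-precision approximation of pi. Its error is simply far smaller than the error caused by rounding the radius by hand.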
Accuracy and Precision

A brief discussion of the terms accuracy and precision may be useful in understanding computer precision and round-off errors. Accuracy refers to how closely a computed or measured value agrees with the true value. Precision refers to how closely individual computed or measured values agree with each other. The target analogy shown in Figure 1 illustrates that both accuracy and precision are desired. Suppose the bull's-eye represents the true answer to a problem and the bullet holes represent the approximate answers determined by a numerical calculation. A numerical technique may produce highly repeatable, precise results that are nevertheless not very accurate and miss the bull's-eye by a substantial amount. Care must be taken to ensure that the chosen numerical techniques produce results that are both accurate and precise.

Figure 1. Accuracy vs. Precision.

Computer Representation of Numbers

A number system is a convention for representing quantities. By far the most common number system in use throughout the world is base-10. The base is the number used as the reference for constructing the number system. Why base-10? I suppose it has a lot to do with the fact that we have 10 fingers and 10 toes. Figure 2 illustrates briefly how the base-10 number system works.

Figure 2. Base-10 Numbering System.

Digital computers, on the other hand, are on/off electronic devices. As such, numbers on computers are represented internally with a binary, or base-2, system (see Figure 3). The fundamental unit in which information is stored is called a word. A word consists of several binary digits, or bits. Numbers are typically stored in one or more words.

Figure 3. Base-2 Numbering System.

Integers provide a quick and convenient method for doing many simple calculations.
The range of a signed integer is limited by the number of bits in the word:

| Word size | Range |
| --- | --- |
| 8-bit | -128 to 127 |
| 16-bit | -32,768 to 32,767 |
| 32-bit | about -2.15 billion to 2.15 billion |
| 64-bit | about -9.2E18 to 9.2E18 |

Most engineering calculations, however, require the use of real numbers. In computer terminology, these are typically referred to as floating-point numbers. Floating-point numbers are essentially a computer implementation of scientific notation. They consist of a mantissa and an exponent. The mantissa holds the significant digits of the number, whereas the exponent is an integer giving the power of the base by which the mantissa is scaled. The advantage of floating-point representation over integer representation in a computer is that it can support a much wider range of values. The approximate range of floating-point magnitudes is shown below:

| Word size | Approximate range | Significant digits |
| --- | --- | --- |
| 32-bit | 1E-38 to 1E38 | 7 |
| 64-bit | 1E-308 to 1E308 | 15-16 |

Floating-point representation also has some disadvantages. For example, floating-point numbers take more memory and longer processing time than integers. More significantly, however, their use introduces a source of error because the mantissa holds only a finite number of significant digits. Thus, round-off error is introduced.

It should be noted, however, that although round-off errors can be important in contexts such as testing convergence, the number of significant digits carried on most computers allows most engineering computations to be performed with more than acceptable precision. Excel and Mathcad routinely use double precision (64-bit) to represent numerical quantities. The developers of these packages decided that mitigating round-off errors would take precedence over any loss of speed incurred by using extended precision. Others, like MATLAB, allow you to use extended precision if you desire.

Significant digits can be lost in floating-point calculations when very large and very small numbers are added, because the low-order digits of the smaller number are chopped off. Losses also occur during the subtraction of nearly equal numbers.
This type of loss is among the greatest sources of round-off error in numerical methods. Even though an individual round-off error may be small, the cumulative effect over the course of a large computation can be significant. Sometimes the losses described above can be circumvented by using a transformation. However, the only general remedy is to employ extended precision.
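The two kinds of loss, and the two remedies, can be sketched in Python, whose `float` type is a 64-bit double. This is a minimal illustration under stated assumptions: the function sqrt(x + 1) - sqrt(x), the sample values, and the variable names are chosen for the example and do not come from the original notes. Python's standard `decimal` module stands in for "extended precision" here.

```python
import math
from decimal import Decimal, getcontext

# 1) Adding a very small number to a very large one.
#    A double carries about 15-16 significant digits, so the 1.0 is chopped.
big = 1.0e17
small = 1.0
absorbed = (big + small) - big  # exact arithmetic would give 1.0

# 2) Subtracting nearly equal numbers: sqrt(x + 1) - sqrt(x) for large x.
x = 1.0e12
naive = math.sqrt(x + 1.0) - math.sqrt(x)

# Remedy A (transformation): multiply and divide by the conjugate, giving
# the algebraically equivalent form 1 / (sqrt(x + 1) + sqrt(x)),
# which involves no subtraction of nearly equal values.
transformed = 1.0 / (math.sqrt(x + 1.0) + math.sqrt(x))

# Remedy B (extended precision): repeat the naive subtraction with
# 50 significant digits and use the result as a reference value.
getcontext().prec = 50
reference = Decimal(x + 1.0).sqrt() - Decimal(x).sqrt()


def relative_error(value):
    """Relative error of a double-precision result against the reference."""
    return float(abs((Decimal(value) - reference) / reference))


naive_error = relative_error(naive)
transformed_error = relative_error(transformed)

print(f"(big + small) - big      = {absorbed}")
print(f"naive relative error     = {naive_error:.2e}")
print(f"transformed rel. error   = {transformed_error:.2e}")
```

The transformation works only because this particular expression has a convenient algebraic rewrite. The extended-precision reference needs no such insight, which is why it is the more general remedy, at the cost of slower arithmetic.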