

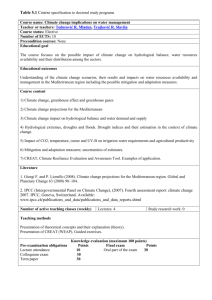

NATIONAL HYDROLOGICAL MODEL TESTING, PART I: NEED AND TEST PROCEDURES

Booker DJ,¹ McMillan HK¹

¹ NIWA

Aims

Hydrological models that can simulate nationwide daily flow time-series using spatially consistent methodologies and input data are a valuable tool for supporting the development of national policies for water management and national environmental reporting. Such models provide nationwide time-series from which flood risk, drought potential, water availability for irrigation or power generation, and the effects of changes in climate on hydrology can be assessed. However, when models are used for these purposes, it is important that both spatial and temporal aspects of uncertainty in the simulated time-series are quantified. Previous testing of national hydrological models has concentrated on quantifying the ability to model between-site patterns in time-averaged indices (e.g., mean flow, MALF).

The aim of this work is to present a comprehensive and consistent suite of test procedures that could be applied to any nationwide hydrological model which calculates daily flow time-series. The first objective of these procedures was to quantify performance when simulating patterns in: a) between-site differences in time-averaged indices; b) between-site differences in daily time-series; and c) between-year differences in annual time-series. The second objective was to identify performance across hydrological signatures represented by various parts of the hydrograph. The third objective was to identify spatial and temporal patterns in performance. The fourth objective was to provide a consistent basis for comparison of performance between different models.

Methods

We collated daily flow time-series observed at 486 sites draining reasonably natural catchments (free of major dams or diversions). These sites were distributed throughout New Zealand and represented a range of catchment sizes and hydrological conditions.
Although each time-series was at least 5 years in length, not all time-series covered the same time period. Years with more than 30 days of missing data were removed from the dataset. The location of each site on the River Environment Classification national river network (Snelder and Biggs, 2002) was identified. This allowed extraction of data describing site and catchment characteristics such as topographic setting, geology and climate.

We then developed a suite of test procedures which, when applied together and over many sites, quantify model performance through time and space. The test procedures allow testing of hypotheses that relate model performance to model parameterisation, model structure, model boundary conditions or geographical setting. They also provide a consistent framework for comparing different modelled time-series. Such comparisons are often required after changes have been made to model structure, parameterisation procedures or input data. Alternatively, raw modelled values may be compared with corrected values obtained by applying statistical post-processing procedures.

Results

A suite of test procedures was designed in which sets of observed and modelled values were compared (Table 1). These included: 1) mean daily values from the entire observed period for comparisons between days within sites; 2) mean daily values from each year of the observed period for comparisons between days within years within sites; 3) various indices calculated over the entire observed period (e.g., mean annual low flow) for comparison between sites; and 4) various indices calculated for each year of the observed period (e.g., annual low flows) for comparisons between years within sites.

For each set of observed and modelled values, we applied three performance metrics, each designed to quantify a different aspect of model performance: Nash-Sutcliffe efficiency (NSE2); percent bias (pbias); and coefficient of determination (r²). See Moriasi et al. (2007) and references therein for full details of these performance evaluation metrics.

NSE is a dimensionless metric that determines the relative magnitude of the residual variance (“noise”) compared to the observed data variance (“information”). NSE is commonly used to evaluate hydrological model performance; we used a scaled version, NSE2, that takes values between -1 (worst) and +1 (perfect model), with 0 representing a model that is no better than a constant prediction at the mean flow value.

Percent bias evaluates the average tendency of the simulated data to be larger (negative pbias) or smaller (positive pbias) than their observed counterparts. For mean flow values, percent bias allows us to test whether the water balance of the catchment is correctly represented.

Coefficient of determination evaluates the correlation between observed and modelled values. This metric allows us to test whether the model correctly distinguishes locations or years with low index values from those with high index values, even if there is systematic bias in the values.

These metrics were applied to each set of observed and modelled values in their untransformed raw units, and again after applying either a log or a square root transformation in order to better approximate normal distributions.

Table 1. Hydrological indices used to test model performance.

| Index type | Index | Description | Calculation |
|---|---|---|---|
| Daily flow series | DAILY | Daily flow series | Model simulation of entire daily flow series |
| Annual flow descriptor | MEAN | Mean annual flow | Mean flow in each hydrological year |
| Annual flow descriptor | MAX | Annual flood | Maximum daily flow in each hydrological year |
| Annual flow descriptor | MIN | Annual low flow | Minimum daily flow in each hydrological year |
| Annual flow descriptor | QFEB | Proportion of flow in February | Mean flow in February as a proportion of mean annual flow, in each hydrological year |
| Multi-year flow descriptor | QFLOOD5 | 5-year flood | Maximum daily flow expected during a period of 5 years, using a Gumbel extreme value approximation |
| Multi-year flow descriptor | QLOW5 | 5-year low flow | Minimum daily flow expected during a period of 5 years, using a normal distribution |
| Multi-year flow descriptor | QVAR | Interannual variation | Interannual variation in mean flow |
| Multi-year flow descriptor | QBAR | All-time mean flow | Mean flow over entire series |
| Multi-year flow descriptor | MALF | Mean annual low flow | Mean of the annual minimum flows |
| Multi-year flow descriptor | MAF | Mean annual flood | Mean of the annual maximum flows |

Conclusion

We developed a suite of test procedures that could be applied to any nationwide hydrological model which calculates daily flow time-series. The procedures are comprehensive because they cover a broad range of hydrological signatures across time and space. The procedures are also consistent because they have been defined in advance of their application. The suite of test procedures has been applied to the National TopNet Model. See McMillan et al. (2015) for further details of this example application.

References

Booker, D.J. & Woods, R.A. (2014) Comparing and combining physically-based and empirically-based approaches for estimating the hydrology of ungauged catchments. Journal of Hydrology. 10.1016/j.jhydrol.2013.11.007.

Snelder, T.H. & Biggs, B.J.F. (2002) Multi-scale river environment classification for water resources management. Journal of the American Water Resources Association 38, 1225–1240.

Moriasi, D.N., Arnold, J.G., Van Liew, M.W., Bingner, R.L., Harmel, R.D. & Veith, T.L. (2007) Model evaluation guidelines for systematic quantification of accuracy in watershed simulations. Transactions of the American Society of Agricultural and Biological Engineers 50, 885–900.

McMillan, H.K., Booker, D.J., Cattoen-Gilbert, C. & Zammit, C. (2015) National hydrological model testing, Part I: results and interpretation. Hydrological Society Conference presentation. Dec 2015.
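
Illustrative sketch of the performance metrics

The three metrics described in the Results can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The function names are hypothetical, and the abstract does not give the formula for the scaled NSE2; the bounded form NSE / (2 - NSE) is assumed here only because it has the stated properties (range -1 to +1, with 0 for a constant prediction at the observed mean). The sign convention for percent bias follows the text: negative values mean the simulated data are larger than the observations.

```python
import math
from typing import List, Sequence


def nse(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Nash-Sutcliffe efficiency: 1 - residual variance / observed variance."""
    mean_obs = sum(obs) / len(obs)
    residual = sum((o - s) ** 2 for o, s in zip(obs, sim))
    spread = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - residual / spread


def nse_bounded(obs: Sequence[float], sim: Sequence[float]) -> float:
    """ASSUMED scaling NSE / (2 - NSE); maps (-inf, 1] onto (-1, 1]."""
    e = nse(obs, sim)
    return e / (2.0 - e)


def pbias(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Percent bias; negative when simulated values exceed observed values."""
    return 100.0 * sum(o - s for o, s in zip(obs, sim)) / sum(obs)


def r_squared(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Squared Pearson correlation between observed and simulated values."""
    mean_obs = sum(obs) / len(obs)
    mean_sim = sum(sim) / len(sim)
    cov = sum((o - mean_obs) * (s - mean_sim) for o, s in zip(obs, sim))
    var_obs = sum((o - mean_obs) ** 2 for o in obs)
    var_sim = sum((s - mean_sim) ** 2 for s in sim)
    return cov ** 2 / (var_obs * var_sim)


def transform(values: Sequence[float], kind: str = "raw") -> List[float]:
    """Apply the raw, log or square root transformation (log needs flows > 0)."""
    if kind == "raw":
        return list(values)
    if kind == "log":
        return [math.log(v) for v in values]
    if kind == "sqrt":
        return [math.sqrt(v) for v in values]
    raise ValueError(f"unknown transformation: {kind}")


# Hypothetical example: a model that overestimates every flow by 10 %.
observed = [1.0, 2.0, 3.0, 4.0, 5.0]
modelled = [1.1 * q for q in observed]

for kind in ("raw", "log", "sqrt"):
    o, s = transform(observed, kind), transform(modelled, kind)
    print(kind, nse_bounded(o, s), pbias(o, s), r_squared(o, s))
```

In the raw-unit case of this made-up example, percent bias is approximately -10 while r² is 1 to within rounding, which illustrates the point made in the Results: correlation can be perfect even when there is systematic bias, so the metrics capture different aspects of performance.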
