Compiler Design - Vel Tech University


UNIT - I

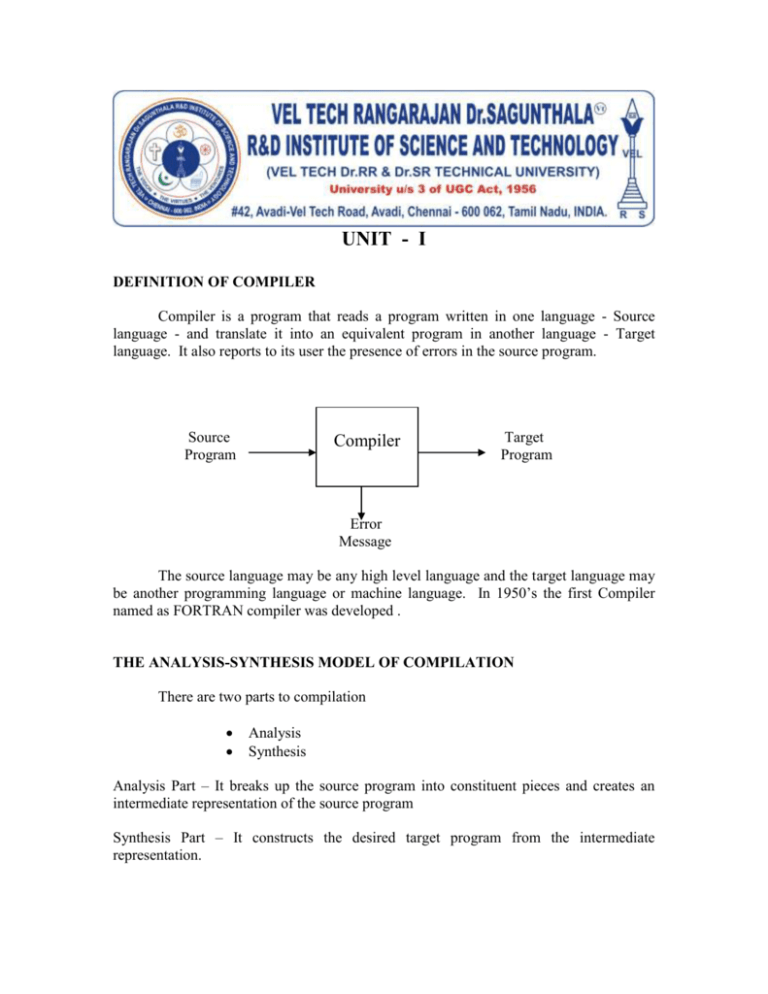

DEFINITION OF COMPILER

A compiler is a program that reads a program written in one language, the source language, and translates it into an equivalent program in another language, the target language. It also reports to its user the presence of errors in the source program.

Source Program --> Compiler --> Target Program
                      |
                      v
               Error Messages

The source language may be any high-level language, and the target language may be another programming language or machine language. One of the first compilers, the FORTRAN compiler, was developed in the 1950s.

THE ANALYSIS-SYNTHESIS MODEL OF COMPILATION

There are two parts to compilation:

1. Analysis
2. Synthesis

Analysis part - It breaks up the source program into constituent pieces and creates an intermediate representation of the source program.

Synthesis part - It constructs the desired target program from the intermediate representation.

During analysis, the operations implied by the source program are determined and

recorded in a hierarchical structure called a tree. A special kind of tree called a Syntax

Tree is used.

Syntax tree - A tree in which each node represents an operation and the children of the node represent the arguments of the operation.

Example

Draw a syntax tree for the assignment statement

position := initial + rate * 60

The tree is written here with the children of each node indented beneath it.

:=
    position
    +
        initial
        *
            rate
            60
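As a rough illustration of the definition above, the syntax tree for this statement can be held in a small record type with one field for the operator and two for the children. The names Node and show are hypothetical and chosen only for this sketch; they do not come from the notes.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Node:
    """Interior node: an operator with a left and a right child."""
    op: str
    left: "Tree"
    right: "Tree"


# A leaf is simply an identifier name or a number.
Tree = Union[Node, str, int]


def show(tree, depth=0):
    """Render the tree with each child indented under its parent."""
    pad = "    " * depth
    if isinstance(tree, Node):
        return "\n".join([pad + tree.op,
                          show(tree.left, depth + 1),
                          show(tree.right, depth + 1)])
    return pad + str(tree)


# position := initial + rate * 60
tree = Node(":=", "position", Node("+", "initial", Node("*", "rate", 60)))
print(show(tree))
```

Because * is nested under +, the multiplication is the argument of the addition, which is exactly what the tree shape is meant to record.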

SOFTWARE TOOLS FOR ANALYSIS

Many software tools that manipulate source programs first perform some kind of analysis. They include:

1. Structure editors

It accepts a sequence of commands as input.

It performs text creation and manipulation like an ordinary text editor.

It produces a hierarchical structure as output.

Its output is similar to the output of the analysis phase of a compiler.

2. Pretty printers

It analyzes the program and prints it so that the structure of the program is clearly visible.

3. Static checkers

It reads and analyzes the program.

It attempts to discover potential bugs without running the program.

4. Interpreters

Instead of producing a target program as a translation, an interpreter performs the operations implied by the source program.

It typically processes one statement at a time.

The following tools are not conventional compilers, but they perform a similar analysis phase:

1. Text formatters

It takes as input a stream of characters, most of which is text to be typeset, along with commands that indicate paragraphs, figures, or mathematical structures such as subscripts and superscripts.

It performs operations similar to those of a text editor.

2. Silicon compilers

Its source language resembles a conventional programming language, but the variables of the language represent logical signals in a circuit, not locations in memory.

The output is a circuit design in an appropriate language.

3. Query interpreters

It translates a predicate into commands.

Using the commands, it searches a database for records satisfying that predicate.

THE CONTEXT OF A COMPILER

In addition to a compiler, several other programs may be needed to produce executable machine code. The structure of a language-processing system is shown below.

Skeletal source program
    |
Preprocessor
    |
Source program
    |
Compiler
    |
Target assembly program
    |
Assembler
    |
Relocatable machine code
    |
Loader / Link editor  <--  Library, relocatable object files
    |
Absolute machine code

The source program may be divided into a number of modules stored in separate files.

The task of collecting the source program is sometimes entrusted to a distinct program, called a preprocessor.

The preprocessor may also expand shorthands, called macros, into source language statements.

The target program created by the compiler needs further processing before it can be run.

The compiler creates assembly code that is translated by an assembler into machine code.

The machine code is linked with library routines by the loader / link editor into the code that actually runs on the machine.

Analysis of the Source Program

The analysis part of the compiler has three phases:

1. Linear Analysis

2. Hierarchical Analysis

3. Semantic Analysis

Linear Analysis

Linear analysis is also called lexical analysis or scanning. This phase groups the characters of the input into tokens.

Example

The tokens for the assignment statement position := initial + rate * 60 are as follows:

1. The identifier position
2. The assignment symbol :=
3. The identifier initial
4. The + sign
5. The identifier rate
6. The * sign
7. The number 60
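The grouping of characters into these seven tokens can be sketched with Python's re module. This is only a minimal illustration: the token names (id, num, assign, plus, times) and the function tokenize are assumptions made for the example, not part of the notes.

```python
import re

# One named group per token class; white space is matched but discarded.
TOKEN_SPEC = [
    ("num",    r"\d+"),
    ("id",     r"[A-Za-z][A-Za-z0-9]*"),
    ("assign", r":="),
    ("plus",   r"\+"),
    ("times",  r"\*"),
    ("ws",     r"\s+"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pat})" for name, pat in TOKEN_SPEC))


def tokenize(text):
    """Return a list of (token, lexeme) pairs for the input text."""
    tokens = []
    pos = 0
    while pos < len(text):
        m = MASTER.match(text, pos)
        if m is None:
            raise SyntaxError(f"unexpected character {text[pos]!r} at {pos}")
        if m.lastgroup != "ws":
            tokens.append((m.lastgroup, m.group()))
        pos = m.end()
    return tokens


for token in tokenize("position := initial + rate * 60"):
    print(token)
```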

Hierarchical Analysis

It is also called parsing or syntax analysis. It groups the tokens into grammatical phrases that are used by the compiler to synthesize output. The grammatical phrases of the source program are represented by a parse tree.

The parse tree for the assignment statement position := initial + rate * 60 is as follows, with the children of each node indented beneath it:

assignment statement
    identifier
        position
    :=
    expression
        expression
            identifier
                initial
        +
        expression
            expression
                identifier
                    rate
            *
            expression
                number
                    60

The hierarchical structure of a program is expressed by recursive rules. The following rules define an expression:

1. Any identifier is an expression.

2. Any number is an expression.

3. If expression1 and expression2 are expressions, then so are

   expression1 + expression2

   expression1 * expression2

   ( expression1 )

Rules 1 and 2 are nonrecursive basis rules, and rule 3 is a recursive rule.

Lexical constructs do not require recursion, but syntactic constructs often do.

Syntax tree – It is a compressed representation of the parse tree in which the operators

appear as the interior nodes, and the operands of an operator are the children of the node

for that operator.

Semantic Analysis

This phase checks the source program for semantic errors. It uses the hierarchical

structure provided by the Syntax analysis phase to identify the operators and operands of

expressions and statements.

An important component of semantic analysis is type checking. For example, when a binary arithmetic operator is applied to an integer and a real, the compiler may need to convert the integer to a real.

PHASES OF A COMPILER

A compiler operates in phases, each of which transforms the source program from one representation to another. The phases of a compiler are shown below.

Source Program
    |
Lexical Analyzer
    |
Syntax Analyzer
    |
Semantic Analyzer
    |
Intermediate Code Generator
    |
Code Optimizer
    |
Code Generator
    |
Target Program

The Symbol-table Manager and the Error Handler interact with all six phases, from the lexical analyzer through the code generator.

Symbol Table Management

A Symbol Table is a data structure containing a record for each identifier with

fields for the attributes of the identifier. The data structure allows us to find the record

for each identifier quickly and to store or retrieve data from that record quickly.
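A hash table is one common way to get the quick lookup described above. The sketch below uses a Python dict; the class name SymbolTable, the methods install and lookup, and the record fields are illustrative assumptions, not an interface given in the notes.

```python
class SymbolTable:
    """Maps each identifier name to a record of its attributes."""

    def __init__(self):
        self._records = {}

    def install(self, name):
        """Return the record for name, creating an empty one on first sight."""
        return self._records.setdefault(name, {"name": name, "type": None})

    def lookup(self, name):
        """Return the record for name, or None if it was never installed."""
        return self._records.get(name)


table = SymbolTable()
for name in ("position", "initial", "rate"):
    table.install(name)

# A later phase can fill in attributes as they become known.
table.lookup("rate")["type"] = "real"
print(table.lookup("rate"))
```

The lexical analyzer would typically call install when it sees an identifier, and later phases would add attributes such as the type to the same record.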

Error Detecting and Reporting

Each phase can encounter errors. After detecting an error, a phase must deal with

that error, so that compilation can proceed to find further errors.

The lexical analysis phase detects errors where the characters remaining in the input do not form any token of the language.

The syntax analysis phase detects errors where the token stream violates the structure rules of the language.

The semantic analysis phase detects errors where the syntactic structure is correct but gives no meaning to the operation involved.

Analysis Phase

The lexical analysis phase reads the characters in the source program and groups them into a stream of tokens. A token may be an identifier, a keyword, a punctuation character, or a multi-character operator like :=.

The character sequence forming a token is called the lexeme for the token.

Certain tokens are augmented by a "lexical value".

The Syntax Analysis phase imposes a hierarchical structure on the token stream,

called a Syntax tree in which an interior node is a record with a field for the operator and

two fields containing pointers to the records for the left and right children.

The Semantic analysis phase performs the type checking operation.

Intermediate Code Generation

We consider an Intermediate code form called “three address code” which is like

the assembly language for a machine. It consists of a sequence of instructions, each of

which has at most three operands. It has several properties as follows,

1. Each three address instruction has at most one operator in addition to the

assignment.

2. The compiler must generate a temporary name to hold the value computed by

each instruction.

3. Some instructions have fewer than three operands.

Code Optimization

This phase improves the intermediate code, so that faster running machine code

will result. A significant fraction of time of the compiler is spent on this phase.

Code Generation

This is the final phase. It generates the target code, which may be either relocatable machine code or assembly code. Memory locations are selected for each of the variables used by the program. Then each intermediate instruction is translated into a sequence of machine instructions that perform the same task.

Translation of a statement

position := initial + rate * 60

Lexical Analyzer

id1 := id2 + id3 * 60

Syntax Analyzer

:=
    id1
    +
        id2
        *
            id3
            60

Semantic Analyzer

:=
    id1
    +
        id2
        *
            id3
            inttoreal(60)

Intermediate Code Generator

temp1 := inttoreal(60)
temp2 := id3 * temp1
temp3 := id2 + temp2
id1 := temp3

Code Optimizer

temp1 := id3 * 60.0
id1 := id2 + temp1

Code Generator

MOVF id3, R2
MULF #60.0, R2
MOVF id2, R1
ADDF R2, R1
MOVF R1, id1
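The intermediate code generation step in this example can be sketched as a post-order walk of the syntax tree that invents one temporary per operation. The tuple encoding of the tree and the function names gen and translate are assumptions made for this illustration only.

```python
import itertools


def gen(tree, code, temps):
    """Append instructions computing tree to code; return the name holding its value."""
    if not isinstance(tree, tuple):
        return str(tree)                      # leaf: identifier or constant
    op, *args = tree
    names = [gen(arg, code, temps) for arg in args]
    temp = f"temp{next(temps)}"               # fresh temporary for this operation
    if len(names) == 1:
        code.append(f"{temp} := {op}({names[0]})")
    else:
        code.append(f"{temp} := {names[0]} {op} {names[1]}")
    return temp


def translate(target, tree):
    """Return three-address code for the assignment target := tree."""
    code = []
    result = gen(tree, code, itertools.count(1))
    code.append(f"{target} := {result}")
    return code


# id1 := id2 + id3 * inttoreal(60), as produced by the semantic analyzer
tree = ("+", "id2", ("*", "id3", ("inttoreal", 60)))
for line in translate("id1", tree):
    print(line)
```

Each instruction has at most one operator besides the assignment, which matches the properties of three-address code listed earlier. No optimization is attempted here.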

COUSINS OF THE COMPILER

The input to a compiler may be produced by one or more preprocessors, and further processing of the compiler's output may be needed before runnable machine code is obtained.

Preprocessor

It produces input to the compiler. It may perform the following functions:

1. Macro processing - A preprocessor may allow a user to define macros that are shorthands for longer constructs.

2. File inclusion - A preprocessor may include header files into the program text.

3. "Rational" preprocessors - These augment older languages with more modern flow-of-control and data-structuring facilities.

4. Language extension - These add capabilities to the language by what amounts to built-in macros.

Assemblers

Some compilers produce assembly code, which is passed to an assembler for further processing. Assembly code is a mnemonic version of machine code. The assembler produces relocatable machine code, which is passed to the loader / link editor.

Loader/Link Editors

A loader is a program that usually performs the two functions of loading and link editing. Loading consists of taking relocatable machine code, altering the relocatable addresses, and placing the altered instructions and data in memory at the proper locations. The link editor allows us to make a single program from several files of relocatable machine code.

Grouping of Phases

In an implementation of Compiler, activities from more than one phase are often

grouped together.

Front and Back Ends

The different phases are collected into a front end and a back end. The front end consists of lexical analysis, syntax analysis, semantic analysis, and intermediate code generation, together with the symbol-table management and error handling that accompany these phases. The back end consists of code optimization and code generation, again together with the necessary symbol-table management and error handling.

Passes

Several phases of compilation are usually implemented in a single pass consisting of reading an input file and writing an output file. Because of this grouping, one representation of the source program can be converted directly into another.

COMPILER CONSTRUCTION TOOLS

The compiler writer, like any programmer, can use software tools such as debuggers, version managers, and profilers. Some more specialized tools are:

Parser generators

Its input is based on a context-free grammar, and its output is a syntax analyzer.

With such a generator, the syntax analysis phase is easy to implement.

Scanner generators

It generates a lexical analyzer from a specification based on regular expressions.

Syntax-directed translation engines

It produces collections of routines that walk the parse tree.

Automatic code generators

It translates the intermediate language into machine language based on a collection of rules.

The rules include enough detail to handle the different possible access methods for data.

A template-matching technique is used: templates that represent sequences of machine instructions replace the intermediate code statements.

Data-flow engines

It supports good code optimization.

It involves data-flow analysis, the gathering of information about how values are transmitted from one part of the program to each other part.

SYNTAX

The syntax of a programming language describes what its programs look like, i.e., the form of the language.

SEMANTICS

The semantics of a programming language describes what its programs mean.

CONTEXT FREE GRAMMAR

A grammar naturally describes the hierarchical structure of many programming language constructs. It has four components:

1. A set of tokens, known as terminal symbols

2. A set of non terminals.

3. A set of productions where each production consists of a non terminal called

left side of the production, an arrow and a sequence of tokens and/or non

terminals called the right side of the production.

4. A designation of one of the non terminals as the start symbol.

PARSE TREE

A parse tree pictorially shows how the start symbol of a grammar derives a string in the language. If nonterminal A has a production A -> XYZ, then a parse tree may have an interior node labeled A with three children labeled X, Y, and Z from left to right:

      A
    / | \
   X  Y  Z

Properties of a Parse Tree

1. The root is labeled by the start symbol.

2. Each leaf is labeled by a token or by ε (the empty string).

3. Each interior node is labeled by a non terminal

Definition of Yield

The leaves of a parse tree read from left to right form the yield of the tree, which is the string generated or derived from the nonterminal at the root of the parse tree.

Parsing

The process of finding a parse tree for a given string of tokens is called parsing that string.

Ambiguity

A grammar can have more than one parse tree generating a given string of tokens. Such a grammar is said to be ambiguous.

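Eg. Two parse trees for 9 - 5 + 2, written with the children of each node indented beneath it.

First tree, corresponding to (9 - 5) + 2:

string
    string
        string
            9
        -
        string
            5
    +
    string
        2

Second tree, corresponding to 9 - (5 + 2):

string
    string
        9
    -
    string
        string
            5
        +
        string
            2

To resolve this ambiguity, two conventions are used:

1. Associativity of operators

2. Precedence of operators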

Associativity of operators

By convention, 9+5+2 is equivalent to (9+5)+2 and 9-5-2 is equivalent to (9-5)-2. When an operand like 5 has operators to its left and right, conventions are needed for deciding which operator takes that operand. We say that the operator + associates to the left because an operand with plus signs on both sides of it is taken by the operator to its left. In most programming languages the four arithmetic operators, addition, subtraction, multiplication, and division, are left associative.

Some common operators such as exponentiation are right associative. As another example, the assignment operator = in C is right associative; in C, the expression a = b = c is treated in the same way as the expression a = (b = c).

Precedence of operators

Consider the expression 9+5*2. There are two possible interpretations of this expression: (9+5)*2 or 9+(5*2). The associativity of + and * does not resolve this ambiguity. For this reason, we need to know the relative precedence of operators when more than one kind of operator is present.

We say that * has higher precedence than + if * takes its operands before + does. In ordinary arithmetic, multiplication and division have higher precedence than addition and subtraction. Therefore, 5 is taken by * in both 9+5*2 and 9*5+2; i.e., the expressions are equivalent to 9+(5*2) and (9*5)+2, respectively.
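To see both conventions at work, the sketch below inserts the parentheses that associativity and precedence imply. It uses precedence climbing, a technique chosen for this illustration and not described in the notes; the table OPS, the ^ symbol for exponentiation, and the function names are likewise assumptions.

```python
import re

# operator: (precedence, associativity); a higher number binds tighter
OPS = {"+": (1, "L"), "-": (1, "L"), "*": (2, "L"), "/": (2, "L"), "^": (3, "R")}


def parse(tokens, min_prec=1):
    """Consume tokens (a list, modified in place) and return a parenthesized string."""
    left = tokens.pop(0)
    while tokens and tokens[0] in OPS and OPS[tokens[0]][0] >= min_prec:
        op = tokens.pop(0)
        prec, assoc = OPS[op]
        # A left-associative operator refuses an equal-precedence operator on
        # its right; a right-associative operator accepts one.
        next_min = prec + 1 if assoc == "L" else prec
        right = parse(tokens, next_min)
        left = f"({left}{op}{right})"
    return left


def group(expr):
    """Show how expr is grouped under the precedence and associativity in OPS."""
    return parse(re.findall(r"\d+|[-+*/^]", expr))


for expr in ("9-5-2", "9+5*2", "9*5+2", "2^3^2"):
    print(expr, "=>", group(expr))
```

The results can be compared with the groupings stated in the text: the operand 5 goes to the operator on its left in 9-5-2, and to * in both 9+5*2 and 9*5+2.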

Syntax Directed Definitions

A syntax-directed definition uses a context-free grammar to specify the syntactic structure of the input. With each grammar symbol, it associates a set of attributes, and with each production, a set of semantic rules for computing values of the attributes associated with the symbols appearing in that production.

ROLE OF LEXICAL ANALYZER (LA)

The lexical analyzer (LA) is the first phase of a compiler. It reads the input characters and produces as output a sequence of tokens that the parser uses for syntax analysis. The interaction between the lexical analyzer and the parser is shown below.

Source program --> Lexical analyzer --- token ----------> Parser
                   Lexical analyzer <-- get next token -- Parser
                   Symbol table (used by both)

Issues in Lexical Analysis

There are several reasons for separating the analysis phase of compiling into

lexical analysis and parsing.

1. Simpler design.

2. Compiler efficiency is improved.

3. Compiler portability is enhanced.

Tokens, Patterns, Lexemes

Lexeme - A sequence of characters in the source program that forms a token. A lexeme may have an associated lexical value.

Token - A category of lexemes, such as id or num, that the parser treats as a single terminal symbol.

Pattern - A rule describing the set of strings that can form the lexemes of a particular token.

Example :

TOKEN      SAMPLE LEXEMES            INFORMAL DESCRIPTION OF PATTERN
const      const                     const
if         if                        if
relation   <, <=, >, >=, =, <>       < or <= or > or >= or = or <>
id         pi, count, D2             letter followed by letters and digits
num        3.1416, 0, 6.02E23        any numeric constant
literal    "core dumped"             any characters between " and " except "

Attributes for tokens

When more than one lexeme matches a pattern, the lexical analyzer must provide the subsequent phases of the compiler with additional information about the particular lexeme that matched. In practice, a token such as id or num usually has a single attribute: a pointer to the symbol-table entry in which the information about the token is kept.

Example : The tokens and associated attribute-values for the Fortran statement

E = M * C ** 2 are written as follows

< id, pointer to symbol-table entry for E >

< assign_op, >

< id, pointer to symbol-table entry for M >

< mult_op, >

< id, pointer to symbol-table entry for C >

< exp_op, >

< num, integer value 2 >

Lexical Errors

Suppose the lexical analyzer is unable to proceed because none of the patterns for tokens matches a prefix of the remaining input. In this case it uses the "panic mode" error recovery strategy: it deletes successive characters from the remaining input until the lexical analyzer can find a well-formed token. Some other error recovery strategies are as follows.

1. Deleting an extraneous character
2. Inserting a missing character
3. Replacing an incorrect character by a correct character
4. Transposing two adjacent characters

INPUT BUFFERING

To speed up the operations of lexical analyzer, we use two buffering techniques.

1. Two buffer input scheme

2. Using Sentinels

Buffer Pairs

This method is used when the lexical analyzer needs to look ahead several

characters beyond the lexeme for a pattern before a match can be announced.

In this method, we divide an input buffer into two N-character halves, where N is the number of characters on one disk block, e.g., 1024 or 4096.

[Figure: an input buffer in two halves holding the characters E = M * | C * * 2 eof, with the lexeme_beginning and forward pointers marked.]

We read N input characters into each half of the buffer with one system read

command, rather than invoking a read command for each input character. If fewer than

N characters remain in the input, then a special character eof is read into the buffer after

the input character. This character is different from any input character.

Two pointers into the input buffer are maintained: forward and lexeme_beginning.

The string of characters between the two pointers is the current lexeme.

Initially, both pointers point to the first character of the next lexeme to be found.

The forward pointer scans ahead until a match for a pattern is found. Once the token is found, the forward pointer is set to the character at the right end of its lexeme.

Code to advance the forward pointer is as follows:

if forward at end of first half then begin
    reload second half;
    forward := forward + 1
end
else if forward at end of second half then begin
    reload first half;
    move forward to beginning of first half
end
else forward := forward + 1;

Sentinels

The previous method needs two tests for each advance of the forward pointer. With sentinels, this can be reduced to a single test in the common case. Each buffer half holds a sentinel character at its end. The sentinel is a special character that cannot be part of the source program. The same buffer arrangement with sentinels is as follows.

[Figure: the same buffer with an eof sentinel closing the first half: E = M * eof | C * * 2 eof, with the lexeme_beginning and forward pointers marked.]

Lookahead code with sentinels

forward := forward + 1;
if the character at forward = eof then begin
    if forward at end of first half then begin
        reload second half;
        forward := forward + 1
    end
    else if forward at end of second half then begin
        reload first half;
        move forward to beginning of first half
    end
    else /* eof within a buffer half marks the end of the input */
        terminate lexical analysis
end
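The sentinel scheme above can be sketched in Python as follows. This is a simplified model under stated assumptions: the class name TwoBuffer, the use of "\0" as the eof sentinel, and the tiny half size n = 4 are all chosen for illustration, and the lexeme_beginning pointer is left out.

```python
import io

EOF = "\0"  # sentinel; assumed never to occur in the source text


class TwoBuffer:
    def __init__(self, stream, n=4):
        self.stream, self.n = stream, n
        # Layout: [first half (n chars), EOF, second half (n chars), EOF]
        self.buf = [EOF] * (2 * n + 2)
        self._load(0)
        self.forward = -1  # so the first advance lands on index 0

    def _load(self, start):
        """Read up to n characters into the half beginning at start."""
        chunk = self.stream.read(self.n)
        for i in range(self.n):
            self.buf[start + i] = chunk[i] if i < len(chunk) else EOF

    def advance(self):
        """Move forward one character; return it, or None at end of input."""
        self.forward += 1
        ch = self.buf[self.forward]
        if ch == EOF:                               # the single test per character
            if self.forward == self.n:              # sentinel ending the first half
                self._load(self.n + 1)
                self.forward += 1
            elif self.forward == 2 * self.n + 1:    # sentinel ending the second half
                self._load(0)
                self.forward = 0
            else:
                return None                         # eof inside a half: input ended
            ch = self.buf[self.forward]
            if ch == EOF:
                return None
        return ch


scanner = TwoBuffer(io.StringIO("E=M*C**2"), n=4)
print("".join(iter(scanner.advance, None)))
```

For ordinary characters only the comparison with EOF is executed; the tests for which half has ended run only when a sentinel is actually hit.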

SPECIFICATION OF TOKENS

Strings and Languages

An alphabet (or character class) is any finite set of symbols. Ex. the binary alphabet is {0,1}.

A string over an alphabet is a finite sequence of symbols drawn from that alphabet.

|s| is the number of occurrences of symbols in s, called the length of the string s.

The string of length zero is called the empty string, denoted by ε.

Any set of strings over some fixed alphabet is called a language.

A string obtained by removing zero or more trailing symbols of string s is called a prefix of s.

A string formed by deleting zero or more of the leading symbols of s is called a suffix of s.

A string obtained by deleting a prefix and a suffix from s is called a substring of s. Every prefix and every suffix of s, and s itself, is also a substring of s.

Any nonempty string x that is a prefix, suffix, or substring of s such that s ≠ x is called a proper prefix, suffix, or substring of s.

Any string formed by deleting zero or more not necessarily contiguous symbols from s is called a subsequence of s. Ex. baaa is a subsequence of banana.
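The definitions above translate almost directly into Python slices. The function names below are hypothetical helpers written for this illustration.

```python
def prefixes(s):
    """All strings obtained by removing zero or more trailing symbols."""
    return {s[:i] for i in range(len(s) + 1)}


def suffixes(s):
    """All strings obtained by removing zero or more leading symbols."""
    return {s[i:] for i in range(len(s) + 1)}


def substrings(s):
    """All strings obtained by deleting a prefix and a suffix."""
    return {s[i:j] for i in range(len(s) + 1) for j in range(i, len(s) + 1)}


def is_subsequence(x, s):
    """True if x can be formed by deleting symbols of s, contiguous or not."""
    remaining = iter(s)
    return all(symbol in remaining for symbol in x)


print(sorted(prefixes("banana"), key=len))
print(is_subsequence("baaa", "banana"))
```

Note that the empty string and s itself appear in all three sets, as the definitions allow deleting zero symbols.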

Operations on Language

There are several important operations that can be applied to languages. Some of

the important operations are as follows.

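1. Union of L and M is denoted as

   $L \cup M = \{\, s \mid s \text{ is in } L \text{ or } s \text{ is in } M \,\}$

2. Concatenation of L and M is denoted as

   $LM = \{\, st \mid s \text{ is in } L \text{ and } t \text{ is in } M \,\}$

3. Kleene closure of L is denoted as

   $L^{*} = \bigcup_{i=0}^{\infty} L^{i}$

4. Positive closure of L is denoted as

   $L^{+} = \bigcup_{i=1}^{\infty} L^{i}$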
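For finite languages these operations can be tried out with Python sets. Since the closures are infinite unions, the sketch below truncates them at a chosen power; the function names and the max_power parameter are assumptions introduced for the example.

```python
def concat(L, M):
    """LM: every string of L followed by every string of M."""
    return {s + t for s in L for t in M}


def power(L, i):
    """L^i, with L^0 = {empty string}."""
    result = {""}
    for _ in range(i):
        result = concat(result, L)
    return result


def closure(L, max_power, positive=False):
    """Union of L^i for i from 0 (Kleene) or 1 (positive) up to max_power."""
    start = 1 if positive else 0
    out = set()
    for i in range(start, max_power + 1):
        out |= power(L, i)
    return out


L = {"a", "b"}
M = {"0", "1"}
print(sorted(L | M))                      # union
print(sorted(concat(L, M)))               # concatenation
print(sorted(closure(L, 2)))              # Kleene closure, truncated at L^2
print(sorted(closure(L, 2, positive=True)))
```

The only difference between the two closures is whether the union starts at L^0, so the empty string belongs to the Kleene closure but belongs to the positive closure only if it is already in L.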

Regular Expressions

A language denoted by a regular expression is said to be a regular set.

Regular Definitions

We give names to regular expressions and define regular expressions using these names as if they were symbols. If Σ is an alphabet of basic symbols, then a regular definition is a sequence of definitions of the form

d1 -> r1
d2 -> r2
.....
dn -> rn

Notational Shorthands

One or more instances : The unary postfix operator + means "one or more instances of". The operator + has the same precedence and associativity as the operator *. The two algebraic identities r* = r+ | ε and r+ = rr* relate the Kleene closure and positive closure operators.

Zero or one instance : The unary postfix operator ? means "zero or one instance of". The notation ( r )? is a shorthand for r | ε.

Character classes : The notation [abc], where a, b, c are alphabet symbols, denotes the regular expression a|b|c. Ex., the regular expression for identifiers can be described using this notation as

[A-Za-z][A-Za-z0-9]*

Non Regular Sets

Some languages cannot be described by any regular expression; these are called non-regular sets. For example, repeating strings cannot be described by regular expressions:

{ wcw | w is a string of a's and b's }

UNIT - II

RECOGNITION MACHINE

RECOGNITION OF TOKENS

This topic explains how the tokens are recognized. For example, consider the grammar

stmt -> if expr then stmt
      | if expr then stmt else stmt
      | ε

expr -> term relop term
      | term

term -> id
      | num

where the terminals if, then, else, relop, id and num generate sets of strings given by the following regular definitions:

if    -> if
then  -> then
else  -> else
relop -> < | <= | = | <> | > | >=
id    -> letter ( letter | digit )*
num   -> digit+ ( . digit+ )? ( E ( + | - )? digit+ )?
ws    -> delim+
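The regular definitions for id and num can be tried out with Python's re module, which supports the same +, ? and character-class shorthands. The constant names ID and NUM and the expansion of letter and digit into [A-Za-z] and \d are assumptions made for this sketch.

```python
import re

# id  -> letter ( letter | digit )*
ID = re.compile(r"[A-Za-z][A-Za-z0-9]*")

# num -> digit+ ( . digit+ )? ( E ( + | - )? digit+ )?
NUM = re.compile(r"\d+(\.\d+)?(E[+-]?\d+)?")

for lexeme in ("pi", "D2", "2D", "3.1416", "0", "6.02E23", "6.", "E5"):
    kind = ("id" if ID.fullmatch(lexeme)
            else "num" if NUM.fullmatch(lexeme)
            else "no match")
    print(f"{lexeme!r}: {kind}")
```

fullmatch is used so that the whole lexeme has to fit the pattern; a scanner would instead match at the current input position and take the longest prefix.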

Regular expression patterns for tokens are shown in the following table.

Regular Expression    Token    Attribute value
ws                    -        -
if                    if       -
then                  then     -
else                  else     -
id                    id       pointer to table entry
num                   num      pointer to table entry
<                     relop    LT
<=                    relop    LE
=                     relop    EQ
<>                    relop    NE
>                     relop    GT
>=                    relop    GE

Transition Diagrams

A transition diagram is a stylized flowchart that depicts the actions that take place when a lexical analyzer is called by the parser to get the next token.

Positions in a transition diagram are drawn as circles and are called states.

The states are connected by arrows, called edges.

Edges leaving state s have labels indicating the input characters that can next appear after the transition diagram has reached state s.

The label other refers to any character that is not indicated by any of the other edges leaving s.

One state of a transition diagram is labeled as the start state.

[Figures: transition diagrams for relational operators (states 0-8), for identifiers and keywords (states 9-11, returning (gettoken(), install_id())), for unsigned numbers (states 12-19, 20-24 and 25-27), and for white space (states 28-30).]

Implementing a Transition Diagram

A sequence of transition diagrams can be converted into a program that looks for the tokens specified by the diagrams.

Program size is directly proportional to the number of states and edges in the diagrams.

Each state gets a segment of code.

If there are edges leaving a state, then its code reads a character and selects an edge to follow, if possible.

A function nextchar( ) is used to read the next character from the input buffer, advance the forward pointer, and return the character read.

If no edge can be followed in the current transition diagram, the fail( ) routine is called to move on to the next diagram.

A global variable lexical_value is assigned the pointer returned by the functions install_id( ) and install_num( ).

The function nexttoken( ) is used to return the next token found by the lexical analyzer.

Two variables, state and start, hold the present state and the starting state of the current transition diagram.

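Code for finding the next start state

int state = 0, start = 0;
int lexical_value;        /* attribute value of the token just found */

/* forward, token_beginning and recover( ) are assumed to be
   declared elsewhere in the lexical analyzer */
int fail( )
{
    forward = token_beginning;       /* retract to the start of the lexeme */
    switch (start)
    {
        case 0:  start = 9;  break;  /* relop failed, try identifiers */
        case 9:  start = 12; break;  /* then the three number diagrams */
        case 12: start = 20; break;
        case 20: start = 25; break;
        default: recover( ); break;  /* no diagram matched */
    }
    return start;
}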
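To show how a single diagram becomes code, here is a sketch of the relational-operator diagram written as a Python function. The function name relop, its (attribute, next position) return value, and the use of None to signal failure are assumptions for the example; the notes' own routines (nextchar, fail) are not reproduced.

```python
def relop(text, pos=0):
    """Return (attribute, next_pos) for a relational operator at text[pos:], or None."""
    def peek(i):
        return text[i] if i < len(text) else ""

    c = peek(pos)
    if c == "<":
        nxt = peek(pos + 1)
        if nxt == "=":
            return ("LE", pos + 2)
        if nxt == ">":
            return ("NE", pos + 2)
        return ("LT", pos + 1)    # edge "other": the lookahead is not consumed
    if c == "=":
        return ("EQ", pos + 1)
    if c == ">":
        if peek(pos + 1) == "=":
            return ("GE", pos + 2)
        return ("GT", pos + 1)    # edge "other" again
    return None                   # diagram fails; a scanner would try the next one


for source in ("<=", "<>", "<5", "=", ">=", ">x", "a"):
    print(repr(source), relop(source))
```

Returning a position one past the operator, without the lookahead character, plays the role of retracting the forward pointer in the states marked with *.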

Lexical Errors & Error Recovery Strategies

Suppose the lexical analyzer is unable to proceed because none of the patterns for tokens matches a prefix of the remaining input. In this case it uses the "panic mode" error recovery strategy: it deletes successive characters from the remaining input until the lexical analyzer can find a well-formed token. Some other error recovery strategies are as follows.

1. Deleting an extraneous character
2. Inserting a missing character
3. Replacing an incorrect character by a correct character
4. Transposing two adjacent characters

A LANGUAGE FOR SPECIFYING LEXICAL ANALYZERS

Lex is a tool for constructing lexical analyzers. The tool is also called the Lex compiler, and its input is written in the Lex language.

First, a specification of the lexical analyzer, lex.l, is written in the Lex language and run through the Lex compiler, which produces a C program lex.yy.c. This program contains a tabular representation of a transition diagram.

Then lex.yy.c is given to the C compiler as input, which produces a.out as output. This is the actual lexical analyzer.

Finally, a.out converts a stream of input into a sequence of tokens, as follows.

lex.l         --> Lex compiler --> lex.yy.c
lex.yy.c      --> C compiler   --> a.out
input stream  --> a.out        --> sequence of tokens

Lex specification

%{
declarations
%}
regular definitions
%%
translation rules
%%
auxiliary procedures

declarations: include and manifest constants (identifier declared to represent a

constant).

regular definitions: definition of named syntactic constructs such as letter using

regular expressions.

translation rules: pattern / action pairs

auxiliary procedures: arbitrary C functions copied directly into the generated

lexical analyzer.

Lex Conventions

The program generated by Lex matches the longest possible prefix of the input. For example, if <= appears in the input, then the entire string <= is matched rather than only the < (which is also a legal pattern).

Lex provides the following variables:

o yylval: attribute value of the token, passed on to the parser (for example, a pointer to a symbol-table entry)

o yytext: pointer to the lexeme (the array of characters that matched the pattern)

o yyleng: length of the lexeme (number of characters in yytext)

If two rules match a prefix of the same and greatest length, then the first rule appearing in the translation rules section takes precedence. For example, the input if is matched by both the if rule and the {id} rule. Since the if rule comes first, that is the match that is used.
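The two disambiguation conventions can be imitated in a few lines of Python. This only mimics the behaviour described above and is not how Lex itself is implemented; the rule list RULES and the function next_token are hypothetical.

```python
import re

# Rules in priority order, as they would appear in the translation rules section.
RULES = [
    ("if",    r"if"),
    ("id",    r"[A-Za-z][A-Za-z0-9]*"),
    ("relop", r"<=|<>|<|>=|>|="),
]


def next_token(text, pos=0):
    """Return (token, lexeme, end) using longest match, then first-listed rule."""
    best = None
    for name, pattern in RULES:
        m = re.compile(pattern).match(text, pos)
        # A strictly longer match wins; on a tie the earlier rule is kept.
        if m and (best is None or m.end() > best[2]):
            best = (name, m.group(), m.end())
    return best


print(next_token("if"))      # tie between if and id: the if rule is listed first
print(next_token("iffy"))    # id matches a longer prefix, so id wins
print(next_token("<= 3"))    # the whole <= is taken, not just <
```

Python's alternation picks the first alternative that matches, not the longest one, which is why the longer operators are written first inside the relop pattern.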

The Lookahead Operator

If r1 and r2 are patterns, then r1/r2 means match r1 only if it is followed by r2.

For example,

DO/({letter}|{digit})*=({letter}|{digit})*,

recognizes the keyword DO in the string DO5I=1,25

Finite Automata

Recognizer - A recognizer for a language is a program that takes as input a string x and answers "yes" if x is a sentence of the language and "no" otherwise.

Finite Automaton - A regular expression is compiled into a recognizer by constructing a generalized transition diagram called a finite automaton.

The two types of finite automata are deterministic (DFA) and nondeterministic (NFA).

Difference between NFA & DFA

   NFA                                         DFA
1. Slower recognizer for a regular             Faster recognizer for a regular
   expression                                  expression
2. Size is small                               Size is bigger than the NFA
3. It may have states with ε-transitions       No state has an ε-transition
4. For the same input symbol, more than        For each state and input symbol, there
   one transition may leave a single state     is at most one edge labeled with it

Nondeterministic Finite Automata

It is a mathematical model that consists of

1. a set of states S.

2. a set of input symbols Σ (the input alphabet).

3. a transition function move that maps state-symbol pairs to sets of states.

4. a state s0 that is distinguished as the start state.

5. a set of states F distinguished as accepting states.

Transition Graph

An NFA can be represented diagrammatically by a labeled directed graph called a

transition graph, in which nodes are the states and the labeled edges represent the transition

function.

Transition Table

A transition table contains a row for each state and a column for each input symbol and, if necessary, for ε.

Moves

A path can be represented by a sequence of state transitions called moves.

The language defined by an NFA is the set of input strings it accepts.

Deterministic Finite Automata

It is a special case of NFA in which

1. no state has an ε-transition.

2. for each state s and input symbol a, there is at most one edge labeled a

leaving s.

Conversion of Regular Expression in to an NFA

Given a regular expression r, there is an associated regular language L(r). Since there is a finite automaton for every regular language, there is a machine M for every regular expression such that L(M) = L(r).

The constructive proof provides an algorithm for constructing a machine M from a regular expression r. The six constructions below correspond to the cases:

1) The entire regular expression is the null string, i.e. L = {epsilon}: r = epsilon

2) The entire regular expression is empty, i.e. L = phi: r = phi

3) An element of the input alphabet, sigma, is in the regular expression: r = a, where a is an element of sigma

4) Two regular expressions are joined by the union operator, +: r1 + r2

5) Two regular expressions are joined by concatenation (no symbol): r1 r2

6) A regular expression has the Kleene closure (star) applied to it: r*

The construction proceeds by using 1) or 2) if either applies.

The construction first converts all symbols in the regular expression using construction

(3). Then working from inside outward, left to right at the same scope, apply the one

construction that applies from (4) (5) or (6).

Note: add one arrow head to figure 6) going into the top of the second circle.

The result is an NFA with epsilon moves. This NFA can then be converted to an NFA

without epsilon moves. Further conversion can be performed to get a DFA. All these

machines have the same language as the regular expression from which they were

constructed.

The construction covers all possible cases that can occur in any regular expression. Because of this generality, many more states are generated than are necessary. The unnecessary states are joined by epsilon transitions, and they can be merged with care. For example, the regular expression fragment aba would be

      a       e       b       e       a
q0 ---> q1 ---> q2 ---> q3 ---> q4 ---> q5

With e used for epsilon, this can be trivially reduced to

      a       b       a
q0 ---> q1 ---> q2 ---> q3

Simulating an NFA algorithm

S := ε-closure({s0});
a := nextchar();
while a ≠ eof do begin
    S := ε-closure(move(S, a));
    a := nextchar();
end
if S ∩ F ≠ ∅ then
    return "yes"
else return "no";
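The simulation above can be sketched in Python as follows. This is only an illustration under stated assumptions: the dictionary encoding of the transition function, the use of None as the ε label, and the small example NFA (a new start state 0 with an ε-edge into an NFA for a*b+) are choices made for this sketch, not part of the notes.

```python
EPS = None  # label chosen here for epsilon edges (an assumption of this sketch)

def eps_closure(states, delta):
    """Return every state reachable from `states` using only epsilon edges."""
    stack = list(states)
    closure = set(states)
    while stack:
        t = stack.pop()
        for u in delta.get((t, EPS), ()):
            if u not in closure:
                closure.add(u)
                stack.append(u)
    return frozenset(closure)

def move(states, a, delta):
    """Return the states reachable from `states` over one edge labeled `a`."""
    return {u for s in states for u in delta.get((s, a), ())}

def nfa_accepts(text, delta, start, accepting):
    """Track the set S of current states; accept if S meets F at end of input."""
    S = eps_closure({start}, delta)
    for a in text:
        S = eps_closure(move(S, a, delta), delta)
    return bool(S & accepting)

# Hypothetical example NFA: 0 --eps--> 7, 7 loops on a, 7 --b--> 8, 8 loops on b.
DELTA = {(0, EPS): {7}, (7, "a"): {7}, (7, "b"): {8}, (8, "b"): {8}}

print(nfa_accepts("aabb", DELTA, 0, {8}))
print(nfa_accepts("aba", DELTA, 0, {8}))
```

The first call reports acceptance and the second does not, because the final a leaves the NFA with an empty set of states.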

Conversion of NFA to DFA

The subset construction algorithm for this conversion is as follows.

initially ε-closure(s0) is the only state in Dstates and it is unmarked;
while there is an unmarked state T in Dstates do begin
    mark T;
    for each input symbol a do begin
        U := ε-closure(move(T,a));
        if U is not in Dstates then
            add U as an unmarked state to Dstates;
        Dtran[T,a] := U
    end
end

Computation of ε-closure is done by the following algorithm

push all states in T onto stack;
initialize ε-closure(T) to T;
while stack is not empty do begin
    pop t, the top element, off of stack;
    for each state u with an edge from t to u labeled ε do
        if u is not in ε-closure(T) do begin
            add u to ε-closure(T);
            push u onto stack
        end
end
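A minimal Python sketch of the subset construction and the ε-closure computation is given below. The encoding is an assumption of this sketch: the NFA is a dictionary keyed by (state, symbol), the empty string stands for ε, and the example is a Thompson-style NFA for (a|b)*abb with states 0 to 10.

```python
EPS = ""  # the empty string stands for epsilon in this sketch

# Assumed Thompson-style NFA for (a|b)*abb: start state 0, accepting state 10.
NFA = {
    (0, EPS): {1, 7}, (1, EPS): {2, 4}, (2, "a"): {3}, (4, "b"): {5},
    (3, EPS): {6}, (5, EPS): {6}, (6, EPS): {1, 7},
    (7, "a"): {8}, (8, "b"): {9}, (9, "b"): {10},
}

def eps_closure(T):
    """Stack-based closure: follow epsilon edges until nothing new is found."""
    stack, closure = list(T), set(T)
    while stack:
        t = stack.pop()
        for u in NFA.get((t, EPS), ()):
            if u not in closure:
                closure.add(u)
                stack.append(u)
    return frozenset(closure)

def move(T, a):
    return {u for s in T for u in NFA.get((s, a), ())}

def subset_construction(start, alphabet):
    """Return (Dstates in discovery order, Dtran) for the NFA above."""
    s0 = eps_closure({start})
    dstates, unmarked, dtran = [s0], [s0], {}
    while unmarked:
        T = unmarked.pop()            # popping T marks it
        for a in alphabet:
            U = eps_closure(move(T, a))
            if U not in dstates:
                dstates.append(U)
                unmarked.append(U)
            dtran[T, a] = U
    return dstates, dtran

DSTATES, DTRAN = subset_construction(0, "ab")
print(len(DSTATES))
```

For this NFA the construction yields five DFA states, which is far fewer than the 2^11 subsets that are possible in principle.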

DESIGN OF A LEXICAL ANALYZER GENERATOR USING FA

A specification of a lexical analyzer has the form

p1 { action 1 }

p2 { action 2 }

. . . . . . ..

pn { action n }

Each pattern pi is a regular expression and each action i is a program fragment that is

to be executed whenever a lexeme matched by pattern pi is found in the input.

Problem : If more than one pattern matches a prefix of the input, we take the

longest lexeme that matches any pattern.

Example

iftext = 5

In this statement, after reading i and f the input matches the keyword if. Reading on

up to t gives iftext, which matches the pattern for an identifier. So a prefix of the input

matches both the keyword and the identifier pattern. We take the longest lexeme, iftext,

treat it as an identifier, and execute the corresponding action.

A lexical analyzer can also be constructed by using a Finite Automaton, which may be either

deterministic or nondeterministic. The model of the Lex compiler using Finite Automata is

as follows.

[Figure: the Lex compiler translates a Lex specification into a transition table; the FA simulator uses the transition table to recognize lexemes in the input buffer.]

The Lex compiler translates the Lex specification into a tabular

representation of a transition diagram. This transition table is given as input to the FA

simulator, which uses two pointers, forward and lexeme beginning, to delimit

the current lexeme in the input buffer. The FA simulator may simulate either an NFA or a DFA.

Pattern matching based on NFA’s

One method is to construct the transition table of an NFA N for the pattern p1|p2|…|pn.

This can be done by creating an NFA N(pi) for each pattern pi, then adding a new start state

s0, and finally linking s0 to the start state of each N(pi) with an ε-transition.

[Figure: start state s0 with one ε-transition to each of N(p1), N(p2), ..., N(pn).]

Example

Consider a Lex program consisting of 3 regular expressions and no regular

definitions, as follows

a       { }  /* actions are omitted here */
abb     { }
a*b+    { }

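The NFAs for the above regular expressions are

a:     start --> 1 --a--> 2
abb:   start --> 3 --a--> 4 --b--> 5 --b--> 6
a*b+:  start --> 7 --b--> 8, with a loop on a at state 7 and a loop on b at state 8

States 2, 6 and 8 are the accepting states.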

Combined NFA

[Figure: a new start state 0 has ε-transitions to states 1, 3 and 7; the three NFAs are otherwise unchanged.]

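Sequence of sets of states entered in processing input aaba

{0,1,3,7} --a--> {2,4,7} --a--> {7} --b--> {8} --a--> none

The set {2,4,7} announces pattern p1 and the set {8} announces pattern p3.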

Consider the input string "aaba", prefixes of which match more than one pattern. The

starting set of states is {0,1,3,7}. On the first input symbol a the NFA moves to {2,4,7},

where 2 is the accepting state for the first pattern. On the second a it moves to {7}, which

contains no accepting state. On the third symbol, b, it moves to {8}, and 8 is the accepting

state for the third pattern, a*b+. On the last symbol, a, there is no transition, so the

simulation stops. The last set that contained an accepting state was {8}, so the lexeme is

aab and the action for the third pattern is executed.
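The longest-match rule for this example can be sketched in Python as follows. The state numbers and patterns are those of the example; the dictionary encoding, the empty string as the ε label, and the tie-breaking rule (prefer the pattern listed first, as Lex does) are assumptions of this sketch.

```python
EPS = ""  # the empty string stands for epsilon in this sketch

# Combined NFA of the example: state 0 has epsilon edges to 1, 3 and 7.
DELTA = {
    (0, EPS): {1, 3, 7},
    (1, "a"): {2},
    (3, "a"): {4}, (4, "b"): {5}, (5, "b"): {6},
    (7, "a"): {7}, (7, "b"): {8}, (8, "b"): {8},
}
ACCEPT = {2: "a", 6: "abb", 8: "a*b+"}   # accepting state -> pattern
PRIORITY = ["a", "abb", "a*b+"]           # order in which the patterns are listed

def closure(states):
    stack, seen = list(states), set(states)
    while stack:
        t = stack.pop()
        for u in DELTA.get((t, EPS), ()):
            if u not in seen:
                seen.add(u)
                stack.append(u)
    return seen

def longest_match(text):
    """Return (lexeme, pattern) for the longest matching prefix, or None."""
    S = closure({0})
    last = None
    for i, ch in enumerate(text):
        S = closure({u for s in S for u in DELTA.get((s, ch), ())})
        if not S:
            break                          # no transition: stop and back up
        hits = [ACCEPT[s] for s in S if s in ACCEPT]
        if hits:
            # remember the most recent accepting set; earlier pattern wins ties
            last = (text[: i + 1], min(hits, key=PRIORITY.index))
    return last

print(longest_match("aaba"))
print(longest_match("abb"))
```

On "aaba" the sketch reports the lexeme aab with pattern a*b+. On "abb" both abb and a*b+ match the whole input, and the earlier-listed pattern abb is chosen.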

DFA for Lexical Analyzers

Here we construct the transition table of a DFA for the same example, as

follows

State    a      b      Pattern announced
0137     247    8      none
247      7      58     a
8        -      8      a*b+
7        7      8      none
58       -      68     a*b+
68       -      8      abb

Optimizing of DFA-based Pattern Matchers

Three algorithms are used to optimize the DFA. They are

1. Directly convert the regular expression into a DFA.

2. Minimize the number of states in the DFA.

3. Make the transition table compact.

Important states of an NFA

1. A state of an NFA is important if it has a non-ε out-transition.

2. The accepting state is made important by appending a unique right end

marker # to the regular expression. The resulting expression ( r )#

is called the augmented regular expression.

1. From Regular Expression to a DFA

1. Convert regular expression into an augmented regular expression ( r )#.

2. Construct a syntax tree T for ( r )#.

3. Compute four functions : nullable, firstpos, lastpos and followpos by

making traversals over T. The first 3 functions are defined on the nodes of

the syntax tree and the last one is defined on the set of positions.

4. Finally we construct the DFA from followpos.

Example

Consider the regular expression (a|b)*abb#

After computing firstpos and lastpos for the nodes of the syntax tree for (a|b)*abb#, the followpos table

is as follows

NODE    followpos
1       {1,2,3}
2       {1,2,3}
3       {4}
4       {5}
5       {6}
6       -
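Step 4, building the DFA from followpos, can be sketched in Python as follows. The followpos table is the one above. Two things are assumptions of this sketch because the notes do not list them: the symbol at each position (1 = a, 2 = b, 3 = a, 4 = b, 5 = b, 6 = #, the usual numbering of the leaves of (a|b)*abb#) and the start state firstpos(root) = {1,2,3}.

```python
FOLLOWPOS = {1: {1, 2, 3}, 2: {1, 2, 3}, 3: {4}, 4: {5}, 5: {6}, 6: set()}
SYMBOL = {1: "a", 2: "b", 3: "a", 4: "b", 5: "b", 6: "#"}  # assumed leaf labels
FIRSTPOS_ROOT = {1, 2, 3}                                   # assumed start state
END_POS = 6                                                 # position of #

def build_dfa(alphabet="ab"):
    """Each DFA state is a set of positions; follow followpos per symbol."""
    start = frozenset(FIRSTPOS_ROOT)
    states, work, tran = [start], [start], {}
    while work:
        S = work.pop()
        for a in alphabet:
            # union of followpos(p) over positions p in S that carry symbol a
            U = frozenset(q for p in S if SYMBOL[p] == a for q in FOLLOWPOS[p])
            if not U:
                continue
            if U not in states:
                states.append(U)
                work.append(U)
            tran[S, a] = U
    accepting = [S for S in states if END_POS in S]
    return states, tran, accepting

STATES, TRAN, ACCEPTING = build_dfa()
for S in STATES:
    print(sorted(S), {a: sorted(TRAN[S, a]) for a in "ab"})
```

Under these assumptions the result has four states, and the only accepting state is the one containing position 6.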

2. Minimizing the number of states of a DFA

Input : A DFA M with set of states S, set of inputs Σ, transitions defined for all states and inputs, start state s0, and set of accepting states F.

Output : A DFA M’ accepting the same language as M and having as few states as possible.

Method :

1. Construct an initial partition Π of the set of states with two groups : the accepting states F and the non-accepting states S-F.

2. Apply the following procedure to Π to construct a new partition Πnew.

for each group G of Π do begin
    partition G into subgroups such that two states s and t of G
    are in the same subgroup if and only if, for all input symbols a,
    states s and t have transitions on a to states in the same group of Π;
    replace G in Πnew by the set of all subgroups formed
end

3. If Πnew = Π, let Πfinal = Π and continue with step 4. Otherwise, repeat step 2 with Π := Πnew.

4. Choose one state in each group of the partition Πfinal as the representative for that group. The representatives will be the states of the reduced DFA M’.

5. If M’ has a dead state, that is, a state d that is not accepting and that has transitions to itself on all input symbols, then remove d from M’. Also remove any states not reachable from the start state. Any transitions to d from other states become undefined.
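Steps 1 to 3 of the method can be sketched in Python as follows. The five-state DFA used as input (states A to E over {a, b}, with E accepting) is the DFA for (a|b)*abb and is an assumption of this sketch, as are the function and variable names. Step 5, the removal of dead and unreachable states, is not implemented here.

```python
def minimize(states, alphabet, tran, accepting):
    """Refine the partition {F, S-F} until no group splits any further."""
    partition = [g for g in (set(accepting), set(states) - set(accepting)) if g]
    while True:
        group_of = {s: i for i, g in enumerate(partition) for s in g}
        new_partition = []
        for g in partition:
            buckets = {}
            for s in g:
                # states stay together only if every symbol leads to the same group
                key = tuple(group_of[tran[s, a]] for a in alphabet)
                buckets.setdefault(key, set()).add(s)
            new_partition.extend(buckets.values())
        if len(new_partition) == len(partition):   # nothing split: final partition
            return new_partition
        partition = new_partition

# Assumed example DFA for (a|b)*abb.
TRAN = {
    ("A", "a"): "B", ("A", "b"): "C",
    ("B", "a"): "B", ("B", "b"): "D",
    ("C", "a"): "B", ("C", "b"): "C",
    ("D", "a"): "B", ("D", "b"): "E",
    ("E", "a"): "B", ("E", "b"): "C",
}

GROUPS = sorted(sorted(g) for g in minimize("ABCDE", "ab", TRAN, {"E"}))
print(GROUPS)
```

Because refinement only ever splits groups, an unchanged group count means the partition itself is unchanged. For this DFA, states A and C end up in the same group, so the minimized machine has four states.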

3. Making the Transition Table Compact

Using a table compression method, we can make the transition table compact. Normally the transition table is a two-dimensional array. Here, we use a data structure consisting of four arrays: base, default, next and check. The base array, indexed by state number, gives the base location of the entries for each state stored in the next and check arrays. The default array, also indexed by state number, gives an alternative state to consult when the entry at the current base location is invalid.

[Figure: data structure for representing transition tables.]

To compute nextstate(s,a), the transition for state s on input symbol a, we first consult the pair of arrays next and check. We find their entries for state s in location l = base[s]+a, where a is treated as an integer. We take next[l] to be the next state for s on input a if check[l] = s. If check[l] ≠ s, we determine q = default[s] and repeat the entire procedure recursively, using q in place of s. The procedure is the following

procedure nextstate(s,a);
    if check[base[s]+a] = s then
        return next[base[s]+a]
    else
        return nextstate(default[s],a)
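The lookup can be sketched in Python with a tiny hand-built table. Everything concrete here is an assumption of this sketch: a two-state DFA, the symbol codes a = 0 and b = 1, and the array contents. State 1 stores only the entry where it differs from state 0 and defers to state 0 for everything else.

```python
A, B = 0, 1          # input symbols treated as integers

# Full table being represented: state 0: a->1, b->0; state 1: a->1, b->1.
base = [0, 1]        # base[s]: where the entries of state s start
default = [None, 0]  # default[s]: state to consult when the check fails
next_ = [1, 0, 1]    # next_[l]: target state stored at location l
check = [0, 0, 1]    # check[l]: the state that owns location l

def nextstate(s, a):
    loc = base[s] + a
    if loc < len(check) and check[loc] == s:
        return next_[loc]                # entry really belongs to state s
    return nextstate(default[s], a)      # otherwise fall back to the default state

for s in (0, 1):
    print(s, nextstate(s, A), nextstate(s, B))
```

State 1 on a finds check[1] = 0, which is not 1, so the lookup falls back to default[1] = 0 and returns the transition of state 0. The bounds test on loc is an addition of this sketch to keep the lookup safe for short arrays.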

Problem

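Convert the regular expression (a|b)*abb into an NFA and then into a DFA:

i) Regular expression into NFA:

[Figure: NFA for (a|b)*abb with states S0 to S10, start state S0 and final state S10. ε-transitions: S0 to S1 and S7; S1 to S2 and S4; S3 to S6; S5 to S6; S6 to S1 and S7. Labeled transitions: S2 -a-> S3, S4 -b-> S5, S7 -a-> S8, S8 -b-> S9, S9 -b-> S10.]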

ii) NFA to DFA:

a) Finding the ε-Closure for all states.

ε-Closure (S0) = {S0, S1, S2, S4, S7} = A

MOV (A, a) = {S3, S8}
ε-Closure (MOV (A, a)) = {S3, S6, S7, S1, S2, S4, S8} = B

MOV (A, b) = {S5}
ε-Closure (MOV (A, b)) = {S5, S6, S7, S1, S2, S4} = C

MOV (B, a) = {S3, S8}
ε-Closure (MOV (B, a)) = {S3, S6, S7, S1, S2, S4, S8} = B

MOV (B, b) = {S5, S9}
ε-Closure (MOV (B, b)) = {S5, S6, S7, S1, S2, S4, S9} = D

MOV (C, a) = {S3, S8}
ε-Closure (MOV (C, a)) = {S3, S6, S7, S1, S2, S4, S8} = B

MOV (C, b) = {S5}
ε-Closure (MOV (C, b)) = {S5, S6, S7, S1, S2, S4} = C

MOV (D, a) = {S3, S8}
ε-Closure (MOV (D, a)) = {S3, S6, S7, S1, S2, S4, S8} = B

MOV (D, b) = {S5, S10}
ε-Closure (MOV (D, b)) = {S5, S6, S7, S1, S2, S4, S10} = E

MOV (E, a) = {S3, S8}
ε-Closure (MOV (E, a)) = {S3, S6, S7, S1, S2, S4, S8} = B

MOV (E, b) = {S5}
ε-Closure (MOV (E, b)) = {S5, S6, S7, S1, S2, S4} = C

b) Transition table

State    a    b
A        B    C
B        B    D
C        B    C
D        B    E
E        B    C

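c) Minimization

The available states are A, B, C, D and E, where E is the final state.

States A and C are not final and have the same transitions (to B on a and to C on b), so they are equivalent.

Remove state C and put A in the place of C. The remaining groups are {A, C}, {B}, {D} and {E}.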

d) Minimized transition table

State    a    b
A        B    A
B        B    D
D        B    E
E        B    A

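e) Minimized DFA

[Figure: transition diagram of the minimized DFA with start state A, states B and D, and final state E, following the table in d).]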

Example of Converting an NFA to a DFA


This is one of the NFA examples in the lecture notes. Here we convert it to a DFA. (The

regular expression, above, is not relevant to this conversion.)

This machine is M = ({1, 2, 3, 4, 5, 6, 7, 8}, {a, b, c}, DELTA, 1, {8}) where DELTA =

{

(1, b, 1),

(1, epsilon, 2),

(2, epsilon, 7),

(2, b, 3),

(2, b, 5),

(3, a, 4),

(3, c, 4),

(4, c, 2),

(4, c, 7),

(5, a, 6),

(5, b, 6),

(6, c, 2),

(6, epsilon, 2),

(6, c, 7),

(6, epsilon, 7),

(7, b, 8) }. Note here that DELTA is a relation (a set of triples).

We are computing M' = (K', sigma, delta', s', F'). Note that sigma is the same as that of

the NFA. In this case sigma = {a, b, c}.

Step 1: Compute E(q) for all states, q in K

E(q) is the set of states reachable from q using only (any number of) epsilon transitions.

q E(q)

1 {1, 2, 7}

2 {2, 7}

3 {3}

4 {4}

5 {5}

6 {2, 6, 7}

7 {7}

8 {8}

Step 2: Compute s' = E(s)

Here E(s) = E(1) = {1, 2, 7}.

Step 3: Compute delta'.

We start from E(s), where s is the start state of the original machine. We add

states as necessary.

delta'(q, sigma)         a               b                        c
{1, 2, 7}                {}              {1, 2, 3, 5, 7, 8}       {}
{}                       {}              {}                       {}
{1, 2, 3, 5, 7, 8}       {2, 4, 6, 7}    {1, 2, 3, 5, 6, 7, 8}    {4}
{2, 4, 6, 7}             {}              {3, 5, 8}                {2, 7}
{1, 2, 3, 5, 6, 7, 8}    {2, 4, 6, 7}    {1, 2, 3, 5, 6, 7, 8}    {2, 4, 7}
{4}                      {}              {}                       {2, 7}
{3, 5, 8}                {2, 4, 6, 7}    {2, 6, 7}                {4}
{2, 7}                   {}              {3, 5, 8}                {}
{2, 4, 7}                {}              {3, 5, 8}                {2, 7}
{2, 6, 7}                {}              {3, 5, 8}                {2, 7}

delta'(StateSet, inputSymbol) = Union of E(q) for all q where (p, inputSymbol, q) is in DELTA and p is in StateSet. We'll do one example in gory detail: delta'({1, 2, 7}, b) = {1, 2, 3, 5, 7, 8} because you can reach {1, 3, 5, 8} on "b" transitions (DELTA contains (1, b, 1), (2, b, 3), (2, b, 5), and (7, b, 8)) and E(1) = {1, 2, 7}, E(3) = {3}, E(5) = {5}, and E(8) = {8}. If you union all of those together, you get {1, 2, 3, 5, 7, 8}.

Step 4: Enumerate K', the set of states

The states are just the entries in the left column: K' = {{1,2,7}, {}, {1,2,3,5,7,8}, {2,4,6,7}, {1,2,3,5,6,7,8}, {4}, {3,5,8}, {2,7}, {2,4,7}, {2,6,7}}. There are 10 states, but 2^8 = 256 were possible.

Step 5: Compute F'

The final states are the states from K' that have some intersection with the final

state(s) of the original machine. In this case, since 8 was the only final state of the

original machine, our final states in the DFA are those states that have 8 in them: F' =

{{1,2,3,5,7,8}, {1,2,3,5,6,7,8}, {3,5,8}}.

Putting it all together

Our DFA, then, is M' = (K', {a, b, c}, delta', {1, 2, 7}, F') where K', delta', and F'

are as above.

Minimizing the DFA

Note that if the DFA is minimized (like in the project), then the states {2, 4, 6, 7}, {2,

4, 7}, and {2, 6, 7} coalesce, leaving an 8-state machine. (State minimization is a

separate algorithm.)


UNIT – III

THE ROLE OF THE PARSER

The parser obtains a string of tokens from the lexical analyzer and verifies that the

string can be generated by the grammar for the source language. It also reports any syntax

errors in an intelligible fashion. It should also recover from commonly occurring errors so that

it can continue processing the remainder of its input.

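[Figure: position of the parser in the compiler model. The lexical analyzer reads the source program and supplies a token each time the parser asks for the next token; the parser passes a parse tree to the rest of the front end, which produces the intermediate representation. These components share the symbol table.]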

There are 3 general types of parsers for grammars. They are

Universal parsing - It can parse any grammar, but it is too inefficient to use in

production compilers.

Top down parsing - It constructs the parse tree from the root to the leaves.

Bottom up parsing - It constructs the parse tree from the leaves to the root.

In both top down and bottom up parsing the input is scanned from left to right,

one symbol at a time. The most efficient methods of both kinds work only on subclasses of grammars.

Two of these subclasses, the LL and LR grammars, are expressive enough to describe most syntactic constructs in

programming languages.

Errors occur at different levels

Lexical, such as misspelling an identifier, keyword or operator.

Syntactic, such as an arithmetic expression with unbalanced parentheses.

Semantic, such as an operator applied to an incompatible operand.

Logical, such as an infinitely recursive call.

Goals of error-handler in parser

It should report the presence of errors clearly and accurately.

It should recover from each error quickly enough to be able to detect

subsequent errors.

It should not significantly slow down the processing of correct programs.

ERROR RECOVERY STRATEGIES

To recover from syntactic errors, a parser can use one of several general strategies. They

are

Panic mode strategy

Phrase level strategy

Error productions

Global correction

Panic mode recovery

It can be used by most parsing methods.

On discovering an error, it discards input symbols one at a time until one of a

designated set of synchronizing tokens is found.

The synchronizing tokens are usually delimiters, such as semicolon or end.

The choice of synchronizing tokens depends on the programming language.

Advantages

It is the simplest method to implement.

It is guaranteed not to go into an infinite loop.

It is adequate where multiple errors in the same statement are rare.

Disadvantage

Skips a considerable amount of input without checking it for additional

errors.

Phrase level recovery

On discovering an error, a parser may perform local correction on the

remaining input.

It may replace a prefix of the remaining input by some string and continue the

parsing.

Example : replace a comma by a semicolon, delete an extraneous semicolon,

or insert a missing semicolon.

We must be careful to choose replacements that do not lead to infinite loops.

Used in top-down parsing.

Advantages

This type of replacement can correct any input string

It has been used in several error-repairing compilers.

Disadvantage

The drawback is the difficulty it has in coping with situations in which the

actual error has occurred before the point of detection.

Error Production

If we have an idea about the common errors that might be encountered, we can

augment the grammar for the language at hand with productions that generate the

erroneous constructs. We then use the grammar augmented by these error productions to

construct a parser. If an error production is used by the parser, we can generate

appropriate error diagnostics to indicate the erroneous construct that has been recognized

in the input.

Global correction

We would like a compiler to make as few changes as possible in processing an

incorrect input string. There are algorithms for choosing a minimal sequence of changes

to obtain a globally least cost correction. Given an incorrect input string x and grammar

G, these algorithms will find a parse tree for a related string y, such that the no. of

insertions, deletions and changes of tokens required to transform x into y is as small as

possible.

Disadvantages

1. More expensive to implement.

2. It takes more time and occupy more space.

CONTEXT FREE GRAMMAR

A grammar naturally describes the hierarchical structure of many programming

language constructs. It has 4 components, as follows

1. A set of tokens, known as terminal symbols.

2. A set of non terminals.

3. A set of productions where each production consists of a non terminal called the

left side of the production, an arrow and a sequence of tokens and/or non

terminals called the right side of the production.

4. A designation of one of the non terminals as the start symbol.

DERIVATIONS & REDUCTIONS

Derivations

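A non-terminal can be expanded by replacing it with the right side of one of its productions. Repeating this

until only tokens remain is called a derivation.

Reductions

A terminal cannot be expanded any further. But a string of grammar symbols that matches the right side of a production can be replaced by the

non-terminal on its left side. These replacements are called reductions.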

Example :

E --> E*E
E --> E+E
E --> id

i) Using the above grammar derive the string “id+id*id”

E => E+E          [Expansion by E --> E+E]
  => E+E*E        [Expansion by E --> E*E]
  => id+E*E       [Expansion by E --> id]
  => id+id*E      [Expansion by E --> id]
  => id+id*id     [Expansion by E --> id]

ii) Using the above grammar reduce the string “id+id*id” to the starting Non-Terminal.

id+id*id
id+id*E           [Reduction by E --> id]
id+E*E            [Reduction by E --> id]
E+E*E             [Reduction by E --> id]
E+E               [Reduction by E --> E*E]
E                 [Reduction by E --> E+E]

Parse Tree

A parse tree pictorially shows how the start symbol of a grammar derives a string

in the language. If non terminal A has a production A -> XYZ, then a parse tree may have

an interior node labeled A with three children labeled X, Y, and Z from left to right :

      A
    / | \
   X  Y  Z

Properties of a Parse Tree

1. The root is labeled by the start symbol.

2. Each leaf is labeled by a token or by ε.

3. Each interior node is labeled by a non terminal.

Definition of Yield

The leaves of a parse tree read from left to right form the yield of the tree, which

is the string generated or derived from the non terminal at the root of the parse tree.

Parsing

The process of finding a parse tree for a given string of tokens is called parsing that

string.

Ambiguity

A grammar can have more than one parse tree generating a given string of tokens.

Such a grammar is said to be ambiguous.

Eg. Two parse trees for 9-5+2

[Figure: two parse trees for 9-5+2, one grouping the string as (9-5)+2 and the other as 9-(5+2).]

To avoid this ambiguity, two conventions are used

1. Associativity of operators

2. Precedence of operators

Associativity of operators

By convention, 9+5+2 is equivalent to (9+5)+2 and 9-5-2 is equivalent to (9-5)-2.

When an operand like 5 has operators to its left and right, conventions are needed for

deciding which operator takes that operand. We say that the operator + associates to the

left because an operand with plus signs on both sides of it is taken by the operator to its

left. In most programming languages the four arithmetic operators, addition, subtraction,

multiplication, and division, are left associative.

Some common operators such as exponentiation are right associative. As another

example, the assignment operator = in C is right associative; in C, the expression a = b= c

is treated in the same way as the expression a=(b=c).

Precedence of operators

Consider the expression 9+5*2. There are two possible interpretations of this

expression: (9+5)*2 or 9+(5*2). The associativity of + and * does not resolve this ambiguity. For

this reason, we need to know the relative precedence of operators when more than one

kind of operator is present.

We say that * has higher precedence than + if * takes its operands before + does.

In ordinary arithmetic, multiplication and division have higher precedence than addition

and subtraction. Therefore, 5 is taken by * in both 9+5*2 and 9*5+2; i.e., the expressions

are equivalent to 9+(5*2) and (9*5)+2, respectively.

WRITING A GRAMMAR

The following reasons explain why regular expressions are used to define the

lexical syntax of a language.

1. The lexical rules of a language are frequently quite simple.

2. Regular expressions provide a more concise and easier to understand notation for tokens than

grammars.

3. More efficient lexical analyzers can be constructed automatically from regular

expressions than from arbitrary grammars.

4. Separating the syntactic structure of a language into lexical and non lexical

parts provides a convenient way of modularizing the front end of a compiler

into two manageable-sized components.

Eliminating ambiguity

Sometimes an ambiguous grammar can be rewritten to eliminate the ambiguity. As

an example, consider the following “dangling-else” grammar.

stmt --> if expr then stmt
       | if expr then stmt else stmt
       | other

According to this grammar, the compound conditional statement

if E1 then if E2 then S1 else S2 has the two parse trees as shown below

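[Figure: two parse trees for if E1 then if E2 then S1 else S2. In the first tree, else S2 belongs to the inner statement if E2 then S1 else S2. In the second tree, else S2 belongs to the outer if, whose then part is if E2 then S1.]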

In all programming languages with conditional statements of this form, the first parse tree is preferred. The general rule is “Match each else with the closest previous unmatched then”. This disambiguating rule can be incorporated directly into the grammar. The idea is that a statement appearing between a then and an else must be matched, i.e., it must not end with an unmatched then followed by any statement, for the else would then be forced to match this unmatched then. A matched statement is either an if-then-else statement containing no unmatched statements or it is any other kind of unconditional statement. Thus, we may use the grammar

stmt --> matched_stmt
       | unmatched_stmt

matched_stmt --> if expr then matched_stmt else matched_stmt
       | other

unmatched_stmt --> if expr then stmt
       | if expr then matched_stmt else unmatched_stmt

ELIMINATION OF LEFT RECURSION

Left recursion

A grammar is left recursive if it has a non-terminal A such that there is a derivation

A --> AX

for some string X of grammar symbols. (Here the non-terminal A appears again at the left end of its own right side.) Top-down parsers cannot handle left recursive grammars, so the left recursion must be eliminated. This can be done by the following method.

Left recursive grammar : A --> AX / Y

Left recursion elimination : A --> YA’
A’ --> XA’ / ε

where X and Y are strings of grammar symbols, Y does not begin with A, and ε is the empty string.

Example

Left recursive grammar : E --> E+T / T

Apply the above rule : here A is E; X is +T; Y is T.

So after left recursion elimination:

E --> TE’
E’ --> +TE’ / ε
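The rule above can be sketched in Python for immediate left recursion. The representation is an assumption of this sketch: each alternative is a list of symbols, the empty list stands for ε, and the new non-terminal is named by appending a prime. Indirect left recursion is not handled.

```python
def eliminate_left_recursion(nt, alternatives):
    """Rewrite A --> A X / Y as A --> Y A' and A' --> X A' / epsilon."""
    # split the alternatives into the left-recursive ones (A X) and the rest (Y)
    recursive = [alt[1:] for alt in alternatives if alt and alt[0] == nt]
    others = [alt for alt in alternatives if not alt or alt[0] != nt]
    if not recursive:
        return {nt: alternatives}          # nothing to do
    new = nt + "'"
    return {
        nt: [alt + [new] for alt in others],
        new: [alt + [new] for alt in recursive] + [[]],   # [] stands for epsilon
    }

RESULT = eliminate_left_recursion("E", [["E", "+", "T"], ["T"]])
for head, alts in RESULT.items():
    print(head, "-->", " / ".join(" ".join(alt) or "ε" for alt in alts))
```

For E --> E+T / T this prints the two productions of the worked example, E --> T E' and E' --> + T E' / ε.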

Left factoring

Left factoring is a useful transformation for making a grammar suitable for predictive

parsing. If a non-terminal has two alternatives for expansion that begin with the same symbols, then the

parser cannot decide which alternative to choose by looking at the next input symbol. For example:

A --> XB / XC

Here it is not clear which alternative is to be selected for an input string starting with

X. This problem can be solved by left factoring.

Left factoring is a transformation for factoring out the common prefixes. For the

above grammar the application of left factoring will result in

A --> XA’
A’ --> B / C
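One step of left factoring can be sketched in Python as follows. The list-of-symbols representation and the primed names for new non-terminals are assumptions of this sketch. It factors each group of alternatives that share a first symbol once; a complete implementation would repeat the step on the new non-terminals.

```python
def common_prefix(seqs):
    """Longest sequence of symbols with which every alternative begins."""
    prefix = []
    for column in zip(*seqs):
        if len(set(column)) != 1:
            break
        prefix.append(column[0])
    return prefix

def left_factor(nt, alternatives):
    """Rewrite A --> X B / X C as A --> X A' and A' --> B / C."""
    groups = {}
    for alt in alternatives:               # group alternatives by first symbol
        groups.setdefault(alt[0] if alt else None, []).append(alt)
    result = {nt: []}
    fresh = nt
    for first, alts in groups.items():
        if first is None or len(alts) == 1:
            result[nt].extend(alts)        # nothing shared, keep as is
            continue
        prefix = common_prefix(alts)
        fresh += "'"                       # hypothetical name for the new symbol
        result[nt].append(prefix + [fresh])
        result[fresh] = [alt[len(prefix):] for alt in alts]
    return result

FACTORED = left_factor("A", [["X", "B"], ["X", "C"]])
print(FACTORED)
```

For A --> XB / XC the result is A --> X A' with A' --> B / C, as in the text.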

Depending on how the parse tree is created, there are different parsing techniques.

These parsing techniques are categorized into two groups:

1. Top-Down Parsing

2. Bottom-Up Parsing

TOP-DOWN PARSING

Construction of the parse tree starts at the root, and proceeds towards the leaves.

Efficient top-down parsers can be easily constructed by hand.

Recursive Predictive Parsing, Non-Recursive Predictive Parsing (LL Parsing).

Recursive descent predictive parser

Recursive descent parsers are easily created from context-free grammar productions.

It is a top-down technique because it works by trying to match the program text against

the start symbol and successively replaces symbols by symbols representing their

constituents. This process can be regarded as constructing the parse tree in a top-down

direction. Recursive descent parsers are often also called LL parsers because they deal

with the input from left-to-right (the first L) and construct a leftmost derivation (the

second L).

A recursive descent parser is a collection of procedures, one for each unique nonterminal. Each procedure is responsible for parsing the kind of construct described by its

non-terminal. Since the syntax of most programming languages is recursive the resulting

procedures are also usually recursive, hence the name "recursive descent".

The parser maintains an invariant in that a global variable always contains the first

token in the input that has not been examined by the parser. Every time a token is

"consumed" the parser will call the lexical analyser to get another token.

Parsing using a recursive descent parser is started by calling the lexical analyser to get

the first token. Then we call the procedure corresponding to the grammar start symbol.

When this procedure returns the parse is complete.

The body of a parsing procedure for a non-terminal X is constructed by considering

the grammar productions with X on their left-hand side. A non-terminal on the right-hand

side of one of these productions turns into a call to the parsing procedure for that nonterminal. A terminal (literal or non-literal) turns into a test to make sure that the current

token matches the required terminal, and a call to get another token.

For example, consider the following production and its associated parsing procedure.

Statement : Name ':=' Expression.

void Statement ()
{
    Name ();
    if (current token is not a colon equals)
        report a colon equals missing;
    get a token;
    Expression ();
}

Of course, many non-terminals appear on the left-hand side of more than one

production. The parsing procedure must deal with all of these cases by checking at

the beginning of the parsing procedure. For example, expressions might come in a

few varieties.

Expression : Integer / Identifier / '(' Expression ')' / ...

void Expression ()
{
    if (current token is an integer or an identifier) {
        get a token;
    } else if (current token is a left parenthesis) {
        get a token;
        Expression ();
        if (current token is not a right parenthesis)
            report a right parenthesis missing;
        get a token;
    } else ...
        ...
    } else
        report an illegal expression;
}
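The two procedures above can be turned into a small runnable sketch in Python. Several things are assumptions of this sketch and not part of the notes: the regular-expression tokenizer, the end marker "<eof>", raising SyntaxError instead of printing a report, and treating Name as a single identifier. Only the three Expression alternatives that are spelled out are implemented.

```python
import re

TOKEN_RE = re.compile(r":=|\(|\)|\d+|[A-Za-z_]\w*")
EOF = "<eof>"   # hypothetical end-of-input marker

class Parser:
    def __init__(self, text):
        # simplification: characters that match no token are silently skipped
        self.tokens = TOKEN_RE.findall(text) + [EOF]
        self.pos = 0

    @property
    def current(self):
        """The first token that has not yet been examined (the invariant)."""
        return self.tokens[self.pos]

    def get_token(self):
        self.pos += 1

    def expect(self, terminal):
        if self.current != terminal:
            raise SyntaxError(f"{terminal!r} missing before {self.current!r}")
        self.get_token()

    def statement(self):            # Statement : Name ':=' Expression
        self.name()
        self.expect(":=")
        self.expression()

    def name(self):
        if not self.current.isidentifier():
            raise SyntaxError(f"name expected, found {self.current!r}")
        self.get_token()

    def expression(self):           # Integer / Identifier / '(' Expression ')'
        if self.current.isdigit() or self.current.isidentifier():
            self.get_token()
        elif self.current == "(":
            self.get_token()
            self.expression()
            self.expect(")")
        else:
            raise SyntaxError(f"illegal expression at {self.current!r}")

def parse(text):
    parser = Parser(text)
    parser.statement()
    parser.expect(EOF)
    return True

print(parse("x := (42)"))
```

Each non-terminal becomes one method, and the decision inside expression() is made by looking only at the current token, which is the one-token lookahead discussed next.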

Decision making in recursive descent parsers

As in the Expression case above, for non-terminal symbols with more than one

production the parsing procedure needs to make a decision between the productions. To

obtain a deterministic parser (which we always want to have for a compiler) we must

guarantee that the appropriate choice is uniquely determined by the basic symbols.

To make a decision in a recursive descent parser we need to know which symbols

predict a particular production. Usually we try to achieve the ability to parse with one

token lookahead. This is because otherwise we need to store more than one token from

the lexical analyser which is not impossible but complicates matters. One token

lookahead is sufficient for most programming languages.

We can define the PREDICT sets for each production as follows using the auxiliary

sets FIRST and FOLLOW. We provide an informal definition. The text gives a more

mathematical definition with an algorithm for calculating these sets.

The FIRST set of a symbol A is the set of tokens that could be the first token of an A,

plus epsilon if A can derive epsilon (in other words, if an A can be empty).

FIRST can be extended to sequences of symbols by saying that the FIRST of a

sequence A1 A2 A3 ... An is FIRST(A1) union FIRST(A2) if epsilon is in FIRST(A1),

union FIRST(A3) if epsilon is in both FIRST(A1) and FIRST(A2), and so on.

The FOLLOW set of a symbol A is the set of tokens that can follow an A in a

syntactically legal program, plus epsilon if A can occur at the end of a program.

The PREDICT set of a production N : A1 A2 A3 ... An is FIRST(A1 A2 A3 ... An)

(without epsilon) plus FOLLOW(N) if A1 A2 A3 ... An can derive epsilon.

The PREDICT sets for the alternative productions of N are used when writing the

recursive descent parsing procedure for N. If the next unexamined token is in the

PREDICT set for a production then we predict that alternative. This is what we did earlier

in the parsing procedure for Expression.

Transforming grammars for recursive descent

Problems arise if the PREDICT sets for the productions of a non-terminal overlap. If

this is the case it is not possible to accurately predict a single production using just one

token of lookahead.

To ensure that decision making with one token lookahead is possible in a recursive

descent parser it may be necessary to transform the grammar. The intention is to change

the grammar so that it is acceptable to our parsing method, but defines the same language

as the original grammar.

Two common situations arise: left recursion and common prefixes.

Non-recursive predictive parser

It is a top-down parser. As the name implies, it is not recursive. It needs the

following components to check whether the given string is successfully parsed or not.

Input buffer, Stack,

Parsing routine and parsing table

The input buffer holds the input string to be parsed. The input string is

followed by the symbol ‘$’, which indicates that the input string is terminated. It

is used as the right end marker.

The stack always holds grammar symbols. The grammar symbols are

either non-terminals or terminals. Initially ‘$’ is pushed onto the

stack. After that, as parsing progresses, grammar symbols are pushed on top of it. This ‘$’ is used to

announce the completion of parsing.

The parsing table is generally a two-dimensional array. An entry in the table is

referred to as T (A, a), where ‘A’ is a non-terminal, ‘a’ is a terminal and ‘T’ is the table name.

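[Figure: model of a non-recursive predictive parser. The input buffer holds a+b$; the stack holds X, Y, Z and $, with X on top; the parsing program reads the input and the stack, consults the parsing table and produces the output.]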

The program considers X, the symbol on top of the stack, and a, the current

input symbol.

Three possibilities

1. If X = a = $, then the parser halts with successful completion.

2. If X = a ≠ $, then the parser pops X off the stack and moves the input pointer to the

next symbol.

3. If X is a non-terminal, then the parser consults the parsing table entry T (X, a). If the entry is a production of X, the parser removes X

from the stack and pushes the right side of that production in its place, with the leftmost symbol on top. If the entry is empty, an error is reported.

Reduction by predictive parser:

Productions

E → TE’

E’ → +TE’ / ε

T → FT’

T’ → *FT’ / ε

F → (E) / id

Stack          Input          Output
$E             id+id*id $
$E’T           id+id*id $     E → TE’
$E’T’F         id+id*id $     T → FT’
$E’T’id        id+id*id $     F → id
$E’T’          +id*id $
$E’            +id*id $       T’ → ε
$E’T+          +id*id $       E’ → +TE’
$E’T           id*id $
$E’T’F         id*id $        T → FT’
$E’T’id        id*id $        F → id
$E’T’          *id $
$E’T’F*        *id $          T’ → *FT’
$E’T’F         id $
$E’T’id        id $           F → id
$E’T’          $
$E’            $              T’ → ε
$              $              E’ → ε
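The moves traced above can be sketched as a small table-driven driver. This is only an illustrative sketch, not a definitive implementation: the function name `predictive_parse`, the dictionary layout of the table, and the ASCII spelling `E'` for E’ are assumptions made for the example. The table entries are the ones for the expression grammar of these notes.

```python
EPS = "ε"  # printed for an empty right-hand side (naming is an assumption)

# Parsing table T[(non-terminal, input symbol)] -> right-hand side (list of symbols).
TABLE = {
    ("E", "id"): ["T", "E'"],
    ("E", "("): ["T", "E'"],
    ("E'", "+"): ["+", "T", "E'"],
    ("E'", ")"): [],
    ("E'", "$"): [],
    ("T", "id"): ["F", "T'"],
    ("T", "("): ["F", "T'"],
    ("T'", "+"): [],
    ("T'", "*"): ["*", "F", "T'"],
    ("T'", ")"): [],
    ("T'", "$"): [],
    ("F", "id"): ["id"],
    ("F", "("): ["(", "E", ")"],
}
NONTERMINALS = {"E", "E'", "T", "T'", "F"}


def predictive_parse(tokens, start="E"):
    """Return the productions used, in order, or raise SyntaxError."""
    buf = list(tokens) + ["$"]      # input buffer with right end marker
    stack = ["$", start]            # top of the stack is the end of the list
    pos = 0
    output = []
    while True:
        top, a = stack[-1], buf[pos]
        if top == "$" and a == "$":             # case 1: X = a = $
            return output
        if top not in NONTERMINALS:             # case 2: X is a terminal
            if top != a:
                raise SyntaxError(f"expected {top!r}, found {a!r}")
            stack.pop()
            pos += 1
        else:                                   # case 3: X is a non-terminal
            rhs = TABLE.get((top, a))
            if rhs is None:
                raise SyntaxError(f"no entry T({top}, {a})")
            stack.pop()
            stack.extend(reversed(rhs))         # leftmost symbol ends up on top
            output.append(f"{top} -> {' '.join(rhs) or EPS}")


if __name__ == "__main__":
    for production in predictive_parse(["id", "+", "id", "*", "id"]):
        print(production)
```

Run on id+id*id, the sketch lists the same eleven productions as the Output column, from E → TE’ down to E’ → ε.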

Steps involved in non-recursive predictive parsing:

1. The input buffer is filled with the input string, with $ as the right end marker.

2. The stack is initialized with $ at the bottom and the start symbol on top.

3. The parsing table T is constructed using FIRST( ) and FOLLOW( ).

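Computation of FIRST( )

1. If X is a terminal, then FIRST(X) = {X}.

2. If X → ε is a production, then add ε to FIRST(X).

3. If X is a non-terminal and X → aα is a production, then add a to FIRST(X),

e.g. X → a gives FIRST(X) = {a}.

4. If X → Y1 Y2 Y3…Yn is a production, then add everything in FIRST(Y1) except ε to

FIRST(X). If Y1 can derive ε, also add FIRST(Y2) except ε, and so on. If every one of

Y1…Yn can derive ε, add ε to FIRST(X).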
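The four rules can be applied repeatedly until no FIRST set grows any further. The sketch below does this for the expression grammar used in these notes; the names `GRAMMAR`, `first_of_string` and `compute_first` are hypothetical, and `E'` is the ASCII spelling of E’.

```python
EPS = "ε"

# Each non-terminal maps to its alternatives; [] stands for the ε-production.
GRAMMAR = {
    "E": [["T", "E'"]],
    "E'": [["+", "T", "E'"], []],
    "T": [["F", "T'"]],
    "T'": [["*", "F", "T'"], []],
    "F": [["(", "E", ")"], ["id"]],
}


def first_of_string(symbols, first):
    """FIRST of a sequence of grammar symbols, given the FIRST sets found so far."""
    result = set()
    for sym in symbols:
        sym_first = first[sym] if sym in first else {sym}   # rule 1 for terminals
        result |= sym_first - {EPS}
        if EPS not in sym_first:
            return result           # this symbol cannot vanish, so stop here
    result.add(EPS)                 # every symbol can vanish (or the sequence is empty)
    return result


def compute_first(grammar):
    """Iterate rules 2 to 4 until no FIRST set changes."""
    first = {nt: set() for nt in grammar}
    changed = True
    while changed:
        changed = False
        for nt, alternatives in grammar.items():
            for rhs in alternatives:
                new = first_of_string(rhs, first)
                if not new <= first[nt]:
                    first[nt] |= new
                    changed = True
    return first


if __name__ == "__main__":
    for nt, symbols in compute_first(GRAMMAR).items():
        print(nt, sorted(symbols))
```

The repeated passes are needed because FIRST(T) depends on FIRST(F), which may only be filled in during an earlier or later pass over the productions.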

Computation of FOLLOW( )

1. $ is in FOLLOW(S), where S is the start symbol.

2. If there is a production A → αBβ, then everything in FIRST(β) except ε is in

FOLLOW(B).

3. If there is a production A → αB, or a production A → αBβ where FIRST(β) contains ε,

then everything in FOLLOW(A) is in FOLLOW(B).
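As a rough sketch, the three FOLLOW rules can also be run to a fixed point. To keep the example short, the FIRST sets of the expression grammar are entered by hand (an assumption of this sketch, not something the code derives), and the names `compute_follow` and `first_of_string` are hypothetical.

```python
EPS = "ε"

GRAMMAR = {
    "E": [["T", "E'"]],
    "E'": [["+", "T", "E'"], []],
    "T": [["F", "T'"]],
    "T'": [["*", "F", "T'"], []],
    "F": [["(", "E", ")"], ["id"]],
}

# FIRST sets of the non-terminals, entered by hand for this grammar.
FIRST = {
    "E": {"(", "id"}, "T": {"(", "id"}, "F": {"(", "id"},
    "E'": {"+", EPS}, "T'": {"*", EPS},
}


def first_of_string(symbols):
    """FIRST of a sequence β; contains ε when β is empty or can vanish."""
    result = set()
    for sym in symbols:
        sym_first = FIRST.get(sym, {sym})
        result |= sym_first - {EPS}
        if EPS not in sym_first:
            return result
    result.add(EPS)
    return result


def compute_follow(grammar, start):
    follow = {nt: set() for nt in grammar}
    follow[start].add("$")                          # rule 1
    changed = True
    while changed:
        changed = False
        for lhs, alternatives in grammar.items():
            for rhs in alternatives:
                for i, sym in enumerate(rhs):
                    if sym not in grammar:
                        continue                    # FOLLOW is for non-terminals only
                    beta_first = first_of_string(rhs[i + 1:])
                    new = beta_first - {EPS}        # rule 2
                    if EPS in beta_first:           # rule 3: β is empty or derives ε
                        new |= follow[lhs]
                    if not new <= follow[sym]:
                        follow[sym] |= new
                        changed = True
    return follow


if __name__ == "__main__":
    for nt, symbols in compute_follow(GRAMMAR, "E").items():
        print(nt, sorted(symbols))
```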

The FIRST () & FOLLOW () for the above productions are

FIRST (E)=FIRST (T)=FIRST (F)={(, id}

FIRST (E’)={+, ε}

FIRST (T’)={*, ε}

FOLLOW (E)={$,)}

FOLLOW (E’)=FOLLOW (E)={$,)}

FOLLOW (T)={+, $,)}

FOLLOW (T’)=FOLLOW (T)= {+, $,)}

FOLLOW (F)= {*, +, $,)}

Parsing table

       id          +            *            (           )          $
E      E → TE’                               E → TE’
E’                 E’ → +TE’                             E’ → ε     E’ → ε
T      T → FT’                               T → FT’
T’                 T’ → ε       T’ → *FT’                T’ → ε     T’ → ε
F      F → id                                F → (E)

Blank entries denote errors.
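The table can be filled in mechanically: for each production A → α, put it in T(A, a) for every terminal a in FIRST(α), and, if ε is in FIRST(α), also in T(A, b) for every b in FOLLOW(A). The following is a minimal sketch of that construction, assuming the FIRST and FOLLOW sets listed above are simply typed in as dictionaries; the name `build_table` is hypothetical.

```python
EPS = "ε"

GRAMMAR = {
    "E": [["T", "E'"]],
    "E'": [["+", "T", "E'"], []],
    "T": [["F", "T'"]],
    "T'": [["*", "F", "T'"], []],
    "F": [["(", "E", ")"], ["id"]],
}
FIRST = {
    "E": {"(", "id"}, "T": {"(", "id"}, "F": {"(", "id"},
    "E'": {"+", EPS}, "T'": {"*", EPS},
}
FOLLOW = {
    "E": {"$", ")"}, "E'": {"$", ")"},
    "T": {"+", "$", ")"}, "T'": {"+", "$", ")"},
    "F": {"*", "+", "$", ")"},
}


def first_of_string(symbols):
    """FIRST of a right-hand side; contains ε if the whole side can vanish."""
    result = set()
    for sym in symbols:
        sym_first = FIRST.get(sym, {sym})
        result |= sym_first - {EPS}
        if EPS not in sym_first:
            return result
    result.add(EPS)
    return result


def build_table(grammar):
    """Return {(non-terminal, terminal): right-hand side}; missing keys are errors."""
    table = {}
    for lhs, alternatives in grammar.items():
        for rhs in alternatives:
            rhs_first = first_of_string(rhs)
            lookaheads = rhs_first - {EPS}
            if EPS in rhs_first:
                lookaheads |= FOLLOW[lhs]
            for a in lookaheads:
                if (lhs, a) in table:
                    # Two productions compete for one entry.
                    raise ValueError(f"conflict at T({lhs}, {a}): grammar is not LL(1)")
                table[(lhs, a)] = rhs
    return table


if __name__ == "__main__":
    for (nt, a), rhs in sorted(build_table(GRAMMAR).items()):
        print(f"T({nt}, {a}) = {nt} -> {' '.join(rhs) or EPS}")
```

A conflict in some entry would mean the grammar cannot be parsed by this method without further transformation, which is why the sketch raises an error instead of overwriting the entry.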

ERROR RECOVERY IN PREDICTIVE PARSING

We can use both panic-mode and phrase-level strategies for recovering from errors

in predictive parsing.

BOTTOM-UP PARSING

Construction of the parse tree starts at the leaves, and proceeds towards the root.

Normally efficient bottom-up parsers are created with the help of some software

tools.

Bottom-up parsing is also known as shift-reduce parsing.

Operator-Precedence Parsing – simple, restrictive, easy to implement

LR Parsing – a much more general form of shift-reduce parsing; variants are SLR, canonical LR and LALR

Shift-reduce parsers

In contrast to a recursive descent parser that constructs the derivation "top-down"

(i.e., from the start symbol), a shift-reduce parser constructs the derivation "bottom-up"

(i.e., from the input string). Shift-reduce parsers are often used as the target of parser

generation tools. Some reasons for their popularity are the large class of grammars that

can be parsed in this way (there are more grammars in this class than in the class that can

be processed using recursive descent) and our ability to implement them efficiently.

Shift-reduce parsers are often called LR parsers because they process the input

left-to-right and construct a rightmost derivation in reverse.

In the following we will briefly describe how shift-reduce parsers work, because

knowledge of their operation is useful when using parser generators (which you might

have to do in the future). Our concentration is on the basic mechanisms used during

parsing, not on the techniques used to generate such parsers (which can be quite

complex). The text has much more detail which you can study if you are interested.

Informally, a shift-reduce parser starts out with the entire input string and looks

for a substring that matches the right-hand side of a production. If one is found, the

substring is replaced by the left-hand side symbol of the production. This step is a

reduction. The parser then looks for another substring (now possibly containing a nonterminal symbol), replaces it, and so on. Reductions occur until the string is reduced to

just the start symbol. If no reductions are possible at any stage, it might mean that the

string is not a sentence in the language defined by the grammar, or it might mean that an

earlier reduction was performed in error. The most complex parts of defining a shift-reduce parser are locating valid substrings (called handles) and determining when and if

reductions should be performed on which handles.

S : 'a' A B 'e'.

A : A 'b' 'c' / 'b'.

B : 'd'.

abbcde => aAbcde
       => aAde
       => aABe
       => S
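The reduction sequence above, and the remark that an earlier reduction may have been performed in error, can be illustrated with a short sketch. It treats sentential forms as plain strings (each grammar symbol is one character) and tries reductions exhaustively; the helper names `reduce_at`, `possible_reductions` and `reducible_to_start` are hypothetical, and exhaustive search is used only for illustration, not as a practical parsing method.

```python
# Productions of the example grammar as (left-hand side, right-hand side) pairs.
GRAMMAR = [
    ("S", "aABe"),
    ("A", "Abc"),
    ("A", "b"),
    ("B", "d"),
]


def reduce_at(form, pos, lhs, rhs):
    """Replace the occurrence of rhs that starts at index pos in form by lhs."""
    if (lhs, rhs) not in GRAMMAR:
        raise ValueError(f"{lhs} : {rhs} is not a production")
    if not form.startswith(rhs, pos):
        raise ValueError(f"{rhs!r} does not occur at position {pos} of {form!r}")
    return form[:pos] + lhs + form[pos + len(rhs):]


def possible_reductions(form):
    """All (pos, lhs, rhs) such that the right-hand side rhs occurs at pos."""
    return [
        (pos, lhs, rhs)
        for lhs, rhs in GRAMMAR
        for pos in range(len(form))
        if form.startswith(rhs, pos)
    ]


def reducible_to_start(form, start="S"):
    """Try every possible reduction; True if some sequence ends in the start symbol."""
    if form == start:
        return True
    return any(
        reducible_to_start(reduce_at(form, pos, lhs, rhs), start)
        for pos, lhs, rhs in possible_reductions(form)
    )


if __name__ == "__main__":
    form = "abbcde"
    # The handles chosen in the notes, as (position, left side, right side).
    for pos, lhs, rhs in [(1, "A", "b"), (1, "A", "Abc"), (2, "B", "d"), (0, "S", "aABe")]:
        form = reduce_at(form, pos, lhs, rhs)
        print(form)
    # Reducing the second b of aAbcde instead gives a form that is stuck.
    wrong = reduce_at("aAbcde", 2, "A", "b")
    print(wrong, reducible_to_start(wrong))
```

In aAbcde both `b` (at position 2) and `Abc` (at position 1) match a right-hand side, but only `Abc` is a handle; choosing `b` yields aAAcde, from which S can no longer be reached.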

Shift-reduce parsers can be described by machines operating on a stack of

symbols and an input buffer containing the input text. Initially, the stack is empty and the

input buffer contains the entire input string. A step of the parser examines the top of the

stack to see if a handle is present. If so, then a reduction could be performed (but doesn't

have to be). If a reduction is not possible or is not desirable, the parser shifts the next

input symbol from the input buffer to the top of the stack. The process then repeats. If the

parser reaches a state where the stack contains just the start symbol and the input buffer is

empty, then the input has been correctly parsed. If the input is consumed but the stack can

not be reduced, then the input is not a sentence.

Grammar

E : E '+' E / E '*' E / '(' E ')' / id.

Input String

id + id * id

Stack          Input            Action
               id + id * id     initial state
id             + id * id        shift id
E              + id * id        reduce by E : id
E +            id * id          shift +
E + id         * id             shift id
E + E          * id             reduce by E : id
E + E *        id               shift *
E + E * id                      shift id
E + E * E                       reduce by E : id
E + E                           reduce by E : E '*' E
E                               reduce by E : E '+' E