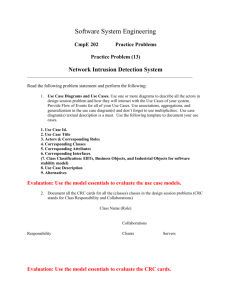

Intrusion detection has traditionally been done at the operating
