18071

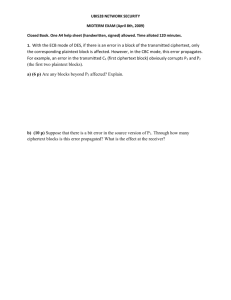

>> Kristin Lauter: Okay. So today I'm very pleased to have Vinod Vaikuntanathan visiting to speak to us on side channels and clouds. Now I've gotten over my big hurdle for the day, pronouncing his last name. So we're very pleased to have him visiting. He's currently a postdoctoral fellow at IBM, and he did his Ph.D. at MIT with Shafi Goldwasser in 2009, where he won the Best Dissertation Award from the computer science department. Thank you, Vinod.
>> Vinod Vaikuntanathan: Thanks, Kristin. Before I start, I have to tell you that Kristin told me she wants to ask me a lot of questions. I really want to encourage you all to do the same. Let's make this interactive. So the topic of this talk is side channels and clouds: new challenges in cryptography. The development of cryptography has always gone hand in hand with the emergence of new computing technology. Whenever there's a big change in the way we do computation or think about computation, it has led to new problems and challenges for cryptography, and ultimately we have met these challenges by coming up with new techniques. If you look back at the history of cryptography, you can see a number of examples of this, such as the development of automated ways of encrypting messages, for example the Enigma machine of the 1930s, the invention of public-key cryptography in the 1970s, and so on. Today, in the 21st century, we stand at the brink of another big change in the way we do computing. A lot of computing these days is done on small, mobile devices, which compute out in the world, in an environment that we do not necessarily trust.
Whereas we used to think of data being stored and programs being executed on a personal computer sitting right in our office under our control, these days we do a lot of our computing on devices such as laptops, cell phones, RFID devices, and so on. The problem with this change is that these devices store sensitive data, and they are out there computing where the adversary, the attacker, has an unprecedented level of access to them and control over them. The devices are getting closer and closer to the adversary, and that makes it much easier for him to carry out his attacks. The second big change we see in computing is that the amount of data we need to store, and the amount of computation we need to perform on it, is becoming so large that end users are not willing to do all of it themselves, either because they simply do not have the resources or for various other reasons. Instead, they outsource storage and computation to big third-party vendors such as Microsoft, Google, Amazon, and so forth. This is called cloud computing, and again it's a big concern for cryptography, because we're putting our data and programs out there, away from our trusted personal computers. This new computing reality poses two new challenges for cryptography, which will be the focus of my talk. First, in cryptography we traditionally think of cryptographic devices as black boxes. The assumption is that the only way an attacker can access the cryptographic system is by supplying inputs and receiving the outputs of the computation. What's really happening inside the device, he has no clue. The real world is quite different.
In fact, it turns out there are various mechanisms by which computing devices leak information to the outside world, beyond input/output access. In particular, an attacker can avail himself of a large family of attacks called side-channel attacks, which reveal extra information about the data stored inside the device and the computation being performed. Such attacks exploit the fact that physical characteristics of a computation, such as the time a program takes to run, the power consumed by running a piece of software, or even something noninvasive such as the electromagnetic radiation emitted by the device during the computation, leak more information about the computation than input/output access alone. This extra leakage has been used successfully to break many existing cryptographic systems. One thing I want to say before I go further is that these attacks are not completely new; they have existed for well over a couple of decades. What makes them particularly acute in this context is the physical proximity the attacker has to these devices, which is a result of the new computing reality we're facing. And these are not just theoretical attacks: they're real attacks on implementations of standardized cryptosystems such as RSA and the Advanced Encryption Standard (AES), which have been attacked very successfully using side channels. So this is a real concern for us. So challenge number one, which I will address, is: can you protect against this kind of information leakage? Can you protect against side-channel attacks? Okay. So that's number one. The second challenge appears in the context of security in cloud computing. The general setting of cloud computing is one in which there's a client and a server.
The client has some input data and wants to do some computation on it. She may not have enough resources to do the computation, or may otherwise be unwilling to do it herself, so she sends the data to the server, which does the computation on her behalf. This is a very general setting; a familiar specific example is searching the Internet, using Bing, for example. But it is also interesting in a more general scenario where the server performs an arbitrary computation on the input data. Now, first of all, the client may not be willing to disclose all her data to the server. Why would she? So the client demands privacy of her data, and one way to achieve it is to encrypt the data before sending it to the server: don't send the data in the clear, encrypt it. But now we have a problem, because what the server gets is encrypted data. It doesn't see what's inside the ciphertext, and yet it's supposed to execute the program, to perform some meaningful computation on the underlying data. So the question is: can we reconcile these opposing goals of privacy and functionality? In more concrete terms, challenge number two is: can we compute on encrypted data? These are the two challenges I'm going to address in this talk, and the talk will have three parts. In the first part I will show a mechanism to protect against side-channel leakage. This is a hugely active area in cryptography at this point, with many models of how side channels behave and many constructions of cryptographic primitives that are secure against side channels. In this talk I'll tell you about a simple model for side channels and the construction of a particular cryptographic primitive that's secure in this model.
This is joint work with Akavia and Goldwasser. In the second part of the talk I'll describe a mechanism to compute on encrypted data. This is done using a very powerful cryptographic primitive called a fully homomorphic encryption scheme; this is joint work with van Dijk et al. And in the third part I'll talk about my research philosophy, open questions, and future directions. That's the structure of the talk, and this is probably a good point to stop and see if everyone is on the same page. Okay. Wonderful. So let's jump right in. Part one: how to protect against side channels. The traditional approach has been to address side channels as they come along. In other words, for each particular kind of attack, design a tailor-made solution that handles that attack. This could be done in hardware, namely by manufacturing specific types of hardware that protect against specific attacks, or in software, by modifying programs so that they resist particular kinds of attacks. One drawback of both these solutions is that they are usually ad hoc, and they don't come with a proof or a guarantee that they actually do the job. Solutions have also been explored in a higher-level, algorithmic setting, where people have considered leakage of specific bits of the secret: say the first bit, the 50th bit, the 100th bit, et cetera. This is a specific kind of leakage, and people have explored it in a number of works. This general approach to side channels has two problems. One is scalability: every time you want to protect against a new side-channel attack, you have to incur the cost of building a new type of countermeasure. In other words, the cost of your solution grows with the number of side channels you want to protect against. Yes.
>>: Just curious about how you're distinguishing algorithmic and software attacks. There's a lot of overlap.
I'm not sure I really distinguish them that well.
>> Vinod Vaikuntanathan: Sure. The line is a little bit thin in that case. But what I mean by software is that I take a specific program. One approach people have explored to counteract timing attacks is to make sure the program runs for the same amount of time on every input.
>>: So it's not --
>> Vinod Vaikuntanathan: That's not algorithmic. So the line is a little bit thin. But the first problem with this approach is scalability: the cost grows with the number of side channels we need to protect against. The second, more subtle and probably more serious, problem is composability. Roughly, the problem is this: if I build one solution that protects against one side channel and another solution that protects against a second side channel, who says the two countermeasures won't cancel each other out? The question is whether the different protection mechanisms work well with each other, and that may not be the case. In fact, people have done a lot of work studying the effect these countermeasures have on each other. So there are a couple of problems with this traditional, attack-specific approach to counteracting side channels. What we will look at today is a modern approach: step back, come up with a model that captures a wide class of side channels, and design a solution in this model. If your model captures a large class of side channels, the solution will be secure against all these attacks as well. This approach was advocated and pioneered by Micali and Reyzin. In other words, instead of looking at side-channel attacks one by one, we want to define a model of the adversary interacting with the system, and the model should capture all these attacks in one go.
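The software countermeasure mentioned in the exchange above, making a program's running time independent of its secret input, is easiest to see when comparing a secret value (say, a MAC tag) against a guess. A minimal Python sketch follows; `naive_equal` and `constant_time_equal` are illustrative names, not from the talk, and `hmac.compare_digest` is the standard-library routine intended for this purpose. Note that this is exactly the kind of per-attack countermeasure the talk contrasts with the model-based approach: it addresses timing and nothing else.

```python
import hmac


def naive_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: running time depends on how many leading bytes match."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False  # returns sooner the earlier the first mismatch occurs
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Comparison whose running time does not depend on where equal-length inputs differ.

    hmac.compare_digest may still reveal the lengths of its inputs, but not their contents.
    """
    return hmac.compare_digest(a, b)


if __name__ == "__main__":
    tag = b"secret-mac-tag"
    print(naive_equal(tag, b"secret-mac-tax"), constant_time_equal(tag, tag))
```

Both functions return the same answers; only the timing behavior differs, which is why a timing attacker probing `naive_equal` byte by byte learns where the first mismatch is.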
Once you come up with this model, you want to construct cryptographic primitives and prove they're secure in this model. Yes?
>>: The military's approach to side-channel attacks: keep the crypto in a very secure location and don't let anybody in. Except for timing attacks, it seems to be a pretty good idea.
>> Vinod Vaikuntanathan: So it is. But that still doesn't prevent people from measuring the electromagnetic radiation that comes out of my cell phone when I'm walking around, for example.
>>: That's a cheap cell phone. If it were serious -- [indiscernible].
>>: But then you don't get affordability, which is his whole point.
>> Vinod Vaikuntanathan: Yes.
>>: If carried --
>>: For $5 you get affordability, too.
>>: So I put my phone on [indiscernible] I try to punch the buttons [indiscernible]. Anyway, keep going.
>> Vinod Vaikuntanathan: But good point. Okay. Good. So this is the general approach we want to take. As I said before, this is a very recent and very active area of research, with a number of models and matching results. Aside from the results I mentioned before, many researchers have come up with various kinds of models and constructions of cryptographic primitives. What I'm going to talk about today is one specific result, the work with Akavia and Goldwasser, where we proposed a particular model and a construction of a particular cryptographic primitive that's secure in this model. The model we propose is called the bounded leakage model. The starting observation for this model is that if you allow the side channel to leak an unbounded amount of information, there's really nothing you can do, because the leaked information could be the entire secret contained in the device itself.
So one condition that seems to be necessary is to limit the amount of information the side channel leaks, and that's our first restriction: the amount of leakage is less than the length of the secret key. In fact, it's a parameterizable quantity; the larger the amount of leakage a scheme tolerates, the stronger its security guarantee. So this is the first requirement we place on side channels. The second observation is that if you allow the side channel unlimited computing power, then it can break the cryptosystem itself. There's no way you can protect against such a side channel. That leads to our second restriction: the computation done by the side channel, which is an [indiscernible] for electromagnetic radiation or an oscilloscope, is polynomially bounded. That is the second restriction.
>>: Is it really enough to have the leakage be less than the key length?
>> Vinod Vaikuntanathan: No, a lot less than the length. In fact, it's a parameterizable quantity.
>>: Good.
>> Vinod Vaikuntanathan: I should say this is a very basic model; I'm presenting it for simplicity. In fact, it's possible to relax both these conditions, in particular the information limitation, quite a bit, and I'll be happy to talk about this one-on-one. Okay. Good. So this is the abstract model we'll work with today. Yes?
>>: So the first restriction is a restriction on the adversary or on the --
>> Vinod Vaikuntanathan: If you think about side channels, there are two entities. One is me, the attacker, using a particular hardware device to get some measurement of the computation. The other is the device. The restriction is on the device: the amount of information the device gives me and the amount of computation it can do. Does that answer your question?
>>: So you're saying this will only work if the device actually leaks less information than the length of the key?
>> Vinod Vaikuntanathan: Absolutely. You can see that if the amount of information the device leaks is more than the length of the secret key, it can potentially just leak the entire secret key itself. Good. So this is the model we'll work with today. For the rest of this part of the talk I'm going to use public-key encryption as my example primitive. Let's refresh our memory about what public-key encryption is. In this setting there are two people, Bob and Alice. Bob wants to send a message to Alice privately over a public channel. The way he does it is by having Alice choose two keys, a secret key and a public key. Alice publishes the public key and keeps the secret key to herself. Bob uses the public key to encrypt the message and sends the ciphertext to Alice over the public channel, and Alice uses her secret key to decrypt. It's a very simple setting. The attacker we will look at in this setting is an eavesdropping attacker; that's the most basic attack you can perform on a public-key encryption scheme. The attacker gets the public key, since it's out in the open, and sees the ciphertext, since it goes through a public channel, and it tries to learn some information about the underlying message. The classical security requirement is that the attacker learns no information about the underlying message.
>>: Your keys there, already looking, it's the direction --
>>: Public key?
>>: Because it's got the MIT marks on it. Your office keys.
>> Vinod Vaikuntanathan: Right. Aside from that. No. [laughter] I'm surprised you can see it from here.
>>: I can see it as well. I don't know what building it is. But security.
>>: No worries.
>>: Just joshing. Keep going.
>> Vinod Vaikuntanathan: We want to talk about the bounded leakage model, and the change we make to the security game is very simple. In addition to letting the adversary get the public key and the ciphertext, we also let him query the decryption oracle, the decryption box, with a leakage function F. This is the leakage function computed by either the oscilloscope or iranta [phonetic]; a single F models both of these leakages. In return, he gets back F applied to the secret key. So this is a very simple extension of the classical security model.
>>: So F is polynomial-time bounded? Oh, I guess --
>> Vinod Vaikuntanathan: Right, following our discussion before, we need two restrictions on the leakage function F. One is that it has bounded output length. This is a sliding scale: the larger the output length a scheme can tolerate, the more leakage it withstands and the better the security guarantee. The second restriction is that F is polynomial-time computable. Okay. So this is the concrete model for public-key encryption that we'll talk about. Now that we have a model, the question is: are there encryption schemes that are secure in this model, that protect against this class of attacks? Let's first look at the well-known encryption schemes we know and love. Unfortunately, it turns out that the RSA encryption scheme is insecure under bounded leakage: a number of results show that if you leak a small constant fraction of the bits of the secret key, you can break the entire cryptosystem. So RSA is out of the question. As for the El Gamal encryption scheme, there's no explicit attack that breaks it with leakage, but there's no guarantee or proof that it's secure either. So unfortunately these two systems are not good for us. What we show is that a newer class of encryption schemes, based on mathematical objects called lattices, are in fact secure against bounded leakage.
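The leakage query in the bounded leakage game can be pictured as an oracle that holds the secret key and answers functions of it, refusing any answer longer than the allowed bound. A minimal sketch under stated assumptions: the class name, the `lam` budget parameter, and the idea of splitting the budget across several queries are illustrative choices, not from the talk (the talk describes a single F with bounded output length; several queries sharing one total budget amount to the same thing). The second restriction, that F be polynomial-time, cannot be checked in code and is simply assumed.

```python
from typing import Callable


class BoundedLeakageOracle:
    """Hypothetical leakage oracle for the bounded leakage game.

    The adversary submits leakage functions f of the secret key, each declaring its
    output length in bits; the total leaked bits may not exceed `lam`.
    Polynomial-time computability of f is assumed, not enforced.
    """

    def __init__(self, secret_key: bytes, lam: int):
        self._sk = secret_key
        self.remaining = lam  # leakage budget, in bits

    def leak(self, f: Callable[[bytes], int], out_bits: int) -> int:
        if out_bits < 0 or out_bits > self.remaining:
            raise ValueError("leakage budget exceeded")
        value = f(self._sk)
        if not 0 <= value < 2 ** out_bits:
            raise ValueError("leakage output longer than declared")
        self.remaining -= out_bits
        return value


if __name__ == "__main__":
    # 256-bit key, 64-bit leakage budget: learning the first key byte costs 8 bits.
    oracle = BoundedLeakageOracle(bytes(range(32)), lam=64)
    print(oracle.leak(lambda sk: sk[0], out_bits=8), oracle.remaining)
```

A scheme is then called secure in this model if, even with such an oracle, the adversary still cannot learn anything about the encrypted message.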
The mathematical basis for these systems is not factoring or discrete log, but a different kind of object called lattices. We show that an encryption scheme proposed by Regev is secure against leakage of any constant fraction of the bits of the key, 99% or 99.99%. Subsequently, in work with Dodis, Goldwasser, Kalai, and Peikert, we showed that a different lattice-based encryption scheme also has this property. The interesting thing I want to point out is that these encryption schemes were not explicitly designed with leakage in mind. They were designed for a completely different purpose; it just so happens that leakage resilience is built into them. Okay. So that's what we show. Following our work, a number of other constructions were shown to be leakage resilient, some based on more standard assumptions such as the Diffie-Hellman assumption, and also a class of constructions based on what are called hash proof systems.
>>: Who are the authors on this, DHH?
>>: This is Dan Boneh from Stanford, Shai Halevi, Mike Hamburg, and Rafail Ostrovsky. So there are all these constructions. In the rest of this part of the talk I'm not going to focus on any specific construction. Instead we'll step back and ask: what is it that makes these schemes leakage resilient? Is there some underlying structure or principle behind these schemes that makes them secure against these attacks? In fact, I will show two principles, two conditions, such that if an encryption scheme satisfies both, it's automatically secure against bounded leakage. All the encryption schemes I showed you on the previous slide satisfy these conditions, so you can think of this as a way to explain why these schemes are leakage resilient.
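Regev's scheme is only named here. To make "lattice-based encryption" concrete, here is a toy version of Regev's learning-with-errors (LWE) bit encryption, with deliberately tiny, insecure parameters (dimension 16, 64 public samples, modulus 4093, errors in {-1, 0, 1} are illustrative choices, not from the talk). It shows how encryption and decryption work; it demonstrates nothing about leakage resilience.

```python
import random

N_DIM = 16       # secret dimension n (toy, insecure)
N_SAMPLES = 64   # number of LWE samples in the public key
Q = 4093         # modulus q (toy choice)


def keygen(rng: random.Random):
    """Public key: pairs (a_i, b_i = <a_i, s> + e_i mod q); secret key: s."""
    s = [rng.randrange(Q) for _ in range(N_DIM)]
    A = [[rng.randrange(Q) for _ in range(N_DIM)] for _ in range(N_SAMPLES)]
    e = [rng.choice((-1, 0, 1)) for _ in range(N_SAMPLES)]
    b = [(sum(a * x for a, x in zip(row, s)) + err) % Q for row, err in zip(A, e)]
    return (A, b), s


def encrypt(pk, bit: int, rng: random.Random):
    """Sum a random subset of public samples and add bit * floor(q/2) to the b-part."""
    A, b = pk
    subset = [i for i in range(N_SAMPLES) if rng.random() < 0.5]
    u = [sum(A[i][j] for i in subset) % Q for j in range(N_DIM)]
    v = (sum(b[i] for i in subset) + bit * (Q // 2)) % Q
    return u, v


def decrypt(s, ct) -> int:
    """v - <u, s> equals bit * floor(q/2) plus noise of size at most N_SAMPLES."""
    u, v = ct
    d = (v - sum(x * y for x, y in zip(u, s))) % Q
    return 1 if Q // 4 < d < 3 * Q // 4 else 0


if __name__ == "__main__":
    rng = random.Random(0)
    pk, sk = keygen(rng)
    print([decrypt(sk, encrypt(pk, m, rng)) for m in (0, 1, 1, 0)])
```

Decryption always succeeds with these sizes because the accumulated noise is at most 64 in absolute value, far below the q/4 decision margin.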
The first idea, the first property I require from an encryption scheme, is that for a given public key there are many possible secret keys; in fact, I require exponentially many possible secret keys for any given public key. If you're used to thinking about RSA or El Gamal, this is a foreign concept, because in RSA the public key is a product of two primes and the secret key is its unique factorization. So although you can't compute the secret key efficiently from the public key, it's uniquely determined.
>>: What you use as the secret key in RSA is the decryption exponent, which is the inverse of the encryption exponent.
>> Vinod Vaikuntanathan: Yes, you can add a multiple -- I'm going to get to that in a minute. So this is the first property we require. Of course, when the encryption algorithm encrypts a message, it has no clue which secret key the decryption box contains; it could be any of these exponentially many possibilities. Therefore, correctness of the encryption scheme requires that every one of these secret keys decrypts the ciphertext to the same message, namely the message the ciphertext originally encrypts. In other words, all these secret keys are functionally equivalent. And yet, even though they're functionally equivalent, we will show that partial information about any single secret key is totally useless to the attacker. Let's step back and think about this idea a little, to answer Jeff's question. You can take any encryption scheme you like and make it have this property: take the secret key and pad it with a bunch of random nonsense. The number of secret keys grows, but we haven't really done anything. So this idea by itself is not particularly useful. What makes it useful is the second idea.
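The exchange about RSA can be made concrete: the decryption exponent is not literally unique, since d + k·φ(N) decrypts just as well for every k ≥ 0. A toy sketch with the small textbook parameters p = 61, q = 53, e = 17 (chosen here purely for illustration and far too small to be secure). As the speaker notes about padding, this kind of redundancy is structurally trivial, so on its own it buys no leakage resilience.

```python
# Toy textbook-RSA parameters (illustration only; insecure sizes).
P, Q = 61, 53
N = P * Q                 # modulus, 3233
PHI = (P - 1) * (Q - 1)   # Euler's totient, 3120
E = 17                    # public exponent
D = pow(E, -1, PHI)       # modular inverse (Python 3.8+), gives 2753


def decrypt_with(d: int, c: int) -> int:
    """Textbook RSA decryption with exponent d."""
    return pow(c, d, N)


m = 65
c = pow(m, E, N)

# d, d + PHI, d + 2*PHI, ... are all valid decryption exponents,
# because E * (D + k * PHI) is congruent to 1 mod (P - 1) and mod (Q - 1).
recovered = [decrypt_with(D + k * PHI, c) for k in range(5)]
print(D, recovered)
```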
The second property I require from the encryption scheme is that there are two distinct ways to generate a ciphertext. One is the way Bob encrypts his message: take a message, use the public key, and compute a ciphertext. This is what Bob does in the real world. The second way is what I will call a fake encryption algorithm. The fake encryption algorithm is fake: no one ever executes it in the real world. It's just an artifact of the proof, a mental experiment that exists because we want to prove the scheme secure. The fake encryption algorithm is a randomized algorithm that takes no input and just produces a random ciphertext. This ciphertext behaves very differently from a real ciphertext: if you decrypt it with the various possible secret keys, you get a different message every time. If I take a random one of these secret keys and decrypt the fake ciphertext, I get a random message. So functionally, the fake ciphertext behaves very differently from a real ciphertext.
>>: You're going to show these are sufficient; are they also necessary? Do you have an answer?
>> Vinod Vaikuntanathan: No, I don't. A couple of slides before, I mentioned that El Gamal is not known to be secure in this setting. It turns out El Gamal does not satisfy either of these properties, but we have no clue whether it's secure or not. So I'm not sure these ideas are necessary, but this is one way to achieve security.
>>: And that's the lattice schemes, because they're all based on short vectors and you have a lot of equivalent short vectors? Is that where you're getting this from?
>> Vinod Vaikuntanathan: So, just a brief overview of these lattice schemes.
In the lattice-based encryption schemes, the public key is a point in n-dimensional space, and the secret key is any lattice vector that is close to this point. Depending on how you define close, there could be exponentially many such vectors. That's what gives the first property. Of course, this still doesn't explain why the scheme satisfies the other property, but it gives you an idea. So the fake ciphertext behaves very differently from the real ciphertext, and yet we require that if I see a fake ciphertext in one hand and a real ciphertext in the other, I can't tell which is which: fake ciphertexts are indistinguishable from real ones.
>>: Even given the secret key?
>> Vinod Vaikuntanathan: Even if you know a secret key. Even if you know a single secret key. So if you know --
>>: A real one will decrypt to the same thing under different keys, but the fake one won't.
>> Vinod Vaikuntanathan: You can't tell. So if you know a single key -- but anyway, this is the second property we need. Now I'm going to show that if you have these two properties, that's it: you get leakage resilience. The first step is to look at the fake world and the fake ciphertext. Since the fake ciphertext was generated without knowledge of the public key or the message, it contains no information about any message whatsoever. In fact, the fake ciphertext decrypts to a random message under a random choice of secret key. That's what happens when the adversary is given just the fake ciphertext. What if he's also given some leakage on the secret key? Say he gets one bit of leakage on the secret key. Originally, from his point of view, the number of possible secret keys was exponentially large. One bit of information about the key narrows down that set by a factor of two.
The number of possible secret keys is reduced by a factor of two. With two bits, it becomes a quarter. And if he gets a bounded amount of information about the secret key, there are still a great many possibilities left for the secret key, and a random one of these secret keys decrypts the fake ciphertext to a random message. Therefore the attacker still has no clue what the message is. Okay. So far everything happened in the fake world, and we don't even care about the fake world. But the key thing to observe is that a ciphertext produced in the fake world is indistinguishable from a ciphertext in the real world. So the adversary can't tell whether it got a fake ciphertext or a real one. It didn't have any information about the message in the fake world; why would it have any information in the real world? That's it. That's the end of the proof. All right. So I just told you about a simple model for side channels and a way to construct a public-key encryption scheme that is secure in this model. That finishes my section on side channels. Are there any --
>>: Supposing you have corresponding ciphertexts, would that help them at all?
>> Vinod Vaikuntanathan: Known ciphertexts, potentially. The attack you're mentioning is a stronger attack called a chosen -- I guess a known-ciphertext attack. Potentially it could help. But, again, don't hold me to this, I think I can show that this scheme is secure against a known-ciphertext attack, given ciphertext-message pairs, simply because it's a public-key encryption scheme: I can construct messages and ciphertexts by myself. A chosen-ciphertext attack is a different issue; the scheme may not actually be secure against that. We can take this offline. Yes?
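The "each bit of leakage halves the set" step can be stated more carefully. A hedged formalization in standard information-theoretic notation (the notation is not from the talk): if the secret key $SK$ has min-entropy $k$ (for instance, uniform over $2^{k}$ candidate keys) and the leakage $F(SK)$ takes at most $2^{\lambda}$ distinct values, then the average min-entropy of the key given the leakage drops by at most $\lambda$:

$$
\tilde{H}_\infty\big(SK \mid F(SK)\big) \;\ge\; H_\infty(SK) - \lambda \;=\; k - \lambda,
\qquad
\tilde{H}_\infty(X \mid Y) \;=\; -\log_2 \mathbb{E}_{y \leftarrow Y}\Big[\max_{x} \Pr[X = x \mid Y = y]\Big].
$$

For example, with $k = 1000$ bits of key entropy and $\lambda = 500$ bits of leakage, the attacker's average probability of guessing the key exactly is still at most $2^{-500}$.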
>>: How do I know if my system only leaks a very small amount of information? That's an engineer's problem? [laughter]
>> Vinod Vaikuntanathan: Exactly.
>>: If I put an antenna at your smart card, you're leaking continuous data, even over a bounded [indiscernible] path; how do I know there's no bit in there?
>> Vinod Vaikuntanathan: How do you know that the leakage doesn't let you recover the unique secret key? I'm going to borrow John's answer and say it's an engineer's problem.
>>: But your motivation for this was that all these countermeasures engineers put in are heuristic and don't come with any formal proof, and you were going to avoid that problem.
>> Vinod Vaikuntanathan: One way to look at this solution is: if you can guarantee to me that the amount of information leaked by a particular attack is bounded, I'm going to show you a provable way to protect against this attack. So you should really think of the solution as a combination of a hardware countermeasure and an algorithmic countermeasure. You give me the guarantee that the amount of information is bounded; here's the solution.
>>: Isn't it just a reasonable [indiscernible]? It's just the channel.
>> Vinod Vaikuntanathan: There are a couple of different answers to your question. Maybe we can -- okay. So this is what I wanted to say about side channels. Let's move on. The second part is how to compute on encrypted data. Let's remind ourselves of our motivating example. There's a client and a server. The client sends encrypted data to the server, and the server has to perform computation on the encrypted data and send back the encrypted result. This makes sense in various specific contexts, for example encrypted Internet search, and it turns out that for specific instances of this problem there are already tailor-made solutions.
For example, searchable encryption is a cryptographic mechanism that solves encrypted Internet search. What we're concerned with today is performing general computation on encrypted data, and a cryptographic tool that is really helpful in this general context is fully homomorphic encryption. That's what I'll talk about for the rest of the talk.
>>: So the data sent by the user is protected, but not the data sent back to the user, correct?
>> Vinod Vaikuntanathan: Well, the question is what does it mean for -- it could be that we want to protect the program as well, so that the client doesn't know what program the server's running, and we want to hide this from her. But that's a different concern that we're not going to focus on.
>>: In your case you care about bounded results, that's what the --
>> Vinod Vaikuntanathan: Yes.
>>: [indiscernible].
>> Vinod Vaikuntanathan: Well, another way to answer your question is that the computation on the encrypted data is performed without knowledge of the secret key. It's a public computation; anyone can do it. So the encrypted result cannot leak any more than the original ciphertext does, because I'm just performing some public computation on the encrypted data. Okay. So this is the problem we're concerned with. We're going to model an arbitrary program as a boolean circuit. If you go down to the most basic level, you can write any computation as a combination of exclusive-or (XOR) gates and AND gates, so I'm going to write the program as a big boolean circuit. Exclusive-or is addition in the field of size two, and AND is multiplication; that's the equivalence I'm going to use. So what is a fully homomorphic encryption scheme? It is a scheme where you can compute the XOR of two encrypted bits and the AND of two encrypted bits; in other words, you can add and multiply encrypted data.
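The reduction to two gates can be checked directly. A minimal sketch (function names are illustrative): with bits as elements of GF(2), XOR is addition and AND is multiplication, and the other gates follow, for example NOT a = a + 1 and a OR b = a + b + ab. A homomorphic scheme that supports encrypted addition and multiplication, plus an encryption of the constant 1, could therefore evaluate any of these gates, and hence any circuit, without decrypting.

```python
from itertools import product


def add(a: int, b: int) -> int:
    """XOR = addition mod 2."""
    return (a + b) % 2


def mul(a: int, b: int) -> int:
    """AND = multiplication mod 2."""
    return (a * b) % 2


def gate_not(a: int) -> int:
    # NOT a = a + 1 over GF(2); needs the constant 1.
    return add(a, 1)


def gate_or(a: int, b: int) -> int:
    # a OR b = a + b + ab over GF(2).
    return add(add(a, b), mul(a, b))


def majority(a: int, b: int, c: int) -> int:
    # MAJ(a, b, c) = ab + bc + ca over GF(2).
    return add(add(mul(a, b), mul(b, c)), mul(c, a))


if __name__ == "__main__":
    for a, b in product((0, 1), repeat=2):
        print(a, b, gate_or(a, b), gate_not(a))
```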
Because you can write any computation as a combination of AND and XOR gates, if you can do both of these you can do everything. Right? So that's fully homomorphic encryption. What do we know about fully homomorphic encryption? This concept was first defined under the name privacy homomorphism by [indiscernible] in early 1978, when they didn't really have a candidate scheme that satisfied the definition. It was a remarkable act of foresight to define this without having a candidate scheme. Their motivation was actually the modern motivation for constructing these schemes, namely searching encrypted data. What we knew so far were limited variants of homomorphic encryption. For example, the RSA scheme is multiplicatively homomorphic: if I give you encryptions of two different messages, you can compute an encryption of the product of the two messages. The El Gamal and Goldwasser-Micali encryption schemes are additively homomorphic: you can add encrypted plaintexts but you can't multiply them. There are also mechanisms that compute very limited classes of functions on encrypted bits, namely quadratic formulas. The real question is: can you do arbitrary computation on encrypted data? That's been open for nearly 30 years, until last year Craig Gentry constructed the first instantiation of a fully homomorphic scheme. His construction was based on very sophisticated new mathematics based on ideals in polynomial rings. So a fully homomorphic scheme is a very powerful object, and it lets you do a lot of very nice, useful things. The question that Gentry's construction raises is: is there a simple and elementary way of achieving fully homomorphic encryption? I don't want to have to learn algebraic number theory to understand -- >>: It would help to learn algebraic geometry. >> Vinod Vaikuntanathan: Algebraic X. [laughter].
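A minimal sketch of the multiplicative homomorphism of RSA mentioned above, using textbook (unpadded) RSA with the toy primes 61 and 53. These parameters are illustrative assumptions and completely insecure; padded RSA as deployed in practice (for example with OAEP) does not preserve this property. Multiplying two ciphertexts modulo n, without the secret key, yields a ciphertext of the product of the plaintexts, because (m1^e)(m2^e) = (m1 m2)^e mod n.

```python
# Textbook (unpadded) RSA with toy primes: insecure, for illustration only.
p, q = 61, 53
n = p * q                  # public modulus
phi = (p - 1) * (q - 1)
e = 17                     # public exponent, coprime to phi
d = pow(e, -1, phi)        # private exponent via modular inverse (Python 3.8+)

def enc(m: int) -> int:
    return pow(m, e, n)

def dec(c: int) -> int:
    return pow(c, d, n)

m1, m2 = 7, 11
c_prod = (enc(m1) * enc(m2)) % n      # multiply ciphertexts only; no secret key involved
assert dec(c_prod) == (m1 * m2) % n   # decrypts to the product of the plaintexts
print(dec(c_prod))
```

Here the product ciphertext decrypts to 77. Note that only multiplication is supported; there is no corresponding operation on RSA ciphertexts that adds the plaintexts, which is exactly the limitation described in the talk.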
>> Vinod Vaikuntanathan: So the question is: is there an elementary construction of this object? In other words, the question we want to ask is what simple mathematical objects permit both addition and multiplication. What we show is the construction of a fully homomorphic encryption scheme based on integers, where the basic operations in the scheme are just plain addition and multiplication of integers. No ideals, no polynomial rings, just simple operations. >>: Bounded in some way? >> Vinod Vaikuntanathan: Integers mod something. >>: How big is the thing? The penalty for Gentry's function frightens me. I'm more afraid of the [indiscernible] than of having to learn new math. >> Vinod Vaikuntanathan: The answer is kind of unfortunate. If you're frightened by the cost of Gentry's scheme, you'll be really frightened by -- but the point I'm trying to make is that you can improve on a construction only if you understand it, only if you have a good idea how the encryption scheme behaves. And therefore what we should do is construct simple, elementary encryption schemes. So we showed two constructions. One is based on integers, and the security of that scheme is based on the approximate GCD problem. The second construction uses addition and multiplication of matrices, and this scheme is not fully homomorphic: it can only evaluate a limited set of functions, namely quadratic formulas. Its security is based on standard, well-studied lattice problems. What I'm going to talk about is the integer-based construction. So let's jump into the scheme. First of all, we've been talking about public-key encryption all over the place, but what I'm going to describe to you is a secret-key homomorphic encryption scheme. Namely, to encrypt a message I need the secret key, and I decrypt the ciphertext using the same secret key.
That's the setting. In fact, this setting is already useful in the context of cloud computing, because there the sender and receiver are the same entity. So how does the encryption scheme work? The secret key is an N-bit odd number P, where N is pretty large. How do I encrypt a bit B? For simplicity, I'm going to talk about encryption schemes that encrypt a single bit. To encrypt B, I pick a large multiple of P, say Q times P, where Q is a very large number. I pick a small even number, 2 times R. And I add all these quantities together with the message. So the ciphertext is Q times P, which is a large number, plus the small noise term, 2 times R plus B. That's the ciphertext. Okay. How do I decrypt this ciphertext? I have the secret key. If I take the ciphertext modulo P, what I get is the noise term, 2 times R plus B. In fact, the key thing to note is that I get the exact noise term: not just something congruent to 2 times R plus B, but that number by itself. That's an important thing to note. Once I have this noise term, I can just read off the least significant bit, and that's the decryption algorithm. So this is the entire encryption scheme. Any questions? Comments? Good. Wonderful. What I didn't tell you is how to add and multiply encrypted bits, so that's the next thing we'll go over. At a high level, addition and multiplication work because if I take two ciphertexts and add or multiply them, the noise term is still going to be small. Assuming that the original noise is really small, the noise terms are not going to grow by too much. >>: Would the number P not encrypt successive messages with the same key? >> Vinod Vaikuntanathan: No. So that's part of the assumption.
The assumption, the approximate GCD assumption, is that if I give you many near multiples of P, that is, large multiples plus noise, you can't recover P. That's the assumption. Good. So at a high level, addition and multiplication work because the noise terms do not grow by too much when you add and multiply numbers. Let's look at it more concretely. Take two ciphertexts, one encrypting a bit B1 and the other encrypting B2. Addition of the underlying bits is done by simply adding these two numbers as integers. When you add these two numbers, I get a multiple of P, plus a noise term which is slightly bigger than the original noise because I added two of them together, plus the sum of the two bits. So now if I take this sum mod P and read off the least significant bit, I get the exclusive OR of the two bits. How do you multiply? It proceeds essentially the same way, except that the algebra gets a little bit more complicated. When you multiply two ciphertexts, I again get a multiple of P plus a small even noise plus the product of the two bits. The noise is much bigger than before because if you start with sort of an N bit noise -- >>: What's an N bit noise? >> Vinod Vaikuntanathan: So I started with an N to the half bit noise and an N to the half bit noise, and what I get is a two times N to the half bit noise. That's the resulting noise. And if I take this mod P and read off the least significant bit, I again get the product of these two bits mod two. So the homomorphic operations, homomorphic addition and multiplication, are simply adding and multiplying the underlying integer ciphertexts. Josh, question? >>: You get N bit noise. >>: N bits times two. Two N bits. So we're missing something -- >> Vinod Vaikuntanathan: N bits. >>: Square root. Square root of. >>: Oh, square root. >> Vinod Vaikuntanathan: N bits. >>: Never mind. >>: Thank you. >> Vinod Vaikuntanathan: Good.
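The secret-key integer scheme described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not the authors' implementation: the bit lengths (a 1024-bit odd P, a 32-bit noise R of roughly the square root of N, a 2048-bit multiplier Q) are hypothetical choices far too small for security; R is kept non-negative with its top bit set so that C mod P returns the noise term 2R + B exactly; and the helper names `keygen`, `encrypt`, `decrypt`, `add`, `mul` are illustrative.

```python
import secrets

def keygen(n_bits: int = 1024) -> int:
    """Secret key: a random odd integer P with exactly n_bits bits."""
    return secrets.randbits(n_bits) | (1 << (n_bits - 1)) | 1

def encrypt(p: int, bit: int, noise_bits: int = 32, q_bits: int = 2048) -> int:
    """C = Q*P + 2*R + B with a large random Q and a small non-negative noise R."""
    q = secrets.randbits(q_bits)
    r = secrets.randbits(noise_bits) | (1 << (noise_bits - 1))  # R has exactly noise_bits bits
    return q * p + 2 * r + bit

def noise(p: int, c: int) -> int:
    """C mod P recovers the exact noise term 2R + B as long as it stays below P."""
    return c % p

def decrypt(p: int, c: int) -> int:
    """Read off the least significant bit of the noise term."""
    return noise(p, c) % 2

def add(c1: int, c2: int) -> int:
    return c1 + c2   # plain integer addition: XOR of the underlying bits

def mul(c1: int, c2: int) -> int:
    return c1 * c2   # plain integer multiplication: AND of the underlying bits

if __name__ == "__main__":
    p = keygen()
    for b1 in (0, 1):
        for b2 in (0, 1):
            c1, c2 = encrypt(p, b1), encrypt(p, b2)
            assert decrypt(p, add(c1, c2)) == b1 ^ b2
            assert decrypt(p, mul(c1, c2)) == b1 & b2
    fresh = encrypt(p, 1)
    print("fresh noise bits:  ", noise(p, fresh).bit_length())
    print("product noise bits:", noise(p, mul(fresh, fresh)).bit_length())
```

With these parameters a fresh noise term has 33 bits and the noise of a product has about 65, so one multiplication roughly doubles the noise length, matching the "N to the half" to "two times N to the half" growth described above.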
So -- >>: I made that mistake. >>: How big is N, if you want security? >> Vinod Vaikuntanathan: That's a good question. It depends on how secure we believe this approximate GCD problem is. I don't have a good enough answer for you -- actually, I don't have any answer for you. We have to study this problem and see how difficult it is. We do some of that in the paper, but we don't have a concrete recommendation for what the security parameter should be. >>: So the approximate GCD problem was just introduced a year or two ago? >> Vinod Vaikuntanathan: Good. This problem has been known in various forms and shapes before. If you look at it a little more carefully, you can see it is a variant of the simultaneous Diophantine approximation problem: I give you fractions, and you find me a fraction which approximates each of the numbers I give you. This problem was fairly well studied. In fact, Jeff Lagarias has work on it as far back as 1982, and people have studied this problem for a while. The name was introduced by Howgrave-Graham like five years ago. >>: I suppose you're going to get to this quickly. But you go through multiplications -- >> Vinod Vaikuntanathan: Good. So you see that the least significant bit is a product of the -- oh, great. Good. So clearly this scheme has two problems. One is that if I multiply two ciphertexts, the size of the resulting ciphertext grows. Essentially, when you compute a big program on the ciphertext, the resulting ciphertext is as big as the size of the program itself. So if you think in terms of the cloud computing example, the receiver gets this huge ciphertext, and just reading the whole thing takes as much time as computing the program. So this is a huge problem. I'm not going to talk in detail about how we solve this problem, but the rough idea is that we give the server another near multiple of P.
And every time you do a homomorphic operation, you take the result mod this near multiple. I'm not giving you any extraneous information, because instead of giving you a hundred near multiples of P, I'm giving you 101 near multiples. The problem doesn't change that much, but it does decrease the size of the ciphertext. The more serious problem is that the underlying noise in the ciphertext grows whenever you add or multiply numbers. This is harder to handle. In fact, the first thing we observe is that we can already do a limited number of additions and multiplications with this basic scheme. And then there's a way to bootstrap these limited additions and multiplications to get computation of an arbitrary circuit. This is a very beautiful technique that Craig Gentry introduced in his original construction. Putting these two things together essentially solves our problem. Good. So that's all I wanted to say about the construction. Any questions? >>: How important is that? I think the bound on R was the square root of N bits. How important is that, to be able to increase it, say, or how flexible is it? >> Vinod Vaikuntanathan: You can make it a polynomial, let's say N to the 0.99. And you will still get -- the larger you make that noise, the larger your noise grows when you do homomorphic operations, and the smaller the number of homomorphic operations you can do with this basic scheme. So the amount of noise dictates the number of homomorphic operations that you can do. On the other hand, if you have a larger amount of noise, it's conceivable that the underlying problem is harder. So there's a trade-off here: the smaller you make the noise, the easier the underlying problem becomes and the more homomorphic operations you can do. So square root of N is sort of an arbitrary number which lets me do log N multiplications. That's sort of the threshold I want to wager. >>: Log N, because -- >> Vinod Vaikuntanathan: Order of log N. Order of log N.
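To make the noise versus depth trade-off concrete, here is a hypothetical experiment with the same kind of toy scheme (self-contained; the parameter choices and the helper `multiplicative_depth` are assumptions for illustration). It squares an encryption of 1 over and over, tracking the exact noise term alongside the ciphertext, and counts how many squarings still decrypt correctly when the noise R has about the square root of N bits. It does not implement bootstrapping or the reduction modulo an extra near multiple of P.

```python
import math
import secrets

def keygen(n_bits: int) -> int:
    """Secret key: a random odd integer P with exactly n_bits bits."""
    return secrets.randbits(n_bits) | (1 << (n_bits - 1)) | 1

def encrypt(p: int, bit: int, noise_bits: int, q_bits: int) -> int:
    q = secrets.randbits(q_bits)
    r = secrets.randbits(noise_bits) | (1 << (noise_bits - 1))  # R has exactly noise_bits bits
    return q * p + 2 * r + bit

def multiplicative_depth(n_bits: int, noise_bits: int, q_bits: int = 2048) -> int:
    """Count how many times an encryption of 1 can be squared and still decrypt to 1."""
    p = keygen(n_bits)
    c = encrypt(p, 1, noise_bits, q_bits)
    err = c % p                 # exact noise term 2R + 1 of the fresh ciphertext
    depth = 0
    while True:
        c, err = c * c, err * err   # squaring C squares its noise term exactly
        if err >= p:                # noise no longer fits below P: C mod P stops being the noise
            return depth
        assert (c % p) % 2 == 1     # still decrypts to 1 AND 1 = 1
        depth += 1

if __name__ == "__main__":
    for n_bits in (256, 1024, 4096):
        noise_bits = math.isqrt(n_bits)          # noise of about sqrt(N) bits
        print(n_bits, multiplicative_depth(n_bits, noise_bits), 0.5 * math.log2(n_bits))
```

Because each squaring doubles the bit length of the noise, the number of multiplications grows by one every time N is multiplied by four; for these parameters it comes out one less than (1/2) log2 N, which is the "order of log N" in the answer above. The ciphertext itself also doubles in length with every squaring, which is the ciphertext-size problem mentioned earlier.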
So that's that. For the rest of the talk, two slides: I want to tell you a little bit about my research philosophy and about a couple of open questions that this work leaves behind. I think cryptography is an exciting field to work in, because it takes practical problems, problems that arise in practice, and solves them using very exciting mathematical techniques. This combination is what makes cryptography such an exciting thing to do for me. What I told you about today is two exciting applications that we solved using cryptographic techniques. The first is security against side channels, and the second is computing on encrypted data. Both are new fields, just in their infancy, and there's a lot of work to be done. A big question that arises in the context of security against side-channel attacks is the following. I showed you a particular construction of a public-key encryption scheme that's secure against side channels. The big question is: can you take any program which is not necessarily secure against side channels and immunize it, that is, find a general way of transforming any program into a leakage-resilient program? For this to be practical, I want to do this without killing the efficiency of the program. This is, I think, a very big question in this field. Number two, the big question in the context of computing on encrypted data is how efficient you can make the fully homomorphic encryption scheme. The scheme I presented to you is very slow. It's polynomial time, but it's a big polynomial. We did a back-of-the-envelope computation of the exponent, the exact polynomial, and I think the number that we came up with is N to the 8. In other words, if executing a program takes K steps, executing it under the encryption takes N to the eight times K steps, where N is the security parameter. >>: But the size of the [indiscernible]. >> Vinod Vaikuntanathan: Sure.
So the really big question here is: can you take this algorithm and make some improvements in the implementation? That might already bring down the complexity quite a lot. And the question is: can you design completely different schemes that are dramatically more efficient? That's the big question. One thing I didn't get to tell you about is my work on the mathematical foundations of cryptography. A lot of these works use, either explicitly or implicitly, the encryption schemes that I developed based on lattices. Lattices are geometric objects that look like periodic grids in space, but they embed very hard computational problems. This is a very new mathematical field that we have based cryptography on. And it turns out that the schemes that we develop in this context are useful in protecting against side channels, and the insights are also very useful in constructing the homomorphic encryption scheme. So this is another big set of problems that I'm interested in. And that's that. [applause] >>: Your integer lattice scheme has a little bit of the flavor of the [indiscernible] schemes a few years back. Is it just -- >> Vinod Vaikuntanathan: There's no formal relation that I can prove yet. One superficial difference is that all lattice-based schemes use N-dimensional lattices, and here we're just working with integers. So that's a very superficial difference. But the real answer to your question is that a lot of the insights are the same in constructing both these schemes, but I don't know of a formal connection. >>: Okay. >>: I'll ask at this point, though this is probably something we can do offline. I'd love to get a better sense for how the smoothing works to keep the [indiscernible] down. I imagine that's more than a slide or two. >> Vinod Vaikuntanathan: Yes. Yes. In fact, handling these two problems that I mentioned is 15 full minutes of the talk. >>: So we'll do that later.
>>: [indiscernible]. >>: I was going to ask about your estimate of the N to the eighth, whether that comes out of those details. >> Vinod Vaikuntanathan: It comes out of the details. The security parameter that we work with is the size of the noise; let's call that K, which is the square root of N. So the odd number P that I need to pick is of size K squared. And it turns out that the problem is actually easy if the multiple Q that I choose is too small, so I need to pick Q to be roughly the square of the size of P. That's K to the fourth. And then the bootstrapping process squares this number again, so that's K to the 8. I'm not sure if this explains anything, but the complexity grows very fast when you put all these steps together. >>: Still, it sounds more like the size, but you're actually saying the steps grow [phonetic]. >> Vinod Vaikuntanathan: The number of steps grows too; that's probably not apparent from what I described. But once you do this bootstrapping process, the time required to process every gate is essentially K to the power 8, the size of these numbers. I can explain this offline. >>: Another thing, following up on one of Brian's comments in the first half of your talk: instead of the shortest vector problem, does it actually reduce to the closest vector problem? >> Vinod Vaikuntanathan: It's not the closest vector problem. It's something that looks like the closest vector problem. The closest vector problem says: I give you a point and you're supposed to find the actual closest lattice point. The problem that I'm looking at here is that I give you a point in space and you're supposed to find some close point. >>: Which is LQ does. >> Vinod Vaikuntanathan: Yes. Some close point within a certain radius. There are exponentially many close points, and you're supposed to find one of them.
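The parameter arithmetic in this answer can be written out as follows, where |x| denotes the bit length of x. This only restates the speaker's back-of-the-envelope figures, assuming the bootstrapping step squares the size once more (which is what the step from K to the fourth to K to the 8 implies); it is not a precise parameter set from the paper.

$$
K = \sqrt{N},\qquad |R| \approx K,\qquad |P| \approx K^{2} = N,\qquad |Q| \approx |P|^{2} = K^{4},\qquad \text{cost per gate after bootstrapping} \approx \left(K^{4}\right)^{2} = K^{8}.
$$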
>> Kristin Lauter: Other questions? Okay. Thank you. [applause]