Monte Carlo Simulation - YSU Computer Science & Information


Approximating Probabilities and Percentage Points in Selected Probability Distributions

Problems in Statistical Computing:

1. Finding p where

$$p = F(x_p) \quad \text{and} \quad F(x) = \int_{-\infty}^{x} f(t)\,dt$$

In many cases, F(x) is not expressible in closed form and we must approximate this integral.

2. Finding x_p where

$$p = F(x_p) \quad \text{and} \quad F(x) = \int_{-\infty}^{x} f(t)\,dt$$

To solve the problem:

Approximate Transformation of Random Variables, e.g., Normal approximation to Chi-square.

Closed Form Approximations, e.g., using Polynomial approximation. (The Weierstrass Approximation Theorem: if F is real-valued and continuous on a compact interval [a,b], there is a polynomial ...)

Numerical Techniques: Newton-Cotes Quadrature, Gaussian Quadrature.
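As an illustration of the numerical route, the following minimal sketch approximates both problems for the standard normal distribution. It assumes composite Simpson's rule (a Newton-Cotes formula) for p = F(x) and plain bisection for x_p; the function names and the choice of the normal density are illustrative and do not come from the notes.

```python
import math

def normal_pdf(t):
    """Standard normal density f(t)."""
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)

def simpson(f, a, b, n=200):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for j in range(1, n):
        total += (4 if j % 2 else 2) * f(a + j * h)
    return total * h / 3.0

def normal_cdf(x):
    """Problem 1: p = F(x), using F(x) = 0.5 + integral of f from 0 to x."""
    return 0.5 + simpson(normal_pdf, 0.0, x)

def normal_quantile(p, lo=-10.0, hi=10.0, tol=1e-10):
    """Problem 2: solve F(x_p) = p by bisection (F is increasing)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if normal_cdf(mid) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

print(normal_cdf(1.96))        # close to 0.975
print(normal_quantile(0.975))  # close to 1.96
```

Bisection is slow but needs only that F is increasing; a Newton iteration would converge faster at the cost of evaluating f as well.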

Simulation

Study and experiment with the complex internal interactions of a given system, e.g., a firm, an industry, an economy, a rocket system, ... .

Study complex systems that cannot be described in terms of a set of mathematical equations for which analytic solutions are obtainable.

Validating the mathematical models describing a system with simulated data to reduce cost.

A pedagogical device for teaching both students and practitioners basic skills in theoretical analysis, statistical analysis and decision making, e.g., business administration, economics, medicine, law, ... .

Monte Carlo Simulation

The Monte Carlo method is a technique that uses random or pseudo-random numbers to solve a model.

In 1908 the famous statistician Student used the Monte Carlo method for estimating the correlation coefficient in his t-distribution.

The term "Monte Carlo" was introduced by von Neumann and Ulam during World War II, as a code word for the secret work at Los Alamos; it was suggested by the gambling casinos at the city of Monte Carlo in Monaco. The Monte Carlo method was then applied to problems related to the atomic bomb. The work involved direct simulation of behavior concerned with random neutron diffusion in fissionable material.
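To make the idea concrete, here is a small sketch that estimates a probability by simulation instead of integration: it draws standard normal variates and reports the fraction that fall at or below x. The function name `mc_probability`, the sample size, and the seed are assumptions made for illustration only.

```python
import math
import random

def mc_probability(x, n, seed=12345):
    """Estimate p = P(Z <= x) for standard normal Z from n pseudo-random draws."""
    rng = random.Random(seed)          # fixed seed so the run is repeatable
    hits = sum(1 for _ in range(n) if rng.gauss(0.0, 1.0) <= x)
    p_hat = hits / n
    # Binomial standard error of the estimated proportion
    std_err = math.sqrt(p_hat * (1.0 - p_hat) / n)
    return p_hat, std_err

p_hat, std_err = mc_probability(1.96, 100_000)
print(p_hat, std_err)   # p_hat should be near 0.975
```

The standard error shrinks like 1/sqrt(n), so each extra decimal digit of accuracy costs roughly 100 times more draws.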

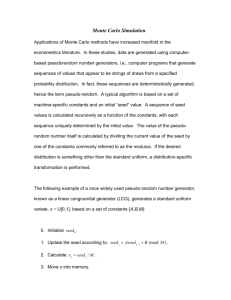

Pseudo-Random Numbers ( Quasi-Random Numbers )

Monte Carlo simulations are inherently probabilistic, and thus make frequent use of programs to generate random numbers, as do many other applications. On a computer, these numbers are not random at all -- they are strictly deterministic and reproducible, but they look like a stream of random numbers. For this reason such programs are more correctly called pseudo-random number generators.

A standard pseudo-random number generator aims to produce a sequence of real random numbers that are uncorrelated and uniformly distributed in the interval [0, 1]. Such a generator can also be used to produce random integers and sequences with a probability distribution that is not uniform.

Essential Properties of a Random Number Generator

Repeatability -- the same sequence should be produced with the same initial values (or seeds). This is vital for debugging.

Randomness -- should produce independent uniformly distributed random variables that pass all statistical tests for randomness.

Long period -- a pseudo-random number sequence uses finite precision arithmetic, so the sequence must repeat itself with a finite period. This should be much longer than the number of random numbers needed for the simulation.

Insensitive to seeds -- period and randomness properties should not depend on the initial seeds.
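Repeatability is easy to demonstrate with Python's standard `random` module, whose generator is a Mersenne Twister. The sketch below is only an illustration; the helper name `sample` and the seed values are arbitrary.

```python
import random

def sample(seed, n=5):
    """Return the first n uniform numbers produced from a given seed."""
    rng = random.Random(seed)   # independent generator with its own state
    return [rng.random() for _ in range(n)]

run_a = sample(42)
run_b = sample(42)
run_c = sample(43)

print(run_a == run_b)   # same seed, same sequence
print(run_a == run_c)   # different seed, different sequence
```

Using a separate `random.Random` instance per simulation, rather than the module-level functions, keeps one part of a program from disturbing the stream used by another.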

Further Properties of a Good Random Number Generator

Portability -- should give the same results on different computers.

Efficiency -- should be fast (small number of floating point operations) and not use much memory.

Disjoint subsequences -- different seeds should produce long independent (disjoint) subsequences so that there are no correlations between simulations with different initial seeds.

Homogeneity -- sequences of all bits should be random.

Random Number Generation

Congruential Generator

Congruential methods are based on a fundamental congruence relationship, which may be expressed as

$$X_{i+1} = (a X_i + c) \pmod{m}, \qquad i = 1, \ldots, n, \tag{I}$$

where the multiplier a, the increment c, and the modulus m are nonnegative integers. The modulo notation (mod m) means that

$$X_{i+1} = a X_i + c - m k_i,$$

where $k_i = \lfloor (a X_i + c)/m \rfloor$ denotes the largest integer not exceeding $(a X_i + c)/m$.

Given an initial starting value $X_0$, also called the seed, (I) yields a congruence relationship (modulo m) for any value i of the sequence $\{X_i\}$. Generators that produce random numbers according to (I) are called mixed congruential generators. The random numbers on the unit interval [0, 1) can be obtained by

$$U_i = X_i / m$$

Such a sequence will repeat itself in at most m steps, and will therefore be periodic. For example, let $a = c = X_0 = 3$ and $m = 5$; then the sequence obtained from the recursive formula $X_{i+1} = (3 X_i + 3) \pmod{5}$ is $X_i = 3, 2, 4, 0, 3$.

The period of the sequence is 4.
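The worked example can be reproduced with a few lines of code. This is a minimal sketch of a mixed congruential generator; the names `lcg` and `period` are illustrative, and the period is found by brute force, which is only practical for small m.

```python
from itertools import islice

def lcg(a, c, m, seed):
    """Yield X_1, X_2, ... from X_{i+1} = (a*X_i + c) mod m with X_0 = seed."""
    x = seed
    while True:
        x = (a * x + c) % m
        yield x

def period(a, c, m, seed):
    """Length of the cycle that the sequence eventually enters."""
    seen = {}                 # value -> index of first appearance
    x, i = seed, 0
    while x not in seen:
        seen[x] = i
        x = (a * x + c) % m
        i += 1
    return i - seen[x]

xs = [3] + list(islice(lcg(3, 3, 5, 3), 4))
print(xs)                     # [3, 2, 4, 0, 3]
print(period(3, 3, 5, 3))     # 4
print([x / 5 for x in xs])    # the corresponding U_i = X_i / m
```

Note that the value 1 never appears: with these parameters it is a fixed point, since (3*1 + 3) mod 5 = 1.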

Let p be the period of the sequence. When p equals its maximum, that is, when p = m, we say that the random number generator has a full period. The generator defined in (I) has a full period, m, if and only if

c is relatively prime to m, that is, c and m have no common divisor other than 1;

$a \equiv 1 \pmod{g}$ for every prime factor g of m;

$a \equiv 1 \pmod{4}$ if m is a multiple of 4.

Since most computers use a binary system, we can select $m = 2^{\beta}$, where $\beta$ denotes the word length of the particular computer. (With this choice, taking c odd and $a \equiv 1 \pmod{4}$ guarantees a full period.)
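The three full-period conditions can be checked mechanically and compared with the period observed by brute force. The sketch below assumes small moduli so that enumeration is cheap; the function names and the parameter triples are illustrative, not taken from the notes.

```python
from math import gcd

def prime_factors(n):
    """Set of prime factors of n, by trial division."""
    factors = set()
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.add(d)
            n //= d
        d += 1
    if n > 1:
        factors.add(n)
    return factors

def has_full_period(a, c, m):
    """Apply the three conditions for a full period of length m."""
    if gcd(c, m) != 1:                                    # c relatively prime to m
        return False
    if any((a - 1) % g != 0 for g in prime_factors(m)):   # a = 1 (mod g)
        return False
    if m % 4 == 0 and (a - 1) % 4 != 0:                   # a = 1 (mod 4)
        return False
    return True

def observed_period(a, c, m, seed=0):
    """Cycle length found by running the generator until a value repeats."""
    seen = {}
    x, i = seed, 0
    while x not in seen:
        seen[x] = i
        x = (a * x + c) % m
        i += 1
    return i - seen[x]

for a, c, m in [(3, 3, 5), (5, 3, 16), (1, 1, 5)]:
    print((a, c, m), has_full_period(a, c, m), observed_period(a, c, m))
```

For (5, 3, 16) the modulus is a power of two, c is odd and a leaves remainder 1 on division by 4, so all 16 residues are visited before the sequence repeats.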

Multiplicative generator

$$X_{i+1} = a X_i \pmod{m}, \qquad i = 1, \ldots, n,$$

(A full period cannot be achieved here, but a maximal period can, provided that $X_0$ is relatively prime to m and a meets certain congruence conditions.)
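A brute-force sketch shows how the period of a multiplicative generator depends on the seed and the multiplier. The parameter values below (m = 16 with a = 5, and the prime modulus m = 31 with a = 3) are small illustrative choices, not values from the notes.

```python
def multiplicative_period(a, m, seed):
    """Cycle length of X_{i+1} = a*X_i mod m started at X_0 = seed."""
    seen = {}
    x, i = seed % m, 0
    while x not in seen:
        seen[x] = i
        x = (a * x) % m
        i += 1
    return i - seen[x]

print(multiplicative_period(5, 16, 1))   # 4: seed relatively prime to m
print(multiplicative_period(5, 16, 2))   # 2: seed shares a factor with m
print(multiplicative_period(3, 31, 1))   # 30 = m - 1 for this prime modulus
```

The value 0 is never useful as a seed here, because it maps to itself; this is why the period stays below m.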

Additive congruential generator

$$X_{i+1} = (X_i + X_{i-k}) \pmod{m}, \qquad k = 1, \ldots, i-1.$$

(When k = 1, it is a Fibonacci sequence.)
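The additive recurrence needs k + 1 starting values rather than a single seed. A minimal sketch follows, assuming the starting values are supplied oldest first; the name `additive_generator` and the example values are illustrative.

```python
from collections import deque
from itertools import islice

def additive_generator(seeds, k, m):
    """Yield X_{i+1} = (X_i + X_{i-k}) mod m.

    `seeds` holds the k + 1 starting values X_{i-k}, ..., X_i, oldest first.
    """
    if len(seeds) != k + 1:
        raise ValueError("need exactly k + 1 starting values")
    window = deque((s % m for s in seeds), maxlen=k + 1)
    while True:
        nxt = (window[-1] + window[0]) % m   # newest value plus the one k steps back
        window.append(nxt)                   # the oldest value drops out automatically
        yield nxt

# k = 1 gives the Fibonacci recurrence, here reduced modulo 10
print(list(islice(additive_generator([0, 1], 1, 10), 8)))   # [1, 2, 3, 5, 8, 3, 1, 4]
```

The bounded `deque` keeps only the values the recurrence still needs, so memory use is fixed at k + 1 integers.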

Inverse Transform Method

Theorem: Let Y have a distribution that is U(0,1). Let F(x) have the properties of a distribution function of the continuous type with F(a) = 0 and F(b) = 1, and suppose that F(x) is strictly increasing on the support a < x < b, where a and b could be $-\infty$ and $+\infty$, respectively. Then the random variable X defined by $X = F^{-1}(Y)$ is a continuous random variable with distribution function F(x).

Proof: The distribution function of X is

$$P(X \le x) = P[F^{-1}(Y) \le x], \qquad a < x < b.$$

Since F(x) is strictly increasing, $\{F^{-1}(Y) \le x\}$ is equivalent to $\{Y \le F(x)\}$, and hence

$$P(X \le x) = P[Y \le F(x)], \qquad a < x < b.$$

But Y is U(0, 1), so $P(Y \le y) = y$ for 0 < y < 1, and accordingly

$$P(X \le x) = P[Y \le F(x)] = F(x), \qquad 0 \le F(x) < 1.$$

That is, the distribution function of X is F(x).

To generate random numbers from an exponential distribution with mean 10:

$$F(x) = 1 - e^{-x/10} \quad \text{and} \quad x = F^{-1}(y) = -10 \ln(1 - y)$$

Use a uniform U(0, 1) random number generator to generate random numbers $y_1, y_2, \ldots, y_n$.

The exponentially distributed random numbers $x_i$ will be

$$x_i = -10 \ln(1 - y_i), \qquad i = 1, \ldots, n.$$

Therefore, if the uniform random number generator generates the number 0.1514, then $1.6417 = -10 \ln(1 - 0.1514)$ would be a random exponential observation.
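The exponential example translates directly into code. The sketch below assumes Python's `random.Random` as the uniform generator; the function names and the seed are illustrative.

```python
import math
import random

def exponential_from_uniform(y, mean=10.0):
    """Inverse transform x = F^{-1}(y) = -mean * ln(1 - y) for 0 <= y < 1."""
    return -mean * math.log(1.0 - y)

def exponential_sample(n, mean=10.0, seed=2024):
    """Draw n exponential variates from n uniform variates."""
    rng = random.Random(seed)
    # rng.random() lies in [0, 1), so 1 - y is never zero
    return [exponential_from_uniform(rng.random(), mean) for _ in range(n)]

print(round(exponential_from_uniform(0.1514), 4))   # 1.6417

xs = exponential_sample(100_000)
print(sum(xs) / len(xs))   # sample mean, expected to be near 10
```

Because 1 - Y is also U(0, 1), the shorter form -mean * ln(y) is often used in practice, provided the generator cannot return exactly 0.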

Statistical Tests of Pseudo-random Numbers

Checking distribution

Chi-square Goodness-of-Fit Test

Kolmogorov-Smirnov Goodness-of-Fit Test

Cramer-von Mises Goodness-of-Fit Test
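As a sketch of the first of these checks, the chi-square statistic for uniformity can be computed by splitting [0, 1) into k equal cells and comparing observed with expected counts. The function name, the default k = 10, and the seed are assumptions for illustration.

```python
import random

def chi_square_uniform(us, k=10):
    """Chi-square goodness-of-fit statistic for U(0, 1) using k equal cells."""
    n = len(us)
    counts = [0] * k
    for u in us:
        counts[min(int(u * k), k - 1)] += 1   # clamp so u = 1.0 falls in the last cell
    expected = n / k
    return sum((o - expected) ** 2 / expected for o in counts)

rng = random.Random(1)
stat = chi_square_uniform([rng.random() for _ in range(10_000)])
print(stat)
```

With k = 10 cells the statistic has 9 degrees of freedom under the uniform hypothesis, so a value above the 5% critical point of about 16.92 would cast doubt on the generator.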

Checking randomness

Serial Test

The Up-and-Down Test

Gap Test
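The up-and-down test can be sketched as follows: count the runs of consecutive increases or decreases and compare the count with its mean (2n - 1)/3 and variance (16n - 29)/90 under randomness. The sketch assumes no two adjacent values are equal; the function name and the seed are illustrative.

```python
import math
import random

def runs_up_down_z(xs):
    """Normal-approximation z statistic for the number of runs up and down."""
    n = len(xs)
    # True for a step up, False for a step down (adjacent ties assumed absent)
    signs = [b > a for a, b in zip(xs, xs[1:])]
    runs = 1 + sum(s != t for s, t in zip(signs, signs[1:]))
    mean = (2 * n - 1) / 3
    var = (16 * n - 29) / 90
    return (runs - mean) / math.sqrt(var)

rng = random.Random(1)
print(runs_up_down_z([rng.random() for _ in range(10_000)]))   # should be moderate in size
print(runs_up_down_z(list(range(20))))                         # strongly negative: one long run
```

A large negative value indicates too few runs (trending data) and a large positive value too many (oscillating data); either is evidence against randomness.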