Use of ID3 Algorithm for Classification


IRNLP Coursework 2

Amrit Gurung 2702186


1. Abstract
1. Machine Learning
2. Information Retrieval
3. Information Organisation
3.1 Semantic Web
3.2 Classification
4. Classification Techniques
4.1.1 Probabilistic Models
4.1.2 Symbolic Learning and Rule Induction
4.1.3 Neural Networks
4.1.4 Analytic Learning and Fuzzy Logic
4.1.5 Evolution Based Models
4.2.1 Supervised Learners
4.2.2 Unsupervised Learners
4.3.3 Reinforcement Learners
5. ID3 Algorithm
5.1 Decision Trees
5.2 Training Data and Set
5.3 Entropy
5.4 Gain
5.5 Weaknesses of ID3 Algorithm
6. WEKA
7. Using ID3 to Classify Academic Documents in the Web
7.1 Attributes of a Web Document
7.2 Training Set
7.3 Training Data
7.4 Weka Output
7.5 Inferring Rules from the Decision Tree
7.6 Positive classifications
7.7 Negative Classifications
7.8 Issues with the training data set
8. Conclusion
9. References
10. Appendix
10.1 “mytest.arff” file
10.2 Screenshot of Weka

1. Abstract

Information systems hold huge amounts of data. Information Retrieval is the act of acquiring the right data, from what we already hold, at the time we need it. “Google” is one of the most successful companies in Information Retrieval, yet it is not perfect. There are millions of documents on the web, and sifting through them manually is impossible. Machine learning tries to organise these documents as they come in and to retrieve them according to the query put in by the user. The ID3 algorithm is one of the machine learning techniques that can help to classify data. This report looks at how ID3 fits into Information Retrieval and Machine Learning and how it works, implements it to classify some data, and finally draws conclusions about its performance.


1. Machine Learning

Machine Learning is a part of Artificial Intelligence. In Machine Learning, computers are instructed using algorithms that help them infer and deduce; they are taught to find useful information in data. Machine Learning allows the automatic generation of patterns and rules from large data sets, helps predict the outcome of queries and also helps classify data. Machine Learning has applications in many fields, such as Information Retrieval, Natural Language Processing, Biomedicine, Genetics and the Stock Market.

2. Information Retrieval

Processed data that is meaningful is known as information. A huge amount of data is available on the internet, and search engines like “Google” and “Yahoo” try to find what we are looking for on the web. The data on the web is not meaningful at all if it is not available to the people who are looking for it. “Information Retrieval” is the process of finding this pre-stored information. We know it is there and we try to access it.

Fig 1 Information Retrieval

Suppose we have a set of documents “N” and a subset “A” of “N” that contains all the documents related to the keyword “A”. When a user inputs “A” as the search keyword, the ideal outcome in Information Retrieval would be to return all the documents in the set “A”. Consider Google, the most popular search engine for Information Retrieval on the web. A query for the phrase “Implementation of ID3 algorithm for classification” in Google returns 40,000 results in English, and the Scholar version of Google returns 8000 results. Not all of the results are exact matches for what we were looking for, but the success ratio is acceptable.


There are two main processes involved in Information Retrieval: understanding what the user is looking for, and organising the data so that all related data are grouped together for correct retrieval. The user in a web search normally enters the search terms as keywords. Web search engines can’t fetch good results when natural language is used in the query, so keywords are preferred. “User Modelling” can be done to record what users input and which results they accepted for their query. This helps us determine what the user was looking for when they entered a particular search phrase. Organising the data that relates to the search term is known as Classification. Manually classifying data is impossible when there are millions of data sets involved. There are numerous algorithms and techniques that instruct computers how to classify data. The ID3 algorithm is one of them, together with C4.5, Neural Networks, Bayesian models and Genetic algorithms.

Information Retrieval differs from Data Mining by the fact that in Information Retrieval we

know exactly what we are looking for. In Data Mining we are looking at data to try and find

patterns which will lead us to information that we previously didn’t know.

3. Information Organisation

One of the main challenges in Information Retrieval is to organise the data so that similar data are grouped together. As shown in Fig 1, we have to group all the data that covers the subject “A” so that when “A” is queried we return all the elements of A. The internet is the biggest source of networked information that we have at the moment. On the internet we have heterogeneous data located on a multitude of servers. There are duplications, unverified documents, inaccurate descriptions and data which disappears. This makes the job of Information Retrieval even more difficult in web search.

3.1 Semantic Web

One of the main ideas for organising the data and documents on the web is to make metadata compulsory. Metadata describes what the data actually holds, which makes it very efficient to collect, organise and retrieve data. But there is no authority on the web that enforces the use of metadata. HyperText Markup Language (HTML) files, text files and multimedia files such as JPEG, Flash and WMV files allow the use of meta tags to describe their contents, size, author and other properties. These meta tags are not actively used, which makes the goal of a semantic web more difficult to reach. One option is to collect all the data and documents on the web that lack meta tags and add the tags automatically by means of machine learning. This is a challenging proposal, but a semantic web would help Information Retrieval tremendously.

3.2 Classification

Classification is the process of grouping together documents or data that have similar properties or are related. The data and documents become easier to understand once they are classified. We can also infer logic based on the classification. Most of all, it makes new data easier to sort and makes retrieval faster, with better results.


Fig 2 Classification of Books according to Subject

Dewey Decimal Classification is the system most used in libraries. It is hierarchical: there are ten parent classes, each divided into ten divisions, which are in turn divided into ten sections. Each book is assigned a number according to its class, division and section alphabetically. Dewey Decimal Classification is very successful in libraries but unfortunately it can’t be implemented in Information Retrieval on the web. Somebody would need to keep a central catalogue of all the documents on the web, and whenever a new document was added a central committee would have to look at it, classify it, assign it a number and publish it on the web. This strongly violates the way the internet works. An authority controlling the contents of the web would restrict the amount of data that can be added to it. We need a web that allows everyone to upload their content, together with a Machine Learning technique that finds these new data and classifies them as they come.

4. Classification Techniques

There are different Machine Learning paradigms for classification.

4.1.1 Probabilistic Models

In Probabilistic Models, the probabilities of each class and of each feature are recorded with the help of the training data set. The classification of new data is based on these probabilities. One example of Probabilistic Modelling is the Bayesian Model.

4.1.2 Symbolic Learning and Rule Induction

In Symbolic Learning and Rule Induction, the algorithms learn by being told and by looking at examples. The ID3 algorithm, developed by Quinlan, is one of them.

4.1.3 Neural Networks

In Neural Networks, the data and its output (nodes) are interconnected in a web-like structure through programming constructs which mimic the function of the neurons in the human brain. Based on these connections, when new data is placed in one of the nodes, its output can be predicted or it can be classified accordingly.


4.1.4 Analytic Learning and Fuzzy Logic

Analytic Learning and Fuzzy Logic normally start from logical rules, which are used to create even more complex rules. Based on these logical rules, they look for truth values ranging between 0 and 1.

4.1.5 Evolution Based Models

Evolution based models are based on Darwin’s theory of Natural Selection and are divided

further into a) Genetic algorithms, b) Evolution strategies and c) Evolutionary programming.

These classification techniques can also be grouped according to how they learn:

4.2.1 Supervised Learners

Algorithms that learn from looking at input/output matches of training data to find results for

new data (like the ID3 algorithm).

4.2.2 Unsupervised Learners

There are no training data sets. The algorithms learn by looking at the input patterns and predict the result.

4.3.3 Reinforcement Learners

These algorithms observe the state and make predictions. Each prediction is rewarded or punished according to its accuracy. The algorithm then learns how to make the right decision.

5. ID3 Algorithm

The ID3 algorithm is an example of Symbolic Learning and Rule Induction. It is also a supervised learner, which means it looks at examples, in the form of a training data set, to make its decisions. It was developed by J. Ross Quinlan back in 1979. It builds a decision tree based on mathematical calculations. The main concepts of the ID3 algorithm are described below in sections 5.1 to 5.4.

5.1 Decision Trees

Fig 3 Decision Tree


A decision tree classifies data using its attributes. It is drawn upside down, with the root at the top. The tree has decision nodes and leaf nodes. In Fig 3, the “linksFromAcademias” attribute is a decision node and the “author” attribute is the leaf node. A leaf node holds homogenous data, which means further classification is not necessary. The ID3 algorithm keeps extending the decision tree until all the leaf nodes are homogenous.

5.2 Training Data and Set

The ID3 algorithm is a supervised learner. It needs training data sets to make decisions. The training set lists the attributes and their possible values. ID3 doesn’t deal with continuous, numeric data, which means we have to discretize them. An attribute such as age, which can take values from 1 to 100, is instead listed with values such as young and old.

| Attributes | Values |
| --- | --- |
| Age | Young, Middle aged, Old |
| Height | Tall, Short, Medium |
| Employed | Yes, No |

Fig 4 Training Set
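The discretization step described above could be sketched as follows. This is only an illustration: the function name `discretize_age` and the cut-off ages of 30 and 60 are assumptions, since the report does not say where the boundaries between the values lie.

```python
def discretize_age(age: int) -> str:
    """Map a numeric age onto the discrete values of the 'Age' attribute.

    The boundaries 30 and 60 are illustrative assumptions, not values
    taken from the report.
    """
    if age < 30:
        return "Young"
    if age < 60:
        return "Middle aged"
    return "Old"


ages = [22, 45, 71]
labels = [discretize_age(a) for a in ages]
print(labels)
```

Once every numeric attribute has been mapped like this, each instance only contains values from the lists in the training set, which is the form ID3 expects.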

The training data is the list of data containing actual values.

| Age | Height | Employed |
| --- | --- | --- |
| Young | Tall | Yes |
| Old | Short | No |
| Old | Medium | No |
| Young | Medium | Yes |

Fig 5 Training Data


5.3 Entropy

Fig 6 Entropy

Entropy refers to the randomness of the data. For two classes it ranges from 0 to 1. A data set with entropy 1 is as random as it can get, with both classes equally represented. A data set with entropy 0 is homogenous. In Fig 6, the root of the tree holds a collection of data with high entropy, which means the data is random. The set of data is eventually divided into subsets 3, 4, 5 and 6, where it is homogenous and the entropy is 0 or close to 0. Entropy is calculated by the formula:

$$E(S) = -p_{+}\log_2 p_{+} - p_{-}\log_2 p_{-}$$

“S” represents the set, “p+” is the proportion of elements in the set “S” with positive values and “p-” is the proportion of elements with negative values. By convention, a term with a proportion of 0 contributes 0 to the sum.

The purpose of ID3 algorithm is to classify data using decision trees, such that the resulting

leaf nodes are all homogenous with zero entropy.
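As a rough illustration of the entropy formula, the sketch below computes it for a list of class labels. The function name `entropy` and the use of `collections.Counter` are choices made for this example. The counts of 17 positive and 11 negative instances are those of the training data used later in section 7.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    # A class with no members never appears in the Counter, so log2(0)
    # is never evaluated.
    return sum(-(n / total) * math.log2(n / total) for n in counts.values())


mixed = ["YES"] * 17 + ["NO"] * 11   # class counts of the section 7.3 data
print(round(entropy(mixed), 4))      # close to 1: the set is quite mixed
print(entropy(["YES"] * 9))          # homogenous subset: entropy 0
print(entropy(["YES", "NO"]))        # evenly split subset: entropy 1
```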

5.4 Gain

In decision trees, nodes are created by singling out an attribute. ID3’s aim is to create leaf nodes with homogenous data, so it has to choose the attribute that fulfils this requirement best. ID3 calculates the “Gain” of each individual attribute. The attribute with the highest gain results in subsets with the smallest entropy. To calculate Gain we use:

$$\mathrm{Gain}(S, A) = \mathrm{Entropy}(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|}\,\mathrm{Entropy}(S_v)$$

In the formula, ‘S’ is the set and ‘A’ is the attribute. The sum runs over every value ‘v’ that the attribute ‘A’ can take. ‘Sv’ is the subset of ‘S’ where attribute ‘A’ has value ‘v’. ‘|S|’ is the number of elements in set ‘S’ and ‘|Sv|’ is the number of elements in subset ‘Sv’.


ID3 chooses the attribute with the highest gain to create a node in the decision tree. If the resulting subsets do not have zero entropy, it chooses from the remaining attributes to create further nodes, until all the subsets are homogenous.
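The selection step can be sketched on the four instances of Fig 5. This is a minimal sketch which assumes that ‘Employed’ is the class to predict; the report lists it simply as one of the attributes. The helper names `entropy` and `gain` are chosen for this example.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    total = len(labels)
    return sum(-(n / total) * math.log2(n / total)
               for n in Counter(labels).values())


def gain(rows, attribute, target):
    """Information gain obtained by splitting `rows` on `attribute`."""
    subsets = defaultdict(list)
    for row in rows:
        subsets[row[attribute]].append(row[target])
    # Weighted entropy of the subsets S_v produced by the split.
    remainder = sum(len(s) / len(rows) * entropy(s) for s in subsets.values())
    return entropy([row[target] for row in rows]) - remainder


# The four training instances of Fig 5, with 'Employed' assumed to be the class.
rows = [
    {"Age": "Young", "Height": "Tall", "Employed": "Yes"},
    {"Age": "Old", "Height": "Short", "Employed": "No"},
    {"Age": "Old", "Height": "Medium", "Employed": "No"},
    {"Age": "Young", "Height": "Medium", "Employed": "Yes"},
]
gains = {a: gain(rows, a, "Employed") for a in ("Age", "Height")}
best = max(gains, key=gains.get)
print(gains, best)
```

On this tiny data set ‘Age’ separates the two classes completely, so it has the higher gain and would be chosen for the root node.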

5.5 Weaknesses of ID3 Algorithm

ID3 uses training data sets to make decisions, which means it relies entirely on the training data. The training data is input by the programmer, and whatever is in the training data is its base knowledge. Any adulteration of the training data will result in wrong classifications. It cannot handle continuous data such as numeric values, so the values of the attributes need to be discrete. At each node it only considers the single attribute with the highest gain and does not consider other attributes with less gain. It also doesn’t backtrack to revise its nodes, which is why it is called a greedy algorithm. Because of this approach it tends to produce shorter trees. Sometimes we might need to consider two attributes at once as a combination, but this is not facilitated in ID3. For example, in a bank loan application we might need to consider attributes such as age and earnings together: young applicants with lower earnings may have a better chance of promotion and higher pay, which would result in a higher credit rating.

6. WEKA

“Weka is a collection of machine learning algorithms for data mining tasks. The algorithms can

either be applied directly to a dataset or called from your own Java code. Weka contains tools for

data pre-processing, classification, regression, clustering, association rules, and visualization. It is

also well-suited for developing new machine learning schemes.”[1]

It was developed by E. Frank, M. Hall, L. Trigg et al. at the University of Waikato. In this report, the Weka software is used to classify scholarly documents on the web using the ID3 algorithm. Weka doesn’t draw visual trees for the ID3 algorithm. It reports the accuracy of the classified instances, and training data sets can be loaded as ‘.arff’ files.

7. Using ID3 to Classify Academic Documents in the Web

Google has a scholar section where academic documents are available for students. It tries to decide whether a document has any academic merit. The use of ID3 for classifying scholarly documents will be tested by identifying the attribute/value pairs of academic documents and creating a training data set.

7.1 Attributes of a Web Document

A web document has many properties, such as author, date created, description and tags. To identify whether a document is academic or not, I have created a test scheme. The author should be an academic, such as a doctor, a professor or a student. In practice it is impossible to check the author’s titles, so the scheme simply assumes that we can. All journal articles are academic articles. Websites of academic institutions have “.ac” or “.edu” in their domain names and governmental organizations have “.gov”, so all documents published on those websites by a doctor, professor or student are considered academic. If a document is published on a “.com” website and written by any of these academics, it can only be considered scholar material if it is referred to or linked to by an academic or governmental website.

Google has more than five hundred million hyperlinks in its database to help it rank pages. The documents that the hyperlinks point to are given ticks and are ranked by the number of ticks they get. So analysing whether the document containing a hyperlink comes from an academic or governmental website shouldn’t be troublesome.

7.2 Training Set

| Attribute | Possible Values |
| --- | --- |
| linksFromAcademias | YES, NO |
| author | doctor, professor, student |
| domain | com, edu, ac, gov |
| linksTo | null, low, high |
| journalArticle | YES, NO |
| scholarMaterial | YES, NO |

Fig 7 Training Set to check Academic documents

The ‘linksFromAcademias’ attribute records whether a document that links to this document is on an academic website. The ‘author’ attribute has the values doctor, professor and student, which means documents authored by anyone else are not considered at all. The ‘linksTo’ attribute shows the number of hyperlinks in the document. ID3 can’t handle continuous numeric data, so the possible values have been discretized as null, low and high. The ‘journalArticle’ attribute records whether the document is from a journal. Finally, the ‘scholarMaterial’ attribute is the decision attribute, which states whether the document is academic or not.


7.3 Training Data

For the training data, twenty-eight instances have been input. The data set is consistent, with no contradictory instances.

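| linksFromAcademias | author | domain | linksTo | journalMaterial | scholarMaterial |
| --- | --- | --- | --- | --- | --- |
| NO | student | ac | low | NO | NO |
| YES | doctor | ac | low | NO | YES |
| NO | doctor | gov | null | NO | YES |
| NO | student | com | null | NO | NO |
| YES | student | com | low | NO | YES |
| YES | professor | com | high | NO | YES |
| NO | student | com | high | NO | NO |
| NO | doctor | com | high | NO | NO |
| YES | professor | edu | low | NO | YES |
| YES | doctor | edu | low | NO | YES |
| NO | professor | edu | null | NO | YES |
| NO | student | com | high | NO | NO |
| YES | professor | ac | null | NO | YES |
| NO | student | gov | null | NO | NO |
| NO | professor | gov | null | NO | YES |
| NO | doctor | com | null | YES | YES |
| NO | professor | com | null | YES | YES |
| YES | professor | ac | high | NO | YES |
| YES | student | com | high | YES | YES |
| NO | professor | com | null | NO | NO |
| NO | doctor | com | null | NO | NO |
| NO | professor | com | null | NO | NO |
| YES | student | gov | high | NO | YES |
| NO | student | edu | null | NO | NO |
| NO | professor | ac | null | NO | YES |
| NO | doctor | com | null | NO | NO |
| NO | doctor | edu | high | NO | YES |
| NO | doctor | ac | low | NO | YES |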


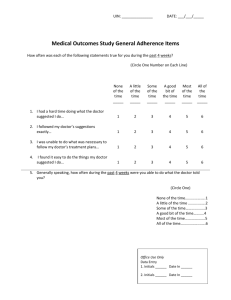

7.4 Weka Output

The above data set was tried in Weka using the ID3 algorithm. The tree generated is shown

below.

linksFromAcademias = YES: YES

linksFromAcademias = NO

| author = doctor

| | domain = com

| | | journalArticle = YES: YES

| | | journalArticle = NO: NO

| | domain = edu: YES

| | domain = ac: YES

| | domain = gov: YES

| author = professor

| | domain = com

| | | journalArticle = YES: YES

| | | journalArticle = NO: NO

| | domain = edu: YES

| | domain = ac: YES

| | domain = gov: YES

| author = student: NO

To visualise the tree, each vertical bar (‘|’) has to be read as one level of depth below the node above it.

Fig 8 Half tree

Fig 8 shows half of the tree generated by the Weka software for the training data set in section 7.3.

The ‘linksFromAcademias’ attribute had the highest gain, so the root node was created on that attribute. The subset with the value ‘YES’ for ‘linksFromAcademias’ was homogenous, so there was no need to classify it further; it held 9 instances. The subset with the value ‘NO’ held nineteen instances and was not homogenous (its entropy was still high). It was therefore divided using the ‘author’ attribute, which had the highest gain within that subset. Of the resulting subsets, the student subset was homogenous but the doctor and professor subsets were not. These two subsets were divided on the ‘domain’ attribute, and the ‘com’ subsets that were still mixed were divided on the ‘journalArticle’ attribute, which left every subset perfectly homogenous. As printed above, the tree has six decision nodes and twelve leaves.
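The construction described above can be reproduced outside Weka with a short script. The following is a minimal sketch of ID3 written for this data set, not Weka's implementation: the names `id3`, `gain`, `split` and `show` are chosen for the example, and the fifth column is called `journalArticle` to match the Weka output.

```python
import math
from collections import Counter

ATTRS = ["linksFromAcademias", "author", "domain", "linksTo", "journalArticle"]
# The 28 instances of section 7.3; the last field is scholarMaterial.
DATA = """\
NO,student,ac,low,NO,NO
YES,doctor,ac,low,NO,YES
NO,doctor,gov,null,NO,YES
NO,student,com,null,NO,NO
YES,student,com,low,NO,YES
YES,professor,com,high,NO,YES
NO,student,com,high,NO,NO
NO,doctor,com,high,NO,NO
YES,professor,edu,low,NO,YES
YES,doctor,edu,low,NO,YES
NO,professor,edu,null,NO,YES
NO,student,com,high,NO,NO
YES,professor,ac,null,NO,YES
NO,student,gov,null,NO,NO
NO,professor,gov,null,NO,YES
NO,doctor,com,null,YES,YES
NO,professor,com,null,YES,YES
YES,professor,ac,high,NO,YES
YES,student,com,high,YES,YES
NO,professor,com,null,NO,NO
NO,doctor,com,null,NO,NO
NO,professor,com,null,NO,NO
YES,student,gov,high,NO,YES
NO,student,edu,null,NO,NO
NO,professor,ac,null,NO,YES
NO,doctor,com,null,NO,NO
NO,doctor,edu,high,NO,YES
NO,doctor,ac,low,NO,YES
""".split()
ROWS = [line.split(",") for line in DATA]


def entropy(rows):
    """Entropy of the class column (last field) of `rows`."""
    total = len(rows)
    counts = Counter(r[-1] for r in rows)
    return sum(-(n / total) * math.log2(n / total) for n in counts.values())


def split(rows, i):
    """Group rows by the value in column i, keeping first-seen order."""
    groups = {}
    for r in rows:
        groups.setdefault(r[i], []).append(r)
    return groups


def gain(rows, i):
    """Information gain of splitting `rows` on column i."""
    parts = split(rows, i).values()
    return entropy(rows) - sum(len(p) / len(rows) * entropy(p) for p in parts)


def id3(rows, attrs):
    """Return a class label (leaf) or (attribute name, {value: subtree})."""
    classes = Counter(r[-1] for r in rows)
    if len(classes) == 1 or not attrs:
        return classes.most_common(1)[0][0]
    best = max(attrs, key=lambda i: gain(rows, i))
    rest = [i for i in attrs if i != best]
    return (ATTRS[best],
            {v: id3(sub, rest) for v, sub in split(rows, best).items()})


def show(tree, depth=0):
    """Print the tree in a layout similar to the Weka listing."""
    name, branches = tree
    for value, sub in branches.items():
        if isinstance(sub, str):
            print("|  " * depth + f"{name} = {value}: {sub}")
        else:
            print("|  " * depth + f"{name} = {value}")
            show(sub, depth + 1)


tree = id3(ROWS, list(range(len(ATTRS))))
show(tree)
```

The sketch only creates branches for values that actually occur in a subset and lists them in the order they are first seen, so the order of the branches may differ from the Weka listing even where the splits are the same.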


Weka produced the following evaluation report:

=== Evaluation on training set ===
=== Summary ===

Correctly Classified Instances        28     100%
Incorrectly Classified Instances       0       0%
Kappa statistic                        1
Mean absolute error                    0
Root mean squared error                0
Relative absolute error                0%
Root relative squared error            0%
Total Number of Instances             28

=== Confusion Matrix ===

a b <-- classified as

17 0 | a = YES

0 11 | b = NO

All twenty-eight instances were correctly classified: seventeen as scholar documents and eleven as non-scholar documents. Because every training instance was classified correctly, we can conclude that the training data set was consistent and not ambiguous.


7.5 Inferring Rules from the Decision Tree

By observing the decision tree we can write a series of if and else statements that act as rules for classifying academic documents. Weka doesn’t have this ability, so it has to be done manually for now. In our case the inferred rules can be described by the following if else statements:

If linksFromAcademias = ‘YES’ then {
    Document = academic }
Elseif author = ‘student’ then {
    Document != academic (not equal to) }
Elseif author = ‘doctor’ then {
    If domain = ‘edu’ or ‘gov’ or ‘ac’ then {
        Document = academic }
    Elseif domain = ‘com’ then {
        If journalArticle = ‘YES’ then {
            Document = academic }
        Else {
            Document != academic (not equal to) }
    }
}
Elseif author = ‘professor’ then {
    If domain = ‘edu’ or ‘gov’ or ‘ac’ then {
        Document = academic }
    Elseif domain = ‘com’ then {
        If journalArticle = ‘YES’ then {
            Document = academic }
        Else {
            Document != academic (not equal to) }
    }
}

The above nested if else statements state the rules for classifying academic documents based on the training data set.
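The same rules could be written as a small function. This is a sketch under stated assumptions: the function name `is_scholar_material` and its parameter names are hypothetical, and because doctors and professors share the same sub-tree they are handled by one branch here.

```python
def is_scholar_material(links_from_academias: str, author: str,
                        domain: str, journal_article: str) -> bool:
    """Rules read off the decision tree of section 7.4."""
    if links_from_academias == "YES":
        return True
    if author == "student":
        return False
    # The remaining authors in the training set are doctors and professors,
    # who share the same sub-tree. Any other author value would also fall
    # through to this branch, which the tree itself does not cover.
    if domain in ("edu", "ac", "gov"):
        return True
    # domain == "com": only journal articles count as academic.
    return journal_article == "YES"


print(is_scholar_material("NO", "doctor", "com", "YES"))
print(is_scholar_material("NO", "student", "ac", "NO"))
```

The ‘linksTo’ attribute does not appear as a parameter because the tree never tests it.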

7.6 Positive Classifications

Whenever new data comes in, we can classify it instantly by following these nested if else statements. A document with links from other academia is instantly classified as a scholar document. Documents without links from other academia that were written by doctors or professors are all classified as academic material, unless they were published on a ‘.com’ website and are not journal articles.

7.7 Negative Classifications

Non-journal documents written by doctors and professors that were published on ‘.com’ sites were all correctly classified as non-academic. All documents authored by students without any links from academia were classified as non-academic.


7.8 Issues with the training data set

If a document didn’t have any links from academia and was written by a student, it was instantly classified as a non-academic document. This is not true in real life: we would like documents written by students in journals to be classified as academic documents. The training data set didn’t contain such an instance, which led the ID3 algorithm to this decision. To correct this, we have to add the following instance to the data set, in which a student’s document published in a journal without any link from academia is marked as an academic document.

| linksFromAcademias | author | domain | linksTo | journalMaterial | scholarMaterial |
| --- | --- | --- | --- | --- | --- |
| NO | student | com | low | YES | YES |

When the above instance was added to the training set, the tree generated by the ID3 algorithm was different from the previous one in section 7.4.

linksFromAcademias = YES: YES

linksFromAcademias = NO

| journalArticle = YES: YES

| journalArticle = NO

| | domain = com: NO

| | domain = edu

| | | author = doctor: YES

| | | author = professor: YES

| | | author = student: NO

| | domain = ac

| | | author = doctor: YES

| | | author = professor: YES

| | | author = student: NO

| | domain = gov

| | | author = doctor: YES

| | | author = professor: YES

| | | author = student: NO

The second node in the new decision tree takes the ‘journalArticle’ attribute to create new

leaves. In the previous decision tree it was the ‘author’ attribute. This new decision tree can

correctly classify journal articles without academic links, published in ‘.com’ sites and

written by students as being academic.

ID3 algorithm cannot handle missing attribute values. If we try to enter a training data set

with missing values in Weka then it gives an error.

An inconsistent training data set creates errors in the decision tree. For example, consider two instances that are identical except for their outcomes:

| linksFromAcademias | author | domain | linksTo | journalMaterial | scholarMaterial |
| --- | --- | --- | --- | --- | --- |
| NO | doctor | ac | low | NO | YES |
| NO | doctor | ac | low | NO | NO |

The above instances have the same values for every attribute except the last one, the decision attribute, where they are opposite. When the training data set containing them was tried out using the ID3 algorithm, the accuracy was only 96%.
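Contradictory instances like these can be detected before the tree is built. The sketch below is one possible check, with the hypothetical helper name `find_conflicts`; it assumes each instance is a tuple whose last element is the class.

```python
from collections import defaultdict


def find_conflicts(rows):
    """Return attribute tuples that occur with more than one class label.

    Each row is a tuple whose last element is the class.
    """
    seen = defaultdict(set)
    for *attributes, label in rows:
        seen[tuple(attributes)].add(label)
    return {attrs: labels for attrs, labels in seen.items() if len(labels) > 1}


rows = [
    ("NO", "doctor", "ac", "low", "NO", "YES"),
    ("NO", "doctor", "ac", "low", "NO", "NO"),
    ("YES", "doctor", "ac", "low", "NO", "YES"),
]
conflicts = find_conflicts(rows)
print(conflicts)
```

No decision tree can classify both members of such a pair correctly, because the two instances follow the same path to the same leaf.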


The ‘linksTo’ attribute was never used while building the decision tree in any of the cases above. At each node the ID3 algorithm only considers the attribute with the highest gain. Due to this, it tends to build a short tree quickly. In our case the ‘linksTo’ attribute was correctly left out, but in other scenarios all the attributes might have to be considered equally.

8. Conclusion

Classification is essential for organising data and for retrieving information correctly and swiftly. Implementing Machine Learning to classify data is not easy, given the huge amount of heterogeneous data present on the web. The ID3 algorithm depends entirely on the accuracy of the training data set for building its decision trees. It learns by supervision: it has to be shown which instances have which results. Because of this, I think the ID3 algorithm cannot successfully classify documents on the web. The data on the web is unpredictable and volatile, and most of it lacks metadata. The way forward for Information Retrieval on the web, in my opinion, would be to advocate the creation of a semantic web in which unsupervised and reinforcement learning algorithms are used to classify and retrieve data.

9. References

1. http://www.cs.waikato.ac.nz/ml/weka/ [1]

2. “Building Classification Models: ID3 and C4.5” [Online] Available from: http://www.cis.temple.edu/~ingargio/cis587/readings/id3-c45.html [Accessed 7th Dec 08]

3. H. Hamilton, E. Gurak, F. Leah, W. Olive, (2000-2) “Computer Science 831: Knowledge Discovery in Databases” [Online] Available from: http://www2.cs.uregina.ca/~dbd/cs831/index.html [Accessed 7th Dec 08]

4. Bhatia, MPS and Khalid, Akshi Kumar (2008). "Information retrieval and machine learning: Supporting technologies for web mining research and practice." Webology, 5(2), Article 55. [Online] Available from: http://www.webology.ir/2008/v5n2/a55.html [Accessed 7th Dec 08]


10. Appendix

10.1 “mytest.arff” file

@relation mytest

@attribute linksFromAcademias {YES,NO}
@attribute author {doctor,professor,student}
@attribute domain {com,edu,ac,gov}
@attribute linksTo {null,low,high}
@attribute journalArticle {YES,NO}
@attribute scholarMaterial {YES,NO}

@data

NO, student, ac, low, NO, NO

YES, doctor, ac, low, NO, YES

NO, doctor, gov, null, NO, YES

NO, student, com, null, NO, NO

YES, student, com, low, NO, YES

YES, professor, com, high, NO, YES

NO, student, com, high, NO, NO

NO, doctor, com, high, NO, NO

YES, professor, edu, low, NO, YES

YES, doctor, edu, low, NO, YES

NO, professor, edu, null, NO, YES

NO, student, com, high, NO, NO

YES, professor, ac, null, NO, YES

NO, student, gov, null, NO, NO

NO, professor, gov, null, NO, YES

NO, doctor, com, null, YES, YES

NO, professor, com, null, YES, YES

YES, professor, ac, high, NO, YES

YES, student, com, high, YES, YES

NO, professor, com, null, NO, NO

NO, doctor, com, null, NO, NO

NO, professor, com, null, NO, NO

YES, student, gov, high, NO, YES

NO, student, edu, null, NO, NO

NO, professor, ac, null, NO, YES

NO, doctor, com, null, NO, NO

NO, doctor, edu, high, NO, YES

NO, doctor, ac, low, NO, YES


10.2 Screenshot of Weka
