

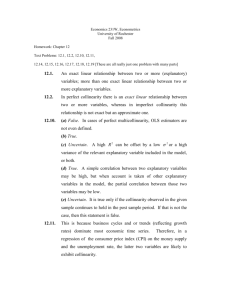

Econ107 Applied Econometrics

Topic 7: Multicollinearity (Studenmund, Chapter 8)

I. Definitions and Problems

A. Perfect Multicollinearity

Definition: Perfect multicollinearity exists in the following K+1-variable regression

$$Y_i = \beta_0 + \beta_1 X_{2i} + \dots + \beta_K X_{K+1,i} + \varepsilon_i = \beta_0 X_{1i} + \beta_1 X_{2i} + \dots + \beta_K X_{K+1,i} + \varepsilon_i$$

if

$$\lambda_1 X_{1i} + \lambda_2 X_{2i} + \lambda_3 X_{3i} + \dots + \lambda_{K+1} X_{K+1,i} = 0$$

where the 'lambdas' are a set of parameters (not all equal to zero) and $X_{1i} = 1$. This must be true for all observations.

Alternatively, we could write any independent variable as an exact linear function of the others (here assuming $\lambda_2 \neq 0$):

$$X_{2i} = -\frac{\lambda_1}{\lambda_2} X_{1i} - \frac{\lambda_3}{\lambda_2} X_{3i} - \dots - \frac{\lambda_{K+1}}{\lambda_2} X_{K+1,i}$$

This says essentially that $X_2$ is redundant: it is just a linear combination of the other regressors.

Problems with perfect multicollinearity:

(1) Coefficients can't be estimated. For example, in the 2-variable regression (lowercase letters denote deviations from sample means):

$$\hat\beta_1 = \frac{\sum x_{2i} y_i}{\sum x_{2i}^2}$$

Suppose $X_{2i} = \lambda X_{1i} = \lambda$. As a result $x_{2i} = 0$ for all i, and hence the denominator is 0. Thus, the estimated slope coefficient is undefined. The same result applies to multiple regression (MLR).

Intuition: Again, we want to estimate the partial coefficients. Each one depends on the variation in one variable and its ability to explain the variation in the dependent variable that can't be explained by the other regressor. But, by definition, we can't get variation in one without getting variation in the other.

(2) Standard errors can't be estimated. In the 3-variable regression model, the standard error of $\hat\beta_1$ can be written:

$$\operatorname{se}(\hat\beta_1) = \sqrt{\frac{\hat\sigma^2}{\sum x_{2i}^2 \, (1 - r_{23}^2)}}$$

where $r_{23}$ is the sample correlation between $X_2$ and $X_3$. But perfect multicollinearity implies $r_{23} = 1$ or $r_{23} = -1$ ($r_{23}^2 = 1$ in either case), and the denominator is zero. Thus, standard errors are undefined (infinite).

The solution to perfect multicollinearity is trivial: drop one or more of the redundant regressors.

B. Imperfect Multicollinearity

Definition: Imperfect multicollinearity exists in a K+1-variable regression if

$$\lambda_1 X_{1i} + \lambda_2 X_{2i} + \lambda_3 X_{3i} + \dots + \lambda_{K+1} X_{K+1,i} + v_i = 0$$

where $v_i$ is a stochastic variable. As $\operatorname{Var}(v_i) \to 0$, imperfect multicollinearity becomes perfect multicollinearity.
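The limit $\operatorname{Var}(v_i) \to 0$ can be seen numerically. The sketch below is an illustration under assumed settings, not data from the notes: a hypothetical relation $X_{3i} = 2 + 0.5 X_{2i} + v_i$ (i.e., $\lambda_1 = 2$, $\lambda_2 = 0.5$, $\lambda_3 = -1$), 200 observations and an arbitrary random seed. As the standard deviation of $v_i$ shrinks, $r_{23}$ approaches 1; at exactly zero the regressor matrix $[X_1, X_2, X_3]$ loses full column rank, which is the perfect case where OLS breaks down.

```python
import numpy as np

rng = np.random.default_rng(0)   # fixed seed so the run is reproducible
n = 200
x2 = rng.normal(size=n)

def design_matrix(sd_v):
    """Hypothetical setup: X3 = 2 + 0.5*X2 + v with v ~ N(0, sd_v^2); returns [X1, X2, X3]."""
    v = rng.normal(scale=sd_v, size=n) if sd_v > 0 else np.zeros(n)
    x3 = 2.0 + 0.5 * x2 + v
    return np.column_stack([np.ones(n), x2, x3])   # X1 = 1 is the intercept column

results = {}
for sd_v in [1.0, 0.1, 0.01, 0.0]:
    X = design_matrix(sd_v)
    r23 = np.corrcoef(X[:, 1], X[:, 2])[0, 1]      # correlation between X2 and X3
    rank = np.linalg.matrix_rank(X)                # 3 = full column rank, 2 = exact dependence
    results[sd_v] = (r23, rank)
    print(f"sd(v) = {sd_v:<5} r23 = {r23:.4f}  rank of X = {rank}")
```

Using the rank of X itself (rather than of X'X) keeps the numerical rank decision less sensitive to rounding error.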
Alternatively, we could write any particular independent variable as an 'almost' exact linear function of the others:

$$X_{2i} = -\frac{\lambda_1}{\lambda_2} X_{1i} - \frac{\lambda_3}{\lambda_2} X_{3i} - \dots - \frac{\lambda_{K+1}}{\lambda_2} X_{K+1,i} - \frac{1}{\lambda_2} v_i$$

Even if you know the other K variables, you don't know the (K+1)th variable precisely.

What are the problems with imperfect multicollinearity?

Coefficients can be estimated. OLS estimators are still unbiased and minimum variance (i.e., BLUE). Imperfect multicollinearity does not violate the classical assumptions.

But standard errors 'blow up'. They increase with the degree of multicollinearity, which reduces the 'precision' of our coefficient estimates. For example, recall:

$$\operatorname{se}(\hat\beta_1) = \sqrt{\frac{\sigma^2}{\sum x_{2i}^2 \, (1 - r_{23}^2)}}$$

As $|r_{23}| \to 1$, the standard error $\to \infty$.

Numerical example: suppose the standard error is 1 when $r_{23} = 0$. Then (each entry equals $1/\sqrt{1 - r_{23}^2}$):

| $r_{23}$ | Standard error |
|---|---|
| 0.10 | 1.01 |
| 0.25 | 1.03 |
| 0.50 | 1.15 |
| 0.75 | 1.51 |
| 0.90 | 2.29 |
| 0.99 | 7.09 |

[Figure: se(beta2) plotted against |r23|, for |r23| from 0.0 to 1.0]

The standard error increases at an increasing rate with the multicollinearity between the explanatory variables. This results in wider confidence intervals and insignificant t ratios on our coefficient estimates (e.g., you'll have more difficulty rejecting the null hypothesis that a slope coefficient is equal to zero).

This problem is closely related to the problem of a 'small sample size'. In both cases, standard errors 'blow up'. With a small sample size, the denominator ($\sum x_{2i}^2$) is reduced by the lack of variation in the explanatory variable.

II. Methods of Detection

Three general indicators or diagnostic tests:

1. T Ratios vs. R². Look for a 'high' R², but 'few' significant t ratios. This is a common 'rule of thumb': we can't reject the null hypotheses that the coefficients are individually equal to zero (t tests), but we can reject the null hypothesis that they are simultaneously equal to zero (F test). It is not an 'exact test'.
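A small simulation shows how the standard-error inflation above produces the 'high R², few significant t ratios' symptom. This is a sketch under assumed settings, not data from the notes: n = 30, $X_3 = X_2$ plus noise with standard deviation 0.02 (so $r_{23}$ is very close to 1), all true slopes equal to 1, and an arbitrary seed. Over 500 simulated samples it counts how often the overall F test rejects and how often neither slope's |t| exceeds 2.05. The cutoffs 2.05 and 3.35 are approximate 5% critical values for t(27) and F(2, 27); they are hard-coded to avoid a SciPy dependency.

```python
import numpy as np

def ols(y, X):
    """Plain OLS: coefficients, standard errors, R^2 and the overall F statistic."""
    n, k = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - k)                      # unbiased error variance
    se = np.sqrt(sigma2 * np.diag(np.linalg.inv(X.T @ X)))
    r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    f = (r2 / (k - 1)) / ((1.0 - r2) / (n - k))           # H0: all slopes equal zero
    return beta, se, r2, f

rng = np.random.default_rng(1)
n, reps = 30, 500
T_CRIT, F_CRIT = 2.05, 3.35   # approx. 5% critical values for t(27) and F(2, 27)

f_sig = both_t_insig = 0
for _ in range(reps):
    x2 = rng.normal(size=n)
    x3 = x2 + rng.normal(scale=0.02, size=n)             # r23 close to 1
    y = 1.0 + 1.0 * x2 + 1.0 * x3 + rng.normal(size=n)
    X = np.column_stack([np.ones(n), x2, x3])
    beta, se, r2, f = ols(y, X)
    t = beta[1:] / se[1:]                                 # t ratios on the two slopes
    f_sig += int(f > F_CRIT)
    both_t_insig += int(np.all(np.abs(t) < T_CRIT))

share_f_sig = f_sig / reps
share_t_insig = both_t_insig / reps
print(f"F test rejects H0 in {share_f_sig:.0%} of samples")
print(f"neither t ratio is significant in {share_t_insig:.0%} of samples")
```

The joint F test still rejects because the sum of the two slopes is estimated precisely. Only their separate values are swamped by the $1/\sqrt{1 - r_{23}^2}$ inflation.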
What do we mean by 'few' significant t tests, and a 'high' R²? The rule is too imprecise, and it also depends on other factors such as sample size.

2. Correlation Matrix of Regressors. Look for high pairwise correlation coefficients in the correlation matrix of the regressors. But multicollinearity refers to a linear relationship among all or some of the regressors. It is possible that no pair of independent variables is highly correlated, yet one variable is a linear function of a number of others. In a 3-variable regression, multicollinearity is the correlation between the 2 explanatory variables. A high pairwise correlation is often said to be a "... sufficient, but not a necessary condition for multicollinearity." In other words, if you've got a high pairwise correlation, you've got problems. However, the absence of high pairwise correlations isn't conclusive evidence of an absence of multicollinearity.

3. Auxiliary Regressions. Run a series of regressions to look for these linear relationships among the explanatory variables. Given the definition of multicollinearity above, regress one independent variable on the others and 'test' for this linear relationship. For example, estimate the following:

$$X_{2i} = \alpha_1 + \alpha_3 X_{3i} + \dots + \alpha_{K+1} X_{K+1,i} + e_i$$

where our hypothesis is that $X_{2i}$ is a linear function of the other regressors. We test the null hypothesis that the slope coefficients in this auxiliary regression are simultaneously equal to zero,

$$H_0: \alpha_3 = \alpha_4 = \dots = \alpha_{K+1} = 0,$$

with the following F test:

$$F = \frac{R_2^2 / (K - 1)}{(1 - R_2^2) / (n - K)}$$

where $R_2^2$ is the coefficient of determination with $X_{2i}$ as the dependent variable, and K is the number of slope coefficients in the original regression (equivalently, the number of coefficients, including the intercept, in the auxiliary regression).

This is related to the high Variance Inflation Factors (VIFs) discussed in the textbook, where

$$\text{VIF}(\hat\beta_1) = \frac{1}{1 - R_2^2}$$

If VIF > 5, the multicollinearity is severe. But ours is a formal test.

Summary: There is no single test for multicollinearity.

III. Remedial Measures

Once we're convinced that multicollinearity is present, what can we do about it? Diagnosis of the ailment isn't clear cut, and neither is the treatment.
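Why diagnosis is not clear cut can be sketched with simulated data. This is a hypothetical example, not from the notes: $X_2$ and $X_3$ are independent, and $X_4 = X_2 + X_3$ plus small noise (standard deviation 0.1), with n = 100 and an arbitrary seed. In this setup the pairwise correlations should come out only moderate (roughly 0.7 for $X_4$ with each of the others, near zero for $X_2$ with $X_3$), yet every auxiliary regression has $R^2$ near 0.99, so the F statistics and VIFs are very large. The F formula and $\text{VIF} = 1/(1 - R^2)$ are the ones given in Section II.

```python
import numpy as np

def aux_r2(target, others):
    """R^2 from the auxiliary regression of one regressor on a constant and the others."""
    X = np.column_stack([np.ones(len(target)), *others])
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ coef
    return 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)

rng = np.random.default_rng(2)
n = 100
x2 = rng.normal(size=n)
x3 = rng.normal(size=n)
x4 = x2 + x3 + rng.normal(scale=0.1, size=n)   # hypothetical near-exact linear relation
regressors = {"X2": x2, "X3": x3, "X4": x4}

corr = np.corrcoef(np.vstack(list(regressors.values())))   # rows are variables
max_pairwise = np.max(np.abs(corr[np.triu_indices(3, k=1)]))
print(np.round(corr, 2))

K = len(regressors)   # slope coefficients in the original regression of Y on X2, X3, X4
vif, aux_f = {}, {}
for name, x in regressors.items():
    others = [v for key, v in regressors.items() if key != name]
    r2 = aux_r2(x, others)
    vif[name] = 1.0 / (1.0 - r2)
    aux_f[name] = (r2 / (K - 1)) / ((1.0 - r2) / (n - K))   # F(K-1, n-K)
    print(f"{name}: auxiliary R^2 = {r2:.3f}, F = {aux_f[name]:.1f}, VIF = {vif[name]:.1f}")
```

Looking only at the correlation matrix here would understate the problem. The auxiliary regressions reveal it.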
The appropriateness of the following remedial measures varies from one situation to another.

EXAMPLE: Estimating the labour supply of married women from 1950-1999:

$$HRS_t = \beta_0 + \beta_1 W_{Wt} + \beta_2 W_{Mt} + \varepsilon_t$$

where $HRS_t$ = average annual hours of work of married women, $W_{Wt}$ = average wage rate for married women, and $W_{Mt}$ = average wage rate for married men.

Suppose we estimate the following (standard errors in parentheses):

$$\widehat{HRS}_t = 733.71 + \underset{(34.97)}{48.37}\, W_{Wt} - \underset{(29.01)}{22.91}\, W_{Mt}, \qquad R^2 = 0.847$$

Multicollinearity is a problem here. The first tip-off is that the t ratios are less than 1.5 and 1, respectively (insignificant at the 10% level). Yet R² is 0.847. It is also easy to confirm multicollinearity in this case: the correlation between the mean wage rates is 0.99 over our sample period! Standard errors blow up, and we can't separate the effects of the two wage rates on the labour supply of married women.

Possible Solutions?

1. A Priori Information. If we know the relationship between the slope coefficients, we can substitute this restriction into the regression and eliminate the multicollinearity. This relies heavily on economic theory. For example, suppose that $\beta_2 = -0.5\beta_1$. We expect that $\beta_1 > 0$ and $\beta_2 < 0$.

$$HRS_t = \beta_0 + \beta_1 W_{Wt} - 0.5\beta_1 W_{Mt} + \varepsilon_t = \beta_0 + \beta_1 (W_{Wt} - 0.5 W_{Mt}) + \varepsilon_t = \beta_0 + \beta_1 W^*_t + \varepsilon_t$$

where we compute $W^*_t = W_{Wt} - 0.5 W_{Mt}$. Suppose we re-estimate and find:

$$\widehat{HRS}_t = 781.10 + \underset{(6.07)}{46.82}\, W^*_t$$

Clearly, this has eliminated multicollinearity by reducing the model from a 3- to a 2-variable regression. Using the earlier assumption that $\beta_2 = -0.5\beta_1$, we get the individual coefficient estimates:

$$\hat\beta_1 = 46.82, \qquad \hat\beta_2 = -0.5 \times 46.82 = -23.41$$

Unfortunately, such a priori information is extremely rare.

2. Dropping a Variable. Suppose we omit the wage of married men. We estimate:

$$HRS_t = \alpha_0 + \alpha_1 W_{Wt} + v_t$$

The problem is that we're introducing 'specification bias'. We're substituting one problem for another: the remedy may be worse than the disease. Recall that the estimate of $\alpha_1$ is likely to be a biased estimate of $\beta_1$:
$$E(\hat\alpha_1) = \beta_1 + \beta_2 b_{12}$$

where $b_{12}$, the latter term, is the slope coefficient from the regression of the omitted variable ($W_{Mt}$) on the included regressor ($W_{Wt}$). In fact, the bias is increased by the multicollinearity.

3. Transformation of the Variables. One of the simplest things to do with time-series regressions is to run 'first differences'. Start with the original specification at time t:

$$HRS_t = \beta_0 + \beta_1 W_{Wt} + \beta_2 W_{Mt} + \varepsilon_t$$

The same linear relationship holds for the previous period as well:

$$HRS_{t-1} = \beta_0 + \beta_1 W_{W,t-1} + \beta_2 W_{M,t-1} + \varepsilon_{t-1}$$

Subtract the second equation from the first:

$$(HRS_t - HRS_{t-1}) = \beta_1 (W_{Wt} - W_{W,t-1}) + \beta_2 (W_{Mt} - W_{M,t-1}) + (\varepsilon_t - \varepsilon_{t-1})$$

or

$$\Delta HRS_t = \beta_1 \Delta W_{Wt} + \beta_2 \Delta W_{Mt} + \Delta\varepsilon_t$$

The advantage is that 'changes' in wage rates may not be as highly correlated as their 'levels'.

The disadvantages are:

(i) The number of observations is reduced by one (i.e., we lose a degree of freedom). The sample period is now 1951-1999.

(ii) It may lead to serial correlation:

$$\operatorname{Cov}(\Delta\varepsilon_t, \Delta\varepsilon_{t-1}) \neq 0, \qquad \Delta\varepsilon_t = \varepsilon_t - \varepsilon_{t-1}, \qquad \Delta\varepsilon_{t-1} = \varepsilon_{t-1} - \varepsilon_{t-2}$$

Both differenced errors contain $\varepsilon_{t-1}$; if the $\varepsilon_t$ are independent with variance $\sigma^2$, this covariance equals $-\sigma^2$. Again, the cure may be worse than the disease, since this violates one of the classical assumptions. More on serial correlation later.

4. New Data. Two possibilities here:

(i) Extend the time series. Multicollinearity is a 'sample phenomenon'. Wage rates may be correlated over the period 1950-1999. Add more years; for example, go back to 1940. The correlation may be reduced. The problem is that the data may not be available, or the relationship among the variables may have changed (i.e., the regression function isn't 'stable'). More likely, the data aren't there: if they were, why weren't they included initially?

(ii) Change the Nature or Source of Data. Switch from time-series to cross-sectional analysis, i.e., change the 'unit of observation'. Use a random sample of households at a point in time. The degree of multicollinearity in wages may be relatively lower 'between' spouses. Or combine data sources: use 'panel data', following a random sample of households over a number of years.

5. 'Do Nothing' (A Remedy!).
Multicollinearity is not a problem if the objective of the analysis is forecasting. It doesn't affect the overall 'explanatory power' of the regression (i.e., R²). It is more of a problem if the objective is to test the significance of individual partial coefficients. However, the estimated coefficients are unbiased; multicollinearity simply reduces the 'precision' of the estimates.

Multicollinearity is often given too much emphasis in the list of common problems with regression analysis. If it's imperfect multicollinearity, which is almost always going to be the case, then it doesn't violate the classical assumptions. It is much more of a problem if the goal is to test the significance of individual coefficients, and less of a problem for forecasting and prediction.

IV. Questions for Discussion: Q8.11

V. Computing Exercise: Example 8.5.2 (Johnson, Ch 8)
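Separately from Example 8.5.2 (whose data are not reproduced here), remedies 1 to 3 of Section III can be tried on simulated data. This is a sketch under stated assumptions, not the labour-supply study: 50 hypothetical 'years', two wage series sharing a linear time trend plus independent noise, and hours generated with $\beta_1 = 50$ and $\beta_2 = -25$, so the a priori restriction $\beta_2 = -0.5\beta_1$ holds by construction. All numbers and variable names are illustrative.

```python
import numpy as np

def ols(y, *regressors):
    """OLS of y on a constant plus the given regressors; returns the coefficient vector."""
    X = np.column_stack([np.ones(len(y)), *regressors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

rng = np.random.default_rng(3)
t = np.arange(50)                                        # 50 hypothetical "years"
ww = 2.0 + 0.10 * t + rng.normal(scale=0.3, size=50)     # wives' wage: trend + noise
wm = 4.0 + 0.12 * t + rng.normal(scale=0.3, size=50)     # husbands' wage: similar trend
hrs = 700 + 50 * ww - 25 * wm + rng.normal(scale=20, size=50)   # beta2 = -0.5 * beta1

# Remedy 1: impose beta2 = -0.5 * beta1 and regress on W* = Ww - 0.5 * Wm.
w_star = ww - 0.5 * wm
b0_r, b1_r = ols(hrs, w_star)
b2_r = -0.5 * b1_r
print(f"restricted: beta1 = {b1_r:.2f}, implied beta2 = {b2_r:.2f}")

# Remedy 2: drop Wm. In-sample, alpha1 = beta1_hat + beta2_hat * b12 holds exactly,
# where b12 is the slope from regressing the omitted Wm on the included Ww.
_, b1_full, b2_full = ols(hrs, ww, wm)
_, alpha1 = ols(hrs, ww)
_, b12 = ols(wm, ww)
print(f"dropped Wm: alpha1 = {alpha1:.2f}, beta1_hat + beta2_hat*b12 = {b1_full + b2_full * b12:.2f}")

# Remedy 3: first differences remove the shared trend, so changes are far less correlated.
r_levels = np.corrcoef(ww, wm)[0, 1]
r_diffs = np.corrcoef(np.diff(ww), np.diff(wm))[0, 1]
print(f"corr(levels) = {r_levels:.3f}, corr(first differences) = {r_diffs:.3f}")
```

The restricted regression gives sensible estimates only because the simulated data obey the restriction; with real data, a wrong restriction would bias both coefficients. The dropped-variable line uses the in-sample version of the bias formula, which is an exact identity for OLS.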