
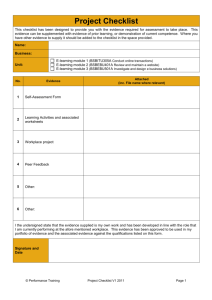

Perceived Suitability of the Structured e-Learning Content Based on the Classification of Tacit Knowledge

Abstract

This paper describes the design and evaluation of the Structured e-Learning Content, which is based on the classification of tacit knowledge. The paper first reviews the current state of research on e-learning software and addresses current issues in processing explicit and tacit knowledge by taking into account elements of pedagogical design such as conveying precise search results, offering key points concisely, and chunking the content into digestible segments for easy onscreen reading. An e-learning system for structuring content was then designed based on a new pedagogical model that separates the learning content into segments anchored by super-ordinate concepts already present in the student's cognitive structure, such as title, introduction, problem, objective, method, and result. A questionnaire was constructed by adapting the perceived Ease of Use (EOU) and perceived Usefulness factors of the Technology Acceptance Model (TAM). The sample comprised 102 postgraduate students from two schools at a renowned public university in Penang. The findings showed moderate agreement, with mean scores of 3.48 for Usefulness and 3.87 for EOU on a five-point scale. The findings indicated that the Structured e-Learning Content was perceived as suitable across programs and schools and triggered satisfactory levels of processing for tacit knowledge.

Keywords: Tacit Knowledge, e-learning, TAM, Pedagogy

INTRODUCTION

The volume of digital content for e-learning is huge, and retrieving this content normally produces a loosely ordered list of results that can be daunting, meaningless, and inefficient for learning purposes (Roca, Chiu, & Martínez, 2006). Each item on the list consists of a vague description of the document, with the search keywords matched in various combinations. Clicking on an item invokes a download or full display of the document, which could be one page or hundreds of pages long.
These facts reflect the current lack of pedagogical preparation for providing an efficient representation that ensures meaningful access to, and use of, the documents in e-learning systems. Thus, technologies are needed for designing more suitable presentations of learning content and documents. Structuring knowledge has been recognized as an important issue, and systems can be personalized for different knowledge displays. Moreover, representing learning contexts in several ways and styles, based on the pedagogical design of the learning environment, can provide quick and accurate additional information for those interested in details (Coffield, Moseley, Hall, & Ecclestone, 2009). Knowledge representation of content in e-learning applications has been categorized into various formats across a number of different educational environments. Structured knowledge takes different forms. One form resides, at a conceptual stage, in the mind of the person using e-learning resources (Wong, Yin, Yang, & Cheng, 2011); this kind of knowledge contains several forms (both tacit and explicit) that most learners understand. Another form presents knowledge as information, expressed in written form as documents (Ferrer & Alonso, 2010). The adoption of structured knowledge in academic fields alters the perceptions of educators and learners and allows them to perform their tasks meaningfully. E-learning systems now offer huge sets of data and information by deploying different learning tools and techniques for teaching, learning, and reference purposes, which could be seen as a blessing, with plenty of information readily available just a click away (Tang & McCalla, 2005). Even so, it could equally be seen as an exponentially growing nightmare in which unstructured information chokes the delivery system without providing any articulate or meaningful knowledge.
The main goal of e-learning is to share knowledge and wisdom, and this is accomplished through the distribution of data and information. The learner has to access the relevant data and information and then analyse, synthesize, and integrate them into meaningful knowledge. E-learning content and databases are growing rapidly, and without proper management of the content and efficient learning strategies, the collection remains mere data. One example of an effort to address this issue for business databases is data mining, a method of extracting meaningful information from mountains of data (Dredze et al., 2007). No comparable provision is available for e-learning. Nonaka and Takeuchi (1995) categorized knowledge into explicit and tacit knowledge. Explicit knowledge can be easily or directly expressed, documented, and shared through hard and soft copies of documents, such as those uploaded to e-learning databases. Tacit knowledge, on the other hand, is the expertise, high-level mastery, and wisdom developed through years of practice and reflection; it cannot be directly shared or transferred to another person or captured in a document. Nonaka and Takeuchi suggest that tacit knowledge can be developed through numerous iterations of externalization of ideas by experts and of combination and internalization by novices or learners. Externalization involves giving the content or skill a meaningful structure that learners can efficiently reconstruct. For e-learning, structuring digital content in a meaningful or holistic way that supports the development of tacit knowledge can be accomplished through the principles of pedagogical design. Pedagogical design uses higher-order patterns to present knowledge content, employing strategies such as structuring, ordering, chunking, and customizing.
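As a rough illustration of the chunking strategy named above, the sketch below groups a passage into screen-sized segments at sentence boundaries. It is not part of the system described in this paper: the function name `chunk_text`, the word limit `max_words`, and the naive punctuation-based sentence splitter are all assumptions chosen for illustration.

```python
import re


def chunk_text(text: str, max_words: int = 60) -> list[str]:
    """Group sentences into chunks of at most max_words words.

    A single sentence longer than max_words becomes its own chunk
    instead of being cut mid-sentence.
    """
    # Naive sentence splitter (assumption): break after '.', '!' or '?'
    # when followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for sentence in sentences:
        n = len(sentence.split())
        # Close the current chunk if this sentence would exceed the limit.
        if current and count + n > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks
```

For example, `chunk_text("One two three. Four five. Six seven eight nine.", max_words=5)` keeps the first two sentences together (five words) and starts a new chunk for the third. Splitting only at sentence boundaries trades exact chunk size for segments that still read as complete statements.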
Knowledge providers and educational services such as Google, Yahoo, and ProQuest offer search engines that locate knowledge in web or e-learning databases and attempt to structure it, but they offer only data and pieces of information in the form of explicit knowledge. This paper took into account elements of pedagogical design such as conveying precise search results, offering key points concisely, and chunking the content into digestible segments for easy onscreen reading. After addressing the basic issues and other difficulties in learning from online materials, an e-learning system was developed to present these materials in a structured way, based on a new pedagogical design model that separates the content into segments. Following Ausubel's (1968) prescriptions, the segments employ super-ordinate concepts that are already in the student's cognitive structure, such as title, introduction, problem, objective, method, analysis, and result. TAM was employed to evaluate acceptance of the system, as shown in Figure 1.

Figure 1: Theoretical Framework (TAM factors Usefulness and Ease of Use as measures of Suitability; Structured e-Learning Content segments: Title, Introduction, Problem Statement, Objective, Method, Finding)

The study variables were Ease of Use, defined as the degree to which a person believes that using the Structured e-Learning Content would be free from effort, and Usefulness, defined as the degree to which a person believes that using the Structured e-Learning Content would be useful. The tacit classification of knowledge is presented in Figure 2 in terms of joining knowledge wholes and forming knowledge wholes.
Figure 2: Conceptual Framework (Modified from Clark, 2004). Elements: Data (Gathering of Parts), Information (Connection of Parts), Knowledge (Formation of a Whole), Wisdom (Join of Wholes); Explicit, Tacit; Past, Future; Context, Understanding; Current Techniques, Structured e-Learning Content; Researching, Absorbing, Doing, Interacting, Reflecting.

STRUCTURED E-LEARNING CONTENT DESIGN

This paper adapted the main steps of Ausubel's theory, which offers a way of organizing or integrating information meaningfully into a schema or cognitive framework. According to Ausubel, the human mind follows logical rules in organizing the information and ideas it receives, and sorts and arranges them in an orderly fashion into the learner's cognitive structure. Ausubel argues that an efficient cognitive structure is hierarchically organized: highly inclusive or general concepts sit at the top of the knowledge hierarchy, with less inclusive sub-concepts and informational data subsumed below them. Creating the proper categories and arranging them into a hierarchical structure allows learners to retain and recall a specific set of knowledge more efficiently. A clear and well-organized cognitive structure helps the learner absorb new information with less effort and in a shorter time. Ausubel's theory proposes using major or super-ordinate concepts as anchoring posts for new information to be meaningfully acquired. Academic and research articles are written using specific formats and sets of concepts or phrases for section headings, such as "problem statement", "method", "data analysis", and "findings". These super-ordinate concepts are already in the student's cognitive structure, especially among those pursuing graduate studies.
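To show how familiar section headings can act as super-ordinate anchors, the sketch below splits a plain-text article into the segments of Figure 1 plus Analysis. It is a hypothetical illustration, not the paper's implementation: the name `segment_article`, the heading synonyms in `ANCHORS`, and the rule that the first non-empty line is the title are all assumptions.

```python
# Hypothetical mapping from common article headings to super-ordinate
# segments (Figure 1 plus Analysis). The synonym lists are assumptions.
ANCHORS = {
    "introduction": "Introduction",
    "background": "Introduction",
    "problem statement": "Problem Statement",
    "problem": "Problem Statement",
    "objective": "Objective",
    "objectives": "Objective",
    "method": "Method",
    "methods": "Method",
    "methodology": "Method",
    "data analysis": "Analysis",
    "analysis": "Analysis",
    "findings": "Finding",
    "results": "Finding",
}


def segment_article(text: str) -> dict[str, str]:
    """Split a plain-text article into super-ordinate segments.

    The first non-empty line is taken as the title. A line whose
    lower-cased text (minus a trailing colon) is a key of ANCHORS starts
    a new segment; text before the first recognised heading is dropped.
    """
    lines = [line.strip() for line in text.splitlines()]
    lines = [line for line in lines if line]
    if not lines:
        return {}
    segments: dict[str, list[str]] = {"Title": [lines[0]]}
    current = None
    for line in lines[1:]:
        key = line.lower().rstrip(":")
        if key in ANCHORS:
            current = ANCHORS[key]
            segments.setdefault(current, [])
        elif current is not None:
            segments[current].append(line)
    # Join each segment's lines into a single block of text.
    return {name: " ".join(body) for name, body in segments.items()}
```

Because Python dictionaries preserve insertion order, the segments come back in the order the headings appear, so a display page could render them as a fixed sequence of labelled blocks.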
A new pedagogical model was designed by adapting the factors of TAM (Davis, 1990), knowledge conversion (Clark, 2004), and a model to ensure reliability (Ferrer, 2010). The structure of the new model drew on the concepts of Ryberg, Niemczik and Brenstein (2009) and Stufflebeam (2001) to design a meaningful pedagogical model. Figure 3 shows the Structured e-Learning Content page design. The use of pedagogical patterns is still increasing, especially in the e-learning field. Whereas developers of these applications apply pedagogical patterns in a conventional manner, the learning community is still far from employing pedagogical designs to develop proper learning patterns. Ryberg and colleagues have attempted to characterise pedagogical meaning in terms of design patterns (Ryberg, Niemczik, & Brenstein, 2009). In documenting good education and training practices as pedagogical designs, they recommend nine characteristics for recording a pedagogical design so that it is clearly represented. This research followed these characteristics in customizing the pedagogical design:
- Name: This represents the proposed pedagogical design in a single phrase, enabling quick organisation and retrieval.
- Problem: This describes the problem, including its target or a needed outcome, and clarifies that the problem exists.
- Context: This presents the preconditions that must hold for the problem to occur.
- Forces: This provides a short explanation of the contexts and how they interact among community members. Some forces may be inconsistent.
- Solution: This concerns instructions, which may take alternative forms. The solution may contain pictures, diagrams, prose, or other media.
- Examples: These are particular pattern implementations and resolutions, similarities, visual examples, and known uses that can be provided to a user who needs to understand the context.
- Resulting Context: This represents the results of adopting the pedagogical design, including post-conditions and any new problems that might arise from solving the first problem.
- Rationale: This presents the reasons for choosing the pedagogical design, with a description of how the pattern works and how forces and constraints are determined to build the required representation.
- Related Patterns: These describe the differences from, and relationships with, other patterns used to solve the problem.

Precel, Eshet-Alkalai and Alberton (2009) argued that most academic online learning is perceived as complementary to lecture-based courses and therefore employs pedagogical approaches adopted from the traditional, frontal teaching and learning process. Consequently, online courses and materials do not usually employ pedagogical approaches suited to online learning. As a result, students' achievements when reading digital text are reported to be lower than their achievements when reading printed text. Reading academic text in a digital format is problematic for most learners because of disorientation and the low level of ownership that readers have over digital text.

The following process characterizes how the system runs by describing the relations among agents. A multi-agent system (MAS) was employed to improve the representation of the stored content. The process was divided into three parts:
i) Client: represents the users or learners who use the internet to submit their requests.
ii) Server: handles the work cycle between client requests and database content, organizing each user query so that it is routed to the system database through a content switch.
iii) Database: the place where the data are stored; this study took into account the different types of content to be stored in the database clusters.
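To make the client, server, and database division above more concrete, the sketch below wires together three of the agent roles named in Table 1: the Recording, Search, and Retrieving agents. It is a hypothetical in-memory illustration only. The paper's agents were built with PHP and MySQL; the class and method names, the keyword-matching rule, and the dictionary standing in for the database clusters are all assumptions.

```python
from dataclasses import dataclass, field

# Segment order follows Figure 1; everything else is a hypothetical sketch.
SEGMENTS = ("Title", "Introduction", "Problem Statement", "Objective", "Method", "Finding")


@dataclass
class Database:
    """Stands in for the database clusters: article id -> segment texts."""
    articles: dict[int, dict[str, str]] = field(default_factory=dict)


class RecordingAgent:
    """Stores content after classifying it into the structured segments."""

    def __init__(self, db: Database) -> None:
        self.db = db

    def store(self, article_id: int, segments: dict[str, str]) -> None:
        # Keep only recognised segments, in the canonical Figure 1 order.
        self.db.articles[article_id] = {s: segments[s] for s in SEGMENTS if s in segments}


class SearchAgent:
    """Matches a query against stored content (assumed: all keywords must occur)."""

    def __init__(self, db: Database) -> None:
        self.db = db

    def match(self, query: str) -> list[int]:
        terms = query.lower().split()
        hits = []
        for article_id, segs in self.db.articles.items():
            body = " ".join(segs.values()).lower()
            if all(term in body for term in terms):
                hits.append(article_id)
        return hits


class RetrievingAgent:
    """Represents matched content as a structured, segment-by-segment view."""

    def __init__(self, db: Database) -> None:
        self.db = db

    def structured_view(self, article_id: int) -> str:
        segs = self.db.articles[article_id]
        return "\n".join(f"{name}: {text}" for name, text in segs.items())


def server_handle(query: str, search: SearchAgent, retrieve: RetrievingAgent) -> list[str]:
    """Server role: route a client query to the database, return structured views."""
    return [retrieve.structured_view(aid) for aid in search.match(query)]
```

Keeping storage, matching, and presentation in separate objects mirrors the separation of goals in Table 1, so any one role (for example, the matching rule) could be replaced without touching the others.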
To implement the multi-agent system, three main agents and five external agents were defined at this stage, specifying each agent's goals, tasks, and user interface. The agents were developed using PHP (Hypertext Preprocessor) and MySQL. Table 1 presents the agents, their goals, and their main tasks, and Figure 3 shows the resulting Structured e-Learning Content page.

Table 1: Agents' Goals, Tasks, and User Interfaces

Main agents:
| No | Agent | Goal | Task | User interface |
|---|---|---|---|---|
| 1 | Recording Agent | Store the e-learning contents | Classify the contents into a structured classification | Add article page |
| 2 | Managing Agent | Manage the e-learning contents | Organize the content classification | Manage Content, Categories, Groups, and User pages |
| 3 | Retrieving Agent | Retrieve the e-learning contents | Represent the structured view of the contents | Display result page |

External agents (4 to 8): Search Agent, Profile Agent, Classifier Agent, Tracking Agent, Analyzer Agent.
Goals and tasks of the external agents: find queries; match queries with the available contents; retrieve the user details; work as a controller between objects; report the availability of the e-learning contents; summarize the user activities towards e-learning contents; optimize the user interface; utilize a new object between two activities; detect the missing elements in the e-learning contents; analyze content usage in terms of views and downloads.
User interfaces of the external agents: Search page, User profile page, Not applicable, Summary page, Crash report page.

Figure 3: Display for Structured e-Learning Content

METHOD

This study employed the evaluation research techniques of Stufflebeam (2001), who defines evaluation as a study designed and conducted to assist some audience to assess an object's merit and worth. Copes, Vieraitis, River and Hall (2005) stated that evaluation research is conducted to assess a program's merit and worth, to improve program delivery, to develop knowledge, and to ensure oversight of and compliance with specific regulations and standards.
In addition, the Utilization-Focused Evaluation method (Patton, 2002) was used. It rests on the premise that every evaluation should be judged by its intended utility and its actual use by intended users, and it offers excellent accountability and accuracy.

Table 2: Measurement Process
| Variable | Dimension | Instrument |
|---|---|---|
| Suitability | Usefulness (11 items) | Technology Acceptance Model assessments (Davis, 1990) |
| Suitability | Ease of Use (9 items) | Technology Acceptance Model assessments (Davis, 1990) |

Usability testing for this research was conducted with eleven of twelve postgraduate students from CITM and CS at USM. The test measured students' satisfaction with the content and the graphical user interface (GUI). The instrument was adapted from Chin, Diehl and Norman (1988) and comprised 27 items on user interface satisfaction. Data for the usability test were collected following Lee's (1999) recommendations, as listed in Table 3.

Table 3: Data Collection Methods for Usability Testing (Adapted from Lee, 1999)
| Technique | Details |
|---|---|
| Observation | Data collection by observing the user's behavior throughout the usability testing. |
| Interview/Verbal Report | Data collection from the user's verbal report in an interview after completing the usability testing. |
| Thinking-Aloud | Data collection from the user's thoughts, spoken aloud throughout the usability testing. |
| Questionnaire | Data collection using question items that address information and attitudes about the usability of the Structured e-Learning Content. |
| Video Analysis | Data collection using one or more videos to capture data about user interactions during usability testing. |
| Auto Data-Logging Program | Data collection using auto-logging programs to track user actions throughout the usability testing. |
| Software Support | Data collection using software designed to support the evaluation expert during the usability testing process. |

A total of eleven participants took part in this usability test to ensure stable results. Each participant spent approximately 50 minutes to one hour.
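The per-participant totals in Table 4 are consistent with summing the five subscale scores and expressing the sum as a percentage of the 270-point maximum (60 + 40 + 60 + 50 + 60). For participant 1, (50 + 37 + 49 + 50 + 59) / 270 = 245 / 270, or about 90.74%. This reading is inferred from the numbers rather than stated by the authors; the sketch below reproduces it with the scores copied from Table 4, and the names `MAXIMA`, `SCORES`, and `total_percent` are illustrative.

```python
# Maximum score of each subscale, from the Table 4 column headers.
MAXIMA = {"overall": 60, "screen": 40, "terminology": 60, "learning": 50, "capabilities": 60}

# Subscale scores of the eleven participants, copied from Table 4
# (order: overall, screen, terminology, learning, capabilities).
SCORES = [
    (50, 37, 49, 50, 59), (55, 33, 57, 46, 55), (45, 39, 52, 43, 52),
    (40, 31, 50, 45, 47), (41, 37, 42, 42, 57), (53, 28, 56, 47, 51),
    (56, 30, 41, 38, 49), (52, 35, 45, 43, 48), (48, 30, 55, 46, 58),
    (39, 39, 52, 44, 53), (46, 38, 47, 48, 49),
]


def total_percent(row: tuple[int, ...]) -> float:
    """Sum a participant's subscale scores and scale to 0-100."""
    return 100 * sum(row) / sum(MAXIMA.values())


percents = [total_percent(row) for row in SCORES]
mean_percent = sum(percents) / len(percents)
print([round(p, 2) for p in percents], round(mean_percent, 2))
```

Rounded to two decimals, this gives a mean of 84.78 rather than the 84.77 in Table 4. Several published totals (for example 85.55 for participant 3, where rounding gives 85.56) appear to be truncated rather than rounded, which would account for the small difference.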
In general, all participants found the Structured e-Learning Content website clear and straightforward, with an average score of 84.77 out of 100, as shown in Table 4.

Table 4: Usability Test Result
| Participant | Overall reaction to the software (max. 60) | Screen (max. 40) | Terminology and system information (max. 60) | Learning (max. 50) | Capabilities (max. 60) | Total (max. 100) |
|---|---|---|---|---|---|---|
| 1 | 50 | 37 | 49 | 50 | 59 | 90.74 |
| 2 | 55 | 33 | 57 | 46 | 55 | 91.11 |
| 3 | 45 | 39 | 52 | 43 | 52 | 85.55 |
| 4 | 40 | 31 | 50 | 45 | 47 | 78.88 |
| 5 | 41 | 37 | 42 | 42 | 57 | 81.11 |
| 6 | 53 | 28 | 56 | 47 | 51 | 87.03 |
| 7 | 56 | 30 | 41 | 38 | 49 | 79.25 |
| 8 | 52 | 35 | 45 | 43 | 48 | 82.59 |
| 9 | 48 | 30 | 55 | 46 | 58 | 87.77 |
| 10 | 39 | 39 | 52 | 44 | 53 | 84.07 |
| 11 | 46 | 38 | 47 | 48 | 49 | 84.44 |
| Average | 47.77 | 34.27 | 49.63 | 44.72 | 52.54 | 84.77 |

During each session, the test administrator explained the test session and asked the participant to fill out a brief background questionnaire (Appendix A). Participants read the task scenarios and tried to find the information on the website. The tasks consisted of main steps such as registration, login, browsing, navigating, and downloading, as well as exploring all the links and system functionalities available in the software. After each task:
i) The participant rated the overall reaction to the Structured e-Learning Content on a 9-point scale for six subjective measures.
ii) The participant rated the website screen on a 9-point scale for four subjective measures.
iii) The participant rated the website terminology and system information on a 9-point scale for six subjective measures.
iv) The participant rated website learning on a 9-point scale for five subjective measures.
After the last task was completed, the test administrator asked the participant to rate the Structured e-Learning Content capabilities on a 9-point scale for six subjective measures.

RESULT & DISCUSSION

The total reliability was calculated for the 17 items of Usefulness and EOU.
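For reference, Cronbach's alpha for k items is α = k / (k - 1) × (1 - Σσᵢ² / σ_T²), where σᵢ² is the variance of item i and σ_T² is the variance of the respondents' total scores. The minimal sketch below computes it from a respondents-by-items score matrix using sample variances. It illustrates the standard formula rather than the authors' own procedure, and the function name `cronbach_alpha` and the row layout are assumptions.

```python
from statistics import variance


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    using sample variances (n - 1 denominator) throughout.
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*rows))                       # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(r) for r in rows])   # variance of respondents' totals
    return k / (k - 1) * (1 - item_var_sum / total_var)
```

Applied to the questionnaire in Appendix A, each row would hold one participant's 17 responses for the overall coefficient, or the 8 Usefulness and 9 EOU responses for the subscale coefficients. Using sample or population variances gives the same alpha, as long as the same one is used in the numerator and denominator.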
The Cronbach's alpha for the 17 items was .896 (N = 102), as shown in Table 5. Reliability for Usefulness was calculated for 8 items, with a Cronbach's alpha of .772, and reliability for EOU was calculated for 9 items, with a Cronbach's alpha of .767 (N = 102).

Table 5: Total Reliability
| Items | Cronbach's Alpha | N of Items |
|---|---|---|
| All items | .896 | 17 |
| Usefulness | .772 | 8 |
| EOU | .767 | 9 |

The normality assumption for Usefulness and EOU was supported by the data, as shown in Figure 4 and Figure 5: all Q-Q plots fell approximately along the straight line, indicating that the variables were approximately normally distributed within groups.

Figure 4: Normality for Usefulness
Figure 5: Normality for EOU

The total mean for Usefulness was 27.86 with SD = 6.10, equivalent to 3.48 out of 5 per item on the Likert scale (27.86 / 8 items), indicating that the participants found the Structured e-Learning Content useful, as shown in Table 6. By group, the mean usefulness score was 28.63 (SD = 5.96) for master's by coursework students, 29.86 (SD = 5.28) for master's by research students, and 26.18 (SD = 6.25) for PhD students. The ANOVA yielded F(2, 99) = 2.717, p = .071. As p > .05, there was no significant difference between respondents in usefulness by program.

The total mean for EOU was 34.84 with SD = 5.25, equivalent to 3.87 out of 5 per item on the Likert scale (34.84 / 9 items), indicating that the participants found the Structured e-Learning Content easy to use, as shown in Table 6. By group, the mean EOU score was 34.57 (SD = 4.72) for master's by coursework students, 35.35 (SD = 4.25) for master's by research students, and 35.00 (SD = 6.22) for PhD students. The ANOVA yielded F(2, 99) = .148, p = .863. As p > .05, there was no significant difference between respondents in EOU by program.
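Because Table 6 reports each group's n, mean, and SD, the one-way ANOVA F statistic can be checked from these summaries alone: F = [Σ nⱼ(x̄ⱼ - x̄)² / (g - 1)] / [Σ (nⱼ - 1)sⱼ² / (N - g)], where g is the number of groups and N the total sample, giving 2 and 99 degrees of freedom here. The sketch below is a minimal implementation of that textbook formula, not the authors' analysis; the function name `anova_f_from_summary` is an assumption.

```python
def anova_f_from_summary(groups: list[tuple[int, float, float]]) -> tuple[float, int, int]:
    """One-way ANOVA F from per-group (n, mean, sd) summaries.

    Returns (F, df_between, df_within).
    """
    n_total = sum(n for n, _, _ in groups)
    g = len(groups)
    # Grand mean weighted by group size.
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    # Pooled within-group sum of squares recovered from the SDs.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between, df_within = g - 1, n_total - g
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Usefulness by program, from Table 6: (n, mean, SD).
usefulness = [(49, 28.63, 5.96), (14, 29.85, 5.28), (39, 26.17, 6.25)]
f, df1, df2 = anova_f_from_summary(usefulness)
print(f"F({df1}, {df2}) = {f:.3f}")
```

With the usefulness-by-program figures, this gives F(2, 99) of about 2.73. That is close to the reported 2.717; the small gap is expected because the published means and SDs are rounded to two decimals.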
As p > .05 for both tests, the results indicated no significant differences between respondents in Usefulness and EOU by program.

Table 6: Means, standard deviations and results of ANOVA tests for Usefulness & EOU by Program
| Variable | Program | N | Mean | Std. Deviation | Result of ANOVA |
|---|---|---|---|---|---|
| Usefulness | Master_C | 49 | 28.63 | 5.96 | F(2, 99) = 2.717, p = .071 |
| | Master_R | 14 | 29.85 | 5.28 | |
| | PhD | 39 | 26.17 | 6.25 | |
| | Total | 102 | 27.86 | 6.09 | |
| EOU | Master_C | 49 | 34.57 | 4.72 | F(2, 99) = .148, p = .863 |
| | Master_R | 14 | 35.35 | 4.25 | |
| | PhD | 39 | 35.00 | 6.22 | |
| | Total | 102 | 34.84 | 5.25 | |

The total mean for Usefulness was 27.86 (3.48 on the Likert scale) with SD = 6.09, indicating that respondents found the Structured e-Learning Content useful, as shown in Table 7. By school, the mean usefulness score was 29.25 (SD = 7.22) for CITM, 27.80 (SD = 5.94) for the School of Computer Science (CS), and 27.43 (SD = 6.52) for other schools. The ANOVA yielded F(2, 99) = .246, p = .783. As p > .05, there was no significant difference in usefulness between respondents from the various schools.

The total mean for EOU was 34.84 (3.87 out of 5 on the Likert scale) with SD = 5.25, indicating that respondents found the Structured e-Learning Content easy to use, as shown in Table 7. By school, the mean EOU score was 34.37 (SD = 5.06) for CITM, 35.00 (SD = 4.91) for CS, and 34.31 (SD = 7.00) for other schools. The ANOVA yielded F(2, 99) = .146, p = .865. Again, as p > .05, there was no significant difference between respondents in EOU. As p > .05 for all tests, the results indicate no significant differences between respondents in Usefulness and EOU by school.
Table 7: Means, standard deviations and results of ANOVA tests for Usefulness & EOU by School
| Variable | School | N | Mean | Std. Deviation | Result of ANOVA |
|---|---|---|---|---|---|
| Usefulness | CITM | 8 | 29.25 | 7.22 | F(2, 99) = .246, p = .783 |
| | CS | 78 | 27.80 | 5.95 | |
| | Other | 16 | 27.43 | 6.52 | |
| | Total | 102 | 27.86 | 6.09 | |
| EOU | CITM | 8 | 34.37 | 5.06 | F(2, 99) = .146, p = .865 |
| | CS | 78 | 35.00 | 4.91 | |
| | Other | 16 | 34.31 | 7.00 | |
| | Total | 102 | 34.84 | 5.25 | |

CONCLUSION

This paper discussed current issues in representing and structuring e-learning content. A new pedagogical model, 'Structured e-Learning Content', was presented, based on Ausubel's recommendations for structuring and representing content. An evaluation was then conducted among 102 participants to assess the suitability of the new pedagogical model in terms of EOU and Usefulness. The results revealed no significant differences between respondents in Usefulness and EOU by program or school. The findings indicated that the Structured e-Learning Content was acceptable and suitable across programs and schools and triggered satisfactory levels of processing for tacit and explicit knowledge.

REFERENCES

Ausubel, D. P. (1968). Educational psychology: A cognitive view. New York: Holt, Rinehart & Winston.
Clark, R., & Mayer, R. (2007). E-learning and the science of instruction: Proven guidelines for consumers and designers of multimedia learning. Pfeiffer & Co.
Coffield, F., Moseley, D., Hall, E., & Ecclestone, K. (2009). Learning styles and pedagogy in post-16 learning: A systematic and critical review. National Centre for Vocational Education Research (NCVER).
Copes, H., Vieraitis, L. M., River, N., & Hall, P. (2005). Evaluation research in the social sciences. Upper Saddle River, NJ: Prentice Hall.
Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35(8), 982-1003.
Dolk, D., & Konsynski, B. (2009). Knowledge representation for model management systems. IEEE Transactions on Software Engineering, (6), 619-628.
Dredze, M., Blitzer, J., Talukdar, P., Ganchev, K., Graca, J., & Pereira, F. (2007). Frustratingly hard domain adaptation for dependency parsing. 22nd International Conference on Computational Linguistics (pp. 1051-1055). Stroudsburg, PA, USA.
Ferrer, N., & Alonso, J. (2010). Content management for e-learning. Springer Verlag.
Imitola, J., et al. (2004). Directed migration of neural stem cells to sites of CNS injury by the stromal cell-derived factor 1α/CXC chemokine receptor 4 pathway. Proceedings of the National Academy of Sciences of the United States of America, 101(52), 18117.
Keller, J. M. (1983). Motivational design of instruction. In C. M. Reigeluth (Ed.), Instructional-design theories and models: An overview of their current status (pp. 383-434). Hillsdale, NJ: Lawrence Erlbaum Associates.
Lee, S. H. (1999). Usability testing for developing effective interactive multimedia software: Concepts, dimensions, and procedures. Educational Technology & Society, 2(2), 1-13.
Nonaka, I., & Takeuchi, H. (1995). The knowledge-creating company: How Japanese companies create the dynamics of innovation. Oxford: Oxford University Press.
Patton, M. Q. (2002). Utilization-focused evaluation (U-FE) checklist. Retrieved March 20, 2005.
Precel, K., Eshet-Alkalai, Y., & Alberton, Y. (2009). Pedagogical and design aspects of a blended learning course. International Review of Research in Open and Distance Learning, 10(2), 1-16.
Roca, J., Chiu, C., & Martínez, F. (2006). Understanding e-learning continuance intention: An extension of the Technology Acceptance Model. International Journal of Human-Computer Studies, 64(8), 683-696.
Ryberg, T., Niemczik, C., & Brenstein, E. (2009). Methopedia: Pedagogical design community for European educators. In Proceedings of the 8th European Conference on e-Learning (pp. 503-511). University of Bari, Italy.
Stufflebeam, D. (2001). Evaluation models. New Directions for Evaluation, no. 89. San Francisco: Jossey-Bass.
Tang, T., & McCalla, G. (2005). Smart recommendation for an evolving e-learning system. International Journal on E-Learning, 4(1), 105-129.
Wong, W. K., Yin, S. K., Yang, H. H., & Cheng, Y. H. (2011). Using computer-assisted multiple representations in learning geometry proofs. Educational Technology & Society, 14(3), 43-54.

APPENDIX A

Response scale for all items: Strongly Disagree (1), Disagree (2), Slightly Agree (3), Agree (4), Strongly Agree (5).

Perceived Usefulness
Q1: Using the Structured e-Learning Content for learning would enable me to retrieve and understand the contents quickly.
Q2: Using the Structured e-Learning Content would improve my learning performance.
Q3: Using the Structured e-Learning Content for learning would increase my productivity.
Q4: Using the Structured e-Learning Content would enhance my effectiveness in the learning process for a certain topic.
Q5: Using the Structured e-Learning Content would make it easier to display the main contents of research articles.
Q6: The Structured e-Learning Content would be useful for learning.
Q7: This content was more difficult to understand than I would like it to be.
Q8: The Structured e-Learning Content presentation is eye-catching.

Perceived Ease of Use
Q9: The pages of the Structured e-Learning Content look dry and unappealing.
Q10: Learning to operate the Structured e-Learning Content would be easy for me.
Q11: I would find it easy to get the necessary information from the Structured e-Learning Content.
Q12: The Structured e-Learning Content offers intuitive interaction facilities.
Q13: It was easy to become skillful in using the Structured e-Learning Content.
Q14: It is easy to remember the main elements of articles using the Structured e-Learning Content.
Q15: I found the Structured e-Learning Content easy to use.
Q16: I learned many things that were surprising or unexpected when using the Structured e-Learning Content.
Q17: The good organization of the content helped me be confident that I would learn this lesson.
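The questionnaire groups Q1 to Q8 under Perceived Usefulness and Q9 to Q17 under Perceived Ease of Use, and the results report summed totals together with per-item means (27.86 / 8 ≈ 3.48 and 34.84 / 9 ≈ 3.87). The sketch below scores one respondent on that basis. Q7 and Q9 are negatively worded, and the paper does not say whether they were reverse-scored, so the `REVERSED` set and the 6 - x reversal on the 1 to 5 scale are assumptions; `score` and the other names are likewise illustrative.

```python
USEFULNESS = range(1, 9)     # Q1-Q8
EASE_OF_USE = range(9, 18)   # Q9-Q17
REVERSED = {7, 9}            # assumption: negatively worded items, reverse-scored


def score(responses: dict[int, int], reverse: bool = True) -> dict[str, float]:
    """Sum each subscale and report its per-item mean on the 1-5 scale."""

    def value(q: int) -> int:
        x = responses[q]
        if not 1 <= x <= 5:
            raise ValueError(f"Q{q} response {x} is outside 1-5")
        # Reverse-code negatively worded items if requested (assumption).
        return 6 - x if reverse and q in REVERSED else x

    out: dict[str, float] = {}
    for name, items in (("usefulness", USEFULNESS), ("ease_of_use", EASE_OF_USE)):
        total = sum(value(q) for q in items)
        out[f"{name}_total"] = total
        out[f"{name}_mean"] = total / len(items)
    return out
```

For a respondent who answers Agree (4) to every item, reverse-scoring changes Q7 and Q9 to 2, so the Usefulness total drops from 32 to 30. This shows why the handling of negatively worded items should be reported alongside the totals.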