‧ Update of Output-Layer Weights


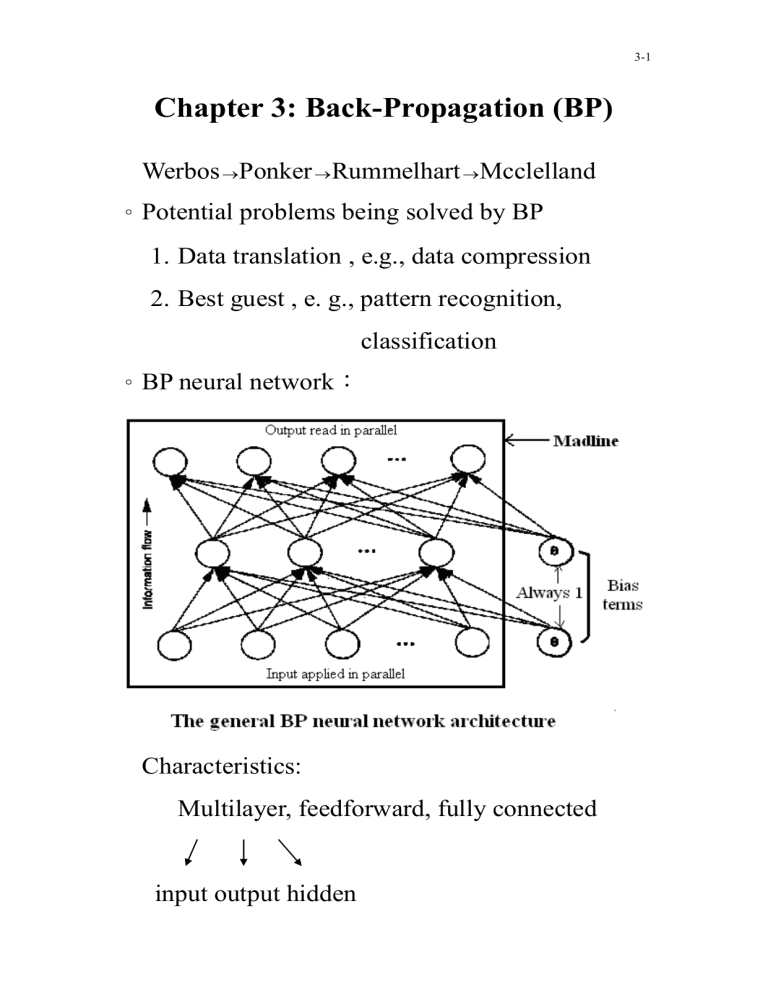

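Chapter 3: Back-Propagation (BP)

Werbos, Parker, Rumelhart, McClelland

。 Potential problems solved by BP
  1. Data translation, e.g., data compression
  2. Best guess, e.g., pattern recognition, classification

。 BP neural network
  Characteristics: multilayer (input, hidden, and output layers), feedforward, fully connected

3.1. BP Neural Network

。 Character recognition application
  Translate a 7 × 5 image to 2-byte ASCII code
  Traditional method: lookup table
  Suffers from:
  a. Noise, distortion, incomplete patterns
  b. Time-consuming

。 Recognition-by-components
  During training, the nodes on the intermediate layers self-organize such that different nodes recognize different features or their relationships. Noisy and incomplete patterns can thus be handled.

3.1.2. BP NN Learning

Given examples $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$ where $y_i = \Phi(x_i)$, find an approximation of $\Phi$ through learning.

。 Propagate-adapt learning cycle
  1. Apply an input pattern to the input layer.
  2. Propagate it through the upper layers until the output layer generates an output pattern.
  3. Compare the output pattern with the desired outputs and compute the errors.
  4. Transmit the errors backward to the intermediate layers.
  5. Update the connection weights so that the network converges toward a state that encodes all training patterns.

3.2. Generalized Delta Rule (GDR)

Consider the input vector $\mathbf{x}_p = (x_{p1}, x_{p2}, \ldots, x_{pN})$.

Hidden layer: net input to the $j$th hidden unit

$$\text{net}^h_{pj} = \sum_{i=1}^{N} w^h_{ji}\, x_{pi} + \theta^h_j$$

where $h$ denotes the hidden layer, $p$ the $p$th input vector, $j$ the $j$th hidden unit, $i$ the $i$th input unit, and $\theta^h_j$ the bias term of the $j$th unit.

Output of the $j$th hidden unit ($f^h_j$: transfer function)

$$i_{pj} = f^h_j(\text{net}^h_{pj})$$

Output layer:

$$\text{net}^o_{pk} = \sum_{j=1}^{L} w^o_{kj}\, i_{pj} + \theta^o_k \quad \text{(input)}, \qquad o_{pk} = f^o_k(\text{net}^o_{pk}) \quad \text{(output)}$$

。 Update of output-layer weights

The error at a single output unit $k$:

$$\delta_{pk} = y_{pk} - o_{pk}$$

The error to be minimized ($M$: # output units):

$$E_p(\mathbf{w}) = \frac{1}{2}\sum_{k=1}^{M} \delta_{pk}^2$$

The descent direction: $-\nabla E_p$

The learning rule ($\eta$: learning rate):

$$\mathbf{w}^o(t+1) = \mathbf{w}^o(t) + \Delta\mathbf{w}^o = \mathbf{w}^o(t) - \eta\,\nabla E_p$$

。 Determine $\nabla E_p$

∵ $E_p = \frac{1}{2}\sum_{k=1}^{M}\delta_{pk}^2 = \frac{1}{2}\sum_{k=1}^{M}(y_{pk} - o_{pk})^2$

$$\frac{\partial E_p}{\partial w^o_{kj}} = -(y_{pk} - o_{pk})\,\frac{\partial o_{pk}}{\partial w^o_{kj}}$$

∵ $o_{pk} = f^o_k(\text{net}^o_{pk})$

$$\therefore\ \frac{\partial o_{pk}}{\partial w^o_{kj}} = \frac{\partial f^o_k}{\partial(\text{net}^o_{pk})}\,\frac{\partial(\text{net}^o_{pk})}{\partial w^o_{kj}}$$

∵ $\text{net}^o_{pk} = \sum_{j=1}^{L} w^o_{kj}\, i_{pj} + \theta^o_k$ ($L$: # hidden units)

$$\therefore\ \frac{\partial(\text{net}^o_{pk})}{\partial w^o_{kj}} = \frac{\partial}{\partial w^o_{kj}}\Big(\sum_{j=1}^{L} w^o_{kj}\, i_{pj} + \theta^o_k\Big) = i_{pj}$$

$$\therefore\ \frac{\partial E_p}{\partial w^o_{kj}} = -(y_{pk} - o_{pk})\, f^{o\prime}_k(\text{net}^o_{pk})\, i_{pj}$$

The weights on the output layer are updated as

$$w^o_{kj}(t+1) = w^o_{kj}(t) + \Delta w^o_{kj} = w^o_{kj}(t) - \eta\,\frac{\partial E_p}{\partial w^o_{kj}} = w^o_{kj}(t) + \eta\,(y_{pk} - o_{pk})\, f^{o\prime}_k\, i_{pj} \qquad \text{(A)}$$

。 Consider $f^{o\prime}_k(\text{net}^o_{pk})$

Two forms for the output function $f^o_k$:
  i, Linear: $f^o_k(x) = x$
  ii, Sigmoid: $f^o_k(x) = (1 + e^{-x})^{-1}$ or $f^o_k(x) = \frac{1}{2}[1 + \tanh(x)]$

For the linear function, $f^{o\prime}_k = 1$, so

$$\text{(A)} \Rightarrow w^o_{kj}(t+1) = w^o_{kj}(t) + \eta\,(y_{pk} - o_{pk})\, i_{pj}$$

For the sigmoid function,

$$f^{o\prime}_k(\text{net}^o_{pk}) = -\big(1 + e^{-\text{net}^o_{pk}}\big)^{-2}\,\frac{\partial}{\partial(\text{net}^o_{pk})}\, e^{-\text{net}^o_{pk}} = \big(1 + e^{-\text{net}^o_{pk}}\big)^{-2}\, e^{-\text{net}^o_{pk}} = (f^o_k)^2\big[(f^o_k)^{-1} - 1\big] = f^o_k\,(1 - f^o_k) = o_{pk}(1 - o_{pk})$$

with the sigmoid's scale constant taken as 1.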
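Substituting the sigmoid derivative $o_{pk}(1 - o_{pk})$ into rule (A) gives the change $\eta\,(y_{pk} - o_{pk})\,o_{pk}(1 - o_{pk})\,i_{pj}$ for each output-layer weight. The following is a minimal sketch of one such update step in plain Python. The function names, the list-of-lists weight layout, and the value of `eta` are illustrative assumptions, and the bias terms are left unchanged because the derivation above only covers the weights.

```python
import math

def sigmoid(x):
    """Logistic transfer function f(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def update_output_weights(w, theta, i_p, y_p, eta=0.5):
    """Apply rule (A) once for sigmoid output units (hypothetical helper).

    w[k][j]  : weight from hidden unit j to output unit k
    theta[k] : bias of output unit k (not updated in this sketch)
    i_p[j]   : hidden-layer outputs for pattern p
    y_p[k]   : desired outputs for pattern p
    Returns the new weight matrix and the outputs o_p computed with the old weights.
    """
    new_w, o_p = [], []
    for k, row in enumerate(w):
        net = sum(w_kj * i_j for w_kj, i_j in zip(row, i_p)) + theta[k]
        o = sigmoid(net)
        # (y - o) * f'(net), with f'(net) = o (1 - o) for the sigmoid
        delta = (y_p[k] - o) * o * (1.0 - o)
        new_w.append([w_kj + eta * delta * i_j for w_kj, i_j in zip(row, i_p)])
        o_p.append(o)
    return new_w, o_p

# One output unit, two hidden units, all weights initially zero.
w_new, o = update_output_weights([[0.0, 0.0]], [0.0], [1.0, 0.5], [1.0], eta=0.5)
print(o)      # [0.5]
print(w_new)  # [[0.0625, 0.03125]]
```

With zero weights the output is 0.5, so the error term is (1 - 0.5) × 0.5 × 0.5 = 0.125 and each weight moves by η × 0.125 × its hidden-unit input.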
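$$\text{(A)} \Rightarrow w^o_{kj}(t+1) = w^o_{kj}(t) + \eta\,(y_{pk} - o_{pk})\, o_{pk}(1 - o_{pk})\, i_{pj}$$

。 Example 1: Quadratic neurons for output nodes

Net input:

$$\text{net}_k = \sum_j w_{kj}\,(i_j - v_{kj})^2$$

Output function: sigmoid, $f^o_k(\text{net}^o_{pk}) = \big(1 + e^{-\text{net}^o_{pk}}\big)^{-1}$

$w_{kj}, v_{kj}$: weights (independent); $i_j$: the $j$th input value

Weight update equations for $w$ and $v$:

∵ $o_{pk} = f^o_k(\text{net}^o_{pk})$ (output of output node), $i_{pj} = f^h_j(\text{net}^h_{pj})$ (output of hidden node), $\delta_{pk} = y_{pk} - o_{pk}$

$$E_p = \frac{1}{2}\sum_{k=1}^{M}\delta_{pk}^2 = \frac{1}{2}\sum_k (y_{pk} - o_{pk})^2$$

$$\frac{\partial E_p}{\partial a^o_{kj}} = -(y_{pk} - o_{pk})\,\frac{\partial f^o_k}{\partial(\text{net}^o_{pk})}\,\frac{\partial(\text{net}^o_{pk})}{\partial a^o_{kj}}, \qquad \text{where } a^o_{kj} = w^o_{kj} \text{ or } v^o_{kj}$$

$$\frac{\partial(\text{net}^o_{pk})}{\partial w^o_{kj}} = \frac{\partial}{\partial w^o_{kj}}\sum_j w_{kj}\,(i_{pj} - v_{kj})^2 = (i_{pj} - v_{kj})^2$$

$$\frac{\partial(\text{net}^o_{pk})}{\partial v^o_{kj}} = \frac{\partial}{\partial v^o_{kj}}\sum_j w_{kj}\,(i_{pj} - v_{kj})^2 = -2\,w_{kj}\,(i_{pj} - v_{kj})$$

$$\therefore\ \frac{\partial E_p}{\partial w^o_{kj}} = -[y_{pk} - o_{pk}]\, f^{o\prime}_k(\text{net}^o_{pk})\,[i_{pj} - v_{kj}]^2, \qquad \frac{\partial E_p}{\partial v^o_{kj}} = [y_{pk} - o_{pk}]\, f^{o\prime}_k(\text{net}^o_{pk})\,[2\,w_{kj}\,(i_{pj} - v_{kj})]$$

∵ $f^o_k(\text{net}^o_{pk}) = \big(1 + e^{-\text{net}^o_{pk}}\big)^{-1}$ ∴ $f^{o\prime}_k = f^o_k(1 - f^o_k) = o_{pk}(1 - o_{pk})$

$$\Delta_p w^o_{kj} = \eta\,(y_{pk} - o_{pk})\, o_{pk}(1 - o_{pk})\,(i_{pj} - v_{kj})^2$$

$$\Delta_p v^o_{kj} = -\eta\,(y_{pk} - o_{pk})\, o_{pk}(1 - o_{pk})\,[2\,w_{kj}\,(i_{pj} - v_{kj})]$$

◎ Updates of hidden-layer weights

Difficulty: the desired outputs of the hidden-layer units are unknown, so there is no direct error measure for them.

Idea: the total error $E_p$ must be related to the output values on the hidden layer.

$$E_p = \frac{1}{2}\sum_{k=1}^{M}(y_{pk} - o_{pk})^2 = \frac{1}{2}\sum_k\big[y_{pk} - f^o_k(\text{net}^o_{pk})\big]^2 = \frac{1}{2}\sum_k\Big[y_{pk} - f^o_k\Big(\sum_{j=1}^{L} w^o_{kj}\, i_{pj} + \theta^o_k\Big)\Big]^2$$

∵ $i_{pj} = f^h_j(\text{net}^h_{pj})$ and $\text{net}^h_{pj} = \sum_{i=1}^{N} w^h_{ji}\, x_{pi} + \theta^h_j$, $E_p$ is a function of $w^h_{ji}$.

$$\frac{\partial E_p}{\partial w^h_{ji}} = \frac{1}{2}\sum_k \frac{\partial}{\partial w^h_{ji}}(y_{pk} - o_{pk})^2 = -\sum_k (y_{pk} - o_{pk})\,\frac{\partial o_{pk}}{\partial w^h_{ji}}$$

$$o_{pk} = f^o_k(\text{net}^o_{pk}) = f^o_k\Big(\sum_{j=1}^{L} w^o_{kj}\, i_{pj} + \theta^o_k\Big) = f^o_k\Big(\sum_{j=1}^{L} w^o_{kj}\, f^h_j(\text{net}^h_{pj}) + \theta^o_k\Big) = f^o_k\Big(\sum_{j=1}^{L} w^o_{kj}\, f^h_j\Big(\sum_{i=1}^{N} w^h_{ji}\, x_{pi} + \theta^h_j\Big) + \theta^o_k\Big)$$

$$\frac{\partial o_{pk}}{\partial w^h_{ji}} = \frac{\partial f^o_k}{\partial(\text{net}^o_{pk})}\,\frac{\partial(\text{net}^o_{pk})}{\partial i_{pj}}\,\frac{\partial i_{pj}}{\partial(\text{net}^h_{pj})}\,\frac{\partial(\text{net}^h_{pj})}{\partial w^h_{ji}} = f^{o\prime}_k(\text{net}^o_{pk})\, w^o_{kj}\, f^{h\prime}_j(\text{net}^h_{pj})\, x_{pi}$$

The middle and last factors follow from the net inputs:

∵ $\text{net}^o_{pk} = \sum_j w^o_{kj}\, i_{pj} + \theta^o_k$ ∴ $\dfrac{\partial(\text{net}^o_{pk})}{\partial i_{pj}} = w^o_{kj}$

∵ $\text{net}^h_{pj} = \sum_i w^h_{ji}\, x_{pi} + \theta^h_j$ ∴ $\dfrac{\partial(\text{net}^h_{pj})}{\partial w^h_{ji}} = x_{pi}$

Consider sigmoid transfer functions on both layers:

$$f^{o\prime}_k(\text{net}^o_{pk}) = o_{pk}(1 - o_{pk}), \qquad f^{h\prime}_j(\text{net}^h_{pj}) = i_{pj}(1 - i_{pj})$$

$$\frac{\partial E_p}{\partial w^h_{ji}} = -\sum_k (y_{pk} - o_{pk})\, f^{o\prime}_k\, w^o_{kj}\, f^{h\prime}_j\, x_{pi} = -\sum_k (y_{pk} - o_{pk})\, o_{pk}(1 - o_{pk})\, w^o_{kj}\, i_{pj}(1 - i_{pj})\, x_{pi} = -\,i_{pj}(1 - i_{pj})\, x_{pi}\sum_k (y_{pk} - o_{pk})\, o_{pk}(1 - o_{pk})\, w^o_{kj}$$

Now

$$\Delta w^h_{ji} = -\eta\,\frac{\partial E_p}{\partial w^h_{ji}} = \eta\, i_{pj}(1 - i_{pj})\, x_{pi}\sum_k (y_{pk} - o_{pk})\, o_{pk}(1 - o_{pk})\, w^o_{kj}$$

and

$$w^h_{ji}(t+1) = w^h_{ji}(t) + \Delta w^h_{ji}$$

※ The known errors on the output layer are propagated back to the hidden layer to determine the weight changes on that layer.

3.3. Practical Considerations

。 Training data: sufficient # pattern pairs, representative
  Principles:
  i, Use as much data as possible (capacity)
  ii, Add noise to the input vectors (generalization)
  iii, Cover the entire domain (representative)

。 Network size: # layers and # nodes of hidden layers
  Principles:
  i, Use as few nodes as possible. If the NN fails to converge to a solution, it may be that more nodes are required
  ii, Prune the hidden nodes whose weights change very little during training

。 Learning parameters
  i, Initialize weights with small random values
  ii, Learning rate $\eta$ decreases with # iterations. Small $\eta$: slow learning; large $\eta$: perturbation
  ☆ iii, Momentum technique: add a fraction of the previous weight change to the current weight change. This tends to keep the weight changes going in the same direction, hence the term momentum, i.e.,

$$w^o_{kj}(t+1) = w^o_{kj}(t) + \Delta_p w^o_{kj}(t) + \alpha\,\Delta_p w^o_{kj}(t-1), \qquad \alpha: \text{momentum parameter}$$

  iv, Perturbation: repeat training using multiple sets of initial weights.

3.4. Applications

◎ Data compression (video images)
  A BPN can be trained to map a set of patterns from an n-D space to an m-D space.

‧ Architecture
  The hidden layer represents the compressed form of the data.
  The output layer represents the reconstructed form of the data.
  Each image vector is used as both the input and the target output.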
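The output-layer and hidden-layer updates, the momentum term, and the compression architecture (each vector used as both input and target) can be combined in one small sketch. The code below is an illustration under several assumptions rather than the course's implementation: the class name `BPN`, the 4-2-4 layer sizes, the one-hot toy patterns, and the values of `eta` and `alpha` are all made up for the example, and each bias is treated as a weight on a constant input of 1 so that it is trained with the same rule.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class BPN:
    """Input-hidden-output sigmoid network trained by back-propagation."""

    def __init__(self, n_in, n_hid, n_out, eta=0.3, alpha=0.5, seed=0):
        rng = random.Random(seed)
        # Small random initial weights; the last entry of each row is the
        # unit's bias, treated as a weight on a constant input of 1.
        self.wh = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hid)]
        self.wo = [[rng.uniform(-0.5, 0.5) for _ in range(n_hid + 1)] for _ in range(n_out)]
        self.dwh = [[0.0] * (n_in + 1) for _ in range(n_hid)]   # previous weight changes
        self.dwo = [[0.0] * (n_hid + 1) for _ in range(n_out)]
        self.eta, self.alpha = eta, alpha

    def forward(self, x):
        xb = list(x) + [1.0]
        i_p = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.wh]
        o_p = [sigmoid(sum(w * v for w, v in zip(row, i_p + [1.0]))) for row in self.wo]
        return i_p, o_p

    def train_pattern(self, x, y):
        i_p, o_p = self.forward(x)
        # Output error terms: (y - o) o (1 - o)
        d_out = [(yk - ok) * ok * (1.0 - ok) for yk, ok in zip(y, o_p)]
        # Hidden error terms: i (1 - i) * sum_k d_out[k] * w_kj,
        # computed before the output weights are changed.
        d_hid = [ij * (1.0 - ij) * sum(d * row[j] for d, row in zip(d_out, self.wo))
                 for j, ij in enumerate(i_p)]
        self._step(self.wo, self.dwo, d_out, i_p + [1.0])
        self._step(self.wh, self.dwh, d_hid, list(x) + [1.0])

    def _step(self, w, dw, deltas, inputs):
        # New change = eta * delta * input + alpha * previous change (momentum).
        for r, d in enumerate(deltas):
            for c, v in enumerate(inputs):
                dw[r][c] = self.eta * d * v + self.alpha * dw[r][c]
                w[r][c] += dw[r][c]

def total_error(net, patterns):
    """Sum of E_p over all patterns, each pattern being its own target."""
    return sum(0.5 * sum((t - o) ** 2 for t, o in zip(p, net.forward(p)[1]))
               for p in patterns)

# Toy compression task: 4-D patterns squeezed through 2 hidden units.
patterns = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
net = BPN(4, 2, 4)
before = total_error(net, patterns)
for _ in range(2000):
    for p in patterns:
        net.train_pattern(p, p)   # input and target are the same vector
after = total_error(net, patterns)
print(before, after)
```

After training, `net.forward(p)[0]` is the 2-value compressed code of pattern `p` and `net.forward(p)[1]` is its reconstruction. How far the error drops depends on the assumed learning parameters and the random initial weights.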
‧ System

‧ Size
  NTSC (National Television System Committee) image: 525 × 640 = 336000 pixels/image
  # nodes ≈ 750000: 336000 (input) + 336000/4 (hidden) + 336000 (output), for a compression rate of 4
  More than 50 billion connections (2 × 336000 × 84000 ≈ 5.6 × 10^10)
  Strategy: divide images into blocks, e.g., 8 × 8 = 64 pixels
  ∴ 64-node input layer, 16-node hidden layer, 64-node output layer

‧ Training data
  Randomly generate points in the 64-D space.

◎ Paint quality inspection
  A laser beam is reflected off the painted panel and onto a screen.
  Poor paint: the reflected laser beam is diffused; ripples, orange peel, lacks shine
  Good paint: relatively smooth, bright luster, closely uniform throughout its image

。 Idea
  The output was to be a numerical score from 1 (best) to 20 (worst).
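Returning to the sizing figures in the data-compression example, the node and connection counts, and the 8 × 8 block strategy, can be checked with a short sketch. The function names are hypothetical, bias terms are not counted as connections, and the block splitter assumes image dimensions that are multiples of the block size (525 is not a multiple of 8, so a real image would need padding or cropping, which is not shown here).

```python
def network_size(n_pixels, compression_rate):
    """Hidden-layer size, node count and connection count for a fully
    connected input-hidden-output network with n_pixels inputs and outputs."""
    n_hidden = n_pixels // compression_rate
    nodes = n_pixels + n_hidden + n_pixels
    connections = n_pixels * n_hidden + n_hidden * n_pixels
    return n_hidden, nodes, connections

def split_into_blocks(image, size=8):
    """Split a 2-D list of pixels into flattened size x size blocks,
    scanning blocks left to right, top to bottom."""
    rows, cols = len(image), len(image[0])
    if rows % size or cols % size:
        raise ValueError("image dimensions must be multiples of the block size")
    blocks = []
    for r in range(0, rows, size):
        for c in range(0, cols, size):
            blocks.append([image[r + dr][c + dc]
                           for dr in range(size) for dc in range(size)])
    return blocks

print(network_size(525 * 640, 4))  # (84000, 756000, 56448000000)
print(network_size(8 * 8, 4))      # (16, 144, 2048)

# A hypothetical 8 x 16 test image yields two 64-pixel blocks.
image = [[r * 16 + c for c in range(16)] for r in range(8)]
blocks = split_into_blocks(image)
print(len(blocks), len(blocks[0]))  # 2 64
```

Working on 64-pixel blocks reduces the network from tens of billions of connections to 2048, at the cost of compressing each block independently.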