APPLICATION OF DYNAMIC SELF-ORGANIZING NEURAL NETWORKS TO WWW-DOCUMENT CLUSTERING

Marian B. GORZALCZANY, Filip RUDZIŃSKI

Department of Electrical and Computer Engineering,

Kielce University of Technology, Kielce, Poland

E-mail: {m.b.gorzalczany, f.rudzinski}@tu.kielce.pl

Abstract: The paper presents an application of the clustering technique based on dynamic self-organizing neural networks to WWW-document clustering, which belongs to highly multidimensional clustering tasks. The collection of 548 abstracts of technical reports available at the WWW server of the

Department of Computer Science, University of Rochester, USA

(www.cs.rochester.edu/trs) has been the subject of clustering. The proposed

technique has provided excellent results, both in absolute terms (close to

90% accuracy of classification) and in relative terms (in comparison with

alternative techniques applied to the same problem).

Keywords: computational intelligence, self-organizing neural networks,

WWW-document clustering

1 Introduction

A rapid growth of World-Wide-Web resources and significant advances in

information and communication technologies offer the users a wide access to

vast amounts of WWW documents available online. In order to make that

access more efficient and taking into account that text- and hypertext documents

belong to most important WWW resources, a constant search for new and

effective text-mining techniques is underway. Among these techniques,

WWW-document clustering (also referred to as text clustering) is a principal

one. In general, given a collection of WWW documents, the task of document

clustering is to group documents together in such a way that the documents

within each cluster are as "similar" as possible to each other and as "dissimilar" as

possible from those of the other clusters.

This paper presents an application of the dynamic self-organizing neural networks introduced in [4] to the clustering of a selected collection of WWW documents. First, the paper presents, in a nutshell, the operation of the dynamic self-organizing neural network applied in this work. Then, a Vector-Space-Model representation of WWW documents is outlined and some approaches to its dimensionality reduction are briefly presented. Finally, the application of the proposed technique to the clustering of the collection of 548 abstracts of technical reports available at the WWW site of the Department of Computer Science, University of Rochester, USA (www.cs.rochester.edu/trs) is presented. A comparative analysis with some alternative text-clustering techniques is also carried out (for this purpose, a subset of 476 abstracts of the afore-mentioned original collection is considered as well).

2 Dynamic Self-Organizing Neural Networks for WWW-Document Clustering in a Nutshell Form

A self-organizing neural network with one-dimensional neighbourhood is considered. The network has $n$ inputs $x_1, x_2, \ldots, x_n$ and consists of $m$ neurons arranged in a chain; their outputs are $y_1, y_2, \ldots, y_m$, where $y_j = \sum_{i=1}^{n} w_{ji} x_i$, $j = 1, 2, \ldots, m$, and $w_{ji}$ are weights connecting the output of the j-th neuron with the i-th input of the network. Using vector notation ($\mathbf{x} = (x_1, x_2, \ldots, x_n)^T$, $\mathbf{w}_j = (w_{j1}, w_{j2}, \ldots, w_{jn})^T$), $y_j = \mathbf{w}_j^T \mathbf{x}$. The learning data consists of $L$ input vectors $\mathbf{x}_l$ ($l = 1, 2, \ldots, L$). The first stage of any Winner-Takes-Most (WTM) learning algorithm that can be applied to the considered network consists in determining the neuron $j_x$ winning in the competition of neurons when learning vector $\mathbf{x}_l$ is presented to the network. Assuming the normalization of learning vectors, the winning neuron $j_x$ is selected such that

$$d(\mathbf{x}_l, \mathbf{w}_{j_x}) = \min_{j=1,2,\ldots,m} d(\mathbf{x}_l, \mathbf{w}_j), \qquad (1)$$

where $d(\mathbf{x}_l, \mathbf{w}_j)$ is a distance measure between $\mathbf{x}_l$ and $\mathbf{w}_j$; throughout this paper, a distance measure $d$ based on the cosine similarity function $S$ (most often used for determining similarity of text documents) will be applied:

$$d(\mathbf{x}_l, \mathbf{w}_j) = 1 - S(\mathbf{x}_l, \mathbf{w}_j) = 1 - \frac{\mathbf{x}_l^T \mathbf{w}_j}{\|\mathbf{x}_l\| \, \|\mathbf{w}_j\|} = 1 - \frac{\sum_{i=1}^{n} x_{li} w_{ji}}{\sqrt{\sum_{i=1}^{n} x_{li}^2} \, \sqrt{\sum_{i=1}^{n} w_{ji}^2}} \qquad (2)$$

($\|\cdot\|$ are Euclidean norms). The WTM learning rule can be formulated as follows:

$$\mathbf{w}_j(k+1) = \mathbf{w}_j(k) + \eta_j(k) \, N(j, j_x, k) \, [\mathbf{x}(k) - \mathbf{w}_j(k)], \qquad (3)$$

where $k$ is the iteration number, $\eta_j(k)$ is the learning coefficient, and $N(j, j_x, k)$ is the neighbourhood function. In this paper, the Gaussian-type neighbourhood function will be used:

$$N(j, j_x, k) = e^{-\frac{(j - j_x)^2}{2 \lambda^2(k)}}, \qquad (4)$$

where $\lambda(k)$ is the "radius" of neighbourhood (the width of the Gaussian "bell").
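Equations (1)-(4) can be illustrated with a minimal Python sketch of a single WTM learning step. It is only an illustration under stated assumptions, not the implementation used in [4]: the function names are hypothetical, one learning coefficient `eta` is shared by all neurons (the rule above allows a per-neuron $\eta_j(k)$), the radius is passed in as a fixed number rather than a schedule $\lambda(k)$, and a zero vector is arbitrarily treated as maximally distant.

```python
import math

def cosine_distance(x, w):
    """d(x, w) = 1 - S(x, w), with S the cosine similarity of Eq. (2)."""
    dot = sum(xi * wi for xi, wi in zip(x, w))
    norm_x = math.sqrt(sum(xi * xi for xi in x))
    norm_w = math.sqrt(sum(wi * wi for wi in w))
    if norm_x == 0.0 or norm_w == 0.0:
        return 1.0  # assumption: a zero vector is treated as maximally distant
    return 1.0 - dot / (norm_x * norm_w)

def find_winner(x, weights):
    """Index j_x of the neuron whose weight vector is closest to x, Eq. (1)."""
    return min(range(len(weights)), key=lambda j: cosine_distance(x, weights[j]))

def gaussian_neighbourhood(j, j_win, radius):
    """N(j, j_x, k) of Eq. (4); `radius` plays the role of lambda(k)."""
    return math.exp(-((j - j_win) ** 2) / (2.0 * radius ** 2))

def wtm_step(x, weights, eta, radius):
    """One WTM update, Eq. (3), applied to every neuron of the chain."""
    j_win = find_winner(x, weights)
    updated = []
    for j, w in enumerate(weights):
        gain = eta * gaussian_neighbourhood(j, j_win, radius)
        updated.append([wi + gain * (xi - wi) for xi, wi in zip(x, w)])
    return j_win, updated

# Toy chain of three neurons in a two-dimensional input space.
weights = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
x = [0.0, 1.0]
j_win, weights_new = wtm_step(x, weights, eta=0.5, radius=1.0)
print(j_win)        # index of the winning neuron
print(weights_new)  # weights after one update
```

In this toy run the third neuron wins because its weight vector already points in the direction of `x`; its chain neighbours are pulled towards `x` with a strength that decays with their distance from the winner along the chain.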

After each learning epoch - as presented in detail in [4] - five successive

operations are activated (under some conditions): a) the removal of single,

low-active neurons, b) the disconnection of a neuron chain, c) the removal of

short neuron sub-chains, d) the insertion of additional neurons into the

neighbourhood of high-active neurons, and e) the reconnection of two selected

sub-chains of neurons.

3 Vector Space Model of WWW Documents - an Outline

Consider a collection of $L$ WWW documents. In the Vector Space Model (VSM) [2, 3, 5, 9, 10], every document in the collection is represented by vector $\mathbf{x}_l = (x_{l1}, x_{l2}, \ldots, x_{ln})^T$ ($l = 1, 2, \ldots, L$). Component $x_{li}$ ($i = 1, 2, \ldots, n$) of such a vector represents the i-th key word or term that occurs in the l-th document. The value of $x_{li}$ depends on the degree of relationship between the i-th term and the l-th document. Among various schemes for measuring this relationship (very often referred to as term weighting), three are the most popular: a) binary term-weighting: $x_{li} = 1$ when the i-th term occurs in the l-th document and $x_{li} = 0$ otherwise, b) tf-weighting (tf stands for term frequency): $x_{li} = tf_{li}$, where $tf_{li}$ denotes how many times the i-th term occurs in the l-th document, and c) tf-idf-weighting (tf-idf stands for term frequency - inverse document frequency): $x_{li} = tf_{li} \log(L / df_i)$, where $tf_{li}$ is the term frequency as in tf-weighting, $df_i$ denotes the number of documents in which the i-th term appears, and $L$ is the total number of documents in the collection. In this paper tf-weighting will be applied. Once the way of determining $x_{li}$ is selected, the Vector Space Model can be formulated in a matrix form:

$$\mathit{VSM}_{(n \times L)} = \mathbf{X}_{(n \times L)} = [\mathbf{x}_l]_{l=1,2,\ldots,L} = [x_{li}]^T_{\substack{l=1,2,\ldots,L \\ i=1,2,\ldots,n}}, \qquad (5)$$

where index $(n \times L)$ represents its dimensionality. The VSM-dimensionality-reduction issues are of essential significance as far as practical usage of VSMs is concerned. There are two main classes of techniques for VSM-dimensionality reduction [6]: a) feature selection methods, and b) feature transformation methods. Among the techniques that can be included into the afore-mentioned class a) are: filtering, stemming, stop-word removal, and the proper feature-selection methods sorting terms and then eliminating some of them on the basis of some numerical measures computed from the considered collection of documents. The first three techniques sometimes are classified as text-preprocessing methods, however - since they significantly contribute to VSM-dimensionality reduction - here, for simplicity, they have been included into afore-mentioned class a).
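The three term-weighting schemes listed at the start of this section (binary, tf and tf-idf) can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `build_vsm`, the toy documents and the use of the natural logarithm in tf-idf are assumptions, since the text does not fix the base of the logarithm. The result is stored term by document, matching the $(n \times L)$ layout of the model.

```python
import math
from collections import Counter

def build_vsm(documents, scheme="tf"):
    """Return (terms, X), where X[i][l] is the weight of term i in document l."""
    counts = [Counter(doc) for doc in documents]
    terms = sorted(set().union(*documents))
    n_docs = len(documents)
    # df[t]: number of documents in which term t appears
    df = {t: sum(1 for c in counts if t in c) for t in terms}
    X = []
    for t in terms:
        row = []
        for c in counts:
            tf = c[t]
            if scheme == "binary":
                row.append(1.0 if tf > 0 else 0.0)
            elif scheme == "tf":
                row.append(float(tf))
            elif scheme == "tf-idf":
                # assumption: natural logarithm
                row.append(tf * math.log(n_docs / df[t]))
            else:
                raise ValueError(f"unknown scheme: {scheme}")
        X.append(row)
    return terms, X

# Toy collection of L = 3 already tokenized documents.
docs = [
    ["neural", "network", "clustering", "network"],
    ["text", "clustering"],
    ["neural", "text", "text"],
]
terms, X_tf = build_vsm(docs, "tf")
_, X_bin = build_vsm(docs, "binary")
_, X_tfidf = build_vsm(docs, "tf-idf")
for term, row in zip(terms, X_tf):
    print(term, row)
```

With tf-weighting, which is the scheme applied in this paper, each row simply counts how often a term occurs in each document.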

During filtering (and tokenization) special characters, such as %, #, \$, etc., are removed from the original text, and word and sentence boundaries are identified in it. As a result of that, initial $\mathit{VSM}_{(n_{ini} \times L)}$ is obtained, where $n_{ini}$ is the number of different words isolated from all documents. During stemming all words in the initial model are replaced by their respective stems (a stem is a portion of a word left after removing its suffixes and prefixes). As a result of that, $\mathit{VSM}_{(n_{stem} \times L)}$ is obtained, where $n_{stem} < n_{ini}$. During stop-word removal (removing words from a so-called stop list), words that on their own do not have identifiable meanings and therefore are of little use in various text processing tasks are eliminated from the model. As a result of that, $\mathit{VSM}_{(n_{stpl} \times L)}$ is obtained, where $n_{stpl} < n_{stem}$. Feature selection methods usually operate on term quality $q_i$, $i = 1, 2, \ldots, n_{stpl}$, defined for each term occurring in the latest VSM. Terms characterized by $q_i < q_{tres}$, where $q_{tres}$ is a pre-defined threshold value, are removed from the model. In this paper, the document-frequency-based method will be used to determine $q_i$, that is, $q_i = df_i$, where $df_i$ is the number of documents in which the i-th term occurs. As a result of that, final $\mathit{VSM}_{(n_{fin} \times L)}$ is obtained, where $n_{fin} < n_{stpl}$.
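The reduction chain described above (filtering, stemming, stop-word removal, document-frequency-based selection) can be sketched as follows. Everything named here is hypothetical and serves illustration only: `crude_stem` is a toy suffix stripper standing in for a real stemmer, `STOP_WORDS` is a tiny made-up stop list, and the sample texts are invented; the paper does not state which stemmer or stop list was actually used.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop list.
STOP_WORDS = {"the", "of", "a", "an", "and", "to", "in", "is", "for"}

def tokenize(text):
    """Filtering and tokenization: keep lower-cased alphabetic runs only."""
    return re.findall(r"[a-z]+", text.lower())

def crude_stem(word):
    """Toy stand-in for a real stemmer: strip a few common suffixes."""
    for suffix in ("ing", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def preprocess(text):
    """Filtering, then stemming, then stop-word removal."""
    stems = [crude_stem(w) for w in tokenize(text)]
    return [s for s in stems if s not in STOP_WORDS]

def select_by_document_frequency(token_docs, q_tres):
    """Keep terms with q_i = df_i >= q_tres; terms with q_i < q_tres are dropped."""
    df = Counter()
    for tokens in token_docs:
        df.update(set(tokens))  # count each term once per document
    kept = sorted(t for t, n in df.items() if n >= q_tres)
    return kept, df

texts = [
    "Clustering of documents with neural networks.",
    "Neural networks for robot vision!",
    "A theory of document clustering #1",
]
token_docs = [preprocess(t) for t in texts]
kept, df = select_by_document_frequency(token_docs, q_tres=2)
print(kept)  # terms that occur in at least two documents
```

With `q_tres=2` every term that occurs in only one document is dropped, which is the smallest threshold that still reduces the model.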

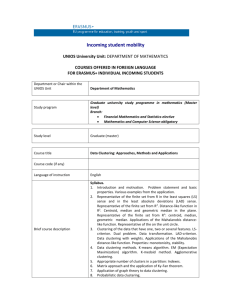

4 Application to Complex WWW-Document Clustering Problem

The dynamic self-organizing neural networks will now be applied to a real-life WWW-document clustering problem, that is, to the clustering of the collection of 548 abstracts of technical reports available at the WWW server of the Department of Computer Science, University of Rochester, USA (www.cs.rochester.edu/trs); henceforward, the collection will be called CSTR-548 (CSTR stands for Computer Science Technical Reports). The number of classes (equal to 4: AI (Natural Language Processing), RV (Robotics-Vision), Systems, and Theory) and the class assignments are known here, which allows for direct verification of the results obtained. Obviously, the knowledge about the class assignments is by no means used by the clustering system (it works in a fully unsupervised way).

For the purpose of comparative analysis, also a subset of 476 abstracts

(referred to as CSTR-476) of the afore-mentioned original collection CSTR-548

of abstracts will be considered. Collection CSTR-476 contains the abstracts of

technical reports that have been published until the end of 2002, whereas

CSTR-548 covers the abstracts of reports published until June 2005. Collection

CSTR-476 was the subject of clustering by means of some alternative clustering

techniques in [7, 8].

The processes of dimensionality reduction of the initial VSMs for CSTR-548 and CSTR-476 are presented in Table 1 with the use of the notation introduced in Section 3 (additionally, in square brackets, the overall numbers of occurrences of all terms in all documents of a given collection are presented). For each of the considered abstract collections two final numerical models (identified in Table 1 as "Small" and "Large" data sets) have been obtained. For this purpose two values of threshold parameter $q_{tres}$ have been considered: $q_{tres} = 20$, to get models of more reduced dimensionalities ("Small"-type sets), and $q_{tres} = 2$, to get models of higher dimensionalities but also of higher accuracies ("Large"-type sets). It is worth noticing that $q_{tres} = 2$ results in removing from the model all the terms that occur in only one document of the collection; such terms do not contribute to the clustering process.

Figs. 1 and 2 present the performance of the proposed clustering technique for the CSTR-548"Small" and CSTR-548"Large" numerical models of the CSTR-548 collection of abstracts. As the learning progresses, both systems adjust the overall numbers of neurons in their networks (Figs. 1a and 2a), which finally are equal to 88 and 89, respectively, and the numbers of sub-chains (Figs. 1b and 2b), which finally reach the value of 4 in both cases; the number of sub-chains is equal to the number of clusters detected in a given numerical model of the abstract collection. The envelopes of the nearness histograms for the routes in the attribute spaces of the CSTR-548"Small" and CSTR-548"Large" data sets (Figs. 1c and 2c) reveal perfectly clear images of the cluster distributions in them, including the numbers of clusters and the cluster boundaries (indicated by 3 local minima on the plots of Figs. 1c and 2c). After performing the so-called calibration of both networks, class labels can be assigned to particular sub-chains of the networks as shown in Figs. 1c and 2c.

Table 1. The dimensionality reduction of the initial VSMs for CSTR-548 and CSTR-476 collections of abstracts (in square brackets: the overall number of occurrences of all terms in all documents)

| VSM | Dimensionality for CSTR-548 | Dimensionality for CSTR-476 |
|---|---|---|
| $\mathit{VSM}_{(n_{ini} \times L)}$ | $(n_{ini} \times L) = (7438 \times 548)$ [47382] | $(n_{ini} \times L) = (6752 \times 476)$ [41072] |
| $\mathit{VSM}_{(n_{stem} \times L)}$ | $(n_{stem} \times L) = (4896 \times 548)$ [44201] | $(n_{stem} \times L) = (4438 \times 476)$ [38307] |
| $\mathit{VSM}_{(n_{stpl} \times L)}$ | $(n_{stpl} \times L) = (4574 \times 548)$ [30644] | $(n_{stpl} \times L) = (4119 \times 476)$ [26525] |
| $\mathit{VSM}_{(n_{fin} \times L)}$, "Small" ($q_{tres} = 20$) | $(n_{fin} \times L) = (405 \times 548)$ [18096] | $(n_{fin} \times L) = (342 \times 476)$ [14805] |
| $\mathit{VSM}_{(n_{fin} \times L)}$, "Large" ($q_{tres} = 2$) | $(n_{fin} \times L) = (2396 \times 548)$ [28466] | $(n_{fin} \times L) = (2217 \times 476)$ [24623] |

[Figure 1 panels: a) number of neurons vs. epoch number; b) number of sub-chains vs. epoch number; c) envelope of nearness histogram vs. numbers of neurons along the route, with sub-chains labelled in the order Systems, RV, AI, Theory]

Figure 1. The plots of number of neurons (a) and number of sub-chains (b) vs. epoch number, and c) the envelope of nearness histogram for the route in the attribute space of CSTR-548"Small"

[Figure 2 panels: a) number of neurons vs. epoch number; b) number of sub-chains vs. epoch number; c) envelope of nearness histogram vs. numbers of neurons along the route, with sub-chains labelled in the order RV, Theory, AI, Systems]

Figure 2. The plots of number of neurons (a) and number of sub-chains (b) vs. epoch number, and c) the envelope of nearness histogram for the route in the attribute space of CSTR-548"Large"

Since the number of classes and class assignments are known in the original collection of abstracts, a direct verification of the obtained results is also possible. Numerical results of clustering have been collected in Table 2. Moreover, in Table 3 the results of comparative analysis with three alternative approaches applied to CSTR-548"Small" and "Large" data sets are presented. The following alternative clustering techniques have been considered: the EM (Expectation Maximization) method, the FFTA (Farthest First Traversal Algorithm) approach, and the well-known k-means algorithm. In order to carry out the clustering of the CSTR-548"Small" and "Large" data sets with the use of the afore-mentioned techniques, the WEKA (Waikato Environment for Knowledge Analysis) application that implements them has been used. The WEKA application as well as details on the clustering techniques can be found on the WWW site of the University of Waikato, New Zealand (www.cs.waikato.ac.nz/ml/weka).

In order to extend the comparative-analysis aspects of this paper (as explained earlier in the paper), additionally, the clustering of the CSTR-476 subset of the original abstract collection CSTR-548 has been carried out. This time the operation of the dynamic self-organizing neural network clustering technique has been compared with six alternative clustering approaches.

Table 2. Clustering results for CSTR-548"Small" (a) and CSTR-548"Large" (b) data sets (Sy=Systems, Th=Theory)

| Data set | Class label | Decisions for sub-chain AI | Decisions for sub-chain RV | Decisions for sub-chain Sy | Decisions for sub-chain Th | Number of correct decisions | Number of wrong decisions | Percentage of correct decisions |
|---|---|---|---|---|---|---|---|---|
| a) | AI | 80 | 13 | 12 | 7 | 80 | 32 | 71.43% |
| a) | RV | 27 | 61 | 1 | 0 | 61 | 28 | 68.54% |
| a) | Sy | 1 | 5 | 191 | 0 | 191 | 6 | 96.95% |
| a) | Th | 1 | 2 | 10 | 137 | 137 | 13 | 91.33% |
| a) | ALL | 109 | 81 | 214 | 144 | 469 | 79 | 85.58% |
| b) | AI | 77 | 23 | 9 | 3 | 77 | 35 | 68.75% |
| b) | RV | 1 | 81 | 7 | 0 | 81 | 8 | 91.01% |
| b) | Sy | 0 | 2 | 194 | 1 | 194 | 3 | 98.48% |
| b) | Th | 0 | 1 | 11 | 138 | 138 | 12 | 92.00% |
| b) | ALL | 78 | 107 | 221 | 142 | 490 | 58 | 89.42% |

Table 3. Results of comparative analysis for CSTR-548 numerical models (DSONN=Dynamic Self-Organizing Neural Network, EM=Expectation Maximization algorithm, FFTA=Farthest First Traversal Algorithm)

| Clustering method | Percentage of correct decisions, CSTR-548"Small" | Percentage of correct decisions, CSTR-548"Large" |
|---|---|---|
| DSONN | 85.58% | 89.42% |
| EM | 62.23% | 51.09% |
| FFTA | 37.77% | 36.68% |
| k-means | 65.33% | 36.68% |
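The summary columns of Table 2 follow directly from its decision counts, as the short Python check below illustrates for part a). The counts are copied from Table 2; the function and variable names are illustrative assumptions. Each row is a true class and each column is the label of the sub-chain the abstract was assigned to, so the diagonal holds the correct decisions.

```python
LABELS = ["AI", "RV", "Sy", "Th"]

# Rows: true class; columns: sub-chain label (counts from Table 2, part a).
TABLE_2A = [
    [80, 13, 12, 7],
    [27, 61, 1, 0],
    [1, 5, 191, 0],
    [1, 2, 10, 137],
]

def summarize(confusion):
    """Return per-class and overall (correct, wrong, percentage correct)."""
    per_class = {}
    for i, row in enumerate(confusion):
        correct = row[i]
        total = sum(row)
        per_class[LABELS[i]] = (correct, total - correct, 100.0 * correct / total)
    all_correct = sum(confusion[i][i] for i in range(len(confusion)))
    all_total = sum(sum(row) for row in confusion)
    overall = (all_correct, all_total - all_correct, 100.0 * all_correct / all_total)
    return per_class, overall

per_class, overall = summarize(TABLE_2A)
for label in LABELS:
    correct, wrong, pct = per_class[label]
    print(f"{label}: {correct} correct, {wrong} wrong, {pct:.2f}%")
print(f"ALL: {overall[0]} correct, {overall[1]} wrong, {overall[2]:.2f}%")
# last line printed: ALL: 469 correct, 79 wrong, 85.58%
```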

Apart from the three earlier-considered methods (EM, FFTA and k-means), three new methods have been taken into account: the IFD (Iterative Feature and Data clustering) method, the EB (Entropy-Based clustering) approach and the hierarchical clustering technique available from the CLUTO software package (www-users.cs.umn.edu/~karypis/cluto). The results of applying the IFD, EB and CLUTO-based clustering techniques to the CSTR-476 abstract collection have been reported in [7, 8] and are repeated in Part II of Table 5. Figs. 3 and 4 as well as Table 4 illustrate the application of the dynamic self-organizing neural networks to the clustering of two numerical models of CSTR-476 ("Small" and "Large" data sets) in a similar way as Figs. 1, 2 and Table 2 present the CSTR-548 clustering. Part I of Table 5 provides a comparative analysis similar to that of Table 3 for the CSTR-548 numerical models.

[Figure 3 panels: a) number of neurons vs. epoch number; b) number of sub-chains vs. epoch number; c) envelope of nearness histogram vs. numbers of neurons along the route, with sub-chains labelled in the order Systems, AI, Theory, RV]

Figure 3. The plots of number of neurons (a) and number of sub-chains (b) vs. epoch number, and c) the envelope of nearness histogram for the route in the attribute space of CSTR-476"Small"

Taking into account the results that have been reported in this paper, it is clear that the clustering technique based on dynamic self-organizing neural networks is a powerful tool for highly multidimensional cluster-analysis problems such as WWW-document clustering and provides much better results than many alternative techniques in this field.

5 Conclusions


In this paper, the clustering technique based on the dynamic self-organizing neural networks, introduced by the same authors in [4], has been applied to WWW-document clustering, which belongs to highly multidimensional clustering tasks. The collection of 548 abstracts of technical reports available at the WWW server of the Department of Computer Science, University of Rochester, USA (www.cs.rochester.edu/trs) has been the subject of clustering. In order to extend some aspects of comparative analysis with alternative clustering techniques, the 476-element subset of the original collection of abstracts has also been considered.

[Figure 4 panels: a) number of neurons vs. epoch number; b) number of sub-chains vs. epoch number; c) envelope of nearness histogram vs. numbers of neurons along the route, with sub-chains labelled in the order RV, Theory, AI, Systems]

Figure 4. The plots of number of neurons (a) and number of sub-chains (b) vs. epoch number, and c) the envelope of nearness histogram for the route in the attribute space of CSTR-476"Large"

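Table 4. Clustering results for CSTR-476"Small" (a) and CSTR-476"Large" (b) data sets (Sy=Systems, Th=Theory)

| Data set | Class label | Decisions for sub-chain AI | Decisions for sub-chain RV | Decisions for sub-chain Sy | Decisions for sub-chain Th | Number of correct decisions | Number of wrong decisions | Percentage of correct decisions |
|---|---|---|---|---|---|---|---|---|
| a) | AI | 68 | 26 | 2 | 5 | 68 | 33 | 67.33% |
| a) | RV | 2 | 69 | 0 | 0 | 69 | 2 | 97.18% |
| a) | Sy | 8 | 51 | 118 | 1 | 118 | 60 | 66.29% |
| a) | Th | 3 | 8 | 1 | 114 | 114 | 12 | 90.48% |
| a) | ALL | 81 | 154 | 121 | 120 | 369 | 107 | 77.52% |
| b) | AI | 64 | 27 | 6 | 4 | 64 | 37 | 63.37% |
| b) | RV | 1 | 61 | 8 | 1 | 61 | 10 | 85.92% |
| b) | Sy | 0 | 0 | 178 | 0 | 178 | 0 | 100% |
| b) | Th | 0 | 5 | 5 | 116 | 116 | 10 | 92.06% |
| b) | ALL | 65 | 93 | 197 | 121 | 419 | 57 | 88.03% |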

Table 5. Results of comparative analysis for CSTR-476 numerical models (DSONN=Dynamic Self-Organizing Neural Network, EM=Expectation Maximization algorithm, FFTA=Farthest First Traversal Algorithm, IFD=Iterative Feature and Data clustering, EB=Entropy-Based clustering, CLUTO=Software Package for Clustering High-Dimensional Datasets)

Part I

| Clustering method | Percentage of correct decisions, CSTR-476"Small" | Percentage of correct decisions, CSTR-476"Large" |
|---|---|---|
| DSONN | 77.52% | 88.03% |
| EM | 69.96% | 50.00% |
| FFTA | 37.82% | 38.03% |
| k-means | 69.75% | 38.45% |

Part II

| Clustering method | Dimensionality of VSM | Percentage of correct decisions, CSTR-476 abstract collection |
|---|---|---|
| IFD | $1000 \times 476$ | 87.82% |
| EB | n/a | 73.9% |
| CLUTO | n/a | 68.8% |

n/a - not available

The proposed technique has provided excellent results, both in absolute terms

(close to 90% accuracy of classification) and in relative terms (in comparison with

several alternative techniques applied to the same problem).

References

[1] Berry M.W., 2004, Survey of Text Mining, Springer Verlag, New York.

[2] Chakrabarti S., 2002, Mining the Web: Analysis of Hypertext and Semi-Structured Data, Morgan Kaufmann Publishers, San Francisco.

[3] Franke J., Nakhaeizadeh G., Renz I. (eds.), 2003, Text Mining: Theoretical Aspects and Applications, Physica Verlag/Springer Verlag, Heidelberg.

[4] Gorzalczany M.B., Rudziński F., 2006, Cluster analysis via dynamic self-organizing neural networks, in Proc. of 8th Int. Conference on Artificial Intelligence and Soft Computing ICAISC 2006, Zakopane.

[5] Salton G., McGill M.J., 1983, Introduction to Modern Information Retrieval, McGraw-Hill Book Co., New York.

[6] Tang B., Shepherd M., Milios E., Heywood M.I., 2005, Comparing and combining dimension reduction techniques for efficient text clustering, in Proc. of Int. Workshop on Feature Selection and Data Mining, Newport Beach.

[7] Tao Li, Sheng Ma, 2004, Entropy-Based Criterion in Categorical Clustering, Proc. of the 21st IEEE International Conference on Machine Learning, Banff, Alberta, pp. 536-543.

[8] Tao Li, Sheng Ma, 2004, IFD: Iterative Feature and Data Clustering, Proc. of the 2004 SIAM International Conference on Data Mining, Lake Buena Vista.

[9] Weiss S., Indurkhya N., Zhang T., Damerau F., 2004, Text Mining: Predictive Methods for Analyzing Unstructured Information, Springer, New York.

[10] Zanasi A. (ed.), 2005, Text Mining and its Applications to Intelligence, CRM and Knowledge Management, WIT Press, Southampton.