2 The Summary Generator


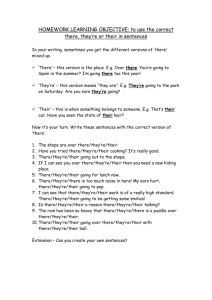

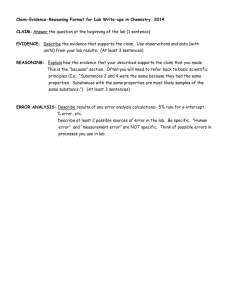

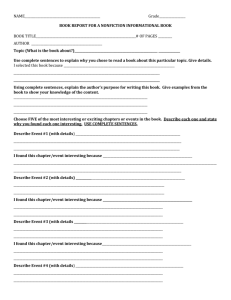

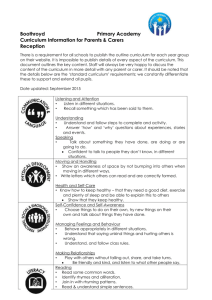

Text Summarization as Controlled Search

Terry COPECK
School of Information Technology & Engineering, University of Ottawa
150 Louis Pasteur, P.O. Box 450 Stn. A, Ottawa, Ontario, Canada K1N 6N5
terry@site.uottawa.ca

Nathalie JAPKOWICZ
School of Information Technology & Engineering, University of Ottawa
150 Louis Pasteur, P.O. Box 450 Stn. A, Ottawa, Ontario, Canada K1N 6N5
nat@site.uottawa.ca

Stan SZPAKOWICZ
School of Information Technology & Engineering, University of Ottawa
150 Louis Pasteur, P.O. Box 450 Stn. A, Ottawa, Ontario, Canada K1N 6N5
szpak@site.uottawa.ca

Paper ID: NAACL-2001-XXXX
Keywords: machine learning, automatic evaluation
Contact Author: Terry Copeck
Under consideration for other conferences (specify)? no
Theme Area: Evaluation and Text/Training Corpora
Wordcount: 3,800

Abstract

We present a framework for text summarization based on the generate-and-test model. A large set of summaries was generated by enumerating all plausible values of six parameters. Their quality was assessed by measuring them against the abstract included in the summarized document. The large number of summaries involved dictated the use of automated means to make this assessment; we chose to use supervised machine learning. Results suggest that the method of key phrase extraction is the most, and possibly the only, important variable.
Machine learning identified parameters and ranges of values within which the summary generator might be used with diminished reliability to summarize new texts for which no author's abstract exists.

Introduction

Text summarization consists in compressing a document into a smaller précis of the information contained in the document. Its goal is to include the most important facts in that précis. Summarization can be achieved by constructing a new document from elements not necessarily present in the original. Alternatively, it can be seen as a search for the most appropriate elements, usually sentences, that should be extracted for inclusion in the summary.

This paper takes the second approach. It describes a partially automated search engine for summary building. The engine has two components. The Summary Generator takes as input a text and a number of parameters that tune generation in a variety of ways, and produces a single summary of the text. While each set of parameter values is unique, it is possible that two or more sets will produce the same summary. The Generation Controller evaluates the summary output in order to determine the best parameters for use in generation.

Summary generation has been designed to combine a number of publicly available text processing programs to perform each of three main stages in its operation. Many parameter settings are possible. Just selecting among the available programs produces 18 possible input combinations; given that many of these programs accept or require additional parameterization themselves, evaluating the possible set of inputs manually becomes impossible.

Generation control is achieved by supervised machine learning that associates the parameters of the summary generation process with expressions of the generated summary’s quality. The training data consist of the parameter settings used to generate each summary, along with a rating of that summary.
The rating is obtained by comparing automatically generated summaries against the author’s abstract, which we treat as the “gold standard” for the document on the grounds that no one is better able to summarize a document than its author. The control process is iterative: once bad parameter values have been identified, the controller is applied to the remaining good values in a further attempt to refine the choice of parameters. While the summary generator is currently fully automated, the controller was applied in a semi-manual way. We plan to automate the entire system in the near future.

The remainder of this paper is organized as follows. Section 1 describes the overall architecture of our system. Sections 2 and 3 present its two main components, the Summary Generator and the Generation Controller, in greater detail. Section 4 presents our results, discussing the parameters and combinations of parameters identified as good or bad. The concluding section describes refinements we are planning to introduce.

[Figure 1. Overall System Architecture. Elements shown: Text to be Summarized, Parameter Values, SUMMARY GENERATOR, Summary + Parameter Values, GENERATION CONTROLLER (Summary Assessor, Rating, Parameter Assessor), Author Abstract, Best Parameters, Future Feedback Loop.]

1 Overall Architecture

The overall architecture of the system is shown in Figure 1. Its two main components are the Summary Generator and the Generation Controller. The Summary Generator takes as inputs the text to be summarized and a certain number of parameter values. It outputs one or more summaries. These are then fed into the Generation Controller along with the parameter values used to produce them.

The Generation Controller has two subcomponents. The Summary Assessor takes the input summary along with a “gold standard” summary (the abstract written by the document’s author) and issues a rating of the summary with respect to the abstract.
The Parameter Assessor takes an accumulated set of parameter values used to generate summaries, together with their corresponding ratings, and outputs the set of best and worst combinations of parameters and values with respect to the ratings. We plan to implement an iterative feedback loop that would feed the output of the Parameter Assessor back into the parameter values input to both the Summary Generator and the Generation Controller several times, in order to refine the best possible parameter values to control summarization.

2 The Summary Generator

The Summary Generator is based on the assumption that a summary composed of adjacent, thematically related sentences will convey more information than one in which each sentence is independently chosen from the document as a whole and, as a result, is likely to bear no relation to its neighbors. This assumption dictated both an abstract methodology and a design architecture for this component: one of our objectives is to validate this intuitive but unproven restriction of summary extraction.

Let us first discuss the methodology. A text to be summarized is initially (a) broken down into a series of segments, sequences of sentences which talk about the same topic. The set of segments is then rated in some way. We do this by (b) identifying the most important key phrases in the document and (c) ranking each segment on its overall number of matches for these key phrases. A requested number of sentences is then selected from one or more of the top-ranked segments and shown to the user in document order as the summary.

Because one of our objectives was to investigate the policy of taking a number of sentences from within one or more segments rather than those individually rated best, we wanted to control for other factors in the summarization process. To accomplish this we decided to design a configurable system with alternative modules for each of the three main tasks identified above and, where possible, to use for these tasks programs which are well known to the research community because they are in the public domain or are made freely available by their authors. In this way we hoped to be able to evaluate our assumption effectively.

Three text segmenters were adopted: the original Segmenter from Columbia University (Kan et al. 1998), Hearst's (1997) TextTiling program, and the C99 program (Choi 2000). Three more programs were used to pick out keywords and key phrases from the body of a text: Waikato University's Kea (Witten et al. 1999), Extractor from the Canadian NRC's Institute for Information Technology (Turney 2000), and NPSeek, a program developed locally at the University of Ottawa.

Once a list of key phrases has been assembled, the next step is to count the number of matches found in each segment. The obvious way is to find exact matches for a key in the text. However, there are other ways, and arguments for using them. To illustrate, consider the word total. Instances in text include total up the bill, the total weight and compute a grand total. While different parts of speech, each of these homonyms is an exact match for the key. What about totally, totals and totalled? Should they be considered to match total? If the answer is yes, what then should be done when the key rather than the match contains a suffix? Counting hits based on agreement between the root of a keyword and the root of a match is called stem matching. The Kea program suite contains modules which perform both exact and stem matching, and each of these modules was repackaged as a standalone program to perform this task in our system.

[Figure 2. Summary Generator Architecture. Stages shown: Text; SEGMENTING (Segmenter, TextTiling, C99); KEYPHRASE EXTRACTION (Kea, Extractor, NPSeek); MATCHING (Exact, Stem); Ranked Segments; Summary.]

The architecture of the summarization component appears in Figure 2, which shows graphically that, independent of other parameter settings, eighteen different combinations of programs can be invoked to summarize a text.

The particular segmenter, keyphrase extractor (keyphrase) and type of matching (matchtype) to use are three of the parameters required to generate a summary. Three others must also be specified: the number of sentences to include in the summary (sentcount), the number of keyphrases to use in ranking sentences and segments (keycount), and the minimum number of matches in a segment (hitcount). The last parameter is used to test the hypothesis that a summary composed of adjacent, thematically related sentences will be better than one made from individually selected sentences. The Summary Generator constructs a summary by taking the most highly rated sentences from the segment with the greatest average number of keyphrases, so long as each sentence has at least the number of instances of keyphrases specified by hitcount. When this is no longer true, the generator takes sentences from the next most highly rated segment until the required number of sentences has been accumulated.

3 The Generation Controller

3.1 The Summary Assessment Component

Summary assessment requires establishing how effectively a summary represents the original document. This task is generally considered hard (Goldstein et al. 1999). First of all, assessment is highly subjective: different people are very likely to rate a summary in different ways. Summary assessment is also goal-dependent: how will the summary be used? Much more knowledge of a domain can be presumed in a specialized audience than in the general public, and an effective summary must reflect this. Finally, and perhaps most important when many summaries are produced automatically, assessment is very labor-intensive: the assessor needs to read the full document and all summaries.
It is difficult to recruit volunteers for such pursuits, and very costly to hire assessors. As a result of these considerations, we focused on automating summary assessment.

The procedure turns out to be fairly straightforward, but the criteria and the assumptions behind them are arbitrary. In particular, it is not clear whether the author's abstract can really serve as the “gold standard” for a summary; we assume that abstracts in journal papers are of high enough quality (Mittal et al. 1999). We work with 75 academic papers published in 1993-1996 in the on-line Journal of Artificial Intelligence Research. It also seems that, lacking both domain-specific semantic knowledge and statistics derived from a corpus, analysis of a document based on key phrases at least relies on the statistically motivated shallow semantics embodied in key phrase extractors.

A list of keyphrases is constructed for each paper’s abstract; a keyphrase is any unbroken sequence of tokens between stop words (taken from a stop list of 980 common words). To distinguish such a sequence from the product of key phrase extraction, we refer to it as a key phrase in abstract, or KPIA. Sentences in the body of a paper are ranked on their total number of KPIAs.

Rating a summary is somewhat more complicated than rating an individual sentence, in that it seems appropriate to assign value not only to the absolute number of KPIAs in the summary, but also to its degree of coverage of the set of KPIAs. Accordingly, we construct a set of the KPIAs appearing in the summary, assigning a weight of 1.0 to each new KPIA which is added to the set, and 0.5 to each which duplicates a KPIA already in the set. A summary’s score is thus:

Score = 1.0 * KPIA_unique + 0.5 * KPIA_duplicate

The total is normalized for summary length and KPIA coverage in the document[1] by dividing it by the total KPIA count of an equal number of those sentences in the document with the highest KPIA ratings.
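The scoring scheme described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the function names (`extract_kpias`, `kpia_score`, `normalized_score`) are hypothetical, the tokenization and the treatment of punctuation as a phrase boundary are our own simplifications, and the way KPIA occurrences are located in a summary is left to the caller.

```python
import re
from collections import Counter


def extract_kpias(abstract, stop_words):
    """Return maximal runs of non-stop-word tokens found in the abstract."""
    phrases = []
    # Assumption: punctuation also ends a phrase; the paper does not say.
    for chunk in re.split(r"[^\w\s-]+", abstract.lower()):
        current = []
        for token in chunk.split():
            if token in stop_words:
                if current:
                    phrases.append(" ".join(current))
                current = []
            else:
                current.append(token)
        if current:
            phrases.append(" ".join(current))
    return phrases


def kpia_score(kpia_hits):
    """Score = 1.0 * (distinct KPIAs) + 0.5 * (repeat occurrences).

    `kpia_hits` lists every KPIA occurrence found in the summary,
    repeats included.
    """
    counts = Counter(kpia_hits)
    unique = len(counts)
    duplicates = sum(counts.values()) - unique
    return 1.0 * unique + 0.5 * duplicates


def normalized_score(kpia_hits, sentence_kpia_counts, sentcount):
    """Divide the raw score by the total KPIA count of the `sentcount`
    document sentences with the highest KPIA counts."""
    best = sorted(sentence_kpia_counts, reverse=True)[:sentcount]
    denominator = sum(best)
    return kpia_score(kpia_hits) / denominator if denominator else 0.0
```

For instance, a summary containing the occurrences "decision tree", "rule" and "decision tree" again has two distinct KPIAs and one duplicate, giving a raw score of 2.5 before normalization.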
Often the sentences with the highest KPIA instance counts do provide the best possible summary of a document. However, because our scoring technique gives unduplicated KPIAs twice the weight of duplicates, sometimes a slightly higher score could be achieved by taking sentences with the most unique, rather than the most, KPIA instances. Computing the highest possible score for a document which can run to hundreds of sentences is computationally quite intensive because it involves looking at all possible combinations of sentcount sentences. The ‘nearly best’ summary rating, computed by simply totaling the KPIA instances for the sentences with the highest instance counts, was deemed an adequate approximation for purposes of normalization.

[1] The use of an abstract has been defended on the grounds that no one is better able to summarize a document than its author; a criticism of some force is the fact that on average 24% of KPIAs do not occur in the body of the 75 texts under study (σ 13.3).

3.2 The Parameter Assessment Component

The purpose of the Parameter Assessor is to identify the best and worst parameters for use in the Summary Generator so that our summary assessment procedure gives the best results. This task can be construed as supervised machine learning. Its input is a set of summary descriptions (including the parameters that were used to generate each summary) along with the KPIA rating of each summary. Its output is a set of rules determining which summary characteristics (including, again, the parameters with which the summary was generated) yield good or bad ratings. These rules can then be interpreted so as to guide our summary generation procedure, in particular by telling us which parameters to use and which to avoid in future work with the same type of documents.

Because we needed rules that are easy to interpret, we chose to use the C5.0 decision tree and rule classifier, one of the state-of-the-art techniques currently available (Quinlan 1993).
Our (summary description, summary rating) pairs then had to be formatted according to C5.0's requirements. In particular, we chose to represent each summary by the six parameters that were used to generate it:

- the segmenter (Segmenter, TextTiling, C99)
- the key phraser (Extractor, NPSeek, Kea)
- the match type (exact or stemmed match)
- key count, the number of key phrases used in the summary
- sent count, the number of sentences used in the summary
- hit count, the minimum number of matches for a sentence to be in the summary

The KPIA ratings obtained from the Summary Assessor are continuous values and C5.0 requires discrete class values, so the ratings had to be discretized. We did so using the k-means clustering technique (MacQueen 1967), whose purpose is to discover different groupings in a set of data points and assign the same discrete value to each element within the same grouping but different values to elements of different groupings. We tried dividing the KPIA values into 2, 3, 4 and 5 groupings but ended up working only with the division into two discrete values because the use of additional discrete values led to less reliable results.[2]

[2] The unreliability of results when using more than two discrete values is caused by the fact that our summary description is not complete (in particular, we did not include any description of the original document). We are planning to augment this description in the near future and believe that such a step will improve the results obtained when using more than two discrete values.

The C5.0 classifier was then run on the prepared data. This experiment was conducted on 75 different documents, each summarized in 1944 different ways (all plausible combinations of the values of the 6 parameters that we considered). Our full data set thus contained 75 * 1944 = 145,800 pairs of summary description and summary rating.
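The discretization of continuous ratings into two classes can be illustrated with a small one-dimensional two-means routine. This is a minimal sketch under our own assumptions, not the procedure actually used: the function name `discretize_two_classes`, the initialization of the two centroids at the minimum and maximum rating, and the class labels "good" and "bad" attached to the higher and lower cluster are all illustrative choices.

```python
def discretize_two_classes(ratings, max_iter=100):
    """Split continuous ratings into "bad"/"good" with 1-D k-means (k = 2).

    In one dimension, assigning each point to the nearer of two
    centroids is the same as thresholding at their midpoint.
    """
    low, high = min(ratings), max(ratings)  # assumed initial centroids
    for _ in range(max_iter):
        threshold = (low + high) / 2.0
        low_group = [r for r in ratings if r <= threshold]
        high_group = [r for r in ratings if r > threshold]
        if not high_group:  # all ratings are identical
            break
        new_low = sum(low_group) / len(low_group)
        new_high = sum(high_group) / len(high_group)
        if (new_low, new_high) == (low, high):  # centroids stopped moving
            break
        low, high = new_low, new_high
    threshold = (low + high) / 2.0
    return ["good" if r > threshold else "bad" for r in ratings]
```

Each label would then be attached to the six parameter values of the corresponding summary to form one training record. Extending the routine to 3, 4 or 5 clusters would require a general k-means implementation rather than a single threshold.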
In order to assess the quality of the rules C5.0 produced, we divided this set so that 75% of the data was used for training and 25% of it was used for testing. The results of this process are reported and discussed in the following section.

4 Identification of Good and Bad Parameters

Figure 3 presents highlights of the results obtained by running C5.0 on our database of automatically generated summaries and their associated ratings to produce a division into good or bad summaries. A discussion of these results follows.

Rule 1: (cover 3298) keyphrase = k, keycount > 3, sentcount > 20, hitcount <= 3 -> class good [0.672]
Rule 2: (cover 3987) keyphrase = k, keycount > 5, sentcount > 20 -> class good [0.665]
Rule 3: (cover 5965) keyphrase = k, sentcount > 20 -> class good [0.591]
Rule 4: (cover 4071) keyphrase = e, sentcount > 20, hitcount <= 3 -> class good [0.554]
Rule 5: (cover 36200) keyphrase = n -> class bad [0.989]
Rule 6: (cover 90596) sentcount <= 20 -> class bad [0.958]
Rule 7: (cover 17990) keycount <= 3 -> class bad [0.933]
Rule 8: (cover 36315) keycount <= 7, hitcount > 2 -> class bad [0.927]
Rule 9: (cover 36302) hitcount > 3 -> class bad [0.915]

Figure 3. Rules Discovered by C5.0

The value in parentheses near each rule declaration indicates how many of the 109,350 training examples were covered by the rule. The value in square brackets appearing near the outcome of each rule tells us the proportion of cases in which the application of this rule was accurate. Altogether, these rules gave us a 7.8% accuracy rate when tested on the testing set and we therefore consider them reliable.

It is interesting that the rules with the highest coverage and accuracy are negative: they show combinations of parameters that yield bad summaries (Rules 5-9 in Figure 3). In particular, these rules advise us not to use NPSeek as a keyphrase extractor (Rule 5), not to generate summaries containing 20 or fewer sentences (Rule 6), and not to rely on fewer than four different key phrases to select best sentences and best segments for inclusion in the summary (Rule 7). Furthermore, we should not require that sentences within a segment have more than three key phrase matches before shifting to the next best segment (Rule 9) in all cases, and no more than two key phrase matches when the number of keys involved is seven or less (Rule 8). These rules seem useful and reasonable. Rule 6 appears excessive, most probably because the assessment procedure gives a bad rating to a summary that contains few of the key phrases from the author's abstract. Rule 8, on the other hand, seems particularly reasonable.

The positive rules (Rules 1-4 in Figure 3) determine combinations of parameters that give good summaries. These rules are less definitive in terms of coverage and accuracy. Still, they suggest that Kea is the best key phrase extractor, while Extractor can be good. All four positive rules confirm the conclusion of Rule 6, that summaries of no more than 20 sentences are not good. Rules 1, 2 and 4 express the general need to keep high the number of key phrases used to select best sentences and best segments for inclusion in the summary (keycount), and to keep low the number of key phrase matches required within a segment for its sentences to be selected for inclusion in the summary (hitcount). Rule 1, just like Rule 8, demonstrates the interplay between keycount and hitcount.

Interestingly, two parameters do not figure in any rule, either negative or positive: segmenter (which decides what segmentation technique is used to segment the original text), and matchtype (which says whether key phrases are matched on exact or stemmed terms). If this observation can be confirmed in larger experiments, we will be able to generate summaries without bothering to experiment with two of the three stages in summarization. While this conclusion is not intuitive, it may turn out that only keyphrase extraction is important.
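To make the rule conditions concrete, the nine rules of Figure 3 can be written as a small Python function that reports the first rule matching a given parameter setting. This is a hedged sketch only: the function name `classify`, the choice to test the negative rules before the positive ones, and the "unknown" fallback are our assumptions for illustration and do not reproduce how C5.0 itself combines rules. The codes k, e and n stand for Kea, Extractor and NPSeek.

```python
def classify(keyphrase, keycount, sentcount, hitcount):
    """Return (label, rule_number) for the first rule that fires.

    Assumption: negative rules (5-9) are checked before positive
    rules (1-4); C5.0's own conflict resolution is not modeled.
    """
    negative_rules = [
        (5, keyphrase == "n"),
        (6, sentcount <= 20),
        (7, keycount <= 3),
        (8, keycount <= 7 and hitcount > 2),
        (9, hitcount > 3),
    ]
    positive_rules = [
        (1, keyphrase == "k" and keycount > 3
            and sentcount > 20 and hitcount <= 3),
        (2, keyphrase == "k" and keycount > 5 and sentcount > 20),
        (3, keyphrase == "k" and sentcount > 20),
        (4, keyphrase == "e" and sentcount > 20 and hitcount <= 3),
    ]
    for number, fired in negative_rules:
        if fired:
            return "bad", number
    for number, fired in positive_rules:
        if fired:
            return "good", number
    return "unknown", None  # no rule covers this setting


if __name__ == "__main__":
    # Kea, 9 key phrases, 25 sentences, at least 2 matches per sentence
    print(classify("k", 9, 25, 2))
```

Note that neither segmenter nor matchtype appears among the arguments, which mirrors the observation that these two parameters figure in no rule.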
Conclusions and Future Work

This paper has presented a framework for text summarization based on the generate-and-test model. We generated a large set of summaries by enumerating all plausible values of six parameters, and we assessed the quality of these summaries by measuring them against the abstract included in the original document. Because of the high number of automatically generated summaries, the adequacy of parameters and their combinations could only be determined using automated means; we chose supervised machine learning. The results of learning suggest that the key phrase extraction method is the most, and possibly the only, important variable. Learning also identified ranges of parameter values within which the summary generator could perhaps be used (with a certain amount of reliability) on new texts for which no author's abstract exists.

We will need to consider at least four questions in the near future.

Feedback loop: We only went through a single iteration of the Parameter Assessment Component. We should keep the parameters and their values that we found useful, add new parameters and their values, and repeat the process. This could be done several times, until no improvement results from the addition of new parameters or new values.

Better summary description and summary ratings: For the time being, we consider all documents equivalent in terms of how summary generation should be applied. Yet it is possible that different documents require different methodologies. A very simple example is that of long versus short texts, or dense versus colloquial ones. It is quite possible that short or colloquial texts require shorter summaries than long or dense ones. Some description of the summarized document could be included in the summary description. The ratings too could be normalized to take the size of the summary into consideration, or altogether new measures of summary quality could be devised.
Full automation: In the present system, the linking of the various components of the system had to be done manually. This was time-consuming, and could make the implementation of the feedback loop mentioned earlier quite tedious. An important goal for our system would then be to automate the linkage between the different components and let it run on its own.

Integration of other techniques/methodologies into the Summary Generator: Our current system only experimented with a restricted number of methodologies and techniques. Once we have a fully automated system, nothing will prevent us from trying a variety of new approaches to text summarization that could then be assessed automatically until an optimal one has been devised.

References

Choi, F. 2000. Advances in domain independent linear text segmentation. To appear in Proceedings of ANLP/NAACL-00.

Hearst, M. 1997. TextTiling: Segmenting text into multi-paragraph subtopic passages. Computational Linguistics 23 (1), pp. 33-64.

Goldstein, J., M. Kantrowitz, V. Mittal, and J. Carbonell. 1999. Summarizing Text Documents: Sentence Selection and Evaluation Metrics. In Proceedings of ACM/SIGIR-99, pp. 121-128.

Kan, M.-Y., J. Klavans and K. McKeown. 1998. Linear Segmentation and Segment Significance. In Proceedings of WVLC-6, pp. 197-205.

Klavans, J., K. McKeown, M.-Y. Kan and S. Lee. 1998. Resources for Evaluation of Summarization Techniques. In Proceedings of the 1st International Conference on Language Resources and Evaluation.

MacQueen, J. 1967. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, (1), pp. 281-297.

Mittal, V., M. Kantrowitz, J. Goldstein and J. Carbonell. 1999. Selecting Text Spans for Document Summaries: Heuristics and Metrics. In Proceedings of AAAI-99, pp. 467-473.

Quinlan, J.R. 1993. C4.5: Programs for Machine Learning. Morgan Kaufmann, San Mateo, CA.

Turney, P. 2000. Learning algorithms for keyphrase extraction. Information Retrieval, 2 (4), pp. 303-336.

Witten, I.H., G. Paynter, E. Frank, C. Gutwin and C. Nevill-Manning. 1999. KEA: Practical automatic keyphrase extraction. In Proceedings of DL-99, pp. 254-256.