A Tutorial on the Projects for CSM16 Visual Information Systems


By Li Chen

20/02/2004

This tutorial describes, in detail, the steps for designing and developing parts of the projects. The particular algorithms and methods shown here should be treated as examples: they are not the only solutions, and you can develop your own. For general information, refer to the formal proposal documents for the projects of this module.

Project 1: Develop a system that applies a Gaussian model to realize image classification, content-based retrieval and semantic-based retrieval

1. Training stage

1.1 Analysis of data

First, decide which type of image data to use in the project, and then predefine its labels/categories.

1.2 Choose training samples

An example:

There are six images (training samples), which are divided into two categories {w1, w2}:

w1 : image1, image2, image3

w2 : image4, image5, image6

1.3 Extract primitive features

Primitive features can be colour or texture features. Here I give an example of a colour histogram extracted from an RGB image. The related code is as follows.

```matlab
function RGBHist = Colour_Feature(rgbimage)
% Extract the colour histogram features of an RGB image.
% Returns a column vector that concatenates the normalised Red, Green and
% Blue histograms (imhist uses 256 bins for a uint8 image, i.e. 768 values).
RGBHist = [];
for c = 1:3                                   % 1 = Red, 2 = Green, 3 = Blue
    counts = imhist(rgbimage(:,:,c));         % histogram of channel c (column vector)
    totalpixels = sum(counts);
    RGBHist = [RGBHist; counts / totalpixels]; % append the normalised bins
end
```
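For readers working outside MATLAB, the same feature can be sketched in plain Python. This is a minimal sketch, assuming the image is given as a flat list of (R, G, B) tuples with 8-bit channels; the name `colour_feature` is illustrative, not part of the project code. With the default 256 bins every intensity value gets its own bin, which is what `imhist` computes for a uint8 channel.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each assumed to be in 0..255


def colour_feature(pixels: Sequence[Pixel], bins: int = 256) -> List[float]:
    """Concatenate the normalised R, G and B histograms into one feature vector.

    Returns 3 * bins values; the bins of each channel sum to 1.
    """
    total = len(pixels)
    feature: List[float] = []
    for channel in range(3):                      # 0 = Red, 1 = Green, 2 = Blue
        counts = [0] * bins
        for pixel in pixels:
            # Map intensity 0..255 to a bin index (identity when bins == 256).
            counts[pixel[channel] * bins // 256] += 1
        feature.extend(c / total for c in counts)  # normalise by the pixel count
    return feature
```

For example, `colour_feature([(0, 0, 0), (255, 255, 255)])` returns 768 values in which bins 0 and 255 of each channel hold 0.5.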

1.4 Calculate parameters in Gaussian classification functions

The Gaussian probability density function for the category wi has the following form:

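$$P(x \mid w_i) = \frac{1}{\left[(2\pi)^d \, |\Sigma_i|\right]^{1/2}} \exp\left\{-\frac{1}{2}(x - u_i)^T \Sigma_i^{-1} (x - u_i)\right\}$$

where

$u_i$ --- the mean vector of class $w_i$

$\Sigma_i$ --- the covariance matrix of the i-th class ($|\Sigma_i|$ is its determinant)

$x$ --- the feature vector, and $d$ is its dimension.

$u_i$ and $\Sigma_i$ are calculated from the training samples belonging to the category $w_i$:

$$u_i = \frac{1}{N_i} \sum_{j=1}^{N_i} x_j, \quad x_j \in w_i,$$

where $N_i$ is the number of training patterns from the class $w_i$. The covariance matrix is

$$\Sigma_i = \frac{1}{N_i} \sum_{j=1}^{N_i} (x_j - u_i)(x_j - u_i)^T$$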
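To make the formula concrete, the sketch below evaluates $P(x \mid w_i)$ directly. It is a minimal, dependency-free illustration: the helper names `inverse_and_det` and `gaussian_pdf` are hypothetical, and the inverse and determinant come from plain Gauss-Jordan elimination rather than from a numerical library. It raises an error for a singular covariance matrix instead of silently switching to a pseudo-inverse.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def inverse_and_det(a: Sequence[Sequence[float]]) -> Tuple[Matrix, float]:
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting.

    Returns (inverse, determinant). Raises ValueError if the matrix is singular.
    """
    n = len(a)
    # Build the augmented matrix [A | I].
    m = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(a)]
    det = 1.0
    for col in range(n):
        # Partial pivoting: use the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det                       # a row swap flips the sign
        pivot_value = m[col][col]
        det *= pivot_value                   # determinant = signed product of pivots
        m[col] = [v / pivot_value for v in m[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [row[n:] for row in m], det


def gaussian_pdf(x: Sequence[float], mean: Sequence[float],
                 cov: Sequence[Sequence[float]]) -> float:
    """P(x | w_i) for a d-dimensional Gaussian with mean u_i and covariance Sigma_i."""
    d = len(x)
    inv, det = inverse_and_det(cov)
    diff = [xi - ui for xi, ui in zip(x, mean)]
    # Quadratic form (x - u_i)^T Sigma_i^{-1} (x - u_i).
    quad = sum(diff[r] * inv[r][c] * diff[c] for r in range(d) for c in range(d))
    return math.exp(-0.5 * quad) / math.sqrt((2 * math.pi) ** d * det)
```

As a sanity check, a 2-D Gaussian with zero mean and identity covariance evaluated at its mean gives $1/(2\pi)$.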

The following example shows how to calculate the parameters of the Gaussian probability density of the class w1:

Assume:

w1 : image1, image2, image3

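After extracting primitive features from the images, suppose the feature vectors are

$$x_1 = \begin{pmatrix}2\\1\\3\end{pmatrix} (\text{image1}), \quad x_2 = \begin{pmatrix}3\\3\\4\end{pmatrix} (\text{image2}), \quad x_3 = \begin{pmatrix}1\\1\\2\end{pmatrix} (\text{image3})$$

The mean vector is

$$u_1 = \frac{1}{3}\left\{\begin{pmatrix}2\\1\\3\end{pmatrix} + \begin{pmatrix}3\\3\\4\end{pmatrix} + \begin{pmatrix}1\\1\\2\end{pmatrix}\right\} = \frac{1}{3}\begin{pmatrix}6\\5\\9\end{pmatrix} = \begin{pmatrix}2\\5/3\\3\end{pmatrix}$$

With $x_1 - u_1 = (0, -2/3, 0)^T$, $x_2 - u_1 = (1, 4/3, 1)^T$ and $x_3 - u_1 = (-1, -2/3, -1)^T$, the covariance matrix is

$$\Sigma_1 = \frac{1}{3}\sum_{j=1}^{3}(x_j - u_1)(x_j - u_1)^T = \frac{1}{3}\left\{\begin{pmatrix}0&0&0\\0&4/9&0\\0&0&0\end{pmatrix} + \begin{pmatrix}1&4/3&1\\4/3&16/9&4/3\\1&4/3&1\end{pmatrix} + \begin{pmatrix}1&2/3&1\\2/3&4/9&2/3\\1&2/3&1\end{pmatrix}\right\} = \begin{pmatrix}2/3&2/3&2/3\\2/3&8/9&2/3\\2/3&2/3&2/3\end{pmatrix}$$

The Gaussian function also needs $\Sigma_1^{-1}$. The formula for inverting a matrix is not repeated here (see any linear algebra textbook), and you do not need the calculation details: use the MATLAB function pinv(x) to compute the (pseudo-)inverse of x. In this example the first and third rows of $\Sigma_1$ are identical, so $\Sigma_1$ is singular and has no ordinary inverse (its determinant is 0 as well, so the normalising factor of $P(x \mid w_1)$ cannot be evaluated directly). pinv returns the Moore-Penrose pseudo-inverse:

$$\operatorname{pinv}(\Sigma_1) = \begin{pmatrix}3/2&-9/4&3/2\\-9/4&9/2&-9/4\\3/2&-9/4&3/2\end{pmatrix}$$

This situation is common in practice: whenever the number of training samples $N_i$ is not larger than the feature dimension $d$, $\Sigma_i$ has rank at most $N_i - 1$ and is therefore singular.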
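The arithmetic of this example can be checked exactly with Python's `fractions` module. This sketch recomputes u1 and Σ1 from the three feature vectors (2, 1, 3), (3, 3, 4) and (1, 1, 2), then tests a candidate pseudo-inverse `P` against the four Moore-Penrose conditions (AXA = A, XAX = X, and both AX and XA symmetric). The helper names are illustrative only.

```python
from fractions import Fraction as F
from typing import List, Sequence

Matrix = List[List[F]]


def mean_vector(samples: Sequence[Sequence[F]]) -> List[F]:
    """u_i = (1 / N_i) * sum of the training vectors x_j of class w_i."""
    n = len(samples)
    return [sum(column, F(0)) / n for column in zip(*samples)]


def covariance(samples: Sequence[Sequence[F]], mean: Sequence[F]) -> Matrix:
    """Sigma_i = (1 / N_i) * sum_j (x_j - u_i)(x_j - u_i)^T."""
    n, d = len(samples), len(mean)
    cov = [[F(0)] * d for _ in range(d)]
    for x in samples:
        diff = [xi - ui for xi, ui in zip(x, mean)]
        for r in range(d):
            for c in range(d):
                cov[r][c] += diff[r] * diff[c] / n
    return cov


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum((a[i][k] * b[k][j] for k in range(len(b))), F(0))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def is_pseudo_inverse(a: Matrix, x: Matrix) -> bool:
    """Check the four Moore-Penrose conditions for x = pinv(a)."""
    ax, xa = matmul(a, x), matmul(x, a)
    return (matmul(ax, a) == a and matmul(xa, x) == x
            and transpose(ax) == ax and transpose(xa) == xa)


# Feature vectors of image1, image2 and image3 (class w1).
w1 = [[F(2), F(1), F(3)], [F(3), F(3), F(4)], [F(1), F(1), F(2)]]
u1 = mean_vector(w1)
sigma1 = covariance(w1, u1)

# Candidate pseudo-inverse of sigma1.
P = [[F(3, 2), F(-9, 4), F(3, 2)],
     [F(-9, 4), F(9, 2), F(-9, 4)],
     [F(3, 2), F(-9, 4), F(3, 2)]]
pseudo_ok = is_pseudo_inverse(sigma1, P)
```

Because the Moore-Penrose pseudo-inverse is unique, a matrix that passes all four conditions is what `pinv` should return, up to floating-point rounding.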

2. Testing stage

2.1 Theoretical inference

$$P(x, w_i) = P(x)\,P(w_i \mid x) = P(w_i)\,P(x \mid w_i)$$

$$P(w_i \mid x) = \frac{P(w_i)\,P(x \mid w_i)}{P(x)}$$

To simplify the problem, we treat $P(x)$ and $P(w_i)$ as constants: $P(x)$ does not depend on the class, and we assume that every class has the same prior $P(w_i)$. Then

$$P(w_i \mid x) = \alpha\,P(x \mid w_i),$$

where $\alpha$ is a constant chosen to make sure that

$$\sum_i P(w_i \mid x) = 1.$$
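The normalisation by α can be sketched as follows. This is a minimal illustration, assuming the categories are exhaustive so that $P(x) = \sum_i P(w_i)\,P(x \mid w_i)$; the function name `posteriors` and the optional `priors` argument are hypothetical. With no priors given it applies the equal-prior simplification above.

```python
from typing import List, Optional, Sequence


def posteriors(likelihoods: Sequence[float],
               priors: Optional[Sequence[float]] = None) -> List[float]:
    """Turn class likelihoods P(x | w_i) into posteriors P(w_i | x) via Bayes' rule."""
    if priors is None:
        # Equal priors: P(w_i | x) = alpha * P(x | w_i).
        priors = [1.0 / len(likelihoods)] * len(likelihoods)
    joint = [p * lik for p, lik in zip(priors, likelihoods)]  # P(x, w_i) = P(w_i) P(x | w_i)
    evidence = sum(joint)                                     # P(x), the same for every class
    return [j / evidence for j in joint]
```

For likelihoods 0.2 and 0.6 with equal priors, the posteriors are 0.25 and 0.75.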

2.2 Classify an unknown sample

An example:

After the training stage, we have one Gaussian function for category w1, $P(x \mid w_1)$, and another for category w2, $P(x \mid w_2)$. From the theoretical inference above, we obtain the posterior probabilities $P(w_1 \mid x)$ and $P(w_2 \mid x)$.

If $P(w_1 \mid x) > P(w_2 \mid x)$, assign x to w1; otherwise assign x to w2.
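The decision rule can be sketched for a one-dimensional feature, where the Gaussian function reduces to $\exp\{-(x-u_i)^2/(2\sigma_i^2)\}/\sqrt{2\pi\sigma_i^2}$. This is an illustration only: the (mean, variance) pairs in `params` are made-up values standing in for trained parameters, and equal priors are assumed as in 2.1.

```python
import math
from typing import Dict, Tuple


def gaussian_1d(x: float, mean: float, var: float) -> float:
    """One-dimensional P(x | w_i) with mean u_i and variance var (d = 1)."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)


def classify(x: float, params: Dict[str, Tuple[float, float]]) -> str:
    """Assign x to the class with the largest posterior, assuming equal priors.

    Note: far from every mean the likelihoods can underflow to 0; working with
    log-likelihoods avoids that, but is omitted here for clarity.
    """
    likelihoods = {label: gaussian_1d(x, m, v) for label, (m, v) in params.items()}
    alpha = 1.0 / sum(likelihoods.values())     # makes the posteriors sum to 1
    posterior = {label: alpha * p for label, p in likelihoods.items()}
    return max(posterior, key=posterior.get)


# Hypothetical trained parameters: (mean, variance) for each class.
params = {"w1": (0.0, 1.0), "w2": (3.0, 1.0)}
label = classify(0.5, params)
```

With equal variances the boundary lies halfway between the means (1.5 here), so 0.5 is assigned to w1 and 2.0 to w2.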

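3. Content-based image retrieval

I recommend starting with the simplest distance function, such as the Euclidean distance between feature vectors, to calculate the distance between the query example and each image in your image database. Then rank the images by distance, with the smallest distance (the most similar image) first.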
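A minimal ranking sketch, assuming each database image has already been reduced to a feature vector (for example the colour histogram from 1.3) and that Euclidean distance is the chosen measure; the function names and image names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_database(query: Sequence[float],
                  database: Dict[str, Sequence[float]]) -> List[Tuple[str, float]]:
    """Return (image name, distance) pairs, most similar (smallest distance) first."""
    scored = [(name, euclidean(query, feature)) for name, feature in database.items()]
    return sorted(scored, key=lambda item: item[1])
```

For a query (0, 0) and a database {"img_a": (0, 0), "img_b": (3, 4), "img_c": (1, 0)}, the ranking is img_a (0.0), img_c (1.0), img_b (5.0).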



Project 2: Develop a system that uses a multiple classifier combination method to realize image classification

1. Design individual classifiers

Individual classifiers can be constructed from different feature spaces (colour, texture) [4] and different classification algorithms (K-Nearest Neighbours, Neural Networks, Gaussian classification, etc.).

For an introduction to Neural Networks, refer to the documents by Mr. Jifeng Wang, which accompany this tutorial.

For details about classifiers based on the Gaussian model, refer to Project 1.

Here I introduce the K-Nearest Neighbours algorithm.

1.1 An example of the K-Nearest Neighbours algorithm

Suppose we are given a training set of patterns $X = \{(x_1, y_1), (x_2, y_2), \ldots, (x_N, y_N)\}$, where $x_i$ is a feature vector and $y_i$ is the class label of $x_i$. Assume a 2-class classification problem.

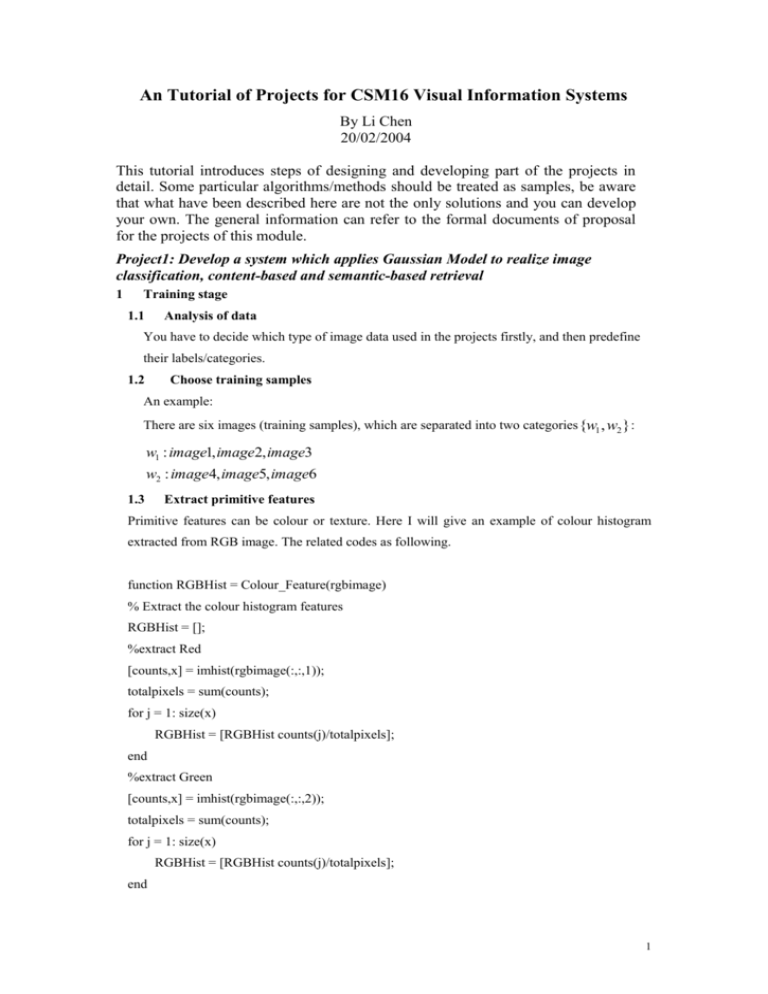

Circles denote w1 and squares denote w2; the crossed symbol is the input vector x. The value of K should be chosen in relation to the size N of the training set; here we suppose K = 4. Draw a circle centred on x that encloses the K = 4 nearest training samples. Three of these samples are from w1 and one is from w2, so x is assigned to w1.
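The procedure in the figure can be sketched in a few lines. This is a minimal sketch, assuming Euclidean distance (`math.dist`, Python 3.8+) and a training set stored as (feature vector, label) pairs; `knn_classify` is a hypothetical name. With K = 4 and two classes a 2:2 tie is possible; here it goes to the label met first among the nearest samples, which is only one of several reasonable rules.

```python
import math
from collections import Counter
from typing import Hashable, Sequence, Tuple


def knn_classify(x: Sequence[float],
                 training: Sequence[Tuple[Sequence[float], Hashable]],
                 k: int = 4) -> Hashable:
    """Assign x the majority label among its k nearest training samples."""
    # Sort training samples by distance to x and keep the k closest.
    nearest = sorted(training, key=lambda item: math.dist(x, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    # most_common keeps first-encountered order among equal counts.
    return votes.most_common(1)[0][0]
```

With three w1 samples near (1, 1) and three w2 samples around (5, 5), a query at (1.2, 1.3) finds three w1 and one w2 among its four nearest neighbours, as in the figure, and is assigned to w1.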


2. Combination strategies

Fixed rules for combining classifiers are discussed both theoretically and in practice in [5]. Xu et al. [3] divided the possible ways of fusing individual classifiers into three categories according to the level of information available from the classifiers: the abstract level (a single label), the rank level (a ranked list of labels) and the measurement level (a confidence value for each label). I give two algorithms as examples.

2.1 An example of the simplest majority voting algorithm

Assume there are 3 classifiers, each of which outputs a label for an unknown image. If two of them agree, we take their result as the final decision. For example, if classifier 1 says the image is A, classifier 2 says it is B, and classifier 3 says it is A, then A is the final decision. If the three classifiers output three different labels, you have to decide which one to trust using some additional rule.
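A minimal sketch of this voting scheme follows. The tie rule (fall back to the label of one designated classifier, by default the first) is only a placeholder assumption for the "additional rule" mentioned above; `majority_vote` and `fallback` are hypothetical names.

```python
from collections import Counter
from typing import Hashable, Sequence


def majority_vote(labels: Sequence[Hashable], fallback: int = 0) -> Hashable:
    """Return the label output by most classifiers.

    If no label has strictly more votes than every other (e.g. three different
    labels from three classifiers), return the label of classifier `fallback`.
    """
    counts = Counter(labels).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return labels[fallback]          # placeholder tie-breaking rule
    return counts[0][0]
```

For the example above, `majority_vote(["A", "B", "A"])` returns "A".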

2.2 The Borda count method


For this example, see also the notes from Dr. Tang's lecture.

Assume we have 4 categories {A, B, C, D} and 3 classifiers, each of which outputs a ranked list. When an unknown image x is input into the system, we obtain the following output:

| Rank value | Classifier 1 | Classifier 2 | Classifier 3 |
|---|---|---|---|
| 4 | C | A | B |
| 3 | B | B | A |
| 2 | D | D | C |
| 1 | A | C | D |

Rank values are the scores assigned to the different rank levels: 4 for the top-ranked category down to 1 for the lowest. Let $S_A^k$ denote the score that category A receives from classifier k.

The score of x belonging to category A is:

$$S_A = S_A^1 + S_A^2 + S_A^3 = 1 + 4 + 3 = 8$$

The score of x belonging to category B is:

$$S_B = S_B^1 + S_B^2 + S_B^3 = 3 + 3 + 4 = 10$$

The score of x belonging to category C is:

$$S_C = S_C^1 + S_C^2 + S_C^3 = 4 + 1 + 2 = 7$$

The score of x belonging to category D is:

$$S_D = S_D^1 + S_D^2 + S_D^3 = 2 + 2 + 1 = 5$$

The final decision is B because it obtains the highest score.
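The Borda count above can be sketched as follows, assuming each classifier's output is a list ordered best-first, so that with M categories the top entry scores M and the last scores 1; `borda_count` is a hypothetical name. The three lists encode the table in this example.

```python
from collections import defaultdict
from typing import Dict, Sequence


def borda_count(rank_lists: Sequence[Sequence[str]]) -> Dict[str, int]:
    """Sum Borda scores over classifiers; each list is ordered best-first."""
    scores: Dict[str, int] = defaultdict(int)
    for ranking in rank_lists:
        m = len(ranking)
        for position, category in enumerate(ranking):
            scores[category] += m - position      # rank value: M, M - 1, ..., 1
    return dict(scores)


# Outputs of the three classifiers in the example, best first.
example = [["C", "B", "D", "A"],   # classifier 1
           ["A", "B", "D", "C"],   # classifier 2
           ["B", "A", "C", "D"]]   # classifier 3
scores = borda_count(example)
winner = max(scores, key=scores.get)
```

This reproduces the scores 8, 10, 7 and 5 for A, B, C and D, and selects B.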

References:

[3] L. Xu, A. Krzyżak and C. Y. Suen, “Methods of Combining Multiple Classifiers and Their Applications to Handwriting Recognition”, IEEE Transactions on Systems, Man, and Cybernetics, 22(3), 1992, pp. 418-435.

[4] B. S. Manjunath, J. R. Ohm, V. V. Vasudevan and A. Yamada, “Color and Texture Descriptors”, IEEE Transactions on Circuits and Systems for Video Technology, 11(6), June 2001, pp. 703-715.

[5] J. Kittler, M. Hatef, R. Duin and J. Matas, “On Combining Classifiers”, IEEE Transactions on Pattern Analysis and Machine Intelligence, 20(3), 1998, pp. 226-239.

[6] R. Duin, “The Combining Classifier: to Train or Not to Train”, in: R. Kasturi, D. Laurendeau, C. Suen (eds.), ICPR16, Proceedings 16th International Conference on Pattern Recognition (Quebec City, Canada, Aug. 11-15), vol. II, IEEE Computer Society Press, Los Alamitos, 2002, pp. 765-770.
