

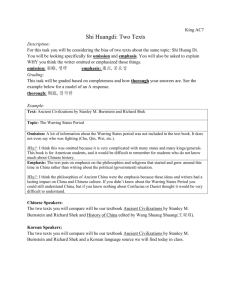

Final Recommendations
Quantitative assessment of the high-resolution soil sealing layer

EEA subvention 2007
Prepared by: G. Maucha and G. Büttner
03.12.2007
Version 1.0
Project 1.2.6: CORINE Land Cover update
EEA Project manager: Ana Sousa
ETC-LUSI Task manager: George Büttner

ETC Land Use and Spatial Information
Universitat Autònoma de Barcelona
Edifici C – Torre C5 4ª planta
08193 Bellaterra (Barcelona)
Spain
Contact: +34 93 581 3545
Fax: +34 93 581 3518
Administration mail: etcte@uab.es
http://terrestrial.eionet.europa.eu

European Topic Centre Land Use and Spatial Information

TABLE OF CONTENTS
1 Background
2 Basic considerations
2.1 Verification or validation?
2.2 Point sampling or cluster sampling?
2.3 Reference data for quality control
2.4 The controlling procedure
2.5 Understanding 85% accuracy
2.6 Error types
2.7 Stratification
3 Recommendations
4 Annexes
4.1 Statistical approach to accuracy assessment
4.2 Omission errors
4.3 Stratification

1 BACKGROUND
Based on requirements of the EC and other users, in March 2006 the EEA put forward a proposal to collaborate with the European Space Agency (ESA) and the European Commission (EC) on the implementation of a Fast Track Service Precursor on land monitoring, in line with the Communication from the Commission to the Council and the European Parliament “Global Monitoring for Environment and Security (GMES): From Concept to Reality” (COM(2005) 565 final).

The project builds on the benefits of GMES by combining the CORINE land cover (CLC) update with the production of additional high-resolution data for a selected number of land cover classes, such as those concerning built-up areas and forests. A standard CLC update alone is deemed insufficient to meet the wide range of user needs; this shortcoming can be addressed by creating complementary high-resolution land cover data for a selected number of classes.

ESA provides high-resolution satellite imagery (SPOT-4 and IRS LISS-3) for the project, in two time windows selected by the countries for the years 2006 ± 1. DLR has provided high-quality orthorectification (IMAGE2006). The producers of the high-resolution layers (the Service Providers) use the same imagery as the national CLC2006 teams.

This document is dedicated to the high-resolution built-up layer: a per-pixel classification of built-up and non-built-up areas (2 classes), together with an estimate of the imperviousness of the area, also called the degree of “soil sealing”. Built-up areas are characterized by the substitution of the original (semi-)natural cover or water surface with an artificial, often impervious, cover. This artificial cover is usually characterized by a long cover duration (FAO Land Cover Classification System, 2005).
Impervious surfaces of built-up areas account for 80 to 100% of the total cover. A per-pixel estimate of imperviousness (a continuous variable from 0 to 100 percent) will be provided as an index of the degree of soil sealing for the whole geographic coverage. The data will be produced at full spatial resolution, i.e. 20 m by 20 m, which provides the best possible core data for any further analysis. For the European product, the classification accuracy of built-up and non-built-up areas per hectare (based on a 100 m x 100 m grid) should be at least 85%.

Another high-resolution layer (a forest/non-forest mask) is being produced by the JRC; it is outside the scope of this document.

The purpose of this document is twofold: to clarify some basic issues of quality control, and to recommend a practical methodology for national teams to carry out a quantitative assessment of the national dataset (soil sealing layer). European-level quality control will build on the national validation.

2 BASIC CONSIDERATIONS

2.1 VERIFICATION OR VALIDATION?
There are two different kinds of quality control procedures:
Verification aims to enhance the quality of the product, so it has to be incorporated into the production process: after verification, production continues using the findings of the verification.
Validation is performed after the end of production, with the aim of assessing the overall accuracy of the database. The database should not be changed after validation.
If quality control is done by representative sampling (i.e. with a sufficient number of sampling points), it can be considered a validation if the 85% ± x% accuracy is reached (x, the uncertainty of the accuracy, should be specified!). If the database “fails”, it should be corrected and checked again.

2.2 POINT SAMPLING OR CLUSTER SAMPLING?
The basic way of quality control is to compare the high-resolution layer with an even higher-resolution layer (not used in the production) that has a similar acquisition date and a highly compatible nomenclature. The comparison can be based on sample points or on sample areas. Only point sampling is "statistically sound": we found hardly any literature on the reliability (known distribution, standard deviation, etc.) of an accuracy measure estimated by "cluster sampling". Point samples should be evenly distributed inside the examined area, either on a regular grid or by random sampling. "Blocky" sampling (using many samples only at locations where VHR data are available) drastically reduces the reliability of the accuracy measure.

2.3 REFERENCE DATA FOR QUALITY CONTROL
VHR imagery (aerial orthophotos or VHR satellite images taken around 2006) and topographic maps are the primary data for the quality control of HR layers. Most countries have orthophotos available, used for other environment-related projects. If no VHR imagery is available, GoogleEarth data can be used (warning: there is usually no information on the satellite image date!). In the worst case, Image2006 (used to derive the HR layers) can be used, but then the reliability of the results is much more limited. Using an existing thematic database is not recommended, because it may not be up to date and is already the result of an interpretation.

2.4 THE CONTROLLING PROCEDURE
To control HR layers (raster data), we have to visually compare the VHR imagery with each 100 x 100 m raster cell of the HR layer (as explained in the first chapter, the 85% accuracy value refers to the 100 x 100 m grid). If more than 80% of the cell is covered by a sealed surface, it should be considered a sealed cell.
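On the HR-layer side of the comparison, where per-pixel labels exist, the 80% rule can be applied exactly. The sketch below is a minimal illustration under an assumption the text does not state: each 100 x 100 m cell is taken to be the 5 x 5 block of 20 m pixels it contains, and the cell counts as sealed when more than 80% of those pixels are classified as built-up. The function `label_cells` and its nested-list 0/1 input are hypothetical.

```python
# Hypothetical sketch: aggregate a 20 m built-up mask into 100 m cell labels.
# Assumption (not from the source): a cell is "sealed" when more than 80% of
# its 5 x 5 = 25 pixels are classified as built-up.

PIXEL_SIZE_M = 20
CELL_SIZE_M = 100
BLOCK = CELL_SIZE_M // PIXEL_SIZE_M  # 5 pixels along each cell side
SEALED_PERCENT = 80                  # "more than 80% of the cell"


def label_cells(built_up):
    """Return a 2-D list of booleans (True = sealed 100 m cell).

    `built_up` is a 2-D list of 0/1 pixel labels at 20 m resolution.
    Trailing rows/columns that do not fill a complete 5 x 5 block are ignored.
    """
    n_rows = len(built_up) // BLOCK
    n_cols = len(built_up[0]) // BLOCK if built_up else 0
    cells = []
    for i in range(n_rows):
        row = []
        for j in range(n_cols):
            # Count built-up pixels inside the 5 x 5 block of this cell.
            count = sum(
                built_up[i * BLOCK + di][j * BLOCK + dj]
                for di in range(BLOCK)
                for dj in range(BLOCK)
            )
            # Integer comparison avoids floating-point issues at exactly 80%.
            row.append(count * 100 > SEALED_PERCENT * BLOCK * BLOCK)
        cells.append(row)
    return cells


# Two cells side by side: the left one is fully built-up (100%),
# the right one has 20 of 25 built-up pixels (exactly 80%, so not sealed).
example = [[1] * 10 for _ in range(4)] + [[1] * 5 + [0] * 5]
print(label_cells(example))  # [[True, False]]
```

Because the rule says "more than 80%", a cell at exactly 80% is labelled non-sealed here; a different tie rule would be an equally arbitrary choice.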
On the VHR imagery, of course, the exact percentage is never computed: the validating expert has to be able to estimate whether the actual sealed surface coverage in the given sampling cell is below or above 80%. Here again, as explained in the first chapter, an area is considered built-up if 80 to 100% of its total cover is sealed.

Figure 1: Illustration of a sealed (left) and a non-sealed (right) sample cell. Cell size: 100 x 100 meters

2.5 UNDERSTANDING 85% ACCURACY
85% accuracy is what the Service Providers have to reach (contractual clause) for the European product. What does 85% accuracy mean? How many samples do we need to assess this accuracy? According to statistical theory (see some details in Annex 4.1), 500 sample points are needed to test a classification with 85% ± 2% overall accuracy at the 90% significance level. This is the proposed sample number for estimating overall accuracy. Less uncertainty would need more samples, while larger uncertainty would need fewer samples (Table 1).

Table 1 Examples for optimising the sampling design at the 90% significance level.

Error interval* | Number of required samples | Maximum number of wrong samples | Maximum percentage of wrong samples
--- | --- | --- | ---
15% ± 1% | 2 000 | 300 | 15.0%
15% ± 2% | 500 | 74 | 14.8%
15% ± 3% | 230 | 33 | 14.3%
15% ± 4% | 125 | 18 | 14.4%
15% ± 5% | 80 | 11 | 13.8%

This estimate of the overall accuracy does not depend on the area of the country: the overall accuracy value estimates the proportion of errors inside the whole examined area (country or EU).
* Error intervals are approximate values, because the binomial distribution is not symmetrical and the numbers of required samples have also been rounded up.

Large error intervals (i.e. using very few samples) can have two consequences:
1. acceptance of databases whose accuracy is significantly lower than 85% (while the sample shows good accuracy);
2. rejection of databases whose accuracy is significantly higher than 85% (while the sample shows low accuracy).

Figure 2: Illustration of commission and omission errors. $P_{\text{commission}}$ = 15%, $P_{\text{omission}}$ = 15%; both errors are relative to the built-up area, which covers $P_{\text{class}}$ = 5% of the country.

2.6 ERROR TYPES
During quality control of HR layers we have to estimate two kinds of errors:
1. Pixels are classified as built-up, but the control shows that they are actually non-built-up. This is called commission error.
2. Pixels are not classified as built-up, but the control shows that they are actually built-up. This is called omission error.
Both kinds of errors should be examined to control the possible mistakes of classification. If either the commission or the omission error exceeds the required level (i.e. 15% + x%), the overall accuracy of the HR layer is considered not satisfactory.

2.6.1 Commission error
The commission error is defined as the proportion of the number of wrongly classified pixels ($K_{\text{commission}}$) relative to the number of all pixels classified as built-up ($N_{\text{class}}$):

$$P_{\text{commission}} = \frac{K_{\text{commission}}}{N_{\text{class}}}$$

The random sample pixels have to be selected only from pixels that were classified as built-up. The required number of samples is calculated as described for the estimation of overall accuracy (2.5).
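The commission-error estimate can be sketched in a few lines: draw a simple random sample of cells from those classified as built-up, record the blind VHR interpretation, and compute the share of wrongly classified cells. This is a minimal sketch under stated assumptions: cells are identified by hypothetical integer IDs, the interpretation results arrive as a list of booleans, and the acceptance number c = 74 for n = 500 is taken from Table 1.

```python
import random


def draw_sample(built_up_cell_ids, n=500, seed=None):
    """Simple random sample (without replacement) of cells classified as built-up."""
    rng = random.Random(seed)
    return rng.sample(list(built_up_cell_ids), n)


def commission_error(vhr_is_sealed):
    """Return (K_commission, estimated P_commission).

    `vhr_is_sealed` holds one boolean per sampled cell: True if the blind VHR
    interpretation confirms the cell is sealed, False if it is not (an error).
    """
    k = sum(1 for sealed in vhr_is_sealed if not sealed)
    return k, k / len(vhr_is_sealed)


def accept(k, c=74):
    """Acceptance rule for n = 500 samples: at most c = 74 wrong cells (Table 1)."""
    return k <= c


# Hypothetical example: 10 000 built-up cells, 500 sampled, 70 found not sealed.
sample = draw_sample(range(10_000), n=500, seed=1)
interpretation = [False] * 70 + [True] * 430
k, p = commission_error(interpretation)
print(len(sample), k, round(p, 3), accept(k))  # 500 70 0.14 True
```

Fixing `seed` makes the drawn sample reproducible, which helps when the same sample locations have to be handed to the interpreters and re-checked later.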
2.6.2 Omission errors
The omission error is defined as the proportion of the number of pixels erroneously NOT classified as built-up ($K_{\text{omission}}$), relative to the number of all pixels classified as built-up ($N_{\text{class}}$):

$$P_{\text{omission}} = \frac{K_{\text{omission}}}{N_{\text{class}}}$$

We would like to calculate the number of samples that have to be examined (n) and the maximum number of wrong samples allowed (c) for an omission error of $P_{\text{omission}}$ = 15% ± 2%, to reach the 90% reliability level (as in the case of commission error). To find pixels affected by omission error, we have to examine pixels NOT belonging to the examined class (not classified as built-up). The estimation of omission errors can therefore be much more problematic: the built-up area covers only a small fraction of the whole (country) area, so the area to be examined is rather large (see Figure 2). The probability of finding omission errors ($P^{*}_{\text{omission}}$) therefore depends on the coverage of the examined class ($P_{\text{class}}$) (see details in Annex 4.2):

$$P^{*}_{\text{omission}} = P_{\text{omission}} \cdot \frac{P_{\text{class}}}{1 - P_{\text{class}}}$$

In Europe, built-up area coverage is typically between 1.5% and 7%. Estimating the maximal 15% omission error with 2% accuracy would require a very large number of sample points in most cases, which would increase the work and costs dramatically. Reducing the number of samples, however, increases the uncertainty in the estimation of omission errors. Therefore a more relaxed sampling design is proposed, sometimes yielding different reliabilities for omission and commission errors. Table 2 presents examples of possible sampling designs, calculated for different $P_{\text{class}}$ values.

Table 2 Examples for optimising the sampling design to estimate the omission error at different $P_{\text{class}}$ values at the 90% significance level.
$P_{\text{class}}$ | $P^{*}_{\text{omission}}$ ($P_{\text{omission}}$ = 15%) | Number of required samples to reach 15% ± 2% reliability | Reliability of estimation of omission error (N = 2000 samples)
--- | --- | --- | ---
50% | 15.00% | 500 | 15% ± 1.0%
40% | 10.00% | 800 | 15% ± 1.3%
30% | 6.43% | 1 300 | 15% ± 1.6%
20% | 3.75% | 2 300 | 15% ± 2.2%
10% | 1.67% | 5 400 | 15% ± 3.3%
5% | 0.79% | 11 500 | 15% ± 4.8%
4% | 0.63% | 14 500 | 15% ± 5.2%
3% | 0.46% | 19 500 | 15% ± 6.1%
2% | 0.31% | 30 000 | 15% ± 7.5%
1% | 0.15% | 60 000 | 15% ± 10.5%

Considering quality control of HR layers, interpreting 500 + max. 2000 samples in each country seems to be a moderate task.

2.7 STRATIFICATION
For low $P_{\text{class}}$ values, the estimation potential can be improved (i.e. the number of required samples reduced) by stratification. Stratification means that we use independent (a priori) knowledge of land cover when planning the sampling design and calculating the required number and distribution of samples. Stratification, however, may cause confusion in calculating exact reliability levels if the independent land cover information is itself less accurate than the examined database. The recommended way of stratification is to exclude from the sampling those areas which have a very well-known land cover type, so that it is proven that the probability of omission error in these areas is close to zero.
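How much stratification can help is easiest to see numerically. The sketch below is a rough illustration under stated assumptions: it evaluates $P^{*}_{\text{omission}}$ with the exclusion term given in Annex 4.3 (with $P_{\text{excl}}$ = 0 it reduces to the relation in 2.6.2), and it approximates the required sample size with a normal approximation of the acceptance-sampling design in Annex 4.1 (accept a 13% error, reject a 17% error, each with 90% reliability, both rescaled to $P^{*}$). This approximation is not necessarily the exact binomial calculation behind Table 2, but it lands within a few percent of the table's rounded sample sizes. The 60% exclusion share in the last line is a hypothetical value.

```python
from math import sqrt
from statistics import NormalDist


def p_star(p_omission, p_class, p_excl=0.0):
    """Share of omission-error pixels among the pixels that are sampled:
    neither classified as built-up nor excluded by stratification."""
    return p_omission * p_class / (1 - p_excl - p_class)


def approx_samples(p_class, p_excl=0.0, p_omission=0.15, tol=0.02, reliability=0.90):
    """Approximate n for estimating P_omission = 15% +/- 2% (assumed normal approximation)."""
    z = NormalDist().inv_cdf(reliability)           # one-sided quantile, about 1.2816
    p1 = p_star(p_omission - tol, p_class, p_excl)  # 13% error: should be accepted
    p2 = p_star(p_omission + tol, p_class, p_excl)  # 17% error: should be rejected
    spread = z * (sqrt(p1 * (1 - p1)) + sqrt(p2 * (1 - p2)))
    return (spread / (p2 - p1)) ** 2


# Without stratification (compare with Table 2) ...
for p_class in (0.50, 0.20, 0.05, 0.01):
    print(f"P_class {p_class:4.0%}: n ~ {approx_samples(p_class):7.0f}")

# ... and for P_class = 5% after excluding a (hypothetical) 60% of all pixels.
print(f"P_class   5%, P_excl 60%: n ~ {approx_samples(0.05, p_excl=0.60):7.0f}")
```

Excluding well-known non-built-up areas raises $P^{*}$, so fewer samples are needed for the same ±2% interval; the catch, as noted above, is that the excluded areas must really carry (almost) no omission error.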
We can calculate the proportion (probability of finding) of omission error within the remaining pixels (i.e. pixels that belong neither to the sealed class nor to the excluded areas):

$$P^{*}_{\text{omission}} = P_{\text{omission}} \cdot \frac{P_{\text{class}}}{1 - P_{\text{excl}} - P_{\text{class}}}$$

where $P_{\text{excl}}$ is the proportion of the number of excluded pixels relative to the total number of pixels:

$$P_{\text{excl}} = \frac{N_{\text{excl}}}{N_{\text{all}}}$$

3 RECOMMENDATIONS
The following list of actions is proposed for the quantitative assessment of the high-resolution soil sealing layer at national level:
1. Prepare a vector layer containing randomly distributed 100 x 100 meter cells, based on the HR layer as follows: 500 samples are distributed inside the sealed area to estimate commission error, and another set of samples (maximum 2000) is distributed outside the sealed area to estimate omission errors.
2. Compare the vector file containing the sample locations with the available VHR imagery. The interpretation will be “blind”, i.e. carried out without access to the HR product. All sampling cells should be evaluated and qualified (sealed / not sealed).
3. Compare the cells with the HR database and calculate the commission error (according to 2.6.1) and the omission error (according to 2.6.2).
4. If the commission error is smaller than 15% + 2% and the omission error is smaller than 15% + x% (x depending on the proportion of the sealed class, see Table 2), then the accuracy of the HR database has reached the target 85% value in the country.
5. If either the commission error is greater than 15% + 2% or the omission error is greater than 15% + x% (x depending on the proportion of the sealed class), then the accuracy of the HR database has not reached the target 85% value in the country, and the HR database should be corrected.
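Steps 3 to 5 can be condensed into a small decision function. The sketch below is illustrative only: the function names are hypothetical, it follows the wording of steps 4 and 5 literally (limits of 15% + 2% for commission and 15% + x% for omission), it converts the share of omission errors found among the sampled non-built-up cells back to $P_{\text{omission}}$ by inverting the relation from 2.6.2 (with the optional exclusion term from 2.7), and it expects x as an input, e.g. read from the last column of Table 2 for the country's $P_{\text{class}}$.

```python
def omission_from_sample(k_found, n_samples, p_class, p_excl=0.0):
    """Convert the share of omission errors found outside the sealed
    (and, if stratified, outside the excluded) area back to P_omission."""
    p_star_hat = k_found / n_samples
    return p_star_hat * (1 - p_excl - p_class) / p_class


def reaches_target(p_commission, p_omission, x_omission,
                   target=0.15, x_commission=0.02):
    """Steps 4-5: True if both errors are below their limits; otherwise the
    HR database should be corrected."""
    return (p_commission < target + x_commission
            and p_omission < target + x_omission)


# Hypothetical country with P_class = 5%: 70 of 500 commission samples wrong,
# 16 of 2000 omission samples wrong; x = 4.8% taken from Table 2 for 5%.
p_com = 70 / 500
p_om = omission_from_sample(16, 2000, p_class=0.05)
print(round(p_com, 3), round(p_om, 3), reaches_target(p_com, p_om, x_omission=0.048))
# 0.14 0.152 True
```

If the stricter acceptance number from Annex 4.1 is preferred for the commission check (at most c = 74 wrong cells out of n = 500), compare the raw count of wrong cells instead of the share.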
4 ANNEXES

4.1 STATISTICAL APPROACH TO ACCURACY ASSESSMENT
According to statistical theory, we can say with 100% reliability that accuracy is e.g. 85% only if we have checked all pixels and exactly 15% of them are wrong. When sampling instead of checking all pixels, only the following question can be raised: we found c = 15 wrong pixels in a set of n = 100 samples; what is the probability L(p, n, c) that the true proportion of errors in the database is less than p = 15%? In other words: what is the probability that we accept a database which contains less than p = 15% wrong pixels?

Assuming that the number of all pixels N is much greater than the number of sample pixels n (N ≥ 10n), we can use the binomial distribution to answer the question:

$$L(p; n, c) = \sum_{k=0}^{c} \binom{n}{k} p^{k} (1-p)^{n-k}$$

If we checked all pixels and found that exactly 15% of them are wrong, we would accept with 100% probability all databases that contain 15% or fewer wrong pixels, and accept with 0% probability (i.e. reject) databases that contain more than 15% wrong pixels. This is represented as a yellow line in Figure 2.

Figure 2 The probability of accepting the database at different sample sizes. [Plot of L(p, n, c) for c = 15%: probability of acceptance (0 to 100%) against the true proportion of wrong pixels in the database, p (0 to 100%), for n = N, n = 20, n = 100 and n = 1000.]

Let us assume that we use 100 samples: if 15% of the samples (15 pixels) are wrong, the probability of accepting all databases containing less than 15% wrong pixels is only 56.8%.
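The acceptance probability $L(p; n, c)$ defined above can be evaluated directly. The following is a minimal Python sketch (the function name is hypothetical) of the binomial formula as written; it reproduces the 56.8% quoted for n = 100 and c = 15, the values read off the red line for 11.4% and 20.6%, and can be used to check the n = 500, c = 74 design described at the end of this annex.

```python
from math import comb


def acceptance_probability(p, n, c):
    """L(p; n, c): probability that at most c of n random samples are wrong
    when the true error share of the database is p (binomial model, N >= 10n)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(c + 1))


# Figure 2, red line: n = 100 samples, at most c = 15 wrong.
print(f"{acceptance_probability(0.15, 100, 15):.3f}")   # 0.568
print(f"{acceptance_probability(0.114, 100, 15):.2f}")  # 0.90
print(f"{acceptance_probability(0.206, 100, 15):.2f}")  # 0.10

# Design from the end of 4.1: n = 500, c = 74.
print(f"{acceptance_probability(0.13, 500, 74):.2f}")   # producer's interest: accept a 13% error database
print(f"{acceptance_probability(0.17, 500, 74):.2f}")   # user's interest: rarely accept a 17% error database
```

`math.comb` returns exact integers, so the only rounding comes from the floating-point powers; for the sample sizes used here that is well below the precision quoted in the text.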
As shown by the upper part of the red line in Figure 2, we can state with 90% probability (called the level of reliability / significance) that we accept all databases containing fewer than 11.4% wrong pixels. Looking at the lower part of the red line, we can read that we will accept with 10% probability (in other words, reject with 90% probability) all databases containing more than 20.6% errors.

We interpreted the 85% criterion as a mean accuracy, i.e. 85 ± 2%. Referring to the previous paragraph, this implies that with 90% reliability we would like to reject databases that contain more than 17% error (user's interest), and with 90% reliability we would like to accept databases that contain no more than 13% error (producer's interest). Using the binomial distribution, it can be calculated that these criteria are fulfilled if we check n = 500 samples and no more than c = 74 of the 500 randomly selected samples (14.8% of the samples) are wrong.

4.2 OMISSION ERRORS
What we can estimate directly via sampling is the proportion of pixels affected by omission error relative to ALL pixels classified as non-built-up. Let us denote this proportion (which also corresponds to the probability of finding these pixels) by $P^{*}_{\text{omission}}$:

$$P^{*}_{\text{omission}} = \frac{K_{\text{omission}}}{N_{\text{all}} - N_{\text{class}}}$$

The proportion of built-up area inside the whole (country) area is denoted by $P_{\text{class}}$, i.e. the number of built-up pixels divided by the number of all pixels:

$$P_{\text{class}} = \frac{N_{\text{class}}}{N_{\text{all}}}$$

Since $N_{\text{all}} - N_{\text{class}} = N_{\text{class}}\,(1 - P_{\text{class}})/P_{\text{class}}$, we can write:

$$P^{*}_{\text{omission}} = \frac{K_{\text{omission}}}{N_{\text{class}}\,(1 - P_{\text{class}})/P_{\text{class}}} = \frac{K_{\text{omission}}}{N_{\text{class}}} \cdot \frac{P_{\text{class}}}{1 - P_{\text{class}}}$$

or finally:

$$P^{*}_{\text{omission}} = P_{\text{omission}} \cdot \frac{P_{\text{class}}}{1 - P_{\text{class}}}$$

The above formula shows that if $P_{\text{class}}$ = 0.5 (50%), then $P^{*}_{\text{omission}} = P_{\text{omission}}$, i.e. the probability of finding pixels affected by omission error is the same as in the case of commission errors. If $P_{\text{class}}$ < 0.5 (50%), then $P^{*}_{\text{omission}} < P_{\text{omission}}$, i.e.
the probability of finding pixels affected by omission error within pixels NOT belonging to the examined class will be (much) lower than the probability of finding commission error within built-up pixels. This implies that at such low probabilities either the number of required samples would be extremely high to keep the 2% error interval, or we have to allow larger intervals for the estimation of omission error.

4.3 STRATIFICATION
Decreasing the number of non-built-up pixels to be examined can increase the probability of finding omission errors within non-built-up pixels. In other words, using a priori knowledge, we exclude from the examination pixels that certainly (or with very high probability) do not belong to the examined class. Then we can calculate the proportion (probability of finding) of omission error within the remaining pixels:

$$P^{*}_{\text{omission}} = P_{\text{omission}} \cdot \frac{P_{\text{class}}}{1 - P_{\text{excl}} - P_{\text{class}}}$$

where $P_{\text{excl}}$ is the proportion of the number of excluded pixels relative to the total number of pixels:

$$P_{\text{excl}} = \frac{N_{\text{excl}}}{N_{\text{all}}}$$

An effective method for selecting pixels to be excluded is to calculate vegetation indices from RS data and exclude pixels with high NDVI values (non-built-up areas). The CLC2006 database can also be used, but only with limitations: e.g. larger water surfaces can be excluded (some inward buffer is recommended). More complex stratification strategies (e.g. different sample densities for different CLC classes) may cause confusion in calculating exact reliability levels.
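The NDVI-based exclusion can be sketched as a simple per-pixel filter that yields both $P_{\text{excl}}$ and the remaining sampling frame for omission samples. Assumptions, none of which come from the text: red and near-infrared reflectances are given as flat lists aligned with the built-up mask, NDVI is computed as (NIR - Red) / (NIR + Red), and 0.5 is a purely illustrative threshold for "high" NDVI that would have to be chosen per scene. The function `stratify` is hypothetical.

```python
def ndvi(red, nir):
    """Normalised difference vegetation index of one pixel."""
    total = nir + red
    return (nir - red) / total if total else 0.0


def stratify(red_band, nir_band, built_up, threshold=0.5):
    """Split pixel indices for omission sampling.

    Returns (p_excl, frame): p_excl = N_excl / N_all, where excluded pixels are
    those NOT classified as built-up whose NDVI exceeds the (hypothetical)
    threshold; frame lists the remaining non-built-up pixels to sample from.
    """
    excluded, frame = 0, []
    for i, (r, n, b) in enumerate(zip(red_band, nir_band, built_up)):
        if b:
            continue                      # built-up pixels feed the commission sample
        if ndvi(r, n) > threshold:
            excluded += 1                 # "surely" non-built-up: left out of sampling
        else:
            frame.append(i)               # remaining candidates for omission samples
    return excluded / len(built_up), frame


# Toy example: two vegetated pixels, one bare-soil pixel, one built-up pixel.
red = [0.10, 0.10, 0.30, 0.20]
nir = [0.50, 0.40, 0.35, 0.20]
built = [False, False, False, True]
print(stratify(red, nir, built))  # (0.5, [2])
```

The returned $P_{\text{excl}}$ enters the formula above, and omission samples would then be drawn from `frame` only.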