Error Protection Coding


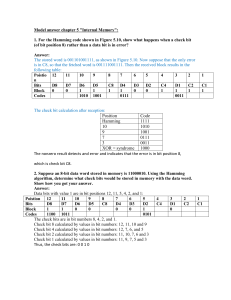

EETS 8316 DIGITAL TELEPHONY
SECTION # - VIDEO DISTANT 401
FALL 2003

ERROR PROTECTION CODING
BY JUAN M. QUIJADA
STUDENT ID (18760291)

ABSTRACT

This document provides a mathematical overview of the methods used to perform error protection coding in telecommunications systems. These methods are used to improve the reliability and performance of various communication systems. Error protection codes are applied to detect and correct corrupted bits or symbols and to recover from errors introduced by transmission impairments such as delay, attenuation, distortion, network congestion and interference, among others. Different codes, with their associated encoding and decoding processes, are normally used to satisfy different system requirements and applications. Often, a combination of methods is used to provide additional protection for the most significant data bits, which are critical to decoding the transmitted information. Error protection is divided into two major categories: error detection and error correction. Error detection, as its name indicates, can only detect errors in a received information frame. Error correction is subdivided into backward and forward error correction. Backward Error Correction (BEC) uses a technique known as Automatic Repeat Request (ARQ) to retransmit lost or damaged frames. Forward Error Correction (FEC), on the other hand, adds enough redundancy to the original information frame for the receiving device to correct errors without retransmission. Every form of error protection sacrifices available channel bandwidth in exchange for error detection and correction capability. This increases reliability but decreases system efficiency. The performance and applicability of each technique vary according to the type of error encountered.
Some of the factors to consider during the design process include error protection capability, complexity, allowable redundancy and system speed.

TABLE OF CONTENTS

1. INTRODUCTION
1.1 BACKGROUND
1.2 OBJECTIVE
2. ERROR PROTECTION CODING
2.1 ERROR DETECTION
2.1.1 Parity Check
2.1.2 Cyclic Redundancy Check
2.1.2.1 Modulo 2 Arithmetic
2.1.2.2 Polynomials
2.1.2.3 Digital Logic or Hardware
2.2 ERROR CORRECTION
2.2.1 Backward Error Correction
2.2.2 Forward Error Correction
2.2.2.1 Block Codes
2.2.2.1.1 Hamming Codes
2.2.2.1.2 Cyclic Codes
2.2.2.1.3 Bose-Chaudhuri-Hocquenghem (BCH) Codes
2.2.2.1.4 Reed-Solomon (RS) Code
2.2.2.2 Convolutional Codes
3. APPLICATIONS IN WIRELESS ENVIRONMENTS
3.1 WIRELESS NETWORKS
3.1.1 Advanced Mobile Phone Service (AMPS)
3.1.2 Digital-Advanced Mobile Phone Service (D-AMPS)
3.1.3 Global System for Mobile Communications (GSM)
3.1.4 Code Division Multiple Access (CDMA)
3.1.5 Mobitex
3.1.6 802.11 Wireless LAN (WLAN)
4. CONCLUSION

FIGURES

Figure 2.1 Error Protection Process Diagram
Figure 2.2 Digital Logic Overview for CRC Calculation
Figure 2.3 CRC Digital Logic Overview for a Given GP(X) Value
Figure 2.4 CRC Digital Logic Overview with 1011101 Input Sequence
Figure 2.5 ARQ Mechanism
Figure 2.6 Convolutional Encoding Process

TABLES

Table 2.1 Hamming Code Calculation with No Error
Table 2.2 Hamming Code Calculation with Single Bit Error
Table 2.3 Cyclic Code Error Patterns
Table 2.4 Convolutional Encoding Results for a 1011101 Input Pattern

APPENDICES

APPENDIX A. CRC Calculation Using Polynomials
APPENDIX B. CRC Calculation Using Digital Logic
APPENDIX C. Hamming Code Calculation
APPENDIX D. Cyclic Code Calculation
APPENDIX E. Convolutional Code Calculation

1. INTRODUCTION

1.1 Background

Error protection, or error control, methods have long been used to detect and correct errors during the transmission of data signals. In the past, analog transmission methods introduced more errors into the transmitted signals. Amplifiers added to the problem by also magnifying the noise coupled into the signals, producing stronger but noisier signals. Digital transmission methods solved many of these problems, although the probability of errors was not eliminated. Digital systems use discrete binary values to represent the information to be transmitted. A digital representation of information does not suffer from cumulative noise and is less susceptible to noise interference, which reduces the probability of errors.
There are several ways in which errors can be introduced into systems: attenuation, electrical noise, electromagnetic interference (crosstalk), echo, thermal noise, intermodulation distortion, delay distortion, fading, cable loss, equipment failure and environmental conditions (e.g., fog, flooding). To help cope with these factors, several error protection techniques have been developed to increase the probability of error detection and correction. However, these techniques require part of the total channel capacity to be used for error control. Additional redundant information is calculated during the encoding process and used at the receiver to analyze the received data for the presence of errors. Some of the techniques used to achieve this are discussed in this document. With these techniques, the decoding process evaluates the received data against a predetermined set of possible transmitted messages. The receiver does not know which of these messages was sent; it only needs to identify it from the predetermined set of allowable messages. The performance and applicability of each technique vary according to the type of error from which the system must recover. Wireless systems are more susceptible to errors than landline systems because environmental transmission impairments can affect and degrade a signal more severely. This is especially significant in large cities, which have an abundance of man-made electromagnetic noise and multipath problems.

1.2 Objective

The purpose of this paper is to provide an overview, from a mathematical perspective, of how error protection codes are used to improve data transmission and the reliability of communication systems. The goal in the design of telecommunications networks is to create reliable systems that continue to function correctly when something goes wrong.
Generally, the reliability of a system is improved through redundancy: additional information is used so that, if one piece of information is lost or corrupted, the system can detect and/or correct the error and continue to function.

2. ERROR PROTECTION CODING

Data is transmitted through a communication channel as sequences of bits called frames. Many conditions that can occur during the transmission of a message frame require some form of error protection. For instance, a frame can be lost during transmission and fail to arrive at the receiving end (lost frame). Alternatively, a frame may arrive at the receiving end but contain errors (damaged frame). In data communication systems, the probability that a frame arrives without bit errors decreases as the Bit Error Rate (BER) increases. The BER is the ratio of errored bits to the total number of bits transmitted. Assuming independent bit errors, a frame of N bits arrives error-free with probability $(1 - BER)^N$, so longer frames are more likely to be damaged. The probability of errors also increases with longer data frames or higher data rates. These factors must be kept in mind when designing transmission systems, and additional techniques are still needed to minimize the probability of errors. Error protection coding, or error control, is used to minimize errors and protect data from transmission impairments.

There are two categories of error control: error detection and error correction. Error detection focuses only on detecting errors. Error correction comprises two categories, backward and forward error correction. BEC combines error detection codes with the ARQ mechanism, correcting errors by retransmitting corrupted frames. FEC allows errors to be corrected at the receiving end without retransmission. BEC is normally the preferred method in wired digital data systems, where errors are less likely to occur and where retransmission of corrupted information can be tolerated without major loss of intelligibility.
BEC, however, is not suitable for some wireless environments such as satellite or mobile communications. FEC is mostly used in systems where retransmission cannot be tolerated or afforded, such as deep space exploration, military applications and certain wireless communications (e.g., satellite and mobile telephony). There are two types of FEC codes: block codes and convolutional codes. Block codes encode information in blocks, and each block is decoded using only the current message. Convolutional codes encode information as a continuous stream, and the decoding process relies on memory of previously received information.

Each error protection technique has advantages and disadvantages, which are explained in more detail in later subsections. In every case, the method unavoidably uses available bandwidth to carry the extra redundant bits needed to detect and correct errors. For a fixed channel bandwidth, this reduces the number of information bits that can be transmitted at a time. Error detection codes use bandwidth to carry the additional check bits needed to detect errors, BEC uses it to retransmit erroneous frames, and FEC uses it to carry enough redundant bits to correct errors without retransmission. Figure 2.1 shows a high-level diagram of the process used to achieve error protection in a given system.

[Figure 2.1 Error Protection Process Diagram: check bits (r) are calculated from the data bits (k) to form a codeword (n = k + r) that is sent over the communication channel; at the receiver, the check bits are recalculated from the received information bits and compared, and the k data bits are accepted if the check bits match.]

2.1 Error Detection

As explained earlier, error detection indicates the presence of errors in a transmitted information frame. One drawback of this method is that it can detect errors but cannot correct the erroneous bits.
This is because it uses far fewer redundant bits than error correction codes. These extra bits, known as the error detecting code or redundant bits (r bits), are calculated from the original k data bits and appended to them to form the encoded frame (n = k + r). At the receiving end, the original k data bits are separated from the r check bits, the check bits are recalculated from the received data bits, and the result is compared with the received check bits. If the two codes differ, an error has been introduced and detected. Error detection techniques are commonly used in data link layer protocols such as High-Level Data Link Control (HDLC). Detected errors can then be corrected through ARQ or through higher layer protocols such as the Transmission Control Protocol (TCP). Resolving detected errors in this way implies a longer recovery time because of the retransmission process.

2.1.1 Parity Check

The parity check code is the simplest method for implementing error detection. Consider a k-bit data frame that is transmitted as a single block. The parity check adds one extra bit, called the parity bit, to the information frame. The value of the parity bit depends on whether odd or even parity is required. For even parity, the parity bit is set to 1 or 0 so that the total number of 1s in the frame, including the parity bit, is even. For odd parity, the parity bit is set so that the total number of 1s is odd. This technique can reliably detect only a single bit error (more generally, any odd number of bit errors). A parity bit is therefore not very reliable, since an error is detected only when the parity at the receiving end does not match the expected parity (odd or even). When such a mismatch occurs, a condition known as a parity alarm is reported. An error goes undetected if an even number of bits is altered, because the parity then remains the same. For this reason, the parity bit is hardly used for data transmission anymore. However, the concept of parity checks is used by other techniques discussed later.
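The parity rules above, and the reason an even number of errors slips through, can be illustrated with a minimal Python sketch. The function names are hypothetical, and appending the parity bit at the end of the frame is an assumption made for illustration; the text does not fix its position.

```python
def parity_bit(data: str, even: bool = True) -> str:
    """Return the parity bit that makes the total number of 1s even (or odd)."""
    bit = data.count("1") % 2   # 1 when the data holds an odd number of 1s
    if not even:
        bit ^= 1                # odd parity is the complement of even parity
    return str(bit)


def parity_ok(frame: str, even: bool = True) -> bool:
    """Check a received frame (data plus parity bit) against the expected parity."""
    return frame.count("1") % 2 == (0 if even else 1)


def flip(frame: str, *positions: int) -> str:
    """Return a copy of the frame with the given bit indices inverted."""
    bits = list(frame)
    for i in positions:
        bits[i] = "1" if bits[i] == "0" else "0"
    return "".join(bits)


data = "1010011"                     # 7-bit ASCII code for the letter S
frame = data + parity_bit(data)      # even parity bit appended (assumed position)
print(frame)                         # 10100110
print(parity_ok(frame))              # True: no error
print(parity_ok(flip(frame, 0)))     # False: single bit error detected
print(parity_ok(flip(frame, 0, 1)))  # True: double bit error goes undetected
```

The last line shows the weakness described above: two inverted bits leave the count of 1s even, so the receiver raises no parity alarm.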
“The likelihood of detecting errors using parity bits is about 65 percent” [BATE, 01]. Hamming codes represent a more robust form of parity check block coding.

One application of parity checking is inside digital computers, where errors rarely occur. The technique was also used to detect errors in the transmission of 7-bit ASCII characters. Today, the parity bit has largely been replaced by more powerful error detection methods such as the cyclic redundancy check (CRC).

2.1.2 Cyclic Redundancy Check

The CRC is one of the most widely used and most powerful error detection techniques. It calculates r redundant bits, which the encoder appends to the original data as a field known as the Frame Check Sequence (FCS). The length of the FCS is chosen according to the desired probability of error detection. The CRC calculation is performed at the data link layer of the Open Systems Interconnection (OSI) reference model and is commonly used with HDLC-based protocols to detect errored frames. HDLC-derived protocols use either a 16-bit or a 32-bit FCS, depending on the application. This method can detect any burst error whose length does not exceed the CRC length. To correct errors, a technique known as ARQ can be employed to retransmit the entire information frame. “The likelihood of detecting errors using this approach is 99.99995 percent” [BATE, 01]. The CRC calculation relies on the procedures described below.

2.1.2.1 Modulo 2 Arithmetic

Modulo 2 (mod 2) arithmetic can be used to perform addition, subtraction, multiplication and division of binary numbers. Modulo 2 addition and subtraction are both performed with a bitwise Exclusive-OR (XOR) operation, with no carries or borrows, in the following manner:
Addition: 1100 + 1010 = 0110
Subtraction: 1100 - 1010 = 0110

Multiplication and division provide the following results:

Multiplication: 1 x 1 = 1, 1 x 0 = 0, 0 x 1 = 0, 0 x 0 = 0
Division: 1 / 1 = 1, 0 / 1 = 0; division by 0 (1 / 0 and 0 / 0) is undefined

2.1.2.2 Polynomials

Before proceeding with the polynomial calculations, it is important to understand a useful concept in data transmission: bit ordering. The way binary information is stored and processed inside computer registers is called the versus space representation. In most systems, this representation places the Least Significant Bit (LSB) at the rightmost register location. If the register is emptied by right shifts, the LSB is the first bit transmitted out of the register. Most data transmission systems send the LSB first. For example, the ASCII code for the letter S is 1010011, which is how it appears inside the register (versus space). During transmission, the same code is seen reversed as 1100101, which is known as the order of transmission or versus time representation (LSB in the leftmost position). Since CRC calculations are used to detect transmission errors, versus time bit ordering is used in this example.

One way to perform the CRC calculation is to represent the binary values of the data bits (k), check bits (r) and encoded frame (n) as polynomials in a variable X. Let us define the following variables:

DP(X) = k-bit input data polynomial
NP(X) = n-bit encoded polynomial (codeword)
CP(X) = r-bit check code or CRC polynomial (remainder polynomial)
GP(X) = generator polynomial (dividing pattern)

To obtain an r-bit CRC, the data polynomial DP(X) is multiplied by $X^r$. This has the effect of appending r 0s to the end of the data polynomial, which reserves the space where the resulting CRC is inserted to form the NP(X) codeword. For error-free transmission, NP(X) has to be exactly divisible by GP(X).
Therefore, the CRC field is calculated by dividing $X^r DP(X)$ by $GP(X)$:

$$\frac{X^r DP(X)}{GP(X)} = Q(X) + \frac{RP(X)}{GP(X)} = Q(X) + \frac{CP(X)}{GP(X)} \tag{2.1}$$

where $Q(X)$ is the resulting quotient and $RP(X)$ is the remainder, which gives the value of $CP(X)$, the redundant error detecting code. This means that the transmitted frame is:

$$NP(X) = X^r DP(X) + CP(X) \tag{2.2}$$

At the receiving end, the CRC recalculation should leave no remainder when the received frame is divided by the generator polynomial (that is, the remainder of $NP(X)/GP(X)$ is 0). This indicates that no errors were introduced during transmission.

$$\frac{NP(X)}{GP(X)} = \frac{X^r DP(X) + CP(X)}{GP(X)} = \frac{X^r DP(X)}{GP(X)} + \frac{CP(X)}{GP(X)} \tag{2.3}$$

Substituting equation 2.1 into 2.3 yields:

$$\frac{NP(X)}{GP(X)} = \left[Q(X) + \frac{CP(X)}{GP(X)}\right] + \frac{CP_R(X)}{GP(X)} = Q(X) \tag{2.4}$$

where $CP_R(X)$ is the CRC value recalculated at the receiver. If no error occurs, $CP(X) = CP_R(X)$, so the modulo 2 sum $CP(X) + CP_R(X)$ equals zero and only the quotient $Q(X)$ remains.

As an example, consider $DP(X) = X^6 + X^4 + X^3 + X^2 + 1$, $GP(X) = X^4 + X + 1$ and a desired 4-bit CRC. The CRC value is calculated as follows. First, expand the polynomials to show all coefficients of X:

$$DP(X) = 1X^6 + 0X^5 + 1X^4 + 1X^3 + 1X^2 + 0X^1 + 1X^0 \tag{2.5}$$

$$GP(X) = 1X^4 + 0X^3 + 0X^2 + 1X^1 + 1X^0 \tag{2.6}$$

Now multiply $DP(X)$ by $X^4$:

$$X^4 DP(X) = X^4(1X^6 + 0X^5 + 1X^4 + 1X^3 + 1X^2 + 0X^1 + 1X^0) = 1X^{10} + 0X^9 + 1X^8 + 1X^7 + 1X^6 + 0X^5 + 1X^4 + 0X^3 + 0X^2 + 0X^1 + 0X^0 \tag{2.7}$$

Finally, dividing $X^4 DP(X)$ by $GP(X)$ with modulo 2 arithmetic yields the CRC value. From Appendix A, part A.1, the remainder $0X^3 + 1X^2 + 1X^1 + 0X^0$ constitutes the CRC value. To simplify these calculations, the powers of X can be removed from equations 2.1 through 2.7, allowing the quantities to be expressed as binary sequences.
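Once each polynomial is written as a bit string, the modulo 2 division above can be mechanized. The following minimal Python sketch (the helper names `mod2_div` and `crc` are hypothetical) performs long division with XOR in place of subtraction, appends r zeros to represent multiplication by $X^r$, and then checks the codeword and a corrupted copy as a receiver would. It assumes the versus time bit ordering used in the example and is an illustration rather than the author's procedure.

```python
def mod2_div(dividend: str, divisor: str) -> tuple[str, str]:
    """Modulo 2 long division of bit strings; returns (quotient, remainder)."""
    r = len(divisor) - 1
    rem = list(dividend)
    quotient = []
    for i in range(len(dividend) - r):
        if rem[i] == "1":
            quotient.append("1")
            # Subtract (XOR) the divisor aligned under the current leading bit.
            for j, g in enumerate(divisor):
                rem[i + j] = "0" if rem[i + j] == g else "1"
        else:
            quotient.append("0")
    return "".join(quotient), "".join(rem[-r:])


def crc(data: str, generator: str) -> str:
    """CRC check bits: remainder of (data followed by r zeros) / generator."""
    r = len(generator) - 1
    _, remainder = mod2_div(data + "0" * r, generator)
    return remainder


DP, GP = "1011101", "10011"        # X^6+X^4+X^3+X^2+1 and X^4+X+1
CP = crc(DP, GP)
NP = DP + CP                       # codeword: DP shifted by r bits, plus CP
print(CP)                          # 0110
print(NP)                          # 10111010110
print(mod2_div(NP, GP))            # ('1010010', '0000'): no error detected

received = NP[:3] + "0" + NP[4:]   # invert one bit of the codeword
print(mod2_div(received, GP)[1])   # 1011: nonzero remainder, error detected
```

Inverting that single bit adds $X^7$ to the codeword, so the receiver's remainder becomes $X^7 \bmod GP(X) = X^3 + X + 1$ (binary 1011) instead of zero.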
With the powers of X removed, equations 2.1 through 2.7 become:

$$\frac{2^r DP}{GP} = Q + \frac{RP}{GP} = Q + \frac{CP}{GP} \tag{2.8}$$

$$NP = 2^r DP + CP \tag{2.9}$$

$$\frac{NP}{GP} = \frac{2^r DP + CP}{GP} = \frac{2^r DP}{GP} + \frac{CP}{GP} \tag{2.10}$$

$$\frac{NP}{GP} = \left(Q + \frac{CP}{GP}\right) + \frac{CP_R}{GP} = Q \tag{2.11}$$

$$DP = 1011101 \tag{2.12}$$

$$GP = 10011$$

$$2^4 DP = 10111010000$$

The CRC can again be obtained by dividing $2^4 DP$ by GP, which gives the result shown in Appendix A, part A.2. Using equation 2.9, NP equals 10111010000 ($2^4 DP$) plus the CRC; adding the CRC value 0110 gives NP = 10111010110, the binary pattern of the encoded codeword. At the receiving end, the equipment receives the incoming pattern and recalculates the CRC by performing the calculations in Appendix A, part A.3. The zero remainder indicates that no error was detected. In the case of an error, this calculation produces a nonzero value. Suppose NP is changed to 1010101010; recalculating the CRC gives the results of Appendix A, part A.4. The resulting remainder is not zero, and the quotient (Q) does not match the value calculated at the transmitting end. Because the received frame is not divisible by GP, the error is detected.

2.1.2.3 Digital Logic or Hardware

In addition to the algebraic method examined in 2.1.2.2, electronic devices calculate CRC values using a hardware method. The digital logic circuit consists of a shift register and XOR gates. The generator polynomial GP(X) is used to construct a right-shift register with linear switches that act as feedback taps. A switch is closed, indicating the presence of an XOR gate, wherever the corresponding coefficient of GP(X) is 1. The input end of the shift register represents the highest order coefficient of the generator polynomial. All of the shift register contents are preset to 0.
Then, the input DP(X) data frame is entered and shifted, 1 bit at a time, through the shift register. The final contents of the register are the error detection bits, or CRC. The hardware method works as follows. The generator polynomial is written in the general form:

$$GP(X) = A_n X^n + A_{n-1} X^{n-1} + A_{n-2} X^{n-2} + \cdots + A_1 X^1 + A_0 X^0$$

[Figure 2.2 Digital Logic Overview for CRC Calculation: register stages preset to 0 with feedback taps A0 through An, each tap feeding an XOR gate, and the input entering at the An end.]

Substituting the value of GP(X) from 2.1.2.2 into Figure 2.2 gives the following result:

$$GP(X) = 1X^4 + 0X^3 + 0X^2 + 1X^1 + 1X^0$$

[Figure 2.3 CRC Digital Logic Overview for a Given GP(X) Value: taps A0 through A4 set to 1, 1, 0, 0, 1, all register stages preset to 0, input at the A4 end.]

The 0 coefficients of GP(X) indicate open switches, so the corresponding XOR gates are not included. Redrawing Figure 2.3 with the binary value of equation 2.12 as the register input gives Figure 2.4, which represents the register logic used to calculate the CRC value.

[Figure 2.4 CRC Digital Logic Overview with 1011101 Input Sequence: four register stages preset to 0, XOR gates only at the closed taps, and the input sequence 1011101.]

Next, the binary input is shifted through the register 1 bit at a time while the appropriate XOR computations are performed. The final step of this digital logic calculation provides the CRC value 0110, as described in Appendix B, which is the same result obtained by the polynomial method in section 2.1.2.2. The output sequence of the register is the binary sequence 01101011101, written in the versus space representation.

2.2 Error Correction

Errors can be corrected by encoding additional redundant bits and transmitting them with the original information. Two methods are used to enable the correction of errors received over a transmission medium. The first is BEC, which employs an error detecting code and then uses ARQ to retransmit damaged data.
The second is FEC, in which enough redundant error correction bits are added to the original signal for the receiver to correct the data without retransmission. Both mechanisms sacrifice available channel bandwidth in exchange for error correction capability.

2.2.1 Backward Error Correction

BEC is used by data link layer protocols and combines an error detecting method, such as the CRC, with ARQ to correct corrupted frames. ARQ is the mechanism by which lost or damaged frames are rejected and retransmitted. This process is closely related to the flow control mechanism and follows the same principles to perform retransmission. Flow control limits the amount of information being sent so that the receiver is not overwhelmed with data, which could cause frames to be lost because they cannot be processed. BEC is a very accurate method that provides a high probability of detecting and correcting errors. However, the error detecting codes used with ARQ do not carry enough redundant bits to allow the message block to be corrected or reconstructed at the receiving end. Instead, the mechanism detects an error, discards the information, identifies the missing frame in the sequence and requests its retransmission.

During data transmission, the terminal equipment (TE) at each end exchanges acknowledgements. In this process, timers are set at each end to determine whether the proper acknowledgement (ACK) is received within the required time interval. Data acknowledgement supports the flow control process and indicates the status of the received data. A positive ACK indicates the reception of an error-free frame. A negative ACK (NACK) indicates the reception of a damaged frame. Finally, a timeout indicates that a frame was not acknowledged within the required timeout period. All of these acknowledgement mechanisms form part of the ARQ process.
Three techniques are used: Stop-and-Go ARQ (commonly called Stop-and-Wait), Go-Back-N ARQ and Selective-Reject ARQ. In Stop-and-Go ARQ, the transmitting TE sends a frame and then waits for an ACK from the receiving TE. No other frame can be transmitted until a positive ACK is returned. If an error is encountered, the receiver requests retransmission of the frame by issuing a NACK. Because it can handle only one frame at a time, this technique is considered somewhat inefficient and is not commonly used.

In Selective-Reject ARQ (SREJ), both TEs can process more than one frame at a time. If errors occur in some frames of a series, the receiver can selectively identify which frame or frames need to be retransmitted by issuing an SREJ. In other words, frames can be retransmitted out of order, and only the damaged frames are resent. The drawback of this technique is that it requires a much bigger buffer in order to sort and retransmit frames out of order.

Go-Back-N ARQ, also known as continuous ARQ with pullback or explicit reject (REJ), is the most widely used technique. Most connection-oriented HDLC-based systems use this concept, so a more detailed description is provided. With this technique, more than one frame can also be transmitted, up to the maximum window size used by the flow control mechanism (sliding window concept). A transmitting TE can send a series of frames, up to the window size, in sequential order. If an error is encountered at the receiving end, the receiver explicitly rejects (REJ) that frame and all successive frames. It then asks the transmitter to resend the frames in sequential order, starting from the frame that was damaged.
These are the two main reasons why this technique is preferred: more than one frame can be transmitted, and it does not require a large buffer to sort frames. The disadvantage is that channel bandwidth is consumed retransmitting frames that were not damaged but were discarded. This is illustrated in Figure 2.5.

[Figure 2.5 ARQ Mechanism: frames F1 through F5 in transit from the transmitter to the receiver.]

For example, the transmitter sends a series of frames F1 through F5, of which F3 is received with an error. The receiver sends back a REJ F3 notification, which informs the transmitter that F3 was damaged. The transmitter then retransmits frames F3, F4 and F5 in order, even though F4 and F5 contained no errors. In the SREJ ARQ case, the receiver sends back an SREJ F3 notification, and the transmitter responds by retransmitting only F3. This is a very simplified overview of how these techniques work; the actual mechanisms are more complex and can react to several other conditions.

2.2.2 Forward Error Correction

FEC coding is an encoding process that improves data reliability by introducing a known redundant data pattern prior to transmission. This process enables the decoder to detect and possibly correct most errors caused by channel corruption without requesting retransmission. For this reason, FEC requires more error correcting bits than the previous methods in order to add enough redundancy and provide a high degree of success. Only the receiver is involved in correcting errors, resulting in a faster recovery time. This method is more suitable for applications involving real-time information interchange or time-sensitive communications, such as military, satellite, deep space, digital video, mobile telephony or other wireless applications. Adding redundancy enables a suitable decoder in the receiver to reproduce the original data sent.
As expected, codes that introduce a large measure of redundancy lower the error rate but convey relatively less information. The advantage is a reduced likelihood that all of the original data will be wiped out during a single transmission. On the other hand, such codes increase transmission bandwidth requirements and/or message delay.

2.2.2.1 Block Codes

Block coding, also known as algebraic coding, was the only type of forward error correction coding in the early days. With these techniques, the encoder adds parity bits to the data sequence using a particular algebraic algorithm. At the receiving end, the decoder applies the inverse of the algebraic algorithm to identify and correct any errors caused by channel corruption. Block error correction codes follow the same process outlined in Figure 2.1 and are designated as (n, k) codes. In a communication system, a digital information source sends a k-bit data sequence to an FEC encoder. The encoder inserts redundant parity check bits (r bits) and produces a longer sequence of n = k + r encoded bits, called a codeword. This process produces $2^k$ valid codewords out of $2^n$ possible sequences. The code rate (efficiency) is found by dividing the information rate by the channel rate, k/n. Careful design of a block code involves a tradeoff between a number of parity bits r that is small enough to limit bandwidth use and large enough to correct errors. Examples of block codes are Hamming codes, cyclic codes, BCH codes and Reed-Solomon codes.

2.2.2.1.1 Hamming Codes

Hamming codes can be used to correct single bit errors in a transmitted frame. Hamming codes are not widely used in communication systems (they are used in computer memories and mass storage devices); however, they provide a good illustration of the principles used in block codes. The Hamming distance d(I1, I2) between two information bit sequences I1 and I2 is the number of bit positions in which they differ.
For example, if $I_1 = 1101101$ and $I_2 = 0100011$, then $d(I_1, I_2) = 4$. The greater the distance, the lower the probability of confusing the two sequences. An (n, k) Hamming code is described by the following parameters [SCHI, 86]:

Codeword length: $n = k + r = 2^r - 1$ (2.13)

where k is the number of information bits and $r = n - k$ is the number of redundant parity check bits. The minimum Hamming distance is $d_{min} \ge 2t + 1 = 3$ for t = 1, which means single error correction capability, with $r \ge 3$.

A single error correcting code is constructed by assigning k data bits to an n-bit codeword. The value of k is fixed and gives the maximum number of information bits contained in the codeword. An additional r parity check positions are added to the codeword and used for error control. The parity bits are calculated either by an even parity check or by an XOR calculation over selected information bit positions. At the receiving end, the decoding process recalculates the parity bits from the received codeword and performs an XOR calculation to produce a result called the checking number or syndrome word. A value of 0 indicates no errors, and the information bits are accepted without modification. This leaves the remaining $2^r - 1$ nonzero syndrome values to indicate the presence of errors. If a single bit error is detected, the syndrome gives the position of the bit in error, as outlined in Table C.7. An error can occur in any of the k data or r check bit positions of the codeword. Therefore, to locate a single bit error anywhere in a transmitted block, the following condition, derived from Equation 2.13, must hold: $2^r \ge n + 1$. From this inequality, the values in Table C.1 (Appendix C) can be calculated to give n for a given number of k and r bits.
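The distance example and the inequality $2^r \ge n + 1$ can be checked with a short Python sketch. It assumes bit sequences are given as strings of '0' and '1'; the function names are illustrative and not part of any library. The loop rebuilds the k and r columns of Table C.1 by choosing, for each codeword length n, the smallest r that satisfies the inequality.

```python
def hamming_distance(a: str, b: str) -> int:
    """Count the bit positions in which two equal-length sequences disagree."""
    if len(a) != len(b):
        raise ValueError("sequences must have the same length")
    return sum(x != y for x, y in zip(a, b))


def check_bits_needed(n: int) -> int:
    """Smallest r with 2**r >= n + 1, so a single error can be located in n bits."""
    r = 1
    while 2 ** r < n + 1:
        r += 1
    return r


# Rows of Table C.1: (n, binary form of n, data bits k, check bits r).
table_c1 = []
for n in range(1, 16):
    r = check_bits_needed(n)
    table_c1.append((n, format(n, "04b"), n - r, r))

print(hamming_distance("1101101", "0100011"))  # 4
for row in table_c1:
    print(*row)
```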
Data and parity check bits are arranged in the n-bit codeword as follows. The r parity bits are placed at positions that are powers of 2 ($2^0, 2^1, 2^2, \ldots, 2^{r-1}$), counting from position 1 at the rightmost bit. The remaining positions hold the k data bits. To illustrate, the case n = 15, k = 11 and r = 4 is taken from Table C.1. This (15,11) Hamming code is shown in Table C.2 with the 11-bit input data block 10100010101 (listed from D11 down to D1, the same left-to-right order as the codeword).

As explained earlier, two techniques can be used to determine the value of the Hamming code. The first uses parity checks over selected positions of the n-bit codeword. It uses even parity: if the checked positions contain an even number of 1s, the check bit is 0; otherwise, it is 1. So that the resulting syndrome word identifies the position of any error, the checked positions are chosen as follows. P1 covers every position whose binary representation has a 1 in the first digit ($2^0$). Similarly, P2 covers every position with a 1 in the second digit ($2^1$), P3 every position with a 1 in the third digit ($2^2$), and P4 every position with a 1 in the fourth digit ($2^3$). Examining the binary form of the positions in Table C.2 gives the values in Table C.3, and combining Tables C.2 and C.3 gives the check bit values shown in Table C.4. The alternative technique takes the positions of the codeword that hold a data bit equal to 1 and XORs their binary representations to obtain all the bits of the Hamming code at once. This approach produces the values in Table C.5, which give the same Hamming code value as Table C.4.
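The XOR technique, together with the syndrome check that the next paragraph applies to a corrupted codeword, can be sketched in Python as follows. This is a minimal illustration for the (15,11) layout of Table C.2 only, assuming data is written from D11 down to D1 and codewords from position 15 down to position 1; the function names are hypothetical. Because the check bits P4, P3, P2 and P1 sit at positions 8, 4, 2 and 1, XORing every position that holds a 1 in a received word gives the same result as XORing the recalculated and received Hamming codes, as done in Tables 2.1 and 2.2.

```python
from functools import reduce
from operator import xor

N = 15
PARITY_POSITIONS = (1, 2, 4, 8)  # P1, P2, P3, P4 sit at powers of 2
DATA_POSITIONS = [p for p in range(N, 0, -1) if p not in PARITY_POSITIONS]  # 15..3


def hamming_encode(data: str) -> str:
    """Encode D11..D1 into a 15-bit codeword written from position 15 to 1."""
    if len(data) != len(DATA_POSITIONS):
        raise ValueError("expected 11 data bits")
    bits = {pos: int(b) for pos, b in zip(DATA_POSITIONS, data)}
    # XOR of the positions that hold a data 1 gives P4 P3 P2 P1 (Table C.5).
    code = reduce(xor, (pos for pos, b in bits.items() if b), 0)
    for i, pos in enumerate(PARITY_POSITIONS):
        bits[pos] = (code >> i) & 1
    return "".join(str(bits[p]) for p in range(N, 0, -1))


def hamming_syndrome(word: str) -> int:
    """XOR of every position holding a 1: 0 means no error, otherwise
    the value is the position of a single bit error."""
    return reduce(xor, (N - i for i, b in enumerate(word) if b == "1"), 0)


def hamming_correct(word: str) -> str:
    """Flip the bit named by the syndrome (position 15 is string index 0)."""
    s = hamming_syndrome(word)
    if s == 0:
        return word
    i = N - s
    return word[:i] + ("0" if word[i] == "1" else "1") + word[i + 1:]


sent = hamming_encode("10100010101")
received = "101010110101110"  # position 11 (D7) flipped
print(sent, hamming_syndrome(sent))        # 101000110101110 0
print(hamming_syndrome(received))          # 11
print(hamming_correct(received) == sent)   # True
```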
At this point, the complete n = k + r codeword is 101000110101110, as shown in Table C.6. The following example shows how a single bit error can be detected and corrected using Hamming codes. Suppose that, after the complete n-bit codeword is transmitted, the bit in position 11 (D7) is altered and the pattern 101010110101110 is received instead (see Table C.7). At the receiving end, the Hamming code is recalculated from the received pattern and compared, by an XOR calculation, with the received Hamming code to determine whether an error is present. If the result of the XOR calculation is 0, no error was received, as shown in Table 2.1. Otherwise, the calculation detects a single bit error and gives its location so that it can be corrected; in Table 2.2 the syndrome 1011 indicates an error in position 11. This (15,11) single error correcting Hamming code has $2^k = 2^{11} = 2048$ valid codewords, or $2^{11} - 1 = 2047$ if the all-zero codeword is excluded because it may also indicate a no-message pattern. Also, the redundant check bits occupy a fraction r/n of the available channel capacity, which for this example is $4/15 \times 100\% = 26.7\%$.

Table 2.1 Hamming Code Calculation with No Error
Position          Code
Hamming code      1 1 1 0
15                1 1 1 1
13                1 1 0 1
9                 1 0 0 1
6                 0 1 1 0
3                 0 0 1 1
XOR = Syndrome    0 0 0 0

Table 2.2 Hamming Code Calculation with Single Bit Error
Position          Code
Hamming code      1 1 1 0
15                1 1 1 1
13                1 1 0 1
11                1 0 1 1
9                 1 0 0 1
6                 0 1 1 0
3                 0 0 1 1
XOR = Syndrome    1 0 1 1

2.2.2.1.2 Cyclic Codes

Cyclic codes are part of the family of block error correction codes, and codes in this category are widely used to provide error correction. Cyclic codes employ the same error detection process as the CRC mechanism detailed in section 2.1.2.
The difference is that a cyclic error correction code uses its r-bit check code so that each correctable error pattern produces a distinct syndrome word, which allows the error to be corrected. In this method, the encoding process calculates a valid codeword using a digital logic shift register similar to the one used for CRC codes. Equation 2.2 gave the codeword produced by the encoding process as:

$N_P(X) = X^r D_P(X) + C_P(X)$

If no error is introduced during transmission, Equation 2.4 gives the result of dividing the received codeword by the generator polynomial:

$\frac{N_P(X)}{G_P(X)} = \left[ Q(X) + \frac{C_P(X)}{G_P(X)} \right] + \frac{C_P(X)}{G_P(X)} = Q(X)$

since the two identical remainder terms cancel under modulo-2 addition. Extending these CRC formulas, if an error occurs, the receiver obtains the polynomial:

$Y_P(X) = N_P(X) + E_P(X)$ (2.14)

where $E_P(X)$ is the error polynomial, containing a 1 for each bit in error in $Y_P(X)$. The error is corrected by determining the error pattern and adding it to the received polynomial using modulo-2 addition (an XOR calculation):

$Y_P(X) + E_P(X) = N_P(X) + E_P(X) + E_P(X) = N_P(X)$ (2.15)

To determine $E_P(X)$, the received polynomial $Y_P(X)$ of Equation 2.14 is divided by the generator polynomial $G_P(X)$:

$\frac{Y_P(X)}{G_P(X)} = \frac{N_P(X)}{G_P(X)} + \frac{E_P(X)}{G_P(X)}$

where

$\frac{E_P(X)}{G_P(X)} = Q_E(X) + \frac{S_P(X)}{G_P(X)}$ (2.16)

$Q_E(X)$ is the quotient of the error polynomial and $S_P(X)$ is the remainder polynomial, or syndrome word. Because $N_P(X)$ is exactly divisible by $G_P(X)$, the syndrome depends only on $E_P(X)$. It can take $2^r - 1$ nonzero values, so at most $2^r - 1$ error patterns can be corrected; the all-zero value is excluded because it indicates no error. For simplicity, the case of correcting a single bit error is examined, using the same polynomial values as in section 2.1.2:
$D_P(X) = X^6 + X^4 + X^3 + X^2 + 1$ (1011101)
$G_P(X) = X^4 + X + 1$ (10011)
$X^4 D_P(X) = X^{10} + X^8 + X^7 + X^6 + X^4$ (10111010000)

Here r = 4, so the syndrome can distinguish up to $2^4 - 1 = 15$ error patterns. However, the values used in Equations 2.5 and 2.6 form an (11,7) cyclic code whose codeword is 11 bits long, so only 11 single bit error patterns can occur. These are listed in Table 2.3 below, and the corresponding derivations are given in Appendix D. Returning to section 2.1.2.2, an error was introduced during transmission and the received codeword changed to 10101010110, which is the value of $Y_P$. The syndrome word obtained from the decoding process in Appendix A was 1011, which detected an error that the CRC mechanism could not correct. From Table 2.3, this syndrome word is associated with the error pattern 00010000000. Substituting these values into Equation 2.15 and performing the modulo-2 addition yields:

$Y_P + E_P = 10101010110 + 00010000000 = 10111010110$

which exactly matches the original error-free codeword sent by the transmitter.

Table 2.3 Cyclic Code Error Patterns
Error Pattern (E)    Syndrome Word (S)
00000000001          0001
00000000010          0010
00000000100          0100
00000001000          1000
00000010000          0011
00000100000          0110
00001000000          1100
00010000000          1011
00100000000          0101
01000000000          1010
10000000000          0111

2.2.2.1.3 Bose-Chaudhuri-Hocquenhem (BCH) Codes

BCH codes are a widely used special form of cyclic error correction block code designed for correcting random errors. This method follows a similar approach to the Hamming code method. The following parameters describe this code [SCHI, 86]:

Codeword length: $n = 2^m - 1 = k + r$ (2.17)
Number of parity bits: $r = mt$ (2.18) (more precisely $r \le mt$; equality holds for the codes considered here)

where k is the number of information bits, $r = n - k$ is the number of redundant parity check bits, and t is the number of bit errors that can be corrected. The minimum Hamming distance is $d_{min} \ge 2t + 1$.
This code can correct any combination of t or fewer bit errors in an n-bit codeword. For t = 1 (single error correction), BCH codes reduce to Hamming codes, with m = r. To compare the capabilities of a BCH code with the (15,11) Hamming code examined previously, let t = 2, which gives r = 2m. With n = 15, Equations 2.17 and 2.18 give m = 4, r = 8 and k = 7. The result is a (15,7) double error correcting BCH code with a code rate efficiency of k/n = 7/15 = 0.47 and an error correction capability of t/n = 2/15 = 0.133 = 13.3%. For the (15,11) Hamming code, the code rate efficiency is 11/15 = 0.733, but the correction capability is only 1/15 = 0.067 = 6.7%. The BCH code is therefore more reliable, but it uses more channel capacity for redundancy and has a lower code rate efficiency.

2.2.2.1.4 Reed-Solomon (RS) Code

RS codes are cyclic block error correction codes with a wide range of applications in digital communications and storage. All of the previous techniques deal with correcting individual bit errors. RS codes are a special case of BCH codes designed for correcting burst errors, in which a series of consecutive bits is received in error. For this reason they operate on groups of bits called symbols. The encoder takes k data symbols of m bits each and adds r redundant parity symbols to form an n-symbol codeword. An RS decoder can correct t symbol errors in a codeword, where 2t = r. The number and type of errors that can be corrected depend on the characteristics of the RS code. The following parameters describe this code [SCHI, 86]:

Codeword length: $n = 2^m - 1 = k + r$ symbols (2.19)
Number of parity symbols: $r = 2t$, so that $t = r/2$ (2.20)

where k is the number of information symbols, $r = n - k$ is the number of redundant parity symbols, m is the number of bits per symbol, and t is the number of symbol errors that can be corrected. The minimum Hamming distance, measured in symbols, is $d_{min} = n - k + 1 = 2t + 1$.
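The BCH and Hamming comparison above is simple arithmetic, and the Python sketch below reproduces it with exact fractions. The helper names are illustrative. The Reed-Solomon helper just applies Equations 2.19 and 2.20 to a chosen m and k, assuming a full-length code (n = 2^m - 1); it is shown with the RS(255, 223) parameters discussed next.

```python
from fractions import Fraction


def rate_and_capability(n: int, k: int, t: int) -> tuple[Fraction, Fraction]:
    """Code rate efficiency k/n and error correction capability t/n."""
    return Fraction(k, n), Fraction(t, n)


def rs_parameters(m: int, k: int) -> dict:
    """Full-length RS code: n = 2**m - 1 symbols, r = n - k, t = r // 2."""
    n = 2 ** m - 1
    r = n - k
    return {"n": n, "k": k, "r": r, "t": r // 2}


codes = {"Hamming (15,11)": (15, 11, 1), "BCH (15,7)": (15, 7, 2)}
for name, (n, k, t) in codes.items():
    rate, cap = rate_and_capability(n, k, t)
    print(f"{name}: rate {float(rate):.3f}, capability {float(cap):.1%}")

print(rs_parameters(8, 223))  # {'n': 255, 'k': 223, 'r': 32, 't': 16}
```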
A commonly used RS code is RS(255, 223), with m = 8-bit (one-octet) symbols. Each codeword contains n = 255 octets: k = 223 octets of data symbols and r = 32 octets of parity symbols. From Equation 2.20, t = 16, so the decoder can correct up to 16 symbol errors anywhere in a codeword. A symbol error means that one or more bits in a symbol are altered. Equation 2.19 gives the maximum codeword length. An RS codeword has 2t syndromes that indicate the presence of errors.

2.2.2.2 Convolutional Codes

Another FEC technique is convolutional coding. It provides error correction by processing the input bits as a continuous stream rather than as the blocks used by the previous techniques. Another difference from block codes is that the encoder has memory: each n-bit output is calculated not only from the current k-bit input but also from previous inputs held in the memory register. Error correction information is therefore generated continuously, so that error checking can be performed on a continuous basis. An (n, k, M) convolutional code is defined by three parameters: k is the number of input bits, n is the number of output bits, and M is the number of memory shift registers. The rate of the code, k/n, measures the efficiency of the encoder. The code is also characterized by a constraint length, L. The encoder memory can be in any of $2^L$ possible conditions, called states, which map the input to the output values; each new k-bit input causes a transition from one state to another. The state indicates the contents of the encoder memory and largely determines the output sequence that can be expected. The constraint length gives the number of memory stages that define the state and is given by $L = k(M - 1)$. (Conventions differ: many texts, and the system descriptions in Section 3, call M itself the constraint length.)
The encoding process for convolutional codes is depicted in Figure 2.6, which shows a (2,1,3) code and the memory shift registers used to calculate the output values. The code rate is ½, which means that source data occupy half of the channel data rate. “Convolutional encoders can easily correct isolated errors at the expense of doubling the data rate” [BELL, 00]. Mobile telephony systems use about half of the available channel capacity for error control. The code generator connections for a rate ½ convolutional coder with K = 3 can be obtained from Table 13.19-1 [SCHI, 86]. The first output value, O1, is the XOR of M1, M2 and M3; the second, O2, is the XOR of M1 and M3.

[Figure 2.6 Convolutional Encoding Process: shift register M1 M2 M3 with O1 = mod2(M1 + M2 + M3) and O2 = mod2(M1 + M3)]

All memory registers are initialized to zero, and output calculations continue until all bits have shifted through and emptied out of the register, which returns it to the original all-zero state. The shaded registers, M1 and M2, hold the state of the encoder after each transition; with L = 2 there are $2^L = 2^2 = 4$ states. Table E.1 in Appendix E shows the four encoding state values: M1M2 = 00, 01, 10 and 11. The state changes with every shift and, together with the input bit, determines the output value and the next state. A convolutional encoder can therefore be implemented as a table lookup. Using Figure 2.6 and Table E.1, the input pattern 1011101 is encoded into the values shown in Table 2.4, which lists the current state, input bit, encoded output bits and next state of the encoder at each step.
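The table-lookup encoder of Figure 2.6 can be sketched in Python as below, together with a minimal hard-decision Viterbi decoder of the kind described later in this section and worked by hand in Appendix E, part E.4. The sketch assumes the state is the pair (M1, M2) left after each shift, as in Table E.1, and that the encoder starts in state 00 with no tail bits, as in Table 2.4; the function names are hypothetical. When two paths reach a state with equal Hamming distance, the sketch keeps the first one found, which is one of several reasonable tie-breaking choices.

```python
def step(state, bit):
    """One shift of the (2,1,3) encoder. `state` is the current state s1 s2
    of Table E.1, which becomes (M2, M3) once the new bit enters M1.
    Returns the output pair O1 O2 and the next state (M1, M2)."""
    m2, m3 = state
    m1 = bit
    out = f"{m1 ^ m2 ^ m3}{m1 ^ m3}"  # O1 = M1+M2+M3, O2 = M1+M3 (mod 2)
    return out, (m1, m2)


def conv_encode(bits):
    """Encode a list of input bits starting from the all-zero state."""
    state, outputs = (0, 0), []
    for b in bits:
        out, state = step(state, b)
        outputs.append(out)
    return outputs


def viterbi_decode(received):
    """Hard-decision Viterbi decoding: keep one survivor path per state."""
    # state -> (Hamming distance, decoded bits, code sequence)
    survivors = {(0, 0): (0, [], "")}
    for rx in received:
        nxt = {}
        for state, (dist, bits, path) in survivors.items():
            for b in (0, 1):
                out, new_state = step(state, b)
                d = dist + sum(x != y for x, y in zip(out, rx))
                if new_state not in nxt or d < nxt[new_state][0]:
                    nxt[new_state] = (d, bits + [b], path + out)
        survivors = nxt
    # The survivor with the smallest Hamming distance is the decision.
    return min(survivors.values(), key=lambda s: s[0])


print(" ".join(conv_encode([1, 0, 1, 1, 1, 0, 1])))  # 11 10 00 01 10 01 00
print(viterbi_decode("01 10 00 01 10 01 00".split()))
# (1, [1, 0, 1, 1, 1, 0, 1], '11100001100100')
```

Decoding the received sequence of Appendix E, part E.4 (the first bit in error) recovers the input 1011101 with a final Hamming distance of 1.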
Table 2.4 Convolutional Encoding Results for a 1011101 Input Pattern
Time   Current State   Input Bit   Encoded Output Bits   Output State
t0     00              1           11                    10
t1     10              0           10                    01
t2     01              1           00                    10
t3     10              1           01                    11
t4     11              1           10                    11
t5     11              0           01                    01
t6     01              1           00                    10

The encoded sequence is therefore 11 10 00 01 10 01 00.

There are three common ways to represent the encoder/decoder operation graphically: state, tree and trellis diagrams. The trellis diagram is the preferred method because it shows the linear time sequence of events. A trellis diagram is drawn by listing all $2^L$ possible state values vertically against time. A connection is then drawn from one state to another according to the input value; at each state there are two possible branches, for an input of 0 or 1, as indicated in Table E.1. The encoded output sequence of Table 2.4 gives the trellis diagram in Appendix E, Figure E.3.

A convolutional decoder takes the encoder memory into account when estimating the most likely data sequence that produced the received sequence of code bits. There are two categories of convolutional decoding: sequential decoding and maximum likelihood decoding. Sequential decoding uses a systematic procedure to search for a good estimate of the message sequence; however, it requires a great deal of memory and can suffer from buffer overflow and performance degradation. It follows only one trellis path at a time and can retrace its steps forward and backward through the trellis. Viterbi decoding falls under the second category and is the most commonly used method. It relies on two assumptions to reduce the number of path choices: 1) the probability of error is small; 2) the probability of two errors in a row is much smaller than that of a single error. A Viterbi decoder keeps only $2^{kL}$ surviving paths, the most likely path into each state, instead of checking every possible code sequence, whose number grows exponentially with the message length. The decoder then compares the surviving code sequences at each step until the paths converge to one.
At this point, the path with the smallest Hamming distance (number of mismatched bits) from the received sequence is selected. This method is illustrated in Appendix E, part E.4. Convolutional coding generally performs better against random errors. However, any decoding errors made by the convolutional decoder tend to occur in bursts. When a convolutional code is used together with an outer block code, placing an interleaver between the two encoding stages spreads these bursts out and further enhances performance. This process is used particularly in mobile voice systems, as well as in commercial broadcasting services and other applications.

3. APPLICATIONS IN WIRELESS ENVIRONMENTS

3.1 Wireless Networks.

Data transmission through a wireless environment is more unpredictable and error-prone than over wireline systems. Wireless voice networks primarily use FEC techniques to correct errors introduced by environmental transmission impairments on radio frequency (RF) signals. Some of the voice networks described below use a combination of error protection methods (CRC, block and convolutional coding) to further protect the most important speech bits. These methods add redundancy and consume bandwidth, a limited and scarce resource in wireless systems, to enable error control. Wireless data networks, on the other hand, can tolerate some transmission delay and can therefore use a BEC technique for error protection.

3.1.1 Advanced Mobile Phone Service (AMPS).

AMPS uses BCH channel coding to provide error protection. On the forward link, the voice channels use a BCH (40,28; 11) code and the control channels use a (40,28; 5) code. On the reverse link, a (48,36; 5) BCH code is used. Here (n,k; m) denotes a BCH (n,k) codeword that is repeated m times. This method is outlined in Figure 3.1 [GOOD, 97].
[Figure 3.1 AMPS Channel Coding Process: BCH code (n,k; m) followed by a repeat-m-times (m,1; m) stage]

Channel   n    k    m    b/s
RVC       48   36   5    662-703
FVC       40   28   11   271
RECC      48   36   5    1250-1442
FOCC      40   28   5    1215

3.1.2 Digital-Advanced Mobile Phone Service (D-AMPS).

The error control mechanism for D-AMPS combines CRC and convolutional coding to protect data. The VSELP (Vector Sum Excited Linear Prediction) speech coder produces 159 bits every 20 ms, a rate of 7.95 kb/s (159 bits/20 ms). Of these, 77 bits are classified as class 1 bits, which need more error protection. The remaining 82 bits are class 2 bits and receive no protection. Of the class 1 bits, the 12 most significant speech bits are labeled class 1a bits and are further protected by a 7-bit error-detecting CRC. The remaining 65 bits are called class 1b bits and are not CRC protected. All of the class 1 bits (class 1a, class 1b and the 7-bit CRC) are then appended with 5 tail bits and sent through a (2,1,6) rate ½ convolutional encoder with a constraint length of L = 6, which produces 178 coded class 1 bits (2 output bits for each of the 89 input bits). These are combined with the 82 class 2 bits to form a 260-bit frame, which is sent to the interleaver for further processing. The resulting data rate is 260 bits/20 ms = 13 kb/s. The tail bits flush the encoder's shift register so that the final bits of the frame are fully convolved. This method is outlined in Figure 3.2 [GOOD, 97].

[Figure 3.2 D-AMPS Channel Coding Process: VSELP speech coder output split into 77 class 1 bits (12 most significant bits and 65 bits) and 82 least important class 2 bits; 7-bit cyclic redundancy check coder (19 bits); 5 tail bits; rate ½ channel coder (178 bits); multiplex and interleave (260 bits)]

3.1.3 Global System for Mobile Communications (GSM).

GSM uses an error protection process similar to that of D-AMPS.
The Regular Pulse Excited-Linear Predictive Coding (RPE-LPC) speech coder produces a 260-bit frame every 20 ms (260 bits/20 ms = 13 kb/s). These bits are divided into 182 class 1 bits and 78 class 2 bits. Class 2 bits are not protected at all. Of the class 1 bits, 50 are class 1a essential speech bits and receive the greatest protection from a 3-bit parity block code. This step produces a 53-bit encoded block, which is combined with the 132 class 1b bits. These bits, together with 4 added tail bits, are sent to a rate ½ (2,1,5) convolutional encoder with a constraint length of L = 5, which produces a 378-bit encoded pattern. Finally, the encoder output is combined with the 78 class 2 bits to form a 456-bit pattern, which is sent to the interleaver for further processing and protection against burst errors. The resulting data rate is 456 bits/20 ms = 22.8 kb/s, almost double the speech coder rate, to allow for error protection. This method is outlined in Figure 3.3 [GOOD, 97].

[Figure 3.3 GSM Channel Coding Process: RPE-LPC speech coder output split into 182 class 1 bits (50 most significant bits and 132 class 1b important bits) and 78 least important class 2 bits; 3 parity bits calculated (53 bits); 4 tail bits; rate ½ channel coder (378 bits); multiplex and interleave (456 bits)]

3.1.4 Code Division Multiple Access (CDMA).

For a rate set 1 variable bit rate forward channel with an information rate of 8.6 kb/s, the Qualcomm Code Excited Linear Prediction (QCELP) speech coder produces a 172-bit frame. These bits are protected by a 12-bit error-detecting CRC and sent to a (2,1,9) rate ½ convolutional encoder. The constraint length of 9 means that the sequence is appended with 8 tail bits (all 0). This produces a (192 × 2) = 384-bit encoded output, which is sent to the interleaver. The resulting data rate is 384 bits/20 ms = 19.2 kb/s per forward channel.
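The frame sizes above all follow from the same bookkeeping: the protected bits, their check bits and the tail bits pass through the rate 1/X convolutional coder, and any unprotected bits are appended uncoded. A minimal Python sketch under that assumption (the function name and tuple layout are illustrative, not taken from any standard):

```python
FRAME_MS = 20  # all three speech coders deliver one frame every 20 ms


def coded_frame_bits(protected, check, tail, rate_inverse, unprotected):
    """Bits per frame after convolutional coding at rate 1/rate_inverse,
    with unprotected bits appended without coding."""
    return (protected + check + tail) * rate_inverse + unprotected


schemes = {
    # name: (protected bits, CRC/parity bits, tail bits, 1/rate, unprotected bits)
    "D-AMPS": (77, 7, 5, 2, 82),
    "GSM": (182, 3, 4, 2, 78),
    "CDMA forward": (172, 12, 8, 2, 0),
}

for name, params in schemes.items():
    bits = coded_frame_bits(*params)
    # bits per millisecond is numerically equal to kb/s
    print(f"{name}: {bits} bits per frame, {bits / FRAME_MS} kb/s")
```

Setting rate_inverse to 3 for the CDMA case gives 576 bits per frame (28.8 kb/s), the reverse-channel figure.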
For the reverse channel, the process is the same as for the forward channel, except that the CRC-protected frame and its tail bits are fed to a (3,1,9) rate 1/3 convolutional encoder, which produces a (192 × 3) = 576-bit encoded output that is sent to the interleaver for further processing. The resulting data rate is 576 bits/20 ms = 28.8 kb/s per reverse channel. This method is outlined in Figure 3.4.

[Figure 3.4 CDMA Channel Coding Process: QCELP speech coder (172 bits); 12-bit CRC calculated; 8 tail bits; rate 1/X channel coder with X = 2 on the forward link (384 bits) and X = 3 on the reverse link (576 bits); multiplex and interleave]

3.1.5 Mobitex.

Mobitex is a mobile data service that uses packet switching to transmit information. As part of its data link layer functions, the Mobitex radio interface uses a 16-bit error-detecting CRC for each 20-byte data block. At the frame level, it employs the Stop-and-Wait ARQ technique described in Section 2 to recover lost or damaged frames before transmitting the next frame. At the block level, the SREJ ARQ technique is used to selectively retransmit corrupted data blocks within a frame.

3.1.6 802.11 Wireless LAN (WLAN).

The Medium Access Control (MAC) sublayer is responsible for providing reliable data delivery and error control for 802.11 WLANs. It also determines how devices obtain access to the shared wireless medium. Data transmitted over a WLAN is subject to wireless transmission impairments, which result in the loss of information frames. For this reason, the MAC frame format includes a 32-bit CRC field for error detection. The MAC frame format is depicted in Figure 3.5 [SERA, 03].

Figure 3.5 802.11 WLAN MAC Frame Format
Field              Length (octets)
Frame Control      2
Duration           2
Address 1          6
Address 2          6
Address 3          6
Sequence Control   2
Address 4          6
Frame Body         0-2304
CRC                4

4. CONCLUSION

This document presented a simple mathematical overview of some of the techniques used to provide error protection in telecommunications systems. As seen in Section 2, different methods are used depending on the application and the desired result. These methods are applied to detect and correct corrupted bits or symbols and to recover from errors introduced by transmission impairments. Two categories were presented: error detection and error correction. Error detection techniques can only detect errors in a transmitted information sequence; correcting them is left to a different set of techniques, such as ARQ, or to higher-layer protocols such as TCP. CRC is an example of a commonly used error detection technique. Error correction is divided into two types, backward and forward error correction. BEC combines error detection with ARQ and is normally the preferred method in wired digital data systems, where errors are less likely to occur and where retransmission of corrupted information can be tolerated without major loss of intelligibility. This method, however, is not suitable for some wireless or real-time applications. FEC adds enough redundant bits to allow the receiver to recover from errors without retransmission. This method is mostly used in systems where retransmission cannot be tolerated or afforded, such as deep space exploration, military applications, digital video, and wireless communication (for example satellite, microwave and mobile telephony). There are two types of FEC codes, block and convolutional codes. Block codes are so called because they encode information in blocks and, during decoding, are concerned only with the current block (for example Hamming, cyclic, BCH and RS codes). Convolutional codes encode information as a stream and, during decoding, rely on memory of previously received information.
“Convolutional encoders can correct a much greater number of independent or random bit errors than block codes as long as the corrupted bits are separated by something greater than the channel constraint length” [BELL, 00]. Commonly, different codes and their associated encoding/decoding processes are used to satisfy different system requirements and applications. The performance and applicability of each technique vary according to the type of error from which recovery is required. Often, codes are combined to exploit the capabilities of different methods in a given transmission environment or medium. Error protection coding increases the reliability of a communication system but decreases system efficiency. A careful design involves a tradeoff between using a redundancy code that is small enough to limit bandwidth use and large enough to correct errors. A large number of redundant bits provides strong protection but sacrifices channel bandwidth to carry the additional bits, reducing the number of information bits that can be transmitted.

APPENDIX A. CRC Calculation Using Polynomials

This appendix presents the detailed computations for calculating the CRC value using the polynomial and binary methods explained in section 2.1.2.2.

Part A1. CRC Calculation Using the Polynomial Method.

Dividend: $X^4 D_P(X) = X^{10} + X^8 + X^7 + X^6 + X^4$; divisor: $G_P(X) = X^4 + X + 1$. Each step subtracts (modulo 2) a multiple of $G_P(X)$:

Step 1: subtract $X^6 G_P(X) = X^{10} + X^7 + X^6$, leaving $X^8 + X^4$
Step 2: subtract $X^4 G_P(X) = X^8 + X^5 + X^4$, leaving $X^5$
Step 3: subtract $X G_P(X) = X^5 + X^2 + X$, leaving $X^2 + X$

Quotient: $Q(X) = X^6 + X^4 + X$ (1010010)
Remainder: $R(X) = X^2 + X$ (0110)

Part A2. CRC Calculation Using Binary Representation.
Dividend 10111010000, divisor 10011. At each step the current 5 bits are XORed with the divisor (quotient bit 1) or with 00000 (quotient bit 0), and the next dividend bit is then brought down.

10111 XOR 10011 = 0100   quotient bit 1
01000 XOR 00000 = 1000   quotient bit 0
10001 XOR 10011 = 0010   quotient bit 1
00100 XOR 00000 = 0100   quotient bit 0
01000 XOR 00000 = 1000   quotient bit 0
10000 XOR 10011 = 0011   quotient bit 1
00110 XOR 00000 = 0110   quotient bit 0

Quotient Q = 1010010; remainder (CP) = 0110.

Part A3. CRC recalculation at the receiving end with no errors.

Dividend 10111010110, divisor 10011:

10111 XOR 10011 = 0100   quotient bit 1
01000 XOR 00000 = 1000   quotient bit 0
10001 XOR 10011 = 0010   quotient bit 1
00100 XOR 00000 = 0100   quotient bit 0
01001 XOR 00000 = 1001   quotient bit 0
10011 XOR 10011 = 0000   quotient bit 1
00000 XOR 00000 = 0000   quotient bit 0

Quotient Q = 1010010; remainder = 0 (no error).

Part A4. CRC recalculation with errors.

Dividend 10101010110, divisor 10011:

10101 XOR 10011 = 0110   quotient bit 1
01100 XOR 00000 = 1100   quotient bit 0
11001 XOR 10011 = 1010   quotient bit 1
10100 XOR 10011 = 0111   quotient bit 1
01111 XOR 00000 = 1111   quotient bit 0
11111 XOR 10011 = 1100   quotient bit 1
11000 XOR 10011 = 1011   quotient bit 1

Quotient Q = 1011011; remainder = 1011 (error detected).

APPENDIX B. CRC Calculation Using Digital Logic

This appendix describes the calculation of the CRC value using the digital logic method explained in section 2.1.2.3. The message bits 1011101 enter the shift register one per shift. At each shift, the feedback bit is the incoming bit XOR the highest-order register stage; the feedback enters the lowest stage and is also XORed with the previous lowest stage to form the second stage (the X term of $G_P(X) = X^4 + X + 1$). Register contents are listed highest-order stage first.

Shift   Input bit   Feedback       Second stage   Register
1       1           1 XOR 0 = 1    1 XOR 0 = 1    0011
2       0           0 XOR 0 = 0    0 XOR 1 = 1    0110
3       1           1 XOR 0 = 1    1 XOR 0 = 1    1111
4       1           1 XOR 1 = 0    0 XOR 1 = 1    1110
5       1           1 XOR 1 = 0    0 XOR 0 = 0    1100
6       0           0 XOR 1 = 1    1 XOR 0 = 1    1011
7       1           1 XOR 1 = 0    0 XOR 1 = 1    0110

NOTE: The value 0110 contained in the register after the final shift is the CRC value.

APPENDIX C. Hamming Code Calculation

Part C1. Hamming Code Calculation.

Determining the values of n, k and r based on $2^r - 1 \ge k + r = n$, or $2^r \ge n + 1$.

TABLE C.1
Codeword Length n   Binary   Data Bits k   Check Bits r
1                   0001     0             1
2                   0010     0             2
3                   0011     1             2
4                   0100     1             3
5                   0101     2             3
6                   0110     3             3
7                   0111     4             3
8                   1000     4             4
9                   1001     5             4
10                  1010     6             4
11                  1011     7             4
12                  1100     8             4
13                  1101     9             4
14                  1110     10            4
15                  1111     11            4

Part C2.
Calculating r-bit Values Using Parity Checks or XOR Calculation.

TABLE C.2
Position (n)   15   14   13   12   11   10   9    8    7    6    5    4    3    2    1
Binary         1111 1110 1101 1100 1011 1010 1001 1000 0111 0110 0101 0100 0011 0010 0001
Bit            D11  D10  D9   D8   D7   D6   D5   P4   D4   D3   D2   P3   D1   P2   P1
Data block     1    0    1    0    0    0    1    P4   0    1    0    P3   1    P2   P1
Codes (1s)     1111      1101                1001           0110           0011

Part C2.1. Calculating r Parity Check Bit Values Using Parity Checks.

TABLE C.3
P1 (2^0)    P2 (2^1)    P3 (2^2)    P4 (2^3)
1 = 0001    2 = 0010    4 = 0100    8 = 1000
3 = 0011    3 = 0011    5 = 0101    9 = 1001
5 = 0101    6 = 0110    6 = 0110    10 = 1010
7 = 0111    7 = 0111    7 = 0111    11 = 1011
9 = 1001    10 = 1010   12 = 1100   12 = 1100
11 = 1011   11 = 1011   13 = 1101   13 = 1101
13 = 1101   14 = 1110   14 = 1110   14 = 1110
15 = 1111   15 = 1111   15 = 1111   15 = 1111
etc.        etc.        etc.        etc.

Using the values from Tables C.2 and C.3, the following values are obtained.

TABLE C.4 Calculation of Check Bits Applying Tables C.2 and C.3
Check bit   Data positions checked (values)               Number of 1s   Value
P1          15, 13, 11, 9, 7, 5, 3 (1, 1, 0, 1, 0, 0, 1)     4 (even)       0
P2          15, 14, 11, 10, 7, 6, 3 (1, 0, 0, 0, 0, 1, 1)    3 (odd)        1
P3          15, 14, 13, 12, 7, 6, 5 (1, 0, 1, 0, 0, 1, 0)    3 (odd)        1
P4          15, 14, 13, 12, 11, 10, 9 (1, 0, 1, 0, 0, 0, 1)  3 (odd)        1

Part C2.2. Calculating r Check Bit Values Using XOR Calculation of the Values in Table C.2.

TABLE C.5
Position   Binary Code
15         1 1 1 1
13         1 1 0 1
9          1 0 0 1
6          0 1 1 0
3          0 0 1 1
XOR        1 1 1 0   (check bits P4 P3 P2 P1)

Part C2.3. Completed Codeword Pattern Calculated from the Previous Tables.

TABLE C.6
Position (n)   15   14   13   12   11   10   9    8    7    6    5    4    3    2    1
Binary         1111 1110 1101 1100 1011 1010 1001 1000 0111 0110 0101 0100 0011 0010 0001
Bit            D11  D10  D9   D8   D7   D6   D5   P4   D4   D3   D2   P3   D1   P2   P1
Codeword       1    0    1    0    0    0    1    1    0    1    0    1    1    1    0
Codes (1s)     1111      1101                1001           0110           0011

Part C2.4. Performing Error Detection of a Codeword Pattern Received with a Single Bit Error.
TABLE C.7

| Position n | 15 | 14 | 13 | 12 | 11 | 10 | 9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Binary number | 1111 | 1110 | 1101 | 1100 | 1011 | 1010 | 1001 | 1000 | 0111 | 0110 | 0101 | 0100 | 0011 | 0010 | 0001 |
| Data bit (k) / check bit (r) | D11 | D10 | D9 | D8 | D7 | D6 | D5 | P4 | D4 | D3 | D2 | P3 | D1 | P2 | P1 |
| Received codeword | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | 0 |
| Codes of data bits equal to 1 | 1111 | | 1101 | | 1011 | | 1001 | | | 0110 | | | 0011 | | |

Recomputing the check bits from the received data bits gives 1111 XOR 1101 XOR 1011 XOR 1001 XOR 0110 XOR 0011 = 0101. XORing this with the received check bits P4 P3 P2 P1 = 1110 gives 1011, which points to position 11 (D7) as the bit in error.

APPENDIX D. Cyclic Code Calculation

This section provides the derivations for the error patterns and syndrome words presented in the cyclic codes section 2.2.2.1.2. Each error pattern E is divided modulo 2 by the generator 10011; QE is the quotient and the remainder is the syndrome S. Partial dividends beginning with 1 are XORed with 10011, the others with 00000; leading all-zero steps are omitted.

| Error pattern E | Quotient QE | Partial dividends | Syndrome S |
|---|---|---|---|
| 00000000001 | 0000000 | - | 0001 |
| 00000000010 | 0000000 | - | 0010 |
| 00000000100 | 0000000 | - | 0100 |
| 00000001000 | 0000000 | - | 1000 |
| 00000010000 | 0000001 | 10000 | 0011 |
| 00000100000 | 0000010 | 10000, 00110 | 0110 |
| 00001000000 | 0000100 | 10000, 00110, 01100 | 1100 |
| 00010000000 | 0001001 | 10000, 00110, 01100, 11000 | 1011 |
| 00100000000 | 0010011 | 10000, 00110, 01100, 11000, 10110 | 0101 |
| 01000000000 | 0100110 | 10000, 00110, 01100, 11000, 10110, 01010 | 1010 |
| 10000000000 | 1001101 | 10000, 00110, 01100, 11000, 10110, 01010, 10100 | 0111 |

APPENDIX E. Convolutional Code Calculation

Part E.1. (2,1,3) convolutional encoder, code rate 1/2.

Figure E.1: encoder with register stages M1, M2 and M3 feeding two modulo-2 adders, where O1 = mod2(M1 + M2 + M3) and O2 = mod2(M1 + M3).

TABLE E.1 (2,1,3) Convolutional Encoder

| Current state s1 s2 | Previous register bits M1 M2 M3 | Input bit I1 | Current register bits M1 M2 M3 | Next state s1 s2 | Output bits O1 O2 |
|---|---|---|---|---|---|
| 00 | 000 | 0 | 000 | 00 | 00 |
| 00 | 000 | 1 | 100 | 10 | 11 |
| 01 | 010 | 0 | 001 | 00 | 11 |
| 01 | 010 | 1 | 101 | 10 | 00 |
| 10 | 100 | 0 | 010 | 01 | 10 |
| 10 | 100 | 1 | 110 | 11 | 01 |
| 11 | 110 | 0 | 011 | 01 | 01 |
| 11 | 110 | 1 | 111 | 11 | 10 |

Part E.2. Convolutional code state diagram derived from Table E.1.
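The transitions in Table E.1 can be captured by a single transition function, from which the eight branches of the state diagram, the encoded sequence traced in Part E.3 and the Viterbi search of Part E.4 all follow. The Python sketch below is a minimal illustration, assuming the encoder starts in state 00 and hard-decision decoding with Hamming distance as the path metric; `step`, `encode_sequence` and `viterbi_decode` are hypothetical names, not taken from the report.

```python
# Sketch of the (2,1,3), rate-1/2 encoder of Table E.1 plus a hard-decision
# Viterbi decoder. State (s1, s2) is (M1, M2) of the previous register contents.
# step, encode_sequence and viterbi_decode are hypothetical helper names.

STATES = [(0, 0), (0, 1), (1, 0), (1, 1)]


def step(state, bit):
    """Shift one input bit in; return (next_state, output pair 'O1O2')."""
    m1, m2, m3 = bit, state[0], state[1]  # register M1 M2 M3 after the shift
    o1 = (m1 + m2 + m3) % 2               # O1 = mod2(M1 + M2 + M3)
    o2 = (m1 + m3) % 2                    # O2 = mod2(M1 + M3)
    return (m1, m2), f"{o1}{o2}"


def encode_sequence(bits):
    """Encode a bit string starting from state 00; return the list of output pairs."""
    state, pairs = (0, 0), []
    for b in bits:
        state, pair = step(state, int(b))
        pairs.append(pair)
    return pairs


def viterbi_decode(received_pairs):
    """Keep one survivor per state (smallest Hamming distance); return (bits, distance)."""
    survivors = {(0, 0): (0, "")}  # state -> (Hamming distance, decoded input bits)
    for pair in received_pairs:
        next_survivors = {}
        for state, (dist, bits) in survivors.items():
            for bit in (0, 1):
                nxt, out = step(state, bit)
                d = dist + sum(a != b for a, b in zip(out, pair))
                # Strict '<' keeps the first candidate seen when distances tie.
                if nxt not in next_survivors or d < next_survivors[nxt][0]:
                    next_survivors[nxt] = (d, bits + str(bit))
        survivors = next_survivors
    dist, bits = min(survivors.values(), key=lambda s: s[0])
    return bits, dist


# The eight branches of the state diagram, written as state: I1 (O1 O2) -> next state.
for s in STATES:
    for bit in (0, 1):
        nxt, out = step(s, bit)
        print(f"S{s[0]}{s[1]}: {bit} ({out}) -> S{nxt[0]}{nxt[1]}")

print(" ".join(encode_sequence("1011101")))            # 11 10 00 01 10 01 00
print(viterbi_decode("01 10 00 01 10 01 00".split()))  # ('1011101', 1)
```

When two paths entering a state have equal distance, this sketch keeps whichever it examines first, so a few intermediate survivors can differ from the hand-worked steps of Part E.4; for this received sequence the final path with the smallest distance is the same.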
At each state, the input value and the corresponding output values are given as I1 (O1 O2).

Figure E.2 (state diagram)

| State | Input 0: (O1 O2), next state | Input 1: (O1 O2), next state |
|---|---|---|
| S00 | 0 (00), S00 | 1 (11), S10 |
| S01 | 0 (11), S00 | 1 (00), S10 |
| S10 | 0 (10), S01 | 1 (01), S11 |
| S11 | 0 (01), S01 | 1 (10), S11 |

Part E.3. Trellis mapping for the convolutional code obtained in Table 2.4, with the input sequence 1011101 and the encoded output sequence 11 10 00 01 10 01 00. Dashed lines represent an upward move on the trellis; solid lines represent a downward move.

Figure E.3 (trellis diagram: states 00, 01, 10 and 11 over times t0 to t7, each branch labeled with its output pair). The encoded sequence follows the states 00 (t0), 10, 01, 10, 11, 11, 01 and 10 (t7).

Part E.4. Decoding using the Viterbi method and the trellis diagram, for the received sequence 01 10 00 01 10 01 00, which contains a single error in the first pair (11 received as 01). For each state, TD is the code sequence of the surviving trellis path ending in that state and HD is its Hamming distance from the received sequence.

| Step | Received so far | State 00: TD (HD) | State 01: TD (HD) | State 10: TD (HD) | State 11: TD (HD) |
|---|---|---|---|---|---|
| 1 | 01 | 00 (1) | - | 11 (1) | - |
| 2 | 0110 | 0000 (2) | 1110 (1) | 0011 (2) | 1101 (3) |
| 3 | 011000 | 000000 (2) | 001110 (3) | 111000 (1) | 001101 (3) |
| 4 | 01100001 | 00000000 (3) | 11100010 (3) | 00000011 (3) | 11100001 (1) |
| 5 | 0110000110 | 1110001011 (4) | 1110000101 (3) | 1110001000 (4) | 1110000110 (1) |
| 6 | 011000011001 | 111000010111 (4) | 111000011001 (1) | 111000010100 (4) | 111000011010 (3) |
| 7 | 01100001100100 | 11100001100111 (3) | 11100001101001 (4) | 11100001100100 (1) | 11100001101010 (4) |

After Step 7 the survivor with the smallest Hamming distance ends in state 10 (HD = 1). Its code sequence, 11 10 00 01 10 01 00, is the transmitted sequence, so the decoder corrects the error and recovers the input 1011101.

REFERENCES

[BAKE, 03] Charles Baker, EETS 8301 Data Communications Course Material, 2003

[BATE, 01] Regis J. Bates and Donald W. Gregory, Voice and Data Communications Handbook, 4th Edition, McGraw-Hill, 2001

[BELL, 00] John C.
Bellamy, Digital Telephony, 3rd Edition, John Wiley & Sons, 2000

[BIRD, 03] Eric Bird, EETS 8306 Wireless, Cellular & Personal Telecommunication Course Material, 2003

[GOOD, 97] David J. Goodman, Wireless Personal Communications Systems, Addison-Wesley, 1997

[HAYE, 93] John P. Hayes, Introduction to Digital Logic Design, Addison-Wesley, 1993

[IMAI, 90] Hideki Imai, Essentials of Error Control Coding Techniques, Academic Press, 1990

[KURU, 97] Rajan Kuruppillai, Mahi Dontamsetti and Fil J. Consentino, Wireless PCS, McGraw-Hill, 1997

[LEVI, 03] Richard Levine, EETS 8302 Digital Telephony Course Material, 2003

[LINS, 70] S. Lin, An Introduction to Error Correcting Codes, Prentice-Hall, Englewood Cliffs, 1970

[LINT, 99] J. H. van Lint, Introduction to Coding Theory, 3rd Edition, Springer, 1999

[MINO, 91] Daniel Minoli, Telecommunications Technology Handbook, Artech House, 1991

[PETE, 72] W. W. Peterson and E. J. Weldon, Jr., Error Correcting Codes, 2nd Edition, The MIT Press, 1972

[SCHI, 86] Donald L. Schilling and Herbert Taub, Principles of Communication Systems, 2nd Edition, McGraw-Hill, 1986

[SERA, 03] Jila Seraf, EETS 8316 Wireless Networks Course Material, 2003

[STAL, 96] William Stallings, Data & Computer Communications, 6th Edition, Prentice-Hall, 1996

[UONB, 02] University of New Brunswick, EE4253 Digital Communications, Error correction and the Hamming code, 02 Apr 02, http://www.ee.unb.ca/tervo

ACRONYMS

| Acronym | Meaning |
|---|---|
| ACK | Acknowledgement |
| AMPS | Advanced Mobile Phone Service |
| ARQ | Automatic Repeat Request |
| BCH | Bose-Chaudhuri-Hocquenghem |
| BER | Bit Error Rate |
| BEC | Backward Error Correction |
| CDMA | Code Division Multiple Access |
| CDPD | Cellular Digital Packet Data |
| CRC | Cyclic Redundancy Check |
| D-AMPS | Digital Advanced Mobile Phone Service |
| DSL | Digital Subscriber Loop |
| DVB | Digital Video Broadcasting |
| DVD | Digital Versatile Disc |
| ESF | Extended Superframe |
| FCS | Frame Check Sequence |
| FEC | Forward Error Correction |
| GSM | Global System for Mobile Communications |
| HDLC | High-Level Data Link Control |
| HDTV | High Definition Television |
| ISDN | Integrated Services Digital Network |
| LAPB | Link Access Procedure Balanced |
| LAPD | Link Access Procedure for the D channel |
| LSB | Least Significant Bit |
| MAC | Medium Access Control |
| NACK | Negative Acknowledgement |
| OSI | Open Systems Interconnection |
| QCELP | Qualcomm Code Excited Linear Prediction |
| REJ | Reject |
| RPE-LPC | Regular Pulse Excited-Linear Predictive Coding |
| RF | Radio Frequency |
| RS | Reed-Solomon |
| SONET | Synchronous Optical Network |
| SREJ | Selective Reject |
| TCM | Trellis Coded Modulation |
| TCP | Transmission Control Protocol |
| TE | Terminal Equipment |
| VSELP | Vector Sum Excited Linear Prediction |
| XOR | Exclusive-OR |