Analysis Narrative

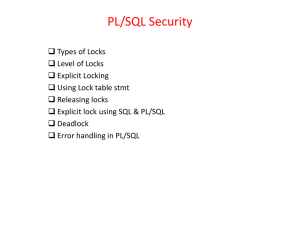

Granularity of Locks and Degrees of Consistency in a Shared Database
Authors: J.N. Gray, R.A. Lorie, G. R. Putzolu and I. L. Traiger
Presented by: Charles Braxmeier and Stuart Ness

Motivation

In a multi-user database, it is essential to provide a means of running transactions concurrently in order to increase the efficiency of the database. Concurrent processing allows a database to process multiple transactions at a given time. At the time this article was published, very simple policies providing concurrent processing had already been implemented, but they were not efficient in a massively concurrent system. Systems with fast concurrency policies did not take a fine-grained approach to locking, while policies with fine granularity incurred a large amount of overhead to create the locks. The research described in this paper was performed in the mid-1970s and was not done against current database standards; however, it demonstrated a fast approach that reduces overhead without giving up the ability to lock the database at different levels.

Problem Definition

Databases need an efficient means of supporting concurrent transactions. Early databases did not focus heavily on this issue because most of them started out in single-user mode. However, as large multi-user database systems became the norm, it became apparent that concurrent processing was needed. The problem with many early forms of concurrency control was that they used either coarse-grained locks (locking the whole database, file, or table that the transaction was using) or locks on individual records, which required a large amount of overhead to check each transaction every time a new request was issued. With record-level locks only, explicitly locking an entire sub-tree required a great deal of computation time, since there was no structure for implementing an inherited lock.

Contributions

The article reasons that lock granularity is important for providing efficient concurrent transactions. In addition, it explains that without finer-grained locking, a large concurrent system would not be practical. To deal with this, it provides two main mechanisms that allow for finer-grained resource locking. The first mechanism is to use an implicit structure to lock an entire sub-tree: all nodes underneath a particular node are implicitly locked at the parent's lock level, or higher. The second mechanism introduces an 'intention' mode, which allows a transaction locking a given node to mark that node's ancestors without taking a full lock on them. Marking the tree above the node in this way provides a quick (computationally inexpensive) means of checking whether two transactions can run concurrently. In addition to these two mechanisms, the paper also suggests ways to deal with preemption and deadlock. (Deadlock occurs in particular when two transactions that coexist under lower, compatible modes both wish to change their modes in incompatible ways.)

Concepts - 1

There are some key concepts in the paper that need to be understood. The first is the granularity of locks. As shown in the figure, the database is structured much like a tree. The article makes some assumptions about how the database is set up. It assumes that the database has the following levels: a database level, which contains multiple areas (folders), each of which contains many files, and each file contains individual records. This assumption mimics the basic layout of the file structure in computer systems. The structure is important for performing the locking mechanism. For instance, a lock at the database level would allow for a complete replacement of all existing files and folders.
A lock on an area would allow for the direct replacement of an entire file, and a lock on a file would allow for a mass upload of records. In addition to the tree structure available for locks, there are also different lock modes. There are five modes: exclusive mode (X, write mode) and share mode (S, read mode), both of which had already been in use, plus the new intention share (IS), intention exclusive (IX), and share and intention exclusive (SIX) modes. These last three modes provide a clear marker of what type of hold a sub-level has on the node in question. For instance, if a file is marked in IS mode, it can easily be derived that a record within it is held, or is about to be held, in S mode.

Concepts - 2

To understand how these modes fit together, note that only certain modes can coexist. The best way to think of this is that if a node is currently locked in one of these modes, then another transaction cannot lock that node in a non-compatible mode. (Explain the chart) While a transaction may need to lock a node at a certain level, the path is always locked from the root down to the desired node. This avoids a large amount of searching to determine who should be able to have access. If any node along the path is locked in an incompatible mode, the transaction is put onto a waiting queue. (Show the example of how the record would be locked) The article notes that leaf nodes cannot be locked in 'intention' mode, since they have no descendants for the intention to refer to.

Concepts - 3

The article then goes on to explain how implicit locking works. Implicit locking uses the idea that if an ancestor is held in a certain mode, then all of that ancestor's descendants must also be held in that mode or higher. For instance, if file F is locked in exclusive (write) mode, then that exclusive lock also applies to record R within file F.
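The five lock modes, the compatibility chart, and the root-to-node locking rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: the names `Mode`, `lock_plan`, `can_coexist`, and `implicit_mode` are hypothetical, nodes are represented as tuples of path components, and the chart encoded here is the standard compatibility matrix for these five modes.

```python
from enum import Enum


class Mode(Enum):
    IS = "IS"    # intention share
    IX = "IX"    # intention exclusive
    S = "S"      # share (read)
    SIX = "SIX"  # share and intention exclusive
    X = "X"      # exclusive (write)


# For each held mode, the modes another transaction may be granted on the same node.
COMPATIBLE = {
    Mode.IS: {Mode.IS, Mode.IX, Mode.S, Mode.SIX},
    Mode.IX: {Mode.IS, Mode.IX},
    Mode.S: {Mode.IS, Mode.S},
    Mode.SIX: {Mode.IS},
    Mode.X: set(),
}


def compatible(held, requested):
    """True if `requested` can be granted while another transaction holds `held`."""
    return requested in COMPATIBLE[held]


def lock_plan(path, mode):
    """Locks to request, root first, to obtain `mode` on the last node of `path`."""
    # Ancestors get IS for read-type requests and IX for write-type requests.
    intent = Mode.IS if mode in (Mode.IS, Mode.S) else Mode.IX
    ancestors = [(path[:i], intent) for i in range(1, len(path))]
    return ancestors + [(path, mode)]


def can_coexist(plan_a, plan_b):
    """True if the two plans never hold incompatible modes on the same node."""
    held = dict(plan_a)
    return all(node not in held or compatible(held[node], mode)
               for node, mode in plan_b)


def implicit_mode(plan, node):
    """Mode that `plan` gives `node` through an S, SIX, or X lock on it or an ancestor."""
    for locked, mode in plan:
        if node[:len(locked)] == locked:  # `locked` is `node` or one of its ancestors
            if mode is Mode.X:
                return Mode.X
            if mode in (Mode.S, Mode.SIX):
                return Mode.S
    return None


file_f = ("db", "area1", "F")                   # hypothetical node names
write_r1 = lock_plan(file_f + ("R1",), Mode.X)  # IX on db, area1, F; X on R1
read_r2 = lock_plan(file_f + ("R2",), Mode.S)   # IS on db, area1, F; S on R2
read_file = lock_plan(file_f, Mode.S)           # IS on db, area1; S on F
lock_file = lock_plan(file_f, Mode.X)           # IX on db, area1; X on F

print(can_coexist(write_r1, read_r2))              # True
print(can_coexist(write_r1, read_file))            # False
print(implicit_mode(lock_file, file_f + ("R1",)))  # Mode.X
```

The first two results show the point of the intention modes: a writer of one record and a reader of another record meet only at IX and IS on the shared ancestors, which are compatible, while a reader of the whole file conflicts with the writer at file F itself, without any record locks being inspected.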
Rather than specifying each record that is needed within F, explicitly imposing the exclusive lock at one level allows all sub-levels to be locked implicitly. The article then points out that, unfortunately, most database file systems are not truly hierarchical. It goes on to state that most will look more like a directed acyclic graph. In a database, this can be as simple as a record being accessible either via the file structure or via an index structure. Therefore, in order for a transaction to change such a record, for instance, not only would the file have to be locked, but the index would also have to be locked. The process the article provides is essentially to create a hierarchical lock, either explicit or implicit, on both the file and the index. (Go through example of how this is done)

Concepts - 4

The article then goes on to state that locks can become dynamic by being based on a particular value. This is simply an extension of the directed acyclic graph with one added condition: if a record moves from one index interval to another, then both intervals must be locked, along with their required paths. However, offering these new modes would not help unless the database were able to schedule and grant the new requests. In its basic form, the scheduler keeps granting requests whose modes are "allowable" (see the chart) until the next arriving request is no longer compatible. For example, suppose the node's current state is intention share and a transaction requests exclusive access. A queue is then formed with the exclusive request at its head. The scheduler waits until the currently granted locks are compatible or have all been released, and then grants the head request together with however many of the following requests are compatible with it. One problem that can occur is when a transaction holds one mode on a node and then requests a different mode.
Essentially, this is handled as follows: if the requested mode is lower than the mode currently held, nothing happens; if it is higher, the scheduler checks whether the node's current state will support the higher mode. If it does, the change is made immediately; if not, the transaction must wait, keeping its current mode, until the state allows the requested one. This can cause deadlock if multiple transactions request higher modes and sit waiting for the state to clear, because while the transactions wait, they do not give up their current locks. The article says that at this point one or more of the mode change requests would have to be preempted to break the deadlock.

Validation Methodology

This is more of an informal theory paper: it offers no proofs and instead shares findings from an implementation on an IBM research lab system. This essentially amounts to showing that a working proof-of-concept system exists. Unfortunately, it lacks a formal proof or any statistical evidence of improved performance.

Assumptions

The article makes a few assumptions. The first is that the database can be broken into some hierarchical form, whether that is a strict hierarchy or a directed acyclic graph. The second is that the database technology is that of 1975. Obviously, it is impossible to predict the future, but this assumption means that the approach does not necessarily carry over to all database types (relational, structured, etc.). If this assumption is removed, the techniques may not hold up; however, the basic principles of this locking mechanism would remain applicable as long as a hierarchical structure is imposed. If a structure cannot be imposed, as with more complex queries, this locking mechanism may lose its advantages.

Rewrite Changes

If this paper were rewritten, it would need to reflect the current state of databases.
In addition, comparisons between this method and other methods would be beneficial to show the concurrency vs. overhead tradeoffs and how this method fits among the available options for concurrency control. Finally, the process and ideas are still fundamentally realizable given the hierarchical systems currently used for storage. Preserving the notion of the groups, the scheduling, and the hierarchical system would be beneficial for that part of the database system.
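As a concrete illustration of the scheduling and mode-change rules summarized under Concepts - 4, the following Python sketch models the requests on a single node. It is a simplified illustration under stated assumptions, not the paper's implementation: the class `NodeLock` and its methods are hypothetical names, a mode change is resolved to the weakest mode covering both the held and the requested mode (so IX plus S becomes SIX), and retrying waiting mode changes before queued new requests is a choice made for this sketch.

```python
from collections import deque
from itertools import combinations

# For each held mode, the modes another transaction may be granted on the same node.
COMPATIBLE = {
    "IS": {"IS", "IX", "S", "SIX"},
    "IX": {"IS", "IX"},
    "S": {"IS", "S"},
    "SIX": {"IS"},
    "X": set(),
}


def supremum(a, b):
    """Weakest mode that is at least as strong as both a and b."""
    pair = {a, b}
    if "X" in pair:
        return "X"
    if "SIX" in pair or pair == {"IX", "S"}:
        return "SIX"
    if "IX" in pair:
        return "IX"
    if "S" in pair:
        return "S"
    return "IS"


class NodeLock:
    """Request scheduling for one node (hypothetical helper for illustration)."""

    def __init__(self):
        self.granted = {}       # transaction -> mode currently held
        self.waiting = deque()  # new requests (transaction, mode) in arrival order
        self.converting = {}    # transaction -> stronger mode it is waiting for

    def _fits(self, txn, mode):
        # The mode must be compatible with what every other transaction holds.
        return all(mode in COMPATIBLE[held]
                   for other, held in self.granted.items() if other != txn)

    def request(self, txn, mode):
        # Grant only if nothing is queued ahead and the mode fits the granted group.
        if not self.waiting and self._fits(txn, mode):
            self.granted[txn] = mode
            return "granted"
        self.waiting.append((txn, mode))
        return "waiting"

    def convert(self, txn, mode):
        # Mode change requested by a transaction that already holds the node.
        target = supremum(self.granted[txn], mode)
        if target == self.granted[txn]:
            return "unchanged"  # held mode is already strong enough
        if self._fits(txn, target):
            self.granted[txn] = target
            return "granted"
        self.converting[txn] = target  # keeps its current mode while waiting
        return "waiting"

    def release(self, txn):
        # Also used to preempt a transaction in order to break a deadlock.
        self.granted.pop(txn, None)
        self.converting.pop(txn, None)
        for t, target in list(self.converting.items()):
            if self._fits(t, target):
                self.granted[t] = target
                del self.converting[t]
        # Admit queued requests in arrival order until one does not fit.
        while self.waiting and self._fits(*self.waiting[0]):
            t, m = self.waiting.popleft()
            self.granted[t] = m

    def deadlocked(self):
        """Pairs of waiting mode changes that each conflict with the other's held mode."""
        return [(a, b) for a, b in combinations(sorted(self.converting), 2)
                if self.converting[a] not in COMPATIBLE[self.granted[b]]
                and self.converting[b] not in COMPATIBLE[self.granted[a]]]


node = NodeLock()
node.request("T1", "S")
node.request("T2", "S")
node.convert("T1", "X")   # waits: T2 still holds S
node.convert("T2", "X")   # waits: T1 still holds S
print(node.deadlocked())  # [('T1', 'T2')]
node.release("T2")        # preempt T2 to break the deadlock
print(node.granted)       # {'T1': 'X'}
```

The usage at the end reproduces the deadlock described above: both transactions keep their S locks while waiting for X, so neither can proceed until one of them is preempted.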