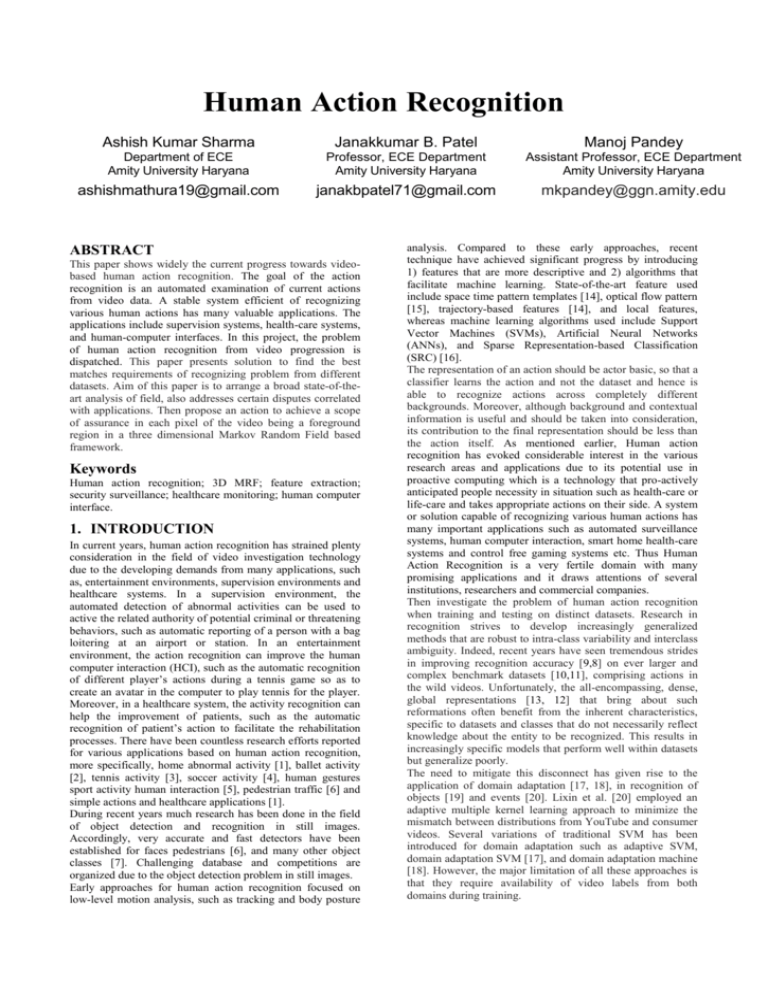

Human Action Recognition

Ashish Kumar Sharma
Department of ECE, Amity University Haryana
ashishmathura19@gmail.com

Janakkumar B. Patel
Professor, ECE Department, Amity University Haryana
janakbpatel71@gmail.com

Manoj Pandey
Assistant Professor, ECE Department, Amity University Haryana
mkpandey@ggn.amity.edu

ABSTRACT

This paper broadly surveys current progress in video-based human action recognition. The goal of action recognition is the automated analysis of ongoing actions from video data. A robust system capable of recognizing various human actions has many valuable applications, including surveillance systems, health-care systems, and human-computer interfaces. In this paper, the problem of human action recognition from video sequences is addressed. The paper presents solutions that best match the requirements of the recognition problem across different datasets. Its aim is to provide a broad state-of-the-art analysis of the field and to address certain challenges associated with its applications. It then proposes a method for estimating the confidence that each pixel of the video belongs to a foreground region, in a three-dimensional Markov Random Field (3D MRF) based framework.

Keywords

Human action recognition; 3D MRF; feature extraction;

security surveillance; healthcare monitoring; human computer

interface.

1. INTRODUCTION

In recent years, human action recognition has attracted considerable attention in the field of video analysis technology due to growing demand from many applications, such as entertainment environments, surveillance environments, and healthcare systems. In a surveillance environment, the automated detection of abnormal activities can be used to alert the relevant authority to potentially criminal or threatening behavior, for example by automatically reporting a person with a bag loitering at an airport or station. In an entertainment environment, action recognition can improve human-computer interaction (HCI), for example by automatically recognizing a player's actions during a tennis game so as to create an avatar in the computer that plays tennis for the player. Moreover, in a healthcare system, activity recognition can aid patient recovery, for example by automatically recognizing a patient's actions to facilitate rehabilitation. Numerous research efforts have been reported for various applications based on human action recognition, more specifically home abnormal activity [1], ballet activity [2], tennis activity [3], soccer activity [4], human gestures, sport activity, human interaction [5], pedestrian traffic [6], and simple actions and healthcare applications [1].

In recent years, much research has been done on object detection and recognition in still images. Accordingly, very accurate and fast detectors have been developed for faces, pedestrians [6], and many other object classes [7]. Challenging databases and competitions have been organized around the object detection problem in still images. Early approaches to human action recognition focused on low-level motion analysis, such as tracking and body posture analysis. Compared to these early approaches, recent techniques have achieved significant progress by introducing 1) features that are more descriptive and 2) algorithms that facilitate machine learning. State-of-the-art features include space-time pattern templates [14], optical flow patterns [15], trajectory-based features [14], and local features, whereas the machine learning algorithms used include Support Vector Machines (SVMs), Artificial Neural Networks (ANNs), and Sparse Representation-based Classification (SRC) [16].

The representation of an action should be actor-centric, so that a classifier learns the action rather than the dataset and is therefore able to recognize actions across completely different backgrounds. Moreover, although background and contextual information is useful and should be taken into account, its contribution to the final representation should be smaller than that of the action itself. As mentioned earlier, human action recognition has attracted considerable interest across research areas and applications because of its potential use in proactive computing, a technology that anticipates people's needs in situations such as health care or life care and takes appropriate actions on their behalf. A system capable of recognizing various human actions has many important applications, such as automated surveillance systems, human-computer interaction, smart-home health-care systems, and controller-free gaming systems. Human action recognition is thus a very fertile domain with many promising applications, and it draws the attention of numerous institutions, researchers, and commercial companies.

We then investigate the problem of human action recognition when training and testing on distinct datasets. Research in recognition strives to develop increasingly generalized methods that are robust to intra-class variability and inter-class ambiguity. Indeed, recent years have seen tremendous strides in improving recognition accuracy [9, 8] on ever larger and more complex benchmark datasets [10, 11] comprising actions in videos captured in the wild. Unfortunately, the all-encompassing, dense, global representations [13, 12] that bring about such improvements often benefit from inherent characteristics, specific to datasets and classes, that do not necessarily reflect knowledge about the entity to be recognized. This results in increasingly specific models that perform well within datasets but generalize poorly.

The need to mitigate this disconnect has given rise to the application of domain adaptation [17, 18] in the recognition of objects [19] and events [20]. Duan et al. [20] employed an adaptive multiple kernel learning approach to minimize the mismatch between the distributions of YouTube and consumer videos. Several variations of the traditional SVM have been introduced for domain adaptation, such as adaptive SVM, domain adaptation SVM [17], and the domain adaptation machine [18]. However, the major limitation of all these approaches is that they require video labels from both domains to be available during training.

There is no question that these techniques improve

performance across datasets, and are significant in their own

right, but it is worth asking whether the same actions in

distinct datasets are truly representative of different domains

or if their specific characteristics are distracting biases that

emanate from data collection criteria and processes. This issue was raised in recent work by Torralba and Efros [21] for the problems of image classification and object detection. They empirically established that most object recognition datasets represent closed visual world views and are biased toward specific poses, backgrounds, locations, etc. In this study, we show that action recognition datasets too are biased towards background scenes, a characteristic that should ideally be inconsequential to human action classes.

Historically, taking a page from image analysis, several video

interest point detectors were introduced, including space time

interest points, Dollar interest points, and spatiotemporal

Hessian detector, etc. The obvious idea was to estimate local

descriptors only at these important locations and ignore the

rest of the video. Representations based on local descriptors

estimated at interest points showed promising results on

simple datasets such as Weizmann and KTH. Even though

these datasets are now considered easier, their generally static,

mostly uniform scene backgrounds, coupled with interest

point detection, ensured a true action representation, largely

devoid of background information. In recent years, the

difficulty in obtaining meaningful locations of interest in

contemporary datasets, coupled with the lack of evaluation of

action localization, has resulted in a shift in research focus

away from interest point detection. Indeed, it has been shown experimentally that dense sampling of feature descriptors generally outperforms interest point [12] and other detectors (human, foreground, etc.) [22]. Several methods have even

been proposed to recognize actions in single images instead of

videos. It is then safe to assume that background scene

information is a key component of the final representation that

allows higher quantitative performance, but in the process

‘learns the dataset’ rather than the action. We maintain that

the goal of action representation schemes and efforts to collect

larger datasets should be to increase intra-class generalization

for which cross-dataset recognition is a reasonable metric.

The aim of this review paper is to analyze the problem of discriminating between similar actions, such as jumping-sitting, jogging-walking, and boxing-stretching, and to propose several measures that quantify the effect of scene and background statistics on action class discriminability. It also proposes methods for obtaining foreground-specific action representations using appearance, motion, and saliency in a 3D MRF based framework.

This paper studies the effect of context on the action recognition problem, in terms of both motion features (action) and appearance features (scene). Here, by context we mean anything in the video frames other than the actor. To classify an action, what we are fundamentally interested in is the movement of the actor's body.

Furthermore, we extract different features from the context (motion/shape) and study the effectiveness of each of these features for scene modeling (with the actor excluded). This review focuses on evaluating the effect of these background features (the scene model). In other words, we assess only the gain in action classification that can be obtained by excluding background motion features and by adding static background features, such as shape, as a description of the scene context.

2. FEATURE EXTRACTION AND REPRESENTATION

The important characteristics of image frames are extracted and represented systematically as features. Feature extraction has a crucial influence on recognition performance, so it is essential to select and represent the features of image frames properly. In a video sequence, features that capture the relationship between space and time are known as space-time volumes (STV). In addition to spatial and temporal information, the discrete Fourier transform (DFT) of image frames mainly captures the spatial variation of image intensity. Space-time volumes and the discrete Fourier transform are global features, extracted by considering the whole image. However, global features are sensitive to noise, occlusion, and viewpoint variation. Instead of using global features, some methods consider local image patches as local features. Local features are designed to be more robust to noise and occlusion, and possibly to scale and rotation. Besides global and local features, other methods directly or indirectly model the human body, so that pose estimation and body-part tracking techniques can be applied. Furthermore, the coordinates obtained from body modeling can be converted into lower-dimensional or more discriminative features, such as polar coordinate representations, Boolean features, and geometric relational features (GRF), for effective recognition.
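As an illustration of a global DFT feature, the following is a minimal sketch that assumes a grayscale frame stored as a 2D NumPy array. The function name `dft_feature` and the choice to keep only a small block of low-frequency log-magnitudes are illustrative assumptions, not a descriptor taken from the surveyed work.

```python
import numpy as np

def dft_feature(frame, keep=4):
    """Global feature: log-magnitudes of the lowest spatial frequencies.

    frame: 2D array (grayscale), each side at least 2 * keep pixels.
    Returns a vector of length (2 * keep) ** 2.
    """
    # 2D DFT with the zero-frequency (DC) term shifted to the centre.
    spectrum = np.fft.fftshift(np.fft.fft2(np.asarray(frame, dtype=float)))
    magnitude = np.log1p(np.abs(spectrum))
    cy, cx = magnitude.shape[0] // 2, magnitude.shape[1] // 2
    # Keep a (2*keep) x (2*keep) block around the DC term.
    block = magnitude[cy - keep:cy + keep, cx - keep:cx + keep]
    return block.ravel()

feature = dft_feature(np.ones((16, 16)))
```

Because every coefficient depends on all pixels of the frame, a local change such as an occluder alters the whole vector, which is the sensitivity of global features noted above.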

3. BACKGROUND DISCRIMINABILITY

IN ACTION DATASETS

A recognition dataset should be representative of our

surrounding visual world, and therefore as diverse as possible.

Besides illumination, clutter, etc., the sample actions should

vary in terms of actor viewpoint, pose, speed, and articulation.

The background should be diverse as well, but not

discriminative, i.e., it should not aid in recognition of the

action class, or it would limit the generalizability of the class

model, and consequently result in worse cross-dataset

recognition than within dataset. In this section, we quantify

the discriminative power of background scenes in a few well

known action datasets using two methods.

First, we computed motion features on only the background

regions to perform recognition within datasets, and second,

we measured class-wise confusion within datasets using

a global scene descriptor.

4. FOREGROUND SPECIFIC ACTION

REPRESENTATION

The problems of foreground-background segmentation and of human or actor detection are very challenging, all the more so in the unconstrained videos that make up the more recent action datasets. Since the goal is to recognize actions rather than to perform segmentation or actor detection, the framework does not attempt to label each pixel or region as foreground or background. Instead, we estimate the confidence that each pixel is part of the foreground, and use it directly to obtain the codebook as well as the video representation.

4.1. MOTION GRADIENTS

Action is mainly characterized by the motion of moving parts. We use this important cue to assign high confidence to locations undergoing articulated motion in a video.

However, since most realistic datasets involve a moving camera, the simple optical flow magnitude can be high for the background as well. Hence, we use the Frobenius norm of the optical flow gradients. The motion gradient based foreground confidence, $f_m$, is defined as:
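The displayed equation is not reproduced in this text. A form consistent with the description above and with the symbol definitions that follow, offered as an assumption rather than a quotation, is the Gaussian-smoothed Frobenius norm of the optical flow gradient matrix:

$$
f_m(x, y) = g(x, y) * \sqrt{u_x^2 + v_x^2 + u_y^2 + v_y^2}
$$

Here $(u, v)$ denote the horizontal and vertical optical flow components and $*$ denotes 2D convolution. Applying $g$ by convolution is an assumption.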

where $u_x$, $v_x$, $u_y$, and $v_y$ are the horizontal and vertical gradients of the optical flow, respectively, and $g$ is a 2D Gaussian filter with fixed variance.

Optical flow, which denotes the displacement of the same scene point in the image sequence at different time instants, is another category of approaches to segmentation with a moving camera.

4.4. FOREGROUND WEIGHTED REPRESENTATION

This paper proposes to modify the bag-of-words representation of a video in several important ways. The underlying goal is to represent the video so that features corresponding to the actual action, that is, the foreground, contribute to the vocabulary as well as to the resulting representation, while those on the background have minimal effect when training models or comparing videos. The ideas are described in the following.
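The foreground-weighted histogram can be sketched as follows. This is a minimal illustration under stated assumptions: descriptors are assigned to their nearest codeword, and each descriptor votes with its foreground confidence instead of a unit count. The helper names `assign_words` and `weighted_histogram` are hypothetical, and the weighting of the vocabulary itself during clustering is not shown.

```python
import numpy as np

def assign_words(descriptors, codebook):
    """Index of the nearest codeword (Euclidean) for each descriptor."""
    diffs = descriptors[:, None, :] - codebook[None, :, :]
    return (diffs ** 2).sum(axis=-1).argmin(axis=1)

def weighted_histogram(words, confidences, n_words):
    """Bag-of-words histogram where each vote is a foreground confidence."""
    hist = np.bincount(words, weights=confidences, minlength=n_words)
    total = hist.sum()
    return hist / total if total > 0 else hist

# Toy data: two codewords, four descriptors, confidences in [0, 1].
codebook = np.array([[0.0, 0.0], [10.0, 10.0]])
descriptors = np.array([[0.0, 1.0], [1.0, 0.0], [9.0, 9.0], [10.0, 11.0]])
confidences = np.array([0.9, 0.8, 0.1, 0.2])

words = assign_words(descriptors, codebook)
hist = weighted_histogram(words, confidences, n_words=len(codebook))
```

With unit weights the two bins would be equal. With the confidences above, the bin fed by high-confidence descriptors dominates, which is the intended effect of down-weighting the background.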

4.2. COLOR GRADIENTS

In many videos, the actor has different color and appearance

than the background, while the background (such as sky or

floor) has relatively uniform color distribution.

Hence, the color gradients can be used as a cue towards

estimating the confidence in location of actor and object

boundaries, while resulting in low responses for background

regions with uniform colors.

Specifically, we compute the color gradient based confidence in observing a foreground pixel, $f_c$, using the Frobenius norm of the color gradients in LAB color space, given as:
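The displayed equation is missing from this text. By analogy with the motion cue, and assuming that the vertical gradient $(L_y, a_y, b_y)$ is defined in the same way as the horizontal one, a consistent form would be:

$$
f_c(x, y) = \sqrt{L_x^2 + a_x^2 + b_x^2 + L_y^2 + a_y^2 + b_y^2}
$$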

Where (Lx, ax, bx) is the horizontal gradient of the color vector

at (x, y).
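Both gradient-based cues reduce to the same operation: a per-pixel Frobenius norm over the spatial gradients of a multi-channel array (two flow channels for motion, three LAB channels for color). The sketch below assumes the optical flow and the LAB conversion are computed elsewhere; all function names and the NumPy-only Gaussian filter are illustrative assumptions.

```python
import numpy as np

def gradient_frobenius(channels):
    """Per-pixel Frobenius norm of the spatial gradients of an (H, W, C) array."""
    gy, gx = np.gradient(np.asarray(channels, dtype=float), axis=(0, 1))
    return np.sqrt((gx ** 2 + gy ** 2).sum(axis=2))

def gaussian_smooth(image, sigma=1.0):
    """Separable Gaussian blur with edge padding (NumPy only)."""
    radius = max(1, int(3 * sigma))
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-offsets ** 2 / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(image, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def motion_confidence(flow, sigma=1.0):
    """Motion cue: smoothed gradient norm of an (H, W, 2) flow field (u, v)."""
    return gaussian_smooth(gradient_frobenius(flow), sigma)

def color_confidence(lab):
    """Color cue: gradient norm of an (H, W, 3) image already in LAB space."""
    return gradient_frobenius(lab)

# A uniform flow field (pure camera translation) has zero flow gradients.
camera_pan = np.ones((8, 8, 2))
pan_conf = motion_confidence(camera_pan)

# A vertical color edge responds only around the boundary columns.
lab = np.zeros((6, 6, 3))
lab[:, 3:, 0] = 50.0
edge_conf = color_confidence(lab)
```

The uniform-flow case shows why gradients are preferred over raw flow magnitude for moving cameras: the magnitude is high everywhere, while the gradient-based confidence is zero.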

4.3. SALIENCY

We use visual saliency as the third cue for estimating the confidence in observing a foreground pixel.

In sports videos, the player receives most of the visual attention and hence represents the most salient part of the video. A similar observation applies to professional moving-camera videos that follow objects with distinct appearance amid relatively homogeneous backgrounds. Although our ultimate goal is to estimate foreground confidences for videos, video- or motion-based saliency methods do not always produce reasonable outputs because of large camera motion and noisy optical flow. Instead, we use graph-based visual saliency to capture the salient regions in each frame individually. We chose this method for its computational efficiency, its evident capability in finding salient regions, and its natural interpretation as a decomposition of the image into a neural network.

The method first computes feature maps: contrast, luminance, and four orientation maps corresponding to orientations θ ∈ {0°, 45°, 90°, 135°} obtained with Gabor filters, all at multiple spatial scales. In the activation step, a fully connected directed graph is built, where the edge weight between two nodes, corresponding to pixel locations (i, j) and (p, q), is given as:
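The edge-weight equation is missing from this text. In the standard graph-based visual saliency formulation, the weight is the product of a feature dissimilarity and a spatial proximity term. Assuming the text follows that formulation, with ϕ playing the role of the Gaussian width, it would read:

$$
w_1\big((i,j),(p,q)\big) = \left|\log\frac{M(i,j)}{M(p,q)}\right| \cdot \exp\!\left(-\frac{(i-p)^2 + (j-q)^2}{2\phi^2}\right)
$$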

where M(i, j) represents the feature value at (i, j), and ϕ is a free parameter. A Markov chain is defined on the graph, and the stationary distribution of the chain is computed and treated as an activation map, A(p, q). A new graph is then defined on all pixels, with the edge weights being:
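The second edge-weight equation is also missing from this text. In the standard graph-based visual saliency formulation, the normalization graph uses $w_2((i,j),(p,q)) = A(p,q)\,\exp\!\big(-((i-p)^2+(j-q)^2)/(2\phi^2)\big)$, and this is assumed to be the intended form. The activation step described above can be sketched as follows for a small, non-negative feature map. The function name `gbvs_activation`, the default parameters, and the use of a lazy chain to guarantee convergence are illustrative assumptions.

```python
import numpy as np

def gbvs_activation(feature_map, phi=2.0, n_iter=200, eps=1e-12):
    """Stationary distribution of a Markov chain built on a feature map.

    Edge weight between pixels = |log ratio of features| * Gaussian proximity.
    Builds a dense (H*W) x (H*W) matrix, so only suitable for small maps.
    """
    h, w = feature_map.shape
    n = h * w
    ys, xs = np.mgrid[0:h, 0:w]
    coords = np.stack([ys.ravel(), xs.ravel()], axis=1).astype(float)
    m = np.asarray(feature_map, dtype=float).ravel() + eps

    dissimilarity = np.abs(np.log(m[:, None] / m[None, :]))
    sq_dist = ((coords[:, None, :] - coords[None, :, :]) ** 2).sum(axis=-1)
    weights = dissimilarity * np.exp(-sq_dist / (2.0 * phi ** 2))

    # Row-normalize into transition probabilities; nodes without any
    # outgoing weight fall back to uniform transitions.
    row_sum = weights.sum(axis=1, keepdims=True)
    transition = np.where(row_sum > 0, weights / np.maximum(row_sum, eps), 1.0 / n)

    # Lazy chain: same stationary distribution, but guaranteed aperiodic.
    transition = 0.5 * (np.eye(n) + transition)

    pi = np.full(n, 1.0 / n)
    for _ in range(n_iter):  # power iteration
        pi = pi @ transition
    return pi.reshape(h, w)

feature_map = np.ones((5, 5))
feature_map[2, 3] = 10.0  # one pixel that differs from its surroundings
activation = gbvs_activation(feature_map)
```

Mass accumulates at nodes that are highly dissimilar from their neighbors, so the single outlier pixel in the toy map receives the largest activation.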

FOREGROUND CONFIDENCE BASED

HISTOGRAM DECOMPOSITION:

This paper shows that despite weighting the influence of

features on the histogram, the accumulative effect of

background features on different bins of the histogram can

sum up to be significant. This is because of the fact that a

significant number of pixels in the video, and consequently

densely sampled descriptors, can have relatively low

foreground confidence. In other words, the number of high

confidence features contributing to the histogram is far less

than those with low confidence of being foreground. This

would not be a problem if features with high and low

confidences were quantized to different words, but that may

not always be the case, especially due to the weighted

codebook.

If the foreground and background regions were divided into

two distinct classes (binary labeled), it would be

straightforward to compute two different histograms for each

type of region. However, given that it is desirable to avoid

thresholding and binarization of foreground confidence,

we propose a novel alternative solution.
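The text does not spell out the alternative. One generic way to avoid thresholding, shown here purely as an assumption and not as the proposed method, is soft assignment: each descriptor contributes its confidence to a foreground histogram and the remainder to a background histogram. The function name `decompose_histogram` is hypothetical.

```python
import numpy as np

def decompose_histogram(words, confidences, n_words):
    """Split a bag-of-words histogram into soft foreground/background parts.

    words: integer codeword index per descriptor.
    confidences: foreground confidence in [0, 1] per descriptor.
    """
    confidences = np.asarray(confidences, dtype=float)
    foreground = np.bincount(words, weights=confidences, minlength=n_words)
    background = np.bincount(words, weights=1.0 - confidences, minlength=n_words)
    return foreground, background

# Toy data: many low-confidence descriptors share word 0 with one
# high-confidence descriptor.
toy_words = np.array([0, 0, 0, 0, 0, 1])
toy_conf = np.array([0.9, 0.1, 0.1, 0.1, 0.1, 0.8])
fg_hist, bg_hist = decompose_histogram(toy_words, toy_conf, n_words=2)
```

In the toy data, the background part of word 0 is kept separate from its foreground part, so the many low-confidence votes no longer mix with the single high-confidence one.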

5. EXPERIMENTAL RESULT

As reported in Table 1, the quantitative results conclusively

demonstrate that the proposed framework for estimation of

foreground confidence is meaningful, and the consistently

higher recognition accuracies serve as an empirical

verification of our conjecture that the dataset specific

background scenes are one of the main causes of deterioration

in recognition accuracy across datasets. Moreover, when

training and testing on distinct datasets, the histogram

decomposition and the newly proposed corresponding

similarity measure perform better than even the foreground

weighted vocabulary and histograms, for all cross-dataset

experiments.

| Training | Testing | Weighted | Histogram decomposition |
|---|---|---|---|
| Olympic sports | Olympic sports | 78.85 | 71.67 |
| UCF 50 | Olympic sports | 38.46 | 46.60 |
| Olympic sports | UCF 50 | 34.45 | 39.95 |

Table 1.

6. CONCLUSION

Although recent progress in video-based human activity recognition has been encouraging, there are still some apparent performance issues that make real-world deployment challenging. More specifically:

The viewpoint issue remains the main challenge for human action recognition. In real-world activity recognition systems, video sequences are usually observed from arbitrary camera viewpoints; therefore, system performance needs to be invariant to camera viewpoint. However, most recent algorithms assume constrained viewpoints, for example requiring the person to be in front view (i.e., facing the camera) or side view. Some effective ways to address this problem have been proposed, such as using multiple cameras to capture sequences from different views and combining them as training data, or applying a self-adaptive calibration and viewpoint determination algorithm in advance. Sophisticated viewpoint-invariant algorithms for monocular videos should be the ultimate objective for overcoming these issues.

Since most moving human segmentation algorithms are still

based on background subtraction, which requires a reliable

background model, a background model is needed that can be

adaptively updated and can handle some moving background

or dynamic cluttered background, as well as inconsistent

lighting conditions. Learning how to effectively deal with the

dynamic cluttered background as well as how to

systematically understand the context (when, what, where,

etc.), should enable better and more reliable segmentation of

human objects. Another important challenge requiring

research is how to handle occlusion, in terms of body–body

part, human–human, human–objects, etc.

Natural human appearance can change due to many factors

such as walking surface conditions (e.g., hard/soft,

level/stairs, etc.), clothing (e.g., long dress, short skirt, coat,

hat, etc.), footgear (e.g., stockings, sandals, slippers, etc.),

object carrying (e.g., handbag, backpack, briefcase, etc.). The

change of human action appearance leads researchers to a new

research direction, i.e., how to describe the activities that are

less sensitive to appearance.

In conclusion, this review provides an extensive survey of existing research efforts on video-based human action recognition systems, covering all critical modules of these systems, such as object segmentation, feature extraction and representation, and activity detection and classification. Moreover, three application domains of video-based human activity recognition are reviewed: surveillance, entertainment, and healthcare. Despite the great progress made on the subject, many challenges are raised herein, together with the related technical issues that need to be resolved for real-world practical deployment. Furthermore, generating

descriptive sentences from images or videos is a further

challenge, wherein objects, actions, activities, environment

(scene) and context information are considered and integrated

to generate descriptive sentences conveying key.

7. REFERENCES

[1] Duong, T.V.; Bui, H.H.; Phung, D.Q.; Venkatesh, S. Activity Recognition and Abnormality Detection with the Switching Hidden Semi-Markov Model. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 838–845.

[2] Blank, M.; Gorelick, L.; Shechtman, E.; Irani, M.; Basri, R. Actions as Space-time Shapes. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV), Beijing, China, 17–21 October 2005; Volume 2, pp. 1395–1402.

[3] Ke, Y.; Sukthankar, R.; Hebert, M. Spatio-temporal Shape and Flow Correlation for Action Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

[4] Lu, W.; Little, J.J. Simultaneous tracking and action recognition using the PCA-HOG descriptor. In Proceedings of the 3rd Canadian Conference on Computer and Robot Vision, Quebec, PQ, Canada, 7–9 June 2006; p. 6.

[5] Du, Y.; Chen, F.; Xu, W. Human interaction representation and recognition through motion decomposition. IEEE Signal Process. Lett. 2007, 14, 952–955.

[6] Navneet Dalal and Bill Triggs. Histograms of oriented gradients for human detection. 2005.

[7] Pedro Felzenszwalb, David McAllester, and Deva Ramanan. A discriminatively trained, multiscale, deformable part model. 2008.

[8] H. Wang, A. Kläser, C. Schmid, and C. Liu. Action recognition by dense trajectories. In CVPR, 2011.

[9] S. Sadanand and J. J. Corso. Action bank: A high-level representation of activity in video. In CVPR, 2012.

[10] K. K. Reddy and M. Shah. Recognizing 50 human action categories of web videos. MVA, 24(5):971–981, 2012.

[11] H. Kuehne, H. Jhuang, E. Garrote, T. Poggio, and T. Serre. HMDB: a large video database for human motion recognition. In ICCV, 2011.

[12] H. Wang, A. Kläser, C. Schmid, and C. Liu. Action recognition by dense trajectories. In CVPR, 2011.

[13] H. Wang, M. Ullah, A. Kläser, I. Laptev, and C. Schmid. Evaluation of local spatio-temporal features for action recognition. In BMVC, 2009.

[14] M. Raptis and S. Soatto, "Tracklet Descriptors for Action Modeling and Video Analysis," European Conf. on Computer Vision (ECCV), pp. 1-14, 2010.

[15] Z. Lin, Z. Jiang and L. S. Davis, "Recognizing Action by Shape-Motion Prototype Trees," IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 444-451, 2009.

[16] T. Guha and R. K. Ward, "Learning Sparse Representations for Human Action Recognition," IEEE Trans. Pattern Anal. Mach. Intell., vol. 34, no. 8, pp. 1576-1588, August 2012.

[17] L. Bruzzone and M. Marconcini. Domain adaptation problems: A DASVM classification technique and a circular validation strategy. PAMI, 32(5):770–787, 2010.

[18] L. Duan, D. Xu, and I. W. Tsang. Domain adaptation from multiple sources: A domain-dependent regularization approach. IEEE Trans. NNLS, 23(3):504–518, 2012.

[19] K. Saenko, B. Kulis, M. Fritz, and T. Darrell. Adapting visual category models to new domains. In ECCV, 2010.

[20] L. Duan, D. Xu, I. W. Tsang, and J. Luo. Visual event recognition in videos by learning from web data. In CVPR, 2010.

[21] A. Torralba and A. Efros. Unbiased look at dataset bias. In CVPR, 2011.

[22] A. Kläser, M. Marszałek, I. Laptev, and C. Schmid. Will person detection help bag-of-features action recognition? Technical Report RR-7373, INRIA Grenoble - Rhône-Alpes, 2010.