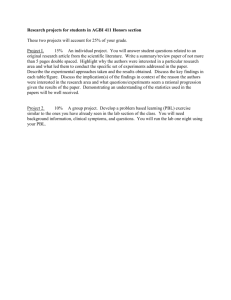

EBLIP Critical Appraisal Checklist

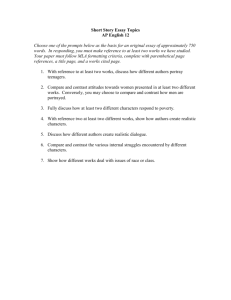


EBLIP Critical Appraisal Checklist, Lindsay Glynn, MLIS, Memorial University of Newfoundland, lglynn@mun.ca

Response options: Yes (Y), No (N), Unclear (U), N/A

Section A: Population

Is the study population representative of all users, actual and eligible, who might be included in the study?
There is no clear explanation of why the population was limited to undergraduates rather than including graduate students, faculty and staff, so it is not clear whether all eligible participants were included. Also, the undergraduate population is not described in any detail.

Are inclusion and exclusion criteria definitively outlined?
The authors specify only that they contacted a “random sample”, with no information as to how the sample was selected.

Is the sample size large enough for sufficiently precise estimates?
The authors do not list the sample size.

Is the response rate large enough for sufficiently precise estimates?
Although the authors clearly state that 95 students participated in the study, the sample size was not clearly indicated, so the response rate is unclear.

Is the choice of population bias-free?
This is unclear, as the authors provide few details describing their population, and little information as to how the population was selected and recruited.

If a comparative study:
Were participants randomized into groups?
Were the groups comparable at baseline?
If groups were not comparable at baseline, was incomparability addressed by the authors in the analysis?

Was informed consent obtained?
Not specified in the article.

Section B: Data Collection

Are data collection methods clearly described?
The research methodology is clearly described in the article, and all written instructions, questionnaires and rubrics are provided as appendices.

If a face-to-face survey, were inter-observer and intra-observer bias reduced?
The role of the proctor, aside from time-keeping, is unclear, and the proctor’s presence could have added an element of bias compared to automatic timekeeping. There is no mention of any attempt to reduce inter-observer or intra-observer bias.

Is the data collection instrument validated?
Not specified in the article. There is no mention of whether it was vetted for ethical issues or jargon, or whether it was tested on colleagues or students.

If based on regularly collected statistics, are the statistics free from subjectivity?

Does the study measure the outcome at a time appropriate for capturing the intervention’s effect?
It was noted that federated searching had been implemented at all three universities about 1.5 years prior to the study; however, participants were not vetted for previous use of or exposure to federated searching. It was also noted that the study did not start off with a hypothesis, so it is not entirely clear which outcome the authors are measuring: use, preference or quality of results. However, since data was collected immediately after participants performed their searches, it was agreed that the methods used to measure time savings and satisfaction of information needs seemed suitable.

Is the instrument included in the publication?
All written instructions, questionnaires and rubrics are provided as appendices.

Are questions posed clearly enough to be able to elicit precise answers?
Many of the librarians present were uncomfortable with the idea of a “hypothetical assignment”, wondering if students can really judge when they have enough information for an assignment that they will never have to complete. However, it was pointed out that students often arbitrarily select resources, even for “real” assignments. Those present did agree that some of the questions in the participant questionnaire were vaguely worded, particularly regarding the students’ level of satisfaction with the citations they found. Additionally, the Likert scale provided is not well defined, and citation quality was measured for selected results, rather than for the overall results retrieved.

Were those involved in data collection not involved in delivering a service to the target population?
This issue is not addressed in the article.

Section C: Study Design

Is the study type / methodology utilized appropriate?
The authors employed a variety of methods in undertaking their study. Students were observed and timed while they completed their searches. A survey questionnaire asked participants about satisfaction and preference. Citation quality was evaluated using two different rubrics. The consensus was that the study overextended itself as it tried to incorporate many different studies into one. While each study type may have been appropriate to the question to which it was applied, the execution of the study as a whole was not very effective.

Is there face validity?
The article was compared to a study conducted by Korah and Cassidy (2010), which has a similar purpose but vastly different study design and results. The group decided that although the design and execution of the study are not ideal, the authors do produce sound data, which indicates face validity.

Is the research methodology clearly stated at a level of detail that would allow its replication?
The research methodology is clearly described in the article.

Was ethics approval obtained?
Not specified in the article.

Are the outcomes clearly stated and discussed in relation to the data collection?
The authors identify four clear questions they wish to address, and analyze the data in relation to these four questions.

Section D: Results

Are all the results clearly outlined?
The authors explain that calculations were adjusted to account for different sample sizes.

Are confounding variables accounted for?
The article was severely lacking in this respect, as the authors fail to address any confounding variables, including demographic factors such as age, gender, year level and program of study, as well as differences in IT infrastructure at the three schools, previous exposure to information literacy training, etc. The authors do frequently discuss the differences in results between the three schools, though they fail to explain what would account for these differences.

Do the conclusions accurately reflect the analysis?
The conclusions are very brief, quite vague, and somewhat overstated, but they do reflect the authors’ analysis of their results. For example, one group showed statistical significance in its answers to the question of time saved, but there was no further inquiry into the reasons behind this major difference. Also, only two groups showed statistical significance in relation to the question of satisfaction with federated searching, but the conclusions place much emphasis on these results.

Is subset analysis a minor, rather than a major, focus of the article?
Although the authors refer to the differences in results between the three schools, this remains a minor focus of the article.

Are suggestions provided for further areas to research?
The authors provide a couple of suggestions for future studies. For example, the authors state that the study was limited to addressing only one type of implementation, and suggest that future studies compare different federated search engines. However, the study and its results open up many more possibilities for research that are not addressed by the authors.

Is there external validity?
The authors do not provide enough information about their sample population to establish whether or not it is representative of the undergraduate populations at the three universities included in the study. There is also insufficient information to draw comparisons to other institutions, or to different years of study or disciplines. It was also pointed out that the universities in question are known to have a potentially older-than-typical undergraduate population, which could also have been addressed.

Validity Calculations

Calculation for section validity: (Y+N+U=T)
Calculation for overall validity: (Y+N+U=T)

If Y/T < 75% or if (N+U)/T > 25%, then you can safely conclude that the section identifies significant omissions and that the study’s validity is questionable. It is important to look at the overall validity as well as section validity.

Section A validity calculation: Y/T = 0/6 = 0%
Section B validity calculation: Y/T = 2/7 = 29%
Section C validity calculation: Y/T = 4/5 = 80%
Section D validity calculation: Y/T = 3/5 = 60%

If Y/T ≥ 75% or if (N+U)/T ≤ 25%, then you can safely conclude that the study is valid.

Overall validity calculation: Y/T = 9/23 = 39%

General Comments

All librarians present agreed that this is an important issue that is very applicable to our situation at McGill. Although we are not considering subscribing to, renewing or changing a federated search tool, the questions brought forward in the study have important implications for instruction and reference. However, the study was lacking in many areas, particularly with regard to population selection and recruitment, and data gathering. The participants in the discussion concluded that the study tried to do too much at once and as a result was neither well designed nor well executed. This is unfortunate, as some of its questions, most notably regarding the differences in citation quality, had great potential but were not answered clearly enough to inspire confidence.

The study does open up a lot of possibilities for further research. In addition to knowing whether or not students are satisfied with federated search tools, it is perhaps even more important to be aware of how they use and interact with such tools. Such insight would allow librarians to offer and teach federated searching in a way that would appeal to students and assist them in their research. It would also offer guidance in developing evaluative criteria relevant to various user needs, and not just librarians’ perceived needs, when selecting or renewing a federated search tool for their institution.

Jan Sandink & Vincci Lui, Feb. 25th, 2011
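
The section and overall validity percentages reported in this appraisal can be reproduced with a short script. The following is a minimal sketch and not part of the original checklist: the names `SECTION_TALLIES`, `percent_yes`, `meets_threshold` and `summarize` are illustrative assumptions, and the tallies are the Y and T values from the validity calculations in this appraisal.

```python
# Yes counts (Y) and totals (T = Y + N + U) per section, copied from the
# validity calculations reported in the appraisal. All names here are
# illustrative and do not come from the checklist itself.
SECTION_TALLIES = {
    "A": (0, 6),
    "B": (2, 7),
    "C": (4, 5),
    "D": (3, 5),
}


def percent_yes(yes, total):
    """Return Y/T as a percentage."""
    return 100 * yes / total


def meets_threshold(yes, total):
    """True when Y/T >= 75%, compared in integers to avoid rounding issues."""
    return 4 * yes >= 3 * total


def summarize(tallies):
    """Map each section, plus 'Overall', to (percentage, meets_threshold)."""
    rows = {
        name: (percent_yes(y, t), meets_threshold(y, t))
        for name, (y, t) in tallies.items()
    }
    overall_yes = sum(y for y, _ in tallies.values())
    overall_total = sum(t for _, t in tallies.values())
    rows["Overall"] = (
        percent_yes(overall_yes, overall_total),
        meets_threshold(overall_yes, overall_total),
    )
    return rows


if __name__ == "__main__":
    for name, (pct, ok) in summarize(SECTION_TALLIES).items():
        status = "meets" if ok else "falls below"
        print(f"{name}: Y/T = {pct:.0f}% ({status} the 75% threshold)")
```

Because T = Y + N + U, the condition (N+U)/T > 25% is equivalent to Y/T < 75%, so the sketch checks only the Y/T form of the rule.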