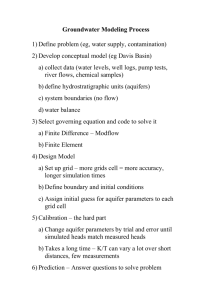

why use grid computing?


By: Oscar Andersson 820923-4817, Glenn Eliasson 821012-4890

Table of contents

FOREWORD
INTRODUCTION
WHY USE GRID COMPUTING?
HOW DOES GRID COMPUTING WORK?
SUMMARY
SOURCES

FOREWORD

Before we started this project we asked ourselves some questions, because neither of us knew much about grids:

What is a grid?
What does it do?
Why do we use grids?
What are the advantages and disadvantages?

These are the main questions we want to have answered by the end of this project.

INTRODUCTION

In 1800 Alessandro Volta invented the electrical battery. This invention laid the ground for the development of our modern electrical society and created a demand for dependable and consistent access to utility power, which resulted in the electrical power grid. It was not until the mid-1990s that computer scientists, inspired by the electrical power grid, began to design and develop an analogous infrastructure for wide-area distributed computing, called the computational power grid. The power grid was an interesting model because scientists required more resources than a single computer in a single administrative domain could provide.
Connecting several computers and running software in parallel, a practice known as distributed computing, had been done long before, but designing a system where each user can take advantage of the collective computing resources in the network was new ground. Just as the invention of the battery eventually led to information exchange through telegraphs, and later to the construction of power grids, the computer gave us the Internet and will perhaps lead us into an era where computing power can be tapped through a global computer grid. (It is in fact the many similarities to the power grid that gave rise to the term “computing grid”.) Grids enable the sharing, selection and aggregation of computers and storage systems over wide areas. This is ideal for users solving large-scale, resource-intensive problems in science, engineering and commerce.

WHY USE GRID COMPUTING?

It is well known that the computing power of the typical workstation or PC is very poorly utilised. Most of the time only a fraction of the system's resources are used (writing Matlab code, running screensavers), but at other times the system is bogged down with work for long periods (running a Matlab simulation). The traditional solution has been to build ever faster computers and supercomputers, but investing in such computational resources can be prohibitively expensive. There is perhaps a more elegant solution: using networking technologies, it is theoretically possible to share computational resources dynamically among a large group of users, thereby improving the utilisation of the systems involved. Ideally the net result for all users would be a dramatic gain in peak computational power, as substantially more resources would be available to the average user at the particular times when large computations are necessary.
In theory this would give users access to vast amounts of computational power, potentially far beyond that of expensive supercomputers. To date it is foremost the prospect of relatively cheap access to huge amounts of computing power that has sparked interest in grid computing within organisations such as CERN:

“When the Large Hadron Collider (LHC) comes online at the CERN in 2007 it will produce more data than any other experiment in the history of physics. Particle physicists have now passed another milestone in their preparations for the LHC by sustaining a continuous flow of 600 megabytes of data per second (MB/s) for 10 days from the Geneva laboratory to seven sites in Europe and the US. CERN plans to use a worldwide supercomputing "Grid" -- similar to the World Wide Web but much more powerful -- of computing centres to handle the data from the collider, which will then be analyzed by more than 6000 scientists all over the world”. -Belle Dumé, PhysicsWeb

Some pharmaceutical companies have also shown great interest in using grids for computer-aided drug design because of the immense scalability of such systems:

“A drug design problem that requires screening 180,000 compounds at three hours each would take a single PC about 61 years to process, and would tie-up a typical 64-node supercomputer for about a year.. The problem can be solved with a large scale grid of hundreds of supercomputers in a day” -Rajkumar Buyya B.E. (Mysore Univ.) and M.E. (Bangalore Univ.).

However, there are also far more ambitious visions for the applications of grid computing. One such vision is that, instead of the smaller corporate and government grids of today, there will in the future be a global grid system that replaces today's Internet (which is just an information exchange network).
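As a rough check on the drug-screening figures quoted above, the arithmetic can be sketched in a few lines of Python. The sketch assumes perfectly parallel screening with no scheduling or communication overhead and 365-day years. The function name and the choice of 64 nodes per supercomputer in the last step are illustrative assumptions, not details taken from the quoted source.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: 365-day year, machines run around the clock


def screening_time_years(compounds, hours_each, nodes=1):
    """Wall-clock years to screen all compounds, assuming ideal parallelism."""
    total_cpu_hours = compounds * hours_each
    return total_cpu_hours / nodes / HOURS_PER_YEAR


single_pc = screening_time_years(180_000, 3)          # about 61.6 years
supercomputer = screening_time_years(180_000, 3, 64)  # about 0.96 years

# Nodes needed to finish within one day (24 hours) under the same assumptions.
nodes_for_one_day = 180_000 * 3 / 24                  # 22,500 nodes
# Assumption: each supercomputer in the grid has 64 nodes, as in the quote.
supercomputers_needed = nodes_for_one_day / 64        # about 352 machines
```

Under these idealised assumptions the numbers agree with the quote: roughly 61 years on one PC, about a year on 64 nodes, and a few hundred 64-node machines to finish in a day. Real workloads would need more resources because of overhead.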
The users of this grid would not only be able to access information and services as before, but could also buy computing power on a pay-per-use basis, much as we buy electrical energy through the power grid today. In addition, users would be able to access powerful remote services. Another key benefit of a global grid is better load balancing, achieved by using available resources in off-peak time zones.

One area of debate is whether the average small consumer in such a grid would also act as a producer of computing power. The answer seems to depend on whether future applications can exploit such small, loosely coupled computational resources. Some claim that in a grid-based future the machines of average consumers would need little computing performance of their own, since it would be cheaper to buy that performance through the grid, so the benefit of such a scheme would be questionable.

Although several grid systems are already in use around the world, most are relatively small and are used within individual companies and organizations to connect and utilize their own resources efficiently. This is mainly because of security concerns: many companies worry about theft of sensitive information within larger grids. But there are other problems as well. For a large multi-company grid to function, there must be a good system in place for dividing the resources fairly. This problem seems relatively easy to solve on paper, but implementing such rules has proved more difficult than expected.

Another interesting commercial use of grids is for “computer brokers” to lease grid resources to companies. Companies hiring such resources would not have to make large investments in supercomputers.
This system would offer much more dynamic access to computing power than before, making it cheaper and faster for companies to adapt to changes in computing demand within the organization. One such “computer broker” is Sun Microsystems, which has recently entered the market with its “Sun Grid Compute Utility”, selling grid computing resources to companies on a pay-per-use basis (as seen in the figure to the right). Of course, such a business model would only pay off in the long run for companies with certain resource usage patterns. Another use would be for companies to offload parts of their workload to these leased grids at times of unusually high computational activity, in order to shorten computation times.

“Compute resources—they must be available and accessible whenever you need them, 24x7. Many organizations are faced with resource utilization issues—they need vast amounts of compute power, but only at certain times. The rest of the time, resources are underutilized.”

“The Solution: Sun™ Grid Pay-Per-Use Computing Sun has defined and developed a better solution: pay-per-using computing. This model changes the game—enabling you to purchase computing power as you need it, without owning and managing the assets.”

“Simple, Affordable Compute Utility: $1/Hour/CPU The first in the Sun Grid family of offerings is Compute Utility, a $1 per CPU per hour pay-per-use offering for batch workloads such as Monte Carlo simulations, protein modeling, geologic exploration, and mechanical CAD simulations, among others. This offering meets the needs of our customers for agile, highly available, affordable compute resources on a pay-per-use basis.”

HOW DOES GRID COMPUTING WORK?

Grids are very complex systems. To give a clear view of how they work, we have divided the subject into smaller individual parts.
Grid hardware:

At the hardware level, a grid system should be familiar to most people, as its building blocks are the same as those of the Internet: computer resources and the network interconnections between them. However, because the grid network transfers not only pure information but computations (instructions) as well, it requires far higher network transmission rates than are typical on the Internet. Interestingly, networking technologies are developing at a far greater rate than processor technologies, as shown by the following slide from [www.globus.org]:

This fact is of great importance, as applications running on a grid have to be processed in parallel across a large number of geographically separated resources. Many types of computational workload, however, require a fair amount of information exchange between their parts. The amount of information that must be exchanged between geographically separated resources, together with the capacity of the network interconnects between them, is often the performance-limiting factor in grid systems today. As networking technologies continue to evolve faster than processing technologies, computing grids will become increasingly effective and useful for new kinds of computational loads.

Grid software:

Just like the Internet, a grid is built from standardised protocols that enable systems with different hardware and operating systems to function together in the grid. These protocols are commonly divided into two parts depending on how close to the end system they work:

Low-level services: security, information, directory, resource management (resource trading and resource allocation).
High-level services: tools for application development, resource management and scheduling.

Naturally, the most challenging parts of the complex grid software platform are the high-level services, and of those, resource management and scheduling are especially complicated to implement. It is, after all, in the control software that all the complexities of grid computing reside. Let us consider some important aspects of how software affects grid operation.

Performance:

Customized application development tools (SDKs) are needed for developing grid applications, as user applications processed over the grid have to execute within the protocol framework of the grid system. These SDKs are built to extract maximum parallelism from the application code, so that the application can execute in parallel across as many machines in the grid as is practical. Ideally the job is divided into a large number of “subjobs” that require as little communication with each other as possible. These subjobs are then distributed to resources across the grid by the high-level system services. The extent of parallelism that can be extracted from an application depends heavily on the characteristics of its code, and because of this, certain types of workload are far more suitable for grid computation than others. The amount of communication required between the subjobs indicates how “scalable” the application is: the less communication is required, the more scalable the application and the more efficiently each subjob is processed. However, writing efficient code for grid computation is a hard task and often requires considerable use of complicated mathematics and programming tricks to extract good performance.
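The idea of dividing a job into independent subjobs and merging their results can be sketched as follows. This is a minimal illustration, not the API of any grid SDK: the function names are hypothetical, local threads stand in for remote grid resources, and the workload (a sum of squares) is chosen because its subjobs need no communication with each other.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_subjobs(start, stop, n_subjobs):
    """Divide the half-open range [start, stop) into roughly equal chunks."""
    size, extra = divmod(stop - start, n_subjobs)
    chunks, lo = [], start
    for i in range(n_subjobs):
        hi = lo + size + (1 if i < extra else 0)
        chunks.append((lo, hi))
        lo = hi
    return chunks


def run_subjob(chunk):
    """One independent subjob: it needs only its own chunk of the input."""
    lo, hi = chunk
    return sum(x * x for x in range(lo, hi))


def run_job(start, stop, n_subjobs=8):
    """Distribute the subjobs to workers, then merge the partial results."""
    chunks = split_into_subjobs(start, stop, n_subjobs)
    # Assumption: local threads stand in for remote grid resources here.
    with ThreadPoolExecutor(max_workers=n_subjobs) as pool:
        partial_sums = list(pool.map(run_subjob, chunks))
    return sum(partial_sums)


total = run_job(0, 100_000)
```

Because each subjob depends only on its own chunk, the only communication is sending the chunk out and the partial sum back, which is the kind of workload the text describes as highly scalable.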
For instance, the programmer might choose an algorithm that, counterintuitively, needs more processing power to solve a given problem, if doing so lets the program run with more parallelism or scale better. Making this kind of decision can be very hard, as the programmer can only guess what resources will be available to the finished program on the grid. There are other sources of performance problems as well. For instance, if many subjobs need to access the same storage resource for data, the network traffic to that resource might become congested. There are many such potential problems that the scheduling software has to handle properly to keep the grid processing efficiently.

Security:

Security is rightly of great concern to grid users. As data is moved around and processed much more freely than over the Internet, strong protection is needed to prevent, for instance, viruses from spreading along with the workloads. There are more grid-specific problems as well, such as preventing other users from examining data that is being processed remotely on their machines in the grid (data confidentiality), or from manipulating the results of such computations (data integrity). The grid solution to data confidentiality problems is generally the extensive use of encryption. We will not discuss encryption techniques here, as those used in most current grids are similar to those in use on the Internet. Where data integrity is especially important, it is sometimes tested by running the computations several times on different resources. Further, all grid resources are authenticated (identified), after which they are granted certain permissions or access to resources within the grid.
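The integrity check described above, running the same computation on several resources and comparing the results, can be sketched as a simple majority vote. This is an illustrative assumption about how such a check might look, not the mechanism of any particular grid system. The function names, the `min_agreement` parameter and the example resources are hypothetical, and results are assumed to be hashable so they can be counted.

```python
from collections import Counter


def verify_by_replication(task, resources, min_agreement=2):
    """Run `task` on every resource and accept the most common result.

    `resources` is a list of callables that each execute the task and
    return a hashable result; a faulty resource may return anything.
    """
    results = [run(task) for run in resources]
    value, votes = Counter(results).most_common(1)[0]
    if votes < min_agreement:
        raise ValueError("no result reached the required agreement")
    return value


# Hypothetical resources: two honest ones and one that alters the result.
def honest(task):
    return sum(task)


def tampering(task):
    return sum(task) + 1


accepted = verify_by_replication([1, 2, 3], [honest, tampering, honest])
```

The cost of this approach is that every replicated run consumes grid capacity, which may be why the text says it is used only where integrity is especially important.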
Grid layers:

The software of the grid is often divided into layers to show the layered architecture of the underlying protocols:

Starting at the bottom, we have the grid fabric, which consists of the distributed resources of the grid: computers, storage devices, databases, or even clusters, supercomputers, sensors and instruments. The range of hardware has few constraints and is defined only by the connectivity protocols used. Next, the security infrastructure layer provides secure and authorized access to grid resources, as discussed above. The core grid middleware performs middle-level tasks between the resources. The user-level middleware consists of schedulers responsible for aggregating the resources. Grid programming environments are used by application developers to enable applications to run on the grid. At the very top are the grid applications, that is, the applications that run on the grid.

SUMMARY

Although one might get the impression that grids will soon replace supercomputers, this is not quite true. Grids represent an interesting new way of harnessing vast amounts of unused computing power, but it is also important to understand the inherent performance limitations of grid computing. Grids require very complicated control software, and many types of software are very hard or impossible to program to run efficiently on grids. So, at least in the short term, grids will only be able to replace traditional supercomputers for very specific types of task. But there is a lot of grid-related activity and development under way among research organizations. Networking technologies are also evolving at a very high pace, so more efficient and capable grids may be closer than most expect. Some anticipate that grid computing will soon start to make its way onto the Internet and eventually absorb it.
As both grids and the Internet are based on similar hardware and the standardised use of protocols, it is likely that grid elements will be introduced as additional protocols and services that extend those currently in use on the Internet. As networking, virtualization and security technologies continue to evolve, many of the problems inherent in the current generation of grids could be solved, and another “Internet revolution” based on grid services seems possible.

SOURCES

This article by Ted Smalley Bowen appeared in the Technology Review News, September 12, 2001.
IBM’s Grid Computing website: http://www-136.ibm/ibm/developerworks/grid
The Grid: A New Infrastructure for 21st Century Science, Physics Today, February 2002
Globus website: www.globus.org
Introduction to Grid Computing with Globus, IBM Redbook: http://www.redbooks.ibm.com/abstracts/sg246895.html