Cognitive Science and Normativity II

advertisement

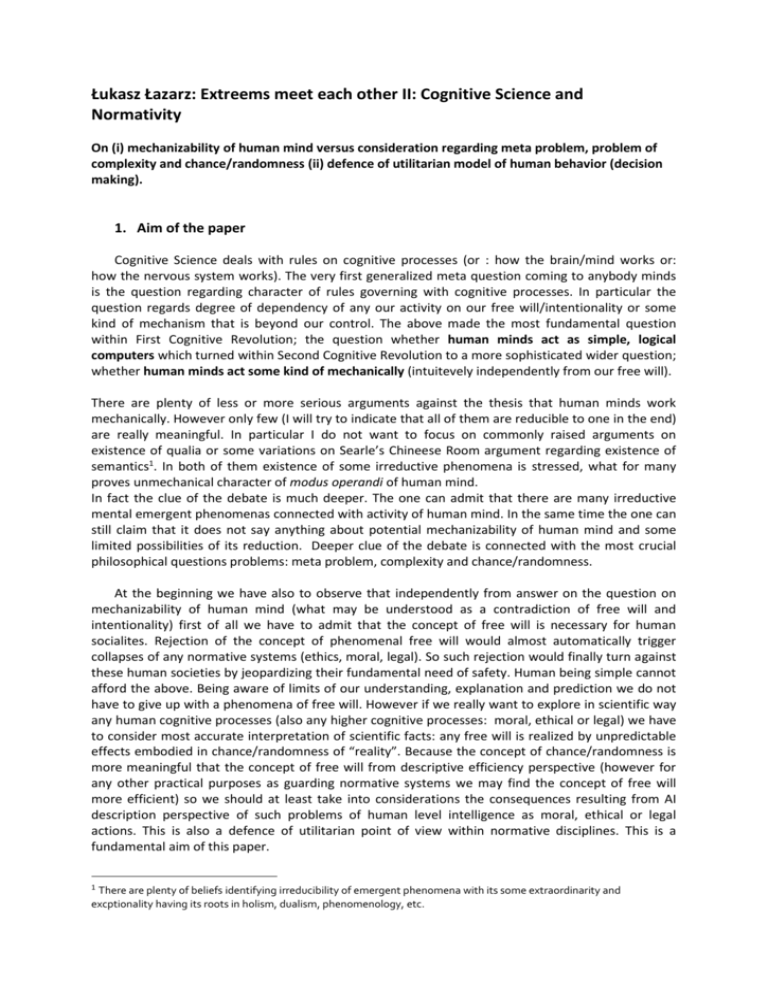

Łukasz Łazarz: Extremes meet each other II: Cognitive Science and Normativity

On (i) the mechanizability of the human mind versus considerations regarding the meta problem, the problem of complexity and chance/randomness, and (ii) a defence of the utilitarian model of human behavior (decision making).

1. Aim of the paper

Cognitive Science deals with the rules of cognitive processes (or: how the brain/mind works, or: how the nervous system works). The very first general meta question that comes to mind concerns the character of the rules governing cognitive processes. In particular, the question concerns the degree to which our activity depends on our free will/intentionality or on some kind of mechanism that is beyond our control. This was the most fundamental question of the First Cognitive Revolution: whether human minds act as simple, logical computers. Within the Second Cognitive Revolution it turned into a more sophisticated and wider question: whether human minds act in some mechanical way (intuitively, independently of our free will). There are plenty of more or less serious arguments against the thesis that human minds work mechanically. However, only a few are really meaningful (and I will try to show that all of them are reducible to one in the end). In particular, I do not want to focus on the commonly raised arguments about the existence of qualia or on variations of Searle’s Chinese Room argument regarding the existence of semantics[1]. Both stress the existence of some irreducible phenomena, which for many proves the non-mechanical character of the modus operandi of the human mind. In fact the crux of the debate lies much deeper. One can admit that there are many irreducible, emergent mental phenomena connected with the activity of the human mind. At the same time one can still claim that this says nothing about the potential mechanizability of the human mind and some limited possibilities of its reduction.
The deeper crux of the debate is connected with the most crucial philosophical problems: the meta problem, complexity and chance/randomness. At the outset we also have to observe that, independently of the answer to the question of the mechanizability of the human mind (which may be understood as a contradiction of free will and intentionality), we must first of all admit that the concept of free will is necessary for human societies. Rejecting the concept of phenomenal free will would almost automatically trigger the collapse of all normative systems (ethical, moral, legal). Such a rejection would finally turn against human societies by jeopardizing their fundamental need for safety. Human beings simply cannot afford this. Being aware of the limits of our understanding, explanation and prediction, we do not have to give up the phenomenon of free will. However, if we really want to explore human cognitive processes scientifically (including higher cognitive processes: moral, ethical or legal), we have to consider the most accurate interpretation of the scientific facts: any free will is realized by unpredictable effects embodied in the chance/randomness of “reality”. Because the concept of chance/randomness is more meaningful than the concept of free will from the perspective of descriptive efficiency (although for other practical purposes, such as guarding normative systems, we may find the concept of free will more efficient), we should at least take into consideration the consequences that follow from an AI perspective on the description of such problems of human-level intelligence as moral, ethical or legal actions. This is also a defence of the utilitarian point of view within the normative disciplines. This is the fundamental aim of this paper.

[1] There are plenty of beliefs identifying the irreducibility of emergent phenomena with some extraordinariness and exceptionality, having their roots in holism, dualism, phenomenology, etc.

2. Introduction: Meta, complexity, chance/randomness and mechanizability

2.1. Meta problem

The meta problem in general has its source in philosophy, epistemology, logic, mathematics and even linguistics. It is recognized in the problem of metaphysics, the frame problem, the ceteris paribus clause, regressus ad infinitum, the Wittgenstein (Kripke) argument, the liar paradox and, most prominently, Gödel's limitation theorems and Turing's halting problem. It clearly shows the limits of scientific exploration connected with any finite formalization of a way of description.[2] We will never create a theory, based on the axioms of any given (sufficiently rich) formal system, that is both complete and consistent, because of the lack of knowledge contained in some meta system. There is infinite meta knowledge over the knowledge represented by the given formal system. This meta knowledge may be represented by any number whose algorithmic information content is greater than the complexity of the given formal system N.[3]

2.2. Problem of complexity (chance/randomness in classical physics)

The problem of complexity, which originally arose in classical physics (where it is recognized in the effects of deterministic chaos), quantum physics and evolutionary biology, also indicates limits of exploration, connected with the need to use probabilistic methods and consequently the need to explain with the concept of chance/randomness.[4] The randomness of systems in classical physics is connected with their great sensitivity, due to their nonlinearity, to errors in the measurement of initial conditions; in quantum physics and evolutionary biology chance/randomness is understood as a structural part of the “reality” being explained. The problem of complexity is also the essence of the classical problems of Cognitive Science: the mind/brain problem and the concepts of emergence and supervenience (which are often overused by many philosophers).
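The sensitivity to errors in the measurement of initial conditions mentioned above can be illustrated with a minimal sketch. The logistic map is not discussed in this paper; it is used here only as an assumed, standard textbook example of deterministic chaos, and the function name, the parameter r = 4 and the size of the initial error are illustrative choices.

```python
def logistic_trajectory(x0: float, r: float = 4.0, steps: int = 60) -> list[float]:
    """Iterate the logistic map x -> r * x * (1 - x), starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two "measurements" of the same initial condition, differing by a tiny error.
exact = logistic_trajectory(0.2)
measured = logistic_trajectory(0.2 + 1e-10)

# Distance between the two trajectories at every step.
gaps = [abs(a - b) for a, b in zip(exact, measured)]

print(f"initial gap: {gaps[0]:.1e}")
print(f"largest gap within 60 steps: {max(gaps):.3f}")
```

The rule is fully deterministic, yet the tiny initial error grows until the two trajectories are unrelated, so a long-range prediction has to be stated in probabilistic terms.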
The need to use the concept of chance/randomness in classical physics may be understood as arising from axiomatization by way of the measurement process and the setting of initial conditions.[5] When we assume some axioms we formalize them, so according to the meta problem the system of description contains incompleteness/contradiction, which amounts to a fault by the standard of the Hilbertian model of complete and consistent cognition. When we describe the phenomenon in question in such a faulty way, we can expect that describing how the phenomenon becomes more complicated (by adding the impact of other phenomena, or through the evolution of the phenomenon in time) triggers (i) propagation of the faultiness of the description and consequently the need to use the concept of chance/randomness in predicting the activity of the phenomenon, and in general its final unpredictability, on the one hand, and (ii) irreducibility (in some meaning of this word) of the later, more complex phenomena arising from the phenomenon in question to that phenomenon, on the other. Chance/randomness in classical physics may thus be understood simply and consistently as meta data over the interpretative frame of the measurement process and the setting of initial conditions (axioms): numbers whose algorithmic information content is greater than the complexity of the given system of description N.[6]

In conclusion we may observe that the meta problem and the problem of complexity in classical physics concern the same phenomenon but describe different consequences of it: (i) the meta problem describes the incompleteness/contradiction of the description, and (ii) the problem of complexity describes the limited predictability of the phenomenon in question and the irreducibility of later, more complex phenomena under such a description.

[2] Gödel K., Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme, I, Monatshefte für Mathematik und Physik 38: 173-98; Chaitin G.J., Twierdzenie Gödla a informacja [in:] R. Murawski, Współczesna filozofia matematyki, PWN, Warszawa 2002; Turing A., On Computable Numbers, with an Application to the Entscheidungsproblem, London: C.F. Hodgson & Son, 1936-1937; McCarthy J., Hayes P.J., Some philosophical problems from the standpoint of artificial intelligence, Machine Intelligence, 4: 463-502; Sandewall E., An approach to the Frame Problem and its Implementation, Machine Intelligence, 7: 195-204; Wittgenstein L., Dociekania filozoficzne, transl. B. Wolniewicz, Warszawa: PWN 2005; Barwise J., Etchemendy J. (1987), The Liar, Oxford University Press.
[3] Chaitin G.J., Twierdzenie Gödla a informacja [in:] R. Murawski, Współczesna filozofia matematyki, PWN, Warszawa 2002, p. 343.
[4] Mainzer K., Thinking in Complexity: The Complex Dynamics of Matter, Mind and Mankind, Springer, 1994; Gell-Mann M. (1995), What is complexity?, Complexity 1.
[5] Stannett M., Computation and Hypercomputation, „Minds and Machines” 2003, no. 13, pp. 141-142; Chaitin G.J., Twierdzenie Gödla a informacja [in:] R. Murawski, Współczesna filozofia matematyki, PWN, Warszawa 2002, p. 343; Chaitin G.J., Randomness in arithmetic and the decline and fall of reductionism in pure mathematics, Bulletin of the European Association for Theoretical Computer Science, No. 50 (June 1993), pp. 314-328.

2.3. Chance/randomness (complexity in quantum physics and evolutionary biological disciplines)

In quantum physics chance/randomness becomes a structural part of reality. In opposition to this, some defend the hypothesis of hidden parameters[7], which seems to harmonize with the above-mentioned meta problem and problem of complexity in classical physics.
However, it must be underlined that the philosophical dispute between those who want to save the hypothesis of hidden parameters and those who claim that randomness is a structural part of quantum reality is meaningless, because they differ only over whether unpredictability results from the lack of some existing meta knowledge or is a structural part of “reality”. What matters most is to observe that in both cases we still deal with unpredictability, which may consistently be identified with numbers whose information content is greater than the complexity of the system M representing one’s interpretative frame for these numbers. Because we should never confuse a conception describing the world, however natural it seems, with reality itself, which is beyond any conception[8], we should be really cautious about claiming that something is “reality” or a structural part of it. It seems that only a lack of humility leads one to distinguish two kinds of unpredictability or randomness. In evolutionary biology chance/randomness makes it possible to explain (within the frame of evolutionary explanation) the emergence of the variety of pieces of “reality”. Whether we use the concept of chance/randomness within evolutionary biology, quantum physics or classical physics, we describe unpredictability with it. For this reason I find all the above problems (the meta problem and the problem of complexity) to be of the same kind; in the end I consider that all of them may be interpreted as one and the same problem of chance/randomness (or meta problem).[9]

[6] Chaitin G.J., Twierdzenie Gödla a informacja [in:] R. Murawski, Współczesna filozofia matematyki, PWN, Warszawa 2002, p. 343; Piesko M., Nieobliczalna obliczalność, Wydawnictwo: Copernicus Center, 2011, pp.
[7] Heller M., Filozofia przypadku. Kosmiczna fuga z preludium i codą, Wydawnictwo: Copernicus Center, 978-83-622-5920-5, 2011; Piesko M., Nieobliczalna obliczalność, Wydawnictwo: Copernicus Center, 2011, p. 157.
[8] Duch W., http://www.is.umk.pl/~duch/Wyklady/Kog1/MechKwant.pdf, p. 165.
[9] Heller M., Filozofia przypadku. Kosmiczna fuga z preludium i codą, Wydawnictwo: Copernicus Center, 978-83-622-5920-5, 2011; Piesko M., Nieobliczalna obliczalność, Wydawnictwo: Copernicus Center, 2011.

2.4. Mechanizability of human mind

There are plenty of arguments, built in connection with the above fundamental problems, that call for moderation before drawing serious philosophical conclusions (especially regarding higher cognitive processes such as moral, ethical and legal actions) from the analogy between the human mind and its description from the perspective of contemporary Cognitive Science (in particular Artificial Intelligence). All of them, like the problems themselves, are reducible to the one problem of understanding chance/randomness and to the intuition that we will never be able to understand the mind with the mind itself.

2.4.1. Meta problem and mechanizability

The assumption that human minds are not subject to the limitations connected with a finite formalization of a way of description leads many to believe that we are not mechanical. This conclusion is accompanied by a further assumption: intuitively, mechanizability must be formalizable, and consequently any system describing the mechanism in question is limited by the limitations resulting from Gödel’s theorems.[10]
It should be observed that the predominant interpretation of the above is that the meta problem points only to the limitations of our cognition and says nothing about the mechanizability of the human mind or of any other physical process.[11] Both assumptions, (i) that the human mind or any other physical process is not subject to the problem of incompleteness/contradiction and (ii) that “mechanical” means describable by symbolic, formalized means, are too far-reaching. The first assumption may be questioned by pointing out that (i) within mathematics the thesis of its incompleteness seemed odd at the beginning, even when it had already been proved, and (ii) the results of quantum physics clearly show that we should take more seriously the possibility that “reality” is somehow incomplete or contradictory. The second assumption may be questioned by pointing out that, independently of the source of our ignorance, we always retain the ability to give some (worse) explanation. The use of probabilistic methods freely allows us to include in such a description any unpredictable/unknown factors[12], including those which are not describable by any symbolic, formalized means (if such exist). This may even be modeled theoretically[13] and executed practically with the use of biological or physical components (cellular automata, quantum effects). In the end the running debate is reduced to a somewhat theological understanding of the concept of chance and randomness. I indicated the first differences when discussing point 2.3., Chance/randomness (complexity in quantum physics and evolutionary biological disciplines).
The question here is especially whether (i) we deal with simple chance and randomness, the same that is effectively used in explaining practical physical processes and later modeled in practice, so that in the end we understand it as mechanical, or (ii) we deal with another kind of unpredictability, one that is “responsible” for the emergence of many recognized extraordinary phenomena, which would prove the existence of an extraordinary meta “reality” and finally calls for caution in calling this unpredictability mechanical. As already said, the meta problem is ultimately a wider problem of the Philosophy of Science and indicates the limits of any formalization. However, the debate on the philosophical consequences of the questioned assumptions showed that the essence of the problem with explaining and modeling the human mind is its openness and its dependency on evolution and learning processes, which, through the use of chance/randomness, allow for free self-modification.

[10] Lucas J.R., Minds, Machines and Gödel, „Philosophy”, vol. XXXVI; Penrose R., Nowy umysł cesarza, ISBN 83-01-11819-9, pp. 127-132.
[11] Krajewski S., Twierdzenie Gödla i jego filozoficzne interpretacje. Od mechanicyzmu do postmodernizmu, Wydawnictwa IFiS PAN, Warszawa 2003, p. 366.
[12] Unpredictability resulting from the use of chance/randomness understood as metadata available beyond the concrete system of description of the model of a cognitive process (data whose information content is greater than the complexity of the system M representing the description of the model of the cognitive process).
[13] Copeland B.J., Hypercomputation, Minds and Machines 12 (4): 461-502.

2.4.2. Problem of complexity and mechanizability

The problem of complexity may be simply presented as an argument against mechanizability that questions (i) the predictability of mental or biological states of the mind or brain, because of its mechanical complexity and its evolving character in time.
Moreover, (ii) the impossibility of reducing the mind (as an emergent structure) to the brain is often stressed. It is worth observing that an open (with properties of chance/randomness and the ability to self-modify) and dynamic system, under conditions of mechanical and evolutionary (self-modifying in time) complexity, naturally leads to the emergence of irreducible phenomena and to limited predictability. We have to stress that, independently of the above, we can still give some kind of evolutionary/learning explanation of the emergent phenomena. The more reductive an explanation we want, the more irregularities we have to include in the description as chance/randomness. However, the most static frame theory of the system (one that does not have to self-modify in order to explain the phenomenon effectively) still gives us much information about its properties. In conclusion, we may also acknowledge the possibility of partial reduction within the frame of an evolutionary/learning mechanism (independently of the impossibility of full reduction and full predictability of the system). So if we accept the fundamental fact that science really tells us something about the reality it describes, we can ask about the most static frame theory of the human mind (basic knowledge of the properties of any human cognition), and especially of human behavior.

2. Development: open models of lower cognitive processes

2.1. General remarks

In the second part of the paper I want to show how models of human cognitive processes/mind deal with the meta problem and the problem of complexity by modeling them with different degrees of openness (based on greater or lesser axiomatic power), which in terms of representation is described by symbolic, subsymbolic and connectionist methods. In particular I want to show this for the disciplines dealing with the problems of: (3.2.) representation, with its sources in linguistics, philosophy, logic, computer science, psychology, artificial intelligence and neurobiology; (3.3.) choice making, with its sources in mathematics (in particular game theory), psychology, economics, computer science, artificial intelligence and neurobiology; and (3.4.) data processing systems, with their sources in logic, computer science, artificial intelligence and neurobiology, which may also be understood as a generalization of the above problems of representation and choice making. I also indicate (3.5.) that all the above problems are, from the neurobiological (connectionist) perspective, de facto one and the same thing, defined by the way neural networks work. This perspective is currently described in Cognitive Science as an “embodied” perspective.

2.2. Representation

In every discipline concerned with representation there is a trend towards replacing static models of representation with more open ones, capable of self-modification and learning. According to classical theories, knowledge is represented by concepts that are static synthetic descriptions of a class of designata, not sets of descriptions of different subsets or single instances of the given class.[14] The first approaches to the representation problem in AI show most clearly the essential properties of the first definitions of representation. The very first and simplest method of defining a concept was description by conjunctions of feature values. “A concept is defined as a predicate over some universe of instances, and thus characterizes some subset of the instances. Each instance in this universe is described by a collection of ground literals that represent its features and their values.”[15] Similar conceptions prevailed in every other discipline dealing with the problem of representation.[16] These methods of building knowledge have one significant advantage and one disadvantage. The advantage consists in simplicity and the possibility of logical acquisition and processing of such knowledge.
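The conjunctive definition quoted above can be sketched as a predicate over instances described by feature values. This is only an illustration: the feature names, the example instances and both helper functions are hypothetical and do not come from the cited works.

```python
from typing import Callable, Mapping

Instance = Mapping[str, str]  # feature name -> feature value


def make_concept(required: Mapping[str, str]) -> Callable[[Instance], bool]:
    """Build a concept as a conjunction of required feature values."""
    def concept(instance: Instance) -> bool:
        # The instance belongs to the concept only if every required
        # feature is present with exactly the required value.
        return all(instance.get(f) == v for f, v in required.items())
    return concept


def count_conjunctive_concepts(values_per_feature: list[int]) -> int:
    """Count conjunctive concepts: each feature is either left
    unconstrained or fixed to one of its possible values."""
    total = 1
    for k in values_per_feature:
        total *= k + 1
    return total


is_red_circle = make_concept({"color": "red", "shape": "circle"})
universe = [
    {"color": "red", "shape": "circle", "size": "small"},
    {"color": "red", "shape": "square", "size": "small"},
    {"color": "blue", "shape": "circle", "size": "large"},
]
members = [x for x in universe if is_red_circle(x)]
print(len(members))

# Ten binary features already allow 3**10 distinct conjunctive concepts.
print(count_conjunctive_concepts([2] * 10))
```

The predicate is simple to state and to process logically, while the count of candidate concepts grows exponentially with the number of features.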
The disadvantage consists in the poor economy of such a representation, which quickly leads to combinatorial explosion and makes further effective, computable description impossible. This is a very serious limitation of any symbolic representation of knowledge. The tendency to make representation models more open and flexible could soon be observed in (i) probabilistic approaches[17], (ii) the theory of prototypes[18] and (iii) context theory[19]. These approaches stressed that the semantic content of a concept can change through interaction with the environment and learning processes. The following approaches stressed only the openness of representations: (i) frame theory[20], (ii) schema theory[21] and (iii) theories of semantic networks[22].

[14] Bruner J., Goodnow J., Austin A. (1956), A Study of Thinking, New York: Wiley; Lewicki A., Procesy poznawcze i orientacja w otoczeniu, Warszawa: PWN, 1960; Psychologia kliniczna w zarysie, Wydawnictwo Naukowe UAM, 1968; Loftus E.F., Leading questions and the eyewitness report, Cognitive Psychology, 7, 560-572.
[15] Mitchell T.M., Generalization as search, Artificial Intelligence (18), 1982, pp. 203-226; Mitchell T.M., Keller R.M., Kedar-Cabelli S.T., Explanation-Based Generalization: A Unifying View, Machine Learning 1: 47-80, 1986, Kluwer Academic Publishers, Boston; Stacewicz P., Umysł a modele maszyn uczących się, współczesne badania informatyczne w oczach filozofa, EXIT, Warszawa 2010, p. 78.
[16] Clark E.V., The ontogenesis of mind, Wiesbaden: Akademische Verlagsgesellschaft Athenaion, 1979.
[17] Smith E.E., Shoben E.J., Rips L.J., Structure and process in semantic memory: A featural model for semantic decisions, Psychological Review, 81, 214-241.
[18] Rosch E. (1978), Principles of categorization, in: E.H. Rosch, B.B. Lloyd, Cognition and categorization, pp. 27-48, Hillsdale, NJ: Lawrence Erlbaum.
[19] Medin D.L., Schaffer M.M., Context theory of classification learning, Psychological Review, 85, 207-238.
[20] Minsky M. (1975), Frame theory, in: TINLAP '75: Proceedings of the 1975 workshop on Theoretical issues in natural language processing (1975), pp. 104-116.
[21] Rumelhart D.E. (1980), Schemata: the building blocks of cognition, in: R.J. Spiro et al. (eds), Theoretical Issues in Reading Comprehension, Hillsdale, NJ: Lawrence Erlbaum.
[22] Collins A.M., Quillian M.R. (1969), Retrieval time from semantic memory, Journal of Verbal Learning and Verbal Behavior, 8, 240-247; Collins A.M., Loftus E.F. (1975), A spreading-activation theory of semantic processing, Psychological Review, 82(6), 407-428.

2.3. Choice making

An analogous process could be observed in the disciplines dealing with the problem of choice making. In any case, the problem of representation may be viewed as a problem of categorizing decisions, which already suggests that the two problems are in fact not very different in essence. There are several areas in which assumptions were readily made when exploring the mechanism of choice making: (i) the variety of possible decisions (the decision space), (ii) the criteria for evaluating a decision, (iii) the relation between decisions and their evaluation, and (iv) the existence of practical limits on computation time and computing power.

Classical Decision Theories were aimed at practical purposes (such as game theory) and assumed the absence of practical limits on computation time and computing power (we want to know the best way to play the game), as well as concrete knowledge of the possible decisions, the criteria for evaluating a decision and the relation between decisions and their evaluations.
That is the reason why theories based on Classical Decision Theories are called normative theories. Relaxing the above assumptions, in particular the assumption that there are no practical limits on computation time and computing power, and consequently making the choice/decision models learn about (i) the variety of possible decisions (the decision space), (ii) the criteria for evaluating a decision and (iii) the relation between decisions and their evaluation, gave rise to models based on chance/randomness (on statistical and probabilistic methods). The emergence of descriptive models of decision making led to the following psychological models: (i) the theory of satisficing[23], (ii) elimination by aspects[24] and (iii) the heuristics of the ABC group[25]. However, the most prominent example of such an open model of decision making is prospect theory.[26] It is worth observing that one assumption remained quite stable: the existence of final criteria for evaluating a decision. Further consideration of the problem of decision making led Kahneman (after Tversky’s death) to deal more closely with the problem of the final criteria for evaluating decisions. Observing the difficulties in indicating precise criteria of final utility, Kahneman argues for distinguishing different kinds of utility.[27]

2.4. Methods: Data processing / logic

The problem of data processing may be interpreted as a generalization of the above problems of representation and choice making. Data processing was mainly based on logic. Logic emerged from the assumptions that (i) we can denote a designatum with a concrete symbol (“logos” meaning “word”), which (ii) allows for inference (“logos” meaning “reason” or “mind”), and (iii) that a final truth exists (which results from the human need for safety). At the beginning, classical logic was based on the above assumptions hook, line and sinker.
With the development of computer science and the emergence of AI, the weakening of the above assumptions gave rise to nonmonotonic, defeasible and fuzzy logics.

[23] Simon H. (1947), Administrative behavior, New York: Macmillan; Simon H. (1956), Rational choice and the structure of environments, Psychological Review.
[24] Tversky A. (1972), Elimination by aspects: A theory of choice, Psychological Review, 79, 281-299; Tversky A. (1973), Encoding processes in recognition and recall, Cognitive Psychology, 5, 275-287.
[25] Gigerenzer G., Todd P.M. (1999), Fast and frugal heuristics: The adaptive toolbox, in: Gigerenzer G., Todd P.M., The ABC Research Group (eds), Simple heuristics that make us smart (pp. 3-34), Oxford, UK: Oxford University Press; Todd P.M., Miller G.F. (1999), From pride and prejudice to persuasion: Satisficing in mate search, in: G. Gigerenzer, P.M. Todd, The ABC Research Group (eds), Simple heuristics that make us smart (pp. 287-308), Oxford, UK: Oxford University Press.
[26] Kahneman D., Tversky A. (1979), Prospect theory: An analysis of decision under risk, Econometrica, 47, 263-293; Kahneman D., Tversky A. (1984), Choices, values and frames, American Psychologist, 39, 341-350.
[27] Kahneman D., Back to Bentham? Explorations of experienced utility.

2.5. Subsymbolism and natural connectionism (link to point 2.4.1.: epistemological problem, meta problem)

In parallel with the development of open models of data processing, we learned a great deal about how the brain is built.
Open models of data processing, inspired by naturalistic models of learning and evolution, are ultimately reducible to various network or quasi-network models in which learning consists in creating/reinforcing connections between the elements of the network. These models came face to face with the fact that the brain is a structure consisting of a great many (ca. 100 billion) simple and mutually similar nerve cells (neurons) unified in a network (by ca. 100 milliards). All activities of the human mind are governed by the parallel and distributed processing that takes place in this network of neurons. There is one crucial philosophical issue to be stressed first. We cannot simply identify natural neural networks with their computer simulations. First of all, we simply do not have enough neurobiological information to know what kind of information is processed by neurons. We model only the idea of how the natural model works (within neurocognitive computer science) and only part of the real data processing taking place within the human brain (within neurocybernetics). Second, computer simulation of data processing is not in fact parallel. However, both differences may be regarded as insignificant: in the first case the difference results only from the lack of obtainable neurophysical knowledge (although the meaning of “obtainable” may be questioned), and in the second case (if parallelism really is significant for modeling some properties of the human mind, especially emergent properties) we are able to build systems that process information in parallel. The most important difference, however, seems to be the third one, concerning the potential limits of the openness (axiomatic power) of natural neural networks and of their computer models, which can be indicated along two dimensions (a cognitive and an evolutionary one).
In the cognitive dimension we can say that there may be physical processes that cannot be described by any symbolic, formalized means, and these processes might be significant for the emergence of the full functionality of the human mind. This is only a potential difference. We have to remember that it is possible that “reality” and the human mind are somehow incomplete or contradictory, which would amount to their being describable by symbolic, formalized means. In the evolutionary dimension we should consider what limits of evolutionary self-modification have to be taken into account in order to accept that they suffice to create a system with human-level intelligence (with all its emergent phenomena). In particular, we know that the brain is biologically quite flexible, which means that its biological structure, in particular the connections between neurons, emerges in a short time. Some more stable brain structures emerge by way of evolution. Probably, for recognizing human-level intelligence it is enough to indicate some set of “local” rules of physics that govern over the shortest time necessary for the phenomenon of consciousness to emerge. Such a set of rules governing a physical system may differ between natural neural networks and their computer simulations. A computer scientist would say that such a set of rules of physics is the learning level of an intelligent machine (represented by an unchangeable “algorithm” that modifies some elements of the schema of action), as distinct from the level of action (represented by the algorithm of activities through which the system directly influences the environment).[28] In the case of natural neural networks, some indeterminate factors that make them impossible to formalize may, on the other hand, make them more open. However, when we take into account the conclusions of point 2.4., we have to admit that, as with any other physical process, we use probabilistic and statistical methods (chance/randomness) to avoid the consequences of our lack of knowledge. That is also the reason why we are concerned with building neural networks rather than thinking them up. That is, we more often talk about the possibility of building a human-level machine from biological (cellular automata) or physical components (quantum effects) whose potential indeterministic effects will suffice to create a system as open as the human mind. That is the main reason why we regard computer simulations of neural networks as symbolic in the end (calling them subsymbolic). In the following point I will show how the effects of complexity and chance/randomness, and differences (including potential ones) in axiomatic power, give rise to the problem of reduction and, most importantly, what might in the end be recognized as reducible (from the perspective of the level of description of the human mind).

[28] Cichosz P., Systemy uczące się, Wydawnictwa Naukowo-Techniczne, Warszawa 2000, pp. 35-37.

2.6. Levels of description / levels of reducibility in conditions of complexity (link to point 2.4.2.: physical problem, problem of complexity)

Brain and mind are complex. They are open (mechanically complex) and dynamic. When explaining physical processes under conditions of complexity we always retain the ability to give a (worse) description (explanation) of the complex phenomenon using probabilistic methods. This freely allows us to include in such a description any unpredictable/unknown factors (including those which are not describable by symbolic, formalized means, if such exist). We can agree that absolute reduction is not possible, but as we concluded in 2.5. we can still give some kind of evolutionary/learning explanation of the phenomenon. The more reductive an explanation we want, the more irregularities we have to include in chance/randomness.
However, the most static frame theory, one that does not have to be modified in order to explain the phenomena in question, can still give us much information about their properties. Consequently, we may also acknowledge the possibility of partial reduction within the evolutionary/learning-mechanism perspective (independently of the impossibility of full reduction and of predicting the system). This is also the idea behind distinguishing different levels of description of the human mind (levels with different powers of axiomatization and approximation): various levels of symbolic description, the subsymbolic level, and finally the idea of a naturally connectionist level (above all, not bound by the potential limitations of subsymbolic systems that operate on binary signals). So, as mentioned at the end of 2.5, if we accept the fundamental fact that science really tells us something about the reality it describes, we can ask: what is the most static frame theory of the human mind, applicable to all kinds of human cognition (where reduction is much more probable)?

3. Conclusions: open model of higher cognitive processes

From the neurobiological perspective, we first have to admit that all functionality of the human mind is realized by a neural network consisting of simple and mutually similar nerve cells (neurons). We have recognized that the fundamental function of the neural network consists in the creation and reinforcement of connections between the neurons unified in that network. As regards the distribution of information processing in the brain, it seems that the differences between particular nerve cells (neurons) are negligible.
Although we distinguish procedural knowledge (regarding the agent's actions) from declarative knowledge, this does not mean that, from a neurobiological point of view, the storage, structure, and retrieval (mining) mechanism of procedural knowledge differ from those of declarative knowledge.29 From the "embodied", neurobiological, connectionist perspective, both representation and choice making are performed in the same way: by the creation and reinforcement of connections between neuronal structures unified in the neural network. However, we also have to admit that such an "embodied", neurobiological, connectionist perspective is of little use. From this perspective we can only say that all human activities are performed by realizing a categorization function (data mining). If such a strong statement is not enough, we have to climb up the levels of description and look at human behavior from some symbolic perspective, even though we know that such a description or explanation is limited (an approximation). All irregularities, however, we assign to the concept of chance/randomness and to the emergent concept of a goal (which itself includes chance/randomness, given its complex, evolutionary provenance).

These rational assumptions stand behind the rules governing the action of subsymbolic cognitive architectures (e.g. ACT-R).30 Every agent decides on the basis of a utility maximization rule. Every option is assigned a probability (P) of reaching the goal and a specified cost to be borne (C). If we denote the value of the goal by G, the expected value of the action is PG. Subtracting the cost, we may state symbolically that the utility assigned to a particular action is PG - C. However, it has been shown that we do not always follow the utility maximization rule.31 This irregularity is the reason why an element of randomness has been introduced into the mechanism of choice making.32 The choice is made within the so-called conflict resolution process.
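The utility rule above, and the noisy choice among options made during conflict resolution, can be sketched in a few lines of Python. This is only an illustrative sketch, not code from ACT-R: the function names, the option values, the goal value G = 20, and the two values of the noise parameter t are all assumptions chosen for the example. The choice rule used is the Boltzmann (softmax) form.

```python
import math
import random

def utility(p, g, c):
    """Utility of an action: expected gain P*G minus the cost C."""
    return p * g - c

def choice_probabilities(utilities, t):
    """Boltzmann probabilities P(i) = exp(U_i/t) / sum_j exp(U_j/t)."""
    if t <= 0:
        raise ValueError("t must be positive")
    # Subtracting the maximum utility before exponentiating avoids overflow
    # and leaves the resulting probabilities unchanged.
    u_max = max(utilities)
    weights = [math.exp((u - u_max) / t) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]

def choose(utilities, t, rng=random):
    """Sample the index of one action according to the Boltzmann probabilities."""
    probs = choice_probabilities(utilities, t)
    return rng.choices(range(len(utilities)), weights=probs, k=1)[0]

# Hypothetical options, each a (P, C) pair, for an assumed goal value G.
G = 20.0
options = [(0.9, 5.0), (0.6, 1.0), (0.3, 0.5)]
utilities = [utility(p, G, c) for p, c in options]

# A small t makes the choice nearly deterministic (close to pure utility
# maximization); a large t spreads probability over the weaker options.
for t in (0.5, 5.0):
    probs = choice_probabilities(utilities, t)
    print("t =", t, "probabilities:", [round(x, 3) for x in probs])

print("sampled action index:", choose(utilities, t=0.5))
```

With a low value of t the agent almost always picks the option with the highest PG - C, while a higher value lets less useful options be chosen more often, which is how the randomness element absorbs observed departures from strict maximization.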
Using the same Boltzmann equation that applies to computing the activation of a single chunk of knowledge, the probability of choosing a particular action i with assigned utility U_i is given by:

$$P(i) = \frac{e^{U_i/t}}{\sum_j e^{U_j/t}}$$

where the sum runs over all competing actions j and t is a noise (temperature) parameter.

In conclusion, within the static frame of our knowledge of human behavior, the concepts of randomness/chance, goals, and utility arise. We can now freely defend such a utilitarian model: although it cannot give anything close to normative answers, and although human behavior is not absolutely reducible and remains unpredictable, the most suitable description of human behavior still uses the concept of chance/randomness, which absorbs the irregularities, together with the concepts of goals and utilities (which in the end also include some random effects of complexity evolving over time). This shows why utilitarian models remain so popular despite inept criticism based on a faulty understanding of the problems indicated in point 2 (the meta problem, the problem of complexity, and the problem of chance/randomness).

My aim is not to deny the idea of free will or intentionality. Given the infinity of human ignorance, we are free to interpret unpredictability, the indeterministic factor (chance/randomness), as we wish. I only want to show that everything that is too fuzzy for us to know is reducible to randomness, and that the most accurate knowledge we have is expressed in the most static symbolic frames, described above, of the cognitive-scientific description of human cognitive processes. For a clear understanding of the explained "reality" and of the human mind, it is better to replace the concept of free will with the concept of randomness, because the latter is more meaningful and concrete and does not carry controversial ontological baggage.

29 Nęcka, E., Orzechowski, J. & Szymura, B. (2006). Psychologia poznawcza. Warszawa: Wydawnictwo Naukowe PWN.

30 Anderson, J. R. (1983). The Architecture of Cognition. Mahwah, NJ: Lawrence Erlbaum; Anderson, J. R. (1990). The Adaptive Character of Thought. Hillsdale, NJ: Lawrence Erlbaum; Anderson, J. R. (1993). Rules of the Mind. Hillsdale, NJ: Lawrence Erlbaum; Anderson, J. R., Bothell, D., Lebiere, C. & Matessa, M. (1998). An integrated theory of list memory. Journal of Memory and Language, 38, 341-380; Anderson, J. R. & Lebiere, C. (1998). The Atomic Components of Thought. Mahwah, NJ: Lawrence Erlbaum; Anderson, J. R., Matessa, M. & Lebiere, C. (1997). ACT-R: a theory of higher level cognition and its relation to visual attention. Human-Computer Interaction, 12, 439-462; Anderson, J. R., Matessa, M. & Lebiere, C. (1998). The visual interface. In J. R. Anderson & C. Lebiere (Eds.), The Atomic Components of Thought, pp. 143-168. Mahwah, NJ: Lawrence Erlbaum; Anderson, J. R. & Reder, L. M. (1999). The fan effect: new results and new theories. Journal of Experimental Psychology: General, 128, 186-197.

31 Dawes, R. (1988). Rational Choice in an Uncertain World. New York: Harcourt, Brace and Jovanovich.

32 Lovett, M. C. (1998). Choice. In J. R. Anderson & C. Lebiere (Eds.), The Atomic Components of Thought, pp. 255-296. Mahwah, NJ: Lawrence Erlbaum.