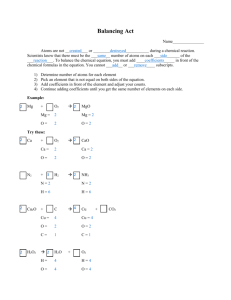

Damage classification in laboratory structures using time series


Nearest Neighbor and Learning Vector Quantization classification for

damage detection using time series analysis

Oliver R. de Lautour

Department of Civil and Environmental Engineering

The University of Auckland

Private Bag 92019

Auckland Mail Centre

Auckland 1142

New Zealand

Email: oliver.delautour@beca.com

Piotr Omenzetter (corresponding author)

Department of Civil and Environmental Engineering

The University of Auckland

Private Bag 92019

Auckland Mail Centre

Auckland 1142

New Zealand

Email: p.omenzetter@auckland.ac.nz

Tel.: +64 9 3737599 ext. 88138

Fax: +64 9 3737462

Abstract

The application of time series analysis methods to Structural Health Monitoring (SHM) is a

relatively new but promising approach. This study focuses on the use of statistical pattern

recognition techniques to classify damage based on analysis of the time series model

coefficients. Autoregressive (AR) models were used to fit the acceleration time histories

obtained from two experimental structures: a 3-storey laboratory bookshelf structure and the

ASCE Phase II Experimental SHM Benchmark Structure in undamaged and various damaged

states. The coefficients of the AR models were used as damage sensitive features. Principal

Component Analysis and Sammon mapping were used first to obtain two-dimensional

projections for quick visualization of clusters amongst the AR coefficients corresponding to

the various damage states, and later for dimensionality reduction of data for automatic

damage classifications. Data reduction based on the selection of sensors and AR coefficients

was also studied. Two supervised learning algorithms, Nearest Neighbor Classification and

Learning Vector Quantization, were applied in order to systematically classify damage into

states. The results showed both classification techniques were able to successfully classify

damage.

Keywords: Structural Health Monitoring, Damage detection, Time series analysis,

Autoregressive models, Nearest neighbor classification, Learning Vector Quantization.


1. Introduction

Over the past two decades considerable research efforts have focused on developing robust

and reliable methods for Structural Health Monitoring (SHM), amongst which vibration-based

techniques are the most widely used and appear to be the most promising. Extensive

literature reviews of such methods can be found elsewhere [1, 2] and the focus here is

restricted to techniques relevant to this study, namely, the application of time series methods

for damage detection in civil infrastructure and the use of statistical pattern recognition

techniques for damage classification.

Time series analysis techniques, originally developed for analyzing long sequences of

regularly sampled data, are inherently suited to SHM applications. However, applications of

such techniques for SHM can still be considered as an emerging and a relatively unexplored

approach. An interesting and promising idea is to use time series coefficients as damage

sensitive features. A pioneering study by Sohn et al. [3] used Autoregressive (AR) models to

fit the dynamic response of a concrete bridge pier. By performing statistical analysis on the

coefficients of the AR models the authors were able to distinguish between the healthy and

damaged structure. In a later study, Sohn et al. [4] applied a similar methodology to health

monitoring of a surface-effect fast patrol boat. However, these studies focused only on

damage detection and the authors did not attempt to classify damage into states

corresponding to its severity. Gul et al. [5] presented a study in which AR coefficients from a

laboratory steel beam with varying support conditions were classified using a clustering

algorithm or multivariate statistics. Omenzetter and Brownjohn [6] used a vector Seasonal

Autoregressive Integrated Moving Average model to detect abrupt changes in strain data

collected from the continuous monitoring of a major bridge structure. The seasonal model

was used because of the strong diurnal component in the data caused by temperature

variations. Nair et al. [7] used an Autoregressive Moving Average time series to model

vibration signals from the ASCE Phase II Experimental SHM Benchmark Structure. The

authors defined a damage sensitive feature used to discriminate between the damaged and

undamaged states of the structure based on the first three AR coefficients. The localization of

the damage was achieved by introducing another feature, also based on the AR coefficients,

found to increase from a baseline value when damage was near. Nair and Kiremidjian [8]

investigated Gaussian Mixture Modeling, an unsupervised pattern recognition technique, to

model the first AR coefficients obtained from fitting ARMA time series to an analytical 12-DOF model of the ASCE Phase II Experimental SHM Benchmark Structure. The extent of

damage was shown to correlate well with the Mahalanobis distance between undamaged and

damaged feature clusters.

In order to differentiate between undamaged and damaged systems and locate and quantify

damage, analytical techniques are required to interpret patterns in the damage sensitive

features. Such methods can broadly be divided into supervised techniques when data from

damaged structures is available for training of pattern recognition algorithms, and

unsupervised when it is not. Widely employed supervised techniques include Artificial

Neural Networks [9-11], Genetic Algorithms [12], Support Vector Machines [13, 14] and

discriminant analysis [15]. A number of unsupervised methods have also been investigated

and these include outlier detection [16-18], control chart analysis [3, 19] and clustering

analysis [5, 20, 21]. The present study utilizes the techniques of Nearest Neighbor

Classification (NNC) and Learning Vector Quantization (LVQ). These algorithms have been

applied in the past in a number of damage detection studies mostly concerned with faults in

composite materials, mechanical systems and aerospace structures. Philippidis et al. [22] and


Godin et al. [23] classified the waveforms of acoustic emission signals recorded during

destructive tensile tests on coupons of composite materials. Raju Damarla et al. [24] and

Doyle and Fernando [25] analyzed high frequency vibration data from damaged composite

panels and classified damage using frequency and time domain features. Tse et al. [26]

studied a tapping machine using statistical features of dynamic forces and torques measured

in the various machine components and identified several states in which the machine was

operating. Abu-Mahfouz [27] considered classification of damage in a gearbox system

combining frequency response data and basic statistical measures of time-domain dynamic

signals. Trendafilova et al. [28] considered detection of damage by examining frequency

response functions of the FEM model of an aircraft wing. Despite the interest these

techniques drew amongst the composite materials, machine and aircraft monitoring

community, the application of NNC and LVQ for classification of damage to civil

infrastructure has not yet been studied.

This study develops a method for structural damage detection that integrates the use of AR

models to establish damage sensitive features and application of the NNC and LVQ

techniques for classification of the AR coefficients depending on damage type and location.

The proposed method firstly fits the structural acceleration time histories in the undamaged

and various damaged states of the system with AR models. The coefficients of these AR

models are then chosen as damage sensitive features. In order to enable visualization of the

data and check the presence of clusters amongst the AR coefficients corresponding to the

different damage states they are initially projected onto two dimensions using Principal

Component Analysis (PCA) and Sammon mapping. The vectors of damage sensitive features,

with dimensions reduced via PCA or selection of subsets of sensors and AR coefficients,

are then analyzed using NNC and LVQ algorithms in order to classify damage into states.

The method has been applied to experimental data obtained from a simple 3-storey laboratory

bookshelf structure excited on a shake table and the more complex ASCE Phase II

Experimental SHM Benchmark Structure excited by a small shaker.

2. Theory

2.1 Autoregressive models

In this study, AR time series models are used to describe the acceleration time histories. AR

models are a staple of time series analysis [29] and are used in the analysis of stationary time

series processes. A stationary process is a stochastic process, one that obeys probabilistic

laws, in which the mean, variance and higher order moments are time invariant. AR models

attempt to account for the correlations of the current observation in time series with its

predecessors. A univariate AR model of order p, or AR(p), for the time series {xt} (t =

1,2,…n) can be written as:

$$x_t = \phi_1 x_{t-1} + \phi_2 x_{t-2} + \dots + \phi_p x_{t-p} + a_t \tag{1}$$

where xt,…xt-p are the current and previous values of the series and {at} is a Gaussian white

noise error time series with a zero mean. The AR coefficients φ1,…, φp can be evaluated

using a variety of methods [29]. In this study, the coefficients were calculated using a least

squares solution. While using the least squares method for small samples may introduce bias

in the AR coefficient identification, for larger samples, as in our case, this bias is usually

negligible [30, 31].
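As an illustration of the least squares estimation mentioned above, the following is a minimal sketch, assuming an ordinary least squares regression of each sample on its p predecessors as in Eq. (1). The function names `fit_ar_least_squares` and `simulate_ar` are hypothetical and are not taken from the study; the simulated series only serves to check the estimator on data with known coefficients.

```python
import numpy as np


def fit_ar_least_squares(x, p):
    """Least-squares estimate of the AR(p) coefficients in Eq. (1)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    # Column k-1 holds the lag-k values x[t-k] for t = p, ..., n-1.
    lagged = np.column_stack([x[p - k : n - k] for k in range(1, p + 1)])
    target = x[p:]
    coeffs, *_ = np.linalg.lstsq(lagged, target, rcond=None)
    residuals = target - lagged @ coeffs
    return coeffs, residuals


def simulate_ar(coeffs, n, seed=0):
    """Synthetic AR series driven by Gaussian white noise (for checking only)."""
    rng = np.random.default_rng(seed)
    p = len(coeffs)
    x = np.zeros(n + p)
    noise = rng.standard_normal(n + p)
    for t in range(p, n + p):
        # x[t - p : t][::-1] is the vector x[t-1], ..., x[t-p].
        x[t] = np.dot(coeffs, x[t - p : t][::-1]) + noise[t]
    return x[p:]
```

For a long simulated record the estimated coefficients should approach the values used to generate it, which is consistent with the remark that the bias becomes negligible for larger samples.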


2.2 Nearest Neighbor Classification

NNC is a simple supervised pattern recognition technique [32]. Given a set of pre-selected

and fixed reference or codebook vectors mi (i = 1, …, k) corresponding to known classes, an

unknown input vector x is assigned to the class to which the nearest mi belongs. Several

distance measures can be used including Manhattan, Euclidean, Correlation and

Mahalanobis. In this research the Euclidean and Mahalanobis distance measures were used.

The Euclidean distance DE(x,y) between two vectors x and y can be calculated using:

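$$D_E(\mathbf{x}, \mathbf{y}) = \sqrt{(\mathbf{x} - \mathbf{y})^T (\mathbf{x} - \mathbf{y})} \tag{2}$$

The Mahalanobis distance DM(x,y) between two vectors of the same multivariate distribution

with a covariance matrix Σ can be calculated from:

$$D_M(\mathbf{x}, \mathbf{y}) = \sqrt{(\mathbf{x} - \mathbf{y})^T \boldsymbol{\Sigma}^{-1} (\mathbf{x} - \mathbf{y})} \tag{3}$$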

The Mahalanobis distance accounts explicitly for the different scales and correlations

amongst vector entries and can be more useful in cases where these are significant.
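The classification rule and the two distance measures of Eqs. (2) and (3) can be sketched as follows. This is a minimal illustration rather than the implementation used in the study; the function name `nearest_neighbor_classify` and its arguments are hypothetical, and the covariance matrix is assumed to be supplied by the caller.

```python
import numpy as np


def nearest_neighbor_classify(x, codebook, labels, cov=None):
    """Return the label of the codebook vector nearest to x.

    With cov=None the squared Euclidean distance of Eq. (2) is used;
    otherwise the squared Mahalanobis distance of Eq. (3) with covariance cov.
    Squaring does not change which codebook vector is nearest.
    """
    codebook = np.asarray(codebook, dtype=float)
    diff = codebook - np.asarray(x, dtype=float)
    if cov is None:
        d2 = np.einsum("ij,ij->i", diff, diff)
    else:
        # Solve cov * s = diff^T instead of forming the inverse explicitly.
        solved = np.linalg.solve(np.asarray(cov, dtype=float), diff.T).T
        d2 = np.einsum("ij,ij->i", diff, solved)
    return labels[int(np.argmin(d2))]
```

When one vector entry has a much larger variance than the others, the two measures can assign the same input to different classes, which is the situation the Mahalanobis distance is intended to handle.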

2.3 Learning Vector Quantization

LVQ is a supervised machine learning technique designed for classification or pattern

recognition by defining class borders [32]. It is similar to NNC in that it uses a set of

codebook vectors and uses the minimum distance from an unknown vector to these

codebook vectors as the criterion for classification. However, unlike in NNC where codebook

vectors are fixed, an iterative procedure is adopted in which the position of the codebook

vectors is adjusted to minimize the number of misclassifications. Learning of the optimal

codebook vector positions can be performed using several algorithms. In this study, the

Optimized-Learning-Rate LVQ1 algorithm was used. This algorithm has an individual

learning rate for each codebook vector, resulting in faster training.

Given a set of initial codebook vectors mi (i = 1, …, k) which have been linked to each class

region, the input vector x is assigned to the class to which the nearest mi belongs, i.e. an NNC

task is performed. Let c define the index of the nearest codebook vector, i.e. mc. Learning is

an iterative procedure in which the position of the codebook vectors is adjusted to minimize

the number of misclassifications. At iteration step t let x(t) and mi(t) be the input vector and

codebook vectors respectively. The mi(t) are adjusted according to the following learning

rule:

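$$\begin{aligned} \mathbf{m}_c(t+1) &= [1 - s(t)\,\alpha_c(t)]\,\mathbf{m}_c(t) + s(t)\,\alpha_c(t)\,\mathbf{x}(t) \\ \mathbf{m}_i(t+1) &= \mathbf{m}_i(t) \quad \text{for } i \neq c \end{aligned} \tag{4}$$

where s(t) equals +1 or -1 if x(t) has been respectively classified correctly or incorrectly, and

αc(t) is the variable learning rate for codebook vector mc:

$$\alpha_c(t) = \frac{\alpha_c(t-1)}{1 + s(t)\,\alpha_c(t-1)} \tag{5}$$

The learning rate must be constrained such that αc(t) < 1.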
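The learning rule of Eqs. (4) and (5) can be sketched as below. This is an illustrative sketch under several assumptions that are not stated in the text: the Euclidean distance is used to find the nearest codebook vector, the training vectors are presented in random order for a fixed number of passes, and the constraint on the learning rate is enforced by capping it at its initial value. The names `olvq1_train`, `alpha0` and `epochs` are hypothetical.

```python
import numpy as np


def olvq1_train(X, y, codebook, codebook_labels, alpha0=0.3, epochs=10, seed=0):
    """Adjust codebook vectors with the optimized-learning-rate LVQ1 rule."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    m = np.array(codebook, dtype=float)   # copy, so the input is not modified
    alpha = np.full(len(m), alpha0)       # one learning rate per codebook vector
    for _ in range(epochs):
        for idx in rng.permutation(len(X)):
            x = X[idx]
            # Index c of the nearest codebook vector (Euclidean, an assumption).
            c = int(np.argmin(np.sum((m - x) ** 2, axis=1)))
            s = 1.0 if codebook_labels[c] == y[idx] else -1.0
            # Eq. (5), capped at alpha0 < 1 to keep the rate bounded.
            alpha[c] = min(alpha[c] / (1.0 + s * alpha[c]), alpha0)
            # Eq. (4): move m_c towards x if correct, away from x if not.
            m[c] = (1.0 - s * alpha[c]) * m[c] + s * alpha[c] * x
    return m
```

After training, an unknown vector is classified exactly as in NNC, using the adjusted codebook vectors in place of the fixed ones.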

2.4 Principal Component Analysis

PCA is a popular multivariate statistical technique often used to reduce multidimensional

data sets to lower dimensions [33]. Given a set of p-dimensional vectors xi (i = 1, …, n)

drawn from a statistical distribution with mean x̄ and covariance matrix Σ, PCA seeks to

project the data onto a new p-dimensional space with orthogonal coordinates via a linear

transformation.

Decomposition of the covariance matrix by singular value decomposition leads to:

$$\boldsymbol{\Sigma} = \mathbf{V} \boldsymbol{\Lambda} \mathbf{V}^T \tag{6}$$

where Λ = diag[σ1², …, σp²] is a diagonal matrix containing the eigenvalues of Σ ranked in

the descending order σ1² ≥ … ≥ σp², and V is a matrix containing the corresponding

eigenvectors or principal components. The transformation of a data point xi into principal

components is:

$$\mathbf{z}_i = \mathbf{V}^T (\mathbf{x}_i - \bar{\mathbf{x}}) \tag{7}$$

The new coordinates zi are uncorrelated and have a diagonal covariance matrix Λ. Therefore,

the entries of zi are linear combinations of the entries of xi which explain variances σ1²,

…, σp². To reduce the dimensionality, a selection q < p of principal components can be used

that retains those components that contribute most to the data variance, thus reducing the

dimension of the data to q.
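The projection described by Eqs. (6) and (7) can be sketched as follows. The sketch assumes the sample covariance matrix is used and decomposes it with a symmetric eigendecomposition, which for a covariance matrix gives the same factors as the singular value decomposition mentioned above. The function name `pca_project` is hypothetical.

```python
import numpy as np


def pca_project(X, q):
    """Project the rows of X onto the first q principal components."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    cov = np.cov(X, rowvar=False)            # sample covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]        # re-rank in descending order
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    Z = (X - mean) @ eigvecs[:, :q]          # Eq. (7), keeping q components
    return Z, eigvals
```

The returned eigenvalues are the variances explained by each principal component, so the covariance matrix of the projected data is diagonal with the first q of them on its diagonal.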

2.5 Sammon mapping

Sammon mapping [34] is a nonlinear transformation used for mapping a high dimensional

space to a lower dimensional space in which local geometric relations are approximated.

Consider a set of vectors xi (i = 1, …, n) in a p-dimensional space and a corresponding set of

vectors yi in a q-dimensional space, where q < p. For visualization purposes q is usually

chosen to be two or three. The distance between vectors xi and xj in p-dimensional space is

given by:

$$D_{ij}^{*} = D(\mathbf{x}_i, \mathbf{x}_j) \tag{8}$$

and the distance between the corresponding vectors yi and yj in q-dimensional space is:

$$D_{ij} = D(\mathbf{y}_i, \mathbf{y}_j) \tag{9}$$

where D is a distance measure, usually the Euclidean distance. Mapping is achieved by

adjusting the vectors yi to minimize the following error function by steepest descent [35]:

$$E = \frac{1}{\sum_{i=1}^{n} \sum_{j>i} D_{ij}^{*}} \sum_{i=1}^{n} \sum_{j>i} \frac{\left(D_{ij} - D_{ij}^{*}\right)^2}{D_{ij}^{*}} \tag{10}$$
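The error function of Eq. (10) can be evaluated as in the sketch below, which assumes the Euclidean distance for D, sums over each pair of points once, and assumes that no two points in the original space coincide (so that no division by zero occurs). Only the error function is shown; the steepest descent update of the vectors yi is omitted. The function names are hypothetical.

```python
import numpy as np


def pairwise_distances(A):
    """Matrix of Euclidean distances between all rows of A."""
    diff = A[:, None, :] - A[None, :, :]
    return np.sqrt(np.sum(diff ** 2, axis=-1))


def sammon_stress(X, Y):
    """Sammon error E between points X (p-dim) and their images Y (q-dim)."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    upper = np.triu_indices(len(X), k=1)      # each pair (i, j) with j > i
    d_star = pairwise_distances(X)[upper]     # distances in the original space
    d = pairwise_distances(Y)[upper]          # distances in the mapped space
    return np.sum((d - d_star) ** 2 / d_star) / np.sum(d_star)
```

A mapping that preserves all pairwise distances gives E = 0, and because each squared discrepancy is divided by the original distance, errors in small distances are weighted more heavily than errors in large ones.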

3. Application to a 3-storey bookshelf structure

The 3-storey experimental bookshelf structure used in this study was approximately 2.1m

high and constructed from equal angle aluminum column sections and stainless steel floor

plates bolted together with aluminum brackets as shown in Figure 1. The stainless steel plates

were 4mm thick and 650mm × 650mm square. The column sections were 30mm × 30mm

equal angles. Two section thicknesses were used for the columns, either 3.0mm or 4.5mm for

the damaged and undamaged states respectively. Each column was made of 3 × 0.7m high

segments, rather than one long angle, in order to make them easily replaceable for simulation

of localized damage at different stories. The column sections were fastened at each end with

two M6 bolts to aluminum brackets (Fig. 1b). Additional brackets were installed at the base

of the structure to minimize torsional motion. The whole structure was mounted on a 20mm

plywood sheet fixed with M10 bolts to the shake table.

The structure was instrumented with four uniaxial accelerometers, one for measuring the

table acceleration and one for each storey. Accelerations were measured in the direction of

ground motion at 400Hz using a computer fitted with a data-logging card. Afterwards the

data was decimated by a factor of four for modal analysis and eight for time series modeling.

Damage was introduced into the structure by replacing the original 4.5mm thick columns of a

particular storey with 3.0mm angles. Four damage states were considered; these were labeled

D0, D1, D2 and D3 and corresponded to no damage (healthy structure), 1st storey damage, 2nd

storey damage and simultaneous 1st and 2nd storey damage, respectively. Figure 2 schematically shows

these four damage states together with the percentage of remaining stiffness obtained via

model updating described below.

In order to understand and quantify the dynamic properties of the structure, modal analysis

was conducted in each damage state and modal parameters were estimated from the

identification of a discrete state-space model using the Prediction Error Method [36]. For

modal analysis the input into the shake table was white noise. Table 1 shows the estimated

natural frequencies fe and percentage changes of the frequencies fe in states D1, D2, and D3

in relation to D0. Modal damping was approximately 1% for all modes. The modal analysis

results were used to update simple lumped mass-spring models of the structure. The updating

was performed in order to assess the structural stiffness in different damage states. The

masses lumped at each storey were calculated from known section sizes and initial estimates

of lateral storey stiffness were provided from simple hand calculations. Modal frequencies

were used to update the mass-spring models using a sensitivity matrix approach and the

analytical and experimental mode shapes were checked using the Modal Assurance Criterion

(MAC) [37]. From these models the lateral stiffness of each storey in each damage state was

estimated. The results from model updating are given in Table 2. Reductions in lateral

stiffness between 7-10% were observed (see also Figure 2).


The objective of the damage detection study was to classify seismic responses measured in

the four damage states and to that end eight scaled earthquake records were used to excite the

structure. Table 3 lists the earthquakes used, the Peak Ground Acceleration (PGA) of the

original and scaled records, the duration of the record and the frequency at which the

earthquake was sampled. The earthquakes were scaled so that a range of response amplitudes

was obtained, while ensuring no yielding of the structure occurred. The acceleration time

history of each storey was modeled using a univariate AR model. Although the structural

response due to earthquake motion would normally be considered nonstationary, the signal

within sufficiently short segments can be treated as approximately stationary [38].

In this study, the optimal AR model was selected using the Akaike Information Criterion

(AIC) [39]. The AR coefficients were estimated from a 500-point window advancing 100

points until the end of the record was reached. Averaging AICs over the 3 stories showed a

minimum at order 24. Model diagnostic checking, comprising testing the stationarity and

normality of the residuals and significance of sample residual Autocorrelation Function

(ACF) and Partial Autocorrelation Function (PACF), was conducted [29]. The Kolmogorov-Smirnov test [40] was used to test for normality of the residuals and the Ljung-Box statistic

[29] was used to test for correlation. The tests indicated that the residuals conformed to a

Gaussian white noise process. A data set of 388 points (vectors of AR coefficients)

containing 97 points for each damage state was obtained.
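The windowed feature extraction and order selection described above can be sketched as follows. The sketch makes assumptions that the text does not spell out: the AIC is taken in the common form N ln(σ²) + 2p, with σ² the residual variance and N the number of fitted samples, and the window simply slides from the start of the record in steps of 100 points. The function names `ar_fit`, `aic` and `windowed_ar_features` are hypothetical.

```python
import numpy as np


def ar_fit(x, p):
    """Least-squares AR(p) fit; returns coefficients and residual variance."""
    x = np.asarray(x, dtype=float)
    lagged = np.column_stack([x[p - k : x.size - k] for k in range(1, p + 1)])
    target = x[p:]
    coeffs, *_ = np.linalg.lstsq(lagged, target, rcond=None)
    return coeffs, np.mean((target - lagged @ coeffs) ** 2)


def aic(x, p):
    """AIC of an AR(p) fit in the assumed form N * ln(sigma^2) + 2 * p."""
    _, sigma2 = ar_fit(x, p)
    return (len(x) - p) * np.log(sigma2) + 2 * p


def windowed_ar_features(x, p, width=500, step=100):
    """One vector of AR(p) coefficients per window of `width` points."""
    x = np.asarray(x, dtype=float)
    starts = range(0, len(x) - width + 1, step)
    return np.array([ar_fit(x[s : s + width], p)[0] for s in starts])
```

With these settings a record of 1000 points yields six overlapping windows, and the vectors from the individual storeys would then be concatenated to form one feature vector per window.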

3.1 Damage classification in the 3-storey bookshelf structure

Using three univariate AR(24) models, one for each floor, resulted in 72-dimensional vectors

of damage sensitive features. As a preliminary investigation, to visualize and check the

presence of clusters in the data, PCA and Sammon mapping were used to create two and

three-dimensional projections of the vectors of AR coefficients. The results of the two-dimensional projections are shown in Figures 3 and 4 for PCA and Sammon mapping,

respectively. Projection of the data onto the first two principal components showed no clearly

defined clusters with data points from all four damage states scattered amongst one another.

In contrast, the Sammon map showed some organization of the data into overlapping bands,

although again, no distinct clusters could be drawn. Using three-dimensional mappings did

not provide a better separation. These preliminary insights indicate that higher dimensional

data need to be investigated to achieve separation of the AR coefficients from different

damage states. Simple visual techniques will then be inadequate and more advanced

approaches such as NNC and LVQ are required.

The two previously described pattern recognition techniques, NNC and LVQ were used to

classify damage into the states D0-D3. Two data reduction techniques were investigated: (i)

projection of the data onto a lower dimensional space using PCA, and (ii) selection of subsets

of AR coefficients. Both techniques were studied because PCA systematically

retains the feature components that contribute most to the data variance, which may

be difficult to achieve by selecting AR coefficients through a trial and error

approach. Due to the computational effort required for Sammon mapping this technique was

not used at this stage.

The feature dimension was reduced by projecting the AR coefficients onto the first 60, 40,

30, 20 or 10 principal components using PCA. The 388-point data set, consisting of 97 points

from each damage state was randomly divided into 300 codebook vectors and 88 testing


points respectively. Five different random sets of codebook vectors were considered. Using

NNC and averaging the results from five runs, the obtained number of misclassifications and

percentage errors is given in Table 4 for both the Euclidean and Mahalanobis distance

measures. The Mahalanobis distance measure outperformed the Euclidean by a considerable

margin and adequate results with 6% misclassifications were obtained using 20 principal

components. Good classification results, 5% or less misclassifications, were achieved using

more than 30 principal components while excellent classification, 1% or less

misclassifications, required 60 principal components. Using the Euclidean distance measure a

large number of misclassifications was obtained, in excess of 34%. The

difference in performance between the Mahalanobis and Euclidean distance measures could

be explained by the fact that the Mahalanobis distance accounts for the different scales of

each principal component.
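The evaluation procedure described above (a random split into codebook vectors and testing points, followed by NNC) can be sketched as below. This is an illustration under assumptions: the covariance matrix for the Mahalanobis distance is supplied by the caller, since the text does not state how it was estimated, and the function name `misclassification_rate` is hypothetical. The feature array is assumed to hold one PCA-reduced vector per row and the labels to be a NumPy array of damage states.

```python
import numpy as np


def misclassification_rate(features, labels, n_codebook, cov=None, seed=0):
    """Fraction of test points misclassified by NNC for one random split."""
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(features))
    ref, test = order[:n_codebook], order[n_codebook:]
    # Identity matrix gives the Euclidean distance; inv(cov) gives Mahalanobis.
    dim = features.shape[1]
    inv_cov = np.eye(dim) if cov is None else np.linalg.inv(cov)
    errors = 0
    for i in test:
        diff = features[ref] - features[i]
        d2 = np.einsum("ij,jk,ik->i", diff, inv_cov, diff)
        errors += int(labels[ref][np.argmin(d2)] != labels[i])
    return errors / len(test)
```

Calling the function with five different seeds and averaging the results mirrors the averaging over five random sets of codebook vectors; with 388 points and 300 codebook vectors each run tests 88 points.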

Four subsets of AR coefficients were investigated: (i) the first five coefficients, (ii) the first ten

coefficients, (iii) the first fifteen coefficients and (iv) the first twenty coefficients, selected from each of the three models.

Using NNC and the Mahalanobis distance, the obtained number of misclassifications and

percentage errors is given in Table 5 as an average of five runs with different codebook

vectors. Good classification, less than 5% errors, was obtained using 10 AR coefficients or

more. Similar performance between the two data reduction techniques was observed for

smaller feature dimensions. For example, using 10 AR coefficients, equating to a feature

dimension of 30, 4 misclassifications were recorded. When using 30 principal components, 4

misclassifications were also recorded. However, for larger feature dimensions PCA

performed better than selection of subsets of AR coefficients. Using 20 AR coefficients and

60 principal components, 3 and 1 misclassifications were obtained respectively.

Although NNC performed well, performance could be improved by using a more advanced

classification technique such as LVQ. The LVQ classification was used with the Mahalanobis

distance measure and the PCA dimensionality reduction technique. The 388-point data set

was divided into 300 points for training and 88 points for testing. The number of codebook

vectors was chosen to be 30, 50 or 100. These were initialized by random selection from the

training data set. The results averaged from five runs with different initialized codebook

vectors are shown in Table 6. The table shows that increasing the number of codebook

vectors reduced the number of misclassifications. Good classifications were obtained when

20 or more principal components were used while excellent classification was achieved using

30 principal components. Overall, LVQ performed better than NNC with excellent

classifications obtained using 30 principal components and much smaller numbers of

codebook vectors compared to 60 principal components using NNC.

4. Application to ASCE Phase II Experimental SHM Benchmark Structure

The ASCE Phase II Experimental SHM Benchmark Structure [41] is a 4-storey 2-bay by 2-bay steel frame with a 2.5m × 2.5m floor plan and a height of 3.6m (Figure 5a). The columns

were B100×9 sections and the floor beams were S75×11 sections; all sections were Grade

300 steel. The beams and columns were bolted together. Bracing was added in all bays with

two 12.7mm diameter threaded steel rods, see Figure 5b. Additional mass was distributed

around the structure to make it more realistic. Four 1000kg floor slabs were placed on the 1st,

2nd and 3rd floors, one per bay. On the 4th floor, four 750kg slabs were used. Two of the slabs

per floor were placed off-centre to increase the coupling between translational and torsional

motion.


In the following discussions the locations in the structure are referred to using their respective

geographical directions of north (N), south (S), east (E) and west (W).

A total of 9 damage scenarios were simulated on the structure; these involved the removal of

bracing and the loosening of bolts in the floor beam connections. Table 7 lists the damage

states and gives a description of damage. The different configurations give a mixture of

minor and extensive damage cases.

A series of ambient and forced vibration tests were carried out on the structure. Of primary

interest in this study were the forced random vibration tests conducted using an electrodynamic shaker mounted on the SW bay of the 4th floor on the diagonal. Input into the shaker

was band-limited 5-50Hz white noise. The structure was instrumented with 15

accelerometers; three accelerometers each for the base, 1st, 2nd, 3rd and 4th floors. These were

located on the E and W frames to measure motion in the N-S direction and in the centre to

measure E-W motion. Acceleration data was recorded at 200Hz using a data acquisition

system and filtered with anti-aliasing filters.

The optimal model order was selected using AIC and the residual error series was checked

for confirmation of a Gaussian white noise process following the same process as described

earlier. Univariate AR(30) models were adopted and fitted to the acceleration data from each

of the 15 accelerometers. This resulted in the dimension of feature (AR coefficients) vectors

of 300. The AR coefficients were estimated using least squares from 1000-point segments

advancing 200 points until the end of the record was reached. A data set of 1035 feature

vectors was obtained, 115 from each configuration.

4.1 Damage classification in the ASCE Phase II Experimental SHM Benchmark Structure

Preliminary investigations showed that projection of the data onto the first two principal

components allowed much clearer clustering in the data to be seen than in the case of the 3-storey bookshelf structure (Figure 6). Two larger clusters were apparent that consisted of the

configurations 1-6 and configurations 7-9. Some overlapping existed between certain damage

configurations, in particular configurations 1, 5 and 6. Using Sammon mapping, see Figure 7,

six large-scale clusters could be seen. Two of the clusters consisted of configurations 1, 5,

and 6 and configurations 3 and 4, while the remaining clusters were solely formed by a single

configuration.

Two data reduction techniques were once again investigated: (i) projection of the data onto a

lower dimensional space using PCA and (ii) selection of subsets of AR coefficients and/or

accelerometers. The reason for considering the selection of sensors as a data reduction

method was that in practical situations one will not have the comfort of having a large

number of accelerometers to perform PCA but will rather have to decide on their practically

feasible numbers and locations before deploying a monitoring system.

For the purpose of damage classification using NNC, the feature dimension was reduced by

projection of the data onto the first 20, 10, 5 or 3 principal components. The 1035-point data

set was randomly divided into 700 codebook vectors and 335 testing points. Averaging the

results from five runs with different selection of codebook vectors the number of

misclassifications and percentage errors is given in Table 8. In this case, similar performance

was obtained using both the Euclidean and Mahalanobis distance measures. This can be

attributed to the fact that the data from the different damage configurations appear to be well

separated. Excellent performance (1% or less misclassifications) was obtained using only 10

principal components. These results represent a significant reduction in dimensionality while

good accuracy was maintained.

Reducing the number of AR coefficients and/or accelerometers may adversely affect

information contained in the data and degrade the performance of the damage detection

method. Therefore several combinations of reduced AR coefficients and accelerometers were

systematically investigated to ascertain their practical minimum numbers. The number of AR

coefficients was reduced by selecting the first few coefficients only. This was chosen to be

either (i) the first coefficient, (ii) the first two coefficients, (iii) the first three coefficients, (iv)

the first four coefficients, or (v) the first six coefficients. Similarly, the number of

accelerometers and their locations were either (i) the full set (15 accelerometers), (ii) the set without the

accelerometers located on the base and those on the W face measuring N-S motion (8

accelerometers), or (iii) the same as case (ii) but with all remaining accelerometers on stories 1

and 3 omitted (4 accelerometers). The numbers of misclassifications using NNC and the

Euclidean distance averaged for five runs with different codebook vectors and for different

subsets of AR coefficients and/or accelerometers are shown in Figure 8. The boundaries of

5% and 1% misclassifications are also shown in the figure. It can be seen that good

classification was obtainable using at least 2 AR coefficients and/or 4 accelerometers (feature

dimension 8), while excellent performance required at least 3 AR coefficients and/or 4

accelerometers (feature dimension 12). These results are only slightly inferior compared to

PCA.
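Selecting subsets of accelerometers and AR coefficients amounts to slicing the array of fitted coefficients, as in the sketch below. It assumes the coefficients are stored in an array indexed by segment, accelerometer and coefficient order; this layout, the function name `select_features` and the sensor indices passed to it are hypothetical.

```python
import numpy as np


def select_features(coeffs, sensors, n_coeffs):
    """Keep the first n_coeffs AR coefficients of the chosen sensors.

    coeffs has shape (n_segments, n_sensors, model_order); the result has
    shape (n_segments, len(sensors) * n_coeffs).
    """
    coeffs = np.asarray(coeffs)
    subset = coeffs[:, sensors, :n_coeffs]
    return subset.reshape(coeffs.shape[0], -1)
```

Keeping the first two coefficients of four accelerometers gives the feature dimension of 8 quoted above, and keeping the first three gives 12.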

LVQ classification was applied to PCA-reduced data with the same number of principal

components as previously and the same sized training and testing data sets were used. The

results from NNC showed that performance was similar for both distance measures, and

hence only the Euclidean distance was chosen for LVQ. The number of codebook vectors

was either 50, 100 or 200. These were initialized by random selection from the training set.

The results obtained from averaging the number of misclassifications from five runs are

shown in Table 9. The table shows that increasing the number of codebook vectors generally

resulted in better performance. Excellent performance was obtained using 20 principal

components for 100 and 200 codebook vectors. Good classification was still achieved using

10 principal components; however, errors became significant once fewer principal

components were used. Overall, performance was slightly worse than NNC.

5. Conclusions

SHM and damage detection methods can benefit from the applications of time series analysis

techniques, which were developed for understanding long, regularly sampled sequences of

data. In this study, a supervised damage detection and classification method using AR models

and NNC and LVQ statistical pattern recognition algorithms has been developed and applied

to a simple 3-storey laboratory bookshelf structure and the more complex ASCE Phase II

Experimental SHM Benchmark Structure. Acceleration time histories of the structures in

different simulated damage cases and under dynamic excitation were recorded and fitted

using AR models. The coefficients of these AR models were chosen as damage sensitive

features. Dimensionality reduction of the damage sensitive features was achieved via PCA and

Sammon mapping and served the purpose of visualization of clusters amongst the AR

coefficients and lessening the computational burden of the pattern recognition techniques.

Selection of subsets of the AR coefficients and accelerometers was also investigated as a means

for data reduction. Systematic damage classification was studied using the NNC and LVQ

supervised learning algorithms with either Euclidean or Mahalanobis distance measures.
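
The feature extraction chain summarized above (AR fitting followed by PCA) can be illustrated with a short sketch. It assumes the convention x_t = a_1 x_{t-1} + ... + a_p x_{t-p} + e_t and estimates the coefficients by ordinary least squares. Both choices, along with the function names and the simulated signals, are assumptions for this example and not necessarily the estimation procedure used in the study.

```python
import numpy as np


def fit_ar(signal, order):
    """Least-squares AR coefficients a_1..a_p for x_t = sum_j a_j x_{t-j} + e_t."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()
    # Column j holds the lagged values x_{t-(j+1)} for t = order .. len(x)-1.
    lagged = np.column_stack(
        [x[order - j - 1: len(x) - j - 1] for j in range(order)]
    )
    coeffs, *_ = np.linalg.lstsq(lagged, x[order:], rcond=None)
    return coeffs


def pca_project(features, n_components):
    """Project mean-centered features onto the leading principal components."""
    centered = features - features.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


rng = np.random.default_rng(1)


def simulate_ar2(a1, a2, n):
    """Simulated AR(2) record standing in for a measured acceleration history."""
    x = np.zeros(n)
    e = rng.normal(size=n)
    for t in range(2, n):
        x[t] = a1 * x[t - 1] + a2 * x[t - 2] + e[t]
    return x


# Sanity check: the fit should recover the coefficients used in the simulation.
estimated = fit_ar(simulate_ar2(0.5, -0.3, 5000), order=2)

# Feature matrix: one row of AR coefficients per record, then reduce with PCA.
records = [simulate_ar2(0.5, a2, 2000) for a2 in (-0.3, -0.3, -0.5, -0.5)]
feature_matrix = np.array([fit_ar(r, order=6) for r in records])
projected = pca_project(feature_matrix, n_components=2)
print(estimated, projected.shape)
```

In the sketch, a change in the simulated system (a different second coefficient) shifts the fitted coefficient vector, which is the property that makes AR coefficients usable as damage sensitive features.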

The studies on the 3-storey laboratory structure demonstrated that for localized stiffness

reduction of 7% to 10%, the AR coefficients corresponding to different damage states, when

projected on two dimensions, do not form clearly separable clusters. However, when the

number of principal components used was increased to 60 and 30 respectively, both NNC and

LVQ with the Mahalanobis distance measure were able to classify damage with no more than

approximately 1% of misclassifications. The Euclidean measure, on the other hand, produced

approximately 35% misclassifications. Using selection of AR coefficient subsets as an

alternative feature reduction technique, NNC produced approximately 3% misclassifications

with a feature dimension of 60, indicating the systematic approach using PCA offered some

benefits. For the data from the ASCE Phase II Experimental SHM Benchmark Structure

much more clearly delineated clusters of AR coefficients were seen in two dimensions. In

this case the performance of both distance measures was comparable. The results using NNC

and LVQ showed that approximately 1% misclassifications could be achieved using 20

principal components. The selection of AR coefficient subsets showed similar

misclassifications to PCA for equivalent feature dimensions when analyzed using NNC.

Overall, the results showed that AR coefficients perform well as damage sensitive features,

NNC and LVQ are reliable tools for damage classification, and significant reductions in the

data dimensionality could be achieved whilst maintaining good performance.
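
The sensitivity to the distance measure noted for the 3-storey structure can be reproduced qualitatively on synthetic data. The sketch below is an illustration under stated assumptions, not the study's data or code: one feature is informative but small in scale, the other is uninformative but large in scale, and the Mahalanobis distance uses a covariance matrix estimated from the whole training set (how the covariance was estimated in the study is not stated here).

```python
import numpy as np


def nnc_predict(train_x, train_y, test_x, metric="euclidean"):
    """Nearest neighbor classification with Euclidean or Mahalanobis distance."""
    if metric == "mahalanobis":
        # Assumption: one covariance matrix estimated from all training samples.
        weight = np.linalg.pinv(np.cov(train_x, rowvar=False))
    else:
        weight = np.eye(train_x.shape[1])
    diffs = test_x[:, None, :] - train_x[None, :, :]
    sq_dist = np.einsum("ijk,kl,ijl->ij", diffs, weight, diffs)
    return train_y[sq_dist.argmin(axis=1)]


rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 150)
informative = labels + rng.normal(scale=0.1, size=300)  # separates the classes
nuisance = rng.normal(scale=1000.0, size=300)           # large, uninformative
x = np.column_stack([nuisance, informative])

order = rng.permutation(300)
train, test = order[:200], order[200:]

eucl_errors = int(
    (nnc_predict(x[train], labels[train], x[test]) != labels[test]).sum()
)
maha_errors = int(
    (nnc_predict(x[train], labels[train], x[test], metric="mahalanobis")
     != labels[test]).sum()
)
print(eucl_errors, maha_errors)
```

The Euclidean distance is dominated by the large-variance feature, whereas the Mahalanobis distance rescales each direction by its variance, so the informative feature regains its influence.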

In its current form, as outlined in the paper, the proposed damage detection method faces some

challenges. Firstly, the supervised nature of the method requires data from damaged states to

be available. This represents a practical challenge to the applicability of the method to real

life structures. However, in a real-world application data from damaged states could be

obtained from an analytical model of the structure. Secondly, in their basic forms used in the

paper both NNC and LVQ use a predefined number of clusters or damage states and if

presented with a feature from another damage state they will classify it incorrectly as

belonging to one of those states. This problem could be addressed by accounting for the

probabilistic or possibilistic nature of cluster classification. A mapping between the physical

changes in the structure due to damage and resulting positions of corresponding feature

clusters may be established, in practice using an analytical structural model. Then,

probabilities or membership functions can be constructed based on distances from a damage

feature to the given predefined clusters. Samples with probabilities or membership function

values close to one will be assigned to the respective clusters and those with smaller values

will not. For samples rejected from the predefined clusters their damage could be inferred by

interpolating the mapping between the physical changes in the structure due to damage and

the resulting positions of feature clusters. The extensions of the proposed method outlined in

this paragraph will be studied in the future.
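
One possible form of the distance-based membership idea is sketched below. It is purely hypothetical: the Gaussian-shaped membership function, the cluster centers and scales, the threshold of 0.5, and the function names are all assumptions chosen for illustration, since the extension itself is left for future work.

```python
import numpy as np


def cluster_memberships(feature, centers, scales):
    """Gaussian-shaped membership of a feature in each predefined cluster."""
    distances = np.linalg.norm(centers - feature, axis=1)
    return np.exp(-0.5 * (distances / scales) ** 2)


def classify_or_reject(feature, centers, scales, threshold=0.5):
    """Return the index of the best cluster, or None if no membership is high."""
    memberships = cluster_memberships(feature, centers, scales)
    best = int(memberships.argmax())
    return best if memberships[best] >= threshold else None


# Two hypothetical damage-state clusters in a 2-D feature space.
centers = np.array([[0.0, 0.0], [5.0, 5.0]])
scales = np.array([1.0, 1.0])

assigned = classify_or_reject(np.array([0.2, -0.1]), centers, scales)
rejected = classify_or_reject(np.array([2.5, 2.5]), centers, scales)
print(assigned, rejected)
```

A sample that falls between the predefined clusters receives low membership in all of them and is rejected instead of being forced into the nearest damage state.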

Acknowledgement

The authors would like to express their gratitude to the Earthquake Commission Research

Foundation of New Zealand for their financial support of this research (Project No.

UNI/535).

References

1. Doebling SW, Farrar CR, Prime MB, Shevitz DW. Damage identification and health monitoring of structural and mechanical systems from changes in their vibration characteristics: A literature review. Los Alamos National Laboratory: Los Alamos, NM, USA, 1996.

2. Sohn H, Farrar CR, Hemez FM, Shunk DD, Stinemates DW, Nadler BR. A review of structural health monitoring literature: 1996–2001. Los Alamos National Laboratory: Los Alamos, NM, USA, 2003.

3. Sohn H, Czarnecki JA, Farrar CR. Structural health monitoring using statistical process control. Journal of Structural Engineering 2000; 126: 1356-1363.

4. Sohn H, Farrar CR, Hunter NF, Worden K. Applying the LANL statistical pattern recognition paradigm for structural health monitoring to data from a surface-effect fast patrol boat. Los Alamos National Laboratory: Los Alamos, NM, USA, 2001.

5. Gul M, Catbas FN, Georgiopoulos M. Application of pattern recognition techniques to identify structural change in a laboratory specimen. Sensors and Smart Structures Technologies for Civil, Mechanical and Aerospace Systems 2007, San Diego, CA, 2007; 65291N1-65291N10.

6. Omenzetter P, Brownjohn JMW. Application of time series analysis for bridge monitoring. Smart Materials and Structures 2006; 15: 129-138.

7. Nair KK, Kiremidjian AS, Law KH. Time series-based damage detection and localization algorithm with application to the ASCE benchmark structure. Journal of Sound and Vibration 2006; 291: 349-368.

8. Nair KK, Kiremidjian AS. Time series based structural damage detection algorithm using Gaussian mixtures modeling. Journal of Dynamic Systems, Measurement and Control, Transactions of the ASME 2007; 129: 285-293.

9. Wu X, Ghaboussi J, Garrett JH. Use of neural networks in detection of structural damage. Computers and Structures 1992; 42: 649-659.

10. Elkordy MF, Chang KC, Lee GC. Neural networks trained by analytically simulated damage states. Journal of Computing in Civil Engineering 1993; 7: 130-145.

11. Ni YQ, Zhou XT, Ko JM. Experimental investigation of seismic damage identification using PCA-compressed frequency response functions and neural networks. Journal of Sound and Vibration 2006; 290: 242-263.

12. Ruotolo R, Surace C. Damage assessment of multiple cracked beams: Numerical results and experimental validation. Journal of Sound and Vibration 1997; 206: 567-588.

13. Worden K, Lane AJ. Damage identification using support vector machines. Smart Materials and Structures 2001; 10: 540-547.

14. He H-X, Yan W-M. Structural damage detection with wavelet support vector machine: Introduction and applications. Structural Control and Health Monitoring 2007; 14: 162-176.

15. Garcia G, Butler K, Stubbs N. Relative performance of clustering-based neural network and statistical pattern recognition models for nondestructive damage detection. Smart Materials and Structures 1997; 6: 415-424.

16. Worden K, Manson G, Fieller NRJ. Damage detection using outlier analysis. Journal of Sound and Vibration 2000; 229: 647-667.

17. Omenzetter P, Brownjohn JMW, Moyo P. Identification of unusual events in multi-channel bridge monitoring data. Mechanical Systems and Signal Processing 2004; 18: 409-430.

18. Park G, Rutherford AC, Sohn H, Farrar CR. An outlier analysis framework for impedance-based structural health monitoring. Journal of Sound and Vibration 2005; 286: 229-250.

19. Fugate ML, Sohn H, Farrar CR. Vibration-based damage detection using statistical process control. Mechanical Systems and Signal Processing 2001; 15: 707-721.

20. Manson G, Worden K, Holford K, Pullin R. Visualisation and dimension reduction of acoustic emission data for damage detection. Journal of Intelligent Material Systems and Structures 2001; 12: 529-536.

21. da Silva S, Dias Junior M, Lopes Junior V, Brennan MJ. Structural damage detection by fuzzy clustering. Mechanical Systems and Signal Processing 2008; 22: 1636-1649.

22. Philippidis TP, Nikolaidis VN, Anastassopoulos AA. Damage characterization of carbon/carbon laminates using neural network techniques on AE signals. NDT & E International 1998; 31: 329-340.

23. Godin N, Huguet S, Gaertner R, Salmon L. Clustering of acoustic emission signals collected during tensile tests on unidirectional glass/polyester composite using supervised and unsupervised classifiers. NDT & E International 2004; 37: 253-264.

24. Raju Damarla R, Karpur P, Bhagat PK. A self-learning neural net for ultrasonic signal analysis. Ultrasonics 1992; 30: 317-324.

25. Doyle C, Fernando G. Detecting impact damage in a composite material with an optical fibre vibration sensor system. Smart Materials and Structures 1998; 7: 543-549.

26. Tse P, Wang DD, Atherton D. Harmony theory yields robust machine fault-diagnostic systems based on learning vector quantization classifiers. Engineering Applications of Artificial Intelligence 1996; 9: 487-498.

27. Abu-Mahfouz IA. A comparative study of three artificial neural networks for the detection and classification of gear faults. International Journal of General Systems 2005; 34: 261-277.

28. Trendafilova I, Cartmell MP, Ostachowicz W. Vibration-based damage detection in an aircraft wing scaled model using principal component analysis and pattern recognition. Journal of Sound and Vibration 2008; 313: 560-566.

29. Wei WWS. Time series analysis: Univariate and multivariate methods. 2nd ed. Pearson: Boston, 2006.

30. Hamilton JD. Time series analysis. Princeton University Press: Princeton, NJ, 1994.

31. Harvey AC. Time series models. 2nd ed. The MIT Press: Cambridge, MA, 1993.

32. Kohonen T. Self-organizing maps. Springer: Berlin, 1997.

33. Sharma S. Applied multivariate techniques. Wiley: New York, 1997.

34. Sammon JJW. Nonlinear mapping for data structure analysis. IEEE Transactions on Computers 1969; C-18: 401-409.

35. Flexer A. On the use of self-organizing maps for clustering and visualization. Intelligent Data Analysis 2001; 5: 373-384.

36. Ljung L. System identification toolbox: User's guide. Version 6. Mathworks: Natick, MA, 2006.

37. Friswell MI, Mottershead JE. Finite element model updating in structural dynamics. Kluwer Academic Publishers: Dordrecht, 1995.

38. Takanami T. High precision estimation of seismic wave arrival times, in The practice of time series analysis, H. Akaike and G. Kitagawa, Editors. Springer: New York, 1999; 79-94.

39. Akaike H. New look at the statistical model identification. IEEE Transactions on Automatic Control 1974; AC-19: 716-723.

40. Sheskin D. Handbook of parametric and nonparametric statistical procedures. Chapman and Hall: Boca Raton, FL, 2003.

41. ASCE Structural Health Monitoring Committee. http://cive.seas.wustl.edu/wusceel/asce.shm/.


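Table 1. Natural frequencies and percentage changes at different damage states for 3-storey bookshelf structure.

| Mode | fe (Hz), D0 | fe (Hz), D1 | fe (Hz), D2 | fe (Hz), D3 | Δfe (%) (a), D1 | Δfe (%) (a), D2 | Δfe (%) (a), D3 |
|------|-------------|-------------|-------------|-------------|-----------------|-----------------|-----------------|
| 1st  | 1.928       | 1.879       | 1.837       | 1.840       | -2.5%           | -4.7%           | -4.6%           |
| 2nd  | 5.52        | 5.43        | 5.46        | 5.42        | -1.6%           | -1.0%           | -1.8%           |
| 3rd  | 8.55        | 8.30        | 8.09        | 8.15        | -2.9%           | -5.3%           | -4.6%           |

(a) Based on D0.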

de Lautour and Omenzetter


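Table 2. Updated stiffnesses from analytical models for 3-storey bookshelf structure.

| Damage state | Stiffness of 1st storey (N/m) | Stiffness of 2nd storey (N/m) | Stiffness of 3rd storey (N/m) |
|--------------|-------------------------------|-------------------------------|-------------------------------|
| D0           | 3.77×10⁴                      | 0.60×10⁴                      | 0.77×10⁴                      |
| D1           | 3.49×10⁴                      | 0.60×10⁴                      | 0.77×10⁴                      |
| D2           | 3.77×10⁴                      | 0.54×10⁴                      | 0.77×10⁴                      |
| D3           | 3.49×10⁴                      | 0.54×10⁴                      | 0.77×10⁴                      |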


Table 3. Earthquake records used to excite 3-storey bookshelf structure.

| Earthquake                | PGA (g) | Scaled PGA (g) | Duration (sec) | Sampling frequency (Hz) |
|---------------------------|---------|----------------|----------------|-------------------------|
| Duzce 12/11/1999          | 0.535   | 0.027          | 25.885         | 200                     |
| Erzincan 13/3/1992        | 0.496   | 0.033          | 20.780         | 200                     |
| Gazli 17/5/1976           | 0.718   | 0.048          | 16.265         | 200                     |
| Helena 31/10/1935         | 0.173   | 0.035          | 40.000         | 100                     |
| Imperial Valley 19/5/1940 | 0.313   | 0.031          | 40.000         | 100                     |
| Kobe 17/1/1995            | 0.345   | 0.035          | 40.960         | 100                     |
| Loma Prieta 18/10/1989    | 0.472   | 0.047          | 39.945         | 200                     |
| Northridge 17/1/1994      | 0.568   | 0.038          | 40.000         | 50                      |


Table 4. Number and percentage of misclassifications using NNC and PCA reduced data for 3-storey bookshelf structure.

| Number of principal components | Euclidean distance | Mahalanobis distance |
|--------------------------------|--------------------|----------------------|
| 60                             | 31 (35%)           | 1 (1%)               |
| 40                             | 34 (39%)           | 3 (3%)               |
| 30                             | 30 (34%)           | 4 (5%)               |
| 20                             | 34 (39%)           | 5 (6%)               |
| 10                             | 34 (39%)           | 10 (11%)             |


Table 5. Number and percentage of misclassifications using NNC and subsets of AR coefficients for 3-storey bookshelf structure.

| Number of AR coefficients selected per model | Mahalanobis distance |
|----------------------------------------------|----------------------|
| 20                                           | 3 (3%)               |
| 15                                           | 4 (5%)               |
| 10                                           | 4 (5%)               |
| 5                                            | 10 (11%)             |


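Table 6. Number and percentage of misclassifications using LVQ for 3-storey bookshelf structure.

| Number of principal components | 30 codebook vectors | 50 codebook vectors | 100 codebook vectors |
|--------------------------------|---------------------|---------------------|----------------------|
| 30                             | 0 (0%)              | 0 (0%)              | 0 (0%)               |
| 20                             | 4 (5%)              | 3 (3%)              | 3 (3%)               |
| 10                             | 17 (19%)            | 15 (17%)            | 14 (16%)             |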


Table 7. Damage configurations for ASCE Phase II Experimental SHM Benchmark Structure.

| Configuration | Damage                                                             |
|---------------|--------------------------------------------------------------------|
| 1             | No damage                                                          |
| 2             | All bracing removed on the E face                                  |
| 3             | Bracing removed on floors 1-4 on a bay on the SE corner            |
| 4             | Bracing removed on floors 1 and 4 on a bay on the SE corner        |
| 5             | Bracing removed on floor 1 on a bay on the SE corner               |
| 6             | All bracing removed on E face and floor 2 on N face                |
| 7             | All bracing removed                                                |
| 8             | Configuration 7 + loosened bolts on floors 1-4 on E face N bay     |
| 9             | Configuration 7 + loosened bolts on floors 1 and 2 on E face N bay |


Table 8. Number and percentage of misclassifications using NNC for ASCE Phase II Experimental SHM Benchmark Structure.

| Number of principal components | Euclidean distance | Mahalanobis distance |
|--------------------------------|--------------------|----------------------|
| 20                             | 1 (0.3%)           | 1 (0.3%)             |
| 10                             | 5 (1%)             | 5 (1%)               |
| 5                              | 23 (7%)            | 24 (7%)              |
| 3                              | 62 (19%)           | 65 (19%)             |


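Table 9. Number and percentages of misclassifications using LVQ for ASCE Phase II Experimental SHM Benchmark Structure.

| Number of principal components | 50 codebook vectors | 100 codebook vectors | 200 codebook vectors |
|--------------------------------|---------------------|----------------------|----------------------|
| 20                             | 6 (2%)              | 5 (1%)               | 4 (1%)               |
| 10                             | 13 (4%)             | 10 (3%)              | 6 (2%)               |
| 5                              | 24 (7%)             | 29 (9%)              | 23 (7%)              |
| 3                              | 75 (22%)            | 68 (20%)             | 67 (20%)             |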


Figure 1. 3-storey laboratory bookshelf structure: (a) general view, (b) detail of column-plate joint, and (c) dimensions and accelerometer locations.


Figure 2. Damage states in 3-storey bookshelf structure showing percentage of lateral stiffness at each storey.

Legend: 4.5 mm thick angle; 3.0 mm thick angle. Panels: D0, D1, D2 and D3, with each storey annotated as 100%, 93% or 90% of stiffness.


Figure 3. Projection of data from 3-storey bookshelf structure onto the first two principal

components.


Figure 4. Projection of data from 3-storey bookshelf structure via Sammon mapping.


Figure 5. ASCE Phase II Experimental SHM Benchmark Structure: (a) general view, and (b) beam-column joint and bracing.


Figure 6. Projection of data from ASCE Phase II Experimental SHM Benchmark Structure

onto the first two principal components.


Figure 7. Projection of data from ASCE Phase II Experimental SHM Benchmark Structure

via Sammon mapping.


Figure 8. Number of misclassifications using subsets of AR coefficients and/or accelerometers

for ASCE Phase II Experimental SHM Benchmark Structure.
