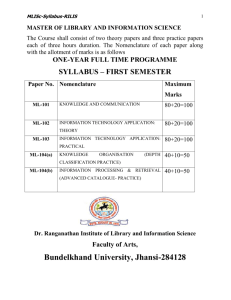

Analysis Environments for Community Repositories:

Technology Trends for Information Retrieval in the Net

Bruce R. Schatz

Information Infrastructure evolves as better technology becomes available to support basic needs. For a technology to be mature enough to be incorporated into standard infrastructure, it must be sufficiently generic; that is, it must be robust and readily adaptable to many different applications and purposes. For Information Infrastructure to support Semantic Retrieval in a fundamental way, several new technologies must be incorporated into the standard support to facilitate Concept Navigation. In particular, the rise of four technologies is critical: document protocols for information retrieval, extraction parsers for noun phrases, statistical indexers for context computations, and communications protocols for peer-to-peer computations.

Together, these generic technologies support semantic indexing of community repositories. A document can be stored in a standard representation. Concepts can be extracted from a document with some level of semantics. These concepts can be used to transform a document collection into a searchable repository, by indexing the documents with some level of semantics. Finally, the resulting index can be used to federate the knowledge of a community semantically, through concept navigation across the distributed repositories that comprise its relevant sources.

The Rise of Web Document Protocols has made it possible to store documents in a standard representation. Before the worldwide adoption of a single format for representing documents, collections were limited to those that could be administered by a single central organization. Prime examples were Dialog, for bibliographic databases of journal abstracts, and Lexis/Nexis, for full-text databases of magazine articles.
The widespread adoption of the WWW (World-Wide Web) protocols enabled global information retrieval, which in turn increased the volume of available documents to the point where semantic indexing has become necessary for effective retrieval. This situation arose from the universal distribution of servers that store documents in HTML (HyperText Markup Language) and retrieve them using HTTP (HyperText Transfer Protocol). Many more organizations could now maintain their own collections, since the technology had become standard enough for information providers to store their collections directly, rather than transferring them to a central repository archive. Standard protocols meant that a single program could retrieve documents from multiple sources, and so the WWW protocols enabled the implementation of Web browsers. In particular, NCSA Mosaic proved to be the right combination of streamlined standards and flexible interfaces to attract millions of users to information retrieval for the first time [1].

As the number of documents increased, finding initial documents from which to begin hypertext browsing became a major problem, and web searchers began to overtake web browsers as the primary interface to the global information space. Searching across so many documents of such variety exposed the weakness of syntactic search, such as the word matching used within Dialog, and increased the demand for semantic indexing embedded within the infrastructure. The response of the WWW designers has been to extend markup languages from formatting to typing. HTML has tags (markups) that indicate how to display phrases, such as centering or boldfacing. HTML has since evolved into XML (eXtensible Markup Language), which enables syntactic specification of many types of units, including those for custom applications.
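To make the distinction between formatting and typing concrete, the following Python sketch reads a small XML fragment whose tags record what each phrase is (a person, a place, a painting) rather than how to display it, and collects the typed phrases that a search engine could index. The fragment, the tag vocabulary in SEMANTIC_TYPES, and the function name typed_phrases are hypothetical choices for illustration; only the standard-library xml.etree.ElementTree module is assumed.

```python
import xml.etree.ElementTree as ET

# Hypothetical XML fragment: the element names record the type of each
# phrase (person, place, painting) instead of how to display it.
SAMPLE = """<doc>
  <p>The <painting>Mona Lisa</painting> by <person>Leonardo da Vinci</person>
     hangs in the <place>Louvre</place>.</p>
</doc>"""

# Tag names treated as semantic types (an assumption for this example).
SEMANTIC_TYPES = {"person", "place", "book", "painting"}


def typed_phrases(xml_text):
    """Return (type, phrase) pairs for every element whose tag is a semantic type."""
    root = ET.fromstring(xml_text)
    pairs = []
    for elem in root.iter():  # visits elements in document order
        if elem.tag in SEMANTIC_TYPES:
            # itertext() gathers nested text; split/join normalizes whitespace.
            phrase = " ".join("".join(elem.itertext()).split())
            pairs.append((elem.tag, phrase))
    return pairs


if __name__ == "__main__":
    for kind, phrase in typed_phrases(SAMPLE):
        print(f"{kind}: {phrase}")
```

Run as a script, this prints "painting: Mona Lisa", "person: Leonardo da Vinci" and "place: Louvre", one per line. Display-only tags such as a boldface element yield nothing, which is the point of moving from formatting to typing; the typed result is only as reliable as the author who supplied the tags.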
XML is the web version of the markup languages used in the publishing industry, such as SGML (Standard Generalized Markup Language), which tag the structure of documents, such as sections and figures. SGML has also been used for many years in scholarly documents to tag the types of phrases, e.g. recording the semantics of names in the humanities literature as “this is a person, place, book, painting”. The major activities toward the Semantic Web are developing infrastructure for typing phrases within documents [2], for use in search engines and other software. Languages are being defined that enable authors to provide metadata describing document semantics within the text. A number of such languages are under development [3], supporting the definition of ontologies, which are formal definitions of the important concepts in a given domain. These languages will be used by the authors of documents, and are thus subject to the limits of author reliability and accuracy. These limits have proven significant with earlier languages such as SGML, which encourages the development of automatic tagging techniques, wherever possible, to augment manual tagging.

Document standards eliminate the need for a separate format converter for each collection, and extracting words becomes universally possible with a syntactic parser. But extracting concepts requires a semantic parser, which extracts the appropriate units from documents of any subject domain. Many years of research into information retrieval have shown that the most discriminating units for retrieval in text documents are multi-word noun phrases. Thus, the best concepts in document collections are noun phrases.

The Rise of Generic Parsing has made it possible to automatically extract concepts from arbitrary documents. The key to context-based semantic indexing is identifying the “right size” unit to extract from the objects in the collections. These units represent the “concepts” in the collection.
The document collection is then processed statistically to compute the co-occurrence frequency of the units within each document. Over the years, the feasible technology for concept extraction has become increasingly precise. Initially, heuristic rules used stop words and verb phrases to approximate noun phrase extraction. Then came simple noun phrase grammars for particular subject domains. Finally, statistical parsing technology became good enough that extraction could be computed without explicit grammars [4]. These statistical parsers can extract noun phrases quite accurately from general texts, after being trained on sample collections.

This technology trend approximates meaning by statistical versions of context. It mirrors a broader trend in recent years toward statistical pattern recognition in many areas: computers have become powerful enough that rules can practically be replaced by statistics in many cases, and global statistics on local context have replaced deterministic parsing. For example, in computational linguistics, the best noun phrase extractors no longer have an underlying definite grammar, but instead rely on neural nets trained on typical cases. The initial phases of the DARPA TIPSTER program, a $100M effort to extract facts from newspaper articles for intelligence purposes, were based upon grammars, but the final phases were based upon statistical parsers. Once the neural nets are trained on a range of collections, they can parse arbitrary texts with high accuracy. It is even possible to determine the type of a noun phrase automatically, such as person or place, with high precision [5]. Once the units, such as noun phrases, are extracted, they can be used to approximate meaning. This is done by computing the frequency with which the units occur within each document across the collection.
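The earliest approach mentioned above, heuristic rules built on stop words, can be sketched in a few lines of Python. This is a deliberately crude approximation under stated assumptions: the stop-word list is a small hypothetical sample, the verb-phrase rules the text also mentions are omitted, and a "noun phrase" is simply a maximal run of two or more non-stop words. The second function counts how often each extracted unit occurs within each document. Both function names are illustrative, not taken from any particular system.

```python
import re
from collections import Counter

# A small hypothetical stop-word list; practical systems use much longer lists.
STOP_WORDS = {
    "a", "an", "and", "are", "as", "be", "by", "for", "from", "in", "is",
    "it", "of", "on", "or", "that", "the", "this", "to", "was", "were", "with",
}

# Words (letters with internal hyphens) or single non-letter characters.
TOKEN = re.compile(r"[a-z][a-z-]*|[^\sa-z]")


def candidate_phrases(text, min_words=2):
    """Approximate noun phrases as maximal runs of non-stop words.

    Stop words and punctuation end the current run; only runs of at least
    min_words words are kept, since multi-word phrases discriminate best.
    """
    phrases, run = [], []
    for token in TOKEN.findall(text.lower()):
        if token in STOP_WORDS or not token[0].isalpha():
            if len(run) >= min_words:
                phrases.append(" ".join(run))
            run = []
        else:
            run.append(token)
    if len(run) >= min_words:  # flush a run that reaches the end of the text
        phrases.append(" ".join(run))
    return phrases


def phrase_frequencies(documents):
    """Return one Counter per document: phrase -> occurrences in that document."""
    return [Counter(candidate_phrases(doc)) for doc in documents]
```

For example, candidate_phrases("Statistical parsing of noun phrases improves retrieval.") returns ["statistical parsing", "noun phrases improves retrieval"]: the verb "improves" slips into the second phrase, which is the kind of error that pushed the field toward grammars and then toward statistical parsers.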
In the same sense that the noun phrases represent concepts, the contextual frequencies represent meanings. The frequencies for each phrase form a space for the collection, in which each concept is related to every other concept by co-occurrence in context. The space thus consists of the interrelationships between the concepts in the collection, and it can be used to generate the concepts related to a given concept, which in turn can be used to retrieve documents containing those related concepts. Concept navigation is enabled by such a concept space computed from a document collection. The technology operates generically, independent of subject domain. The goal is to enable users to navigate spaces of concepts, instead of documents of words. Interactive navigation of the concept space is useful for locating related terms relevant to a particular search strategy.

The Rise of Statistical Indexing has made it possible to compute relationships between concepts within a collection. Algorithms for computing statistical co-occurrence have been studied within information retrieval since the 1960s [6], but only in the last few years have the statistics needed for effective retrieval become computationally feasible for real collections. These concept space computations combine artificial intelligence for concept extraction, via noun phrase parsing, with information retrieval for concept relationships, via statistical co-occurrence. The technology curves of computer power are making statistical indexing feasible, and the coming decade is the one in which scalable semantics will become a practical reality. For the 40-year period from the dawn of modern information retrieval in 1960 to the worldwide Internet search of 2000, statistical indexing has been an academic curiosity. Techniques such as co-occurrence frequency were well known, but computable only on collections of a few hundred documents.
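The concept-space computation described above can be illustrated with a minimal Python sketch. It assumes each document has already been reduced to a set of extracted concepts, and it relates two concepts by the raw number of documents in which they co-occur; this is a simplification, since practical concept spaces typically weight and normalize such counts. The last function shows the retrieval step: expand a query concept with its most related concepts and return the documents that contain any of them. The DOCS collection and all function names are hypothetical.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical collection: each document reduced to its extracted concepts.
DOCS = [
    {"noun phrase", "concept space", "information retrieval"},
    {"concept space", "information retrieval"},
    {"concept space", "peer-peer protocol"},
    {"music sharing", "peer-peer protocol"},
]


def build_concept_space(doc_concepts):
    """Map each concept to {other concept: number of documents containing both}."""
    space = defaultdict(lambda: defaultdict(int))
    for concepts in doc_concepts:
        for a, b in combinations(sorted(set(concepts)), 2):
            space[a][b] += 1
            space[b][a] += 1
    return space


def related_concepts(space, concept, k=3):
    """Return up to k concepts that co-occur most often with the given concept."""
    neighbors = space.get(concept, {})  # .get does not create a new entry
    # Highest count first; ties broken alphabetically for a stable order.
    ranked = sorted(neighbors.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:k]]


def expand_and_retrieve(space, doc_concepts, concept, k=3):
    """Return indices of documents containing the concept or a related concept."""
    wanted = {concept, *related_concepts(space, concept, k)}
    return [i for i, concepts in enumerate(doc_concepts) if wanted & set(concepts)]


if __name__ == "__main__":
    space = build_concept_space(DOCS)
    print(related_concepts(space, "concept space", k=2))
    print(expand_and_retrieve(space, DOCS, "information retrieval", k=1))
```

Run as a script, this prints ['information retrieval', 'noun phrase'] and then [0, 1, 2]. The query "information retrieval" is expanded with its strongest neighbor, "concept space", so document 2 is retrieved even though it never mentions the query phrase; this is the sense in which users navigate concepts rather than words.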
Practical information retrieval on real-world collections of millions of documents therefore relied on exact matching of text phrases, as embodied in full-text search. The speed of machines is changing all this rapidly. The next 10 years, 2000-2010, will see the fall of indexing barriers for all real-world collections. For many years, the largest computer could not semantically index the smallest collection. After the coming decade, even the smallest computer will be able to semantically index the largest collection. Hero experiments in the late 1990s performed semantic indexing on the complete literature of scientific disciplines [7]. Experiments of this scale will be routinely carried out by ordinary people on their watches (palmtop computers) less than 10 years later, in the late 2000s.

The TREC (Text REtrieval Conference) competition [8] is organized by the National Institute of Standards and Technology (NIST). It grew out of the DARPA TIPSTER evaluation program, starting in 1992, and is now a public indexing competition entered annually by international teams. Each team generates semantic indexes for gigabyte-scale document collections using its statistical software. Currently, semantic indexing can be computed by the appropriate community machine, but only in batch mode. For example, a concept space for 1K documents is appropriate for a laboratory of 10 people and takes an hour to compute on a small laboratory server. Similarly, a community space of 10K documents for 100 people takes an hour on a large departmental server. Each community repository can be processed on the appropriate-scale server for that community. As the speed of machines increases, indexing will move from batch to interactive, and semantic indexing will become feasible on dynamically specified collections. When the technology for semantic indexing becomes routinely available, it will be possible to incorporate this indexing directly into the infrastructure.
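For contrast with semantic indexing, the exact-match full-text search that practical systems have relied on can be sketched with a positional inverted index. This is a generic textbook technique shown as an illustration, not a description of Dialog or any other particular system, and the function names are hypothetical: a document matches only if it contains the query words consecutively and in order, so a document that expresses the same concept in different words is never found.

```python
import re
from collections import defaultdict


def build_positional_index(documents):
    """Map each lowercase word to {document id: [positions of that word]}."""
    index = defaultdict(lambda: defaultdict(list))
    for doc_id, text in enumerate(documents):
        for pos, word in enumerate(re.findall(r"\w+", text.lower())):
            index[word][doc_id].append(pos)
    return index


def phrase_search(index, phrase):
    """Return sorted ids of documents containing the exact word sequence."""
    words = re.findall(r"\w+", phrase.lower())
    if not words:
        return []
    postings = [index.get(w, {}) for w in words]
    # A matching document must contain every word of the phrase.
    candidates = set(postings[0])
    for p in postings[1:]:
        candidates &= set(p)
    hits = []
    for doc_id in sorted(candidates):
        # Match if, for some start position, word i occurs at start + i.
        if any(
            all(start + i in postings[i][doc_id] for i in range(len(words)))
            for start in postings[0][doc_id]
        ):
            hits.append(doc_id)
    return hits
```

With the documents "Semantic indexing of community repositories." and "Indexing semantic content is hard.", a search for "semantic indexing" returns only the first: the second contains both words, but not in that order.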
At present, the WWW protocols make it easy to develop a collection that can be accessed as a set of documents. Typically, the collection is available for browsing but not for searching, except through incorporation into web portals, which gather documents via crawlers for central indexing. Software is not commonly available for groups to maintain and index their own collections for web-wide search.

The Rise of Peer-Peer Protocols is making it possible to support distributed repositories for small communities. This trend is following the same pattern in the Internet of the 2000s as email did in the ARPAnet of the 1970s, when person-person communication became the dominant service in an infrastructure designed for station-station computation. Today, there are many personal web sites, even though traffic is dominated by central archives, such as home shopping and scientific databases, which drive the market. The Net at present is fundamentally a client-server model, with a few large servers and many small clients. The clients are typically user workstations, which prepare queries to be processed at archival servers. As the number of servers increases and the size of collections decreases, the infrastructure will evolve into a peer-peer model, in which user machines exchange data directly. In this model, each machine is both a client and a server at different times.

There are already significant peer-peer services in which simple protocols enable users to share their datasets directly. These are driven by the desire of specialized communities to share with each other directly, without the intervention of central authorities. The most famous example is Napster for music sharing, where files in a specified format on a personal machine can be made accessible to other peer machines via a local program that supports the sharing protocol. Such file-sharing services have become so popular that the technology is breaking down, due to the lack of a searching capability that can filter out copyrighted songs.
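The peer-peer model described above, in which each machine is both a client and a server, can be modeled in-process with a few lines of Python. This is a hypothetical sketch, not the Napster protocol or any real file-sharing protocol: there is no networking, a peer's server role is just a method (serve) that answers a keyword query against its own shared files, and its client role (search) forwards the query to its neighbors and collects their answers.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)
class Peer:
    """A hypothetical peer that both serves its own files and queries others."""

    name: str
    shared_files: list[str] = field(default_factory=list)
    # repr=False avoids infinite recursion when peers list each other.
    neighbors: list["Peer"] = field(default_factory=list, repr=False)

    def serve(self, keyword: str) -> list[str]:
        """Server role: answer a query against this peer's own shared files."""
        return [f for f in self.shared_files if keyword.lower() in f.lower()]

    def search(self, keyword: str) -> list[tuple[str, str]]:
        """Client role: ask each neighbor and collect (peer name, file) hits."""
        hits = []
        for peer in self.neighbors:
            hits.extend((peer.name, f) for f in peer.serve(keyword))
        return hits


if __name__ == "__main__":
    alice = Peer("alice", ["jazz_standards.mp3", "field_notes.txt"])
    bob = Peer("bob", ["jazz_live.mp3"])
    alice.neighbors.append(bob)
    bob.neighbors.append(alice)
    print(alice.search("jazz"))  # alice acts as a client, bob as a server
    print(bob.search("jazz"))    # roles reversed
```

Run as a script, this prints [('bob', 'jazz_live.mp3')] and then [('alice', 'jazz_standards.mp3')]: the same two machines swap client and server roles depending on who issues the query.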
There are many examples of successful peer-peer protocols in more scientific settings [9]. Typically, these programs implement a simple service on an individual user’s machine, which performs a small computation on small data; the results can be combined across many machines into a large computation on large data. For example, the SETI@home software runs as a screensaver on several million machines across the world, each analyzing radio telescope survey data from a different region of the sky. Computed results are sent to a central repository, which builds a database for the Search for ExtraTerrestrial Intelligence (SETI). Similar net-wide distributed computation, with volunteer downloads of software onto personal machines, has computed large primes and broken encryption schemes. For-profit corporations have used peer-to-peer computing for public-service medical computations [10]. Nearly a million PCs have already been volunteered for the United Devices Cancer Research Program, using a commercial version of the SETI@home software.

Generalized software to handle documents or databases exists only at a primitive level for peer-peer protocols. The local PCs are currently used only as processors, each computing on a small segment of a database supplied by the central site. True data-centered peer-peer, in which each PC computes on its own locally administered database and the central site only merges the computations from the local sites, is not yet available. Data-centered peer-peer is necessary for global search of local repositories, and will become feasible as the technology matures.

The Net will evolve from the Internet to the Interspace, as information infrastructure comes to support semantic indexing for community repositories. The Interspace supports effective navigation across information spaces, just as the Internet supports reliable transmission across data networks.
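The data-centered peer-peer arrangement described above can be sketched in Python for the co-occurrence statistics that underlie concept spaces. It is a minimal illustration resting on one stated assumption: pair counts are additive over documents, so each peer can count pairs in its own locally administered repository and the central site only sums the counts it receives, without ever seeing the documents. The function names and the sample data are hypothetical.

```python
from collections import Counter
from itertools import combinations


def local_cooccurrence(doc_concepts):
    """Run at each peer: count concept pairs that co-occur in its own documents."""
    counts = Counter()
    for concepts in doc_concepts:
        for pair in combinations(sorted(set(concepts)), 2):
            counts[pair] += 1
    return counts


def merge_at_central_site(local_results):
    """Run at the central site: sum per-peer counts without access to documents."""
    total = Counter()
    for counts in local_results:
        total.update(counts)  # Counter.update adds counts rather than replacing them
    return total


if __name__ == "__main__":
    site_a = [{"gene", "protein"}, {"gene", "pathway"}]
    site_b = [{"gene", "protein"}, {"protein", "structure"}]
    merged = merge_at_central_site(
        [local_cooccurrence(site_a), local_cooccurrence(site_b)]
    )
    print(merged[("gene", "protein")])  # the pair was seen once at each site
```

Run as a script, this prints 2. Because the counts are sums over documents, the merged result is identical to running local_cooccurrence over all documents at once; statistics that are not additive in this way would need a different merge step.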
Internet infrastructure, such as the Open Directory Project [11], enables distributed subject curators to index web sites within assigned categories, with the entries being entire collections. In contrast, Interspace infrastructure, such as automatic subject indexing [12], will enable distributed community curators to index the documents themselves within the collections. The increasing scale of community databases will force the evolution of peer-peer protocols. Semantic indexing will mature and become infrastructure at whatever level the technology can support generically. Community repositories will be automatically indexed, then aggregated to provide global indexes. Concept navigation will be a standard function of global infrastructure in 2010, much as document browsing had become by 2000. Then the Internet will have evolved into the Interspace.

References

1. B. Schatz and J. Hardin, “NCSA Mosaic and the World-Wide Web: Global Hypermedia Protocols for the Internet”, Science, Vol. 265, 12 Aug. 1994, pp. 895-901.
2. T. Berners-Lee et al., “The Semantic Web”, Scientific American, Vol. 284, May 2001, pp. 35-43.
3. D. Fensel et al., “The semantic Web and its languages”, IEEE Intelligent Systems, Nov./Dec. 2000, pp. 67-73.
4. T. Strzalkowski, “Natural Language Information Retrieval”, Information Processing & Management, Vol. 31, 1996, pp. 397-417.
5. D. Bikel et al., “NYMBLE: A High-Performance Learning Name Finder”, Proc. 5th Conf. Applied Natural Language Processing, Mar. 1998, pp. 194-201.
6. P. Kantor, “Information Retrieval Techniques”, Annual Review of Information Science and Technology, Vol. 29, 1994, pp. 53-90.
7. B. Schatz, “Information Retrieval in Digital Libraries: Bringing Search to the Net”, Science, Vol. 275, 17 Jan. 1997, pp. 327-334.
8. D. Harman (ed.), Text REtrieval Conferences (TREC), National Institute of Standards and Technology (NIST), http://trec.nist.gov
9. B. Hayes, “Collective Wisdom”, American Scientist, Vol. 86, Mar.-Apr. 1998, pp. 118-122.
10. Intel Philanthropic Peer-to-Peer Program, www.intel.com/cure
11. Open Directory Project, www.dmoz.org
12. Y. Chung et al., “Automatic Subject Indexing Using an Associative Neural Network”, Proc. 3rd Int’l ACM Conference on Digital Libraries, Pittsburgh, Jun. 1998, pp. 59-68.