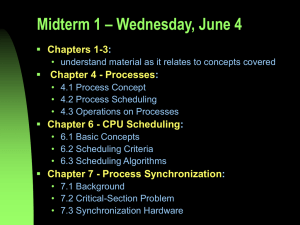

Chapter 2

advertisement

2

PROCESSES

1) PROCESSES

a) Introduction to Processes:

i) Pseudoparallelism: rapid switching back and forth of the CPU between programs

ii) Multiprocessor: has two or more CPUs sharing the same physical memory

b) THE PROCESS MODEL:

i) Sequential Process: Each process has its own virtual CPU

ii) Multiprogramming: CPU switches from one program to another

PROCESS HIERARCHIES: Processes are created by the FORK system call, which creates an identical

copy of the calling process. The selected program is loaded into memory via an exe system call.

When the process is done, it executes an exit system call to terminate, returning to the invoking

process a status code of 0, or a nonzero error code. Click here to check the system call.

Process States: Informally, process is a program in execution

1. Running

2. Ready

3. Blocked

c) Implementation of Processes: The O/S maintains a table, with an entry per process.

Figure 2-4 Page 53

d) THREAD: Known as lightweight process (LWP), which has its own address space and a single

thread of control. A basic unit of CPU utilization, and consists of a program counter, a register set

and a stack space. A heavyweight process is a task with one thread.

i)

ii)

MULTIPLE-PROCESSES: operates independently of others; each process has its own program

counter, stack register, and address space “jobs performed by processes are either related or

unrelated.” It is more efficient to have one process containing multiple threads serve the

same purpose.

Threads operate in the same manner as processes and can be in running, ready, or blocked

state.

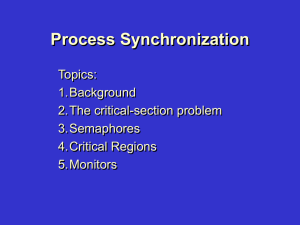

2) Interprocess Communication (IPC): Provides a mechanism to allow processes to communicate and

to synchronize their actions.

a) Three important issues:

i) How one process can pass information to another

ii) Making sure two or more processes don’t get into each other’s way when engaging in critical

activities

iii) Ensure proper sequencing when dependencies are present

b) RACE CONDITIONS: Where two or more processes are reading or writing some shared data and

the final result depends on who runs precisely when

c) CRITICAL SECTIONS: Preventing more than one process from reading and writing the shared data

at the same time “Mutual Exclusion”. The part of the program where the shared memory is

accessed is called the critical region or critical section. Four conditions must hold to have a

good solution:

(a)

(b)

(c)

(d)

No two processes may be simultaneously inside their critical section

No assumptions may be made about speeds or the number of CPUs

No process running outside its critical section region may block other processes

No process should have to wait forever to enter its critical section

“Pi”

While true do

<preludei>

Enter_CSi

CSi

Exit_ CSi

<postludei>

od

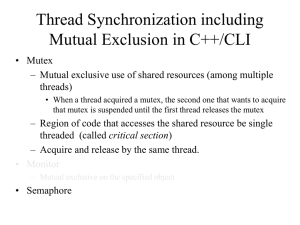

d) MUTUAL EXCLUSION WITH BUSY WAITING:

(1) Disabling Interrupts: Once in its critical region, disable all interrupts and then just before

leaving it re-enable them.

(a) Problems:

(i) If one process did it, and never turned them on again

(ii) If the system is multiprocessor, disabling interrupts affects only the CPU that

executed the disable instruction; the other ones continue running and can access

the shared memory

(2) Lock Variables (software solution): A single shared (lock) variable that is initially 0.

When a process enters its CS, it tests the lock. If lock is 0, set lock to 1 and then enter

CS. If lock is 1, wait until lock is 0. 0 means no process is in its critical section. 1 means

that some process is in its critical section.

(a) Problems:

(i) Two processes will be in their CS at the same time. One process reads the lock

and sees that it’s 0. Before it can set it to 1, another process runs and sets it to 1.

Two processes will be running in their critical sections simultaneously.

(3) Strict Alteration: “Busy Waiting”. It doesn’t satisfy condition 3 because taking turns is not

a good idea when one of the processes is much slower than the other.

(4) Peterson’s Solution: Combining the idea of taking turns and of lock variables, solution

was devised to the mutual exclusion problem doesn’t require strict alternation.

What happens when both processes enter call enter_region almost simultaneously?

(5) The TSL Instruction “TEST AND SET LOCK”: no special instruction is needed.

enter_region:

tsl register,lock

cmp register,#0

jne enter_region

ret

| copy lock to register and set lock to 1

| was lock zero?

| if it was non zero, lock was set, so loop

| return to caller; critical region entered

leave_region:

move lock,#0

ret

| store a 0 in lock

| return to caller

Figure 2-10. Setting and clearing locks using TSL.

TSL reads the contents of memory word into a register and then stores a nonzero value at that

memory address.

(6) Sleep and Wakeup: unlike Peterson’s and TSL solutions, Sleep and Wakeup doesn’t

require busy waiting. In effect, the process saves CPU time. Sleep is a system call that

causes the caller to block, that is, be suspended until another process wakes it up.

Wakeup call has one parameter, the process to be awakened.

(7) Producer-Consumer Problem “Bounded Buffer Problem” Two processes share a buffer.

(a) Producer: Puts information into a fixed-size buffer

(b) Consumer: Takes information out.

(c) What if Producer wants to put a new item in the buffer while it’s full?

(i) Producer goes to sleep

(ii) Producer is awakened when consumer has removed one or more items

(d) What if Consumer wants to remove an item from the buffer while it’s empty?

(i) Consumer goes to sleep

(ii) Consumer is awakened when producer puts something in the buffer

#define N 100

int count = 0;

void producer(void)

{

while (TRUE) {

produce_item();

if (count == N) sleep();

enter_item();

count = count + 1;

if (count == 1) wakeup(consumer);

}

}

void consumer(void)

{

while (TRUE) {

if (count == 0) sleep();

remove_item();

count = count - 1;

if (count == N-1) wakeup(producer);

consume_item();

}

}

/ * number of slots in the buffer * /

/ * number of items in the buffer * /

/ * repeat forever * /

/ * generate next item * /

/ * if buffer is full, go to sleep * /

/ * put item in buffer * /

/ * increment count of items in buffer * /

/ * was buffer empty? * /

/ * repeat forever * /

/ * if buffer is empty, got to sleep * /

/ * take item out of buffer * /

/ * decrement count of items in buffer * /

/ * was buffer full? * /

/ * print item * /

Figure 2-11. The producer-consumer problem with a fatal race condition.

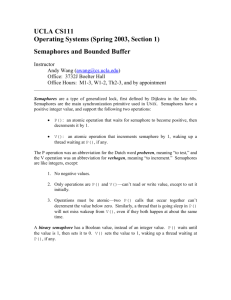

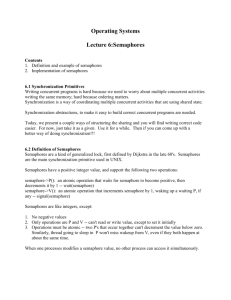

e) Semaphore: Synchronization tool and introduced by Dijkstra. Is accessed only through two

standard atomic (within single memory cycle, can’t be interrupted) operations: wait & signal

“Down & Up”, or Proberen to test & Verhogen to increment.

i) The fundamental principle is this:

(1) Two or more processes can cooperate by means of simple singles, such that a process

can be forced to stop at a specified place until it has received a specific signal.

(2) To transmit a signal via semaphore s, a process executes the primitive signal(s).

(3) To receive a signal via semaphore s, a process executes the primitive wait(s).

(4) If the corresponding signal hasn’t yet been transmitted, the process is suspended until

the transmission takes place.

A semaphore is a variable that has an integer values upon which three operations are

defined:

1. A semaphore may be initialized to a nonnegative value.

2. The wait operation decrements the semaphore value. If the value becomes negative,

then the process executing the wait is blocked.

3. The signal operation increments the semaphore value. If the value isn’t positive, then a

process blocked by a wait operation is unblocked “one fewer process sleeping on it.”

A binary semaphore may only take the values 0 and 1 “easier to implement.”

A queue is used to hold processes waiting on the semaphore.

struct semaphore

int count

queueType queue;

}

struct binary_semaphore {

enum (zero, one) value;

queueType queue;

};

void wait(semaphore s)

{

s.count--;

if (s.count < 0)

{

place this process in s.queue;

block this process

}

}

void signal(semaphore s)

{

s.count ++;

if (s.count <= 0)

{

remove a process P from s.queue

place process P on ready list

}

}

void waitB(binary_semaphore s)

{

if (s.value == 1)

s.value = 0;

else

{

place this process in s.queue;

block this process;

}

}

void signalB(semaphore s)

{

if (s.queue.is_empty())

s.value = 1;

else

{ remove a process P from s.queue;

place process P on ready list;

}

}

Definition of Counting Semaphores

Definition of Binary Semaphores

Idea: To make waiting within P Non-Busy by executing P & V by some virtual machine (O/S

kernel capable of suspending the caller

P & V can then be small and can be protected by inhibiting interrupts or TSL locks

The implementation of a semaphore with a waiting queue may result in a situation where two

or more processes are waiting indefinitely for an event that can be caused by only one of the

waiting processes

The event in question is the execution of a signal operation. When such a state is reached,

these processes are said to be deadlocked.

Another problem related to deadlocks is indefinite blocking or starvation, a situation where

processes wait indefinitely within the semaphore. Indefinite blocking may occur if we add and

remove processes from the list associated with a semaphore in LIFO order.

ii) Solving the Producer-Consumer Problem using Semaphores “Deadlock is possible”

#define N 100

typedef int semaphore;

semaphore mutex = 1;

semaphore empty = N;

semaphore full = 0;

/ * number of slots in the buffer * /

/ * semaphores are a special kind of int * /

/ * controls access to critical region * /

/ * counts empty buffer slots * /

/ * counts full buffer slots * /

void producer(void)

{ int item;

while (TRUE) {

produce_item(&item);

down(&empty);

down(&mutex);

enter_item(item);

up(&mutex);

up(&full);

}

}

/ * TRUE is the constant 1 * /

/ * generate something to put in buffer * /

/ * decrement empty count * /

/ * enter critical region * /

/ * put new item in buffer * /

/ * leave critical region * /

/ * increment count of full slots * /

void consumer(void)

{ int item;

while (TRUE) {

/ * infinite loop * /

down(&full);

/ * decrement full count * /

down(&mutex);

/ * enter critical region * /

remove_item(&item);

/ * take item from buffer * /

up(&mutex);

/ * leave critical region * /

up(&empty);

/ * increment count of empty slots * /

consume_item(item);

/ * do something with the item * /

}

}

Figure 2-12. The producer-consumer problem using semaphores.

iii) Binary Semaphores “A solution to the infinite-buffer Producer/Consumer problem”:

/* Program producer / consumer */

int n;

binary_semaphore s = 1;

binary_semaphore delay = 0;

void producer()

void (consumer)

{

{

while (true)

int m; /* local variable */

{

waitB(delay);

produce();

while (true)

waitB(s);

{

append();

waitB(s);

n++;

take();

if(n==1) signalB(delay);

n--;

signalB(s);

m = n;

}

signalB(s);

}

consume();

if (m==0) waitB(delay);

}

}

void main () {

n = 0;

parbegin (producer, consumer);}

f)

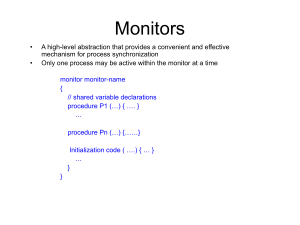

Monitors: Introduced by Hoare. A higher-level synchronization primitive and easier to control

than semaphores. It is implemented in Java, Pascal-Plus, concurrent Pascal, etc. It is a

collection of procedures, variables, and data structures that are grouped together on a package

“Module”. It is an ADT for concurrent programming that allows people to put monitor locks on any

object.

i) Mutual exclusion is automatically enforced when processes access ADT concurrently. The

local data variables are accessible only by the monitor’s procedures and not any external

procedures.

ii) The unconditional suspension/Activation mechanism is available. A process enters the

monitor by invoking one of its procedures.

iii) Only one process may be executing in the monitor at a time; any other process that has

invoked the monitor is suspended, waiting for the monitor to become available.

monitor ProducerConsumer

condition full, empty;

integer count;

procedure enter;

begin

if count = N then wait(full);

enter_item;

count := count + 1;

if count = 1 then signal(empty)

end;

procedure remove;

begin

if count = 0 then wait(empty);

remove_item;

count := count - 1;

if count = N - 1 then signal(full)

end;

count := 0;

end monitor;

procedure producer;

begin

while true do

begin

produce_item;

ProducerConsumer.enter

end

end;

procedure consumer;

begin

while true do

begin

ProducerConsumer.remove;

consume_item

end

end;

Figure 2-14. The producer-consumer problem with monitors.

-

The compiler not the programmer arranges for the mutual exclusion.

By turning all critical sections into monitor procedures, no two processes will ever execute their

critical regions at the same time.

-

-

Condition variables, along with two operations on them WAIT and SIGNAL will take care of the

blocking and unblocking states.

A process doing a signal must exit the monitor immediately. “Signal is the final statement in a

monitor procedure.”

Condition variables are not counters. They don’t accumulate signals for later use the way

semaphores do.

The WAIT must come before the SIGNAL.

If the producer inside a monitor procedure discovers that the buffer is full, it will be able to

complete the WAIT operation without having to worry about the possibility that the scheduler

may switch to the consumer just before the WAIT completes.

The consumer will not even be let into the monitor at all until the WAIT is finished and the

producer has been marked as no longer runnable.

Monitors are not usable except in few programming languages. Also, none of the primitives

provides for information exchange between machines.

g) Message Passing: Interprocess communication that uses 2 primitives SEND & RECEIVE.

i) Design issues for Message Passing Systems:

(1) To guard against lost messages, receiver must send and acknowledgement

(2) If sender didn’t receive the acknowledgement, retransmit the message

(3) What if message is received but acknowledgement is lost?

(a) Sender will resubmit the message, so the receiver will get it twice.

(b) Each message has a consecutive sequence numbers so the receiver knows that the

message is a duplicate and ignore the second message.

(4) Authentication: real life server vs. imposter!

ii)

The Producer-Consumer Problem with Message Passing:

#define N 100

/ * number of slots in the buffer * /

void producer(void)

{

int item;

message m;

/ * message buffer * /

while (TRUE)

{

produce_item(&item);

/ * generate something to put in buffer * /

receive(consumer, &m);

/ * wait for an empty to arrive * /

build_message(&m, item);

/ * construct a message to send * /

send(consumer, &m);

/ * send item to consumer * /

}

}

void consumer(void)

{

int item, i;

message m;

for (i = 0; i < N; i++) send(producer, &m);

/ * send N empties * /

while (TRUE)

{

receive(producer, &m);

/ * get message containing item * /

extract_item(&m, &item);

/ * extract item from message * /

send(producer, &m);

/ * send back empty reply * /

consume_item(item);

/ * do something with the item * /

}

}

Figure 2-15. The producer-consumer problem with N messages.

-

(1) Consumer starts out by sending N empty messages slots in shared memory buffer.

Whenever the producer has an item to give to the consumer, it takes an empty message

and sends back a full one.

(2) The total number of messages remains constant in time.

(3) If the producer works faster than the consumer:

(a) All the messages will end up full waiting for the consumer

(b) The producer will be blocked waiting for an empty message

(4) If the consumer works faster than the producer:

(a) All the messages will be empties waiting for the producer to fill them up

(b) The consumer will be blocked waiting for a full message

Using Mailboxes: used to buffer a certain number of messages.

When a process tries to send to a mailbox that is full, it is suspended a message is removed

from that mailbox, making room for a new one.

Both Producer/consumer create mailboxes large enough to hold N messages.

An alternative is to use rendezvous no message buffering, full copying, and selective

waiting. If SEND is done before RECEIVE, then the sending process is blocked until

RECEIVE occurs and vice versa.

3) Classical IPC Problems:

a) The Dining Philosophers Problem:

#define N 5

/ * number of philosophers * /

void philosopher(int i)

/ * i: philosopher number, from 0 to 4 * /

{

while (TRUE)

{

think();

/ * philosopher is thinking * /

take_fork(i);

/ * take left fork * /

take_fork((i+1) % N);

/ * take right fork; % is modulo operator * /

eat();

/ * yum-yum, spaghetti * /

put_fork(i);

/ * put left fork back on the table * /

put_fork((i+1) % N);

/ * put right fork back on the table * /

}

}

Figure 2-17. A nonsolution to the dining philosophers problem.

i)

Deadlock is imminent if all philosophers decided to eat at the same time Starvation.

#define N 5

#define LEFT (i-1)%N

#define RIGHT (i+1)%N

#define THINKING 0

#define HUNGRY 1

#define EATING 2

/ * number of philosophers * /

/ * number of i’s left neighbor * /

/ * number of i’s right neighbor * /

/ * philosopher is thinking * /

/ * philosopher is trying to get forks * /

/ * philosopher is eating * /

typedef int semaphore;

int state[N];

semaphore mutex = 1;

semaphore s[N];

/ * semaphores are a special kind of int * /

/ * array to keep track of everyone’s state * /

/ * mutual exclusion for critical regions * /

/ * one semaphore per philosopher * /

void philosopher(int i)

{

while (TRUE)

{

think();

take_forks(i);

eat();

put_forks(i);

}

}

/ * i: philosopher number, from 0 to N-1 * /

void take_forks(int i)

{

down(&mutex);

state[i] = HUNGRY;

test(i);

up(&mutex);

down(&s[i]);

}

/ * i: philosopher number, from 0 to N-1 * /

void put_forks(i)

{

down(&mutex);

state[i] = THINKING;

test(LEFT);

test(RIGHT);

up(&mutex);

}

/ * i: philosopher number, from 0 to N-1 * /

/ * repeat forever * /

/ * philosopher is thinking * /

/ * acquire two forks or block * /

/ * yum-yum, spaghetti * /

/ * put both forks back on table * /

/ * enter critical region * /

/ * record fact that philosopher i is hungry * /

/ * try to acquire 2 forks * /

/ * exit critical region * /

/ * block if forks were not acquired * /

/ * enter critical region * /

/ * philosopher has finished eating * /

/ * see if left neighbor can now eat * /

/ * see if right neighbor can now eat * /

/ * exit critical region * /

void test(i)

/ * i: philosopher number, from 0 to N-1 * /

{

if (state[i] == HUNGRY && state[LEFT] != EATING && state[RIGHT] != EATING)

{

state[i] = EATING;

up(&s[i]);

}

}

Figure 2-18. A solution to the dining philosopher’s problem.

The Readers and Writers Problem:

i) More than one process is allowed to read the data at one time but if one process is updating,

no other process may access the database, not even the readers.

typedef int semaphore;

semaphore mutex = 1;

semaphore db = 1;

int rc = 0;

void reader(void)

{

while (TRUE)

{

down(&mutex);

rc = rc + 1;

if (rc == 1) down(&db);

up(&mutex);

read_data_base();

down(&mutex);

rc = rc - 1;

if (rc == 0) up(&db);

up(&mutex);

use_data_read();

}

}

/ * use your imagination * /

/ * controls access to ’rc’ * /

/ * controls access to the data base * /

/ * # of processes reading or wanting to */

/ * repeat forever * /

/ * get exclusive access to ’rc’ * /

/ * one reader more now * /

/ * if this is the first reader ... * /

/ * release exclusive access to ’rc’ * /

/ * access the data * /

/ * get exclusive access to ’rc’ * /

/ * one reader fewer now * /

/ * if this is the last reader ... * /

/ * release exclusive access to ’rc’ * /

/ * noncritical region * /

void writer(void)

{

while (TRUE)

{

think_up_data();

down(&db);

write_data_base();

up(&db);

}

/ * repeat forever * /

/ * noncritical region * /

/ * get exclusive access * /

/ * update the data * /

/ * release exclusive access * /

}

Figure 2-19. A solution to the readers and writers problem.

If more than one reader is admitted to read the database, then there comes a time where a writer

might be suspended forever and eventually starve.

4) Process Scheduling:

ii) Scheduler: The part of the O/S that makes the decision in regards to which process runs first.

iii) Scheduling Algorithm: The algorithm used by the scheduler which tries to achieve:

(1) Fairness: each process gets its fair share of the CPU

(2) Efficiency: Keep the CPU busy 100% of the time

(3) Response time: minimize response time for interactive users

(4) Turnaround: minimize the time batch users must wait for output

(5) Throughput: maximize the number of jobs processed per hour

Note: Since the amount CPU’s time available is finite, then it is hard to implement a fair solution

at all times. Also, every process is unique and unpredictable which adds to the complication that

the scheduler has to deal with.

iv) Preemptive Scheduling: running processes that are temporarily suspended

v) Non-Preemptive Scheduling: running processes that are run to completion “not suitable for

multiple computer users.”

b) Round Robin Scheduling: simplest and fairest. Each process is assigned a time interval

“quantum.” When quantum is up or a process blocks, the CPU is preempted and given to

another process. Figure 2-22 shows how it works.

Note: a short quantum causes too many process switches and lowers the CPU efficiency, but

setting a long one causes poor response to short interactive requests. Any time the CPU switches

from one process to another, a certain time is dedicated to save and load registers and memory

maps, updating various tables and lists context switch.

c) Priority Scheduling: Each process is assigned a priority, and the runnable process with the

highest priority is allowed to run. To prevent high-priority processes from running indefinitely, the

scheduler may decrease the priority of the currently running processes at each clock tick “clock

interrupt”.

i) Processes that are highly I/O bound should be given the CPU immediately when needed to

let it start its next I/O requests. You don’t want such processes to occupy large chunks of

memory for unnecessarily long time.

d) Multiple Queues: Set up priority classes where processes in the highest class were run for one

quantum. Processes in the next highest class were run for two quanta. Whenever a process

used up all the quanta allocated to it, it was moved down one class.

e) Shortest Job First: Optimal when all jobs are available simultaneously.

-

f)

Turnaround time for the batch job A is 8 minutes, for B 12, for C 16, and D 20 is minutes

The average is 14 minutes

If we use shortest job first, turnaround time for, for B 4, for C 8, D 12 and A is 20 minutes

The average is 11

It is apparent that shortest job first produces the minimum average response time. The

problem is figuring out which of the currently runnable processes is the shortest one.

One approach is to make estimates based on past behavior and run the process with the

shortest estimated running time. Taking a weighted sum of the estimated time per command

for some terminal does it.

Guaranteed Scheduling: If there are n users logged on in while you are working, you will receive

about 1/n of the CPU power. It is difficult to implement.

g) Lottery Scheduling: gives processes lottery tickets for various system resources such as CPU

time. Whenever a scheduling decision is made, a ticket is chosen at random, and the process

holding it gets the resource. Processes may be given extra tickets, to increase their odds of

winning.

i) A process holding a fraction of f of the tickets will get about a fraction f of the resource in

question. Ex: if a process holds 20 out of outstanding 100 tickets, it’ll have 20% chance of

winning each lottery.

ii) If a new process shows up and is granted some tickets, at the very next lottery it will have a

chance of winning in proportion to the number of tickets it holds.

iii) Cooperating processes may exchange tickets if they wish.

(1) Ex: when a client sends a message to a server and then blocks, it may give all of its

tickets to the server, to increase the chance of the server running next. When the server

is finished, it returns the tickets so the client can run again.

h) Real-Time Scheduling “hard real time”: there are absolute deadlines that must be met “autopilot

in an aircraft, safety control in nuclear reactor, etc.”

i) The Scheduler schedules the processes in such a way that all deadlines are met.

ii) Soft real time means that missing an occasional deadline is tolerable.

iii) Real-time behavior is achieved by dividing the program into number of processes each of

whose behavior is predictable and known in advance.

iv) The events that a real-time system may have to respond to is categorized as periodic

(occurring at regular intervals) or aperiodic (occurring unpredictably.)

If there are m periodic events and event i occurs with period Pi and requires Ci seconds of

CPU time to handle each event, then the load can only be handled if

m

i

C

1

P

i

i 1

A real-time system that meets this criteria is said to be schedulable.

Ex: A real system with periods 100, 200, and 500 msec. If these events require 50, 30, 100 msec

of CPU time per event, the system us schedulable because 0.5+ 0.15 + 0.2 < 1.

i)

Two-level Scheduling: If insufficient memory is available, some of the processes have to be kept

on disk, in whole or in part.

i)

Higher-level scheduler: is invoked to remove process that have been in memory long enough

and load the ones that have been on disk too long.

ii)

Lower-level scheduler: restricts itself to only running processes that are actually in memory.

iii) Criteria that high-level scheduler could use:

(1) How long has it been since the process was swapped in or out.

(2) How much CPU time has the process had recently

(3) How big is the process?

(4) How high is the priority?