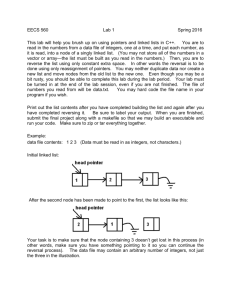

Data mining with SAS Enterprise Miner
