MergedocSat10Oct - Monitoring and Evaluation NEWS

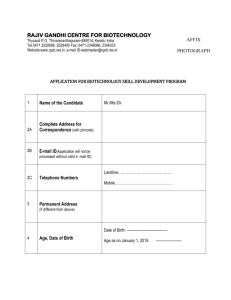

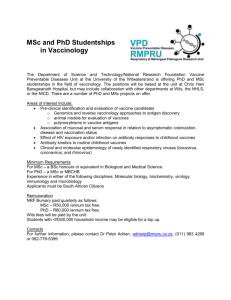

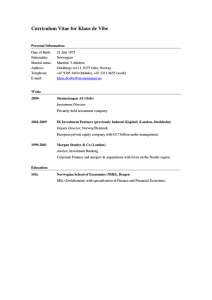

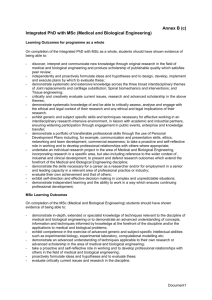
