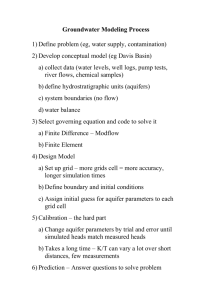

How to Build a Grid

advertisement

SURA Cyberinfrastructure Workshop: Grid Application Planning & Implementation January 5 – 7, 2005, Georgia State University Notes from breakout sessions on: How to Build a Grid/Different Grid Technologies (January 7, a.m.) Facilitated by: Phil Emer, MCNC Scribe: Mary Trauner, Georgia Institute of Technology A number of key questions were posed throughout the breakout session. The following notes are organized by question coupled with the discussion that took place for each. A grid can be considered as an access method or platform. 1) What are considered reasonable platforms for compute and storage? Anything with a power supply? Anything in a controlled environment? Anything in a controlled lab? State-wide resources Phil stated that at MCNC, the decision was: Not a desktop Something in a controlled environment Something that peers across universities had built in a consistent manner. Amy Apon (U of Arkansas) worked with students to build an X-Grid (Apple) that included some Linux systems. This led to a course on building grids and tools for the grids. 2) Is anyone considering grids as a way to harness unused cycles and/or to avoid a [HPC] purchase? One person has done so with Avaki; they are currently looking at United Devices. The Avaki grid has paid off. Now Avaki software is basically free to universities Support for it is [the fee for support] is reasonable. 3) What middleware is reasonable? <Discussed later> 4) What manpower is needed? <Not discussed> 5) Should grids support heterogeneity? If you support multiple sites, campuses, virtual organizations, it [heterogeneity] will happen. For example, MCNC supports a grid comprised of: Duke: Linux with Sun Grid Engine and Ethernet NC State: A Sun SMP with Grid Engine UNC: Linux with Myrnet and LSF 6) Does Globus work in a heterogeneous environment? One site has implemented this at GT2. There is uncertainty and concern about the next version since it uses web services. Some in the group were uncertain if this was a step forward. NMI provided a stable stack. Now there is a bifurcation of those building infrastructures from those at virtual organizations based on the application interfaces. Where do those interfaces tie in? At this stage, end users present an application to their infrastructure people for a grid solution. 7) Who has built a grid? Jorg Schwarz (Sun) responded from two perspectives: Within an institution, you just need: Resource manager Directory structure (LDAP, Punch, etc.) Accounting Across universities, add: GT2 A portal A command line interface 8) Is a grid an HPC solution or a network piece? Several responded that they considered it as HPC. One person commented that he considered it HPC because it appeared to be an outgrowth from the NSF centers on how to do research. We discussed the terms Grid versus grid (big versus little “g”.) What must a network provider provide for a grid beyond what an ISP might provide? Earlier availability Something only a university will consume A grid gateway Beyond bandwidth o Network to compute o An access tier to resources Application specific grids tend to want a portal (veneer) whereas general grid users tend to prefer lower level access like command line interfaces. This launched into a discussion of “point of view”. Utility and presentation become important based on point of view when deciding access methods. An exampled posed was a backup service. This still leverages middleware, but the use of a backup service is more “grid” than “Grid”. 9) How does one build a grid file system or data grid while immersed in an AFS solution? <Discussions of this tended to be spread out across several subsequent questions.> 10) How long does it take to build a grid? This depends on how many pieces you need. (Kerberos, AFS, etc.?) You must start with: Authentication Data access Local load sharing environment This led to the question “Is a grid a cluster or vice versa?” Authentication, data access, and load sharing help answer this. Whether your users are from a single administrative domain or multiple domains is also part of the answer. Certificate authorities were discussed. Multiple CA’s within a grid (aka different policies for each compute resource) is problematic. MCNC got around this [campus access/use policies] by purchasing the equipment that was placed at each university, thus providing unified policies, authentication, etc. 11) What should I do in a closed environment with multiple OS platforms, where the applications have dependencies on certain libraries? What middleware and DRM [Distributed Resource Manager] will you use? DRM options include Condor G PBS Grid Engine LSF Maui Load Leveler The DRM provides: Execution-host clients to monitor loads (memory, CPU, etc.) Submit-host/master client to collect execution-host information, build queues Does not allow interactive access to execution nodes (interactive nodes are generally provided separately.) Note that Globus does not provide a DRM. GRAM is the Globus Toolkit interface between Globus and the DRM. Another thing to note is that GridFTP is not needed in a local environment; AFS [,scp] or other would be used instead. And scheduling across clusters is not handled by Globus out of the box. Metaschedulers can be built to handle this. It usually requires some building or scripting. Grid Engine (Sun) will do this. 12) Are there some good grid terminology resources? Several mentioned the IBM Redbook as a good source [title and/or ISBN?] 13) What layers do you need to connect clusters into a grid? First, you need to identify an initial exercising application. So this question is hard to completely answer without knowing the application. Jorg (Sun) disagreed, saying an authentication platform that could verify user info was enough to do a general-purpose grid. He proposed the following diagram. LDRM: Local resource manager A or B: SMP system, cluster, set of clusters, particular applications, etc. (Phil noted that this is a compute-centric grid. A data grid may be different.) For example, someone could log onto the portal at A to submit a Blast job. B may be the Blast server. B will get the job, know how to get data from A and return the results to A. Something like GridLab metascheduling was recommended for review. 14) How do you move data? Who gets to access input and output? How does it [data] get in and out? This is not necessarily complicated, but it requires some choices for middleware selection. When is the data replicated? How does data location affect performance? When do you need to add data access servers? Data grids have similar authentication issues but add: Data access method Replication Tools Virtual LAN and Cluster on Demand were mentioned. (??) 15) How do you deploy a consistent image across multiple OS platforms? Consistency is difficult. Adding the middleware complicates it. Application domain specificity will simplify it. Maytal Dahan (TACC) described their hub and spoke trust philosophy. Phil (MCNC) asked if this wouldn’t implode into forcing homogeneity. Victor Bolet (GSU) said it would implode to the use of standards not OS or platform.) Maytal went on to say that they are dealing with standards and middleware. She added that researchers think grids are still just too hard to use. So portals are important. Now as portals become standardized, they can interoperate so which one you choose isn’t as important now. You don’t have to adopt a particular one. 16) Are grids good? How? Where are we going or trying to go? We should eventually look at grids supporting science and research the same way as the block box that the Internet has become for us today. Scheduling, Certificate Authorities, etc should be transparent. So how do we build the black box? Visualization and workflow aspects are important to how the end-user interfaces to the results (and the speed/quantity at which they receive them.) As infrastructure people, we may not have those skills. Maytal (TACC) said some middleware may be heading in this direction. Visualization widgets, engines, and instruments could be something we need to think more about, consider. Kazaa and peer-to-peer applications are grid-like things. Grid is like another version or “second coming”. Grids need to “spew” services that just work, in a similar manner as the peer-to-peer applications. But the basic or fundamental requirement: run an executable that reads data and returns results. 17) Are there any automatic methods? There is no “grid in a box.” The NMI toolkit is a good place to start. Choosing the Globus API (2, 3, or 4) is a big question. (See below) Implementing a Metascheduler: Rudimentary scheduling, like round-robin, isn’t too difficult if you have some sort of access control. CSF: Community Scheduler Framework VGRS (VeriSign Global Registry Services) LSF has a multi-cluster solution, but it is very expensive Globus Toolkit Review: Pre “web services” components are pretty safe and stable. 2.4 is the most stable for infrastructure. Those looking for things like grid applications have gone with 3.0. There is concern about 4.0 which is using WSRF (delayed until April.) If 4.0 is not rock solid, the results (difficulties?) will be dramatic. Today, for production services, run 2.4 (or some 2.x.) Run 3.9.4 if you want to play with the WSRF beta. (Comments that it crashes a lot?) Other grid toolkits: .net: OSGI is going away WSRF <missed those comments> SRM/SRB: Jefferson Lab Unicore: Grid toolkit developed in Europe Phil (MCNC) mentioned that they wrote an initial “kick start” guide for an enterprise grid. He listed a diagram: 18) Should we ask SURA to host a mailing list on building grids? If interested, let Mary Fran know (maryfran@sura.org) 19) Afterthoughts from the facilitator (Phil Emer/MCNC) I think that these notes do a great job of capturing the flow of the conversation and the types of questions that were on the table (nice, Mary!). I am not quite sure what to do with these notes though…some workshops are probably in order…My gut feeling based on listening to the types of questions folks were asking is that this is what people want/need to do vis-à-vis building grids: a) Provide "elegant" access to centralized HPC resources. So grid as a front end access method to HPC resources. At MCNC we call this the enterprise grid. Several attendees mentioned wanting to provide such an interface to a collection of heterogeneous resources (though in most cases the resources were not distributed). You don't really need grid to do this as you can apply DRM's like LSF and grid engine. Adding a grid interface here allows applications and users to access the resources in a more transparent and potentially cross-domain kind of way. IMO adding the grid access method makes access to high throughput computing accessible to folks that are not traditional command-line driven scientists. b) Save money and increase user happiness by more effectively using resources. There is a bit of momentum building around the notion of cluster or resource on demand. So for instance having a pool of resources and say imaging a system on the fly to support a particular application for a particular user and releasing the resource when done. Some (including folks at NC State) believe that it may be cheaper and easier to build this kind of a system than the land of milk and honey (grid) where you somehow can apply middleware to any combination of hardware and software platform and get a consistent, deterministic result. So for instance some applications may simply run cheaper or faster or whatever on a particular OS - so deal with that and image an optimized system on the fly and release the resources when you're done. Only problem is this is almost anti-grid in that it punts on the notion of being able to build the perfect middleware stack. c) Build more effective Virtual Organizations that share data, applications, tools, gear, etc. The first two examples above are more from the point of view of an organization that is in the business of running infrastructure – a service provider. Here the perspective is a user group. So the Florida example comes to mind where a Biologist is providing services to Biologists and some grid tools make sense for managing computation, data management, application support and the like. Defining workflows and building portals that approximate those workflows while maintaining the notion of access control and "ownership" comes to mind here.