
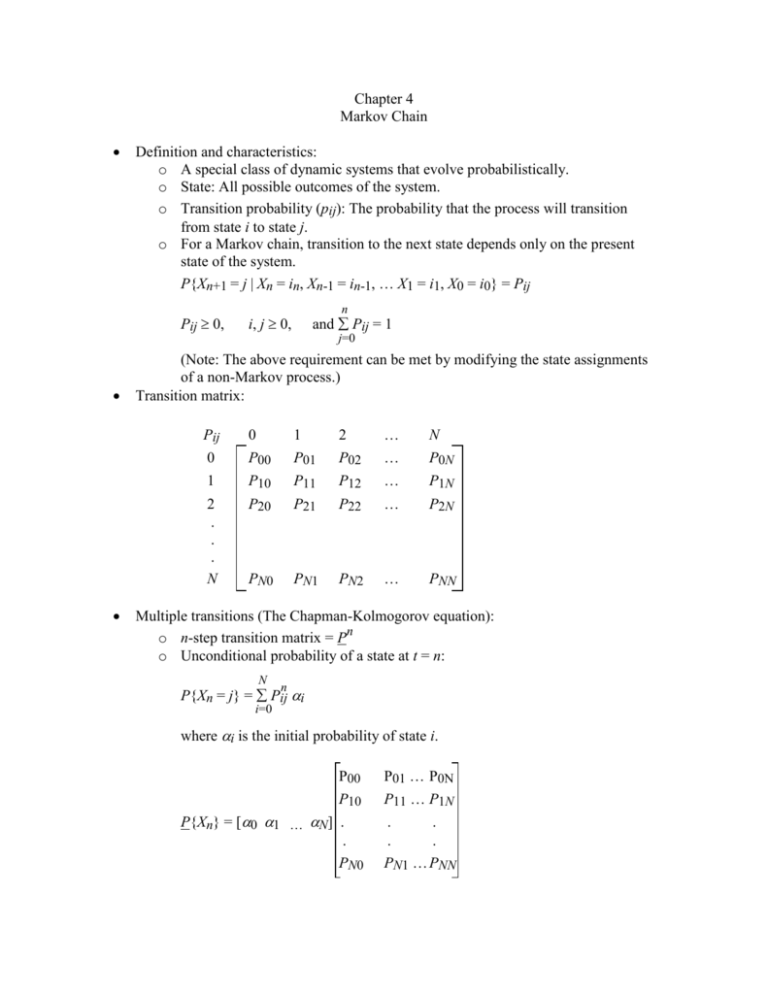

Chapter 4

Markov Chain

Definition and characteristics:

o A special class of dynamic systems that evolve probabilistically.

o States: all possible outcomes (conditions) of the system.

o Transition probability (Pij): the probability that the process will transition from state i to state j in one step.

o For a Markov chain, the transition to the next state depends only on the present state of the system, not on how the process arrived there.

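$$P\{X_{n+1} = j \mid X_n = i, X_{n-1} = i_{n-1}, \dots, X_1 = i_1, X_0 = i_0\} = P_{ij}$$

$$P_{ij} \ge 0 \quad \text{for all } i, j \ge 0, \qquad \sum_{j} P_{ij} = 1 \quad \text{for every } i$$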

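(Note: The Markov requirement can often be met by redefining the states of a non-Markov process, for example by letting each state record the outcomes of the last two periods instead of only the last one.)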
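A short simulation sketch can make the Markov property concrete. Each next state is drawn using only the row of the current state, never the earlier history. The function name `simulate_chain` and the list-of-rows storage of the transition probabilities are assumptions made for illustration.

```python
import random


def simulate_chain(P, x0, n_steps, seed=None):
    """Simulate n_steps transitions of a finite Markov chain.

    P[i][j] is the one-step transition probability from state i to state j
    (hypothetical list-of-rows representation). Only the current state's row
    is used to pick the next state, which is exactly the Markov property.
    """
    rng = random.Random(seed)
    states = range(len(P))
    path = [x0]
    x = x0
    for _ in range(n_steps):
        # Draw the next state with weights P[x][0], ..., P[x][N]
        x = rng.choices(states, weights=P[x], k=1)[0]
        path.append(x)
    return path


# Usage: a chain that always switches state is deterministic
print(simulate_chain([[0, 1], [1, 0]], x0=0, n_steps=5))  # [0, 1, 0, 1, 0, 1]
```

Because `rng.choices` only sees `P[x]`, the simulated path is Markov by construction. Passing a `seed` makes runs reproducible.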

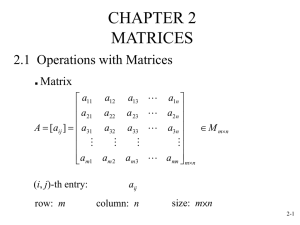

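Transition matrix (row i = current state, column j = next state):

$$P = [P_{ij}] = \begin{bmatrix} P_{00} & P_{01} & P_{02} & \cdots & P_{0N} \\ P_{10} & P_{11} & P_{12} & \cdots & P_{1N} \\ P_{20} & P_{21} & P_{22} & \cdots & P_{2N} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ P_{N0} & P_{N1} & P_{N2} & \cdots & P_{NN} \end{bmatrix}$$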
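The two conditions on the transition probabilities can be checked mechanically: every $P_{ij} \ge 0$, and every row sums to 1. This NumPy sketch assumes the matrix is stored as a square array. `is_stochastic` is a hypothetical helper name.

```python
import numpy as np


def is_stochastic(P, atol=1e-9):
    """Return True if P is a valid transition matrix.

    Checks that P is square, every entry P_ij >= 0, and every row sums to 1
    (within a small floating-point tolerance).
    """
    P = np.asarray(P, dtype=float)
    if P.ndim != 2 or P.shape[0] != P.shape[1]:
        return False
    rows_sum_to_one = np.allclose(P.sum(axis=1), 1.0, atol=atol)
    return bool(np.all(P >= 0) and rows_sum_to_one)


print(is_stochastic([[0.5, 0.5], [0.25, 0.75]]))  # True
print(is_stochastic([[0.5, 0.25], [0.0, 1.0]]))   # False: first row sums to 0.75
```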

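Multiple transitions (the Chapman-Kolmogorov equation):

o The n-step transition probabilities $P^{n}_{ij} = P\{X_{n+m} = j \mid X_m = i\}$ satisfy

$$P^{n+m}_{ij} = \sum_{k=0}^{N} P^{n}_{ik} P^{m}_{kj},$$

so the n-step transition matrix is $P^{(n)} = P^n$.

o Unconditional probability of a state at t = n:

$$P\{X_n = j\} = \sum_{i=0}^{N} P^{n}_{ij}\, \alpha_i$$

where $\alpha_i = P\{X_0 = i\}$ is the initial probability of state i. In matrix form:

$$\big[P\{X_n = 0\}\ \ P\{X_n = 1\}\ \cdots\ P\{X_n = N\}\big] = [\alpha_0\ \ \alpha_1\ \cdots\ \alpha_N] \begin{bmatrix} P_{00} & P_{01} & \cdots & P_{0N} \\ P_{10} & P_{11} & \cdots & P_{1N} \\ \vdots & \vdots & \ddots & \vdots \\ P_{N0} & P_{N1} & \cdots & P_{NN} \end{bmatrix}^{n}$$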
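The n-step and unconditional probabilities can be checked numerically. This is a minimal NumPy sketch. It assumes the transition matrix is stored as a square array whose row i holds $P_{i0}, \dots, P_{iN}$. `n_step_matrix` and `distribution_at` are hypothetical helper names, and the two-state chain is made up.

```python
import numpy as np


def n_step_matrix(P, n):
    """n-step transition matrix P^(n) = P^n."""
    return np.linalg.matrix_power(np.asarray(P, dtype=float), n)


def distribution_at(alpha, P, n):
    """Row vector [P{X_n = 0}, ..., P{X_n = N}] = alpha P^n,
    where alpha[i] = P{X_0 = i} are the initial probabilities."""
    return np.asarray(alpha, dtype=float) @ n_step_matrix(P, n)


# Illustrative two-state chain (made-up numbers)
P = [[0.7, 0.3],
     [0.4, 0.6]]

# Chapman-Kolmogorov: P^(2+3) = P^(2) P^(3)
assert np.allclose(n_step_matrix(P, 5), n_step_matrix(P, 2) @ n_step_matrix(P, 3))

# Start in state 0 with certainty: result is approximately [0.61, 0.39]
print(distribution_at([1.0, 0.0], P, 2))
```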

Markov chain example

Question: The following transition matrix describes how a college student's grade changes from one year to the next. Find the probability that

a) an “A” incoming freshman would become a “B” student after the first year;

b) an “A” incoming freshman would become a “B” student after the second year;

c) an “A” incoming freshman would become a “B” student after the third year;

d) an “A” incoming freshman would graduate with an “A” average;

e) a “B” incoming freshman would graduate with a “C” average.

f) If the grade distribution of incoming freshmen is

A – 10%

B – 40%

C – 30%

D – 20%

F – 0%

find the grade distribution of the graduates.

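Transition matrix (row = grade this year, column = grade next year):

$$P = \begin{array}{c|ccccc} & A & B & C & D & F \\ \hline A & 0.5 & 0.5 & 0 & 0 & 0 \\ B & 0.25 & 0.5 & 0.25 & 0 & 0 \\ C & 0 & 0.25 & 0.5 & 0.25 & 0 \\ D & 0 & 0 & 0.25 & 0.5 & 0.25 \\ F & 0 & 0 & 0 & 0.5 & 0.5 \end{array}$$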

a) P{A→B} = 0.5

b) P{A→B, B→B} = 0.5 × 0.5 = 0.25

P{A→A, A→B} = 0.5 × 0.5 = 0.25

Sum = 0.50

c) P{A→B, B→B, B→B} = 0.5 × 0.5 × 0.5 = 0.125

P{A→B, B→A, A→B} = 0.5 × 0.25 × 0.5 = 0.0625

P{A→B, B→C, C→B} = 0.5 × 0.25 × 0.25 = 0.03125

P{A→A, A→B, B→B} = 0.5 × 0.5 × 0.5 = 0.125

P{A→A, A→A, A→B} = 0.5 × 0.5 × 0.5 = 0.125

Sum = 0.46875

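d) $P^4_{AA} = 0.273$ (four transitions from entering as a freshman to graduation)

e) $P^4_{BC} = 0.25$

f) $(0.1,\ 0.4,\ 0.3,\ 0.2,\ 0)\, P^4 = (0.154,\ 0.295,\ 0.256,\ 0.205,\ 0.090)$ for grades A, B, C, D, F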
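Parts (a) through (f) can be reproduced with matrix powers. Part (c) can also be checked by enumerating every three-step path from A to B, as the hand solution does. This sketch assumes that graduation corresponds to four transitions (P applied four times), which is what the answers to (d) through (f) imply. The helper names `paths` and `step_matrix` are illustrative.

```python
from itertools import product

import numpy as np

STATES = "ABCDF"
# Grade transition matrix from the example (rows: this year's grade,
# columns: next year's grade), state order A, B, C, D, F.
P = np.array([
    [0.50, 0.50, 0.00, 0.00, 0.00],
    [0.25, 0.50, 0.25, 0.00, 0.00],
    [0.00, 0.25, 0.50, 0.25, 0.00],
    [0.00, 0.00, 0.25, 0.50, 0.25],
    [0.00, 0.00, 0.00, 0.50, 0.50],
])
A, B, C, D, F = range(5)


def paths(start, end, n):
    """Yield (path string, probability) for every n-step path start -> end
    with nonzero probability; the probabilities sum to (P^n)[start, end]."""
    for middle in product(range(len(P)), repeat=n - 1):
        states = (start, *middle, end)
        prob = 1.0
        for a, b in zip(states, states[1:]):
            prob *= P[a, b]
        if prob > 0:
            yield "".join(STATES[s] for s in states), float(prob)


def step_matrix(n):
    """n-step transition matrix P^n."""
    return np.linalg.matrix_power(P, n)


# Part (c) by path enumeration, as in the hand solution
c_paths = list(paths(A, B, 3))
c_total = sum(prob for _, prob in c_paths)

# Graduation assumed to be 4 transitions (consistent with answers d-f)
answers = {
    "a": float(P[A, B]),
    "b": float(step_matrix(2)[A, B]),
    "c": float(step_matrix(3)[A, B]),
    "d": float(step_matrix(4)[A, A]),
    "e": float(step_matrix(4)[B, C]),
}
graduates = np.array([0.1, 0.4, 0.3, 0.2, 0.0]) @ step_matrix(4)

print(c_paths, c_total)   # five paths summing to 0.46875
print(answers)
print(graduates.round(3))
```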

o Problems: 4.2, 4.3, 4.7, 4.8


Classification of states:

o Accessible (i → j): $P^{n}_{ij} > 0$ for some $n \ge 0$.

o Communicate (i ↔ j): i → j and j → i. Every state communicates with itself (i ↔ i); i ↔ j implies j ↔ i; and if i ↔ k and j ↔ k, then i ↔ j.

o Class: States that communicate are said to be in the same class. Any two classes are either identical or disjoint (no overlap).

o Irreducible Markov chain: All states communicate with each other (a single class; if the chain is finite, it has no transient states).

o Absorbing state: $P_{ii} = 1$.

o Recurrent state: A state to which the process, once started there, returns with probability 1. It is therefore revisited infinitely often.

o Transient state: Each time the process leaves the state, there is a positive probability that it will never reenter it. As a result, the process reenters a transient state only a finite number of times.

o Properties of recurrent states:

A finite-state Markov chain must have at least one recurrent state.

Any state that communicates with a recurrent state must also be recurrent.

All states in an irreducible finite-state Markov chain are recurrent.

o Problems: 4.14
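For a finite chain, these definitions can be turned into a small classification routine. Accessibility is the transitive closure of the one-step transition graph, and classes are groups of mutually accessible states. A class is recurrent exactly when it is closed, meaning no state outside it is accessible from inside it. This sketch relies on that finite-chain fact. The function names and the 4-state matrix are hypothetical.

```python
import numpy as np


def reachability(P):
    """R[i, j] is True when j is accessible from i (P^n_ij > 0 for some n >= 0)."""
    P = np.asarray(P, dtype=float)
    R = np.eye(len(P), dtype=bool) | (P > 0)   # n = 0 and n = 1
    # Warshall's transitive closure: allow paths that pass through state k
    for k in range(len(P)):
        R = R | (R[:, [k]] & R[[k], :])
    return R


def classify(P):
    """List each communicating class with its type for a FINITE chain.

    A class is recurrent when it is closed (no state outside it is
    accessible from it) and transient otherwise.
    """
    R = reachability(P)
    communicate = R & R.T          # i <-> j
    n = len(R)
    seen, result = set(), []
    for i in range(n):
        if i in seen:
            continue
        cls = [j for j in range(n) if communicate[i, j]]
        seen.update(cls)
        closed = not any(R[a, b] for a in cls for b in range(n) if b not in cls)
        result.append((cls, "recurrent" if closed else "transient"))
    return result


# Hypothetical 4-state chain: {0, 1} closed, state 2 leaks out, state 3 absorbing
P = [[0.50, 0.50, 0.00, 0.00],
     [0.50, 0.50, 0.00, 0.00],
     [0.25, 0.25, 0.25, 0.25],
     [0.00, 0.00, 0.00, 1.00]]
print(classify(P))
# [([0, 1], 'recurrent'), ([2], 'transient'), ([3], 'recurrent')]
```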

Limiting probability (long-run proportion, stationary probability)

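o Periodic state: State i is periodic with period d > 1 if the probability of returning to i in n steps, $P^{n}_{ii}$, is 0 unless n is an integer multiple of d, and d is the largest integer with this property.

o An aperiodic state has period 1: the possible return times have no common divisor greater than 1. For example, a state that can return in 2 or in 3 steps is aperiodic even if it cannot return in exactly 1 step.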

o Positive recurrent state: If the expected time for a recurrent state to return to itself is finite, then it is a positive recurrent state.

o Positive recurrence is a class property.

o In Markov chains with a finite number of states, all recurrent states are positive recurrent.

o Ergodic states: Positive recurrent, aperiodic states

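o The limiting probabilities of an ergodic Markov chain (irreducible, with positive recurrent, aperiodic states) can be computed with the following simple procedure:

$$\lim_{n\to\infty} P^{n}_{ij} = \pi_j \quad \text{(independent of } i\text{)},$$

so every row of $\lim_{n\to\infty} P^n$ equals $\pi = (\pi_0, \dots, \pi_N)$. Here π is the unique nonnegative solution of

$$\pi_j = \sum_{i=0}^{N} \pi_i P_{ij}, \quad j = 0, \dots, N, \qquad \sum_{j=0}^{N} \pi_j = 1, \qquad \pi_j \ge 0.$$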

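o Consider a (3 × 3) Markov chain with transition matrix

$$P = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}$$

and limiting probabilities $\pi_1, \pi_2, \pi_3$, so that

$$\lim_{n\to\infty} P^{n} = \begin{bmatrix} \pi_1 & \pi_2 & \pi_3 \\ \pi_1 & \pi_2 & \pi_3 \\ \pi_1 & \pi_2 & \pi_3 \end{bmatrix}.$$

Because $P^{n+1} = P^{n} P$, taking the limit of both sides gives

$$\begin{bmatrix} \pi_1 & \pi_2 & \pi_3 \\ \pi_1 & \pi_2 & \pi_3 \\ \pi_1 & \pi_2 & \pi_3 \end{bmatrix} = \begin{bmatrix} \pi_1 & \pi_2 & \pi_3 \\ \pi_1 & \pi_2 & \pi_3 \\ \pi_1 & \pi_2 & \pi_3 \end{bmatrix} \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}.$$

Every row yields the same three equations:

$$\begin{aligned} \pi_1 &= a_{11}\pi_1 + a_{21}\pi_2 + a_{31}\pi_3 \\ \pi_2 &= a_{12}\pi_1 + a_{22}\pi_2 + a_{32}\pi_3 \\ \pi_3 &= a_{13}\pi_1 + a_{23}\pi_2 + a_{33}\pi_3 \end{aligned}$$

which rearrange to

$$\begin{aligned} (a_{11} - 1)\pi_1 + a_{21}\pi_2 + a_{31}\pi_3 &= 0 \\ a_{12}\pi_1 + (a_{22} - 1)\pi_2 + a_{32}\pi_3 &= 0 \\ a_{13}\pi_1 + a_{23}\pi_2 + (a_{33} - 1)\pi_3 &= 0 \end{aligned}$$

In matrix form:

$$\begin{bmatrix} a_{11} - 1 & a_{21} & a_{31} \\ a_{12} & a_{22} - 1 & a_{32} \\ a_{13} & a_{23} & a_{33} - 1 \end{bmatrix} \begin{bmatrix} \pi_1 \\ \pi_2 \\ \pi_3 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}$$

and

$$\pi_1 + \pi_2 + \pi_3 = 1.$$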

o This is a set of linear equations that can be solved with different techniques. The three matrix equations are not linearly independent: because each row of P sums to 1, adding them gives 0 = 0. As a result, we can obtain the limiting probabilities by solving any two of the matrix equations together with the normalization equation ($\pi_1 + \pi_2 + \pi_3 = 1$).

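o Solution techniques:

Numerical techniques

Analytical techniques

Symmetry

Special technique:

1. Let $\pi_1 = 1$ (or any other convenient value).

2. Substitute $\pi_1$ into two of the matrix equations and solve for $\pi_2$ and $\pi_3$.

3. Normalize the results:

$$\pi_{1,\text{final}} = \frac{\pi_1}{\pi_1 + \pi_2 + \pi_3}, \qquad \pi_{2,\text{final}} = \frac{\pi_2}{\pi_1 + \pi_2 + \pi_3}, \qquad \pi_{3,\text{final}} = \frac{\pi_3}{\pi_1 + \pi_2 + \pi_3}$$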

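o In general, the limiting probabilities of an N × N Markov chain can be obtained from the following set of equations:

$$\pi_1 + \pi_2 + \cdots + \pi_N = 1$$

$$\begin{bmatrix} a_{11} - 1 & a_{21} & \cdots & a_{N1} \\ a_{12} & a_{22} - 1 & \cdots & a_{N2} \\ \vdots & \vdots & \ddots & \vdots \\ a_{1N} & a_{2N} & \cdots & a_{NN} - 1 \end{bmatrix} \begin{bmatrix} \pi_1 \\ \pi_2 \\ \vdots \\ \pi_N \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ \vdots \\ 0 \end{bmatrix}$$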

o Again, because the matrix equations are linearly dependent, you need to drop one of them (any one, your choice) and use the normalization equation in its place.

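o With modern computing equipment, perhaps the easiest way to calculate the limiting probabilities is to compute $P^n$ directly: keep raising the power of P until convergence is observed (every row then equals π). This works only for ergodic chains. For a periodic chain, $P^n$ oscillates and does not converge.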

o Problems: 4.18, 4.19, 4.21, 4.23, 4.29, 4.33, 4.35

o For general Markov chains use the Cayley-Hamilton theorem (EEE-241).
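Both numerical routes for the limiting probabilities can be sketched in NumPy. The first solves the linear system with one matrix equation replaced by the normalization equation. The second raises P to higher powers until the rows agree. The sketch assumes an ergodic finite chain. It raises the power by repeated squaring (P, P^2, P^4, ...) rather than one step at a time; this is a design choice for speed, not something the notes prescribe. Function names are hypothetical.

```python
import numpy as np


def limiting_probabilities(P):
    """Solve pi_j = sum_i pi_i P_ij together with sum_j pi_j = 1.

    The system (P^T - I) pi = 0 contains one redundant equation, so the
    last one is replaced by the normalization equation.
    Assumes an ergodic (irreducible, aperiodic) finite chain.
    """
    P = np.asarray(P, dtype=float)
    n = len(P)
    M = P.T - np.eye(n)      # row j: (a_1j, ..., a_jj - 1, ..., a_Nj)
    M[-1, :] = 1.0           # replace one equation with pi_1 + ... + pi_N = 1
    rhs = np.zeros(n)
    rhs[-1] = 1.0
    return np.linalg.solve(M, rhs)


def limiting_by_powers(P, tol=1e-10, max_squarings=60):
    """Raise P to higher powers by repeated squaring until all rows agree."""
    Q = np.asarray(P, dtype=float)
    for _ in range(max_squarings):
        if np.allclose(Q, Q[0], rtol=0.0, atol=tol):
            return Q[0]
        Q = Q @ Q
    raise RuntimeError("P^n did not converge; the chain may be periodic or reducible")


# Grade-change matrix from the example earlier in this chapter
P = [[0.50, 0.50, 0.00, 0.00, 0.00],
     [0.25, 0.50, 0.25, 0.00, 0.00],
     [0.00, 0.25, 0.50, 0.25, 0.00],
     [0.00, 0.00, 0.25, 0.50, 0.25],
     [0.00, 0.00, 0.00, 0.50, 0.50]]
print(limiting_probabilities(P))  # approximately [0.125, 0.25, 0.25, 0.25, 0.125]
print(limiting_by_powers(P))
```

For a periodic chain such as `[[0, 1], [1, 0]]`, the rows of the powers never agree, so `limiting_by_powers` raises an error instead of returning a misleading answer.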

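Mean time spent in transient states (Ex. 4.26 and 4.27, p. 227):

For transient states i and j:

$s_{ij}$ = expected number of time periods spent in state j, given that the process starts in state i.

$f_{ij}$ = the probability that the process ever makes a transition into state j, given that it starts in state i.

$P_T$ = the transition matrix restricted to the transient states.

$$S = [s_{ij}] = (I - P_T)^{-1}$$

$$f_{ij} = \frac{s_{ij} - \delta_{ij}}{s_{jj}}, \qquad \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \ne j \end{cases}$$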
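The formulas $S = (I - P_T)^{-1}$ and $f_{ij} = (s_{ij} - \delta_{ij})/s_{jj}$ translate directly into NumPy. The gambler's-ruin matrix below (win probability 0.4, absorbing at 0 and 7) is an illustrative assumption, not data from the notes. `transient_times` is a hypothetical name.

```python
import numpy as np


def transient_times(PT):
    """Return (S, F) for the transient states.

    S[i, j] = s_ij, expected number of periods spent in transient state j
              starting from transient state i, S = (I - P_T)^(-1).
    F[i, j] = f_ij = (s_ij - delta_ij) / s_jj, probability of ever
              entering j starting from i.
    """
    PT = np.asarray(PT, dtype=float)
    I = np.eye(len(PT))
    S = np.linalg.inv(I - PT)
    F = (S - I) / np.diag(S)   # broadcasting divides column j by s_jj
    return S, F


# Hypothetical example: gambler's ruin with p = 0.4 and absorbing states 0 and 7,
# so the transient states are fortunes 1, ..., 6 (matrix indices 0, ..., 5).
p, n = 0.4, 6
PT = np.zeros((n, n))
for k in range(n):
    if k + 1 < n:
        PT[k, k + 1] = p        # win one unit
    if k - 1 >= 0:
        PT[k, k - 1] = 1 - p    # lose one unit
S, F = transient_times(PT)
# Starting with 3 units: expected periods spent with 5 units and with 2 units
print(S[2, 4], S[2, 1])
```

A useful sanity check is the identity $S = I + P_T S$, which follows from conditioning on the first transition.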

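Branching process:

o Mean number of offspring per individual:

$$\mu = \sum_{j=0}^{\infty} j P_j, \qquad P_j = P\{j \text{ new offspring}\}$$

o $P\{\text{population dies out}\} = \pi_0$:

$\pi_0 = 1$ for $\mu \le 1$ (excluding the trivial case $P_1 = 1$).

For $\mu > 1$, $\pi_0$ = smallest nonnegative root of the equation

$$\pi_0 = \sum_{j=0}^{\infty} \pi_0^{\,j} P_j$$

o Examples:

$P_0 = P_1 = P_2 = 1/3$, $P_j = 0$ for $j > 2$: $\mu = 0(1/3) + 1(1/3) + 2(1/3) = 1 \Rightarrow \pi_0 = 1$

$P_0 = 1/2$, $P_1 = 1/4$, $P_2 = 1/4$, $P_j = 0$ for $j > 2$: $\mu = 0(1/2) + 1(1/4) + 2(1/4) = 3/4 \Rightarrow \pi_0 = 1$

$P_0 = 0.1$, $P_1 = 0.5$, $P_2 = 0.4$, $P_j = 0$ for $j > 2$: $\mu = 0(0.1) + 1(0.5) + 2(0.4) = 1.3 > 1$ (O.K.)

$$\pi_0 = 0.1 + 0.5\pi_0 + 0.4\pi_0^2 \;\Rightarrow\; 4\pi_0^2 - 5\pi_0 + 1 = 0 \;\Rightarrow\; \pi_0 = 0.25 \text{ or } 1 \;\Rightarrow\; \pi_0 = 0.25$$

(a 25% chance that the population dies out).

If $P_0 = 0$, then the die-out probability is always 0 (why?):

$\mu > 1$ (why?).

One of the roots is always 0 (why?), which makes it the smallest nonnegative root; thus $\pi_0 = 0$.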

o Problem: 4.66
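The extinction probability can also be found numerically. This sketch swaps the analytic root-finding used above for fixed-point iteration. Starting from x = 0, repeatedly applying $x \leftarrow \sum_j x^j P_j$ climbs monotonically to the smallest nonnegative root. The offspring list `p` (with `p[j]` = $P_j$ and finitely many nonzero terms) and the function names are assumptions for illustration. The case $P_1 = 1$ is excluded, as in the notes above.

```python
def mean_offspring(p):
    """mu = sum_j j * P_j, where p[j] = P{j new offspring}."""
    return sum(j * pj for j, pj in enumerate(p))


def extinction_probability(p, tol=1e-12, max_iter=100_000):
    """Smallest nonnegative root of pi0 = sum_j pi0**j * P_j (assumes P_1 < 1).

    Iterating x <- sum_j x**j * P_j from x = 0 increases monotonically to the
    smallest nonnegative fixed point. For mu <= 1 the answer is 1, which is
    returned directly because the iteration is very slow when mu = 1.
    """
    if mean_offspring(p) <= 1 + 1e-12:   # small tolerance for rounding in mu
        return 1.0
    x = 0.0
    for _ in range(max_iter):
        x_new = sum(pj * x**j for j, pj in enumerate(p))
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    return x


print(extinction_probability([1/3, 1/3, 1/3]))    # 1.0 (mu = 1)
print(extinction_probability([0.5, 0.25, 0.25]))  # 1.0 (mu = 3/4)
print(extinction_probability([0.1, 0.5, 0.4]))    # approximately 0.25
print(extinction_probability([0.0, 0.5, 0.5]))    # 0.0 (P_0 = 0)
```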

Time reversible Markov chain:

o Ergodic process: ensemble average = time average.

o The reversed process is also a Markov chain, with transition probabilities

$$Q_{ij} = \frac{\pi_j}{\pi_i} P_{ji}$$

o If $Q_{ij} = P_{ij}$ for all i, j (equivalently, $\pi_i P_{ij} = \pi_j P_{ji}$), then the Markov chain is called time reversible.

o Problem: 4.73

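$$P = \begin{bmatrix} 0 & 0.5 & 0.5 \\ 0.25 & 0.5 & 0.25 \\ 0.25 & 0.25 & 0.5 \end{bmatrix}$$

$\pi_0 = 0.2$, $\pi_1 = \pi_2 = 0.4$

$\pi_0 P_{01} = 0.1$, $\pi_1 P_{10} = 0.1$

$\pi_1 P_{12} = 0.1$, $\pi_2 P_{21} = 0.1$

$\pi_2 P_{20} = 0.1$, $\pi_0 P_{02} = 0.1$

so $\pi_i P_{ij} = \pi_j P_{ji}$ for every pair of states, and the chain is time reversible.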
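Time reversibility can be tested numerically by building the reversed chain $Q_{ij} = (\pi_j/\pi_i) P_{ji}$ and comparing it with P. The sketch below uses the matrix and limiting probabilities from the Problem 4.73 example. The function names are hypothetical, and all $\pi_i$ are assumed positive.

```python
import numpy as np


def reversed_chain(P, pi):
    """Transition matrix of the reversed process, Q_ij = (pi_j / pi_i) P_ji."""
    P = np.asarray(P, dtype=float)
    pi = np.asarray(pi, dtype=float)
    return P.T * pi[np.newaxis, :] / pi[:, np.newaxis]


def is_time_reversible(P, pi, atol=1e-12):
    """True when Q = P, i.e. pi_i P_ij = pi_j P_ji for every pair i, j."""
    return bool(np.allclose(reversed_chain(P, pi), P, atol=atol))


# Matrix and limiting probabilities from the Problem 4.73 example
P = [[0.00, 0.50, 0.50],
     [0.25, 0.50, 0.25],
     [0.25, 0.25, 0.50]]
pi = [0.2, 0.4, 0.4]
print(is_time_reversible(P, pi))  # True

# A deterministic 3-cycle is not time reversible (its reverse runs backwards)
cycle = [[0, 1, 0], [0, 0, 1], [1, 0, 0]]
print(is_time_reversible(cycle, [1/3, 1/3, 1/3]))  # False
```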