Data supplement 11

advertisement

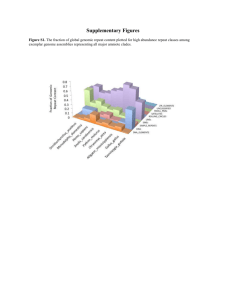

Supplementary Material

Simple Linear Regression for a Quantitative Trait

Consider a quantitative trait locus with two alleles A and a having frequencies PA and Pa ,

respectively. Denote the genotypic values of the genotypes AA, Aa and aa by G11 , G12 and G22 ,

respectively. A genetic additive effect is given by (Appendix A)

PAG11 ( Pa PA )G12 PaG22 .

Let Yi be a phenotype value of the i-th individual. A simple linear regression model for a

quantitative trait is given by (Appendix A)

Yi X i i

where

2 Pa , AA

X i Pa - PA , Aa .

2 P , aa

A

(1)

i are independent and identically distributed normal variables with zero mean and variance e2 .

We add a constant Pa PA into X i . Then, the indicator variable will be transformed to

1 , AA

X i 0 , Aa

1 , aa

(2)

which is a widely used indicator variable in quantitative genetic analysis.

Consider a marker locus with two alleles M and m having frequencies PM and Pm ,

respectively. Let D be the disequilibrium coefficient of the linkage disequilibrium (LD) between

the marker and the quantitative trait loci. The relationship between the phenotypic value Yi and

the genotype at the marker locus is given by

Yi m X im m i ,

(3)

where indicator variable X i is given by

X

m

i

2 Pm , MM

Pm - PM , Mm.

2 P , mm

M

(4)

Assuming the genetic model for the quantitative trait in equation (1), it can be shown that

a. s.

ˆ m

D

,

PM Pm

which implies that the estimators ̂ m at the trait locus is ˆ m and hence is a consistent

estimator.

Assume that the marker is located at the genomic position t and the trait locus is at the

genomic position s. Let D(t , s ) be the disequilibrium coefficient between the marker and trait

loci. Let ˆ (t ) be the estimated genetic additive effect at the marker locus and (s ) be the true

additive effect at the trait locus. Let PM (t ) and Pm (t ) be the frequencies of the marker alleles M

and m at the genomic position t, respectively. Then, ˆ (t ) will be almost surely convergent to

a.s.

ˆ (t )

D ( s, t )

( s) .

PM (t ) Pm (t )

(5)

Suppose that there are K trait loci which are located at the genomic positions s1,, sK . The j-th

trait locus has the additive ( s j ) . Then, we have

a . s.

ˆ (t )

1

PM (t ) Pm (t )

K

D(t , s

j )( s j )

,

(6)

j 1

where D(t , s j ) is the disequilibrium coefficient between the marker at the genomic position t and

the trait locus at the genomic position s j .

Multiple Linear Regression for a Quantitative Trait

Consider the L marker loci which are located at the genomic positions t1 ,, t L . The

multiple linear regression model for a quantitative trait is given by

Yi m

L

X

il l

i

(7)

i 1

where indicator variables X il are similarly defined as that in equation (4). Let

PM l and Pml be the frequencies of the alleles M l and ml of the marker located at the genomic

positions tl , respectively, D (tl , t j ) be the disequilibrium coefficient of the LD between the

marker at the genomic position tl and the marker at the genomic position t j . Assume that there

are K trait loci s as defined before. Let D (t j , sk ) be the disequilibrium coefficient of the LD

between the marker at the genomic position t j and the trait at the genomic position sk . By a

similar argument as that in Appendix B, we have

a.s.

ˆ m PA1 ,

(8)

where ˆ m [ˆ1 ,, ˆ L ]T ,

2 PM 1 Pm1 D(t1 , t2 )

D (t , t ) 2 P P

2 1

M 2 m2

PA

D(t L , t1 ) D(t L , t2 )

K

K

j 1

j 1

D(t1 , t L )

D(t1 , t L )

2 PM L PmL

[2 D(t1 , s j )( s j ),,2 D(t L , s j )( s j )]T .

If we assume that all markers are in linkage equilibrium, then equation (8) is reduced to

ˆ (t )

1

PM t Pmt

k

D (t , s

j 1

j

)( s j ) ,

which is exactly the same as equation (6). In other words, under the assumption of linkage

equilibrium among markers, multiple linear regression can be decomposed into a number of

simple regressions.

Substituting the above equation into equation (7) and taking limit will lead to the function

linear model (10) that will be discussed in the next section.

B-Splines

B-splines are Let the domain [0,1] be subdivided into knot spans by a set of nondecreasing numbers 0 u 0 u1 u 2 u m 1 . The ui s are called knots. The i-th B-spline

basis function of degree p, written as Bi , p (t ) is defined recursively as follows (Ramsay and

Silverman 2005):

1 if u i t u i 1

Bi ,0 (t )

0 otherwise

Bi , p (t )

u i p 1 t

t ui

Bi , p 1 (t )

Bi 1, p 1 (t ) .

ui p ui

u i p 1 u i 1

B-Spline Basis functions have two important features:

(1) Basis function Bi , p (t ) is non-zero only on p 1 knot spans

[u i , u i 1 ), [u i 1 , u i 2 ), , [u i p , u i p 1 ).

(2) Given any knot span [ui , ui 1 ) , there are at most p 1 degree p basis functions that are nonzero, namely:

Bi p , p (t ), Bi p 1, p (t ), Bi p 2, p (t ), , Bi 1, p (t ) and Bi , p (t ).

Methods for basis function selection

Basis function selection is carried out by LASSO regression. Consider a sequence of SNPs:

t1 ,..., tk with t0 t1 ... tk tk 1 and t 3 t 2 t1 t0 , tk 1 tk 2 tk 3 tk 4 . Let Bk (u, t ) be a

cubic B-spline. Assume that we have the observed data

{( ui , d i )}in1 . A regression model is given by

di f (ui ) i , i 1,2,..., n .

(9)

We approximate f (t ) by a B-spline:

S (u )

k m

B ( u, t ) .

l 1

l

(10)

l

The regression spline estimate fˆ (u )

k m

ˆ B (u, t ) is obtained by minimizing

l

l

l 1

F ( )

k m

k m

1 n

[d i l Bl (ui , t )]2 | l | ,

2 i 1

l 1

l 1

(11)

where m 4 . Let d [d1 ,..., d n ]T , [1 ,..., k m ]T , and

B1 (u1 , t ) Bk m (u1 , t )

.

M

B (u , t ) B (u , t )

k m

n

1 n

Then, equation (11) can be reduced to

F ( )

k m

1

( d M)T ( d M) | l | .

2

l 1

A point * which is a minimum of function F () is to satisfy1

(12)

0 F (* ).

The optimization problem (12) can be rewritten as

k m

k m

k m

1

F () (d M l l M j j )T (d M l l M j j ) | l | .

2

l 1, j

l 1, j

l 1

(13)

Differentiating equation (13) and setting it to be zero, we have

M Tj rj M Tj M j j s j 0,

where rj d

k m

M

l 1, j

l

l

, M j is the j-th column vector of the matrix M,

sing ( j ) if j 0

sj

j 0.

[1,1]

Thus,

M Tj M j j M Tj rj s j , or

M Tj rj

j T

T

sj .

MjMj MjMj

Therefore,

M Tj rj

M Tj rj

ˆ j sign ( T

)(| T

| T

)

MjMj MjMj

MjMj

if x 0

x

( x)

0

otherwise

(14)

Algorithms:

Step 1: initialization

( 0) ( M T M ) 1 M T d .

Step 2: for v 1,2,...,

Step 3: for j 1,2,..., k m

rj d

ˆ

( v 1)

j

k m

M

l 1, j

l

(v)

l

,

M Tj rj

M Tj rj

sign ( T

)(| T

| T

)

MjMj MjMj

MjMj

End

If || (jv 1) ( v )j ||2 then

(jv )

(jv 1) , j 1,2,..., k m,

Go to step 2

Else

Stop (convergence)

Appendix A: A statistical model for genetic effects

The statistical model for three genotypic values can be expressed as

G11 21 e1

G12 1 2 e2

G22 2 2 e3

(A1)

where ei (i 1,2,3) are the respective deviation of the genotypic values from their expectations on

the basis of a perfect fit of the model. We then obtain estimators of , 1 and 2 by minimizing

Q p 2 (G11 2 1 ) 2 2 pq(G12 1 2 ) 2 q 2 (G11 2 2 ) 2 .

Setting

Q

Q

Q

0,

0 and

0 , and solving these equations,

u

1

2

we obtain

ˆ PA2G11 2 PA PaG12 Pa2G22

1 Pa [ PAG11 ( Pa PA )G12 PaG22 ]

2 PA [ PAG11 ( Pa PA )G12 Pa G22 ] .

The substitution effect is defined as

1 2 PAG11 ( Pa PA )G12 PaG22 ,

which is often termed as a genetic additive effect.

Appendix B: The convergence of least square estimator of the regression coefficients

Let Y [Y1 ,, Yn ]T , X m [ X 1m ,, X nm ]T , , [ m , m ]T , [1 , 2 , n ]T , W [1, X m ]T ,

1 [1,1,,1]T . Then equation (3) can be written in a matrix form:

Y W

(B1)

The least square estimator of the regression coefficients is given by

ˆ (W TW ) 1W T Y

(B2)

It follows from equation (4) that

E[ X 1m ] 2 Pm PM2 2( Pm PM )( Pm PM ) 2 PM Pm2

2 Pm PM ( PM Pm PM Pm ) 0,

E[( X 1m ) 2 ] (2 Pm ) 2 PM2 2( Pm PM ) 2 ( Pm PM ) ( 2 PM ) 2 Pm2

2 Pm PM .

Since

1

1 T

W W

n

1 X im

n i

1

X im

n i

,

1

m 2

(

X

)

i

n i

by large number theory, we have

0

1

1 T

W W

.

a. s.

n

0 2 Pm PM

It follows from equations (3) and (4) that

E[Y1 ]

(B3)

2

E[ X 1m X 1 ] 2 Pm [( 2 Pa ) PMA

2( Pa PA ) PMA PMa 2 PA ( PMa ) 2 ]

( Pm PM )[( 2 Pa )2 PMA PmA 2( Pa PA )( PMA Pma PMa PmA ) 2(2 PA ) PMa Pma ]

2

2

(2 PM )[( 2 Pa ) PmA

2( Pa PA ) PmA Pma (2 PA ) Pma

]

4 Pm PM D 2( Pm PM ) 2 D 4 Pm PM D

2D

By the large number theory,

1

Yi

1 T

n i

a.s

W Y

D .

n

1 X imYi

n i

(B4)

Combining equations (B3) and (B4), we obtain

a. s.

m

D

.

PM Pm

(B5)

When the marker is at the trait locus, we have

a .s .

m .

References

1. Friedman J, Hastie T, Höfling, H and Tibshirani R. Pathwise coordinate optimization.

Ann. Appl. Stat. 2007; 1: 302-332.