A. linear composite kernels (initial HS

advertisement

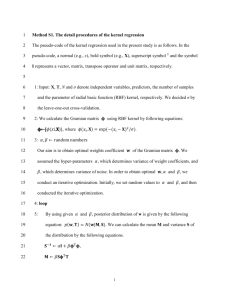

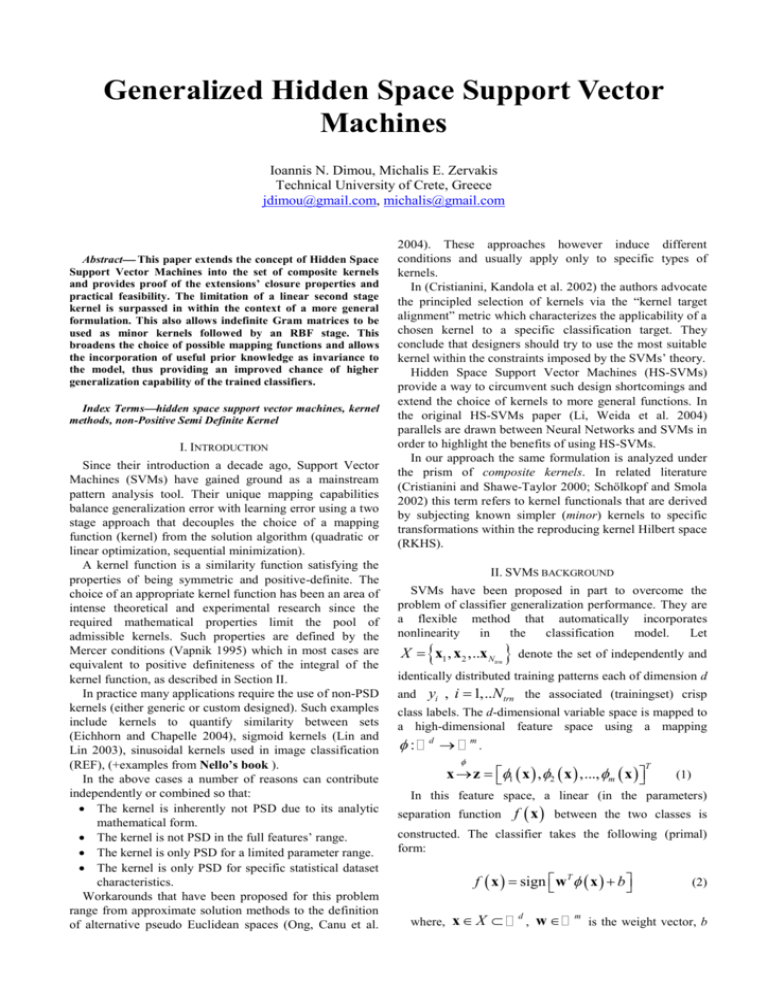

Generalized Hidden Space Support Vector Machines Ioannis N. Dimou, Michalis E. Zervakis Technical University of Crete, Greece jdimou@gmail.com, michalis@gmail.com Abstract This paper extends the concept of Hidden Space Support Vector Machines into the set of composite kernels and provides proof of the extensions’ closure properties and practical feasibility. The limitation of a linear second stage kernel is surpassed in within the context of a more general formulation. This also allows indefinite Gram matrices to be used as minor kernels followed by an RBF stage. This broadens the choice of possible mapping functions and allows the incorporation of useful prior knowledge as invariance to the model, thus providing an improved chance of higher generalization capability of the trained classifiers. Index Termshidden space support vector machines, kernel methods, non-Positive Semi Definite Kernel I. INTRODUCTION Since their introduction a decade ago, Support Vector Machines (SVMs) have gained ground as a mainstream pattern analysis tool. Their unique mapping capabilities balance generalization error with learning error using a two stage approach that decouples the choice of a mapping function (kernel) from the solution algorithm (quadratic or linear optimization, sequential minimization). A kernel function is a similarity function satisfying the properties of being symmetric and positive-definite. The choice of an appropriate kernel function has been an area of intense theoretical and experimental research since the required mathematical properties limit the pool of admissible kernels. Such properties are defined by the Mercer conditions (Vapnik 1995) which in most cases are equivalent to positive definiteness of the integral of the kernel function, as described in Section II. In practice many applications require the use of non-PSD kernels (either generic or custom designed). Such examples include kernels to quantify similarity between sets (Eichhorn and Chapelle 2004), sigmoid kernels (Lin and Lin 2003), sinusoidal kernels used in image classification (REF), (+examples from Nello’s book ). In the above cases a number of reasons can contribute independently or combined so that: The kernel is inherently not PSD due to its analytic mathematical form. The kernel is not PSD in the full features’ range. The kernel is only PSD for a limited parameter range. The kernel is only PSD for specific statistical dataset characteristics. Workarounds that have been proposed for this problem range from approximate solution methods to the definition of alternative pseudo Euclidean spaces (Ong, Canu et al. 2004). These approaches however induce different conditions and usually apply only to specific types of kernels. In (Cristianini, Kandola et al. 2002) the authors advocate the principled selection of kernels via the “kernel target alignment” metric which characterizes the applicability of a chosen kernel to a specific classification target. They conclude that designers should try to use the most suitable kernel within the constraints imposed by the SVMs’ theory. Hidden Space Support Vector Machines (HS-SVMs) provide a way to circumvent such design shortcomings and extend the choice of kernels to more general functions. In the original HS-SVMs paper (Li, Weida et al. 2004) parallels are drawn between Neural Networks and SVMs in order to highlight the benefits of using HS-SVMs. In our approach the same formulation is analyzed under the prism of composite kernels. In related literature (Cristianini and Shawe-Taylor 2000; Schölkopf and Smola 2002) this term refers to kernel functionals that are derived by subjecting known simpler (minor) kernels to specific transformations within the reproducing kernel Hilbert space (RKHS). II. SVMS BACKGROUND SVMs have been proposed in part to overcome the problem of classifier generalization performance. They are a flexible method that automatically incorporates nonlinearity in the classification model. Let X x1 , x2 ,..x Ntrn denote the set of independently and identically distributed training patterns each of dimension d and yi , i 1,..Ntrn the associated (trainingset) crisp class labels. The d-dimensional variable space is mapped to a high-dimensional feature space using a mapping : d m . x z 1 x , 2 x ,..., m x T (1) In this feature space, a linear (in the parameters) separation function f x between the two classes is constructed. The classifier takes the following (primal) form: f x sign w T x b where, x X d , w m (2) is the weight vector, b the bias term, and f x the crisp prediction of the model after applying a sign() function to the soft output. Under the standard SVMs formulation (Cristianini and Shawe-Taylor 2000; Schölkopf and Smola 2002) in the primal space the objective function associated with the problem’s solution is defined as Ntrn 1 T min w w C i w ,b ,ξ 2 i 1 (3) subject to the constraints yi w T xi b 1 i i 0, (4) i 1...N trn where C is the regularization parameter, and i are the slack variables that allow for misclassification of some samples. This model formulation attempts to find a balance between maximization of the margin separating the two classes, which corresponds to regularization, (term 1) and minimization of misclassifications (term 2). By solving the corresponding Lagrangian, the problem can also be formulated in the dual space as the equivalent classifier k x, xi exp x xi 2 2 2 (9) where the parameter denotes the kernel width. The choice of kernel affects how the linear separation hyperplane in the feature space relates to the nonlinear separation hyperplane in the original variables’ space. Regardless of the kernel function used, the solution approach leads to a quadratic optimization problem. In order for a Gram matrix to be an admissible SVM kernel is has to satisfy 2 distinct properties: Symmetry Positive (semi)definiteness The later in the discrete case amounts to: f x f x K x , x 0 i xi X x j X j i j (10) and in the continuous case to: f x f x K x , x dx dx i j i j i j 0 (11) X X These conditions pose a problem and greatly limit the choice of kernel functions. III. INDEFINITE KERNELS Ntrn T f x sign i yi x xi b i 1 Where i (5) are weight parameters called support values of the training cases and Since the mappings yi the corresponding crisp labels. x can be complex or unspecified by design, it is more convenient to implicitly work in the feature space by defining a positive definite a kernel function k x, x i x x i T (6) that allows us to formulate appropriate hidden spaces which ensure better separability. The corresponding matrix K ij k xi , x j , usually referred to as the Gram matrix, can be calculated and stored once for the trainingset and once for the testset and used repetitively in (5). Apart from the trivial linear kernel k x, xi xT xi other commonly polynomial kernel used kernel (7) functions k x, xi xT xi 1 , p are the p and Gaussian radial basis function kernel (8) The fact that Mercer conditions and PSD requirement significantly limit the pool of available functions has been recognized early on in the development of SVM algorithms. This has resulted in the widespread use of very few admissible kernels, the most prominent of which is the RBF kernel. Despite its optimality and theoretically infinite mapping capability (VC confidence), this generic kernel suffers from its own design. The bandwidth parameter is not easy to optimize and can result in suboptimal performance. This has been referred to as the “no free lunch” theorem attributed to (Cristianini and Shawe-Taylor 2000). The analogy between statistical density estimation kernels and SVMs has triggered the idea of sharing kernel functions between the two domains (REF). In practice this leads to analytical forms that are not guaranteed to be PSD. Additionally many authors have proposed numerous kernels that attempt to incorporate domain specific invariances in the classification model. Typical examples of such kernels found in literature include: The sigmoid (hyperbolic tangent) kernel (Lin and Lin 2003) has been evaluated in the past due to its correspondence to neural networks’ sigmoid activation function. k (xi , x j ) tanh ai xiT x j b (12) It is in general non-PSD and recent experimental work has shown the sigmoid kernel to be asymptotically identical to the RBF kernel (REF). The Epanenchikov kernel (Li and Racine 2007). k xi , x j 3 1 xi x j 4 2 (13) This kernel’s analytical form does not lend itself to a proof of positive definiteness. One metric that can be used to measure the perfomance of kernels is MISE (mean integrated squared error) or AMISE (asymptotic MISE). The Epanechnikov kernel minimizes AMISE and is therefore optimal. Other kernel efficiencies are measured in comparison to Epanechnikov kernel. The negative distance kernel (REF) has been proposed in an effort to incorporate translation invariance to the SVM model. k ND xi , x j xi x j (14) Mahalanobis distance kernels have been used in problems where matching probability distributions is required, as they characterize the shape of the data. k Mah xi , x j xi xi Cij 1 x j x j T (15) Cij is the convolution matrix of the two vectors indexed by i and j. Additionally the need for indefinite kernels occurs in many distance based metrics and when the data structure corresponds to non-Euclidean spaces (i.e. kernels on sets, kernels on trees). In fact most dissimilarity based kernels of the form k xi , x j f xi x j HS-SVMs are derived by utilizing a special kind of mapping to the hidden space, a symmetric function ' xi k1 xi , x k1 x, xi k1 x z k1 x1 , x , k1 x 2 , x ,..., k1 x N , x (17) Using this function the corresponding hidden space can be expressed as T m N dimensional Z z z k1 x1 , x , k1 x2 , x ,.., k1 x N , x , x X (18) We can proceed in a way analogous to the standard SVMs’ formulation and define the decision function in the primal space y x sign wT z x b (19) and the corresponding function in the dual space N y x sign i yi z x z xi b i 1 N sign i yi k2 xi , x b i 1 (16) (20) are not positive definite. where the kernel function used by HS-SVMs IV. WORKAROUNDS TO USING INDEFINITE KERNELS To overcome the problem of using indefinite kernel formulations researchers have proposed approximations of indefinite kernels (REF), use of pseudo Euclidean spaces (pE) and limiting the kernel function to an area that is guaranteed to be PSD. In the general case it has been proven that the exponentation operation on arbitrary feature matrixes results is admissible kernel matrixes (Kondor R and J 2002). Other solutions include the empirical kernel mapping (EKM) which takes S¨S as a new kernel matrix (Scholköpf, Weston et al. 2002), and the Saigo kernel which is constructed by substracting the smallest negative eigenvalues from the original non-PSD kernel’s diagonal (Saigo, Vert et al. 2004). Still the use of non-PSD kernels can be applied, with the acknowledged limitation that there is a high risk of converging to a local minima of the error function surface. This however negates one of the core advantages of SVMs over neural networks. According to Haasdonk (REF) such kernels result to svms which cannot be seen as margin maximizers. V. EXTENDING HS-SVMS Having outlined the baseline of nonlinear soft-margin SVMs, and indefinite kernel problems we can now establish the HS-SVMs formulation and the proposed extensions. As introduced by Li in (Li, Weida et al. 2004), Ntrn k2 xi , x j k1 x n , xi k1 x n , x j n 1 (21) k1 x, xi k 1 x, x j T is an inner product of the minor kernels and hence positive semi-definite, therefore qualifying as a valid RKHS kernel. In this work we further extend the above formulation and redefine the HS-SVMs’ kernel as a more general functional in the form of k2 ( xi , x j ) k2 k1 x, xi , k1 x, x j (22) This way we have two consecutive kernels are shown in Figure 1. Depending on the choice of k1 and k2 , different mapping spaces can be introduced to handle specific classification problems. For demonstration purposes we will analyze some basic combinations of known SVM kernels and justify their properties. A. k2 linear composite kernels (initial HS-SVMs) If we opt to use a linear second stage kernel resulting HS-SVM kernel becomes: k2 , then the k(x,xi) = φ(xi) xi y(xi) φ(x)φ(xi) φ(xi) xi φ(xi) xi k1(x,xi) = k2(x,xi) = φ(x)φ(xi) k1(x,xi)k1(x,xi) y(xi) k1(x,xi) = k2(x,xi) = φ(x)φ(xi) k2[k1(x,x’) k1(x’,xi)] y(xi) Figure 1 Hidden space mappings for SVMs (top), HS-SVMs (middle) and generalized HSSVMs (bottom) (25) k x i , x j k 2 k 1 x, x i , k 1 x , x j k1 x, xi k 1 x, x j T (23) = ' xi ' x j T k1 polynomial - k2 linear: k x i , x j k 2 k 1 x , x i , k 1 x, x j k1 x, xi k 1 x, x j T This model reduces to the original HS-SVMs proposed by Li. In fact this assertion holds irrespective of the choice of k1 . Some earlier implementations of this family of models include: k1 linear- k2 linear: k x i , x j k 2 k 1 x, x i , k 1 x, x j k 1 x, x i k 1 x , x j = xT xi 1 p x x 1 , p p T j It has been shown that this class of composite kernels achieves a more sparse representation of the problem space, in the sense that in a training phase it results in a lower number of support vectors. T = x, x i (24) x, x j B. k2 polynomial kernels: Even with traditional SVMs linear class separability is seldom achievable. An alternative that balances computational requirements with mapping power is in the k1 RFB - k2 linear: k xi , x j k2 k 1 x, xi , k 1 x, x j k1 x, xi k 1 x, x j T x x 2 x xi 2 j = exp exp 2 2 2 2 form of polynomial k2 kernels: k xi , x j k2 k1 x, xi , k1 x, x j k 1 x, x i k 1 x , x j 1 , p T p (26) Comparative results on the performance of this similarity mapper are given in Section 4. C. k Mah rbf xi , x j k2 k 1 x, xi , k 1 x, x j k2 RBF kernels: In order to obtain maximum flexibility of the decision boundaries the prominent model is a parametrized RBF (Gaussian) kernel. This scenario leads to the following formulation: k xi , x j k2 k1 x, xi , k1 x, x j T T 1 x x C x x x x Cij 1 x j x j i i ij j j i i = exp 2 2 k x, x k x, x 1 i 1 j exp 2 2 2 (27) In order to be able to utilize such complex kernels we first have to prove their admissibility as RKHS mappings. In general the sufficient conditions for a function k xi , x j to correspond to a dot product in the feature Where is the function’s spread/bandwidth parameter. An additional benefit of using generalized HS-SVMs lies in the ability to wrap and utilize indefinite minor kernels. To this end we provide the derivations for the sigmoid, Epanenchikov, negative distance and Mahalanobis kernel functions. We implemented only the rbf as a second stage kernel since in practice it the most usually used functional. D. sigmoid-RBF composite kernel: The ksig rbf xi , x j k2 k1 x, xi , k 1 x, x j E. Epanenchikov-RBF composite kernel: The k Ep rbf xi , x j k2 k1 x, xi , k1 x, x j 3 1 xi x 4 = exp 2 k ND rbf xi , x j k2 k1 x, xi , k1 x, x j xi x x x j = exp 2 2 2 G. Mahalanobis-RBF composite kernel: The i 2 j i j i j 0 (28) X X where f x L2 X . However direct evaluation of the above expression is often infeasible. In the context of this work, proofs of the admissibility of the above functionals as SVM kernels are given in the appendix. The proofs are largely straightforward and rely on the kernel properties defined in (Cristianini and ShaweTaylor 2000). From a broader perspective certain SVM kernel functions have intuitive meaning. RBF kernels are metrics of the smooth weighted distances of a given sample xi to second order statistic that measures the similarity of the k x , x f x f x dx dx all other samples in the same class. Then a standard HSSVM kernel function with k1 : RBF can be regarded as a 3 1 x x j 4 2 2 F. Negative Distance-RBF composite kernel: The space F are defined by the Mercer theorem: tanh a xT x b tanh a x T x b i j j j = exp 2 2 distances of a given sample 2 2 xi to the corresponding distances of all other samples in the class. This can be used as an indication to the cluster’s overall compactness. VI. EXPERIMENTAL RESULTS In order to evaluate the performance of generalized HSSVM kernels we used known non-PSD kernels as minor kernels and applied them to a series of benchmark datasets. The first test scenario included indefinite sigmoid kernels (Luss and d’Aspremont 2008) used on 3 datasets (diabetes, german and ala) from the UCI repository (Newman, Hettich et al. 1998). Additional results on the same datasets have also been reported in (Lin and Lin 2003). The breast cancer diagnosis dataset (Wisconsin) contains 683 complete cytological tests described by 9 integer attributes with values between 1 and 10. The outcome is a binary variable indicating the benign or malignant nature of the tumor. The diabetes diagnosis dataset was first collected to investigate wether the patients show signs of diabetes according to the World Health Organization criteria. The polulation consists of female Pima Indians, aged 21 and onler, living near Phoenix, Arizona. It contains 768 instances each described by 8 continuous varibles and a binary outcome variable. Te German credit dataset contains dataused to evaluate credit applications in Germany. It has 1000 cases. The version that we used each case is described by 24 continuous attributes. The second test scenario included … will outline two exemplary applications which can benefit from the use of non-standard, non-positive definite structure-based k1 kernels. The classification of microarray data has become a standard tool in many biological studies. Gene expressions of distinct biological groups are compared and classified according to their gene expression characteristics, in tumor diagnosis (Golub TR, Slonim DK et al. 1999). Kernel methods play important roles in such disease analyses, especially when classifying data with SVMs and other kernel methods classify such data based on the feature or marker genes that are correlated with the characteristics of the groups. In most of those studies, only standard kernels such as linear, polynomial, and RBF, which take vectorial data as input, have been popularly used and generally successful. Other than the above vectorial data kernel family, there is another family called structured data kernel family that has been studied in many other fields including bioinformatics and machine learning. The structured data kernel family conveys structural or topological information with or without numerical data as input to describe data. For example, the string kernel for text classification (Lodhi H, Saunders C et al. 2002), the marginalized count kernel (Tsuda K, Kin T et al. 2002) for biological sequences, the diffusion kernel (Kondor R and J 2002) and the maximum entropy (ME) kernel (Tsuda K and WS 2004) for graph structures are well known in the biological field. In microarray analysis, one of the main issues that hamper accurate and realistic predictions is the lack of repeat experiments, often due to financial problems or rarity of specimens such as minor diseases. Utilization of public or old data together with one's current data could solve this problem; many studies combining several microarray datasets have been performed (Warnat P, Eils R et al. 2005; Nilsson B, Andersson A et al. 2006). However, due to the low gene overlaps and consistencies between different datasets, the vectorial data kernels are often unsuccessful in classifying data from various datasets if naïvely integrated (Warnat P, Eils R et al. 2005). Part of the solution may lay on devising new kernels that can handle this type of problem (Fujibuchi and Kato 2007). When using different available genes from each contributing dataset statistical measures are employed to provide an invariant kernel representation. For example we can incorporate a kNND RBF gene distance metric as a k1 kernel along with an ME k2 in an HS-SVM. Our aim is to develop kernels that are robust to heterogeneous and noisy gene expression data. The classification of text based on term presence and proximity is also an evolving research field especially in relation to the improvement of web search results and document categorization [REF]. Standard methods such as the bag-of-words [REF] actually create a histogram of work frequencies that can be used by established vectorial kernels (linear, polynomial, RBF). These methods however disregard the relative location of the terms, thus providing a suboptimal classification solution. In order to incorporate structural information of this kind we have to resolve to metrics that might not comply with the Mercer conditions and their equivalent kernel matrix positive semidefiniteness. Once again using them inside the HS-SVM context we can, without significant increase in complexity benefit from a more suitable invariance mapper. Both datasets 2 and 3 had normalized predictors in the 0,1 range and were available in 100x stratified randomizations. The dimensionality and features of the three used datasets are shown in Table I. TABLE I COMPARISON OF SVM CLASSIFIERS’ ACCURACY k1 composite kernel k2 lin poly rbf lin poly rbf lin poly rbf lin poly rbf sigmoid Epanenchikov Negative Dist Mahalanobis lin lin lin poly poly poly rbf rbf rbf rbf rbf rbf rbf microarray 0 0.888 0 0 0 0 0 0 0 0 0 0 datasets web search 0 0.888 0 0 0 0 0 0 0 0 0 0 Διάλεξα 3 biomedical datasets ώστε να μπορούμε να το δικαιολογήσουμε και σε in-between journals (Artificial Intelligence in Medicine, BMC Medical Informatics and Decision Making, etc). TABLE II COMPARISON OF SVM CLASSIFIERS’ #SVS composite kernel k2 k1 lin poly rbf lin poly rbf lin lin lin lin poly microarray 0 0.888 0 0 0 0 0 datasets web search 0 0.888 0 0 0 0 0 poly rbf lin poly rbf sigmoid Epanenchikov Negative Dist Mahalanobis poly poly rbf rbf rbf rbf rbf rbf rbf 0 0 0 0 0 0 0 0 0 0 TABLE III COMPARISON OF SVM CLASSIFIERS’ CONCORDANCE INDEX k1 composite kernel k2 lin poly rbf lin poly rbf lin poly rbf lin poly rbf sigmoid Epanenchikov Negative Dist Mahalanobis lin lin lin poly poly poly rbf rbf rbf rbf rbf rbf rbf microarray 0 0.888 0 0 0 0 0 0 0 0 0 0 datasets web search 0 0.888 0 0 0 0 0 0 0 0 0 0 VII. CONCLUSIONS AND SUMMARY This paper showed how the existing methodology of HSSVMs can be extended to a more general class of kernel functions and applied to real world problems such as DNA sequencing. The methodology presented here is not strictly restricted to SVMs. Due to the nature of these algorithms the derived functional can be used as modules in other kernel methods including K-PCA. APPENDIX Admissibility proofs of generalized HS-SVMs kernels. In order to derive the proofs that the functionals proposed in equations (23),(26),(27) are admissible kernels we make use of some basic properties of RKHS kernels, which are analysed in (Cristianini and Shawe-Taylor 2000) (secton 3.3.2). For simplicity we list them here as well. We assume that k1 and k2 are valid kernels over the set X X , X d ,a f is a real valued and that function on X . Then the following functions are also valid kernels: 1. 2. k xi , x j k1 xi , x j k2 xi , x j k xi , x j ak1 xi , x j 3. k xi , x j k1 xi , x j k2 xi , x j 4. k xi , x j f xi f x j 5. k xi , x j k3 xi , x j Regarding the kernels introduced here the following derivations hold: i. Assuming k1 xi , x j is a valid kernel, for equation (26) we have: k x, x 1 k x i , x j k 2 k 1 x, x i , k 1 x , x j k 1 x , x i k 1 x , x j 1 T where k3 is a kernel by property (3) and k4 by property (1). Finally k is a valid kernel as a power of k4 by property (3). ii. Similarly for equation (27) we have: k xi , x j k2 k 1 x, xi , k 1 x, x j p 3 k x, x k x , x 1 i 1 j exp 2 2 2 j p k 4 x, x j p k x ,x 2 3 i j exp 1 k x , x exp k x , x exp 4 i j 5 i j 2 2 2 2 where k3 is a valid kernel by property (1) and k4 is also one by property (3). Then k5 is also a valid kernel by property (2). Using the exponent’s infinite series expansion ex 1 x x 2 x3 xn ... , x , n 2! 3! n! we get exp k5 xi , x j 1 k5 xi , x j k5 xi , x j 2! 2 ... k5 xi , x j which is an admissible RHKS kernel by properties (3) and (1). n! n , n (29) REFERENCES Cristianini, N., J. Kandola, et al. (2002). "On kernel target alignment." Journal of Machine Learning Research 1. Cristianini, N. and J. Shawe-Taylor (2000). An introduction to Support Vector Machines and other kernelbased learning methods. Cambridge ; New York, Cambridge University Press. Eichhorn, J. and O. Chapelle (2004). Object categorization with SVM: Kernels for Local Features, Max Planck Institute for Biological Cybernetics. 137. Fujibuchi, W. and T. Kato (2007). "Classification of heterogeneous microarray data by maximum entropy kernel." BMC Bioinformatics 8. Golub TR, Slonim DK, et al. (1999). "Molecular Classification of Cancer: Class Discovery and Class Prediction by Gene Expression Monitoring." Science(286): 531-537. Kondor R and L. J (2002). Diffusion kernels on graphs and other discrete structures. 19th Intl Conf on Machine Learning (ICML), San Francisco, CA, Morgan Kaufmann. Li, Q. and J. S. Racine (2007). Nonparametric econometrics : theory and practice. Princeton, N.J., Princeton University Press. Li, Z., Z. Weida, et al. (2004). "Hidden space support vector machines." Neural Networks, IEEE Transactions on 15(6): 1424-1434. Lin, H.-t. and C.-J. Lin (2003). A Study on Sigmoid Kernels for SVM and the Training of non-PSD Kernels by SMO-type Methods, National Taiwan University. Lodhi H, Saunders C, et al. (2002). "Text classification using string kernels." The Journal of Machine Learning Research(2): 419-444. Luss, R. and A. d’Aspremont (2008). "Support Vector Machine Classification with Indefinite Kernels."? ? Newman, D. J., S. Hettich, et al. (1998). "UCI Repository of machine learning databases." from www.ics.uci.edu/~mlearn/),Irvine. Nilsson B, Andersson A, et al. (2006). "Cross-platform classification in microarray-based leukemia diagnostics." Haematologica 6(91): 821-824. Ong, C. S., S. Canu, et al. (2004). Learning with NonPositive Kernels. In Proc. of the 21st International Conference on Machine Learning (ICML). Saigo, H., J. Vert, et al. (2004). "Protein homology detection using string alignment kernels." Bioinformatics 20(11): 1682-9. Schölkopf, B. and A. J. Smola (2002). Learning with kernels : support vector machines, regularization, optimization, and beyond. Cambridge, Mass., MIT Press. Scholköpf, B., J. Weston, et al. (2002). A Kernel Approach for Learning From Almost Orthogonal Patterns. 13th European Conference on Machine Learning, Helsinki. Tsuda K, Kin T, et al. (2002). "Marginalized kernels for biological sequences." Bioinformatics 18: 268275. Tsuda K and N. WS (2004). "Learning kernels from biological networks by maximizing entropy." Bioinformatics(20): 326-333. Vapnik, V. (1995). The Nature of Statistical Learning Theory. N.Y., Springer. Warnat P, Eils R, et al. (2005). "Cross-platform analysis of cancer microarray data improves gene expression based classification of phenotypes." BMC Bioinformatics 6.