
A Stable Comparison-Based Sorting Algorithm to Accelerate Join-based Queries

Hanan Ahmed-Hosni Mahmoud and Nadia Al-Ghreimil

Abstract— Sorting is an integral component of most database management systems. The performance of several DBMS and DSMS queries is dominated by the cost of the sorting algorithm. Stable sorting algorithms play an important role in DBMS queries. In this paper, we present a novel stable sorting algorithm. The algorithm scans the unsorted input array and arranges it into m sorted subarrays. This scan requires linear time. An m-way merge then merges the sorted subarrays into the final sorted output array. The proposed algorithm keeps the stability of the keys intact. The m-way merge requires O(n log m) time. In the average case, the proposed algorithm performs on the order of O(n log m) element comparisons. Experiments show that the proposed algorithm outperforms other stable sorting algorithms when used in join-based queries.

Keywords—Stable sorting, auxiliary storage sorting, sorting.

I. INTRODUCTION

A stable sort is a sorting algorithm under which two records with equal keys retain their relative order when sorted according to that key. Thus those two records come out in the same relative order that they were in before sorting, although their positions relative to other records may change. Stability is a property of the sorting algorithm, not of the comparison mechanism. For instance, Quicksort is not stable while Merge Sort is stable, even though both algorithms may use the same comparison technique.
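As a small illustration of this definition, the sketch below sorts a handful of (key, payload) records with Python's built-in `sorted`, which is documented to be stable. The record values are made up for the example and do not come from the paper.

```python
# Records are (key, payload) pairs; several of them share a key.
records = [(2, "a"), (1, "b"), (2, "c"), (1, "d")]

# sorted() is stable: records with equal keys keep their input order,
# so "b" stays ahead of "d" and "a" stays ahead of "c".
by_key = sorted(records, key=lambda r: r[0])
print(by_key)
```

Only the key takes part in the comparison; the payloads show which record came first in the input.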

Sorting is an integral component of most database management systems (DBMSs). Sorting can be both computation-intensive and memory-intensive. The performance of several DBMS queries is often dominated by the cost of the sorting algorithm. Most DBMS queries require sorting with stable results.

External memory sorting algorithms reorganize large datasets. They typically operate in two phases. The first phase produces a set of files; the second phase processes these files to produce a totally ordered output of the input data file. An important class of external memory sorting algorithms is merge-based sorting, in which the input data is partitioned into chunks of approximately equal size, each chunk is sorted in main memory, and the resulting “runs” are written to disk. The second phase merges the runs in main memory and writes the sorted output to disk. Our proposed algorithm partitions the input data into chunks that are already sorted and then writes these runs to disk. In the second phase, it merges the runs in main memory and writes the sorted output to disk.

External memory sorting performance is often limited by I/O performance. Disk I/O bandwidth is significantly lower than main memory bandwidth. Therefore, it is important to minimize the amount of data written to and read from disk. Large files do not fit in RAM, so the data must be sorted in at least two passes, but two passes are enough to sort huge files. Each pass reads from and writes to the disk. CPU-based sorting algorithms incur significant cache misses on data sets that do not fit in the L1, L2 or L3 data caches [32]. Therefore, it is not efficient to sort partitions comparable to the size of main memory. This results in a tradeoff between disk I/O performance and the CPU computation time spent in sorting the partitions. For example, in merge-based external sorting algorithms, the time spent in Phase 1 can be reduced by choosing run sizes comparable to the CPU cache sizes. However, this choice increases the time spent in Phase 2 to merge a large number of small runs.

In this paper, we present a new stable sorting algorithm that simultaneously reduces the number of comparisons, the number of element moves, and the required auxiliary storage. When used as an internal sort, our algorithm requires, in the average case, O(n log m) element comparisons, O(n) element moves, and exactly n elements of auxiliary storage. No auxiliary arithmetic operations on indices are needed. Here n is the size of the input array to be sorted and m is the number of sorted subarrays produced from the input array in linear time, as will be shown.

The proposed algorithm has been tested in extensive performance studies. Experimental results show that, when adapted to sort externally, the proposed algorithm accelerates the computation of equi-join and non-equi-join queries in databases and of numerical statistic queries on data streams. The benchmarks used in the performance studies are databases consisting of up to one million values.

In the following sections, the proposed algorithm and the analysis of its computational requirements are presented in detail. The proposed algorithm, with the proof of stability, is presented in Section 2; the time complexity is analyzed in Section 3; the external version of the proposed algorithm is discussed in Section 4. Experimental results are presented in Section 5. Conclusions and references follow.

For stability, the m-way merge should give preference to a value from Si over a value from Sj, i < j, whenever a tie occurs between them. This is discussed further in the section on merging.

II. THE PROPOSED STABLE SORTING ALGORITHM

The main idea of the proposed algorithm is to divide the elements of the input array into disjoint segments σ1, σ2, . . . , σm of equal length L, where L is equal to n/m, such that all elements in a segment σi are sorted according to their keys. The sorted array is obtained by m-way merging these segments.

The New Proposed Algorithm

Algorithm StableSorting
{
// The first part of the proposed algorithm is the stable formation of
// segments; m additional subarrays SUBj (j ranges from 1 to m), each of
// length L elements, are required.
i = 1      // i is set to the first element of the input array
DOWHILE (n ≥ i)
{
  STEP1: Read element_i from the input array;
  STEP2: j = 1; append = 0;
    DO UNTIL (append = 1 OR j > m)
    { IF (SUBj is empty OR element_i ≥ last element in subarray SUBj)
        THEN { append element_i to SUBj; append = 1 }
        ELSE { j++ }      // try the next subarray
    }
    IF (append = 1)
      THEN { i++; go to STEP1 }
      ELSE { append element_i to the temporary subarray SUBt;
             M-way-merge(SUBt and SUBm) into MERGED-SUB;
             Subdivide(MERGED-SUB) into 2 subarrays SUBm and SUBt;
             i++; go to STEP1 }
}
// The second part is to M-way merge the resultant subarrays.
M-way-merge(SUBj) into the final output array
}
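The first part of the algorithm (formation of sorted subarrays) can be sketched in Python as follows. This is a simplified sketch under stated assumptions, not the authors' implementation: the subarrays have no capacity limit and a new subarray is opened whenever no existing one accepts the element, so the SUBt merge-and-subdivide step is not modelled. The function name `form_sorted_subarrays` and the `key` parameter are hypothetical.

```python
def form_sorted_subarrays(items, key=lambda x: x):
    """Scan items left to right and append each one to the first subarray
    whose last key is <= the item's key; otherwise open a new subarray.

    Simplifying assumption: subarrays are unbounded in number and length.
    """
    subarrays = []
    for item in items:
        for sub in subarrays:
            # First-fit rule: take the leftmost subarray that stays sorted.
            if key(sub[-1]) <= key(item):
                sub.append(item)
                break
        else:
            # No existing subarray can take the item.
            subarrays.append([item])
    return subarrays


example = [4, 2, 10, 15, 3, 10, 15, 7, 8, 1, 17]
for sub in form_sorted_subarrays(example):
    print(sub)
```

Because no capacity limit is enforced here, an element may land in a different subarray than it would under the fixed length L used in the paper.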

Some issues have to be considered to guarantee stability. First, the input array has to be scanned in sequential order from the first element to the last element. Subarrays are formed one at a time, from left to right. A new subarray is formed only if none of the existing subarrays meets the condition for appending the new element from the original input array. To determine where to place a specific element, the element should be placed in the first subarray (from left to right) whose last element is less than or equal to it. Also, some issues have to be considered in merging: the m-way-merge operation that produces the output list C selects values from the subarrays S1, S2, …, Sm, so the way it resolves ties among them must also preserve the original order.

III. MERGING

In this section, the m-way-merge algorithm is discussed. The 2-way merge is discussed first to show the complexity measures and stability issues of the algorithm. The discussion is then generalized to the proposed, more general case of the m-way merge.

Merging is the process whereby two sorted lists are combined to create a single sorted list. Originally, most sorting on large database systems was done using merging. Merging is used because it easily allows a compromise between expensive internal Random Access Memory (RAM) and external magnetic media, where the cost of RAM is an important factor. Originally, merging was an external method for combining two or more sorted lists to create a single sorted list on an external medium.

The most natural way to merge two lists of m1 and n1 presorted data values is to compare the values at the lowest (smallest) locations in both lists and then output the smaller of the two. The next two smallest values are compared in a similar manner and the smaller value is output. This process is repeated until one of the lists is exhausted. Merging in this way requires at most m1+n1-1 comparisons and m1+n1 data moves to create a sorted list of m1+n1 elements. The figure below illustrates an instance of this natural merge operation. The order in which each value in the A and B lists is selected for output to the C list is labelled by its subscript in the second listing of A and B. Merging in this manner is easily made stable. In addition, this limit is guaranteed regardless of the nature of the input permutation.
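The natural 2-way merge can be sketched as below. This is a minimal illustration, not the paper's code: the function name `merge_two` and the comparison counter are assumptions added so that the m1+n1-1 limit can be checked on a small input.

```python
def merge_two(a, b, key=lambda x: x):
    """Stable merge of two sorted lists a and b.

    Returns (merged_list, number_of_key_comparisons).
    """
    out = []
    i = j = comparisons = 0
    while i < len(a) and j < len(b):
        comparisons += 1
        # Take from b only when its key is strictly smaller,
        # so that ties are resolved in favour of a.
        if key(b[j]) < key(a[i]):
            out.append(b[j])
            j += 1
        else:
            out.append(a[i])
            i += 1
    # One list is exhausted; the rest is copied without comparisons.
    out.extend(a[i:])
    out.extend(b[j:])
    return out, comparisons


a = [(1, "a1"), (3, "a2"), (3, "a3")]
b = [(2, "b1"), (3, "b2")]
merged, used = merge_two(a, b, key=lambda r: r[0])
print(merged, used)
```

Using a strict `<` when testing the element from the second list is what gives the first list preference on equal keys.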

A merge algorithm is stable if it always leaves sequences of equal data values in the same order at the end of merging. For example, suppose we have the set of indexed values in the A list and the B list as follows.

Subarrays A and B

If the merge algorithm keeps the indices of a sequence of equal values from a given list in sorted order, i.e. they are kept in the same order as they were before the merge, as illustrated in the output example for the above input, the merge algorithm is said to be stable. Otherwise, the algorithm is said to be unstable. The output when the merge operation is stable is illustrated as follows.

No indices or any other hidden data structures are required for the execution of the algorithm. The auxiliary storage for the proposed sorting algorithm is O(n).

The resultant of the merge operation


Stability is defined such that values from A are always given preference whenever a tie occurs between values in A and in B, and such that the indices of each set of equal values are kept in sorted order at the end of the merge operation.

The proposed m-way-merge operation can be implemented as a series of 2-way merge operations that preserve the stability requirements, or it can merge the m lists at the same time. The 2-way-merge operation can be generalized to m sorted lists, where m is some positive integer > 2. m pointers can be used to keep track of the next smallest value in each list during the merge operation. It will be shown that the minimum number of comparisons needed when merging m sorted lists, each consisting of L data items, is n log2(m) - (m-1), where n = mL.
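One way to merge m lists at the same time is sketched below. The text only specifies m pointers and a tie rule; the use of a binary heap to pick the next smallest value is an assumption of this sketch, and the name `merge_m_way` is hypothetical. Each heap entry is (key, list index, position), so equal keys are resolved in favour of the list with the smaller index.

```python
import heapq


def merge_m_way(lists, key=lambda x: x):
    """Stable merge of m sorted lists; ties go to the lower list index."""
    # One entry per non-empty list: (key of current item, list index, pointer).
    heap = [(key(lst[0]), i, 0) for i, lst in enumerate(lists) if lst]
    heapq.heapify(heap)
    out = []
    while heap:
        _, i, pos = heapq.heappop(heap)
        out.append(lists[i][pos])
        # Advance the pointer of the list that was just consumed.
        if pos + 1 < len(lists[i]):
            heapq.heappush(heap, (key(lists[i][pos + 1]), i, pos + 1))
    return out


runs = [[(1, "s1"), (2, "s1")], [(1, "s2")], [(1, "s3"), (2, "s3")]]
print(merge_m_way(runs, key=lambda r: r[0]))
```

With a heap of at most m entries, each output value costs on the order of log2(m) comparisons, which is in line with the n log2(m) term quoted above.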

III. TIME COMPLEXITY

Scanning the input array of n elements and dividing it into m sorted subarrays is done in linear time; it requires O(n) comparisons and O(n) moves. Each of the m subarrays is of size L, which is equal to n/m, which leads to the conclusion that the time complexity of the m-way merge is O(n log m). Therefore, the time complexity of the proposed algorithm is O(n log m + n), which results in a time complexity of O(n log m), where m is much smaller than n.
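Taking the two costs stated in this section as given, the total can be written out as a short derivation. The symbol T(n) is introduced here only for illustration.

```latex
\begin{align*}
T(n) &= \underbrace{O(n)}_{\text{scan into } m \text{ sorted subarrays}}
      + \underbrace{O(n \log m)}_{m\text{-way merge of } n = mL \text{ items}} \\
     &= O(n \log m + n) \\
     &= O(n \log m), \qquad m \ge 2,\; m \ll n .
\end{align*}
```

The linear term is absorbed because n log m is at least n whenever m is at least 2.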

ANALYSIS OF THE STABILITY OF THE PROPOSED ALGORITHM

In order to prove that the proposed algorithm is a stable sorting algorithm, it should be proved that the restriction imposed on the division of the original array into subarrays keeps the stability intact. It should also be proved that the restrictions imposed on the m-way-merge operation meet the stability condition. The following example illustrates the technique of the proposed algorithm. This illustration helps in understanding the mechanism of the new sorting algorithm and also helps in the required proofs.

Original array: 4 2 10 15 3 10 15 7 8 1 17 16 18 9 9 6

SUB1: 4 10 15 15
SUB2: 2 3 10 17
SUB3: 7 8 16 18
SUB4: 1 9 9
SUB5: 6

Merge subarrays SUB4 and SUB5 into SUB4,5

IV. ANALYSIS OF NUMBER OF MOVES

Scanning the elements and moving them to their appropriate subarrays requires n moves. The m-way-merge algorithm requires n moves. Therefore, the number of moves of the proposed algorithm is proportional to n.

SUB4,5: 1 6 9 9

Subdivide SUB4,5 into SUB4 and SUB5

SUB4: 1 …

V. SPACE COMPLEXITY

The proposed stable sorting algorithm requires extra auxiliary storage equal to an array of the same size as the input array. A clever implementation of this algorithm can decrease the required auxiliary storage.

SUB5 (SUBt): 9 …

External memory sorting algorithms reorganize large datasets. They typically operate in two phases. The first phase produces a set of files; the second phase processes these files to produce a totally ordered permutation of the input data file. An important class of external memory sorting algorithms is merge-based sorting, in which the input data is partitioned into chunks of approximately equal size, each chunk is sorted in main memory, and the resulting “runs” are written to disk. The second phase merges the runs in main memory and writes the sorted output to disk. Our proposed algorithm partitions the input data into chunks that are already sorted and then writes these runs to disk. In the second phase, it merges the runs in main memory and writes the sorted output to disk.

External memory sorting performance is often limited by I/O performance. Disk I/O bandwidth is significantly lower than main memory bandwidth. Therefore, it is important to minimize the amount of data written to and read from disk. Large files do not fit in RAM, so the data must be sorted in at least two passes, but two passes are enough to sort huge files. Each pass reads from and writes to the disk. Hence, the two-pass sort throughput is at most

CPU-based sorting algorithms incur significant cache misses on data sets that do not fit in the L1, L2 or L3 data caches. Therefore, it is not efficient to sort partitions comparable to the size of main memory. This results in a tradeoff between disk I/O performance and the CPU computation time spent in sorting the partitions. For example, in merge-based external sorting algorithms, the time spent in Phase 1 can be reduced by choosing run sizes comparable to the CPU cache sizes. However, this choice increases the time spent in Phase 2 to merge a large number of small runs.

In this paper, the proposed algorithm is adapted to perform external sorting using run sizes comparable to the available RAM size, in order to minimize the time required for the second part of the algorithm, which is the final merging operation. The input array to be sorted is assumed to be stored in a file on the hard disk called FILE1. FILE1 is much larger than the available main memory. Assume the available main memory is g bytes. We copy the first g/2 bytes of FILE1 to the main memory. The other g/2 bytes of the main memory are divided into m blocks. We perform the proposed algorithm on the portion of FILE1 in the main memory; the output is stored in the m blocks in the main memory, and the output is sorted during the second

VII. PERFORMANCE STUDY

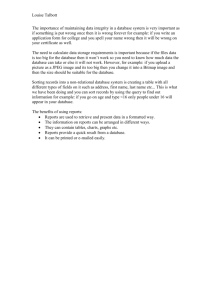

A performance study is being carried out, and it is twofold. The first part studies the performance of the proposed algorithm as an internal sorting algorithm and compares its performance to that of other well-known internal sorting algorithms. The second part studies the performance of the external sorting version of the proposed algorithm, comparing its performance to that of other external sorting algorithms. The external version is also used as the sorting algorithm for carrying out join-based queries on large databases, and the performance of this technique is compared with the performance of join-based queries using other external sorting algorithms.


VI. EXTERNAL SORTING VERSION OF THE PROPOSED ALGORITHM

part of the proposed algorithm (the merge part). The g/2 bytes of sorted output are stored back in FILE1 in place of its first g/2 bytes. Then we repeat the same procedure on the next g/2 bytes of FILE1 until the whole file is exhausted. The second part is to m-way-merge the sorted g/2-byte chunks of FILE1. The merge procedure is done externally.
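The two parts of the external version can be sketched in Python as below. This is a sketch under stated assumptions rather than the authors' implementation: an in-memory list stands in for FILE1, `chunk_size` plays the role of the g/2 bytes that fit in memory, Python's stable `sorted` stands in for the in-memory run of the proposed algorithm, and the names `external_sort_sketch` and `tagged` are hypothetical.

```python
import heapq


def external_sort_sketch(records, chunk_size, key=lambda r: r):
    """Part 1: sort chunk_size records at a time into runs.
    Part 2: merge the runs, preferring earlier runs on equal keys."""
    runs = []
    for start in range(0, len(records), chunk_size):
        chunk = records[start:start + chunk_size]
        # sorted() is stable; it stands in for sorting one chunk in memory.
        runs.append(sorted(chunk, key=key))

    def tagged(run_index, run):
        # (key, run_index, position) is unique for every record, so the
        # merge never has to compare the records themselves.
        for pos, rec in enumerate(run):
            yield key(rec), run_index, pos, rec

    merged = heapq.merge(*(tagged(i, run) for i, run in enumerate(runs)))
    return [rec for _, _, _, rec in merged]


data = [(3, "a"), (1, "b"), (3, "c"), (2, "d"), (1, "e"), (3, "f"), (2, "g")]
print(external_sort_sketch(data, chunk_size=3, key=lambda r: r[0]))
```

Tagging each record with its run index and position makes the tie rule explicit: among equal keys, the record from the earlier chunk of the file is output first, which keeps the overall sort stable.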

In the subarray formation example, the last element is 6, and there is no place to put it in any of the 4 subarrays, so the temporary subarray SUBt has to be used.

[Figure 3: three panels plotting execution time in milliseconds (1 to 8) against number of keys (1000 to 8000): (a) Mergesort and the proposed algorithm with m=4; (b) k-way merge sort and the proposed algorithm (m=8); (c) Quick sort and the proposed algorithm (m=4).]

Fig. 3: Comparing the proposed sorting algorithm with: (a) Mergesort, (b) k-way Mergesort, and (c) quicksort

[Figure 4: execution time in milliseconds (1 to 8) against number of keys (1000 to 8000) for Mergesort, k-way merge sort, Quick sort, and the proposed algorithm with m=16, m=8 and m=4.]

Fig. 4: The running times of sorting algorithms in milliseconds for a number of keys to be sorted ranging from 1000 to 8000 keys; each key is 20 characters long.

Conclusion

In this paper, a new sorting algorithm was presented. This algorithm is a stable sorting algorithm with O(n log m) comparisons, where m is much smaller than n, O(n) moves, and O(n) auxiliary storage. Comparison of the proposed algorithm with some well-known algorithms has shown that the new algorithm outperforms the other algorithms. Further, no auxiliary arithmetic operations on indices are required. Besides, this algorithm was shown to be of practical interest in join-based queries.

REFERENCES

[1] A. LaMarca and R. E. Ladner, “The influence of caches on the performance of heaps,” Journal of Experimental Algorithmics, vol. 1, Article 4, 1996.
[2] D. Knuth, The Art of Computer Programming: Volume 3 / Sorting and Searching, Addison-Wesley Publishing Company, 1973.
[3] Y. Azar, A. Broder, A. Karlin, and E. Upfal, “Balanced allocations,” in Proceedings of 26th ACM Symposium on the Theory of Computing, 1994, pp. 593-602.
[4] Andrei Broder and Michael Mitzenmacher, “Using multiple hash functions to improve IP lookups,” in Proceedings of IEEE INFOCOM, 2001.
[5] Jan Van Lunteren, “Searching very large routing tables in wide embedded memory,” in Proceedings of IEEE Globecom, November 2001.
[6] Ramesh C. Agarwal, “A super scalar sort algorithm for RISC processors,” SIGMOD Record (ACM Special Interest Group on Management of Data), 25(2):240–246, June 1996.
[7] R. Anantha Krishna, A. Das, J. Gehrke, F. Korn, S. Muthukrishnan, and D. Shrivastava, “Efficient approximation of correlated sums on data streams,” TKDE, 2003.
[8] A. Arasu and G. S. Manku, “Approximate counts and quantiles over sliding windows,” PODS, 2004.
[9] N. Bandi, C. Sun, D. Agrawal, and A. El Abbadi, “Hardware acceleration in commercial databases: A case study of spatial operations,” VLDB, 2004.
[10] Peter A. Boncz, Stefan Manegold, and Martin L. Kersten, “Database architecture optimized for the new bottleneck: Memory access,” in Proceedings of the Twenty-fifth International Conference on Very Large Databases, 1999, pp. 54–65.
[11] T. H. Cormen, C. E. Leiserson, R. L. Rivest, and C. Stein, Introduction to Algorithms. MIT Press, Cambridge, MA, 2nd edition, 2001.
[12] Abhinandan Das, Johannes Gehrke, and Mirek Riedewald, “Approximate join processing over data streams,” in Proceedings of the 2003 ACM SIGMOD international conference on Management of data, ACM Press, 2003, pp. 40-51.
[13] A. LaMarca and R. Ladner, “The influence of caches on the performance of sorting,” in Proc. of the ACM/SIAM SODA, 1997, pp. 370–379.
[14] Stefan Manegold, Peter A. Boncz, and Martin L. Kersten, “What happens during a join? Dissecting CPU and memory optimization effects,” in Proceedings of 26th International Conference on Very Large Data Bases, 2000, pp. 339–350.
[15] A. Andersson, T. Hagerup, J. Håstad, and O. Petersson, “Tight bounds for searching a sorted array of strings,” SIAM Journal on Computing, 30(5):1552–1578, 2001.
[16] L. Arge, P. Ferragina, R. Grossi, and J.S. Vitter, “On sorting strings in external memory,” ACM STOC ’97, 1997, pp. 540–548.
[17] M.A. Bender, E.D. Demaine, and M. Farach-Colton, “Cache-oblivious B-trees,” IEEE FOCS ’00, 2000, pp. 399–409.
[18] J.L. Bentley and R. Sedgewick, “Fast algorithms for sorting and searching strings,” ACM-SIAM SODA ’97, 1997, pp. 360–369.
[19] G. Franceschini, “Proximity mergesort: Optimal in-place sorting in the cache-oblivious model,” ACM-SIAM SODA ’04, 2004, pp. 284–292.
[20] G. Franceschini, “Sorting stably, in-place, with O(n log n) comparisons and O(n) moves,” STACS ’05, 2005.
[21] G. Franceschini and V. Geffert, “An In-Place Sorting with O(n log n) Comparisons and O(n) Moves,” IEEE FOCS ’03, 2003, pp. 242–250.