contents - Language Log - University of Pennsylvania

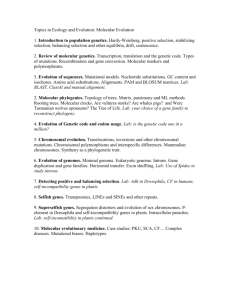

Specific Aims

Identification and characterization of the genes and cellular aberrations responsible for the onset and progression of malignancies arising in childhood are major goals of pediatric cancer research. Recent advances in genomic and proteomic research have made available many large data sets relevant to these goals. However, sufficient resources for systematically and comprehensively collecting, managing, and integrating these data are currently lacking for molecular biology in general, and for cancer-specific applications in particular. To address these issues, we previously created a novel computational procedure that effectively catalogues position- and functionally-based genomic information (eGenome), and which distributes this information via an interactive, user-friendly Internet site to researchers involved in identifying human disease loci. Here, we propose to create a parallel resource that focuses upon cancer-specific cellular data, which we would initially target to pediatric malignancies. The proposed resource (provisionally named cGenome) would create a collective knowledgebase directly linking positional genomic, functional genomic, and proteomic information with available pediatric cancer-oriented genotypic and phenotypic observations.

Specific Aim 1: Compile an evidence set of pediatric clinico-molecular observations. We will identify all sizable Internet- or database-accessible data sets pertaining to the molecular biology of human neoplasia. This will include curated lists of genes, loci, and proteins implicated in neoplasia; collections of cancer-specific chromosomal rearrangements and/or gene mutations; transcriptional and translational profiles; whole-genome analyses of malignant cells; and cancer research literature. From this information, we will compile a comprehensive set of evidence expressions, each of which links a tumor phenotypic observation to a molecular observation in a specific pediatric malignancy.
This expression set will be consolidated into a non-redundant subset and standardized by use of a modular and scalable molecular ontology. Furthermore, we will link proof of each observation directly to the fraction of the cancer literature which supports the observation. Specific Aim 2: Integrate this evidence set with existing molecular and clinical data. Each evidence expression will have a molecular, tumor type, and proof component. We will link molecular components to basic cellular data sets within eGenome corresponding to each component, such as the gene involved, site of chromosomal rearrangement, or protein affected. In addition, we will integrate cancer-specific variation, transcriptional, proteomic, and functional molecular data sets that will link directly to applicable individual evidence events. We will link the tumor component to trial/protocol, epidemiological, diagnosis, presentation, and therapy-based clinical information corresponding to the malignant subtype and, whenever possible, directly to the specific observation. This information will be integrated using a relational database management system, and relevant data sets will be parsed and imported into the database to build a comprehensive knowledgebase. Specific Aim 3: Analyze and annotate the knowledgebase to improve accuracy and integrity. Initially, we will track molecular-clinical evidence events that directly conflict with each other, such as: BCR is involved in “t(9;22)(q34;q11)” or “t(9;12)(q34;q11)”. These conflicts will be automatically determined and annotated in the web resource’s display of this information, and will also serve as an internal quality control mechanism to assure proper data integration and standardization. Similarly, data that appears to be incorrect, such as an aberrant reporting of a commonly occurring cytogenetic rearrangement, will be identified and annotated. 
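The direct-conflict check described for Aim 3 can be illustrated with a minimal sketch. It assumes evidence events are held as simple tuples; the record layout, the `find_direct_conflicts` name, and the "Source A"/"Source B" labels are hypothetical and are not part of the proposal. Python is used here purely for illustration.

```python
from collections import defaultdict

# Hypothetical evidence records: (element, action, detail, malignancy, source).
# The two BCR karyotypes are the conflicting pair quoted in the text;
# the source labels and the CML assignment are placeholders.
EVIDENCE = [
    ("BCR", "translocated", "t(9;22)(q34;q11)", "CML", "Source A"),
    ("BCR", "translocated", "t(9;12)(q34;q11)", "CML", "Source B"),
    ("ABL", "translocated", "t(9;22)(q34;q11)", "CML", "Source A"),
]


def find_direct_conflicts(records):
    """Group records by (element, action, malignancy) and return the groups
    whose sources disagree on the detail (here, the karyotype string)."""
    details = defaultdict(set)
    for element, action, detail, malignancy, _source in records:
        details[(element, action, malignancy)].add(detail)
    return {key: sorted(vals) for key, vals in details.items() if len(vals) > 1}


conflicts = find_direct_conflicts(EVIDENCE)
for key, karyotypes in conflicts.items():
    print(key, "reported with:", karyotypes)
```

A gene can legitimately participate in more than one rearrangement, so a check of this kind only flags candidates for annotation and review; it does not decide which report is wrong.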
Subsequently, we will identify and report evidence events that appear to be biologically incompatible, such as a gene that is reported to be deleted and over-expressed within the same malignancy by different reference sources. We will also incorporate a number of quality control procedures to track and maintain the cGenome database holdings.

Specific Aim 4: Create a web resource for public dissemination of the compiled information. We will create a freely accessible Internet website to serve as a front end for the cGenome relational database. A variety of search and output options will be provided. Structurally- (e.g. chromosomal position, gene name, DNA sequence), functionally- (e.g. tissue, type of malignancy, protein function), and clinically- (e.g. malignant subtype, supporting study) directed searching mechanisms will be provided. Query result pages will be dynamically generated and will contain positional, functional, and descriptive data of the gene, transcript, protein, pathway, malignancy, clinical features, or literature citation of interest. Individual pages will also contain element-specific hyperlinks to supplementary data at many external websites. Supportive web content, such as background information, definitions/help, site navigation, and methodological details will also be included. The website will act as an Internet portal for entry into molecular pediatric cancer information.

Hypothesis

Two significant gaps currently hinder cancer research: a gap between data generation capabilities and data management/analysis capabilities; and a second gap between the generic molecular, cancer-specific molecular, and translational/clinical cancer information universes. We hypothesize that a systematic effort to unite and deliver these information sets will empower cancer researchers, providing increased efficiency as well as an opportunity for higher order analyses of malignancy.

Background

Molecular biology and cancer.
The human genome project (HGP) is dramatically changing both the pace and the philosophy of disease-related research (1). Technical advances have shifted the major molecular research bottleneck from data generation to data processing, heightening the importance of computational approaches (2-7). This transformation has tremendous potential, including the possibility of systems-based understanding of disease, but it also poses complex challenges. As genomics and proteomics are leading this paradigm shift, research related to cancer as a molecular disease will be greatly impacted. A substantial number of genomic and proteomic abnormalities playing causative roles in neoplasia have been identified (8-11), and these successes are anticipated to translate to the clinic. Most of these successes have been facilitated by an obvious molecular signature. Even with such clues, elucidation of these loci usually requires substantial experimentation (12-14). However, most malignancy-locus relationships have not yet been identified (15). Moreover, the majority of these relationships likely manifest as post-genomic aberrations, such as dysregulated transcriptional or translational levels, which will complicate their identification (16-18). Only a few recently implicated molecular aberrations in neoplasia were discovered primarily by computational approaches (19-25). Despite the availability of substantial genomic and functional genomic data, these resources have not yet accelerated cancer locus identification; nor have they shifted the approach by which these loci are usually identified toward computation-centric methods. The amount of available human molecular information is staggering, including a draft sequence of the genome, determination of most functional cellular elements, identification of the vast majority of DNA-based variation, and elucidated representative structures for most protein families (1,26-38).
As the HGP continues to generate large-scale genomic, functional genomic, and proteomic data sets, cancer researchers are finding themselves awash in the means, but not the ends, with which to identify important molecular dysregulations. We now have sufficient data with which to accelerate malignant disease research, but the tools with which to manage and utilize this information are still lacking (11). Supplying these needs is of critical importance for basic, translational, and clinical cancer researchers in order to create a “bench-to-bedside” information pipeline.

The cancer knowledge universe. The quality and quantity of both basic and cancer-specific molecular data already publicly available make computationally-focused approaches to identifying molecular aberrations in cancer feasible, but data sources are currently widely dispersed and poorly integrated, severely hampering effective mining strategies. Some of the basic molecular genomic and proteomic data are consolidated at several large distribution centers (33,39,40). These and other resources are invaluable as entry points into genomic and functional genomic information. However, the approach taken by these groups has largely been representational rather than comprehensive. In addition, many large data sets exist as repositories rather than as curated sets. This has led to confusion and inefficiency among end-users of this information. A significant number of sizable cancer genomic and functional genomic data sets have been recently generated and are available publicly, including lists of genes known to be involved in neoplasia, chromosomal rearrangement compilations, whole-genome analyses of malignant cells, tumor expression profiles, and locus-specific mutation databases (Appendix Table 1). Unfortunately, this substantial and collectively comprehensive array of information is not yet well coordinated with either basic genomic or clinical cancer data.
There have been only sporadic efforts to compile a wide range of cancer molecular data, most notably the Infobiogen Oncology Atlas, Cancer Genome Anatomy Project, and Human Transcriptome resources (33,41-54). However, these data sets are not well integrated with each other, and they rarely cross the generic molecular–cancer molecular and cancer molecular–cancer translational/clinical information boundaries. Conversely, clinical cancer information is comprehensive and well integrated within its own universe. For example, the NCI’s CancerNet provides a wide-ranging resource for cancer professionals (55). Contained within CancerNet are CANCERLIT and PDQ, which together provide access to the scientific cancer literature, expert-drawn summaries covering various oncology topics, directories of physicians, a clinical trials database, and epidemiological data (56-58). Other independent online resources provide additional compilations of information useful to clinical oncologists (Appendix Table 1). However, while this approximates a seamless clinical cancer knowledgebase, there is virtually no interconnectivity with molecular information. We know of no group that is seeking to systematically assimilate molecular and clinical cancer information in a comprehensive manner. This lack of integration requires researchers themselves to connect data from various sources. Data mining that crosses sub-disciplinary boundaries, such as determining “known drugs targeting genes within the region of chromosome 1p36.3 deleted in neuroblastomas”, is extremely difficult.

Preliminary results. Our laboratory has created a process which collects available human genomic information and integrates it into a single, comprehensive catalog (59,60). The aim is to deliver these data, from as many sources as possible, to biomedical researchers involved in disease gene identification without requiring bioinformatics and/or genomics expertise to successfully utilize these data.
We first created a pilot Internet resource (CompView) for human chromosome 1 in August, 1999 (61). This resource localized genes, polymorphisms, and other genomic elements to precise chromosomal positions. We then integrated additional genomic and functional genomic features relative to this localization framework, including SNPs, DNA clones, cytogenetic anchors, and transcript clusters. The resulting integrated data set yielded a genomic catalog with resolutions, order confidences, and element populations superior to previously available resources (62). The CompView data is accessible through a web resource which has been widely used and acknowledged by chromosome 1 researchers (61-66). CompView has aided candidate disease gene searches for numerous groups, including localization of tumor suppressor loci for meningioma and neuroblastoma (67,68). A subsequent project (eGenome) seeks to integrate available human genomic and functional genomic data by building upon the CompView procedure. The eGenome methodology: 1) creates integrated foundations of objective genomic data representing each human chromosome; 2) increases depth to the chromosomal foundations by adding supplemental structural genomic data sets; and 3) layers subjective functional genomic and proteomic data onto the structural foundations. A large collection of DNA sequence-defined elements was localized within the genome with four separate techniques: radiation hybrid (RH), genetic linkage, cytogenetic, and DNA sequence localization procedures (1,27-29,69,70). From this structural framework, integration of additional data sets was easily achieved. eGenome currently integrates 2.7 million genomic elements: 51,903 RH-based localizations, 12,461 genetic linkage-based localizations, 14,706 cytogenetic localizations, 51,334 DNA sequence-based localizations, 36,402 genes/EST cluster representations, 116,608 large-insert DNA clones, and 2.5 million SNPs, altogether tracking 3.6 million names and aliases. 
The resulting knowledgebase tracks both localizations and nomenclature in a systematic and comprehensive manner. Because genomic elements usually have multiple independently determined genomic localizations, this provides powerful quality control. Identified discrepancies are annotated as such in the eGenome database and collectively analyzed for recurrent patterns indicating specific data source and data analysis inaccuracies. Collection of a centralized genomics knowledgebase has also aided in precise nomenclature management, allowing users to immediately collect non-redundant sets of genomic data. The eGenome database structure is shown in Appendix Figure 1. To disseminate eGenome to the public, we developed an Internet site providing graphical, ideographic-, and text-based query options for data perusal. For text-based query results, users can perform a simple text search using gene names or database IDs, define a region with two flanking markers, select a cytogenetic band in an ideogram, or choose a cytogenetic band or range from a list. Query result options include definition of a region in a customizable Java applet. Query results display pertinent information about the element of interest, including cytogenetic, sequence, RH, and/or genetic linkage positions; transcript clusters; aliases; large-insert clones containing the element; and linked SNPs. Direct hyperlinks are provided to element-specific data in external databases (e.g. GenBank records). Additional tools incorporate analysis utilities. eGenome query and results interface examples are included in Appendix Figure 2. Overall, the website incorporates or directly links to 50 external databases, thus creating element-customized data portals to a wide network of genomic, sequence, and functional data (62,64). The eGenome website launched publicly in January, 2002 (61).
Compilation of these data sets has allowed us to study the human genome on a chromosome-wide basis, including cytogenetic band patterns on chromosome 1 and sequence-chromosome breakage comparisons for chromosome 22 (62,71). The eGenome project places our laboratory in a position to efficiently add disease-specific components to this basic genomic knowledgebase. eGenome creates a solid foundation of integrated genomic and, increasingly, functional genomic data. We have designed a conceptual framework for appending cancer molecular data to this foundation, using a single, unified database schema (Figure 1), which we provisionally call cGenome. This framework is a conceptual model for how cellular, translational, and clinical information can be related together. The central components are the categories Evidence and Malignancy. This relationship links disease information to cellular information, and the links are provided by specific instances of evidence tying an observed clinical phenotype to its corresponding observed molecular dysregulation event(s). The schema has been designed to encompass all malignancy information; however, this proposal targets pediatric neoplasia as a test case.

[Figure 1. Proposed skeletal database structure for cGenome. Arrows depict data flow direction. This structure is discussed in greater detail later in the proposal. Component labels: Genome, Literature, Clinical, Genomic variations, Translational, Transcriptome, Proteome, Pathways.]

Using several publicly available curated cancer-specific data sets, we have compiled lists of 4,759 cancer-associated loci and 466 established gross chromosomal abnormalities for 84 malignant subtypes. These two lists have been cross-referenced to each other through shared gene symbols and also to their matching eGenome records.
For example, the t(9;22)(q34;q11) observed in AML, ANLL, and CML is related to the genes ABL and BCR, to literature references characterizing the abnormality in each leukemic subtype, and to base genomic data in eGenome (e.g. sequence position, polymorphisms, and mRNA sequence). While this is a relatively preliminary and non-systematic procedure for relating cancer genotypes and phenotypes, it has served as an instrumental first step in establishing rigorous data handling and integration procedures.

[Figure 1 component labels, continued: Evidence (Variation, Transcription, Translation, Function); Malignancy.]

Significance

Reductionist-based approaches to cancer research have been historically successful in providing an exceedingly rich base of molecular and clinical observations. Optimal use of this knowledge requires novel interdisciplinary and systems-based methods to understand malignancy in its entirety, which in turn requires sufficient resources to organize, express, and deliver relevant information directly into the research laboratory. The proposed project aims to take the first critical steps toward this eventual goal. An integrated information resource providing instantaneous, comprehensive, and facile data-gathering functionality to pediatric cancer researchers would be a dramatic improvement over inefficient "web-surfing" techniques often used for extracting public data. The cGenome project would give users an entry point into a boundary-free universe of cancer knowledge spanning all facets of neoplasia. It would also provide opportunities for discovering new or previously unrecognized associations between molecular and clinical observations. For the current project, we are utilizing pediatric malignancy for the formulation of a working prototype, which will be designed to be easily scalable for encompassing all malignant subtypes.
This project should help shorten the pediatric cancer gene discovery process, which could subsequently improve our understanding of, and intervention in, childhood neoplasia. In time, interconnectivity with eGenome and other, similar research knowledgebases could establish a computationally-based information network spanning all cancer-related fields in biomedicine.

Proposed Methods

Specific Aim 1: Compile an evidence set of pediatric clinico-molecular observations.

Approach. Aim 1 will be conducted in two phases. Phase I will concentrate upon associations between cancer genomic abnormalities and malignant subtypes, with the goal of collecting a comprehensive set of genomic observations in pediatric neoplasia. This will utilize a moderate number of established database sources and a simplified ontology for describing clinico-molecular associations. Phase II will expand the associations to transcriptional, functional, and cellular domains. This phase will also expand the ontology syntax and vocabulary, the number of integrated data sets, and the association with cancer research literature.

cGenome advisory board. We will assemble an external advisory board for this project. This advisory board will be composed of local researchers investigating the molecular genomics of various malignancies (see Appendix). The board will advise the group in all aspects of the project, with emphasis on identifying appropriate data sets for inclusion and means for efficient data delivery.

Phase I: Utilization of established central data sets. We will collect lists of cancer-implicated genes, gene mutations, and chromosomal abnormalities from existing publicly available lists (Appendix Table 1). We have already collected a static version of most of these data sets. Any relevant databases that are subsequently made available will be identified by periodic literature and Internet searches.

Data access and processing.
All of our targeted central data sets have non-restrictive data re-distribution policies, but the manner in which the data are accessible varies. Our Technology Transfer Office and/or legal counsel will aid us with determination of whether individual data sets can be used for collective redistribution. For each site, we will collect the relevant information either from a provided database dump or by writing an automated agent to retrieve and parse the set of web pages comprising the requisite data, in a site-specific manner. Retrieved data will then undergo quality control processing (Aim 3).

Simplified ontology creation. We will construct a simplified “molecular evidence in pediatric cancer” ontology for use as the central kernel of the cGenome database structure. This will have the following syntax:

REALM ELEMENT is ACTION in MALIGNANCY according to SOURCE

This can be thought of as an expression of molecular evidence, with each capitalized phrase a variable class. The vocabulary would include (but not be restricted to) the following:

Gene        WT1     is  mutated         in  Wilms tumor       according to  Reference A
Transcript  MYC     is  over-expressed  in  medulloblastoma   according to  Database B
Protein     p70S6K  is  phosphorylated  in  multiple myeloma  according to  Website C

Each class vocabulary set would be selected using various means. The REALM and ACTION categories would be created after consultation with the advisory board, other cancer researchers, and reviews of the literature. The ELEMENT category would be defined by eGenome and by curated external lists of cancer-associated genes. The SOURCE category would initially be populated by the integrated data sets as well. Our consultant, Dr. Michael Liebman, will assist us with details of the ontology creation (see Appendix).

Data translation. For each central data set, we would convert each line of data into an evidence expression conforming to our ontology syntax.
This would require the creation of a parsing and conversion algorithm specific for each data set. Each algorithm would be relatively straightforward, as the data composition for each set is fixed by the external database curators, thus requiring only a limited number of conversion functions. For example, one line of data from Infobiogen (46), after parsing, reads as:

Infobiogen | t(3;12)(q26;p13) | myeloid lineage: MDS in transformation, ANLL, BC-CML | Gene Name MDS1

Infobiogen in column 1 is identified as the source, t(3;12)(q26;p13) in column 2 is identified as the action, etc., with each of the variable classes represented in the line of data. This line of data translates to three evidence expressions, one for each malignancy. The final expressions would then be:

Gene  MDS1  is  translocated  in  MDS         according to  Infobiogen
Gene  MDS1  is  translocated  in  ANLL        according to  Infobiogen
Gene  MDS1  is  translocated  in  B-cell CML  according to  Infobiogen

In this way, expressions can be automatically generated by simple conversion algorithms reflecting the data structure used by the data set. In fact, the data sets drive the ontology creation. For the example above, the ontology would expand to include an additional class to capture the particular type of translocation. During expression generation, this subclass dictates a database table listing all known cancer-associated translocations.

Data standardization. Certain variable names will require adherence to established standards. Gene names will be converted to official Gene Symbols, as designated by the HUGO Gene Nomenclature Committee (HGNC), which eGenome already records (62,72). The eGenome locus-naming algorithm will be used to convert non-standard gene or protein names used in the data set. Cytogenetic and gene mutation labeling will adhere to ISCN and HUGO MDI standards, respectively (73,74). Malignancy classification will follow the International Classification of Diseases for Oncology (ICD-O) guidelines (75).
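Returning to the Infobiogen line quoted in the data translation step above, a conversion routine of the kind described might look like the following minimal sketch. The `Evidence` type, the `translate_line` function, and the rule that a karyotype beginning with `t(` maps to the action "translocated" are assumptions made for illustration; they are not taken from the proposal. The sketch keeps the raw malignancy labels from the data line and leaves their standardization to a separate step.

```python
from typing import List, NamedTuple


class Evidence(NamedTuple):
    """One evidence expression: REALM ELEMENT is ACTION in MALIGNANCY
    according to SOURCE, plus the karyotype detail it was derived from."""
    realm: str
    element: str
    action: str
    detail: str
    malignancy: str
    source: str

    def render(self) -> str:
        return (f"{self.realm} {self.element} is {self.action} "
                f"in {self.malignancy} according to {self.source}")


def translate_line(line: str) -> List[Evidence]:
    """Convert one pipe-delimited line into one expression per malignancy."""
    source, karyotype, malignancy_field, element_field = (
        field.strip() for field in line.split("|"))
    # Drop an optional "lineage:" prefix, then split the comma-separated subtypes.
    _, _, subtypes = malignancy_field.rpartition(":")
    malignancies = [m.strip() for m in subtypes.split(",") if m.strip()]
    # "Gene Name MDS1" -> realm "Gene", element "MDS1".
    words = element_field.split()
    realm, element = words[0], words[-1]
    # Assumed rule: ISCN strings starting with "t(" denote translocations.
    action = "translocated" if karyotype.startswith("t(") else "rearranged"
    return [Evidence(realm, element, action, karyotype, m, source)
            for m in malignancies]


LINE = ("Infobiogen | t(3;12)(q26;p13) | "
        "myeloid lineage: MDS in transformation, ANLL, BC-CML | Gene Name MDS1")
expressions = translate_line(LINE)
for expression in expressions:
    print(expression.render())
```

Keeping the karyotype as a separate `detail` field mirrors the additional translocation class that the text says the ontology would grow to include.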
The ICD-O scheme creates clinical and/or molecular marker-based sub-classifications for some malignancies, such as separate categories for “Acute myeloid leukemia, t(8;21)(q22;q22)” and “Acute myeloid leukemia, t(15;17)(q22;q11-12)”. We will convert this classification into an object-oriented hierarchy, such that both entries above will be subclasses within a parent class “Acute myeloid leukemia”. This will enable us to include malignancy data from sources not adhering precisely to the ICD-O guidelines. We will assess integrated data set structures to determine when this hierarchical methodology will be necessary. Literature citations will follow the MEDLINE standard (76). Assembled expression sets will then undergo quality control routines to assure integrity (Aim 3).

Phase II: Ontology expansion. Subsequently, we will expand the ontology to include additional detail and scope, which will largely be dictated by the content of newly identified data sets. Because of the modular nature of the ontology, expansions to the vocabulary and syntax can be made easily, making this approach highly scalable. We will primarily expand the ontology in two areas: tumor classification and data source.

Introduction of malignancy sub-classifications. Integrated data sets may include sub-classifications within a malignancy class, such as the clinical stage, histological information, site, clinico-biological marker data, event frequency, and/or cell line/animal strain. We will expand our tumor classification ontology to reflect these parameters. For example, the portion of the expression syntax relating to malignancy could be expanded to:

in FREQUENCY of HISTOLOGY STAGE SITE TYPE MALIGNANCY with MARKER(S)

a specific instance of which could translate to something like:

in 70% of undifferentiated IV-S adrenal primary neuroblastomas with triploidy

Staging information would adhere to the American Joint Committee on Cancer guidelines (77).
Each class would require creating a standardization and consolidation algorithm, which would translate a library of terms such as “9 of 12 tumors” to “75%” or “late-stage neuroblastoma” to “stage III or IV neuroblastoma”.

Inclusion of literature. In Phase II, we will explore using the cancer literature itself to build additional expressions. Optimally, we would use natural language text processing (NLP) to directly extract and build expressions from abstracts or full-text cancer research articles (78,79). However, the lack of effective NLP tools for biomedicine places comprehensive text mining beyond the scope of the current proposal. As an approximation, we will parse the literature database using the Medical Subject Headings (MeSH) controlled vocabulary provided by MEDLINE (76). We will acquire CANCERLIT, the cancer-specific subset of MEDLINE from the National Library of Medicine (57,80,81). During the course of selecting variables for each class in our evidence ontology, we will match MALIGNANCY and ACTION variable names with existing MeSH terms (e.g. “Chromosome Deletion”). We will also search CANCERLIT record titles and abstracts for gene/protein names. We will write a pattern-matching algorithm to compare the ACTION and MALIGNANCY vocabularies, as well as the eGenome name/aliases list, against the title, abstract, and MeSH headings of each CANCERLIT record. The set of all publications with one or more matches in each of these three categories will be compiled, and a subset familiar to the laboratory and advisory board will be evaluated as a validation set. As the ontology expands, additional classes will likely be eligible for cross-referencing to MEDLINE. Matching records will be related to their corresponding evidence expressions through the SOURCE class.

Computational Considerations. Aim 1 is largely dependent upon computational approaches, but no step requires a high level of computational expertise.
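As one illustration of how modest these steps are, the term standardization described above (“9 of 12 tumors” to “75%”, “late-stage neuroblastoma” to “stage III or IV neuroblastoma”) could be sketched as follows. This is a minimal sketch under stated assumptions: the function names, the regular expression, and the one-entry synonym table are hypothetical, and a real term library would be far larger. Python is used here for illustration only.

```python
import re

# Matches counts written as "<n> of <m>", e.g. "9 of 12 tumors".
FRACTION = re.compile(r"(\d+)\s+of\s+(\d+)\b")

# Hypothetical synonym library; the single entry is the example from the text.
STAGE_SYNONYMS = {"late-stage": "stage III or IV"}


def standardize_frequency(text):
    """Return a whole-number percentage string for an 'n of m' count,
    or None when no usable count is present."""
    match = FRACTION.search(text)
    if match is None:
        return None
    numerator, denominator = int(match.group(1)), int(match.group(2))
    if denominator == 0 or numerator > denominator:
        return None  # leave malformed counts for manual review
    return f"{round(100 * numerator / denominator)}%"


def standardize_stage(text):
    """Replace free-text stage phrases with their standardized wording."""
    for phrase, standard in STAGE_SYNONYMS.items():
        text = text.replace(phrase, standard)
    return text


print(standardize_frequency("9 of 12 tumors"))
print(standardize_stage("late-stage neuroblastoma"))
```

Returning `None` rather than guessing keeps unparseable terms visible, so they can be added to the term library instead of being silently mistranslated.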
The agent-based retrieval of core data sets, parsing of the data sets, extraction of evidence expressions, and CANCERLIT parsing and pattern matching all can be accomplished using straightforward programming in Perl. The CANCERLIT database (~5 GB) will be manipulated using the 8-processor p660 server in our Bioinformatics Core Facility (which the PI directs).

Potential Difficulties/Alternatives. Certain candidate data sets may have restrictive data usage policies. We will explore these by direct consultation with the database curators. Otherwise, we will either obtain a relevant, unrestricted use subset of the data set, or provide query links through our website (Aim 2). Certain core data sets might not be comprehensive for some classes. Because well-established and commonly observed events are more prevalent in the literature, we anticipate that our evidence set will be inclusive of these events (e.g. RB1 mutations in retinoblastoma), and thus will serve as the basis for a more systematic, NLP-based approach in the future. If it is evident that the evidence expression library is underrepresenting certain molecular aspects, we will explore developing a limited NLP approach, where certain exact phrases are identified in the literature (such as “protein X methylates protein Y”). The ACTION class may not be comprehensively defined by the central data sets. If this occurs, we will explore alternate sources to either augment or completely provide definition of such class variables. For the ACTION class, candidate sources include the MEDLINE MeSH headings and the Gene Ontology Consortium’s GO terms (76,82). The latter is a dynamic controlled vocabulary of the molecular function, biological process and cellular component of organismal elements. GO is comprehensive but not cancer-specific, so it would require modification. We are in the planning stage of incorporating GO into eGenome.

Specific Aim 2: Integrate this evidence set with existing molecular and clinical data.
Approach. Aim 2 will consist of identifying key additional molecular and clinical data sets for inclusion, defining integration strategies for each data set, and developing a relational database management system (RDBMS) to capture and relate this information.

Linking to eGenome. eGenome content is currently being expanded to include proteomic and functional information. We will use eGenome as a comprehensive set of molecular objects, which will link to cGenome via the ELEMENT class (gene/transcript/protein name). This relationship will relate the evidence expressions to all molecular information regarding each ELEMENT (e.g. genomic sequence, DNA clones, chromosomal position, polymorphisms, transcripts, protein structure, interacting molecules). This relationship allows molecular-based queries of the evidence. Thus, searching for “9p21 and primary central nervous system lymphomas (PCNSL)” immediately provides genomic and proteomic information regarding CDKN2A (p16), which would be related to 9p21 through eGenome and to PCNSL through the evidence expression:

Gene CDKN2A is homozygously deleted in PCNSL according to Cobbers et al., Brain Pathol (1998)

The query would ask the database for all objects localized to the cytogenetic band 9p21 that are also related to PCNSL. The result would provide a list of these objects, including CDKN2A, which if selected further would list genomic and proteomic data for CDKN2A itself.

Additional molecular data sets. We will integrate additional relevant data sets to add depth to cGenome. Candidate sets will be identified in consultation with the advisory board, from personal knowledge, and by periodic literature and Internet searches. We will initially target data sets pertaining to gene mutations, chromosomal abnormalities, and expression profiles. Appendix Table 1 lists 58 such target databases. To effectively manage dependencies, we will usually link databases rather than importing data.
For databases with search interfaces, we will replicate the query URL. For example, the cGenome NF1 record will display a hyperlink that searches for NF1 in the NF1 mutation database. A few databases will warrant partial or full import into cGenome, such as the CGAP EST/SAGE data (50,51). We will communicate with all data providers to facilitate data linking and/or data exchange, and to assist with data management, maintenance, and dependency issues. For most sources, the core integrator will be either a gene/protein symbol or a malignancy type. Over time, newly available data sets will be evaluated for integration.
Clinical data. We will identify and investigate candidate clinical data sets in conjunction with the advisory board. Target clinical data sets include clinical trials listing services, the SEER epidemiology data, pediatric cancer registries, the NCI 3D drug structure database, malignancy summary texts, NIH-funded grants, and clinical practice guidelines (Appendix Table 1). This integration will revolve around the set of malignancies we construct (Aim 1). The majority of these data sets will integrate via specific links from cGenome records, such as one-click searching from the cGenome AML record for all registered clinical trials pertaining to AML.
Creation of a database structure. We will expand the eGenome RDBMS for this project. The eGenome and cGenome web interfaces will be separate and will link to each other through common elements (e.g. genes implicated in malignancy), but both will be served by a single database. Records for molecular objects already exist within eGenome. These molecular tables will link through the ELEMENT class of the evidence expression syntax. ELEMENTS will be many-to-many related with the remaining classes of the cGenome ontology (Figure 1).
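
As a minimal sketch of how the ELEMENT link could support the “9p21 and PCNSL” query described earlier, the following uses Python's built-in sqlite3 module as a stand-in for the proposed Oracle RDBMS. The table and column names (element, malignancy, evidence, cytoband) are hypothetical illustrations, not the actual eGenome or cGenome schema. The evidence table plays the role of the many-to-many link between the ELEMENT and MALIGNANCY classes.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with a hypothetical, simplified schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE element (            -- molecular objects (eGenome side)
            id INTEGER PRIMARY KEY,
            symbol TEXT UNIQUE NOT NULL,
            cytoband TEXT NOT NULL
        );
        CREATE TABLE malignancy (
            id INTEGER PRIMARY KEY,
            name TEXT UNIQUE NOT NULL
        );
        CREATE TABLE evidence (           -- evidence expressions (cGenome side)
            element_id INTEGER NOT NULL REFERENCES element(id),
            malignancy_id INTEGER NOT NULL REFERENCES malignancy(id),
            action TEXT NOT NULL,
            reference TEXT NOT NULL
        );
    """)
    conn.executemany(
        "INSERT INTO element (symbol, cytoband) VALUES (?, ?)",
        [("CDKN2A", "9p21"), ("NF1", "17q11.2")],
    )
    conn.executemany(
        "INSERT INTO malignancy (name) VALUES (?)",
        [("PCNSL",), ("AML",)],
    )
    # The single evidence expression quoted in the text.
    conn.execute("""
        INSERT INTO evidence (element_id, malignancy_id, action, reference)
        SELECT e.id, m.id, 'homozygously deleted',
               'Cobbers et al., Brain Pathol (1998)'
        FROM element e, malignancy m
        WHERE e.symbol = 'CDKN2A' AND m.name = 'PCNSL'
    """)
    return conn


def elements_at_band_in_malignancy(conn, cytoband, malignancy):
    """Return objects localized to a band that are also related to a malignancy."""
    rows = conn.execute("""
        SELECT DISTINCT e.symbol, ev.action, ev.reference
        FROM element e
        JOIN evidence ev ON ev.element_id = e.id
        JOIN malignancy m ON m.id = ev.malignancy_id
        WHERE e.cytoband = ? AND m.name = ?
        ORDER BY e.symbol
    """, (cytoband, malignancy))
    return rows.fetchall()


if __name__ == "__main__":
    demo = build_demo_db()
    print(elements_at_band_in_malignancy(demo, "9p21", "PCNSL"))
```

In this sketch the positional data live in the element table and the cancer-specific observation lives in the evidence table, so one join answers a question that mixes both kinds of information.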
The remaining integrated data sets will populate the appropriate tables for each class: mutational databases will relate to genes and/or malignancies depending upon their focus; transcriptional profiles will relate to genes; genomic abnormalities will relate to the ACTION class; literature will relate to the REFERENCE class; clinical information will relate to the MALIGNANCY class.
Project management. The number of databases being considered for inclusion is similar to the number currently integrated by eGenome. As such, we recognize the dynamic nature of the data sets and our critical dependency upon them. Accordingly, both global project management and component-based dependency management will be accorded high priority. Each proposed addition will be assessed as to its impact upon all other components of the project. We will construct a dependency map that quantitatively defines the interrelationships among components, which will be used to identify which components are potentially affected when an existing component is altered. As many of the data sets are dynamic, we will periodically reintegrate them, prompted either by notification of new releases from source curators, or by automated periodic downloading, comparison, and integration of each data set.
Computational Considerations. Completion of Aim 2 will require a robust database/server architecture, multiple dedicated database servers, multiple web servers, and mail/ftp capabilities. All of this exists on a lesser scale for eGenome. We will scale up and modify our existing configuration for this project.
c/eGenome database. The database will be implemented using an Oracle-based RDBMS. Oracle is a mature, feature-rich, cross-platform SQL database system. All components of Oracle required for this project are available free of charge through a site license from the University of Pennsylvania (83).
Database tables and table inter-relationships will be created using the Oracle Database tools package. Algorithms for mining, analyzing, and formatting central data sets will be written in Perl on the staging server. cGenome’s application logic will be contained in stored procedures within the database, written in PL/SQL or Java. Additional algorithms for analyzing and exporting data will also be written within Oracle. Software configuration management and component dependency tracking will be handled using the UNIX-standard cvs and ‘make’ utilities, respectively. High-level project management will be facilitated using Details 3 (AEC).
Operating system. The hardware configuration will run Linux. Linux was chosen because it can be deployed on both the desktop and the high-performance server machines within the same configuration. Linux provides a tool-rich, stable, high-performance operating system with strong industry support. It also allows our database to be independent of any particular vendor’s hardware. Numerous stable, pre-packaged distributions of Linux for all major platforms are available (e.g. RedHat, SuSE).
Hardware configuration. The current state, expected initial launch, and expected year 3 hardware configurations are detailed in Appendix Figure 3. By year 3 of the project, we project the configuration to comprise two load-balanced database servers with built-in redundancy, a development server, three parallel web servers, and a mail/ftp server. All servers would be dedicated solely to cGenome/eGenome and housed in a limited-access, climate-controlled data center. The two main database servers will be connected to a 1 Gbps Ethernet Storage Area Network (SAN), which will facilitate rapid replication of the database. Also on the SAN will be a third database server used for development and staging, and to run the mass storage backup device.
Potential Difficulties/Alternatives.
The large number of potential data sources will need to be carefully selected and managed in terms of integration levels and data maintenance. To manage these challenges, we will: 1) work closely with our advisory board to prioritize candidate data sets; 2) prioritize data sets in the order genome > proteome > clinical; 3) integrate in a graduated fashion as necessary, in the order hyperlinks only > import of database identifiers only > complete integration; and 4) hold database integration/upkeep effort constant. This strategy has worked well for eGenome. We will also strictly require proper documentation of each new component of the structure, followed by integration into our dependency map for systematic site management. We have calculated approximate data serving rates that the proposed system configuration will be able to deliver. This rate appears sufficient to handle anticipated demand easily. If it is not, it is straightforward to scale cGenome by adding database servers in parallel, as the database is not transactional. If this is still insufficient, we can utilize our Bioinformatics Core Facility's 8-processor AIX-based server as an additional Oracle database engine. Our system architecture could also easily accommodate alternative database software. Unanticipated loss of eGenome support would not greatly affect cGenome operation or integration with any existing eGenome data, but it would potentially hinder the development of integrated functional molecular data. In this event, we would concentrate more upon genomic and functional genomic aspects of the project and would make every effort to supplement the cGenome data with the most critical proteomic/interaction data sets.
Specific Aim 3: Analyze and annotate the knowledgebase to improve accuracy and integrity.
Approach. As cGenome manages information only as a third party, much conflicting evidence cannot be disambiguated.
Therefore, our efforts will rely more upon identifying and annotating conflict than attempting to “correct” discrepant data. In some cases, persistent error patterns may afford an opportunity for direct intervention. Our strategy is to interconnect data quality/data integrity checks at all points of the process.
Standardization of data sets. We will carefully craft the initial scripts for reducing the central data sets to their evidence expressions. For each ontology class, we will create a “standard” vocabulary. This will be straightforward for those classes with pre-supplied standards (e.g. MALIGNANCY) but will require more effort for other classes (e.g. ACTION). The central data sets will first be converted to tab-delimited files, with each column corresponding to a data subtype that roughly translates to an ontology class. We will compare all entries in a data column against the corresponding class vocabulary list. Non-matching entries will be candidates either for translation to a vocabulary standard (e.g. n-myc to MYCN), or for modification of, or addition to, the vocabulary. These mismatches will be investigated manually to determine the class of mismatch and the proper solution. Each iteration with another added data set will serve as a training set, with the result being both a more precise and complete vocabulary and a more robust conversion script. Training for each class will initially be labor-intensive but will become more automated with every subsequent iteration. A similar procedure will be employed for integration of any peripheral data sets.
Identification of redundant terms. The data set reduction scripts will also be written to detect redundant terms, both before and after individual data sets have been translated into evidence expressions, and after each merge of expressions between data sets.
These terms may arise from completely redundant entries in two different data sets, or from related entries in the same or different data sets that are translated into the exact same expression (22q11 deletion in PNET vs. 22q11 loss of heterozygosity in PNET). We will carefully inspect the set of “identical” terms to ensure integrity. For example, “22q11 deletion” is not equivalent to “22q11.2 deletion” and requires hierarchical object orientation for resolution. This iterative training process will incrementally improve both the redundancy algorithm and the evidence expression set.
Internal quality control. We will use various standard techniques for ensuring that our own data maintains integrity over time. Quality control checks will be written into each stored procedure that the database or an external script executes, including tracking file sizes, tracking data record counts, and incorporating database field entry constraints. These procedures will greatly assist in reducing internally introduced errors.
Discrepancy annotation and resolution. We will annotate discrepancies by marking an expression variable as discrepant. Besides being investigated further, these discrepancies will be annotated on the web display. An example of this would be two independent and discrepant reports of the identical (2;13) translocation in alveolar rhabdomyosarcoma (ARMS): one involving PAX3 at 2q35 and another incorrectly reporting the breakpoint at 2q34. After annotation in the database (and subsequent follow-up after flagging), the associated web records would display a prominent notification such as: “This translocation breakpoint has been reported to map to two different cytogenetic positions.” Conflicting data will be manually investigated. In some cases, gross data errors or other recurrent patterns of conflict may be identified for certain data sources. These will be investigated further and corrected if possible, preferably in consultation with the data provider.
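
The standardization, redundancy, and discrepancy steps described above can be sketched together in a few lines of Python. This is an illustration under stated assumptions, not the proposed Perl implementation: the record layout, the synonym table, the vocabulary, and the sample records are all hypothetical, and the band comparison treats one cytogenetic band as containing another whenever it is a string prefix of it (so 22q11 contains 22q11.2), which is only a rough stand-in for the hierarchical resolution the text calls for.

```python
# Hypothetical class vocabulary and synonym table for the ELEMENT class.
ELEMENT_VOCABULARY = {"PAX3", "MYCN", "CDKN2A"}
ELEMENT_SYNONYMS = {"n-myc": "MYCN", "p16": "CDKN2A"}

# Illustrative records only; "report A" etc. are placeholder source labels.
SAMPLE_RECORDS = [
    {"element": "PAX3", "action": "translocated", "malignancy": "ARMS",
     "position": "2q35", "source": "report A"},
    {"element": "PAX3", "action": "translocated", "malignancy": "ARMS",
     "position": "2q34", "source": "report B"},
    {"element": "n-myc", "action": "amplified", "malignancy": "neuroblastoma",
     "position": "2p24", "source": "report C"},
    {"element": "MYCN", "action": "amplified", "malignancy": "neuroblastoma",
     "position": "2p24.3", "source": "report D"},
    {"element": "geneX", "action": "deleted", "malignancy": "PNET",
     "position": "22q11", "source": "report E"},
]


def standardize(term, vocabulary, synonyms):
    """Return the vocabulary standard for a term, or None if review is needed."""
    if term in vocabulary:
        return term
    return synonyms.get(term.lower())


def bands_compatible(a, b):
    """Simplified hierarchy test: one band is a prefix of the other."""
    return a.startswith(b) or b.startswith(a)


def annotate(records, vocabulary, synonyms):
    """Merge redundant expressions and flag positional discrepancies.

    Returns (merged, unresolved): merged maps (element, action, malignancy)
    to its positions, sources, and a 'discrepant' flag; unresolved lists the
    element names that matched neither the vocabulary nor a known synonym.
    """
    merged = {}
    unresolved = []
    for rec in records:
        element = standardize(rec["element"], vocabulary, synonyms)
        if element is None:
            unresolved.append(rec["element"])  # queue for manual inspection
            continue
        key = (element, rec["action"], rec["malignancy"])
        entry = merged.setdefault(key, {"positions": [], "sources": []})
        if rec["position"] not in entry["positions"]:
            entry["positions"].append(rec["position"])
        entry["sources"].append(rec["source"])
    for entry in merged.values():
        pos = entry["positions"]
        # Discrepant if any two reported positions are not nested.
        entry["discrepant"] = any(
            not bands_compatible(a, b)
            for i, a in enumerate(pos)
            for b in pos[i + 1:]
        )
    return merged, unresolved


if __name__ == "__main__":
    merged, unresolved = annotate(
        SAMPLE_RECORDS, ELEMENT_VOCABULARY, ELEMENT_SYNONYMS
    )
    for key, entry in merged.items():
        print(key, entry)
    print("needs review:", unresolved)
```

With the sample records, the two PAX3 reports merge into one expression that is flagged as discrepant (2q35 vs. 2q34), the n-myc and MYCN reports merge without a flag because 2p24 contains 2p24.3, and the unrecognized name is set aside for manual review rather than guessed at.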
Conflicting expressions. We will explore annotating evidence statements that seemingly conflict biologically. We will study the ACTION class to identify contrasting terms, such as over-expressed/under-expressed, amplified/deleted, or phosphorylated/dephosphorylated. If such contrasting terms appear in otherwise identical expressions, they may constitute opposing observations worthy of annotation. We will then analyze the evidence expression set for examples of ACTION-contrasted expressions and study these further. Annotations of this type approximate expert review commentary; however, these automated conflict annotations will require careful intervention.
Computational Considerations. Aim 3 requires only a moderate level of computational expertise. All of the data checking routines can be accomplished using straightforward programming in Perl.
Potential Difficulties/Alternatives. Our conflict detection/resolution strategy will likely miss some errors, but the rate should be lower than for the component data sources. We are prepared to accept a certain low level of error and will pursue conflict resolution only if globally beneficial. Most evidence expressions will have supportive literature citations, which are themselves sources of conflict resolution for end-users.
Specific Aim 4: Create a web resource for public dissemination of the compiled information.
Approach. We seek both to deliver the critical information pertaining to a user’s specific interest immediately, and to provide an extended network of links to relevant supportive data stored elsewhere on the Internet. This resource will be freely available without restrictions to the public via the Internet.
Website structure. The basic layout of the web resource will follow the eGenome model, which employs a dynamic data query/data result schema integrated with static support content.
The cGenome site will include a variety of search interfaces that produce object-specific data records; introductory, navigation, and methodology sections; a data repository; and an interwoven help/tutorial section. Global organization, layout, search/retrieval strategies, and feature additions will be reviewed with the advisory board. Site design, layout, and authoring will be performed using a combination of software packages (Macromedia, Adobe).
Query interface. Fields within the ELEMENT, ACTION, MALIGNANCY, and SOURCE classes will be indexed. This will allow users to search for any combination of molecular and clinical terms, such as “manuscripts citing transcripts over-expressed in advanced stage germ cell tumors” or “SNPs within genes with inactivating mutations in osteosarcoma with active clinical trials”. The ontology syntax will guide query interface construction. For example, a malignancy pop-up window would list all malignancy types within our malignancy vocabulary, and the user could select the one of interest to narrow the query parameters. We will provide Boolean string text search and both text- and ideogram-defined genomic localization search interfaces. We will also develop a customizable query page where a user can input any combination of molecular and/or clinical terms. Query result options will include choices for sorting or selecting data according to different criteria (e.g. chromosomal location, presumed protein function), allowing a user to customize the result formatting. Our advisory board will play a key role in conceptualizing these interfaces.
Query results. Query results will display all collected information about the object queried. There will be two initial record types: Molecular Objects (genes/transcripts/proteins) and Malignancies.
Molecular Object records will include object name, aliases, description, and type; genomic position; polymorphisms; DNA clones; cancer-specific aberrations; transcripts, ESTs, tissue distribution, and transcriptional profiles; protein function; interacting molecules; pathways; and protein structures. The Molecular Object records will also list all evidence expressions with which the object is associated, grouped by malignancy. In the example “CDKN2A is homozygously deleted in PCNSL (Cobbers et al., Brain Pathol 8:263-276, 1998)”, CDKN2A would be linked to the eGenome CDKN2A record, PCNSL would be linked to the cGenome PCNSL record, and the reference would be linked to its PubMed entry. Malignancy records would include a text summary of the malignancy, subtypes, stages, clinical and biological markers, epidemiological data, clinical trials, NIH-funded grants, and a list of all evidence expressions pertaining to the malignancy, grouped by molecular object type. For both record types, a portion of this information will be listed directly in the object record, some will be summarized versions of (and linked to) full corresponding records in eGenome (including our genomic graphical viewer), and some will link to external databases. External links will be object-specific whenever possible and will be created on-the-fly by the database. Summary tables, which would be returned if more than one entry fit a query, would give a brief summary of each matching record.
Supportive content. Considerable effort will be devoted to designing an easy-to-use website and to adding sufficient support material that is accessible and intelligible. Foremost will be a series of help pages covering all aspects of the site. All input and output pages will have direct links to the proper help pages or to term definitions. We will also include overview and methods sections, a site map, navigation tools, and an interactive “getting started” tutorial.
We will expand our existing eGenome email-based support and feedback management systems to track and respond to cGenome user questions. We will also create a data repository containing the entire database holdings to support power users and facilitate data sharing.
Site management. The initial and subsequent releases will be beta-tested by in-house staff using a variety of operating system/browser platforms. Beta testing will employ a routine incorporating a standard set of page accesses and queries, with actual and expected results compared. We will track the operating systems and browsers used by c/eGenome site visitors and will aim for compatibility with the most popular combinations. After in-house beta testing, we will release the site to our advisory board and their laboratories for further testing. We will log all visits and requests using the software package Analog (84). Most importantly, the website and its components will be included in our dependency management system (Aim 2).
Resource promotion. Our institution is strongly supportive of this project (see Appendix). We will work with various departments in our institution to broaden exposure to the resource in the biomedical community and the general public. This will include press releases, scientific publications, scientific meeting announcements, and email/website post notifications.
Computational Considerations. Completion of Aim 4 will require a robust web hosting configuration that seamlessly interfaces with the c/eGenome database architecture. We will scale up and modify the existing eGenome configuration for this project. cGenome is strongly supported by our Information Technology Department (see letter of support), which will assist with the network aspects of this project when needed.
Hosting hardware and software. When mature, cGenome will consist of two scalable, load-balanced web servers (Aim 2 and Appendix Figure 3).
For web serving, we will employ AOLserver hosted within a UNIX environment (85). AOLserver is available on virtually all UNIX-like operating systems, allowing cGenome to be hardware-independent, and it can be interfaced with Oracle using a third-party database driver (86).
Web requests. User queries posted through the website to the database will be handled by presentation logic scripts written in Tcl. All query returns will be HTML generated dynamically (on-the-fly) by these Tcl scripts. Other page requests will be retrievals of static HTML files. Email messages and ftp requests will be handled by UNIX operating system-supplied software. We will also employ hyperlink validity software to automatically determine whether hyperlinks to various databases retain integrity (87).
Network and security. All servers will be connected to the LAN, currently 100BaseT, with a dedicated hub to the intranet backbone. Internally, the network consists of a 1 Gbps fibre ring connecting all main hubs. The Internet gateway is currently two load-balanced DS3 45 Mbps connections with redundant main routing stations and ISPs. The servers will be protected by a dual load-balanced firewall (Check Point).
Site maintenance. All machines will be supplied with emergency backup power as well as a UPS backup power unit (Symmetra 8kVA, APC). All servers will reboot automatically if power is lost and then restored, and all c/eGenome services will recommence automatically upon reboot. We will employ redundant scripts to poll the web and database servers at 1-minute intervals. Consecutive failures will result in automated notification of multiple staff members. Remote reboot hardware will allow system administrators to reboot the servers by telephone. The loss of a single machine will not disrupt service because each service (ftp, http, database) will be provided by two servers.
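
The polling scheme described above (1-minute checks, notification after consecutive failures) can be sketched as follows. This is a hedged illustration in Python rather than the scripts the project would actually deploy: the FailureMonitor class, the threshold of two consecutive failures, and the notify callback are assumptions introduced here, since the text does not specify how many failures trigger a notification or how staff are contacted.

```python
import time
from urllib.error import URLError
from urllib.request import urlopen


def http_ok(url, timeout=10):
    """Return True if the URL answers with a 2xx/3xx status, else False."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (URLError, OSError):
        return False


class FailureMonitor:
    """Track consecutive check failures and notify once at a threshold."""

    def __init__(self, check, notify, threshold=2):
        self.check = check          # callable returning True when healthy
        self.notify = notify        # callable receiving the failure count
        self.threshold = threshold  # assumed value; not given in the text
        self.consecutive_failures = 0

    def poll_once(self):
        """Run one check; reset the counter on success, count on failure."""
        if self.check():
            self.consecutive_failures = 0
            return True
        self.consecutive_failures += 1
        # Notify exactly when the threshold is reached, not on every
        # later failure, to avoid flooding staff during a long outage.
        if self.consecutive_failures == self.threshold:
            self.notify(self.consecutive_failures)
        return False


def run(monitor, interval_seconds=60, cycles=None):
    """Poll at a fixed interval; cycles=None polls indefinitely."""
    done = 0
    while cycles is None or done < cycles:
        monitor.poll_once()
        done += 1
        if cycles is None or done < cycles:
            time.sleep(interval_seconds)
```

A web-server monitor could then be built as `FailureMonitor(lambda: http_ok(url), notify)` for a given `url`, with `notify` sending email or a page to the relevant staff. Running the same script from two machines would give the redundancy the text mentions.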
Full on-site and off-site backups of all hard drives will be provided by a Dell PowerVault 120T Autoloader. Maintenance of the site beyond the funded period would likely be through subsequent and parallel grants; however, a static cGenome site could be hosted indefinitely at minimal expense. Potential Difficulties/Alternatives. We project this hosting configuration to easily meet all server demand; however, we have support from our institution to provide increased bandwidth solutions, such as upgrading the Internet gateway capacity or creating a dedicated 2nd gateway to the ISP, if necessary. Our web server configuration is linearly scalable. If linear additions to the number of web servers is inadequate, we would instead configure the Bioinformatics Core Facility’s 8-processor server as the primary web server. We have consciously created an architecture that is hardware- and software-independent. For each web configuration component, numerous alternatives are available. The hardware can be supplied by a number of vendors. Various other web server and scripting language combinations are also possible. Overall timeline Preliminary identification of cancer genomic data sources has already been completed. We anticipate the initial phase of data integration, consolidation, and management to be complete by 12 months. Site design would take place in parallel. We project an initial cGenome launch date at approximately 18 months. Further data additions and improvements to the site, configuration, database, and ontology would occur in year 2 and would be the primary focus of the work in year 3. Page 14 References 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. 12. 13. 14. 15. 16. 17. 18. 19. 20. 21. 22. 23. 24. 25. 26. Lander ES, Linton LM, Birren B, Nusbaum C, Zody MC, Baldwin J, Devon K, Dewar K, Doyle M, FitzHugh W, et al.: Initial sequencing and analysis of the human genome. Nature, 409: 860-921, 2001. Meldrum D: Automation for genomics, part one: preparation for sequencing. 
Genome Res, 10: 95-104, 2000. Meldrum D: Automation for genomics, part two: sequencers, microarrays, and future trends. Genome Res, 10: 1081-1092, 2000. Einarson MB: Encroaching genomics: adapting large-scale science to small academic laboratories. Mol Cell Biol, 20: 5184-5195, 2000. Benton D: Bioinformatics--principles and potential of a new multidisciplinary tool. Trends Biotechnol, 14: 261-272, 1996. Smith TF: Functional genomics--bioinformatics is ready for the challenge. Proceedings of the International Conference on Intelligent Systems for Molecular Biology; ISMB, 8: 239-250, 2000. Boguski MS: Biosequence exegesis. Science, 286: 453-455, 1999. Buckley I: Oncogenes and the nature of malignancy. Adv Cancer Res, 50: 71-94, 1988. Weinberg RA: Oncogenes, antioncogenes, and the molecular bases of multistep carcinogenesis. Cancer Res, 49: 3713-3721, 1989. Knudson AG: Antioncogenes and human cancer. Proc Natl Acad Sci U S A, 90: 10914-10921, 1993. Inherited Disease Genes Identified by Positional Cloning. National Human Genome Research Institute. Available at: http://genome.nhgri.nih.gov/clone/. Ballabio A: The rise and fall of positional cloning? Nat Genet, 3: 277-279, 1993. Collins FS: Positional cloning: Let's not call it reverse anymore. Nat Genet, 1: 3-6, 1992. Collins FS: Positional cloning moves from perditional to traditional. Nat Genet, 9: 347-350, 1995. Cairns J: The interface between molecular biology and cancer research. Mutat Res, 462: 423-428, 2000. Jones PA, and Laird PW: Cancer epigenetics comes of age. Nat Genet, 21: 163-167, 1999. Cox PM, and Goding CR: Transcription and cancer. Br J Cancer, 63: 651-662, 1991. Zhang W, Laborde PM, Coombes KR, Berry DA, and Hamilton SR: Cancer genomics: promises and complexities. Clin Cancer Res, 7: 2159-2167, 2001. Brinkmann U, Vasmatzis G, Lee B, Yerushalmi N, Essand M, and Pastan I: PAGE-1, an X chromosome-linked GAGE-like gene that is expressed in normal and neoplastic prostate, testis, and uterus. 
Proc Natl Acad Sci U S A, 95: 10757-10762, 1998. Hough CD, Sherman-Baust CA, Pizer ES, Montz FJ, Im DD, Rosenshein NB, Cho KR, Riggins GJ, and Morin PJ: Large-scale serial analysis of gene expression reveals genes differentially expressed in ovarian cancer. Cancer Res, 60: 6281-6287, 2000. Huminiecki L, and Bicknell R: In silico cloning of novel endothelial-specific genes. Genome Res, 10: 1796-1806, 2000. Loging WT, Lal A, Siu IM, Loney TL, Wikstrand CJ, Marra MA, Prange C, Bigner DD, Strausberg RL, and Riggins GJ: Identifying potential tumor markers and antigens by database mining and rapid expression screening. Genome Res, 10: 1393-1402, 2000. Nakamura TM, Morin GB, Chapman KB, Weinrich SL, Andrews WH, Lingner J, Harley CB, and Cech TR: Telomerase catalytic subunit homologs from fission yeast and human. Science, 277: 955-959, 1997. Scheurle D, DeYoung MP, Binninger DM, Page H, Jahanzeb M, and Narayanan R: Cancer gene discovery using digital differential display. Cancer Res, 60: 4037-4043, 2000. Schmitt AO, Specht T, Beckmann G, Dahl E, Pilarsky CP, Hinzmann B, and Rosenthal A: Exhaustive mining of EST libraries for genes differentially expressed in normal and tumour tissues. Nucleic Acids Res, 27: 4251-4260, 1999. Cuticchia AJ: Future vision of the GDB human genome database. Hum Mutat, 15: 62-67, 2000. Page 15 27. Dausset J, Cann H, Cohen D, Lathrop M, Lalouel JM, and White R: Centre d'etude du polymorphisme humain (CEPH): collaborative genetic mapping of the human genome. Genomics, 6: 575-577, 1990. 28. Rodriguez-Tome P, and Lijnzaad P: RHdb: the Radiation Hybrid database. Nucleic Acids Res, 29: 165166, 2001. 29. Cheung VG, Nowak N, Jang W, Kirsch IR, Zhao S, Chen XN, Furey TS, Kim UJ, Kuo WL, Olivier M, et al.: Integration of cytogenetic landmarks into the draft sequence of the human genome. Nature, 409: 953-958, 2001. 30. 
McPherson JD, Marra M, Hillier L, Waterston RH, Chinwalla A, Wallis J, Sekhon M, Wylie K, Mardis ER, Wilson RK, et al.: A physical map of the human genome. Nature, 409: 934-941, 2001. 31. Genome Monitoring Table. European Bioinformatics Institute. Available at: http://www.ebi.ac.uk/genomes/mot/. 32. Deloukas P, Schuler GD, Gyapay G, Beasley EM, Soderlund C, Rodriguez-Tomé P, Hui L, Matise TC, McKusick KB, Beckmann JS, et al.: A physical map of 30,000 human genes. Science, 282: 744-746, 1998. 33. Wheeler DL, Church DM, Lash AE, Leipe DD, Madden TL, Pontius JU, Schuler GD, Schriml LM, Tatusova TA, Wagner L, et al.: Database resources of the National Center for Biotechnology Information: 2002 update. Nucleic Acids Res, 30: 13-16, 2002. 34. Boguski MS, and Schuler GD: ESTablishing a human transcript map. Nat Genet, 10: 369-371, 1995. 35. Gasteiger E, Jung E, and Bairoch A: SWISS-PROT: connecting biomolecular knowledge via a protein database. Curr Issues Mol Biol, 3: 47-55, 2001. 36. Edgar R, Domrachev M, and Lash AE: Gene Expression Omnibus: NCBI gene expression and hybridization array data repository. Nucleic Acids Res, 30: 207-210, 2002. 37. Sherry ST, Ward MH, Kholodov M, Baker J, Phan L, Smigielski EM, and Sirotkin K: dbSNP: the NCBI database of genetic variation. Nucleic Acids Res, 29: 308-311, 2001. 38. Westbrook J, Feng Z, Jain S, Bhat TN, Thanki N, Ravichandran V, Gilliland GL, Bluhm W, Weissig H, Greer DS, et al.: The Protein Data Bank: unifying the archive. Nucleic Acids Res, 30: 245-248, 2002. 39. The Wellcome Trust Sanger Institute. The Wellcome Trust Sanger Institute. Available at: http://www.sanger.ac.uk/. 40. Stoesser G, Baker W, van den Broek A, Camon E, Garcia-Pastor M, Kanz C, Kulikova T, Leinonen R, Lin Q, Lombard V, et al.: The EMBL Nucleotide Sequence Database. Nucleic Acids Res, 30: 21-26, 2002. 41. CancerGene. Infobiogen, EMBnet, Villejuif, France. Available at: http://caroll.vjf.inserm.fr/cancergene/. 42. NCI Human OncoChip Genes List. 
Center for Information Technology and the Division of Clinical Sciences, National Cancer Institute, Bethesda MD, USA. Available at: http://nciarray.nci.nih.gov/gi_acc_ug_title.shtml.
43. Resources for molecular cytogenetics. Cytogenetics Unit, Sezione di Genetica DAPEG, University of Bari, Italy. Available at: http://www.biologia.uniba.it/rmc/.
44. Chromosomal Abnormalities in Cancer. Cytogenetics Laboratory, Waisman Center, University of Wisconsin, Madison WI, USA. Available at: http://www.waisman.wisc.edu/cytogenetics/BMproject/CancerCyto.htmlx.
45. Online CGH Tumor Database. CGH group, Institute of Pathology, University Hospital Charité, Berlin, Germany. Available at: http://amba.charite.de/~ksch/cghdatabase/start.htm.
46. Huret JL, Dessen P, and Bernheim A: Atlas of genetics and cytogenetics in oncology and haematology, updated. Nucleic Acids Res, 29: 303-304, 2001.
47. Cancer Genome Anatomy Project. National Cancer Institute. Available at: http://www.ncbi.nlm.nih.gov/ncicgap/.
48. Strausberg RL: The Cancer Genome Anatomy Project: new resources for reading the molecular signatures of cancer. J Pathol, 195: 31-40, 2001.
49. Krizman DB, Wagner L, Lash A, Strausberg RL, and Emmert-Buck MR: The Cancer Genome Anatomy Project: EST sequencing and the genetics of cancer progression. Neoplasia, 1: 101-106, 1999.
50. Lash AE, Tolstoshev CM, Wagner L, Schuler GD, Strausberg RL, Riggins GJ, and Altschul SF: SAGEmap: a public gene expression resource. Genome Res, 10: 1051-1060, 2000.
51. Lal A, Lash AE, Altschul SF, Velculescu V, Zhang L, McLendon RE, Marra MA, Prange C, Morin PJ, Polyak K, et al.: A public database for gene expression in human cancers. Cancer Res, 59: 5403-5407, 1999.
52. Mitelman Database of Chromosome Aberrations in Cancer. National Cancer Institute. Available at: http://cgap.nci.nih.gov/Chromosomes/Mitelman.
53. Emmert-Buck MR, Strausberg RL, Krizman DB, Bonaldo MF, Bonner RF, Bostwick DG, Brown MR, Buetow KH, Chuaqui RF, Cole KA, et al.: Molecular profiling of clinical tissue specimens: feasibility and applications. Am J Pathol, 156: 1109-1115, 2000.
54. Kirsch IR, and Ried T: Integration of cytogenetic data with genome maps and available probes: present status and future promise. Semin Hematol, 37: 420-428, 2000.
55. CancerNet. National Cancer Institute. Available at: http://www.cancer.gov.
56. Physician Data Query. National Cancer Institute. Available at: http://www.cancer.gov/cancer_information/pdq/.
57. CANCERLIT. National Cancer Institute. Available at: http://www.cancer.gov/cancer_information/cancer_literature/.
58. Hankey BF: The surveillance, epidemiology, and end results program: a national resource. J Natl Cancer Inst, 91: 1017-1024, 1999.
59. Gregory SG, Vaudin M, Wooster R, Mischke D, Coleman M, Porter C, Schutte BC, White P, and Vance JM: Report of the Fourth International Workshop on Human Chromosome 1 Mapping. Cytogenet Cell Genet, 78: 154-182, 1998.
60. Vance JM, Matise TC, Wooster R, Schutte BC, Bruns GAP, Van Roy N, Brodeur GM, Tao YX, Gregory S, Weith A, et al.: Report of the Third International Workshop on Human Chromosome 1 Mapping 1997. Cytogenet Cell Genet, 78: 153-182, 1997.
61. eGenome. The Children's Hospital of Philadelphia. Available at: http://genome.chop.edu.
62. White PS, Sulman EP, Porter CJ, and Matise TC: A comprehensive view of human chromosome 1. Genome Res, 9: 978-988, 1999.
63. Jones MM: With funding decreased, researchers rely on donations to complete GDB 2000. Bioinform, 4: 1-9, 2000.
64. White PS, and Matise TC: Genomic mapping and mapping databases. In: Baxevanis AD, and Ouellette BFF (ed), Bioinformatics: A practical guide to the analysis of genes and proteins, pp. 111-153, Wiley (New York), 2001.
65. Human Chromosomes Index. Human Genome Organization, London, UK. Available at: http://www.gdb.org/hugo/chr1/.
66. Human Chromosome Launchpad. Oak Ridge National Laboratory, Oak Ridge TN, USA. Available at: http://www.ornl.gov/hgmis/launchpad/chrom01.html.
67. Sulman EP, Dumanski JP, White PS, Zhao H, Maris JM, Mathiesen T, Bruder C, Cnaan A, and Brodeur GM: Identification of a consistent region of allelic loss on 1p32-34 in meningiomas: correlation with increased morbidity. Cancer Res, 58: 3226-3230, 1998.
68. White PS, Thompson PM, Seifried BA, Sulman EP, Jensen SJ, Guo C, Maris JM, Hogarty MD, Allen C, Biegel JA, et al.: Detailed molecular analysis of 1p36 in neuroblastoma. Med Pediatr Oncol, 36: 37-41, 2001.
69. YAC/BAC FISH Mapping Resource. Max-Planck-Institute for Molecular Genetics. Available at: http://www.mpimg-berlin-dahlem.mpg.de/~cytogen/.
70. Hudson TJ, Stein LD, Gerety SS, Ma J, Castle AB, Silva J, Slonim DK, Baptista R, Kruglyak L, Xu SH, et al.: An STS-based map of the human genome. Science, 270: 1945-1954, 1995.
71. Matise TC, Porter CJ, Buyske S, Cuticchia AJ, Sulman EP, and White PS: Systematic evaluation of map quality: human chromosome 22. Am J Human Genet, in press.
72. White JA, McAlpine PJ, Antonarakis S, Cann H, Eppig J, Frazer K, Frezal J, Lancet D, Nahmias J, Pearson P, et al.: Guidelines for Human Gene Nomenclature. Genomics, 45: 468-471, 1997.
73. Mitelman F: ISCN 1995. An international system for human cytogenetic nomenclature. Karger (Basel), 1995.
74. Teebi SA: 10th International Hugo mutation database initiative meeting, 19 April 2001, Edinburgh, Scotland. Hum Mutat, 3: 179-184, 2001.
75. International Classification for Diseases In Oncology Supplemental ICD-O-3 List. National Cancer Institute. Available at: http://training.seer.cancer.gov/module_icdo3/icd_o_3_lists.html.
76. McEntyre J, and Lipman D: PubMed: bridging the information gap. CMAJ, 164: 1317-1319, 2001.
77. Fleming ID, Cooper JS, Henson DE, Hutter RVP, Kennedy BJ, Murphy GP, O'Sullivan B, Sobin LH, and Yarbro JW: AJCC cancer staging manual. Lippincott (Philadelphia), 1997.
78. Krauthammer M: Using BLAST for identifying gene and protein names in journal articles. Gene, 259: 245-252, 2000.
79. Rzhetsky A: A knowledge model for analysis and simulation of regulatory networks. Gene, 259: 245-252, 2000.
80. Leasing data from the National Library of Medicine. National Cancer Institute. Available at: http://www.nlm.nih.gov/databases/leased.html.
81. Perry DJ: Keeping up with the cancer literature--PDQ ACCESS. Journal of Medical Practice Management, 4: 41-46, 1988.
82. Consortium TGO: Creating the gene ontology resource: design and implementation. Nat Genet, 25: 25-29, 2000.
83. Office of Software Licensing. University of Pennsylvania. Available at: http://www.businessservices.upenn.edu/softwarelicenses/products/oracle.html.
84. Analog: Logfile analysis software package. Stephen Turner. Available at: http://www.analog.cx/.
85. AOLserver: America Online's Open-Source web server. AOLserver.com. Available at: http://www.aolserver.com/.
86. Oracle Driver for AOLserver. ArsDigita. Available at: http://www.arsdigita.com/free-tools/oracledriver.html.
87. Checkbot: HTML link verification tool. Degraaff.org. Available at: http://degraaff.org/checkbot/.
88. @Cancer. @Life.com. Available at: http://atcancer.com/cancer/.
89. Cancer Genetics Web. CancerIndex.org. Available at: http://www.cancerindex.org/geneweb/geneweb.htm.
90. cancernetwork.com. SCP Communications, Inc. Available at: http://www.cancernetwork.com/.
91. cancersourceMD.com. iKnowMed, Inc. Available at: http://www.cancersourceMD.com.
92. Children's Cancer Web. CancerIndex.org. Available at: http://www.cancerindex.org/.
93. Children's Oncology Group. Available at: http://www.childrensoncologygroup.org/.
94. Collins KA: The CRISP system: an untapped resource for biomedical research project information. Bull Med Lib Assoc, 11: 31-35, 2000.
95. Cancer Genes by Chromosomal Locus. Waldman Laboratory, UCSF Cancer Center, University of California at San Francisco Medical Center, San Francisco CA, USA. Available at: http://cc.ucsf.edu/people/waldman/GENES/completechroms.html.
96. HmutDB. European Bioinformatics Institute. Available at: http://www.ebi.ac.uk/mutations/central/.
97. Krawczak M, Ball EV, Fenton I, Stenson PD, Abeysinghe S, Thomas N, and Cooper DN: Human gene mutation database-a biomedical information and research resource. Hum Mutat, 15: 45-51, 2000.
98. Beroud C, Collod-Beroud G, Boileau C, Soussi T, and Junien C: UMD (Universal mutation database): a generic software to build and analyze locus-specific databases. Hum Mutat, 15: 86-94, 2000.
99. Gottlieb B, Beitel LK, Lumbroso R, Pinsky L, and Trifiro M: Update of the androgen receptor gene mutations database. Hum Mutat, 14: 103-114, 1999.
100. GeneDis: APC-Familial Adenomatous Polyposis Database. Tel Aviv University. Available at: http://life2.tau.ac.il/GeneDis/Tables/APC/apc.html.
101. Laurent-Puig P, Beroud C, and Soussi T: APC gene: database of germline and somatic mutations in human tumors and cell lines. Nucleic Acids Res, 26: 269-270, 1998.
102. Ataxia-Telangiectasia Mutation Database. Virginia Mason University. Available at: http://www.vmresearch.org/atm.htm.
103. Vasen HF, Mecklin JP, Khan PM, and Lynch HT: The International Collaborative Group on HNPCC. Anticancer Res, 14: 1661-1664, 1994.
104. Momand J, Jung D, Wilczynski S, and Niland J: The MDM2 gene amplification database. Nucleic Acids Res, 26: 3453-3459, 1998.
105. NNFF International NF1 Genetic Mutation Analysis Consortium Mutation Database. National Neurofibromatosis Foundation. Available at: http://www.nf.org/nf1gene/nf1gene.home.html.
106. NF2 Mutation Map. Harvard University. Available at: http://neuro-trials1.mgh.harvard.edu/nf2/.
107. Hahn H, Wicking C, Zaphiropoulous PG, Gailani MR, Shanley S, Chidambaram A, Vorechovsky I, Holmberg E, Unden AB, Gillies S, et al.: Mutations of the human homolog of Drosophila patched in the nevoid basal cell carcinoma syndrome. Cell, 85: 841-851, 1996.
108. RB1-Gene Mutation Database. Institut für Humangenetik. Available at: http://www.dlohmann.de/Rb/mutations.htm.
109. Cariello NF, Douglas GR, Gorelick NJ, Hart DW, Wilson JD, and Soussi T: Databases and software for the analysis of mutations in the human p53 gene, human hprt gene and both the lacI and lacZ gene in transgenic rodents. Nucleic Acids Res, 26: 198-199, 1998.
110. Soussi T, Dehouche K, and Beroud C: p53 website and analysis of p53 gene mutations in human cancer: forging a link between epidemiology and carcinogenesis. Hum Mutat, 15: 105-113, 2000.
111. p53 Mutation Database. Tokyo University. Available at: http://p53.genome.ad.jp/.
112. Hernandez-Boussard T, Rodriguez-Tome P, Montesano R, and Hainaut P: IARC p53 mutation database: a relational database to compile and analyze p53 mutations in human tumors and cell lines. International Agency for Research on Cancer. Hum Mutat, 14: 1-8, 1999.
113. Tuberous Sclerosis Mutation Database. University of Wales. Available at: http://archive.uwcm.ac.uk/uwcm/mg/tsc_db/.
114. TSC Variation Database. Harvard University. Available at: http://zk.bwh.harvard.edu/ts/.
115. Beroud C, Joly D, Gallou C, Staroz F, Orfanelli MT, and Junien C: Software and database for the analysis of mutations in the VHL gene. Nucleic Acids Res, 26: 256-258, 1998.
116. Jeanpierre C, Beroud C, Niaudet P, and Junien C: Software and database for the analysis of mutations in the human WT1 gene. Nucleic Acids Res, 26: 271-274, 1998.
117. The Cancer Genome Project. The Wellcome Trust Sanger Institute. Available at: http://www.sanger.ac.uk/CGP/.
118. Human Cancer Genome Project. Ludwig Institute, Brazil. Available at: http://www.ludwig.org.br/ORESTES/.
119. Caron H, van Schaik B, van der Mee M, Baas F, Riggins G, van Sluis P, Hermus M-C, van Asperen R, Boon K, Voûte PA, et al.: The human transcriptome map: clustering of highly expressed genes in chromosomal domains. Science, 291: 1289-1292, 2001.
120. CenterWatch clinical trials listing service. CenterWatch.com.
Available at: http://www.centerwatch.com/.
121. CyberMedTrials. CyberMedTrials.org. Available at: http://www.cybermedtrials.org.
122. Milne GW: National Cancer Institute Drug Information System 3D database. J Chem Inf Comput Sci, 34: 1219-1224, 1994.
123. Anonymous: Web site offers database of national guidelines. Genes Dev, 15: 839-844, 2001.

Appendix Table 1: Candidate databases to integrate into cGenome

Database | Host | Reference

Data formatting standards
American Joint Committee on Cancer: Cancer Staging Manual | AJCC | (77)
HUGO Gene Nomenclature Committee | HUGO | (72)
International Classification of Disease for Oncology | WHO | (75)
International System for Human Cytogenetic Nomenclature | ISCN | (73)
PubMed/MEDLINE | Natl. Library of Medicine | (76)

General cancer databases/resources for Phase I inclusion
@cancer | @Life.com | (88)
Cancer Genetics Web | CancerIndex.org | (89)
CancerGene | Infobiogen | (41)
CANCERLIT | NCI | (57)
cancernetwork.com | SCP Communications | (90)
CancerSourceMD.com | iKnowMed | (91)
Children’s Cancer Web | CancerIndex.org | (92)
Children’s Oncology Group | COG | (93)
Computer Retrieval of Information on Scientific Projects | NIH | (94)
dbEST Oncogene/Tumor Suppressor Gene List | NCBI | (33)
NCI Human OncoChip Genes List | NCI | (42)
The Waldman Group: Cancer Genes by Chromosomal Locus | UCSF | (95)

General mutation databases/initiatives
HmutDB | EMBL | (96)
Human Gene Mutation Database | U. of Wales | (97)
HUGO Mutation Database Initiative | HUGO/EBI | (74)
Online Mendelian Inheritance in Man | NCBI | (33)
Universal Mutation Database Initiative | U. Rene Descartes | (98)

Locus or disease-specific mutation databases
Androgen Receptor Mutations Database | McGill U. | (99)
GeneDis: APC-Familial Adenomatous Polyposis Database | Tel Aviv U. | (100)
APC Mutation Database | Institut Curie | (101)
Ataxia-Telangiectasia Mutation Database | Virginia Mason U. | (102)
International Collaborative Group on HNPCC | U. Leiden | (103)
MDM2 Database | City of Hope | (104)
MEN1 Mutation Database | GENEM | (98)
NNFF International NF1 Genetic Mutation Analysis Consortium | NNFF | (105)
NF2 Mutation Map | Harvard U. | (106)
PTCH Mutation Database | Karolinska Institutet | (107)
RB1-Gene Mutation Database | Institut für Humangenetik | (108)
SUR1 Mutation Database | U. Rene Descartes | (98)
Human p53 database | U. North Carolina | (109)
p53 Database | Institut Curie | (110)
p53 Mutation Database | U. Tokyo | (111)
International Agency for Research on Cancer TP53 database | IARC | (112)
Tuberous Sclerosis Mutation Database | U. Wales | (113)
TSC Variation Database | Harvard U. | (114)
VHL Mutation Database | U. Rene Descartes | (115)
WT1 Mutation Database | U. Rene Descartes | (116)

Chromosomal abnormalities
Atlas of Genetics and Cytogenetics in Oncology and Haematology | Infobiogen | (46)
Cancer Chromosome Aberration Project | NCI | (54)
Cancer Genome Project | Sanger Institute | (117)
Chromosomal Abnormalities in Cancer | U. Wisconsin | (44)
Online CGH Tumor Database | U. Charité | (45)
Resources for Molecular Cytogenetics | U. Bari | (43)

Transcriptional Profiling Data
Cancer Genome Anatomy Project (ESTs, SAGE) | NCI | (47)
Human Cancer Genome Project (ESTs) | Ludwig Institute, Brazil | (118)
The Human Transcriptome Map (SAGE) | U. Amsterdam | (119)

Clinically-oriented resources
Clinical Trials Listing Service | CenterWatch.com | (120)
CyberMedTrials | CyberMedtrials.org | (121)
Drug Information System 3D database | NCI | (122)
National Guideline Clearinghouse | AHRQ | (123)
PDQ Cancer Information Summaries | NCI | (56)
PDQ Clinical Trials Database | NCI | (56)
Surveillance, Epidemiology, and End Results Program | NCI | (58)