Column Oriented Databases Vs Row Oriented Databases

advertisement

ITK - 478

Column Oriented Databases Vs Row

Oriented Databases

Special Interest Activity

Submitted by: Venkat. K & Rakesh. K

11/19/2007 Updated: 12/03/2007

Column Oriented Databases Vs Row Oriented Databases

Table of Contents

Introduction ......................................................................................................................... 3

MonetDB............................................................................................................................. 4

Monet Architecture ......................................................................................................... 5

VOC Data set: ................................................................................................................. 8

Queries in MonetDB: ........................................................................................................ 11

TPC-H Benchmark............................................................................................................ 13

TPC-H DDL .................................................................................................................. 14

For MonetDB ................................................................................................................ 14

For Oracle ..................................................................................................................... 17

TPC-H Data Insertion ....................................................................................................... 19

MonetDB/SQL .................................................................................................................. 21

Queries .............................................................................................................................. 21

LucidDB ............................................................................................................................ 26

Main features: ................................................................................................................... 26

Query Optimization and Execution .............................................................................. 29

Data insertion ................................................................................................................ 32

Advantages and Disadvantages: ....................................................................................... 34

Summary: ...................................................................................................................... 34

References: ........................................................................................................................ 35

Page |2

Column Oriented Databases Vs Row Oriented Databases

Introduction [1][2]

Database that we find extensively is a row oriented database which stores data in rows. It

has high performance for the OLTP i.e. online transaction processing. This document

talks about Column oriented database that stores data by column.

After leading the team that worked on the C-Stotre, an open source column oriented

database Mike Stonebraker along with 6 other have come up with the market product of

the column oriented database, Vertica. Mike Stonebraker is considered as one of the

major contributor to the column oriented database.

The document takes you through different column oriented databases that are in the

market and the installation procedures and details of two such databases.

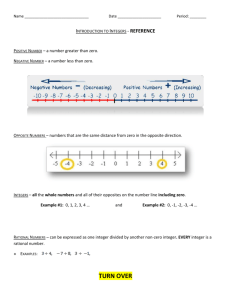

The following example illustrates the difference between them

Consider the Project table which has three column pjno, status, pjtitle and pjno is the

primary key.

Pjno

5555

6666

7777

Status

A

C

A

Pjtitle

Marketing

Inventory

Order entry

The row store implementation of the table is stored in the file as following. Here values

of different attributes from the same tuple are stored consecutively.

5555,A,Marketing;6666,C,Inventory;7777,A,Order Entry;

The column store implementation of the table is stored in the file as following. Here the

values of same attributes are stored consecutively. The column oriented database stores

data in columns and are joined with the help of the IDs. It also has the feature of

compressing the data by column wise with the help of projections. It stores the repeated

data in the columns as one.

5555,6666,7777; A,C,A; Marketing, Inventory, Order entry;

The row store architecture is well suited for OLTP operations where as column store

architecture is suited for OLAP operations.

The following are the advantages [1]

Page |3

Column Oriented Databases Vs Row Oriented Databases

While row stores are extremely "write friendly", in that adding a row of data to a

table requires a simple file appending I/O, column stores perform better for

complex read queries. For tables with many columns and queries that use only

few of them, a column store can confine its reads to the columns required,

whereas a row store must read the entire table. In addition, the storage efficiency

properties of column stores can greatly reduce the number of actual disk reads

required to satisfy a query.

Column data is of uniform type. Therefore it is much easier to compress than row

data, and NULL values need never be stored. Row stores cannot omit columns

from any row and still achieve direct random access to a table, because random

access requires that the data for each row be of fixed width. In column stores, this

is trivially true because of type uniformity within a single column's storage,

allowing omission of NULL values and therefore efficient storage of wide,

sparsely populated tables. In practice, row stores can and do implement tables

with variable-width rows, but this require either some form of indirect access or

giving up random access in favor of some type of fast ordered access, e.g. B-trees

(used in the architecture of lucid DB). However, both storage efficiency and code

complexity of such approaches generally compare unfavorably to

implementations of sparse column stores.

The same above concept is used in all of the following databases and in the databases we

are going to talk about.

Open source C-store

MonetDB

LucidDB

Metakit

Proprietary

BigTable

Sybase IQ

Xplain

KDB

DataProbe

We performed our special interest activity on MonetDB and LucidDB.

MonetDB [4]

MonetDB is an open source high-performance database management system developed at

the National Research Institute for Mathematics and Computer Science (CWI; Centrum

voor Wiskunde en Informatica) in the Netherlands. It was designed to provide high

performance on complex queries against large databases, e.g. combining tables with

hundreds of columns and multi-million rows. MonetDB has been successfully applied in

Page |4

Column Oriented Databases Vs Row Oriented Databases

high-performance applications for data mining, OLAP, GIS, XML Query, text and

multimedia retrieval. MonetDB internal data representation is memory-based, relying on

the huge memory addressing ranges of contemporary CPUs, and thus departing from

traditional DBMS designs involving complex management of large data stores in limited

memory.

MonetDB is one of the first database systems to focus its query optimization effort on

exploiting CPU caches.

The MonetDB family consists of:

MonetDB/SQL: the relational database solution

MonetDB/XQuery: the XML database solution

MonetDB Server: the multi-model database server

Monet Architecture

1. Query language parser – reads SQL (structured query language) from the user and

checks for the syntax.

2. Query rewriter – rewrites the query into some normal form.

3. Query optimizer – translates the logical description of the query into a query plan.

4. Query executor – executes the physical query and produces the result.

5. Access methods – system services to access data from the tables.

6. Buffer manager – handles caching of table data stored in the table.

7. Lock manager – system services for locking the transaction.

8. Recovery manager – when the transactions are commit it makes sures that data is

persistent and erases when there is not.

Page |5

Column Oriented Databases Vs Row Oriented Databases

The architecture emphasis that the multiple front ends can connect to the back end. We

can have relational, object oriented queries as front end and have MonetDB as back-end.

The intermediate language between the front end and back end is the MIL (Monet

Interpreter Language).

Query execution is divided into strategic and tactical phase. The strategic optimization is

done in front end while the tactical phase is done at run-time in the Monet Query

Executor.

Monet uses binary table model where are all the tables consists of exactly two columns.

These are called as Binary Association Tables. Each front end uses mapping rules to map

logical data model as seen as the end user onto binary tables in Monet. In case of

relational model relational tables are vertically fragmented, by storing each column from

a relational table in a separate BAT. The right column of the BATs holds the column

value and left column holds the row or object identifier.

Consider the following tables

A relational data model can be stored in Monet by splitting each relational table by

column. Each column becomes a BAT that holds the column values in the right column

(tail) and object identifier (OID) in left column (head). The relational tuples can be

reconstructed by taking all tail values of the column BATs with the same OID.

The tables are decomposed into following columns

Page |6

Column Oriented Databases Vs Row Oriented Databases

MIL is a procedural block-structured language with standard control structures like ifthen-else and while loops.

The following is the MIL translation of the SQL

Installation of MonetDB [5]

MonetDB is an open source database. It can be downloaded from this link

http://monetdb.cwi.nl/projects/monetdb//Download/index.html#SQL

The following are the tools for MonetDB

DBVisualizer

Squirrel

Page |7

Column Oriented Databases Vs Row Oriented Databases

Aqua Data Studio

iSQL

We used the DBVisualizer for our activity. The driver settings for it are

DBVisualizer

VOC Data set:

Exploring the wealth of functionality offered by MonetDB/SQL is best started using a toy

database. For this we use the VOC database which provides a peephole view into the

administrative system of an early multi-national company, the Vereenigde geoctrooieerde

Oostindische Compagnie (VOC for short - The (Dutch) East Indian Company)

established on March 20, 1602.

We used Oracle Server which is given access as part of the course with the following

details for connection using SqlDeveloper. We have also run the queries by running the

oracle in our system instead of college server using following credentials.

Connection Name: Test10G (You can give anything)

Username & Password (my computer ID and password)

Oracle is default

Connection type: Basic

Page |8

Column Oriented Databases Vs Row Oriented Databases

Scripts –

CREATE TABLE "voyages" (

"number"

integer NOT NULL,

"number_sup"

char(1) NOT NULL,

"trip"

integer,

"trip_sup"

char(1),

"boatname"

varchar(50),

"master"

varchar(50),

"tonnage"

integer,

"type_of_boat"

varchar(30),

"built"

varchar(15),

"bought"

varchar(15),

"hired"

varchar(15),

"yard"

char(1),

"chamber"

char(1),

"departure_date" date,

"departure_harbour" varchar(30),

"cape_arrival"

date,

"cape_departure" date,

"cape_call"

boolean,

"arrival_date"

date,

"arrival_harbour" varchar(30),

"next_voyage"

integer,

"particulars"

varchar(530)

);

CREATE TABLE "craftsmen" (

Page |9

Column Oriented Databases Vs Row Oriented Databases

"number"

integer NOT NULL,

"number_sup"

char(1)

NOT NULL,

"trip"

integer,

"trip_sup"

char(1),

"onboard_at_departure" integer,

"death_at_cape"

integer,

"left_at_cape"

integer,

"onboard_at_cape"

integer,

"death_during_voyage" integer,

"onboard_at_arrival" integer

);

CREATE TABLE "impotenten" (

"number"

integer NOT NULL,

"number_sup"

char(1)

NOT NULL,

"trip"

integer,

"trip_sup"

char(1),

"onboard_at_departure" integer,

"death_at_cape"

integer,

"left_at_cape"

integer,

"onboard_at_cape"

integer,

"death_during_voyage" integer,

"onboard_at_arrival" integer

);

CREATE TABLE "invoices" (

"number" integer,

"number_sup" char(1),

"trip"

integer,

"trip_sup" char(1),

"invoice" integer,

"chamber" char(1)

);

CREATE TABLE "passengers" (

"number"

integer NOT NULL,

"number_sup"

char(1)

NOT NULL,

"trip"

integer,

"trip_sup"

char(1),

"onboard_at_departure" integer,

"death_at_cape"

integer,

"left_at_cape"

integer,

"onboard_at_cape"

integer,

"death_during_voyage" integer,

"onboard_at_arrival" integer);

CREATE TABLE "seafarers" (

"number"

integer NOT NULL,

"number_sup"

char(1)

NOT NULL,

"trip"

integer,

"trip_sup"

char(1),

"onboard_at_departure" integer,

"death_at_cape"

integer,

"left_at_cape"

integer,

"onboard_at_cape"

integer,

"death_during_voyage" integer,

"onboard_at_arrival" integer

P a g e | 10

Column Oriented Databases Vs Row Oriented Databases

);

CREATE TABLE "soldiers" (

"number"

integer NOT NULL,

"number_sup"

char(1)

NOT NULL,

"trip"

integer,

"trip_sup"

char(1),

"onboard_at_departure" integer,

"death_at_cape"

integer,

"left_at_cape"

integer,

"onboard_at_cape"

integer,

"death_during_voyage" integer,

"onboard_at_arrival" integer

);

CREATE TABLE "total" (

"number"

integer NOT NULL,

"number_sup"

char(1)

NOT NULL,

"trip"

integer,

"trip_sup"

char(1),

"onboard_at_departure" integer,

"death_at_cape"

integer,

"left_at_cape"

integer,

"onboard_at_cape"

integer,

"death_during_voyage" integer,

"onboard_at_arrival" integer

);

ALTER TABLE "voyages" ADD PRIMARY KEY ("number", "number_sup");

ALTER TABLE "craftsmen" ADD PRIMARY KEY ("number", "number_sup");

ALTER TABLE "impotenten" ADD PRIMARY KEY ("number", "number_sup");

ALTER TABLE "passengers" ADD PRIMARY KEY ("number", "number_sup");

ALTER TABLE "seafarers" ADD PRIMARY KEY ("number", "number_sup");

ALTER TABLE "soldiers" ADD PRIMARY KEY ("number", "number_sup");

ALTER TABLE "total" ADD PRIMARY KEY ("number", "number_sup");

ALTER TABLE "craftsmen" ADD FOREIGN KEY ("number", "number_sup")

REFERENCES "voyages" ("number", "number_sup");

ALTER TABLE "impotenten" ADD FOREIGN KEY ("number", "number_sup")

REFERENCES "voyages" ("number", "number_sup");

ALTER TABLE "invoices" ADD FOREIGN KEY ("number", "number_sup")

REFERENCES "voyages" ("number", "number_sup");

ALTER TABLE "passengers" ADD FOREIGN KEY ("number", "number_sup")

REFERENCES "voyages" ("number", "number_sup");

ALTER TABLE "seafarers" ADD FOREIGN KEY ("number", "number_sup")

REFERENCES "voyages" ("number", "number_sup");

ALTER TABLE "soldiers" ADD FOREIGN KEY ("number", "number_sup")

REFERENCES "voyages" ("number", "number_sup");

ALTER TABLE "total" ADD FOREIGN KEY ("number", "number_sup")

REFERENCES "voyages" ("number", "number_sup");

Queries in MonetDB:

P a g e | 11

Column Oriented Databases Vs Row Oriented Databases

We performed the following ran the following queries on MonetDB, Oracle (SQL

Developer) in both college and one on our system. The time given in the box is the time

taken from their respective GUI clients.

The data used for the queries and schema is directly taken from the MonetDB website

under VOC copy.

Note:

1. Time provided for the each query varies each time we run the query.

2. We have left out the Query plan as DBvisualizer used for the MonetDB is an trail

pack it doesn’t support the query plan feature.

Query

Oracle:

Execution time

.819

0.505(from the system)

select count(*) from “passengers”;

MonetDB:

.0106

select count(*) from Passengers;

Query

Execution time

Oracle:

3.513

select * from “craftsmen”;

MonetDB:

1.007(from the system)

0.406

select * from craftsmen;

Query

Oracle:

Execution time

4.97

SELECT number from “impotenten”;

0.505(from the system)

MonetDB:

.093

SELECT "number" from impotenten;

Query

Oracle:

Execution time

1.0041

SELECT COUNT(*) FROM "voyages"

WHERE "particulars" LIKE '%_recked%';

0.153(from the system)

MonetDB:

0.39

SELECT COUNT(*) FROM voyages

WHERE particulars LIKE '%_recked%';

P a g e | 12

Column Oriented Databases Vs Row Oriented Databases

Query

Execution time

Oracle:

0.56

SELECT "chamber", CAST(AVG("invoice") AS integer) AS

average

FROM "invoices"

WHERE "invoice" IS NOT NULL

GROUP BY "chamber"

ORDER BY average desc;

MonetDB:

0.507(from the system)

0.313

SELECT chamber,CAST(AVG(invoice) AS integer) AS average

FROM invoices

WHERE invoice IS NOT NULL

GROUP BY chamber

ORDER BY average DESC;

Query

Execution time

Oracle:

SELECT voyages.number FROM voyages inner join craftsmen

on voyages.number = craftsmen.number and

voyages.number_sup = craftsmen.number_sup

WHERE voyages.particulars LIKE '%_recked%';

0.506

MonetDB:

SELECT voyages.number FROM voyages inner join craftsmen

on voyages.number = craftsmen.number and

voyages.number_sup = craftsmen.number_sup

WHERE voyages.particulars LIKE '%_recked%';

0.26

0.504(from the system)

TPC-H Benchmark

The TPC Benchmark (TPC-H) is a decision support benchmark. It consists of a suite of

business oriented ad-hoc queries and concurrent data modifications. The queries and the

data populating the database have been chosen to have broad industry-wide relevance.

This benchmark illustrates decision support systems that examine large volumes of data,

execute queries with a high degree of complexity, and give answers to critical business

questions. It is stated that the results of the TPC-H benchmark ran by the MonetDB

company is 10 times faster in Monetdb when compare to MySQL and PostgreSQL.

P a g e | 13

Column Oriented Databases Vs Row Oriented Databases

TPC-H

SCHEMA

TPC-H DDL

Because of the difference in data types support in MonetDB and Oracle we needed to

define DDL separately.

For MonetDB

create table part(

p_partkey int ,

p_name varchar(55),

p_mfgr char(25),

p_brand char(10),

p_type varchar(25),

p_size int,

p_container char(10),

p_retailprice decimal(10,5),

p_comment varchar(23));

P a g e | 14

Column Oriented Databases Vs Row Oriented Databases

alter table part add primary key(p_partkey);

create table supplier(

s_suppkey int ,

s_name varchar(25),

s_address varchar(100),

s_nationkey int,

s_phone varchar(20),

s_acctbal decimal(10,5),

s_comment varchar2(120));

alter table supplier add primary key(s_suppkey);

ALTER TABLE supplier ADD FOREIGN KEY (s_nationkey)

REFERENCES nation(n_nationkey );

create table partsupp(

ps_partkey int,

ps_suppkey int,

ps_availqty int,

ps_supplycost decimal(10,5),

ps_comment varchar(220));

alter table partsupp add primary key(ps_partkey, ps_suppkey);

ALTER TABLE partsupp ADD FOREIGN KEY (ps_partkey)

REFERENCES part (p_partkey );

ALTER TABLE partsupp ADD FOREIGN KEY (ps_suppkey)

REFERENCES supplier (s_suppkey );

create table customer(

c_custkey int,

c_name varchar(30),

c_address varchar(100),

c_nationkey int,

c_phone varchar(30),

c_acctbal decimal(10,5),

c_mktsegment varchar(20),

c_comment varchar(150));

alter table customer add primary key(c_custkey);

ALTER TABLE customer ADD FOREIGN KEY (c_nationkey)

REFERENCES nation(N_nationkey );

Create table nation(

n_nationkey int,

n_name varchar(50),

n_regionkey int,

n_comment varchar(200));

alter table nation add primary key(n_nationkey);

P a g e | 15

Column Oriented Databases Vs Row Oriented Databases

ALTER TABLE nation ADD FOREIGN KEY (n_regionkey)

REFERENCES region (r_regionkey );

Create table region(

R_regionkey int,

R_name varchar(25),

R_comment varchar(180));

alter table region add primary key(R_regionkey);

create table orders(

o_orderkey int,

o_custkey int,

o_orderstatus varchar(10),

o_totalprice decimal(10,5),

o_orderdate varchar(20),

o_orderpriority varchar(20),

o_clerk varchar(20),

o_shippriority int,

o_comment varchar(100));

alter table orders add primary key(o_orderkey);

ALTER TABLE orders ADD FOREIGN KEY (o_custkey)

REFERENCES customer (c_custkey );

Create table lineitem(

L_orderkey int,

L_partkey int,

L_suppkey int,

L_linenumber int,

L_quantity decimal(10,5),

L_extendedprice decimal(10,5),

L_discount decimal(10,5),

L_tax decimal(10,5),

L_returnflag varchar(10),

L_linestatus varchar(10),

L_shipdate varchar(20),

L_commitdate varchar(20),

L_receiptdate varchar(20),

L_shipinstruct varchar(30),

L_shipmode varchar(20),

L_comment varchar(50));

alter table lineitem add primary key(l_orderkey,l_linenumber);

ALTER TABLE lineitem ADD FOREIGN KEY (L_orderkey)

REFERENCES orders (o_orderkey );

P a g e | 16

Column Oriented Databases Vs Row Oriented Databases

ALTER TABLE lineitem ADD FOREIGN KEY (L_partkey)

REFERENCES part (p_partkey );

ALTER TABLE lineitem ADD FOREIGN KEY (L_partkey,l_suppkey)

REFERENCES partsupp (ps_partkey,ps_suppkey );

ALTER TABLE lineitem ADD FOREIGN KEY (L_suppkey)

REFERENCES supplier (s_suppkey );

For Oracle

create table part(

p_partkey integer ,

p_name varchar(55),

p_mfgr char(25),

p_brand char(10),

p_type varchar2(25),

p_size integer,

p_container char(10),

p_retailprice decimal(10,5),

p_comment varchar2(23));

alter table part add primary key(p_partkey);

create table supplier(

s_suppkey integer ,

s_name varchar(25),

s_address varchar(100),

s_nationkey integer,

s_phone varchar(20),

s_acctbal decimal(10,5),

s_comment varchar2(120));

alter table supplier add primary key(s_suppkey);

ALTER TABLE supplier ADD FOREIGN KEY (s_nationkey)

REFERENCES nation(n_nationkey );

create table partsupp(

ps_partkey integer,

ps_suppkey integer,

ps_availqty integer,

ps_supplycost decimal(10,5),

ps_comment varchar2(220));

alter table partsupp add primary key(ps_partkey, ps_suppkey);

ALTER TABLE partsupp ADD FOREIGN KEY (ps_partkey)

REFERENCES part (p_partkey );

ALTER TABLE partsupp ADD FOREIGN KEY (ps_suppkey)

REFERENCES supplier (s_suppkey );

create table customer(

c_custkey integer,

P a g e | 17

Column Oriented Databases Vs Row Oriented Databases

c_name varchar2(30),

c_address varchar2(100),

c_nationkey integer,

c_phone varchar2(30),

c_acctbal decimal(10,5),

c_mktsegment varchar2(20),

c_comment varchar2(150));

alter table customer add primary key(c_custkey);

ALTER TABLE customer ADD FOREIGN KEY (c_nationkey)

REFERENCES nation(N_nationkey );

Create table nation(

n_nationkey integer,

n_name varchar2(50),

n_regionkey integer,

n_comment varchar2(200));

alter table nation add primary key(n_nationkey);

ALTER TABLE nation ADD FOREIGN KEY (n_regionkey)

REFERENCES region (r_regionkey );

Create table region(

R_regionkey integer,

R_name varchar2(25),

R_comment varchar2(180));

alter table region add primary key(R_regionkey);

create table orders(

o_orderkey integer,

o_custkey integer,

o_orderstatus varchar2(10),

o_totalprice decimal(11,5),

o_orderdate varchar2(20),

o_orderpriority varchar2(20),

o_clerk varchar2(20),

o_shippriority integer,

o_comment varchar2(100));

alter table orders add primary key(o_orderkey);

ALTER TABLE orders ADD FOREIGN KEY (o_custkey)

REFERENCES customer (c_custkey );

Create table lineitem(

L_orderkey integer,

L_partkey integer,

P a g e | 18

Column Oriented Databases Vs Row Oriented Databases

L_suppkey integer,

L_linenumber integer,

L_quantity decimal(10,5),

L_extendedprice decimal(10,5),

L_discount decimal(10,5),

L_tax decimal(10,5),

L_returnflag varchar2(10),

L_linestatus varchar2(10),

L_shipdate varchar2(20),

L_commitdate varchar2(20),

L_receiptdate varchar2(20),

L_shipinstruct varchar2(30),

L_shipmode varchar2(20),

L_comment varchar2(50));

alter table lineitem add primary key(l_orderkey,l_linenumber);

ALTER TABLE lineitem ADD FOREIGN KEY (L_orderkey)

REFERENCES orders (o_orderkey );

ALTER TABLE lineitem ADD FOREIGN KEY (L_partkey)

REFERENCES part (p_partkey );

ALTER TABLE lineitem ADD FOREIGN KEY (L_partkey,l_suppkey)

REFERENCES partsupp (ps_partkey,ps_suppkey );

ALTER TABLE lineitem ADD FOREIGN KEY (L_suppkey)

REFERENCES supplier (s_suppkey );

TPC-H Data Insertion

We have downloaded the reference dataset for all the tables in the schema from

www.tpc.prg. The reference data which we got was delimited by bars. So we had to use

some program which takes each token and insert in the specified column.

We used a Java Program to insert rows into the MonetDB as the tool which we are using

DbVisualzer(Trial Edition) did not allow us to import data. The following is the java

program to insert data into customer table

import java.util.*;

import java.io.*;

import java.sql.*;

public class customerscanner {

public static void main(String[] args) throws FileNotFoundException {

Scanner in = new Scanner(System.in);

String insertSQL = " INSERT

INTO "

+ " sys.customer "

+ " ( c_custkey,c_name,c_address, c_nationkey, c_phone, c_acctbal, " +

" c_mktsegment,c_comment)"

+" VALUES ( ";

String url = "jdbc:monetdb://localhost/demo";

Connection con;

P a g e | 19

Column Oriented Databases Vs Row Oriented Databases

Statement stmt;

try {

Class.forName("nl.cwi.monetdb.jdbc.MonetDriver");

} catch(java.lang.ClassNotFoundException e) {

System.err.print("ClassNotFoundException: ");

System.err.println(e.getMessage());

}

while(in.hasNext())

{

String sql = "";

String line = in.nextLine();

StringTokenizer tokenizer = new StringTokenizer(line);

String token = tokenizer.nextToken("|");

sql = insertSQL + token + ",";

token = tokenizer.nextToken("|");

sql += "'"+ token + "',";

token = tokenizer.nextToken("|");

sql += "'"+ token + "',";

token = tokenizer.nextToken("|");

sql += token + ",";

token = tokenizer.nextToken("|");

sql += "'"+ token + "',";

token = tokenizer.nextToken("|");

sql += token + ",";

token = tokenizer.nextToken("|");

sql += "'"+ token + "',";

token = tokenizer.nextToken("|");

sql += "'"+ token + "');";

System.out.println(sql);

try {

con

"monetdb");

//

=

DriverManager.getConnection(url,"monetdb",

System.out.println("Connected to the DB.");

// Create a Statement

stmt = con.createStatement();

//

//

//

P a g e | 20

// Execute the Statement

System.out.println("\nExecuting statement ...");

if(stmt.executeUpdate(sql)<1)

{

System.err.println("An error occurred");

}

System.out.println("table created");

// clean up

stmt.close();

con.close();

sql = "";

} catch(SQLException ex) {

System.err.println("SQLException: " + ex.getMessage());

Column Oriented Databases Vs Row Oriented Databases

}

}

}

}

The following is the code which runs the class and inserts in the specified table.

java -cp .;"C:\Program Files\CWI\MonetDB5\share\MonetDB\lib\monetdb-1.6-jdbc.jar" customerscanner

<"C:\Documents and Settings\friend\Desktop\478\referenceDataSet_2.5\TPCH250_sf1\customer.tbl.1"

For insertion in oracle we used SQLDeveloper which has import data option from excel

sheet. We converted delimited data into excel sheet and named the columns same as the

columns in the table. We then imported it and matched with columns in the table.

MonetDB/SQL

Queries

We performed different TPC_H queries on both MonetDB and Oracle for performance

analysis.

Business Question

The Pricing Summary Report Query provides a summary pricing report for all lineitems

shipped as of a given date. The ship date is 1998-12-01. The query lists totals for

extended price, discounted extended price, discounted extended price plus tax, average

quantity, average extended price, and average discount. These aggregates are grouped by

RETURNFLAG and LINESTATUS, and listed in ascending order of RETURNFLAG

and LINESTATUS. A count of the number of lineitems in each group is included [5].

Query:

select

l_returnflag,

l_linestatus,

sum(l_quantity) as sum_qty,

sum(l_extendedprice) as sum_base_price,

sum(l_extendedprice*(1-l_discount)) as sum_disc_price,

sum(l_extendedprice*(1-l_discount)*(1+l_tax)) as sum_charge,

avg(l_quantity) as avg_qty,

avg(l_extendedprice) as avg_price,

avg(l_discount) as avg_disc,

count(*) as count_order

from

lineitem

where

l_shipdate <= '1998-12-01'

group by

l_returnflag,

l_linestatus

P a g e | 21

Column Oriented Databases Vs Row Oriented Databases

order by

l_returnflag,

l_linestatus;

Response time :

Oracle – 0.177 sec /1.05897(from the system)

MonetDB – 0.063 sec

Query – 2

This query finds which supplier should be selected to place an order for a given part in a

given region.

Business question

The Minimum Cost Supplier Query finds, in a given region, for each part of a certain

type and size, the supplier who can supply it at minimum cost. If several suppliers in that

region offer the desired part type and size at the same (minimum) cost, the query lists the

parts from suppliers with the 100 highest account balances. For each supplier, the query

lists the supplier's account balance, name and nation; the part's number and manufacturer;

the supplier's address, phone number and comment information.

select s_acctbal, s_name, n_name, p_partkey, p_mfgr, s_address, s_phone, s_comment

from part, supplier, partsupp, nation, region

where

p_partkey = ps_partkey

and s_suppkey = ps_suppkey

and p_size > 1

and p_type like '%STEEL'

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'AFRICA'

and ps_supplycost = (

select

min(ps_supplycost)

from

partsupp, supplier,

nation, region

where

p_partkey = ps_partkey

and s_suppkey = ps_suppkey

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'AFRICA'

)

order by

s_acctbal desc,

n_name,

s_name,

p_partkey;

P a g e | 22

Column Oriented Databases Vs Row Oriented Databases

Response time:

Oracle – 0.764 sec /0.568 (from the System)

MonetDB – 0.016 sec

Query – 3

This query seeks relationships between customers and the size of their orders.

Business Question

This query determines the distribution of customers by the number of orders they have

made, including customers who have no record of orders, past or present. It counts and

reports how many customers have no orders, how many have 1, 2, 3, etc. A check is

made to ensure that the orders counted do not fall into one of several special categories of

orders. Special categories are identified in the order comment column by looking for a

particular pattern.

select c_custkey, count(*) as custdist

from (select c_custkey,count(o_orderkey)

from

customer left outer join orders on

c_custkey = o_custkey

and o_comment not like '%requests%'

group by c_custkey) c_counts

group by c_custkey

order by custdist desc,c_custkey desc;

Response Time

Oracle – 3.762 sec /0.5104(from the system)

MonetDB – 0.062 sec

Based on above queries it clearly shows that MonetDB is much faster than Oracle.

MonetDB More Funcrions

Profile Statement:

The profile statement gives out the execution time, optimize time, parser time for the

queries.

sql> set profile= true;

sql> select count(*)from tables;

sql> select * from profile;

P a g e | 23

Column Oriented Databases Vs Row Oriented Databases

Profile statement for the query 3 in the benchmark data, the optimize is 0 because we have not used any

indexes

Explain Statement:

The explain statement gives the code that is executed behind the query statements. As

said earlier the database is stored in bat files in the monetDB we can see the output of the

below statements involves different bat files.

sql>explain select count(*) from orders;

+------------------------------------------------------------------------------+

|function user.s2_3():void;

|

| _1:bat[:oid,:int]{rows=4000:lng,bid=23374} :=

:sql.bind("sys","orders","o_orderkey",0);

| _6:bat[:oid,:int]{rows=0:lng,bid=28316} :=

P a g e | 24

|

|

|

Column Oriented Databases Vs Row Oriented Databases

:sql.bind("sys","orders","o_orderkey",1);

|

| constraints.emptySet(_6);

|

| _6:bat[:oid,:int]{rows=0:lng,bid=28316} := nil;

|

| _8:bat[:oid,:int]{rows=0:lng,bid=27098} :=

|

:sql.bind("sys","orders","o_orderkey",2);

|

| constraints.emptySet(_8);

|

| _8:bat[:oid,:int]{rows=0:lng,bid=27098} := nil;

|

| _11{rows=4000:lng} := algebra.markT(_1,0@0);

|

| _1:bat[:oid,:int]{rows=4000:lng,bid=23374} := nil;

|

| _12{rows=4000:lng} := bat.reverse(_11);

|

| _11{rows=4000:lng} := nil;

|

| _13{rows=1:lng} := aggr.count(_12);

|

| _12{rows=4000:lng} := nil;

|

| sql.exportValue(1,"sys.","count_","int",32,0,6,_13,"");

|end s2_3;

|

|

Trace Statement:

This statement gives the time taken for each statement of the code behind file of the SQL

statement.

sql>trace select count(*) from orders;

+-------------+----------------------------------------------------------------+

|

0 usec |

| 78000 usec |

:

mdb.setTimer(_2=true)

|

_1:bat[:oid,:int] := sql.bind(_2="sys", _3="orders",

|_4="o_orderkey", _5=0)

|

| 94000 usec | _6:bat[:oid,:int] := sql.bind(_2="sys", _3="orders",

:

|_4="o_orderkey", _7=1)

|

|

|

| 31000 usec | constraints.emptySet(_6=<tmp_67234>bat[:oid,:int]{0})

| 31000 usec | _6:bat[:oid,:int] := nil;

|

| 16000 usec | _8:bat[:oid,:int] := sql.bind(_2="sys", _3="orders",

P a g e | 25

|

|

Column Oriented Databases Vs Row Oriented Databases

:

|_4="o_orderkey", _9=2)

|

| 62000 usec | constraints.emptySet(_8=<tmp_64732>bat[:oid,:int]{0})

| 32000 usec | _8:bat[:oid,:int] := nil;

|

|

| 31000 usec | _11 := algebra.markT(_1=<tmp_55516>bat[:oid,:int]{4000},

:

|_10=0@0)

|

|

| 31000 usec | _1:bat[:oid,:int] := nil;

|

| 31000 usec | _12 := bat.reverse(_11=<tmp_6231>bat[:oid,:oid]{4000})

| 63000 usec | _11 := nil;

|

|

| 31000 usec | _13 := aggr.count(_12=<~tmp_6231>bat[:oid,:oid]{4000})

| 31000 usec | _12 := nil;

|

|

+-------------+----------------------------------------------------------------+

| 4000

|

+-------------+----------------------------------------------------------------+

| 16000 usec | sql.exportValue(_7=1, _15="sys.", _16="count_", _17="int", |

:

|_18=32, _5=0, _19=6, _13=4000, _20="")

|640000 usec | user.s3_3()

|

|

+-------------+----------------------------------------------------------------+

Optimizer control:

We can also control on how we going to optimize using Optimizer control.

LucidDB [7]

LucidDB is the first and only open-source RDBMS purpose-built entirely for data

warehousing and business intelligence. Most database systems (both proprietary and

open-source) start life with a focus on transaction processing capabilities, then get

analytical capabilities bolted on as an afterthought (if at all). By contrast, every

component of LucidDB was designed with the requirements of flexible, highperformance data integration and sophisticated query processing in mind.

Main features:

Category

Storage

P a g e | 26

Feature

Benefits

Very high data compression rates for columns with

many repeated values; reduced I/O for queries which

Column-store tables

access only a subset of columns; greater cache

effectiveness

Column Oriented Databases Vs Row Oriented Databases

Automatically adapts to either bitmap or btree

representation depending on data distribution (even

using both in the same index for different portions of

Intelligent indexing the same table), yielding optimal data compression,

reduced I/O, and fast evaluation of boolean

expressions, without the need for a DBA to choose

index type

Supports read/write concurrency with snapshot

consistency, allowing readers to access a table while

Page-level

multi- data is being bulk loaded or updated; versioning at

versioning

page-level is much more efficient than transactional

multi-versioning schemes such as row-level

versioning or log-based page reconstruction

Avoids reading fact table rows which are not needed

Star join optimization

by query

Optimization Cost-based

join

ordering and index No hints required

selection

Can scale to number-crunch even the largest datasets

Hash

in limited RAM via skew-resistant disk-based

join/aggregation

partitioning

High performance and greater cache and disk

Coming

soon: effectiveness because LucidDB can almost always

Intelligent prefetch predict exactly which disk blocks are needed to

Execution

satisfy a query

Tables can be loaded directly from external sources

via SQL; no separate bulk loader utility is required

INSERT/UPSERT as (for performance, loads are never logged at the rowbulk load

level, yet are fully recoverable via page-level undo);

the SQL:2003 MERGE statement provides standard

upsert capability

Allows LucidDB to connect to heterogeneous

SQL/MED

external data sources via foreign data wrappers and

architecture

access their content as foreign tables

Allows foreign tables in any JDBC data source to be

JDBC foreign data

queried via LucidDB, with filters pushed down to the

wrapper

source where possible

Connectivity

Flat file foreign data Allows flat files (e.g. BCP or CSV format) to be

wrapper

queried as foreign tables via LucidDB

Allows new foreign data wrappers (e.g. for accessing

Pluggability

data from a web service) to be developed in Java and

hot-plugged into a running LucidDB instance

Allows new functions and transformations to be

Extensibility SQL/JRT architecture

developed in Java and hot-plugged into a running

P a g e | 27

Column Oriented Databases Vs Row Oriented Databases

User-defined

functions

User-defined

transformations

SQL:2003

JDBC

Standards

J2EE

LucidDB instance; LucidDB also comes with a

companion library of common ETL functions

(applib)

Allows the set of builtin functions to be extended

with custom user logic

Allows new table functions (such as custom logic for

data mining operators or CONNECT BY queries) to

be added to the system

Smooths migration of applications to and from other

DBMS products

Allows connectivity from popular front-ends such as

the Mondrian OLAP engine

Java architecture enables deployment of LucidDB

into a J2EE application server (just like hsqldb or

Derby); usage of Java as the primary extensibility

mechanism makes it a snap to integrate with the

many enterprise API's available

Architecture:

The core consists of a top-half implemented in Java and a bottom half implemented in

C++. This hybrid approach yields a number of advantages:

the Java portion provides ease of development, extensibility, and integration, with

managed memory reducing the likelihood of security exploits

P a g e | 28

Column Oriented Databases Vs Row Oriented Databases

the C++ portion provides high performance and direct access to low-level

operating system, network, and file system resources

the Java runtime system enables machine-code evaluation of SQL expressions via

a combination of Java code generation and just-in-time compilation (as part of

query execution)

The sections below provide high-level overviews of some of the most innovative

components.

In LucidDB, database tables are vertically partitioned and stored in a highly compressed

form. Vertical partitioning means that each page on disk stores values from only one

column rather than entire rows; as a result, compression algorithms are much more

effective because they can operate on homogeneous value domains, often with only a few

distinct values. For example, a column storing the state component of a US address only

has 50 possible values, so each value can be stored using only 6 bits instead of the 2-byte

character strings used in a traditional uncompressed representation.

Vertical partitioning also means that a query that only accesses a subset of the columns of

the referenced tables can avoid reading the other columns entirely. The net effect of

vertical partitioning is greatly improved performance due to reduced disk I/O and more

effective caching (data compression allows a greater logical dataset size to fit into a given

amount of physical memory). Compression also allows disk storage to be used more

effectively (e.g. for maintaining more indexes).

The companion to column store is bitmap indexing, which has well-known advantages

for data warehousing. LucidDB's bitmap index implementation takes advantage of

column store features; for example, bitmaps are built directly off of the compressed row

representation, and are themselves stored compressed, reducing load time significantly.

And at query time, they can be rapidly intersected to identify the exact portion of the

table which contributes to query results.

Although LucidDB is primarily intended as a read-only data warehouse, write operations

are required for loading data into the warehouse. To allow reads to continue during data

loads and updates, LucidDB uses page versioning. Data pages are read based on a

snapshot of the data at the start of the initiating transaction. When a page needs to be

updated, a new version of the page is created and chained from the original page. Each

subsequent write transaction will create a new version of the page and add it to the

existing page chain. Therefore, long-running, read-only transactions can continue to read

older snapshots while newer transactions will read more up-to-date snapshots. Pages that

are no longer in use can be reclaimed so the page chains don't grow forever.

Query Optimization and Execution

LucidDB's optimizer is designed with the assumptions of a data warehousing

environment in mind, so no hints are needed to get it to choose the best plan for typical

P a g e | 29

Column Oriented Databases Vs Row Oriented Databases

analytical query patterns. In particular, cost-based analysis is used to determine the order

in which joins are executed, as well as which bitmap indexes to use when applying tablelevel filters and star join optimizations. The analysis uses data statistics gathered and

stored as metadata in the system catalogs, allowing the optimizer to realistically compare

one option versus another even when many joins are involved. By using cost-based

analysis in a targeted fashion for these complex areas, LucidDB is able to consider a large

space of viable candidates for join and indexing combinations. By using heuristics in

other areas, LucidDB keeps optimization time to a minimum by avoiding an explosion in

the search space.

LucidDB is capable of executing extract/transform/load processes directly as pipelined

SQL statements, without any external ETL engine required.

Installation

It can be installed from the following website. We installed the windows version it is still

beta version. Lucid 0.7.2 is the latest http://www.luciddb.org/

First you need to set the environment variable for JAVA_HOME.

After that from the command prompt we installed the install.sh file from install folder.

Then we opened the luciddb server.bat file . Once the server is listening we starting

running the queries from sqlclient.

Jdbc url : jdbc:luciddb:rmi://localhost

Website – http://www.luciddb.org

Classname – com.lucidera.jdbc.luciddbrmidriver

lucidDbClient jar files are located at plugin folder.

Set Query Benchmark Results

This page provides the LucidDB-specific setup needed to run the classic Set Query

Benchmark.

Create Schema

First, create the schema and table which will hold the loaded data:

CREATE SCHEMA SQBM;

SET SCHEMA 'SQBM';

CREATE TABLE BENCH1M (

KSEQ INTEGER PRIMARY KEY,

K2 INTEGER,

K4 INTEGER,

K5 INTEGER,

K10 INTEGER,

K25 INTEGER,

K100 INTEGER,

K1K INTEGER,

K10K INTEGER,

P a g e | 30

Column Oriented Databases Vs Row Oriented Databases

K40K INTEGER,

K100K INTEGER,

K250K INTEGER,

K500K INTEGER,

S1 VARCHAR(8),

S2 VARCHAR(20),

S3 VARCHAR(20),

S4 VARCHAR(20),

S5 VARCHAR(20),

S6 VARCHAR(20),

S7 VARCHAR(20),

S8 VARCHAR(20)

);

Data Source

For the data source we downloaded the luciddb-sqbm-testdata from the website which

has the file name bench1m.csv. The following sql inserts the data into the table. The flat

file is created as a foreign server.

LucidDb as the plugin SQL/MED for accessing flat files. The data file may be associated

with a control file that colntains column descriptions.

The flat file wrapper is accessed by SQL/MED interface.

The data wrapper is defined as sys_file_wrapper. A foreign server is defined which

describes the directory of files corresponding to single schema. data files can be accessed

by creating a foreign table . The plugin is included as part of the LucidDB distribution; a

corresponding foreign data wrapper instance named SYS_FILE_WRAPPER is

predefined by LucidDB initialization scripts. A File foreign data wrapper provides the

capability to mediate access to file foreign servers. You can define a flat file foreign

server to access files in a particular directory.

CREATE SERVER FF_SERVER

FOREIGN DATA WRAPPER SYS_FILE_WRAPPER

OPTIONS(

DIRECTORY '../luciddb-sqbm-testdata/',

FILE_EXTENSION '.csv',

CTRL_FILE_EXTENSION '.bcp',

FIELD_DELIMITER ',',

LINE_DELIMITER '\n',

QUOTE_CHAR '"',

ESCAPE_CHAR '',

WITH_HEADER 'yes',

NUM_ROWS_SCAN '3'

);

CREATE FOREIGN TABLE BENCH_SOURCE (

C1 INTEGER,

C2 INTEGER,

C4 INTEGER,

C5 INTEGER,

C10 INTEGER,

C25 INTEGER,

C100 INTEGER,

P a g e | 31

Column Oriented Databases Vs Row Oriented Databases

C1K INTEGER,

C10K INTEGER,

C40K INTEGER,

C100K INTEGER,

C250K INTEGER,

C500K INTEGER

)

SERVER FF_SERVER

OPTIONS(

SCHEMA_NAME 'BCP',

FILENAME 'bench1M'

);

Data insertion

We executed the following SQL to load the data from the flat file into the LucidDB table.

This will load actual data for the columns whose names start with K, whereas per the

benchmark specs, it will synthesize constant data for the columns whose names starts

with S.

INSERT INTO BENCH1M (

KSEQ,K2,K4,K5,K10,K25,K100,K1K,K10K,K40K,K100K,K250K,K500K, S1, S2, S3, S4,

S5, S6, S7, S8)

SELECT C1,C2,C4,C5,C10,C25,C100,C1K,C10K,C40K,C100K,C250K,C500K,

'12345678', '12345678900987654321', '12345678900987654321',

'12345678900987654321', '12345678900987654321',

'12345678900987654321',

'12345678900987654321', '12345678900987654321'

FROM BENCH_SOURCE;

Creating indexes in LucidDB

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K2_IDX ON BENCH1M(K2);

No rows affected (2.453 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K4_IDX ON BENCH1M(K4);

No rows affected (1.75 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K5_IDX ON BENCH1M(K5);

No rows affected (1.109 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K10_IDX ON BENCH1M(K10);

No rows affected (1.125 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K25_IDX ON BENCH1M(K25);

No rows affected (6.719 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K1K_IDX ON BENCH1M(K1K);

No rows affected (9.422 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K10K_IDX ON BENCH1M(K10K) ;

No rows affected (20.625 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K40K_IDX ON BENCH1M(K40K);

;

No rows affected (19.625 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K100K_IDX ON BENCH1M(K100

K);

No rows affected (13.203 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K250K_IDX ON BENCH1M(K250

K);

No rows affected (15.75 seconds)

P a g e | 32

Column Oriented Databases Vs Row Oriented Databases

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K500K_IDX ON BENCH1M(K500

K);

No rows affected (14.875 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K2_K100_IDX ON BENCH1M(K2

,K100);

No rows affected (17.25 seconds)

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K4_K25_IDX ON BENCH1M(K4,

K25);

No rows affected

0: jdbc:luciddb:rmi://localhost:5434> CREATE INDEX B1M_K10_K25_IDX ON BENCH1M(K1

0,K25);

No rows affected (15.297 seconds)

Queries:

select * from bench1m;

lucidDB

339.563 sec - 6 mins

Oracle - 15 mins

SELECT COUNT(*) FROM BENCH1M

WHERE

(KSEQ BETWEEN 40000 AND 41000

OR KSEQ BETWEEN 42000 AND 43000

OR KSEQ BETWEEN 44000 AND 45000

OR KSEQ BETWEEN 46000 AND 47000

OR KSEQ BETWEEN 48000 AND 50000)

AND K10 = 3;

luciddb - 1.25 sec

Oracle -34.44 sec

SELECT KSEQ, K500K FROM BENCH1M

WHERE

K2 = 1

AND K100 > 80

AND K10K BETWEEN 2000 AND 3000;

lucidDB - 6.25 sec

Oracle - 7.289 sec

SELECT K2, K100, COUNT(*) FROM BENCH1M

GROUP BY K2, K100;

luciddb - 1.156

Oracle - 2.03 sec

P a g e | 33

Column Oriented Databases Vs Row Oriented Databases

Advantages and Disadvantages:

Pros:

1. Data compression: The repeating column values are represented by the single

column value and also different projections can be used for storing the column in

a format that is used mostly.

2. Improved Bandwidth Utilization: Only the required data is read from the disk, it

does not read any extra data or columns as in the case of the row oriented

database.

3. Improved Code Pipelining: CPU cycle performance is saved with the column

oriented as we use the performance only for the required attributes.

4. Improved cache locality: the cache in the column oriented contains only the

required data instead of the unnecessary data which is the case for the row

oriented database.

Cons:

1. Increased Disk Seek Time: As multiple columns are read in parallel increases the

time disk seek time.

2. Increased cost of Inserts: Small inserts will need more time because as column

oriented data is stored in columns, multiple places needs to be updated.

3. Increases tuple reconstruction costs: while interfacing with the Drivers and JDBC

the reconstruction of the row from these columns takes more time and offsets the

advantages of the column oriented database.

Summary:

Column architecture doesn’t read unnecessary columns

Avoids decompression costs and perform operations faster.

Use compression schemes allow us to lower our disk space requirements.

After the completion we felt that determining of the performance of a database

system we need to consider different factors which we have left out such as the

CPU cost, Plan etc.

5. From the above advantages and disadvantages we can say that column oriented

databases can be used only in the places where the requirements satisfies the

advantages of column oriented databases as in OLAP, Data mining etc..,.

1.

2.

3.

4.

P a g e | 34

Column Oriented Databases Vs Row Oriented Databases

References:

1. Wikipedia, http://en.wikipedia.org/wiki/Column-oriented_DBMS

Accessed – 14-sep-2007

2. http://db.lcs.mit.edu/projects/cstore/abadisigmod06.pdf

Accessed – 14-sep-2007

3. http://marklogic.blogspot.com/2007/03/whats-column-oriented-dbms.html

Accessed – 14-sep-2007

4. http://en.wikipedia.org/wiki/MonetDB

Accessed – 14-sep-2007

5. http://monetdb.cwi.nl/projects/monetdb/SQL/QuickTour/index.html

Accessed – 14-sep-2007

6. Compression and Query Execution within Column Oriented Databases by

Miguel C. Ferreira , MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2005

7. http://www.luciddb.org/

Accessed by 30-nov-2007.

P a g e | 35