NSF Proposal - Seidenberg School of Computer Science and

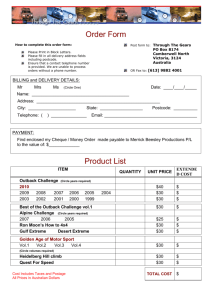

Narrative 8a 2/13/16 5:12 AM

Combining human and machine capabilities for improved accuracy and speed in critical visual recognition tasks

Proposal to
National Science Foundation
Solicitation for Computer Vision NSF 03-602
4201 Wilson Blvd.
Arlington, VA 22230

Submitted by
The School of Computer Science and Information Systems
Pace University, Pleasantville, NY 10570-9913
and
Department of Electrical, Computer, and Systems Engineering
Rensselaer Polytechnic Institute, Troy, NY 12180-3590

1 Technical Proposal

1.1 Introduction

We are interested in enhancing human-computer interaction in applications of pattern recognition where higher accuracy is required than is currently achievable by automated systems, but where there is enough time for a limited amount of human interaction. This topic has so far received only limited attention from the research community. Our current, model-based approach to interactive recognition originated at Rensselaer Polytechnic Institute (RPI) [1], and was then investigated jointly at RPI and Pace University [2]. Our success in recognizing flowers establishes a methodology for continued work in this area. We propose herein to extend our approach to interactive recognition of foreign signs, faces, and skin diseases. Our objective is to develop guidelines for the design of mobile interactive object classification systems; to explore where interaction is, and where it is not, appropriate; and to demonstrate working recognition systems in three very different domains.

Human participation in operational pattern recognition systems is not a new idea. All classification algorithms admit some means of decreasing the error rate by avoiding classifying ambiguous samples. The samples that are not classified are called "rejects," and must, in actual applications, be classified by humans. Reject criteria are difficult to formulate accurately because they deal with the tails of the statistical feature distributions.
Furthermore, most classifiers generate only confidence, distance, or similarity measures rather than reliable posterior class probabilities. Several samples (always more than two) must be rejected to avoid a single error [3]. The efficiency of handling rejects is important in operational systems, but does not receive much attention in the research literature. In other systems, the interaction takes place at the beginning: the patterns are located or isolated manually [4].

Our motivation for interactive pattern recognition is simply that it may be more effective to make parsimonious use of human visual talent throughout the process rather than only at the beginning or end. We are particularly interested in human-computer symbiosis through direct, model-based interaction with the image to be classified, where human and computer steps alternate, and in visual reference to already labeled images. We believe that our approach is quite general, and propose to demonstrate this by tackling three entirely different applications with the same method.

Automated systems still have difficulty in understanding foreign language signs and in recognizing human faces. As an application of sign recognition, imagine a tourist or a soldier who fails to recognize signs, possibly including military warnings or hazards. We therefore propose a handheld device with an attached camera and associated interactive visual sign recognition program (Fig. 1). While a computer is good at recognizing strings of foreign characters or symbols and at translating them into English, it may fail to segment the regions of interest and to correct perspective distortion. Humans, in contrast, are good at locating words and characters and at rectifying images even though they may have inadequate knowledge of foreign languages.

Face recognition has obvious scientific, commercial, and national security applications (Fig. 2).
The highest recognition rates are achieved by systems where face location, isolation, and registration depend on human help, and where humans resolve any remaining ambiguities (which are often attributed to inadequate reference photos). However, in contrast to our approach, very few of the previous systems attempt to systematically integrate human interaction throughout the entire process.

Fig. 1: Interactive visual system for sign recognition. (Panels: interactively crop a ROI; interactive slant/size/rotation/affine transform; interactive segmentation; interactive recognition of “미술 과 놀이”; language translation to “Art and playing”.)

Fig. 2: Interactive visual system for face recognition. (Panels: crop a face; interactive feature extraction; interactive recognition; top 3 results: Sung-Hyuk Cha 72%, Charles C. Tappert 11%, George Nagy 6%.)

Many conditions that pose a threat to human life and well-being cause visible changes of the outer surface of the skin. Such conditions can potentially be diagnosed by visual recognition. The integrated computer-camera system could be operated by the patient, or by personnel with very limited training. Aside from the intrinsic suitability of this domain for research on interactive recognition, even imperfect recognition of skin diseases would have major societal impact. The availability of a low-cost, portable screening or diagnostic tool would be of significant benefit to the following constituencies:

1. Military personnel deployed in situations conducive to skin lesions (extreme weather conditions, inadequate hygiene, disease-bearing rodents or insects), or exposed to biological attack.
2. Industrial and agricultural workers who handle noxious chemicals, plants or animals, or very hot or cold materials.
3. Civilian populations exposed to toxins (e.g. cutaneous anthrax, smallpox, plague, and tularemia).
4. Subjects hesitant to consult medical personnel unless absolutely necessary, e.g. youths with acne or sexually transmitted diseases, and indigent old people with eczema. The proposed system could suggest further action to persons afflicted with symptoms that indicate potential danger.
5. Paramedical personnel who require additional training in identifying malignant skin conditions.
6. Segments of the general population in areas with a shortage of dermatologists (rural districts and third-world countries).

Early identification of conditions like melanoma has a huge impact on the chance of successful treatment. The time is right for launching an intensive study of computer-aided methods for identification, screening, triage, and improved data collection of skin conditions that affect large segments of the population even under normal conditions.

During the last few years, large, well-organized digital atlases of skin conditions have become widely available. Unlike flower databases, these atlases typically contain many pictures of the same condition, enabling statistically valid studies of the diagnostic accuracy of proposed systems. The Johns Hopkins Dermatology Image Atlas, a cooperative undertaking of several hundred specialists, contains 10,000 images that represent nearly 1000 different diagnoses. Over 200 of the diagnoses have more than 10 pictures each. The dermatology image atlas of the University of Erlangen is of similar size. We have also located several smaller and more specialized collections. Many of the images are publicly accessible on the web, while others can be obtained on compact disk, for research purposes, from the sponsoring organizations.

Skin diseases are typically categorized into about 100 types by body site (scalp, face, anogenital), organization (annular, reticulated, clustered linear), pattern (asymmetric central, intertriginous), morphology (depressed, plaqulous, ulcerated, blister, pustule), and coloration.
From the perspective of image processing, many of these indicators can be defined in terms of spatial and spectral distributions (e.g., color texture). Additional diagnostic information, such as pain, fever, itching, bleeding, chronology (onset, diurnal variation) and phenomenology (insect bite, change of diet, handling chemicals) would have to be entered by the patient or operator. Therefore, wholly automated operation is completely excluded, and consequently the subject has so far largely eluded the attention of the image processing and machine learning communities.

Photographing appropriate segments of the skin is clearly the most sensitive part of the necessary information acquisition. More than one image may be necessary, from different angles and distances, and perhaps with different illuminations (including IR). Conducting the examination in privacy, at a time and place of the patient's choosing, may increase participation.

The system will include dynamically updated information about the probability of occurrence and the risk (expected cost) of errors of omission and commission for the various disease conditions in different regions and seasons. For example, under normal conditions, it would take extremely strong evidence to suggest smallpox.

The above three applications differ significantly from flower recognition and from each other. OCR and machine translation are well established, and face recognition shows striking improvement at every competition, while only a few dozen papers have been published on image processing for skin disease or flowers. Sign recognition requires obtaining a bilevel foreground-background image; face recognition makes use of both geometry and intensity; for flowers, color and gross shape have proved most important; and skin disease categorization depends on color texture.
Contextual information for some signs and flowers may be based on location, but little has been proposed so far for face identification. Unlike signs and faces, the recognition of skin lesions requires not only interaction with the images, but also solicitation of relevant facts known only to the patient. Clients are mainly amateur botanists for flowers, travelers for signs, law enforcement officers for faces, and medical personnel and "patients" for skin disease. All three applications, however, require mobile sensors and only low-throughput processing, and can benefit from intervention by minimally trained personnel. All of them will also allow us to explore the advantages of taking additional pictures in the course of the recognition process.

1.2 Related Prior Studies

Research on interactive mobile object recognition systems is rare: although interactive systems are often built for commercial applications, they are not investigated scientifically. We have found no work at all on direct, iterative interaction with images for object recognition, which is the major focus of this proposal. In the handwriting analysis area, Cha and Srihari have measured features interactively with dedicated graphical user-interface tools [4], and Nagy et al. have explored interactive model adjustment for feature extraction and object recognition [1,5,6,7].

1.2.1 Interactive recognition of flowers

Computer vision systems still have difficulty in differentiating flowers from rabbits [8]. Flower guides are cumbersome, and they cannot guarantee that a layperson unfamiliar with flowers will achieve accurate classification. We observed that it takes two minutes and fifteen seconds on average to identify a flower using a field guide or key. We therefore developed a PC-based system called CAVIAR (Computer Assisted Visual InterActive Recognition), and a mobile, hand-held prototype called IVS (Interactive Visual System) for identifying flowers [2].
The mobile setting presents the additional challenge of an unconstrained and unpredictable recognition environment.

In IVS and CAVIAR, the user may interact with the image any time that he or she considers the computer's response unsatisfactory. Some features are extracted directly, while the accuracy of others is improved by adjusting the fit of the computer-proposed model. Fitting the model requires only gestalt perception, rather than familiarity with the distinguishing features of the classes (Fig. 3). The computer makes subsequent use of the parameters of the improved model to refine not only its own statistical model-fitting process, but also its internal classifier. Classifier adaptation is based on decision-directed approximation [9,10,11], and therefore benefits from human confirmation of the final decision. The automated parts of the system gradually improve with use, thereby decreasing the need for human interaction. As an important byproduct, the human's speed of interaction and judgment of when interaction is beneficial also improve. Our experiments demonstrated both phenomena.

Fig. 3 (Color): The rose curve (blue), superimposed on the unknown picture, plays a central role in the communication (interaction) between human and computer. (a) The initial automatic rose curve is poor, and so is the recognition. (b) After the user adjusts some rose curve parameters, the computer displays the correct candidate.

Our evaluation of CAVIAR on 30 naïve subjects and 612 wildflowers of 102 classes (species) showed that (1) the accuracy of the CAVIAR system was 90% compared to 40% for the machine alone; (2) when initialized on a single training sample per class, recognition time was 16.4 seconds per flower compared to 26.4 seconds for the human alone; (3) decision-directed adaptation, with only four samples per class, brought the recognition time down to 10.7 seconds without any additional ground-truthed samples or any compromise in accuracy.
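
The decision-directed adaptation mentioned above can be illustrated with a minimal sketch. It is not the CAVIAR classifier: the nearest-mean rule, the class name NearestMeanClassifier, and the two-dimensional feature vectors are assumptions chosen only to show the idea that each newly classified sample, labeled either by the machine's own top choice or by the human-confirmed decision, is folded back into the class statistics.

```python
import math


class NearestMeanClassifier:
    """Toy nearest-mean classifier with decision-directed updates (illustrative only)."""

    def __init__(self, labeled_samples):
        # labeled_samples: dict mapping class label -> list of feature vectors
        self.sums = {}
        self.counts = {}
        for label, vectors in labeled_samples.items():
            for vector in vectors:
                self._accumulate(label, vector)

    def _accumulate(self, label, vector):
        # Keep running sums so that class means can be updated incrementally.
        if label not in self.sums:
            self.sums[label] = [0.0] * len(vector)
            self.counts[label] = 0
        self.sums[label] = [s + x for s, x in zip(self.sums[label], vector)]
        self.counts[label] += 1

    def mean(self, label):
        return [s / self.counts[label] for s in self.sums[label]]

    def rank(self, vector):
        """Return the class labels ordered from nearest to farthest class mean."""
        return sorted(self.sums, key=lambda c: math.dist(vector, self.mean(c)))

    def adapt(self, vector, confirmed_label=None):
        """Add the sample to the reference set.

        If the human confirmed a label, use it; otherwise fall back on the
        classifier's own top-ranked decision (pure decision-directed mode).
        """
        label = confirmed_label if confirmed_label is not None else self.rank(vector)[0]
        self._accumulate(label, vector)
        return label


# Hypothetical usage: one training sample per class, then one unlabeled sample.
clf = NearestMeanClassifier({"daisy": [[1.0, 0.0]], "iris": [[0.0, 1.0]]})
assigned = clf.adapt([0.8, 0.2])
```

Passing confirmed_label corresponds to the human confirming the final decision; omitting it corresponds to unsupervised adaptation, where occasional mislabeled samples are tolerated.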

We successfully reengineered our PC-based system for a handheld computer with camera attachment (IVS). This camera-handheld combination provides the user with means to interact directly and immediately with the image, thereby taking advantage of human cognitive abilities. Of course, it also provides the option of taking additional images, perhaps from an angle that provides better contrast. It is much faster than unassisted humans, and far more accurate than a computer alone [2].

IVS draws on innate human perceptual ability to group "similar" regions, perceive approximate symmetries, outline objects, and recognize "significant" differences. It exploits computer capability of storing image-label pairs, quantifying features, and computing distances in an abstract feature space of shapes and colors. The IVS architecture was developed specifically for isolated object recognition in the field, where the time available for classifying each image is comparable to that of image acquisition. Whereas many of the more interesting mobile applications are communications based, IVS was autonomous. The proposed extensions will explore the pros and cons of networked operations.

1.2.2 Prior work on sign, face, and skin disease recognition

Signs are a common medium for short, one-way communication. We will focus on street signs, store signs, parking directions, electronic railway and airplane cabin signs, and especially on foreign signs. Automatic sign recognition and translation systems have been studied [12,13,14] and a tourist assistant system has been developed [15]. The system we propose differs from IBM's heavily publicized infoScope handheld translator system [16,17,18] primarily in terms of its interactivity. Machine translation has been improving steadily during the last four decades, and example-based machine translation has been widely deployed [19].
However, most fully automated systems depend on perfect input, which is not likely to be the case with hand-held camera-based OCR.

Attempts to extract features from face images were initiated at Bell Labs and SRI in the 1970s [20,21,22]. Bledsoe advocated man-machine interaction as early as 1966 [23]. Since then, many face recognition algorithms have been proposed, including template matching, neural networks [24,25,26], and eigenfaces [27,28,29]. Yet the face recognition problem is still considered unsolved. In eigenface recognition, principal components are extracted for each face after computing the mean face from all the face images in the database, and then the nearest-neighbor algorithm is often used to classify the unknown faces. The proposed interactive face recognition system, however, will use a geometrical feature representation, like the Elastic Bunch Graph Model [30] or Line Edge Map [31], because the eigenface model is incomprehensible to humans and therefore cannot serve as the basis for productive interaction. With such a geometric model, we expect to obtain Top-k accuracy as high as with eigenfaces, especially after iterative adjustment of the features.

Day and Barbour have reviewed automated melanoma diagnosis [32], and Stoecker and Moss have recently updated their more general review of digital imaging in dermatology [33]. Considerable research has been conducted on boundary tracing of tumors and skin lesions [34,35] (Fig. 4), and on color-based segmentation [36,37,38]. Automated classification of both broad [39] and narrow skin disease categories has also been investigated, with reports of up to 80% correct classification of malignant and benign tumors [40]. Under no circumstances could a physician be expected to accept a diagnosis solely on the basis of automated image processing, but the nature of additional considerations is seldom discussed in reports whose major focus is novel neural network architectures.
The need for human intervention to guide segmentation seems to us evident both from the above articles and from our inspection of several hundred skin-lesion images in dermatology atlases [41,42].

Fig. 4 (Color): Automated skin lesion detection in digital clinical images using geodesic active contours [35]. The segmented boundary can be made to fit a parametric model, with which the user can interact to guide classification.

1.3 Proposed interactive recognition systems

Typical pattern classification problems involve capturing scene images, segmentation, feature extraction, classification, and post-processing. While each of these steps introduces errors in a fully automated vision system, many of these errors can be easily corrected in an interactive system. For effective interaction, we will make use of established research on human information processing [43,44,45], and of guidelines developed for human-computer interaction in other contexts [46,47,48]. The next three sections describe how interaction benefits segmentation, feature extraction, and classification.

1.3.1 Segmentation

The success encountered 30-40 years ago in classifying images of simple objects gave impetus to the continuing development of statistical pattern classification techniques. In actual applications, results were often limited by the ability to isolate the desired objects within the larger image. For example, most errors in character recognition were traced to touching or broken glyphs [49]. Therefore, experiments designed to demonstrate classification algorithms often made use of hand-segmented samples [4]. Pattern classification techniques attain a certain degree of generality by being formulated as operations on some arbitrary vector feature space, but no equivalent general formalization has been developed for segmentation. Progress in segmentation, that is, in the demarcation of objects from each other or from an arbitrary background, stalled early.
Segmentation still remains a bottleneck in scene analysis.

Humans easily perceive object outlines, but this information is difficult to communicate to a computer. For this reason, we propose model-based human-computer interaction with the images. For flowers, our model was a six-parameter rose curve. An example of a simple model for signs is an affine transformable (and therefore also scalable) rectangular template. For foreign sign recognition, the computer will first attempt to locate the sign in the image and transform it to a rectangle. If this is unsatisfactory, the user will first crop a region of interest and then rotate the template in 3D. From the affine transformation (which for small angles is a good approximation to a projective transformation), the computer receives rotation and angle corrections as inputs to the recognizer. For face recognition, fixing the pupil-to-pupil line is usually sufficient for automated extraction of geometric features. For both sign and face recognition, we will also provide means to interactively enhance insufficient contrast due to illumination, which is a major hindrance to segmentation at all levels. Human intervention will be applied only when machine segmentation fails.

The exceptional human ability to perceive entire objects ("gestalt" perception) has been studied for centuries [44,50]. The human visual system is able to isolate occluding objects from one another and from complex backgrounds, under widely varying conditions of scale, illumination and perspective. Although some figure-background illusions may invert foreground and background, this does not hamper accurate location of visual borders. It is clear that human ability should be focused on tasks that humans can perform quickly and accurately, and where computer algorithms are error prone. For many pattern recognition applications, segmentation is such a task.
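
To make the affine rectangular template for signs concrete, the following sketch maps image points into the coordinates of an upright rectangle once three corners of the template have been placed on the sign. The function name make_rectifier, the choice of three corners (top-left, top-right, bottom-left) as the interaction handles, and the sample coordinates are assumptions for illustration; the proposal does not specify the interface at this level of detail.

```python
def make_rectifier(top_left, top_right, bottom_left, width, height):
    """Return a function mapping image points to upright template coordinates.

    The three corners define a parallelogram in the image. The affine map
    sends top_left -> (0, 0), top_right -> (width, 0), bottom_left -> (0, height).
    """
    ax, ay = top_right[0] - top_left[0], top_right[1] - top_left[1]
    bx, by = bottom_left[0] - top_left[0], bottom_left[1] - top_left[1]
    det = ax * by - ay * bx
    if abs(det) < 1e-12:
        raise ValueError("template corners are collinear; no affine map exists")

    def rectify(point):
        # Express the point in the basis spanned by the two template edges
        # (Cramer's rule on a 2x2 system), then scale to the rectangle size.
        dx, dy = point[0] - top_left[0], point[1] - top_left[1]
        s = (dx * by - dy * bx) / det
        t = (ax * dy - ay * dx) / det
        return (s * width, t * height)

    return rectify


# Hypothetical corner positions, as if dragged by the user onto a slanted sign.
rectify = make_rectifier((10, 20), (110, 40), (0, 70), width=200, height=100)
fourth_corner = rectify((100, 90))  # the corner opposite top_left
```

Because the map is affine, it corrects rotation, scale, and shear but not true perspective foreshortening, which matches the small-angle approximation stated above.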
Automated segmentation algorithms often home in correctly on part of the boundary, but make egregious excursions elsewhere. The segmentation need be accurate enough only for automated feature extraction and classification. It must therefore be evaluated from the perspective of the classifier, rather than using some arbitrary distance from the true boundary. In our flower experiments, the correlation between conventional measures of segmentation and classification accuracy was quite low.

1.3.2 Feature Extraction

Most fully automated visual pattern recognizers require extraction of a feature vector from the scene. Furthermore, automatically extracted features often differ from those used by human experts, e.g., in fingerprint and document examination. Feature extraction is difficult for fully automated vision systems because of inadequate segmentation. For example, under extreme conditions of illumination or camera angle, face recognition systems cannot always accurately locate features such as the eyes or ears. Hence, we propose investigating interactive model adjustment to improve feature extraction. Given a good model of an object (a flower or a face), our experiments show that automated methods can be reliable for accurate feature extraction.

1.3.3 Classification

Humans are able to compare a pair of complex objects and assess their degree of similarity. The metrics on which we base our judgment of similarity are complex and have been thoroughly investigated in psychophysics [50,51,52,53]. What is important here is only that the goal of most pattern recognition systems is to classify objects like humans. In most domains, automated systems lag far behind humans in discriminating objects from similar classes. While some of the difference may be due to elaborate human exploitation of contextual clues, humans are unquestionably better than current programs at detecting significant visual differences at the expense of incidental ones.
Our judgment of similarity can be exercised effortlessly and flawlessly on a pair of images, but it takes much longer to compare a query image to several dozen classes of reference images. On the other hand, pattern recognition algorithms can often achieve very low error rates in "Top-k" classification tasks. For example, on hand-written city names in postal address sorting, the correct name is included among the top ten candidates 99% of the time. For many-class problems, this suggests using the automated system to cull out a large fraction of the unlikely candidates, and then letting the human make the final choice among similar classes (possibly after also inspecting reference images not included in the Top-k).

1.4 Proposed Research Issues

To make use of complementary human skill and automated methods in difficult segmentation and discrimination tasks, it is necessary to establish seamless communication between the two. The key to efficient interaction is the display of the automatically fitted model that allows the human to retain the initiative throughout the classification process. We believe that such interaction must be based on a visible model, because (1) high-dimensional feature space is incomprehensible to the human, and (2) the human is not necessarily familiar with the properties of the various classes, and cannot judge the adequacy of the current decision boundaries. This precludes efficient interaction with the feature-based classifier itself. Furthermore, human judgment of the adequacy of the machine-proposed prototypes, compared visually to the unknown object, is far superior to any classifier-generated confidence measure. In contrast to classification, deciding whether two pictures are likely to represent the same class does not require familiarity with all the classes.

The protocol we propose, after lengthy experimentation with alternative scenarios in our flower identification studies, is the following:

1. Rough machine segmentation of the unknown object, based on some visual model of the family of objects of interest, and on parameters extracted from "ground truth" segmentation of a set of training samples. The results of the segmentation are presented graphically by fitting the model to the object.
2. Human refinement of the object boundary (as many times as necessary) by means of an appropriate graphical interface. Rough segmentation suffices in many cases. More precise segmentation is necessary for machine classification only for more easily confused objects.
3. Automated ranking of the classes in order of the most likely class assignment of the previously segmented unknown object. The classifier accepts automatically extracted feature vectors that may represent shape, color, or texture and, for skin disease, "invisible" symptoms reported by the patient.
4. Display of labeled exemplars of the top-ranked classes for human inspection. Provisions are also made for inspection of additional reference samples from any class.
5. Final classification by selection of the exemplar most similar, according to human judgment, to the unknown object.

We will also explore the possibility of extending this protocol to include interactive feature extraction.

1.4.1 Problems to be solved

We plan to conduct research aimed at solving the problems implicit in the above protocol for object recognition. Item 1 requires the formulation of a parametric model for each family of interest. It also requires statistical estimation of the model parameters from a set of already segmented samples.

For Item 2, we must develop an efficient method of interactive refinement of an imperfect object boundary, based on our success with rapid segmentation of flowers in complex backgrounds. After each corrective action, it is desirable to have the machine attempt to improve the segmentation by taking advantage of an improved start state, as do CAVIAR and IVS.
The method should also be applicable to efficient segmentation of the training samples for Item 1.

Item 3 needs a set of feature extraction algorithms, and a statistical classifier appropriate for the number of features and the number of training samples. If the classes are not equally probable, their prior probabilities should be determined. Since both feature extraction and statistical classification have received much attention in the context of pattern recognition tasks of practical interest, we will use only off-the-shelf algorithms. (The first of our "state-of-the-art" surveys of classification algorithms, including neural networks, appeared in 1968.) All statistical classification algorithms are capable of ranking the classes according to posterior probability.

The display of Item 4 depends, however, on the complexity of the object and the size of the available canvas. In mobile systems with diminutive displays, provisions must be made for scrolling or paging the candidate images and the individual reference samples of each class. This item requires only familiarity with GUI design principles and tools.

Because an exemplar of the correct class is brought into view sooner or later either by the automated classifier or by the operator scrolling through the display, Item 5 requires only a mouse or stylus click on an image of the chosen class.

Although the five steps are presented above in linear order, the operator may at any time return to refining the current segmentation instead of searching for additional exemplars in the ranked candidate list. Of course, in a well-designed system the correct class is usually near the head of the list. We have formalized the sequence of transitions of visual recognition states as a Finite State Machine.

The combination of human and machine classification tends to yield a very low error rate. It is therefore advantageous to add each classified sample into the current reference set.
In our experience, additional samples improve the estimates of segmentation and classification parameters even if a few of these samples are mislabeled in consequence of errors in classification.

So far we have discussed only stand-alone mobile systems. Stand-alone systems can learn only from their master, and remain ignorant of global changes affecting the classification strategy (like a change in the relative probabilities of the classes). Therefore an urgent topic of research for us is that of collaborative interactive pattern recognition modeled on David Stork's Open Mind initiative [54,55]. We envisage that all users of individual recognition systems in a specific domain will contribute images, adjusted models, and classifier-assigned models to an ensemble database accessible to each system. This would effectively multiply the number of patterns available for either supervised or semi-supervised estimation of both model and classifier parameters. A further, but evanescent, advantage of performing calculations on a host is the reduced computational dependence on the handheld. Assigning data acquisition and interaction to the hand-held, and database and computational functions to the host, is an example of the type of symbiosis advocated in [56].

1.5 Evaluation

Interactive pattern recognition systems can be evaluated in terms of the time expended by the human operator, and the final error rate. More detailed criteria are appropriate for studying the effect of various options, like the interface, on learning by both the machine and the subject. For such studies, an automated logging system capable of capturing all user actions is indispensable. The log generated from experimental sessions can be transferred to software appropriate for statistical evaluation and report generation. We propose to adapt the log-based CAVIAR evaluation paradigm to all the applications, as described below.
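
As a rough illustration of how a session log might be reduced to the two headline measures named above (operator time and final error rate), consider the sketch below. The record fields, the LogRecord and summarize names, and the sample values are hypothetical; they do not describe the actual CAVIAR log format.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class LogRecord:
    """One classified sample from an experimental session (hypothetical fields)."""
    subject: str
    true_label: str
    chosen_label: str
    seconds: float      # time from image display to final selection
    adjustments: int    # number of model adjustments made by the operator


def summarize(records):
    """Compute error rate, mean time, and mean interaction count for a log."""
    if not records:
        raise ValueError("empty log")
    errors = sum(r.true_label != r.chosen_label for r in records)
    return {
        "samples": len(records),
        "error_rate": errors / len(records),
        "mean_seconds": mean(r.seconds for r in records),
        "mean_adjustments": mean(r.adjustments for r in records),
    }


# Made-up records, for illustration only.
log = [
    LogRecord("s01", "daisy", "daisy", 10.0, 0),
    LogRecord("s01", "iris", "iris", 20.0, 2),
    LogRecord("s02", "aster", "daisy", 30.0, 1),
]
summary = summarize(log)
```

Grouping the same records by subject or by session order would support the more detailed learning studies mentioned above.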

1.5.1 Sign recognition

Of the three application areas proposed for our interactive recognition studies, sign recognition is probably the problem closest to being solved, particularly in light of IBM's infoScope system. It is also the problem that we anticipate will have the simplest model (affine transformable rectangular template) and the simplest interface (for human correction of the rectangle corner locations). This application, therefore, will be the first to be ported to a mobile platform (anticipated within the first year) and experimentally studied with the interactive protocol.

1.5.2 Face identification

From our previous face recognition work using the eigenface technique, we have a small database of face images from 60-80 students, containing 10 images for each individual: 9 face-direction poses (looking up left, up center, up right, mid left, mid center, mid right, low left, low center, and low right) and one expression pose (smiling or making a funny face). In those experiments we tested the classifier's ability to generalize from images of one or several poses to other poses.

The two main groups of face recognition algorithms are feature-based and image-based algorithms. Because the image-based eigenface model is extremely sensitive to small variations and is incomprehensible to humans, for this study we plan to use a feature-based geometric model that is not only more conventional but also allows for the interactive adjustment of facial features that our interactive studies require. Furthermore, the geometric models collapse most of the face image variance due to position, size, expression, and pose, by extracting concise face descriptions in the form of image graphs (Fig. 5). For comparative purposes we plan to obtain results on available databases previously studied, such as the NIST FERET database [57] and the Bochum database [58]. We will include evaluation of recognition accuracy across pose.
However, our primary evaluations will be comparisons of the speed and accuracy of the interactive system relative to those of the human alone and the machine alone.

Figure 5. 3D face recognition template for orientation/size correction and feature extraction.

1.5.3 Cutaneous recognition

During the first year, we will develop models of specific lesions and appropriate color and texture features for 100 different skin diseases, and test the modified CAVIAR system on 30 naïve subjects, using photographs from the available databases. The size and scope of these experiments are deliberately chosen to be similar to those of the flower experiments that we have already conducted. During the second year, we will port the system to the mobile platform, and test the interaction on the database images. We will first develop a stand-alone handheld system, but keep in mind the advantages of linking the system to the Internet for access to changing conditions. Network access will also allow transmittal of the pictures and ancillary information to expert medical personnel, and perhaps also to public health record-keeping organizations, but this aspect is beyond the scope of the proposed research.

Experiments on or with live subjects suffering from abnormal skin conditions are not envisioned in the current project, because they would require the advice and supervision of expert medical personnel. If our preliminary experiments are successful, we will prepare a proposal to NIH, in collaboration with the Albany Medical Center and possibly Albert Einstein, Mount Sinai, and Cornell University Hospital, for further development and testing.

1.6 Schedule

There are several tasks to be performed, some of which can be performed simultaneously rather than sequentially.
The individual tasks are as follows:

Task 1: Visual Data Collection and Acquisition
Task 2: Interactive Image Preprocessing (segmentation; size and orientation correction)
Task 3: Feature Extraction (both automatic and interactive features)
Task 4: Interactive Classifier Design
Task 5: Testing

Some milestones are listed below, spread over four periods (0-6 months, 7-12 months, 13-18 months, and 19-24 months):

collect or select images of foreign sign samples, faces, and skin lesions
survey and develop programs to extract features
interactive visual image preprocessing
refine segmentation and preprocessing algorithms
design both automatic and interactive feature extractors
design classifiers
perform statistical hypothesis testing
perform large-scale tests
identify areas for improvement
write a technical report

1.7 Intellectual Merit

This study will advance our knowledge and understanding of the appropriate role of human-computer interaction in the recognition of patterns in computer vision, particularly in the segmentation of objects from each other or from an arbitrary background. The study covers a diversity of areas including computer vision, pattern segmentation and recognition, pervasive computing, security, and man-machine interaction. The major proposed contribution is direct, model-based interaction in situ with images of objects to be recognized. Our research team has extensive experience in these areas, and our preliminary creative and original work on human-computer interaction for flower classification has resulted in faster recognition, at an acceptable error rate, than that achievable by either human or machine alone. The proposed system will not only improve with use, but it will also help its operator learn important visual classification skills. We have also established well-defined guidelines for extending these studies to other difficult pattern recognition problems such as the ones proposed here.
While past progress in pattern segmentation has lagged progress in pattern classification, we anticipate that a better understanding of how the segmentation and classification processes interact will lead to advances both in entirely automated segmentation and in interactive segmentation and classification techniques.

1.8 Broader Impacts

In our School of Computer Science and Information Systems at Pace University we currently hold a weekly graduate-level research seminar in which we teach the students how to conduct research and then have each of them perform a small research study. Also, in elective Computer Vision and Pattern Recognition courses as well as in our core two-semester capstone Software Engineering graduate course in Computer Science, student project teams develop real-world computer information systems for actual customers [59], and several of these projects have been related to our proposed interactive recognition study. Last year, as part of our preliminary work in the proposed area, a project team reengineered the PC-based CAVIAR system developed at RPI for a handheld computer with camera attachment. The resulting Interactive Visual System (IVS) exploited the pattern recognition capabilities of humans and the computational power of a computer to identify flowers based on features that are interactively extracted from an image and submitted for comparison to a species database. Other projects investigated face recognition techniques such as eigenface. We plan to promote teaching, training, and learning in both research and project system development by having students in these courses work on research and projects related to the proposed activity.

Pace University has three campuses located in the greater metropolitan area of New York City, and we have an above-average percentage of students from underrepresented (minority) groups in terms of ethnicity, disability, nationality, and gender.
We will make use of this diversity, particularly of multi-lingual students, to provide knowledge of the meaning of various signs that will be the focus of a major component of the proposed activity. While Pace University has been primarily a teaching institution, the proposed study will help to enhance our infrastructure for combining research with education, and the proposed partnership with RPI, a more research-oriented institution, will also help in this regard.

Rensselaer's motto is "Why not change the world?" Our ambition is to provide students with the intellectual capital for this task. Adaptive classification and interactive recognition have been integrated into our courses on Pattern Recognition and on Picture Processing. We have supervised 16 PhD students conducting related research (including two women) and have 6 currently in the pipeline (including one woman). In May 2004 one student will complete his doctoral dissertation on a formal specification of, and extensive experimentation with, CAVIAR. Five undergraduate research assistants (including two women) have also made significant contributions to CAVIAR. Although we are not convinced that archival research journals need to publish every PhD dissertation, we have always encouraged our graduate students to present their work and to become familiar with the work of others at conferences and workshops. We have also been successful in finding summer internships for them at leading industrial research laboratories.

The societal benefits of the proposed activity are substantial. The distribution of wildflowers in a region is recognized as a valuable indicator of environmental stress. The work on sign recognition should enable people to use handheld computers to understand signs in foreign languages as they travel abroad, or as they visit ethnic sections of our larger cities, such as Chinatown in New York City.
Progress on interactive face detection and face recognition should be welcome for homeland security and other identification purposes. As we have indicated, even a tentative diagnosis of skin diseases can greatly benefit both at-risk civilian populations and military personnel. We are already disseminating our preliminary results at conferences and, if this research is funded, we will set up a web site for public access to our flower, sign, face, and skin lesion databases, to our interactive algorithms, and to the results of comparative evaluations.

1.9 Conclusion

Founded on the successful but unconventional joint project between RPI and Pace on an interactive visual system for flowers, we propose to explore three additional application areas, namely foreign sign recognition, face identification, and diagnosis of skin diseases. Our objective is to develop guidelines for the design of mobile interactive object classification systems; to explore where interaction is, and where it is not, appropriate; and to demonstrate working systems that improve with use for all three applications.

1. G. Nagy and J. Zou, “Interactive Visual Pattern Recognition,” Proc. Int. Conf. Pattern Recognition, vol. III, pp. 478-481, 2002.
2. A. Evans, J. Sikorski, P. Thomas, J. Zou, G. Nagy, S.-H. Cha, and C.C. Tappert, “Interactive Visual System,” Pace CSIS Tech. Rep., no. 196, 2003.
3. C.K. Chow, “An Optimum Character Recognition System Using Decision Functions,” IEEE-EC, vol. 6, pp. 247-254, 1957.
4. S. Cha and S.N. Srihari, “Handwritten Document Image Database Construction and Retrieval System,” Proc. SPIE Document Recognition and Retrieval VIII, vol. 4307, pp. 12-21, San Jose, California, 2001.
5. J. Zou and G. Nagy, “Computer Assisted InterActive Recognition: Formal Description and Evaluation,” submitted to Int. Conf. Computer Vision and Pattern Recognition, 2004.
6. J. Zou and G. Nagy, “Evaluation of Model-Based Interactive Flower Recognition,” submitted to Int. Conf. Pattern Recognition, 2004.
7. J. Zou, “A Procedure for Model-Based Interactive Object Segmentation,” submitted to Int. Conf. Pattern Recognition, 2004.
8. A. Hopgood, “Artificial Intelligence: Hype or Reality?” IEEE Computer, vol. 36, no. 5, pp. 24-28, May 2003.
9. G. Nagy and G.L. Shelton, “Self-Corrective Character Recognition System,” IEEE Trans. Information Theory, vol. 12, no. 2, pp. 215-222, 1966.
10. G. Nagy and H.S. Baird, “A Self-Correcting 100-Font Classifier,” Proc. SPIE Conference on Document Recognition, vol. 2181, pp. 106-115, San Jose, CA, February 1994.
11. R.O. Duda, P.E. Hart, and D.G. Stork, Pattern Classification, Wiley, 2000.
12. J. Gao and J. Yang, “An Adaptive Algorithm for Text Detection from Natural Scenes,” Proc. Computer Vision and Pattern Recognition, vol. 2, pp. 84-89, 2001.
13. J. Yang, J. Gao, J. Yang, Y. Zhang, and A. Waibel, “Towards Automatic Sign Translation,” Proc. Int. Conf. Human Language Technology, 2001.
14. I. Haritaoglu, “Scene Text Extraction and Translation for Handheld Devices,” Proc. IEEE Conf. Computer Vision and Pattern Recognition, vol. 2, pp. 408-413, 2001.
15. J. Yang, W. Yang, M. Denecke, and A. Waibel, “Smart Sight: A Tourist Assistant System,” Proc. Third International Symposium on Wearable Computers, pp. 73-78, 1999.
16. http://www.almaden.ibm.com/software/user/infoScope/index.shtml; last accessed on Jan. 5, 2004.
17. http://www.pcmag.com/article2/0,4149,441830,00.asp; last accessed on Jan. 5, 2004.
18. http://ogasawalrus.com/MobileDot/1037259076/index_html; last accessed on Jan. 5, 2004.
19. R.D. Brown, “Example-Based Machine Translation in the Pangloss System,” Proc. Int. Conf. on Computational Linguistics, pp. 169-174, 1996.
20. L.D. Harmon, “The Recognition of Faces,” Scientific American, vol. 229, pp. 71-82, October 1974.
21. A.J. Goldstein, L.D. Harmon, and A.B. Lesk, “Identification of Human Faces,” Proc. IEEE, vol. 59, pp. 748-760, May 1971.
22. M.A. Fischler and R.A. Elschlager, “The Representation and Matching of Pictorial Structures,” IEEE Trans. Computers, vol. 22, no. 1, 1971.
23. W.W. Bledsoe, “Man Machine Facial Recognition,” Technical Report PRI 22, Panoramic Research Inc., August 1966.
24. R. Brunelli and T. Poggio, “Face Recognition: Features vs. Templates,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 12, no. 1, 1990.
25. S. Ranganath and K. Arun, “Face Recognition Using Transform Features and Neural Network,” Pattern Recognition, vol. 30, pp. 1615-1622, 1997.
26. S. Lawrence, C. Giles, A. Tsoi, and A. Back, “Face Recognition: A Convolutional Neural Network Approach,” IEEE Trans. Neural Networks, vol. 8, pp. 98-113, 1997.
27. M. Turk and A. Pentland, “Eigenfaces for Recognition,” Journal of Cognitive Neuroscience, vol. 3, no. 1, 1991.
28. I. Craw, N. Costen, T. Kato, and S. Akamatsu, “How Should We Represent Faces for Automatic Recognition?” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 21, no. 8, pp. 725-736, 1999.
29. P. Belhumeur, J. Hespanha, and D. Kriegman, “Eigenfaces vs. Fisherfaces: Recognition Using Class Specific Linear Projection,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 19, no. 7, pp. 711-720, 1997.
30. L. Wiskott, J.-M. Fellous, N. Kruger, and C. von der Malsburg, “Face Recognition by Elastic Bunch Graph Matching,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 19, no. 7, pp. 775-779, 1997.
31. Y. Gao and M. Leung, “Face Recognition Using Line Edge Map,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 24, no. 6, pp. 764-779, 2002.
32. G.R. Day and R.H. Barbour, “Automated Melanoma Diagnosis: Where Are We At?” Skin Research and Technology, vol. 6, pp. 1-5, 2000.
33. W.V. Stoecker and R.H. Moss, “Editorial: Digital Imaging in Dermatology,” Computerized Medical Imaging and Graphics, vol. 16, no. 3, pp. 145-150, 1995.
34. Z. Zhang, W.V. Stoecker, and R.H. Moss, “Border Detection on Digitized Skin Tumor Images,” IEEE Trans. Medical Imaging, vol. 19, no. 11, pp. 1128-1143, November 2000.
35. D.H. Chung and G. Sapiro, “Segmenting Skin Lesions with Partial-Differential-Equations-Based Image Processing Algorithms,” IEEE Trans. Medical Imaging, vol. 19, no. 7, pp. 763-767, July 2000.
36. P. Schmid, “Segmentation of Digitized Dermatoscopic Images by Two-Dimensional Color Clustering,” IEEE Trans. Medical Imaging, vol. 18, no. 2, pp. 164-171, Feb. 1999.
37. M. Herbin, A. Venot, J.Y. Devaux, and C. Piette, “Color Quantitation through Image Processing in Dermatology,” IEEE Trans. Medical Imaging, vol. 9, no. 3, pp. 262-269, Sept. 1990.
38. G.A. Hance, S.E. Umbaugh, R.H. Moss, and W.V. Stoecker, “Unsupervised Color Image Segmentation: With Application to Skin Tumor Borders,” IEEE Engineering in Medicine and Biology Magazine, vol. 15, no. 1, pp. 104-111, Jan.-Feb. 1996.
39. M. Hintz-Madsen, L.K. Hansen, J. Larsen, E. Olesen, and K.T. Drzewiecki, “Design and Evaluation of Neural Classifiers Application to Skin Lesion Classification,” IEEE Workshop on Neural Networks for Signal Processing (NNSP'95), pp. 484-493, 1995.
40. F. Ercal, A. Chawla, W.V. Stoecker, H.-C. Lee, and R.H. Moss, “Neural Network Diagnosis of Malignant Melanoma from Color Images,” IEEE Trans. Biomedical Engineering, vol. 41, no. 9, September 1994.
41. http://www.dermis.net/index_e.htm; last accessed on Jan. 1, 2004.
42. http://dermatlas.med.jhmi.edu/derm/; last accessed on Jan. 1, 2004.
43. P.M. Fitts, “The Information Capacity of the Human Motor System in Controlling the Amplitude of Movement,” Journal of Experimental Psychology, vol. 47, pp. 381-391, 1954.
44. F. Attneave, “Some Informational Aspects of Visual Perception,” Psychological Review, vol. 61, pp. 183-193, 1954.
45. G. Miller, “The Magical Number Seven Plus or Minus Two; Some Limits on Our Capacity for Processing Information,” Psychological Review, vol. 63, pp. 81-97, 1956.
46. B.A. Myers, “A Brief History of Human Computer Interaction Technology,” ACM interactions, vol. 5, no. 2, pp. 44-54, March 1998.
47. B. Myers, S.E. Hudson, and R. Pausch, “Past, Present and Future of User Interface Software Tools,” in J.M. Carroll, ed., HCI in the New Millennium, New York: ACM Press, Addison-Wesley, pp. 213-233, 2001.
48. E. Horvitz, “Principles of Mixed-Initiative User Interfaces,” Proc. CHI ’99, ACM SIGCHI Conf. Human Factors in Computing Systems, pp. 159-166, May 1999.
49. S.V. Rice, G. Nagy, and T.A. Nartker, Optical Character Recognition: An Illustrated Guide to the Frontier, Kluwer Academic Publishers, 1999.
50. S.E. Palmer, Vision Science: Photons to Phenomenology, MIT Press, 1999.
51. I. Biederman, “Recognition-by-Components: A Theory of Human Image Understanding,” Psychological Review, vol. 94, pp. 115-147, 1987.
52. E.E. Cooper and T.J. Wojan, “Differences in the Coding of Spatial Relations in Face Identification and Basic-Level Object Recognition,” Journal of Experimental Psychology: Learning, Memory, and Cognition, vol. 26, no. 2, pp. 470-488, 2000.
53. M.J. Tarr and H.H. Bülthoff, “Image-Based Object Recognition in Man, Monkey, and Machine,” Cognition, Special Issue on Image-Based Recognition in Man, Monkey, and Machine, vol. 67, pp. 1-20, 1998.
54. D.G. Stork, “Toward a Computational Theory of Data Acquisition and Truthing,” Proc. Computational Learning Theory (COLT 01), David Helmbold (editor), Springer Series in Computer Science, 2001.
55. D.G. Stork, “Using Open Data Collection for Intelligent Software,” IEEE Computer, pp. 104-106, October 2000.
56. M. Raghunath, C. Narayanaswami, and C. Pinhanez, “Fostering a Symbiotic Handheld Environment,” IEEE Computer, September 2003.
57. P.J. Phillips, H. Moon, S.A. Rizvi, and P.J. Rauss, “The FERET Evaluation Methodology for Face-Recognition Algorithms,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 22, no. 10, pp. 1090-1104, 2000.
58. K. Okada, J. Steffans, T. Maurer, H. Hong, E. Elagin, H. Neven, and C.v.d. Malsburg, “The Bochum/USC Face Recognition System and How it Fared in the FERET Phase III Test,” Face Recognition: From Theory to Applications, Eds. H. Wechsler, P.J. Phillips, V. Bruce, F.F. Soulie, and T.S. Huang, Springer-Verlag, pp. 186-205, 1998.
59. C.C. Tappert, “Students Develop Real-World Web and Pervasive Computing Systems,” Proc. E-Learn 2002 World Conf. on E-Learning in Corporate, Government, Healthcare, and Higher Ed., Montreal, Canada, October 2002.