Summary Report - International Council for Scientific and Technical

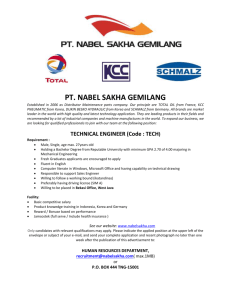
