Forecasting

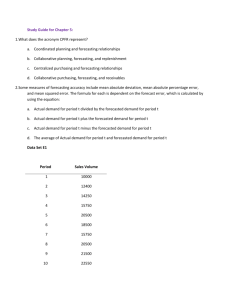

advertisement

Forecasting Handout for G12, Michaelmas term 2001 Lecturer: Stefan Scholtes The aim of forecasting is to predict a future value of a variable such as temperature, amount of rainfall, demand for a product, interest rate, air quality index, etc. A typical forecasting process proceeds as follows: i) Construct a parametric model of the data generating process. If possible, this model should incorporate as much information as possible about the nature of the data generating process (e.g. diffusion models for weather forecasts). Use historic data to estimate the unknown parameters in the model (model fit). Compute the forecasted values. Subject the forecast to expert judgement, intuition and common sense and adjust if necessary. ii) iii) iv) Time series. To get an idea about the variable that one wishes to forecast, it is most sensible to begin with a graphical plot of historic data, ordered by time points. Such data is called a time series. A typical time series plot looks like this: Time Series 200 180 160 Demand 140 120 100 80 60 40 20 0 0 10 20 30 Tim e 40 50 The above time series seems to show an upwards trend. There is also apparently some cyclical buying behaviour as well as two seasons, a downwards season in the middle of the year and an upward season towards the end of the year. Finally, there is obviously quite a bit of erratic buying behaviour present. It helps to analyse these structural components of a time series separately. To do this, we start from a model Yt t ct st t where t , ct , st are the deterministic trend, cyclic and seasonal components of the series, while t is a random variable which models the erratic behaviour. Trend. A trend is a monotonic (increasing or decreasing) function of time. The simplest such function is a linear trend t a bt . Other popular trend models are quadratic a bt ct 2 , exponential a be ct , or logarithmic a b ln( ct ) . If t a , independently of time t then we say that there is no trend in the time series. Cycles. Cycles are typically modelled using sine and cosine functions 2t 2t ct c sin( ) d cos( ), p p where p is the cycle length, i.e. ct ct p , c 2 d 2 is the maximal value of ct c during a cycle, and t s arctan( ) is the times at which this maximal value is d achieved. Whilst c, d can be estimated on the basis of historic data, using regression, the analyst has to provide the cycle length p . This is often done on the basis of knowledge of the underlying process. One may for instance know that ones customers tend to buy either at the end or beginning of a month and that demand in the middle of the months is rather low. Seasons. Seasons are periods where the behaviour of the time series is notably different from the behaviour outside of the season. The difference between a season and a cycle is that in a cycle the change is continuous over time and, at least in our modelling of cycles, a regular up and down, whilst in a seasonal pattern, the change in the time series can be discontinuous as time moves into a season. A popular way of modelling seasons is through so-called dummy variables (sometimes also called indicator variables) which take on values 0 or 1. Their value is 1 at times t during the season and 0 at times t outside the season. The model for this is st ei X it , where e i is a parameter and X it is a variable under your control with 1 if t is in the season X it 0 if t is outside the season As in the case of the cycle length, the analyst has to specify the seasons and thereby give X it their respective values for the historic time series at hand. Regression can then be used to estimate the parameters e i which amount to a constant increase or decrease of the time series variable (e.g. demand) during the season. Seasonal patterns are more general than cycles and a seasonal model with dummy variables can be fit to pure cyclic behaviour if sufficiently many dummy variables are used. The decomposition of a time series into trend, cycles, seasons and erratic behaviour ( t yt t ct st ) is illustrated in the following graph: Decomposition of Time Series 200 150 Trend 100 Cycles Seasons Random 50 Demand 0 -50 0 10 20 30 40 50 Tim e The random component. Our ability to forecast values of the time series variable depends obviously on our knowledge of the distribution of the erratic behaviour. The simplest assumption would be to assume that the random components t are independent and identically distributed (i.i.d) for all t and have zero means. This is the main assumption for the statistical analysis of regression models. Because of the independence assumption, earlier observations of the time series do not provide information about subsequent observations. The best forecast for the pure random component in the i.i.d. case is its expectation zero. The last graph has been generated with an i.i.d random component with normal distribution and zero mean. Unfortunately, many time series show correlations between the random components e t . This has far reaching consequences since it means that the statistical analysis of time series cannot rely on the fairly simple analysis of regression models, which assumed independent random errors. A popular model for the dependent random components in time series is a so-called autoregressive process: t a b1 t 1 ... bk t k ut , where the u t ’s are i.i.d random variables. The difference to the pure i.i.d model is that the expected value of t depends on the observed random effects at earlier times t 1,..., t k . Such a process is model is called AR(k), where k is the number of earlier time periods that are taken into consideration. Such autoregressive models are the basis for a sophisticated forecasting technique called the Box-Jenkins method. The presentation of this approach goes beyond the scope of this introduction and can be found in most books on time-series analysis. Digestion. We have seen general models for trends, cycles, seasons and random components of a time series. The models can be additively combined to a parametric model of the time series. The unknown parameters of this model are typically estimated by fitting the model to historic data, e.g., by using a least squares approach. The statistical analysis of such an approach is more complicated than that of a standard regression model since random errors in time series are typically correlated and also since forecasting, by its very nature, aims to predict the value outside of the range of the independent variable (time) of the historic data. We have seen that regression estimators tend to become very volatile outside of the range of historic x values (here t -values). Because of the difficulty of a sound statistical analysis for forecasting procedure, forecasting is much more an art than standard regression modelling. Whenever a forecast is being made, it should be subjected to expert judgement and common sense and adjusted if necessary. Fitting a forecast model. As with regression models, forecasting models involve unknown parameters p that are usually determined so that the model fits the data reasonably well. i) Root mean square error (RMSE): Recall that the standard measure of fit for regression models was the sum of squared deviations of model prediction from observation. We called this measure the sum of squared errors, SSE ( p) and wanted it to be as small as possible. The same set of “optimal” parameters would be obtained if we minimised the so-called mean square error SSE ( p) / n since the number of data points n is fixed and the minimizer of a function f does not change if the function is multiplied by a positive constant. More generally, g ( f ( x)) has the same minimizers as f (x) if g (.) is strictly increasing on the range of values of f . Therefore, the least squares parameters minimise not only the mean square error but also its root SSE( p) / n , which is called the root mean square error. This measure is often used to compare fits for forecasting models. Bear in mind that it is just a monotonic transformation of the sum of squared errors. ii) Forecast bias: Forecasting is often done by heuristic procedure which may well be biased. The forecast bias is defined by FB ( f (t , p) y ) t # of observatio ns in the times series . If FB is significantly positive then the forecast tends to be too high, while a negative value of FB indicates that it is too low. It may sometimes be sensible to correct for bias by subtracting FB from the forecast. However, it may be more sensible to adjust the parameters of the forecasting model to reduce the bias. iii) It is very important to graphically check the forecasting model against the time series before it is used. You should in particular check the ability of the model to predict turning points in the series. The prediction of turning points is vitally important for companies since it allows them to adjust their production and inventory policies to future changes in the market. Forecasters are often willing to sacrifice small mean square errors and/or small bias for accurate predictions of turning points. The fitting of a forecasting model to time series data can be conveniently done with an Excel add-in called Solver. It allows you to solve (reasonably small) optimisation problems. To invoke it, choose the solver command from the tools menu1 and you will see the solver command dialog box. In the case of curve fitting, input as target cell the cell that computes the fitting measure, e.g. the sum of squared errors. Click on Min for minimisation, and input under changing cells the array that contains the parameters of your model. Now click on solve and the solver will return the set of parameters that minimises your fitting measure.2 Try this out with the spreadsheet Time Series Fit.xls, workbook “Fitting a nonlinear model”. Here the fitting measure is in cell K7 and the parameters are in cells B7:B13. Notice that the parameter p is not variable; it has been fixed to the true value 10. Play with this parameter and resolve to see how sensitive the result depends on p. In fact, you may want to include it as an optimisation variable, i.e. re-run the optimisation with changing cells B7:B14. By doing this you have reduced your fitting measure, i.e. the new model fits the data better. But was that a good idea if you compare the results with the true parameters? De-seasoning time series. We have seen above that seasonality can be modelled by using dummy variables, one for each season. The parameters in such a model can then be estimated using, e.g., a least squares criterion. There is a simple alternative way of dealing with seasonality: We can “de-season” the data first, then forecast values for the de-seasoned series and “re-season” again. There are various ways of “de-seasoning” a time series. They basically all assume that the analyst has identified the number and timing of the seasons, either from the underlying data generating process or from inspection of the time series. Suppose we are looking at seasons over a year and have several years of data. Then for each year you compare the annual period average in year i 1 If there is no solver command under the tools menu, click Add-ins and activate solver add-in. If there is no solver add-in in the list of add-ins, you will have to load it from the Excel CD (your computer officer can probably help you with this). 2 Generally speaking, the solver will only return a local optimum which depends on the starting values for your parameter set. In the case of fitting a “base”-function type model f ( x, p) pi fi ( x) the solution will be the globally optimal solution, independently of the initial values of the parameters. You will learn more about the solver in the second part of G12. y Ai t t in year i # measuremen ts in year i with the period average in season j of year i : y S ij t t in season j of year i # measuremen ts in season j of year i . The seasonal factor for season j of year i is then S ij / Ai and the overall seasonal factor for season j is the average seasonal factor over all years in the time series sj S ij / Ai all years i # years in the time series . A season that tends to overshoot the annual average will have a seasonal factor larger than 1. To de-season the time series, you divide the time series values during the season by the corresponding seasonal factor. Then you perform a forecast for the deseasoned time series, e.g. by exponential smoothing. Afterwards this, you re-season again by multiplying the respective seasonal factor to the forecast.3 Smoothing procedures. Since a statistical analysis of the “best fit” forecasting models is difficult, heuristic procedures, based on the idea of “smoothing” the time series, are often used for forecasting. The simplest procedure is a moving average: Given the time series up to time t 1, forecast for time t is Ft yt 1 ... yt m . m Finding the right value of m is again not easy. The more volatile the time series is, the larger m should be to allow for the cancellation of positive and negative fluctuations. Considerable experience is required to obtain useful forecasts by a moving average approach. A very popular alternative to moving averages is the so-called exponential smoothing procedure. Given a time series y1 ,..., yT , this procedure generates an associated “smoothed” time-series of forecasts F1 ,..., FT 1 starting with F1 y1 according to the formula 3 Our computation of seasonal factors here was based on averaging the annual seasonal factors over all years in the time series. If there is a believe that the impact of seasons change over time, then there are alternative ways of computing the seasonal factors to be used in the forecast. One such way is to look at the time series of seasonal factors s j and use variants of the exponential smoothing techniques explained in the sequel. Ft 1 Ft Et , where Et yt Ft is the forecast error of the previous period and is a constant between 0 and 1. Notice that for 0 we make never update our forecast, while for 1 our forecast is the “last-value” forecast. The smaller the parameter the “smoother” the series of predictions Ft . In exponential smoothing, the time series is used to “train” the forecast. The forecast value Ft 1 depends only on the value yt and the previously generated forecast Ft and therefore the “smoothed” series FT 1 . The value FT 1 is the forecast for the next period in the future. Exponential smoothing does not perform particularly well in the presence of a trend. One way of addressing this problem is to first estimate by fitting a suitable regression model to the time series data and then subtracting the estimated trend from the data. This new “de-trended” time series can then be forecast using e.g. exponential smoothing. After this, the trend is added again to the data. An extension of exponential smoothing is the so-called double exponential smoothing which explicitly incorporates a trend. This procedure is based in a linear model E ( yt ) a bt . Notice that E ( yt 1 ) E ( yt ) b . This identity is the basis for the double exponential smoothing procedure, which forecasts E ( y t 1 ) as a sum of two components Ft 1 A Bt , t Base level Trend where At is a proxy for E( y t ) and Bt is a proxy for b E ( yt ) E ( yt 1 ) . If we replace the expectation by the observation, i.e. set At y t and Bt At At 1 , then we track the time series data, lagging one time step behind. To incorporate a smoothing component, we update At according to the exponential smoothing formula At At 1 ( yt ( At 1 Bt 1 )) . Notice that Bt 1 occurs here since our prediction of yt is At 1 Bt 1 . Having this update of At , we could use the formula Bt At At 1 . However, it turns out that this incorporates substantial fluctuations into our prediction of the trend slope b . It is better to smooth these fluctuations out by smoothing the series of values At At 1 . This is done in the same way as the smoothing of the original time series yt , i.e. rather than taking the last observation At At 1 as a prediction of b , we use the last observation corrected by a multiple of the “prediction error”: Bt Bt 1 ( At At 1 Bt 1 ) where is a suitable constant between 0 and 1, typically an order of magnitude smaller than . The method is fairly sensitive to the initial value of the trend at time t=0. A sensible starting value is the slope of a linear regression line through the time series. The following plot illustrates the advantage of two-parameter exponential smoothing in the presence of trend. Time Series 100 90 80 70 Time Series 60 Single exponential smoothing 50 Double exponential smoothing 40 Linear (Time Series) 30 20 10 0 1 11 21 31 41 51 Tim e Both, single and double exponential smoothing forecasts capture the cyclic pattern of the time series reasonably well, but the single forecast is biased towards lower values, as can be seen by comparing it with the regression line. The double exponential forecast allows us not only to compute a forecast FT 1 for one period ahead of the time series but also to forecast several periods ahead by FT m FT 1 (m 1) BT . Not only trends are badly caught by one-parameter exponential smoothing; it does also not cope well with seasonal patterns. In practice, seasonality is often dealt with by de-seasoning the data, e.g., as explained above. An alternative is the so-called triple exponential smoothing method, which depends on three parameters and incorporates trend as well as seasonality explicitly. Details can be found in most books on forecasting. Error estimates. One way of getting an idea of the potential error level involved in a forecast is to do a theoretical analysis of the distribution of the forecast error. This can be done under simplifying assumptions, e.g., along the lines of our probabilistic analysis of predictions of simple regression models. A simpler alternative to a theoretical analysis of the forecast error is to check how well the exponential smoothing procedure predicted values in the past and project that into the future. To do this, de-season (and in the case of single exponential smoothing de-trends) the time series. Give the smoothing procedure a “run-in” time of several periods (the number depends on the size of your time series). After the run-in time record the errors made by the exponential smoothing forecast. Take the empirical distributions of these errors as a proxy of the distribution of the errors for your forecast. A typical empirical cumulative distribution function looks like this (see Champagne Sales.xls): CDF of Forecast Errors 100.0% 90.0% 80.0% 70.0% 60.0% 50.0% 40.0% 30.0% 20.0% 10.0% 0.0% -1.6 -1.4 -1.2 -1 -0.8 -0.6 -0.4 -0.2 0 0.2 0.4 0.6 0.8 1 1.2 1.4 1.6 1.8 2 2.2 Error An empirical 90% confidence interval can now be obtained, by cutting off the 5% lowest and 5% highest errors, i.e. it is of the from [Forecast - u, Forecast + v], where u is such that F (u ) 5% and v is such that F (v) 95% . If you wish to estimate the distribution of forecasting errors several periods ahead, you must compute the historical forecasting errors several periods ahead. The further ahead you forecast, the larger the confidence intervals become. Judgemental forecasting. We have mentioned already that forecasting is more of an art than a science. Analytical forecasting techniques aim to recover the generating mechanism for a time series from historic data. The two techniques that we have considered, regression and exponential smoothing, suffer from different flaws. Regression assumes that you start with the “right” model to begin with and only adjust the parameters. Exponential smoothing only picks up information that is “present” in the time series. Neither procedure includes potentially available “outside” information such as, e.g., the knowledge that a new competitor is about to enter the market, that a new technology is emerging, or that demand patterns are changing. To account for this flaw, it is very important to subject the technical forecasting analysis to expert knowledge and common sense, making clear all assumptions used in the forecast. Expert opinion can be obtained by talking to individual managers or to a jury of executives. An interesting approach is the so-called Delphi method: Ask the members of a jury independently about their forecast4. Then reveal all these forecasts (or some summary statistics) to all members of the jury and ask them to adjust their forecast in the light of the forecast of the other jury members. Continue until no one adjusts their forecast anymore. If you are lucky, this process converges somehow to a consensus or close to a consensus. If not, then there is a substantial divergence of opinion – which is important to bear in mind in decision making. To form an own opinion, the decision maker can invite the jury to a meeting for a discussion of their diverging opinions. An often applied judgemental forecasting technique for demand estimates is the salesforce composite. Estimates are created by the sales force and sent up through the hierarchy, possibly adjusted by the line managers. Obviously, consumer surveys or test marketing can be invaluable complements to technical demand forecasts, in particular if there is substantial uncertainty, as in the case of a substantially altered product or price. References. Please read Chapter 18 of Hillier and Lieberman, Introduction to Operations Research. More in-depth information on forecasting and its applications in a business context can be found in specialized texts such as J.E. Hanke, D.W. Wichern, A.G. Reitsch, Business Forecasting, Prentice Hall 2001. 4 It is better to ask for an interval than for a point forecast. Also, one of the jury members may well be a “technical” forecaster, who uses analytic techniques like the ones explained earlier.