5. Problem Solving Methods

advertisement

Chapter 15 - Introduction to Problem Solving Methods

15.1 Greedy Method

15.2 Divide-and-Conquer

15.3 Backtracking

15.4 Dynamic Programming

15.5 Branch-and-Bound

Chapter 15 - Introduction to Problem Solving Methods

In this chapter we review the major problem solving methods used in the design of programs.

These methods are more general than any particular algorithm and are the basis of most applied

numerical and semi-numerical algorithms.

15.1 Greedy Method

The greedy method is a problem solving approach that makes the best immediate (i.e. locally

optimal) decision at each step toward the overall solution. When this approach results in the best

possible answer to the problem, we say that the problem is amenable to the greedy method. An

example of a problem for which the greedy method can give the best answer (i.e. optimal

solution) is the coin changing problem.

Coin Changing

The coin changing problem is to determine the minimum number of coins required to equal a

specified amount of change. There is a greedy optimal solution for the coin denominations

currently minted by the U.S. government, namely 50, 25, 10, 5 and 1 cents.

The greedy coin changing algorithm states, always deduct the largest coin denomination that is

no greater than the remaining change value until the remaining value is zero. A step algorithm for

this technique is,

Step 1: Set remaining_value = input change value.

Step 2: Find the largest coin denomination that is remaining_value.

Step 3: Deduct the coin value found in Step 2 from remainin_value and increment

the coin count for the corresponding coin denomination. If remaining_value

is > 0 then return to Step 2 else continue.

Step 4: Display coin counts.

We can implement a more efficient algorithm for coin changing by using integer division and the

mod function, as shown in the code segment below.

coin_set : array(1..5) of integer := (50,25,10,5,1);

num_coins : array(1..5) of integer := (0,0,0,0,0);

get(remval);

for i in 1..5 loop

num_coins(i):=remval/coin_set(i);

remval:=remval mod coin_set(i);

end loop;

So, for a change amount of 62 cents we would obtain a coin count of (1,0,1,0,2) for coin

denominations of (50,25,10,5,1) for a total of 4 coins. This is the minimum number of coins that

will total 62 cents.

It is interesting to note that this coin changing algorithm does not result in the minimum number of

coins for all coin sets. For example, consider the same change amount of 62 cents with the coin

denominations (50,26,10,5,1). Here, we have replaced the quarter with a 26-cent piece. The

greedy algorithm gives us a coin count of (1,0,1,0,2) or 4 coins, but we can make the same

change value with only three coins (0,2,1,0,0). As shown by this simple example, we have to be

careful about our claims of optimality with respect to our computer algorithms.

When the greedy method works it is the preferred problem solving approach because of its

efficiency. Since it only looks at immediately available data it never takes time to compute

alternatives. To get a better appreciation for the advantage of the greedy method, consider an

alternative algorithm for coin changing that would give the minimum number of coins for any coin

set.

Minimal Spanning Tree

Another example problem amenable to a greedy solution is the minimal spanning tree problem.

In this problem you are to find the minimum weight tree embedded in a weighted graph that

includes all the vertices.

1

2

C

B

3

4

A

G

2

I

4 1

6

2

5

F

3

D

H

3

5

1

1

E

A

B

C

D

E

F

G

H

I

A

4

–

5

1

–

B

4

–

2

3

1

C

–

2

–

4

1

2

-

D

5

3

4

–

5

–

-

E

1

–

5

–

3

1

-

F

1

3

–

6

3

2

G

2

–

6

-

H

1

3

-

I

1

2

-

Figure 15-1: Sample Weighted Graph and Associated Adjacency Matrix

The answer to the minimal spanning tree problem is a subset of edges from the weighted graph.

There are a number of algoirthm that use the greedy method to find the subset of edges that span

the nodes in the graph and whose total weight is a minimum. This minimal spanning subset of

edges will always be a tree. Why?

To implement an algorithm for the minimal spanning tree problem we need a data-representation

for weighted graphs. For human analysis, the graphical representation is sufficient, but for

computer analysis an edge list or adjacency matrix is preferred.

Prims Algorthm

Given a weighted graph G consisting of a set of vertices V and a set of edges E with weights,

ek (vi , v j , wk ) E and vi , v j V

where wk is the weight associated with the edge connecting vertex vi and vj. Prepare a vertex set

and an edge set to hold elements selected by Prim's Algorithm.

Step 1: Choose an arbitrary starting vertex vj

Step 2: Find the smallest weight edge e incident with with a vertex in the vertex set

whose inclusion in the edge set does not create a cycle.

Step 3: Include this edge in the edge set and its vertices in the vertex set.

Step 4: Repeat Steps 2 and 3 until all vertices are in the vertex set.

The edge set is the set of edges that have been chosen as member of the minimal spanning tree.

The vertex set is the set of vertices that are incident with a selected edge in the edge set. In

Prim's Algorithm the test for cycles is simple since we only need to ensure both vertices incident

with the new edge are not already in the vertex set. As shown in Figure 15-2 we may be forced

to choose from two or more equal weight edges.

1

2

C

B

A

I

1

4

2

F

1

E

partial solution

A

G

2

3

I

4 1

5

6

2

F

3

D

H

3

1

4

3

D

5

B

6

5

2

C

G

2

3

4

1

H

5 3

1

1

E

a completed minimal spanning tree

Figure 15-2: Sample Execution of Prim's Algorithm for Minimal Spanning Tree

In the example above, the partial solution shows that we may select either edge E-F or edge H-F

to be added to the edge set. In either case the added weight will be 3. This is why we refer to

the solution as the minimal spanning tree rather than the minimum spanning tree. Figure 15-2

also shows a complete solution whose total weight is 14. A bit of visual analysis of this graph

(staring at it for a while) should convince you that the embedded tree is a minimal spanning tree.

15.2 Divide-and-Conquer

The divide-and-conquer method involves dividing a problem into smaller problems that can be

more easily solved. While the specifics vary from one application to another, building a divideand-conquer solution involves the implementation of the following three steps in some form:

Divide - Typically this steps involves splitting one problem into two problems of

approximately 1/2 the size of the original problem.

Conquer - The divide step is repeated (usually recursively) until individual problem

sizes are small enough to be solved (conquered) directly.

Recombine - The solution to the original problem is obtained by combining all the

solutions to the sub-problems.

Divide and conquer works best with the size of the sub-problems are reduced in size by an

amount proportional to the number of sub-problems being generated. When the reduction in

problem size is small compared to the number of sub-problems divide-and-conquer should be

avoided. Therefore, we should avoid divide-and-conquer in the following cases:

An instance of size n is divided into two or more instances each of nearly n in size.

or

An instance of size n is divided into nearly n instances of size n/c (c is some constant).

To see why this is so, lets look at a graphical representation of the problem spaces for two cases

of divide-and-conquer.

Case 1: Starting with a problem of size n, divide-and-conquer divides each instance of size k into

two instances of size k/2 until k=1.

Figure 15-3: Problem Space for an Efficient Implementation of Divide-and-Conquer

The first instance (size n) gets divided into two instances of size n/2 each. Then each of these

gets divided into two instances of size n/4 (a total of 4 of these). Then D&C generates 8

instances of size n/8. At the kth stage D&C will have generated 2k instances of size n/2k. The

binary tree below represents the problem space for this D&C example.

As we have seen before the depth of this tree is log2n. The complexity of an algorithm exhibiting

this behavior is proportional to the number of nodes in the problem-space tree. How many nodes

are there in a complete binary tree of depth k? A binary tree of depth=k has 2k-1 nodes. In this

case we know that k=log2n so the number of nodes in the problem-space tree is 2log n - 1. But

2log n = n so the order of run time of this D&C algorithm is O(n).

Case 2: D&C divides each instance of size n into two instances of size n-1 until n=1.

Now the first instance (size n) gets divided into to instances of size n-1. The number of instances

doubles at each stage just as in Case 1, but now the depth, k of the problem-space tree is

determined by n-k = 1, or k = n-1. The total number of nodes in this tree is 2n-1 -1. Therefore the

order of run time of this D&C example is O(2n).

Figure 15-4: Problem Space for an Inefficient Implementation of Divide-and-Conquer

In Case 1 D&C is shown to be of linear order and therefore is an effective problem solving

method. Applying D&C in Case 2 results in exponential order so it would be better to choose

another method for this problem class.

Recursive Binary Search

The searching problems referenced in Chapter 13 were for both unordered and ordered lists. We

determined that, at most, n comparisons are needed to search for a particular value in an

unordered list of size n. For an ordered list, we presented an algorithm, called binary search, that

did not have to examine every value in the list to determine if a particular value was present. We

showed that the order of run time for binary search was O(log2n).

Now we will apply the divide-and-conquer problem solving method to the problem of searching an

ordered list. The function location( ) shown below is recursive. If the index lo is greater than hi,

the function returns 0 (i.e. this particular copy of location( ) did not find the value x). If the array

value S(mid) = x, then the index mid is returned. If x is less than the value S(mid) the function

calls itself with the index hi = mid-1. Finally if the value of x is greater than S(mid) then

location( ) is called with the index lo = mid + 1.

Analysis of Recursive Binary Search

We will now introduce recurrence relations as a method of analyzing recursive functions. The

recurrence relation T(n) below defines the amount of work done by the function location( ) in

terms n (the input problem size). If location( ) is called with n=1 (the termination condition) then

the amount of work performed (returning a 0) is constant which we label a C. Alternatively is n>1

then the function location( ) will perform some fixed number of operations plus it will call itself with

a problem size of n/2. We will label the fixed amount of work done as D (another constant) and

we will label the amount of work done by the additional call of location as T(n/2). So when n>1

we express the amount of work as T(n) = T(n/2) + D. In order to determine the complexity we

have to find a representation for T(n/2) as an explicit function of n. This is accomplished as

shown below.

There are a number of formal method for solving recurrence relations which we will not

emphasize in this course. It is important that you become familiar with the replacement method

shown above and, in particular, understand how to find the kth term for the recurrence. Notice

that since we define T(1) = C, we will be trying to replace T(f(n)) with T(1), which means we are

looking for the conditions under which f(n)=1. We then apply those conditions to the other terms

in the recurrence relation.

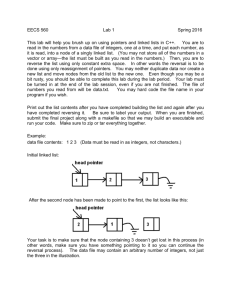

15.3 Backtracking

Backtracking is a form of exhaustive search that is used to find any feasible solution. For

example, we can use backtracking to solve a maze or to determine the arrangement of the

squares in a 15-puzzle. In order to implement backtracking we need a way to represent all

possible states of the problem or a way to generate all permutations of the potential solutions.

The possible (candidate) solutions or states of the problem are referred to as the problems state

space.

Backtracking is a modified depth-first search of the problem state-space . In the case of the

maze the start location is analogous to the root of an embedded search tree in the state-space.

This search tree has a node at each point in the maze where there is a choice of direction. The

dead-ends and the finish position are leaf nodes of the search tree. The children of each node in

the state-space tree represent the possible choices from that node, given the sequence of

choices that lead to the node. In the maxe solving problem, the branches of the search tree are

the allowed alternative paths in the state-space tree.

As shown in the example maze above the candidate solutions are the branches of a search tree.

The correct (feasible) solution is shown in green. This search tree is represents the state space

of the maze since is includes all reachable locations in the maze.

In backtracking we traverse the search tree of the state-space in a depth first order. Consider the

state-space tree shown below. Moving down the left-hand branches first we can generate the

sequence of nodes encountered in a depth-first order traversal of the tree. The nodes are

numbered in the order of a depth-first traversal of the tree below.

N-Queens

The N-Queens Problem is a classic problem that is solvable using backtracking. In this problem

you are to place queens (chess pieces) on an NxN chessboard in such a way that no two queens

are directly attacking one another. That is, no two queens share the same row, column or

diagonal on the board. In the examples below, we give two versions of backtracking as applied to

this problem.

Version 1 - We solve the 4-Queens Problem below as an example of brute-force backtracking.

Place 4 queens on a 4x4 board, in such a way that no two are in the same row, column or

diagonal. First we label the 16 squares of the board with letters A through P. Until all queens are

placed, choose the first available location and put the next queen in this position.

If queens remain to be placed and no space is left, backtrack by removing the last queens placed

and placing it in the next available position. Once all choices have been exhausted for the most

recent decision point, we backtrack to the next earlier decision point and choose the next

available alternative.

Starting with position A we find that the next position that is not under attack by the first queen is

G. In fact there are only 6 positions (G H J L N O) not under attack from position A. We place

the second queen in position G and see that there is only 1 remaining square not under attack by

one or both of these queens (position N). Once we choose N for the third queen we see that the

fourh queen cannot be placed. So we backtrack to the most recent decision point and choose the

next position for queen 2 (i.e. position H).

Continuing to traverse the state-space tree in a depth-first mode we find that queen two cannot

be placed at position H, J or L either. In fact we eventually exhaust all the alternatives for queen

2 and backtrack to the first decision. We have shown that the first queen cannot be in position A

(i.e. a corner of the board).

So we choose the next available location for queen 1. Notice that all the constraints for placing a

particular queen are based on the number and positions of the queens already placed. There is

no restriction on where the first queen may be placed so the next available position is B. From B

the six remaining positions are H I K M O P. We choose the first available position H for queen 2.

This leaves only I and O postions not under attack so we choose I for queen 3. Since O is not

under attack by any one of the first 3 queens we place queen 4 there, and the 4-Queens Problem

has been solved.

Notice that we did not have to traverse a large portion of the state-space tree before a solution

was found. This problem becomes much more difficult as the size of the board and the number

of queens increases.

Version 2 - Some analysis of this problem shows that, since N queens must be placed on an

NxN board, every row and column will have exactly one queen. That is, no two queens can share

a row or column, otherwise they would be attacking each other. Using this simple observation we

can redefine the state space of our algorithm.

We will associate each queen with 1 of n values representing the column for that queen. Now we

only have to find a row number, i for each queen Q j (the queen of the jth column). The statespace for this version of the 4-Queens Problem is much simpler.

We have 4 choices for the queen of the first column, three choices for the queen of the second

column, and so on. There are 4! = 24 nodes in the state-space tree for this version of the 4Queens Problem.

Hamiltonian Circuit

A Hamiltonian circuit (also called a tour) of a graph is a path that starts at a given vertex, visits

each vertex in the graph exactly once, and ends at the starting vertex. Some connected graphs

do not contain Hamiltonian circuits and others do. We may use backtracking to discover if a

particular connected graph has a Hamiltonian circuit.

Graph A

Graph B

Embedding a Depth-First State-Space Tree in a Connected Graph

A method for searching the state space for this problem is defined as follows. Put the starting

vertex at level 0 in the search tree, call this the zeroth vertex on the path (we may arbitrarily

select any node as the starting node since we will be traversing all nodes in the circuit). At level

1, create a child node for the root node for each remaining vertex that is adjacent to the first

vertex. At each node in level 2, create a child node for each of the adjacent vertices that are not

in the path from the root to this vertex, and so on.

Depth-First Traversal of Graph A

Hamiltonian circuit found

Depth-First Traversal of Graph B

no Hamiltonian circuit

Notice that the graph nodes may be represented by more than one node in the state space tree.

This is true since a particular graph node made be part of more than one path.

Implementing Backtracking

We have discussed a number of examples of depth-first search in the Backtracking problem

solving method. Now we will look at some of the details of implementing this method in a

computer program. We will use the Hamiltonian Circuit as our sample problem to solve.

Data Structure - First we will define a data structure to hold the graph representation. In this

problem, we will use an adjacency matrix W as shown below.

We will also define a one-dimensional array p of integers that will contain the labels of the

vertices in order of traversal.

We can now define a recursive algorithm hamiltonian(p,index) that performs a depth-first

traversal of the embedded state-space tree. This algorithm has the following form. We must

verify that the procedure hamiltonian(p,index) perform the correct operations for any stage in the

search for a Hamiltonian circuit.

For example, assume that we start with vertex v1. Let index be a location in the list p such that

p(index) is the most recent node entered. Let n be the number of nodes in the graph. The initial

call to hamiltonian( p,1) should be made with vertex v1 already loaded into p(1). The termination

segment of the pseudocode below is shown in red and the recursive segment is shown in green.

hamiltonian(p,index)

if index>n then

path is complete

display the values in p

else

for each node v in G

if v can be added to the path then

add v to path p and call hamiltonian(p,index+1)

end hamiltonian

Termination Segment - A Hamiltonian circuit has been created in p if the value of index (the next

position in the path list) is bigger than the number of nodes in the graph. If so, then this copy of p

must contain a path back the vertex v1. That is, p contains the indices of the nodes of the graph

in the order they must be traversed to complete a Hamiltonian circuit. If index is not greater than

n then there is still work to do.

Recursive Segment - The else part of the pseudocode builds all the children (if any) of p(index)

the node most recently added to the list p. The underlined phrase, v can be added to the path,

needs more explanation. A new node can be added to the path only under one of the following

criteria:

1. there are less than n nodes in p so far and v is not already in the path p and there is

an edge connecting v to p(i)

OR

2. there are exactly n nodes in p already and v is the first node in p and there is an

edge connecting v to p(n)

The Ada source code below is a working version of hamiltonian( ). The path list p is defined as an

in parameter (i.e. pass by value) so that each call to hamiltonian will carry with it, its own copy of

the path. We make a local copy of p called ptmp so that we can add another node to p to be

passed along in each recursive call to hamiltonian( ). This version of hamiltonian( ) generates

and displays all Hamiltonian circuits for the input graph. We could easily add a global boolean to

terminate the program as soon as the first Hamiltonian circuit is displayed.

procedure hamiltonian(p : in path_type; index : in integer) is

ptmp : path_type;

begin

if index>n then

display(p);

else

ptmp:=p;

for j in 1..n loop

if add_node_ok(p,index,j) then

ptmp(index+1):=j;

hamiltonian(ptmp,index+1);

end if;

end loop;

end if;

end hamiltonian;

Typical of recursive algorithms, the real work is hidden in the recursive conditional. The boolean

function add_node_ok( ) returns true if all the conditions listed in (1) or (2) for the recursive

segment have been satisfied. This implementation is not the most efficient possible. You are

strongly encouraged to follow your own path (no pun intended) when encoding complicated

logical constructs.

function add_node_ok(p:

index :

j :

isok : boolean;

begin

if W(p(index),j) then

isok:=true;

if index<n then

for k in 1..index

if p(k)=j then

isok:=false;

end if;

end loop;

else

if j>1 then

isok:=false;

end if;

end if;

else

isok:=false;

end if;

return isok;

end add_node_ok;

path_type;

integer;

integer) return boolean is

--A. there is an edge

--B. path is not done

loop

--C. new node has not

-been used already

--D. new node is not node 1

--E. there is no edge

The adjacency matrix W( ) has been implemented as a 2-D boolean array in which W(i,j)=true

indicates that there is an edge connecting node i to node j in the graph. This function is testing if

it is OK to add the node j to the path list p. We first check to see if there is an edge between the

node most recently added to the path p(index) and the new node j being considered (A) (the else

at E). If there is an edge then we check to see if we are at the end of a Hamiltonian circuit (i.e.

index=n). If index is less than n then we are not at the end of a circuit (B) and we then make sure

that the new node j is not already in the path p( ) (C). If index is equal to n then we just have to

make sure that we have returned to node 1

(D).

Reducing the Number of Computations in Backtracking - In those cases in which we need to find

any feasible solution to a problem, we could significantly reduce the number of computations

needed if we could develop some measure of the likelyhood that a particular path in the statespace tree would lead to an answer. Such measures of the merit of taking a particular path do

exist but they are problem-dependent.

In the n-queens problem, for example, we could use the number of open squares remaining as a

measure of merit. If a particular choice for placing a queen resulted in fewer open squares than

the number of queens left to place we could avoid following the branch in the state-space tree

altogether. Other more involved measures of merit could be devised to reduce the number of

computations even further.

In addtion to removing those branches in the state-space tree that cannot lead to a solution, we

can also arrange the order in which we traverse the tree by taking the paths most likely to lead to

a solution first. We will cover this issue in greater detail when we look at the Branch and Bound

method, alpha-beta pruning and relaxed algorithms.

15.4 Dynamic Programming

Dynamic programming is similar to divide-and-conquer in that the problem is broken down into

smaller subproblems. In this approach we solve the small instances first, save the results and

look them up later, when we need them, rather than recompute them.

1. characterize the structure of an optimal solution

2. recursively define the value of an optimal solution

3. compute the value of an optimal solution in a bottom-up manner

4. construct an optimal solution from computed information

Dynamic programming can sometimes provide an efficient solution to a problem for which divideand-conquer produces an exponential run-time. Occasionally we find that we do not need to

save all subproblem solutions. In these cases we can revise the algorithm greatly reducing the

memory space requirements for the algorithm.

The Binomial Coefficient - The binomial coefficient is found in many applications. It gets its name

from the binomial expansion. Given a two-element term (a+b) which we wish to raise to an

integer power n, we find that the expansion of these products produces a series with decreasing

powers of a, increasing powers of b and a coefficient

(a+b)0

(a+b)1

(a+b)2

(a+b)3

(a+b)4

=

=

=

=

=

1

1a+1b

1a2+2ab+1b2

1a3+3a2b+3ab2+1b3

4

1a +4a3b+6a2b2+4ab3+1b4

The binomial coefficient is also the number of combinations of n items taken k at a time,

sometimes called n-choose-k.

The binomial theorem gives a recursive expression for the coefficient of any term in the

expansion of a binomial raised to the nth power.

Binomial Coefficient Function

Divide-and-Conquer Version - This version of bin requires that the subproblems are recalculated

many times for each recursive call.

function bin(n,k : integer) return integer is

begin

if k=0 or k=n then

return 1;

else

return bin(n-1,k-1) + bin(n-1,k);

end if;

end bin;

Dynamic Programming Version - In bin2 the smallest instances of the problem are solved first

and then used to compute values for the larger subproblems. Compare the computational

complexities of bin and bin2.

function bin2(n,k : integer) return integer is

B : is array(0..n,0..k) of integer;

begin

for i in 0..n loop

for j in 0..minimum(i,k) loop

if j=0 or j=i then

B(i,j):=1;

else

B(i,j):=B(i-1,j-1)+B(i-1,j);

end if;

end loop;

end loop;

return B(n,k);

end bin2;

Applying the Dynamic Programming Method - Dynamic Programming is one of the most difficult

problem solving methods to learn, but once learned it is one of the easiest methods to implement.

For this reason we will spend some time developing dynamic programming algrotithms for a

variety of problems. Recall that the Dynamic Programming method is applicable when an optimal

solution can be obtained from a sequence of decisions. If these decisions can be made without

an error then we can use the Greedy Method rather than Dynamic Programming.

Shortest Path - Given a connected graph G={V,E} comprised of a set of vertices V and edges E

each with an associated non-negative weight w, find a shortest path from some vertex vi in a

connected graph to some other vertex vj. We define the set of vertices adjacent to vi by Ai. If vj is

in Ai and if the edge connecting vj to vi is the smallest weight edge connecting the set of edges A i

to vi then we know that the shortest path from vi to vj is the edge ei,j.

On the other hand, if vj is not adjacent to vi or if the edge ei,j is not the smallest weight edge

connecting vi to the members of Ai then we cannot be sure which edge to choose as an element

of the shortest path from vi to vj. Therefore, Greedy Method does not apply here. So, how do we

apply Dynamic Programming?

In this case we will attempt to solve the larger problem of finding the shortest path from v i to every

other vertex and, in the process, find the shortest path from vi to vj. Starting with the vertices in Ai

we note that the minimal weight edge connected to vi must be the shortest path from vi to the

vertex connected to vi (say vk) by this edge. We know this is true since any other path from v i to

vk not including ei,k would include an edge at least as large as ei,k. From the point of view of

finding the shortest path between two particular nodes, Dijstra's single-source shortest path

algorithm is an example of dynamic programming. For the problem of finding the shortest path

from a particular node to every other node, Dijkstra's algorithm is an example of the Greedy

Method.

The Principle of Optimality - When the Greedy method does not apply we can enumerate all

possible decision sequences and then pick out the best. Dynamic Programming can significanly

reduce the amount of work required by brute-force enumeration by avoiding the decision

sequences that cannot possibly be optimal. In order to implement dynamic programming we

must apply the Principle of Optimality. As stated by Horowitz and Sahni,

"an optimal sequence of decisions has the property that whatever the initial state and

decision are, the remaining decisions must constitute an optimal decision sequence

with regard to the state resulting from the first decision."

The difference between the greedy method and dynamic programming is that in the greedy

method we generate only one decision sequence while in dynamic programming we may

generate many. What differentiates dynamic programming from brute-force enumeration is that,

in dynamic programming we attempt to generate only the optimal decision sequences rather than

all possible sequences.

Fibonacci Numbers - As you probably recall, Fibonacci numbers are computed by taking the sum

of the the two previous Fibonacci numbers. This rule can be applied iteratively to generate all

Fibonacci numbers but it needs to be primed with the first two Fibonacci numbers,

Fibo(0) = 0,

Fibo(1) = 1, and

Fibo(n) = Fibo(n-1) + Fibo(n-2)

As with binomial coeffients we can compute Fibonacci number recursively or by applying dynamic

programming.

function fibo( n : integer) return integer is

begin

if n<=1 then

return n;

else

return fibo(n-1) + fibo(n-2);

end if;

end fibo;

When we call this function for some value of n, fibo( ) calls itself twice. Once with a parameter

value of n-1 and once with n-2. This doubling of the number of calls at each level continues until

the termination condition n<=1 is reached. The binary problem-space tree that is generated is

not complete since half of the call have parameters reduced by 1 and half have parameters

reduced by 2 at each level

.

An exact complexity analysis of this algorithm is an exercise in solving recurrence relations. It can

be shown that this is still an exponential run-time problem O(n) where >1.6. What is important

to notice here is the large number of times the smaller values of Fibo( ) are recalculated.

Dynamic Programming comes to the rescue. We need to restructure the algorithm so that a table

of values is computed only once and then saved to be used to compute the larger values.

function fibo(n : integer) return integer is

type listype is array(1..?) of integer;

F : listype;

begin

F(0):=0;

F(1):=1;

for i in 2 to n loop

F(i):=F(i-1)+F(i-2);

end loop;

return F(n);

end fibo;

This is an incomplete function since we have not specified the upper limit of the array F. There

are methods in Ada for handling unspecified data structures as in most languages but in this case

we can see that we can trade a little speed to significantly reduce the memory requirements, and

still have an efficient algorithm.

function fibo(n : integer) return integer is

Fa,Fb,Fc : integer;

begin

Fa:=0;

Fb:=1;

for i in 2 to n loop

Fc:=Fa+Fb;

Fa:=Fb;

Fb:=Fc;

end loop;

return Fc;

end fibo;

The two additional assignment statements in the for_loop would not be signficant compared the

the space (memory) requirements of the previous version when n is large. It should be clear that

both these functions have a linear (i.e. O(n) ) complextity.

15.5 Branch-and-Bound

The branch-and-bound problem solving method is very similar to backtracking in that a state

space tree is used to solve a problem. The differences are that B&B (1) does not limit us to any

particular way of traversing the tree and (2) is used only for optimization problems. A B&B

algorithm computers a number (bound) at a node to determine whether the node is promising.

The number is a bound on the value of the solution that could be obtained by expanding the state

space tree beyond the current node.

Types of Traversal - When implementing the branch-and-bound approach there is no restriction

on the type of state-space tree traversal used. Backtracking, for example, is a simple kind of B&B

that uses depth-first search.

A better approach is to check all the nodes reachable from the currently active node (breadthfirst) and then to choose the most promising node (best-first) to expand next. An essential

element of B&B is a greedy way to estimate the value of choosing one node over another. This is

called the bound or bounding heuristic. It is an underestimate for a minimal search and an

overestimate for a maximal search. When performing a breadth-first traversal, the bound is used

only to prune the unpromising nodes from the state-space tree. In a best-first traversal the bound

also can used to order the promising nodes on the live-node list.

Traveling Salesperson B&B - In the most general case the distances between each pair of cities

is a positive value with dist(A,B)? dist(B,A). In the matrix representation, the main diagonal values

are omitted (i.e. dist(A,A)?0).

We can use the greedy method to find an initial candidate tour. We start with city A (arbitrary)

and choose the closest city (in this case E). Moving to the newly chosen city, we always choose

the closest city that has not yet been chosen until we return to A.

Our candidate tour is A-E-C-G-F-D-B-H-A with a tour length of 28. We know that a minimal tour

will be no greater than this. It is important to understand that the initial candidate tour is not

necessarily a minimal tour nor is it unique. If we start with city E for example, we have, E-A-B-HC-G-F-D-E with a length of 30.

Defninig A Bounding Heuristic - Now we must define a bounding heuristic that provides an

underestimate of the cost to complete a tour from any node using local information. In this

example we choose to use the actual cost to reach a node plus the minimum cost from every

remaining node as our bounding heuristic.

h(x) = a(x) + g(x)

where,

a(x) - actual cost to node x

and

g(x) - minimum cost to complete

Since we know the minimum cost from each node to anywhere we know that the minimal tour

cannot be less than 25. Now we are ready to search the problem space tree for the minimal tour

using the branch-and-bound problem solving method.

1. Starting with node A determine the actual cost to each of the other nodes.

2. Now compute the minimum cost from every other node 25-4=21.

3. Add the actual cost to a node a(x) to the minimum cost from every other node to determine a

lower bound for tours from A through B,C,. . .,H.

4. Since we already have a candidate tour of 28 we can prune all branches with a lower-bound

that is ? 28. This leaves B, D and E as the onlypromising nodes.

5. We continue to expand the promising nodes in a best-first order (E1,B1,,D1).

6. We have fully expanded node E so it is removed from our live-node list and we have two new

nodes to add to the list. When two nodes have the same bounding values we will choose the one

that is closer to the solution. So the order of nodes in our live-node list is (C2,B1,B2,D1).

7. We remove node C2 and add node G3 to the live-node list. (G3,B1,B2,D1).

8. Expanding node G gives us two more promising nodes F4 and H4. Our live-node list is now

(F4,B1,H4,B2,D1).

actual cost of getting to G=11

9. A 1st level node has moved to the front of the live-node list. (B1,D5,H4,B2,D1)

10. (H2,D5,H4,B2,D1)

11. (D5,H4,B2,D1)

12. (H4,B2,D1)

13. (B2,D1)

14. (H3,D1)

15. (D1)

16.We have verified that a minimal tour has length >= 28. Since our candidate tour has a length

of 28 we now know that it is a minimal tour, we an use it without further searching of the statespace tree.

_

____________________________________________________________________________

Exercises:

5.1. Write an Ada program to find an arrangement of queens for the 8-Queens Problem.

5.2 Answer the following questions concerning Exercise

a. How many arrangements are possible for the 8 queens whether or not an arrangement is a

solution?

b. How many arrangement are there if each queen must be in its own row and column?

c. As the problem is made larger, do you think that the number of solutions increases or

decreases? Why?

d. Is there a solution for all n queens problems n>3? Argue for or against the conjecture that there

is always at least one solution for n>3. If you argue agains the conjecture you should try to

provide a counterexample.

5.3. Implement your own version of the Hamiltonian Circuit Algorithm.

a. Use your program to generate all Hamiltonian circuits for the sample graph shown below.

b. Modify your program to generate only one Hamiltonian circuit and then terminate. It is not

enough to simply block the output. You modification must actually force the program to terminate

normally (i.e. without a runtime errors or exception being thrown.)

Homework 4.1: You have seen two methods for computing binomial coefficients based on a

recurrence relation. Using the recursive expression for binomial coefficients provided create

another version of this function that has a lower order of complexity than either bin or bin2.

Develop, implement and test your version of this function and then derive its order of complexty.

Hint: simplify the factorial terms algebraically and capture their essential computations in a single

loop.