Clustering summary

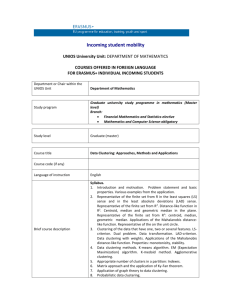

Clustering of the self-organizing map

Vesanto, J. Alhoniemi, E. Neural Networks Res. Centre, Helsinki Univ. of Technol., Espoo; This paper appears in: Neural Networks, IEEE Transactions on On page(s): 586-600 Volume: 11, May 2000 ISSN: 1045-9227 References Cited: 49 CODEN: ITNNEP INSPEC Accession Number: 6633557

Abstract: The self-organizing map (SOM) is an excellent tool in exploratory phase of data mining. It projects input space on prototypes of a low-dimensional regular grid that can be effectively utilized to visualize and explore properties of the data. When the number of SOM units is large, to facilitate quantitative analysis of the map and the data, similar units need to be grouped, i.e., clustered. In this paper, different approaches to clustering of the SOM are considered. In particular, the use of hierarchical agglomerative clustering and partitive clustering using K-means are investigated. The two-stage procedure (first using SOM to produce the prototypes that are then clustered in the second stage) is found to perform well when compared with direct clustering of the data and to reduce the computation time.

Index Terms: data analysis data mining learning (artificial intelligence) self-organising feature maps

Grid-clustering: an efficient hierarchical clustering method for very large data sets

Schikuta, E. Inst. of Appl. Comput. Sci. & Inf. Syst., Wien Univ.; This paper appears in: Pattern Recognition, 1996., Proceedings of the 13th International Conference on 08/25/1996-08/29/1996, 25-29 Aug 1996 Location: Vienna, Austria On page(s): 101-105 vol.2 25-29 Aug 1996 Number of Pages: 4 vol. (xxxi+976+xxix+922+xxxi+1008+xxix+788) INSPEC Accession Number: 5443777

Abstract: Clustering is a common technique for the analysis of large images.
In this paper a new approach to hierarchical clustering of very large data sets is presented. The GRIDCLUS algorithm uses a multidimensional grid data structure to organize the value space surrounding the pattern values, rather than to organize the patterns themselves. The patterns are grouped into blocks and clustered with respect to the blocks by a topological neighbor search algorithm. The runtime behavior of the algorithm outperforms all conventional hierarchical methods. A comparison of execution times to those of other commonly used clustering algorithms, and a heuristic runtime analysis are presented.

Index Terms: computer vision data structures search problems topology

A scalable parallel subspace clustering algorithm for massive data sets

Nagesh, H.S. Goil, S. Choudhary, A. Dept. of Electron. & Comput. Eng., Northwestern Univ., Evanston, IL; This paper appears in: Parallel Processing, 2000. Proceedings. 2000 International Conference on 08/21/2000-08/24/2000, 2000 Location: Toronto, Ont., Canada On page(s): 477-484 2000 References Cited: 19 Number of Pages: xx+590 INSPEC Accession Number: 6742433

Abstract: Clustering is a data mining problem which finds dense regions in a sparse multidimensional data set. The attribute values and ranges of these regions characterize the clusters. Clustering algorithms need to scale with the data base size and also with the large dimensionality of the data set. Further, these algorithms need to explore the embedded clusters in a subspace of a high dimensional space. However the time complexity of the algorithm to explore clusters in subspaces is exponential in the dimensionality of the data and is thus extremely compute intensive. Thus, parallelization is the choice for discovering clusters for large data sets. In this paper we present a scalable parallel subspace clustering algorithm which has both data and task parallelism embedded in it.
We also formulate the technique of adaptive grids and present a truly unsupervised clustering algorithm requiring no user inputs. Our implementation shows near linear speedups with negligible communication overheads. The use of adaptive grids results in two orders of magnitude improvement in the computation time of our serial algorithm over current methods with much better quality of clustering. Performance results on both real and synthetic data sets with very large number of dimensions on a 16 node IBM SP2 demonstrate our algorithm to be a practical and scalable clustering technique.

Index Terms: computational complexity data mining parallel algorithms pattern clustering very large databases

Clustering soft-devices in the semantic grid

Zhuge, H. Inst. of Comput. Technol., Acad. Sinica, China; This paper appears in: Computing in Science & Engineering [see also IEEE Computational Science and Engineering] On page(s): 60-62 Nov/Dec 2002 ISSN: 1521-9615 INSPEC Accession Number: 7470995

Abstract: Soft-devices are promising next-generation Web resources. They are software mechanisms that provide services to each other and to other virtual roles according to the content of their resources and related configuration information. They can contain various kinds of resources such as text, images, and other services. Configuring a resource in a soft-device is similar to installing software in a computer: both processes contain multi-step human-computer interactions.

Index Terms: Internet information resources

A new data clustering approach for data mining in large databases

Cheng-Fa Tsai Han-Chang Wu Chun-Wei Tsai Dept. of Manage. Inf. Syst., Nat. Pingtung Univ. of Sci. & Technol.; This paper appears in: Parallel Architectures, Algorithms and Networks, 2002. I-SPAN '02. Proceedings.
International Symposium on 05/22/2002-05/24/2002, 2002 Location: Makati City, Metro Manila, Philippines On page(s): 278-283 2002 References Cited: 37 Number of Pages: xiv+368 INSPEC Accession Number: 7329072

Abstract: Clustering is the unsupervised classification of patterns (data item, feature vectors, or observations) into groups (clusters). Clustering in data mining is very useful to discover distribution patterns in the underlying data. Clustering algorithms usually employ a distance metric-based similarity measure in order to partition the database such that data points in the same partition are more similar than points in different partitions. In this paper, we present a new data clustering method for data mining in large databases. Our simulation results show that the proposed novel clustering method performs better than a fast self-organizing map (FSOM) combined with the k-means approach (FSOM+k-means) and the genetic k-means algorithm (GKA). In addition, in all the cases we studied, our method produces much smaller errors than both the FSOM+k-means approach and GKA.

Index Terms: data mining genetic algorithms pattern clustering self-organising feature maps very large databases

A distribution-based clustering algorithm for mining in large spatial databases

Xiaowei Xu Ester, M. Kriegel, H.-P. Sander, J. Munich Univ.; This paper appears in: Data Engineering, 1998. Proceedings., 14th International Conference on 02/23/1998-02/27/1998, 23-27 Feb 1998 Location: Orlando, FL, USA On page(s): 324-331 23-27 Feb 1998 References Cited: 14 Number of Pages: xxi+605 INSPEC Accession Number: 5856765

Abstract: The problem of detecting clusters of points belonging to a spatial point process arises in many applications. In this paper, we introduce the new clustering algorithm DBCLASD (Distribution-Based Clustering of LArge Spatial Databases) to discover clusters of this type.
The results of experiments demonstrate that DBCLASD, contrary to partitioning algorithms such as CLARANS (Clustering Large Applications based on RANdomized Search), discovers clusters of arbitrary shape. Furthermore, DBCLASD does not require any input parameters, in contrast to the clustering algorithm DBSCAN (Density-Based Spatial Clustering of Applications with Noise) requiring two input parameters, which may be difficult to provide for large databases. In terms of efficiency, DBCLASD is between CLARANS and DBSCAN, close to DBSCAN. Thus, the efficiency of DBCLASD on large spatial databases is very attractive when considering its nonparametric nature and its good quality for clusters of arbitrary shape.

Index Terms: data analysis deductive databases knowledge acquisition very large databases visual databases

Clustering algorithms and validity measures

Halkidi, M. Batistakis, Y. Vazirgiannis, M. Dept. of Inf., Athens Univ. of Econ. & Bus.; This paper appears in: Scientific and Statistical Database Management, 2001. SSDBM 2001. Proceedings. Thirteenth International Conference on 07/18/2001-07/20/2001, 2001 Location: Fairfax, VA, USA On page(s): 3-22 2001 References Cited: 32 Number of Pages: x+279 INSPEC Accession Number: 7028952

Abstract: Clustering aims at discovering groups and identifying interesting distributions and patterns in data sets. Researchers have extensively studied clustering since it arises in many application domains in engineering and social sciences. In the last years the availability of huge transactional and experimental data sets and the arising requirements for data mining created needs for clustering algorithms that scale and can be applied in diverse domains. The paper surveys clustering methods and approaches available in the literature in a comparative way. It also presents the basic concepts, principles and assumptions upon which the clustering algorithms are based.
Another important issue is the validity of the clustering schemes resulting from applying algorithms. This is also related to the inherent features of the data set under concern. We review and compare clustering validity measures available in the literature. Furthermore, we illustrate the issues that are under-addressed by the recent algorithms and we address new research directions.

Index Terms: data mining pattern clustering transaction processing very large databases

'1+1>2': merging distance and density based clustering

Dash, M. Liu, H. Xiaowei Xu Sch. of Comput., Nat. Univ. of Singapore; This paper appears in: Database Systems for Advanced Applications, 2001. Proceedings. Seventh International Conference on 04/18/2001-04/21/2001, 2001 Location: Hong Kong, China On page(s): 32-39 2001 References Cited: 18 Number of Pages: xiv+362 INSPEC Accession Number: 6912922

Abstract: Clustering is an important data exploration task. Its use in data mining is growing very fast. Traditional clustering algorithms which no longer cater for the data mining requirements are modified increasingly. Clustering algorithms are numerous which can be divided in several categories. Two prominent categories are distance-based and density-based (e.g. K-means and DBSCAN, respectively). While K-means is fast, easy to implement and converges to local optima almost surely, it is also easily affected by noise. On the other hand, while density-based clustering can find arbitrary shape clusters and handle noise well, it is also slow in comparison due to neighborhood search for each data point, and faces a difficulty in setting the density threshold properly. We propose BRIDGE that efficiently merges the two by exploiting the advantages of one to counter the limitations of the other and vice versa. BRIDGE enables DBSCAN to handle very large data efficiently and improves the quality of K-means clusters by removing the noisy points.
It also helps the user in setting the density threshold parameter properly. We further show that other clustering algorithms can be merged using a similar strategy. An example given in the paper merges BIRCH clustering with DBSCAN.

Index Terms: data mining database theory pattern clustering very large databases

Interactively exploring hierarchical clustering results [gene identification]

Jinwook Seo Shneiderman, B. Department of Computer Science & Human-Computer Interaction Laboratory, Maryland Univ., College Park, MD; This paper appears in: Computer On page(s): 80-86 Volume: 35, Jul 2002 ISSN: 0018-9162 CODEN: CPTRB4 INSPEC Accession Number: 7330434

Abstract: To date, work in microarrays, sequenced genomes and bioinformatics has focused largely on algorithmic methods for processing and manipulating vast biological data sets. Future improvements will likely provide users with guidance in selecting the most appropriate algorithms and metrics for identifying meaningful clusters (interesting patterns in large data sets, such as groups of genes with similar profiles). Hierarchical clustering has been shown to be effective in microarray data analysis for identifying genes with similar profiles and thus possibly with similar functions. Users also need an efficient visualization tool, however, to facilitate pattern extraction from microarray data sets. The Hierarchical Clustering Explorer integrates four interactive features to provide information visualization techniques that allow users to control the processes and interact with the results.
Thus, hybrid approaches that combine powerful algorithms with interactive visualization tools will join the strengths of fast processors with the detailed understanding of domain experts.

Index Terms: Hierarchical Clustering Explorer algorithmic methods arrays bioinformatics biological data sets biology computing data mining data visualisation gene functions gene identification gene profiles genetics hierarchical systems interactive exploration interactive information visualization tool interactive systems meaningful cluster identification metrics microarray data analysis pattern clustering pattern extraction process control sequenced genomes

Clustering validity assessment: finding the optimal partitioning of a data set

Halkidi, M. Vazirgiannis, M. This paper appears in: Data Mining, 2001. ICDM 2001, Proceedings IEEE International Conference on 11/29/2001-12/02/2001, 2001 Location: San Jose, CA, USA On page(s): 187-194 2001 References Cited: 26 Number of Pages: xxi+677 INSPEC Accession Number: 7169295

Abstract: Clustering is a mostly unsupervised procedure and the majority of clustering algorithms depend on certain assumptions in order to define the subgroups present in a data set. As a consequence, in most applications the resulting clustering scheme requires some sort of evaluation regarding its validity. In this paper we present a clustering validity procedure, which evaluates the results of clustering algorithms on data sets. We define a validity index, S_Dbw, based on well-defined clustering criteria enabling the selection of optimal input parameter values for a clustering algorithm that result in the best partitioning of a data set. We evaluate the reliability of our index both theoretically and experimentally, considering three representative clustering algorithms run on synthetic and real data sets. We also carried out an evaluation study to compare S_Dbw performance with other known validity indices.
Our approach performed favorably in all cases, even those in which other indices failed to indicate the correct partitions in a data set.

Index Terms: data mining pattern clustering

Fast hierarchical clustering based on compressed data

Rendon, E. Barandela, R. Pattern Recognition Lab., Technol. Inst. of Toluca, Metepec, Mexico; This paper appears in: Pattern Recognition, 2002. Proceedings. 16th International Conference on On page(s): 216-219 vol.2 2002 ISSN: 1051-4651 Number of Pages: 4 vol.(xxix+834+xxxv+1116+xxxiii+1068+xxv+418) INSPEC Accession Number: 7474651

Abstract: Clustering in data mining is the process of discovering groups in a dataset, in such a way, that the similarity between the elements of the same cluster is maximum and between different clusters is minimal. Some algorithms attempt to group a representative sample of the whole dataset and later to perform a labeling process in order to group the rest of the original database. Other algorithms perform a pre-clustering phase and later apply some classic clustering algorithm in order to create the final clusters. We present a pre-clustering algorithm that not only provides good results and efficient optimization of main memory but it also is independent of the data input order. The efficiency of the proposed algorithm and a comparison of it with the pre-clustering BIRCH algorithm are shown.

Index Terms: data compression data mining pattern clustering

Clustering spatial data in the presence of obstacles: a density-based approach

Zaiane, O.R. Chi-Hoon Lee Database Lab., Alberta Univ., Edmonton, Alta., Canada; This paper appears in: Database Engineering and Applications Symposium, 2002. Proceedings. International On page(s): 214-223 2002 ISSN: 1098-8068 Number of Pages: xiii+295 INSPEC Accession Number: 7426599

Abstract: Clustering spatial data is a well-known problem that has been extensively studied.
Grouping similar data in large 2-dimensional spaces to find hidden patterns or meaningful sub-groups has many applications such as satellite imagery, geographic information systems, medical image analysis, marketing, computer vision, etc. Although many methods have been proposed in the literature, very few have considered physical obstacles that may have significant consequences on the effectiveness of the clustering. Taking into account these constraints during the clustering process is costly and the modeling of the constraints is paramount for good performance. In this paper, we investigate the problem of clustering in the presence of constraints such as physical obstacles and introduce a new approach to model these constraints using polygons. We also propose a strategy to prune the search space and reduce the number of polygons to test during clustering. We devise a density-based clustering algorithm, DBCluC, which takes advantage of our constraint modeling to efficiently cluster data objects while considering all physical constraints. The algorithm can detect clusters of arbitrary shape and is insensitive to noise, the input order and the difficulty of constraints. Its average running complexity is O(N log N) where N is the number of data points.

Index Terms: computational complexity data mining pattern clustering visual databases

Clustering large datasets in arbitrary metric spaces

Ganti, V. Ramakrishnan, R. Gehrke, J. Powell, A. French, J. Dept. of Comput. Sci., Virginia Univ., Charlottesville, VA; This paper appears in: Data Engineering, 1999. Proceedings., 15th International Conference on 03/23/1999-03/26/1999, 23-26 Mar 1999 Location: Sydney, NSW, Australia On page(s): 502-511 23-26 Mar 1999 References Cited: 26 Number of Pages: xxiii+648 INSPEC Accession Number: 6233205

Abstract: Clustering partitions a collection of objects into groups called clusters, such that similar objects fall into the same group.
Similarity between objects is defined by a distance function satisfying the triangle inequality; this distance function along with the collection of objects describes a distance space. In a distance space, the only operation possible on data objects is the computation of distance between them. All scalable algorithms in the literature assume a special type of distance space, namely a k-dimensional vector space, which allows vector operations on objects. We present two scalable algorithms designed for clustering very large datasets in distance spaces. Our first algorithm BUBBLE is, to our knowledge, the first scalable clustering algorithm for data in a distance space. Our second algorithm BUBBLE-FM improves upon BUBBLE by reducing the number of calls to the distance function, which may be computationally very expensive. Both algorithms make only a single scan over the database while producing high clustering quality. In a detailed experimental evaluation, we study both algorithms in terms of scalability and quality of clustering. We also show results of applying the algorithms to a real life dataset.

Index Terms: data handling data mining database theory trees (mathematics) very large databases

Improving OLAP performance by multidimensional hierarchical clustering

Markl, V. Ramsak, F. Bayer, R. Bayerisches Forschungszentrum für Wissensbasierte Syst., München; This paper appears in: Database Engineering and Applications, 1999. IDEAS '99. International Symposium Proceedings 08/02/1999-08/04/1999, Aug 1999 Location: Montreal, Que., Canada On page(s): 165-177 Aug 1999 References Cited: 34 IEEE Catalog Number: PR00265 Number of Pages: xiii+467 INSPEC Accession Number: 6352678

Abstract: Data warehousing applications cope with enormous data sets in the range of Gigabytes and Terabytes. Queries usually either select a very small set of this data or perform aggregations on a fairly large data set.
Materialized views storing pre-computed aggregates are used to efficiently process queries with aggregations. This approach increases resource requirements in disk space and slows down updates because of the view maintenance problem. Multidimensional hierarchical clustering (MHC) of OLAP data overcomes these problems while offering more flexibility for aggregation paths. Clustering is introduced as a way to speed up aggregation queries without additional storage cost for materialization. Performance and storage cost of our access method are investigated and compared to current query processing scenarios. In addition performance measurements on real world data for a typical star schema are presented.

Index Terms: data mining data warehouses distributed databases pattern clustering query processing

Clustering algorithms for large sets of heterogeneous remote sensing data

Palubinskas, G. Datcu, M. Pac, R. Remote Sensing Data Center, German Aerosp. Res. Establ., Wessling; This paper appears in: Geoscience and Remote Sensing Symposium, 1999. IGARSS '99 Proceedings. IEEE 1999 International 06/28/1999-07/02/1999, 1999 Location: Hamburg, Germany On page(s): 1591-1593 vol.3 1999 References Cited: 13 Number of Pages: 5 vol. (xci+2770) INSPEC Accession Number: 6440656

Abstract: The authors introduce a concept for a global classification of remote sensing images in large archives, e.g. covering the whole globe. Such an archive for example will be created after the Shuttle Radar Topography Mission in 1999. The classification is realized as a two step procedure: unsupervised clustering and supervised hierarchical classification.
Features, derived from different and noncommensurable models, are combined using an extended k-means clustering algorithm and supervised hierarchical Bayesian networks incorporating any available prior information about the domain.

Index Terms: belief networks data mining feature extraction geographic information systems geophysical signal processing geophysical techniques image classification remote sensing

Clustering-regression-ordering steps for knowledge discovery in spatial databases

Lazarevic, A. Xiaowei Xu Fiez, T. Obradovic, Z. Sch. of Electr. Eng. & Comput. Sci., Washington State Univ., Pullman, WA; This paper appears in: Neural Networks, 1999. IJCNN '99. International Joint Conference on 07/10/1999-07/16/1999, 1999 Location: Washington, DC, USA On page(s): 2530-2534 vol.4 1999 References Cited: 11 Number of Pages: 6 vol. lxii+4439 INSPEC Accession Number: 6589860

Abstract: Precision agriculture is a new approach to farming in which environmental characteristics at a sub-field level are used to guide crop production decisions. Instead of applying management actions and production inputs uniformly across entire fields, they are varied to match site-specific needs. A first step in this process is to define spatial regions having similar characteristics and to build local regression models describing the relationship between field characteristics and yield. From these yield prediction models, one can then determine optimum production input levels. Discovery of "similar" regions in fields is done by applying the DBSCAN clustering algorithm on data from more than one field, ignoring spatial attributes and the corresponding yield values.
The experimental results on real life agriculture data show observable improvements in prediction accuracy, although there are many unresolved issues in applying the proposed method in practice.

Index Terms: agriculture data mining pattern recognition visual databases

Self-organizing systems for knowledge discovery in large databases

Hsu, W.H. Anvil, L.S. Pottenger, W.M. Tcheng, D. Welge, M. National Center for Supercomput. Applications, Illinois Univ., Urbana, IL; This paper appears in: Neural Networks, 1999. IJCNN '99. International Joint Conference on 07/10/1999-07/16/1999, 1999 Location: Washington, DC, USA On page(s): 2480-2485 vol.4 1999 References Cited: 20 Number of Pages: 6 vol. lxii+4439 INSPEC Accession Number: 6589850

Abstract: We present a framework in which self-organizing systems can be used to perform change of representation on knowledge discovery problems and to learn from very large databases. Clustering using self-organizing maps is applied to produce multiple, intermediate training targets that are used to define a new supervised learning and mixture estimation problem. The input data is partitioned using a state space search over subdivisions of attributes, to which self-organizing maps are applied to the input data as restricted to a subset of input attributes. This approach yields the variance-reducing benefits of techniques such as stacked generalization, but uses self-organizing systems to discover factorial (modular) structure among abstract learning targets. This research demonstrates the feasibility of applying such structure in very large databases to build a mixture of ANNs for data mining and KDD.

Index Terms: data mining learning (artificial intelligence) search problems self-organising feature maps very large databases

Clustering very large databases using EM mixture models

Bradley, P.S. Fayyad, U.M. Reina, C.A. Microsoft Res., USA; This paper appears in: Pattern Recognition, 2000. Proceedings.
15th International Conference on 09/03/2000-09/07/2000, 2000 Location: Barcelona, Spain On page(s): 76-80 vol.2 2000 References Cited: 13 Number of Pages: 4 vol(xxxi+1134+xxxiii+1072+1152+xxix+881) INSPEC Accession Number: 6887409
Abstract: Clustering very large databases is a challenge for traditional pattern recognition algorithms, e.g. the expectation-maximization (EM) algorithm for fitting mixture models, because of high memory and iteration requirements. Over large databases, the cost of the numerous scans required to converge and the large memory requirement of the algorithm become prohibitive. We present a decomposition of the EM algorithm requiring a small amount of memory by limiting iterations to small data subsets. The scalable EM approach requires at most one database scan and is based on identifying regions of the data that are discardable, regions that are compressible, and regions that must be maintained in memory. Data resolution is preserved to the extent possible based upon the size of the memory buffer and fit of the current model to the data. Computational tests demonstrate that the scalable scheme outperforms similarly constrained EM approaches.
Index Terms: data mining; maximum likelihood estimation; pattern clustering; probability; very large databases

DGLC: a density-based global logical combinatorial clustering algorithm for large mixed incomplete data
Ruiz-Shulcloper, J.; Alba-Cabrera, E.; Sanchez-Diaz, G.
Dept. of Electr. & Comput. Eng., Tennessee Univ., Knoxville, TN
This paper appears in: Geoscience and Remote Sensing Symposium, 2000. Proceedings. IGARSS 2000.
IEEE 2000 International 07/24/2000-07/28/2000, 2000 Location: Honolulu, HI, USA On page(s): 2846-2848 vol.7 2000 Number of Pages: 7 vol.(clvi+3242) INSPEC Accession Number: 6804410
Abstract: Clustering has been widely used in areas such as pattern recognition, data analysis and image processing. Recently, clustering algorithms have been recognized as a powerful tool for data mining. However, the well-known clustering algorithms offer no solution to the case of large mixed incomplete data sets. The authors comment on the possibilities of applying the methods, techniques and philosophy of the logical combinatorial approach to clustering in these kinds of data sets. They present the new clustering algorithm DGLC for discovering β0 density connected components from large mixed incomplete data sets. This algorithm combines the ideas of logical combinatorial pattern recognition with the density-based notion of cluster. Finally, an example is shown in order to illustrate the work of the algorithm.
Index Terms: data mining; geophysical signal processing; geophysical techniques; pattern recognition; remote sensing; terrain mapping

A new validation index for determining the number of clusters in a data set
Haojun Sun; Shengrui Wang; Qingshan Jiang
Dept. of Math. & Comput. Sci., Sherbrooke Univ., Que.
This paper appears in: Neural Networks, 2001. Proceedings. IJCNN '01. International Joint Conference on 07/15/2001-07/19/2001, 2001 Location: Washington, DC, USA On page(s): 1852-1857 vol.3 2001 References Cited: 12 Number of Pages: 4 vol. xlvi+3014 INSPEC Accession Number: 7036661
Abstract: Clustering analysis plays an important role in solving practical problems in such domains as data mining in large databases. In this paper, we are interested in fuzzy c-means (FCM) based algorithms.
The main purpose is to design an effective validity function to measure the result of clustering and detect the best number of clusters for a given data set in practical applications. After a review of the relevant literature, we present the new validity function. Experimental results and comparisons will be given to illustrate the performance of the new validity function.
Index Terms: data mining; neural nets; pattern clustering

Image data mining from financial documents based on wavelet features
El Badawy, O.; El-Sakka, M.R.; Hassanein, K.; Kamel, M.S.
Dept. of Syst. Design Eng., Waterloo Univ., Ont.
This paper appears in: Image Processing, 2001. Proceedings. 2001 International Conference on 10/07/2001-10/10/2001, 2001 Location: Thessaloniki, Greece On page(s): 1078-1081 vol.1 2001 References Cited: 11 Number of Pages: 3 vol.(lxx+1133+1108+1110) INSPEC Accession Number: 7211050
Abstract: We present a framework for clustering and classifying cheque images according to their payee-line content. The features used in the clustering and classification processes are extracted from the wavelet domain by means of thresholding and counting of wavelet coefficients. The feasibility of this framework is tested on a database of 2620 cheque images. This database consists of cheques from 10 different accounts. Each account is written by a different person. Clustering and classification are performed separately on each account using distance-based techniques. We achieved correct-classification rates of 86% and 81% for the supervised and unsupervised learning cases, respectively.
These rates are the average of correct-classification rates obtained from the 10 different accounts.
Index Terms: banking; cheque processing; data mining; document image processing; feature extraction; handwritten character recognition; image classification; learning (artificial intelligence); pattern clustering; unsupervised learning; wavelet transforms

Gradual clustering algorithms
Fei Wu; Gardarin, G.
PRiSM Lab., Versailles Univ., Versailles
This paper appears in: Database Systems for Advanced Applications, 2001. Proceedings. Seventh International Conference on 04/18/2001-04/21/2001, 2001 Location: Hong Kong, China On page(s): 48-55 2001 References Cited: 13 Number of Pages: xiv+362 INSPEC Accession Number: 6912924
Abstract: Clustering is one of the important techniques in data mining. The objective of clustering is to group objects into clusters such that objects within a cluster are more similar to each other than objects in different clusters. The similarity between two objects is defined by a distance function, e.g., the Euclidean distance, which satisfies the triangle inequality. Distance calculation is computationally very expensive and many algorithms have been proposed so far to solve this problem. This paper considers the gradual clustering problem. From practice, we noticed that the user often begins clustering on a small number of attributes, e.g., two. If the result is partially satisfying, the user will continue clustering on a higher number of attributes, e.g., ten. We refer to this problem as the gradual clustering problem. In fact, gradual clustering can be considered as vertically incremental clustering. Approaches are proposed to solve this problem. The main idea is to reduce the number of distance calculations by using the triangle inequality. Our method first stores in an index the distances between a representative object and objects in n-dimensional space.
Then these precomputed distances are used to avoid distance calculations in (n+m)-dimensional space. Two experiments on real data sets demonstrate the added value of our approaches. The implemented algorithms are based on the DBSCAN algorithm with an associated M-Tree as index tree. However, the principles of our idea can well be integrated with other tree structures such as MVP-Tree, R*-Tree, etc., and with other clustering algorithms.
Index Terms: data mining; pattern clustering; tree data structures; very large databases

A similarity-based soft clustering algorithm for documents
King-Ip Lin; Kondadadi, R.
Dept. of Math. Sci., Memphis Univ., Memphis, TN
This paper appears in: Database Systems for Advanced Applications, 2001. Proceedings. Seventh International Conference on 04/18/2001-04/21/2001, 2001 Location: Hong Kong, China On page(s): 40-47 2001 References Cited: 22 Number of Pages: xiv+362 INSPEC Accession Number: 6912923
Abstract: Document clustering is an important tool for applications such as Web search engines. Clustering documents enables the user to have a good overall view of the information contained in the documents that he has. However, existing algorithms suffer in various respects: hard clustering algorithms (where each document belongs to exactly one cluster) cannot detect the multiple themes of a document, while soft clustering algorithms (where each document can belong to multiple clusters) are usually inefficient. We propose SISC (similarity-based soft clustering), an efficient soft clustering algorithm based on a given similarity measure. SISC requires only a similarity measure for clustering and uses randomization to help make the clustering efficient.
Comparison with existing hard clustering algorithms like K-means and its variants shows that SISC is both effective and efficient.
Index Terms: data mining; document handling; pattern clustering; very large databases

Clustering of web users using session-based similarity measures
Jitian Xiao; Yanchun Zhang
Sch. of Comput. & Inf. Sci., Edith Cowan Univ., Mount Lawley, WA
This paper appears in: Computer Networks and Mobile Computing, 2001. Proceedings. 2001 International Conference on 10/16/2001-10/19/2001, 2001 Location: Los Alamitos, CA, USA On page(s): 223-228 2001 References Cited: 15 Number of Pages: xii+529 INSPEC Accession Number: 7114126
Abstract: One important research topic in web usage mining is the clustering of web users based on their common properties. Informative knowledge obtained from web user clusters has been used for many applications, such as the prefetching of pages between web clients and proxies. This paper presents an approach for measuring similarity of interests among web users from their past access behaviors. The similarity measures are based on the user sessions extracted from the users' access logs. A multi-level scheme for clustering a large number of web users is proposed, as an extension to the method proposed in our previous work (2001). Experiments were conducted and the results obtained show that our clustering method is capable of clustering web users with similar interests.
Index Terms: Internet; data mining; pattern clustering; user interface management systems

A fast algorithm to cluster high dimensional basket data
Ordonez, C.; Omiecinski, E.; Ezquerra, N.
Coll. of Comput., Georgia Inst. of Technol., Atlanta, GA
This paper appears in: Data Mining, 2001.
ICDM 2001, Proceedings IEEE International Conference on 11/29/2001-12/02/2001, 2001 Location: San Jose, CA, USA On page(s): 633-636 2001 References Cited: 17 Number of Pages: xxi+677 INSPEC Accession Number: 7169363
Abstract: Clustering is a data mining problem that has received significant attention from the database community. Data set size, dimensionality and sparsity have been identified as aspects that make clustering more difficult. The article introduces a fast algorithm to cluster large binary data sets where data points have high dimensionality and most of their coordinates are zero. This is the case with basket data transactions containing items, which can be represented as sparse binary vectors with very high dimensionality. An experimental section shows performance, advantages and limitations of the proposed approach.
Index Terms: data mining; pattern clustering; very large databases

A scalable algorithm for clustering sequential data
Guralnik, V.; Karypis, G.
Dept. of Comput. Sci., Minnesota Univ., Minneapolis, MN
This paper appears in: Data Mining, 2001. ICDM 2001, Proceedings IEEE International Conference on 11/29/2001-12/02/2001, 2001 Location: San Jose, CA, USA On page(s): 179-186 2001 References Cited: 15 Number of Pages: xxi+677 INSPEC Accession Number: 7169294
Abstract: In recent years, we have seen an enormous growth in the amount of available commercial and scientific data. Data from domains such as protein sequences, retail transactions, intrusion detection, and Web-logs have an inherent sequential nature. Clustering of such data sets is useful for various purposes. For example, clustering of sequences from commercial data sets may help marketers identify different customer groups based upon their purchasing patterns. Grouping protein sequences that share similar structure helps in identifying sequences with similar functionality. Over the years, many methods have been developed for clustering objects according to their similarity.
However, these methods tend to have a computational complexity that is at least quadratic in the number of sequences. In this paper we present an entirely different approach to sequence clustering that does not require an all-against-all analysis and uses a near-linear complexity K-means based clustering algorithm. Our experiments using data sets derived from sequences of purchasing transactions and protein sequences show that this approach is scalable and leads to reasonably good clusters.
Index Terms: biology computing; computational complexity; data mining; molecular biophysics; pattern clustering; proteins; retail data processing; sequences

A hypergraph based clustering algorithm for spatial data sets
Jong-Sheng Cherng; Mei-Jung Lo
Dept. of Electr. Eng., Da Yeh Univ., Changhwa
This paper appears in: Data Mining, 2001. ICDM 2001, Proceedings IEEE International Conference on 11/29/2001-12/02/2001, 2001 Location: San Jose, CA, USA On page(s): 83-90 2001 References Cited: 14 Number of Pages: xxi+677 INSPEC Accession Number: 7169282
Abstract: Clustering is a discovery process in data mining and can be used to group together the objects of a database into meaningful subclasses which serve as the foundation for other data analysis techniques. The authors focus on dealing with a set of spatial data. For the spatial data, the clustering problem becomes that of finding the densely populated regions of the space and thus grouping these regions into clusters such that the intracluster similarity is maximized and the intercluster similarity is minimized. We develop a novel hierarchical clustering algorithm that uses a hypergraph to represent a set of spatial data. This hypergraph is initially constructed from the Delaunay triangulation graph of the data set and can correctly capture the relationships among sets of data points.
Two phases are developed for the proposed clustering algorithm to find the clusters in the data set. We evaluate our hierarchical clustering algorithm with some spatial data sets which contain clusters of different sizes, shapes, densities, and noise. Experimental results on these data sets are very encouraging.
Index Terms: data mining; graph theory; mesh generation; pattern clustering; visual databases

A genetic rule-based data clustering toolkit
Sarafis, I.; Zalzala, A.M.S.; Trinder, P.W.
Dept. of Comput. & Electr. Eng., Heriot-Watt Univ., Edinburgh
This paper appears in: Evolutionary Computation, 2002. CEC '02. Proceedings of the 2002 Congress on 05/12/2002-05/17/2002, 2002 Location: Honolulu, HI, USA On page(s): 1238-1243 2002 References Cited: 17 IEEE Catalog Number: 02TH8600 Number of Pages: 2 vol.xxxvi+2034 INSPEC Accession Number: 7328863
Abstract: Clustering is a hard combinatorial problem and is defined as the unsupervised classification of patterns. The formation of clusters is based on the principle of maximizing the similarity between objects of the same cluster while simultaneously minimizing the similarity between objects belonging to distinct clusters. This paper presents a tool for database clustering using a rule-based genetic algorithm (RBCGA). RBCGA evolves individuals consisting of a fixed set of clustering rules, where each rule includes d non-binary intervals, one for each feature.
The investigations attempt to alleviate certain drawbacks related to the classical minimization of the square-error criterion by suggesting a flexible fitness function which takes into consideration cluster asymmetry, density, coverage and homogeny.
Index Terms: data mining; database theory; genetic algorithms; knowledge based systems; least mean squares methods; pattern clustering; very large databases

Clustering in the framework of collaborative agents
Pedrycz, W.; Vukovich, G.
Dept. of Electr. & Comput. Eng., Alberta Univ., Edmonton, Alta.
This paper appears in: Fuzzy Systems, 2002. FUZZ-IEEE'02. Proceedings of the 2002 IEEE International Conference on 05/12/2002-05/17/2002, 2002 Location: Honolulu, HI, USA On page(s): 134-138 2002 Number of Pages: 2 vol.xxxi+1621 INSPEC Accession Number: 7322085
Abstract: We are concerned with data mining in a distributed environment such as the Internet. As sources of data are distributed across the WWW cyberspace, this organization implies a need to develop computing agents exhibiting some form of collaboration. We propose a model of collaborative clustering realized over a collection of datasets in which a computing agent carries out an individual (local) clustering process. The essence of a global search for data structures carried out in this environment deals with a determination of crucial common relationships occurring across the network. Depending upon the way in which datasets are accessible and on the detailed mechanism of interaction, we introduce a concept of horizontal and vertical collaboration. These modes depend upon the way in which datasets are accessed. The clustering algorithms interact between themselves by exchanging information about "local" partition matrices.
In this sense, the required communication links are established at the level of information granules (more specifically, fuzzy sets or fuzzy relations forming the partition matrices) rather than data that are directly available in the databases.
Index Terms: Internet; WWW cyberspace; World Wide Web; clustering; collaborative agents; data mining; data structures; dataset access methods; distributed data sources; distributed environment; fuzzy relations; fuzzy set theory; fuzzy sets; horizontal collaboration; information granules; information resources; interaction mechanism; local partition matrices; matrix algebra; multi-agent systems; pattern clustering; vertical collaboration

δ-clusters: capturing subspace correlation in a large data set
Jiong Yang; Wei Wang; Haixun Wang; Yu, P.
IBM Thomas J. Watson Res. Center, Yorktown Heights, NY
This paper appears in: Data Engineering, 2002. Proceedings. 18th International Conference on 02/26/2002-03/01/2002, 2002 Location: San Jose, CA, USA On page(s): 517-528 2002 References Cited: 13 Number of Pages: xix+735 INSPEC Accession Number: 7254404
Abstract: Clustering has been an active research area of great practical importance in recent years. Most previous clustering models have focused on grouping objects with similar values on a (sub)set of dimensions (e.g., subspace cluster) and assumed that every object has an associated value on every dimension (e.g., bicluster). These existing cluster models may not always be adequate in capturing coherence exhibited among objects. Strong coherence may still exist among a set of objects (on a subset of attributes) even if they take quite different values on each attribute and the attribute values are not fully specified. This is very common in many applications including bio-informatics analysis as well as collaborative filtering analysis, where the data may be incomplete and subject to biases.
In bio-informatics, a bicluster model has recently been proposed to capture coherence among a subset of the attributes. We introduce a more general model, referred to as the δ-cluster model, to capture coherence exhibited by a subset of objects on a subset of attributes, while allowing absent attribute values. A move-based algorithm (FLOC) is devised to efficiently produce near-optimal clustering results. The δ-cluster model takes the bicluster model as a special case, where the FLOC algorithm performs far superior to the bicluster algorithm. We demonstrate the correctness and efficiency of the δ-cluster model and the FLOC algorithm on a number of real and synthetic data sets.
Index Terms: data mining; pattern clustering; very large databases

Binary rule generation via Hamming Clustering
Muselli, M.; Liberati, D.
Ist. per i Circuiti Elettronici, Consiglio Nazionale delle Ricerche, Genova, Italy
This paper appears in: Knowledge and Data Engineering, IEEE Transactions on On page(s): 1258-1268 Volume: 14, Nov/Dec 2002 ISSN: 1041-4347 INSPEC Accession Number: 7472285
Abstract: The generation of a set of rules underlying a classification problem is performed by applying a new algorithm called Hamming Clustering (HC). It reconstructs the AND-OR expression associated with any Boolean function from a training set of samples. The basic kernel of the method is the generation of clusters of input patterns that belong to the same class and are close to each other according to the Hamming distance. Inputs which do not influence the final output are identified, thus automatically reducing the complexity of the final set of rules. The performance of HC has been evaluated through a variety of artificial and real-world benchmarks. In particular, its application in the diagnosis of breast cancer has led to the derivation of a reduced set of rules solving the associated classification problem.
Index Terms: Boolean functions; data mining; generalisation (artificial intelligence); learning (artificial intelligence); medical diagnostic computing; medical expert systems; pattern clustering

HD-Eye: visual mining of high-dimensional data
Hinneburg, A.; Keim, D.A.; Wawryniuk, M.
Inst. of Comput. Sci., Halle Univ.
This paper appears in: Computer Graphics and Applications, IEEE On page(s): 22-31 Volume: 19, Sep/Oct 1999 ISSN: 0272-1716 References Cited: 18 CODEN: ICGADZ INSPEC Accession Number: 6353310
Abstract: Clustering in high-dimensional databases poses an important problem. However, we can apply a number of different clustering algorithms to high-dimensional data. The authors consider how an advanced clustering algorithm combined with new visualization methods interactively clusters data more effectively. Experiments show these techniques improve the data mining process.
Index Terms: data mining; data visualisation; very large databases

Chameleon: hierarchical clustering using dynamic modeling
Karypis, G.; Eui-Hong Han; Kumar, V.
Dept. of Comput. Sci., Minnesota Univ., Minneapolis, MN
This paper appears in: Computer On page(s): 68-75 Volume: 32, Aug 1999 ISSN: 0018-9162 References Cited: 11 CODEN: CPTRB4 INSPEC Accession Number: 6332134
Abstract: Clustering is a discovery process in data mining. It groups a set of data in a way that maximizes the similarity within clusters and minimizes the similarity between two different clusters. Many advanced algorithms have difficulty dealing with highly variable clusters that do not follow a preconceived model. By basing its selections on both interconnectivity and closeness, the Chameleon algorithm yields accurate results for these highly variable clusters. Existing algorithms use a static model of the clusters and do not use information about the nature of individual clusters as they are merged.
Furthermore, one set of schemes (the CURE algorithm and related schemes) ignores the information about the aggregate interconnectivity of items in two clusters. Another set of schemes (the Rock algorithm, group averaging method, and related schemes) ignores information about the closeness of two clusters as defined by the similarity of the closest items across two clusters. By considering either interconnectivity or closeness only, these algorithms can select and merge the wrong pair of clusters. Chameleon's key feature is that it accounts for both interconnectivity and closeness in identifying the most similar pair of clusters. Chameleon finds the clusters in the data set by using a two-phase algorithm. During the first phase, Chameleon uses a graph partitioning algorithm to cluster the data items into several relatively small subclusters. During the second phase, it uses an algorithm to find the genuine clusters by repeatedly combining these subclusters Index Terms: data analysis data mining graph theory pattern clustering Documents that cite this document Select link to view other documents in the database that cite this one. Clustering of the self-organizing map Vesanto, J. Alhoniemi, E. Neural Networks Res. Centre, Helsinki Univ. of Technol., Espoo; This paper appears in: Neural Networks, IEEE Transactions on On page(s): 586-600 Volume: 11, May 2000 ISSN: 1045-9227 References Cited: 49 CODEN: ITNNEP INSPEC Accession Number: 6633557 Abstract: The self-organizing map (SOM) is an excellent tool in exploratory phase of data mining. It projects input space on prototypes of a low-dimensional regular grid that can be effectively utilized to visualize and explore properties of the data. When the number of SOM units is large, to facilitate quantitative analysis of the map and the data, similar units need to be grouped, i.e., clustered. In this paper, different approaches to clustering of the SOM are considered. 
In particular, the use of hierarchical agglomerative clustering and partitive clustering using K-means are investigated. The two-stage procedure-first using SOM to produce the prototypes that are then clustered in the second stage-is found to perform well when compared with direct clustering of the data and to reduce the computation time Index Terms: data analysis data mining learning (artificial intelligence) self-organising feature maps Documents that cite this document Select link to view other documents in the database that cite this one. Reference list: 1, D. Pyle, " Data Preparation for Data Mining", Morgan Kaufmann, San Francisco, CA, 1999. 2, T. Kohonen, " Self-Organizing Maps", Springer, Berlin/Heidelberg , Germany, vol.30, 1995. 3, J. Vesanto, "SOM-based data visualization methods", Intell. Data Anal., vol.3, no.2, pp.111- 126, 1999. 4, Fuzzy Models for Pattern Recognition: Methods that Search for Structures in Data", IEEE, New York, 1992. 5, G. J. McLahlan, K. E. Basford, "Mixture Models: Inference and Applications to Clustering", Marcel Dekker, New York, vol.84, 1987. 6, J. C. Bezdek, "Some new indexes of cluster validity", IEEE Trans. Syst., Man, Cybern. B, vol.28, pp.301 -315, 1998. [Abstract] [PDF Full-Text (888KB)] 7, M. Blatt, S. Wiseman, E. Domany, "Superparamagnetic clustering of data", Phys. Rev. Lett., vol.76, no.18, pp.3251- 3254, 1996. 8, G. Karypis, E.-H. Han, V. Kumar, "Chameleon: Hierarchical clustering using dynamic modeling", IEEE Comput., vol.32, pp.68 -74, Aug. 1999. [Abstract] [PDF Full-Text (1620KB)] 9, J. Lampinen, E. Oja, "Clustering properties of hierarchical self-organizing maps", J. Math. Imag. Vis., vol.2, no.2–3, pp.261-272, Nov. 1992. 10, E. Boudaillier, G. Hebrail, "Interactive interpretation of hierarchical clustering", Intell. Data Anal., vol.2, no.3, 1998. 11, J. Buhmann, H. Kühnel, "Complexity optimized data clustering by competitive neural networks", Neural Comput., vol.5, no. 3, pp.75-88, May 1993. 12, G. W. 
Milligan, M. C. Cooper, "An examination of procedures for determining the number of clusters in a data set", Psychometrika, vol.50, no.2, pp.159-179, June 1985. 13, D. L. Davies, D. W. Bouldin, "A cluster separation measure", IEEE Trans. Patt. Anal. Machine Intell., vol.PAMI-1, pp.224-227, Apr. 1979. 14, S. P. Luttrell, "Hierarchical self-organizing networks", Proc. 1st IEE Conf. Artificial Neural Networks, London , U.K., pp.2-6, 1989. [Abstract] [PDF Full-Text (344KB)] 15, A. Varfis, C. Versino, "Clustering of socio-economic data with Kohonen maps", Neural Network World, vol.2, no.6, pp.813-834, 1992. 16, P. Mangiameli, S. K. Chen, D. West, "A comparison of SOM neural network and hierarchical clustering methods", Eur. J. Oper. Res., vol. 93, no.2, Sept. 1996. 17, T. Kohonen, "Self-organizing maps: Optimization approaches ", Artificial Neural Networks, Elsevier, Amsterdam, The Netherlands, pp. 981-990, 1991. 18, J. Moody, C. J. Darken, "Fast learning in networks of locally-tuned processing units", Neural Comput., vol.1, no.2, pp. 281-294, 1989. 19, J. A. Kangas, T. K. Kohonen, J. T. Laaksonen, "Variants of self-organizing maps ", IEEE Trans. Neural Networks, vol.1, pp.93 -99, Mar. 1990. [Abstract] [PDF Full-Text (664KB)] 20, T. Martinez, K. Schulten, "A "neural-gas" network learns topologies ", Artificial Neural Networks, Elsevier, Amsterdam, The Netherlands, pp. 397-402, 1991. 21, B. Fritzke, "Let it grow—Self-organizing feature maps with problem dependent cell structure", Artificial Neural Networks, Elsevier, Amsterdam, The Netherlands, pp.403-408, 1991. 22, Y. Cheng, "Clustering with competing self-organizing maps ", Proc. Int. Conf. Neural Networks, vol.4, pp. 785-790, 1992. [Abstract] [PDF Full-Text (376KB)] 23, B. Fritzke, "Growing grid—A self-organizing network with constant neighborhood range and adaptation strength", Neural Process. Lett., vol.2, no.5, pp.9-13, 1995. 24, J. Blackmore, R. 
Miikkulainen, "Visualizing high-dimensional structure with the incremental grid growing neural network", Proc. 12th Int. Conf. Machine Learning, pp.55- 63, 1995. 25, S. Jockusch, "A neural network which adapts its stucture to a given set of patterns", Parallel Processing in Neural Systems and Computers , Elsevier, Amsterdam, The Netherlands , pp.169-172, 1990. 26, J. S. Rodrigues, L. B. Almeida, "Improving the learning speed in topological maps of pattern", Proc. Int. Neural Network Conf., pp.813-816, 1990. 27, D. Alahakoon, S. K. Halgamuge, "Knowledge discovery with supervised and unsupervised self evolving neural networks", Proc. Fifth Int. Conf. Soft Comput. Inform./Intell. Syst., pp. 907-910, 1998. 28, A. Gersho, "Asymptotically optimal block quantization ", IEEE Trans. Inform. Theory, vol.IT-25, pp.373 -380, July 1979. 29, P. L. Zador, "Asymptotic quantization error of continuous signals and the quantization dimension", IEEE Trans. Inform. Theory, vol.IT-28 , pp.139-149, Mar. 1982. 30, H. Ritter, "Asymptotic level density for a class of vector quantization processes", IEEE Trans. Neural Networks, vol.2, pp.173-175, Jan. 1991. [Abstract] [PDF Full-Text (280KB)] 31, T. Kohonen, "Comparison of SOM point densities based on different criteria", Neural Comput., vol.11, pp. 2171-2185, 1999. 32, R. D. Lawrence, G. S. Almasi, H. E. Rushmeier, "A scalable parallel algorithm for self-organizing maps with applications to sparse data problems", Data Mining Knowl. Discovery, vol.3, no.2, pp.171-195, June 1999. 33, T. Kohonen, S. Kaski, K. Lagus, J. SalojärviSalojarvi, J. Honkela, V. Paatero, A. Saarela, "Self organization of a massive document collection", IEEE Trans. Neural Networks, vol.11, pp.XXX-XXX, May 2000. [Abstract] [PDF Full-Text (548KB)] 34, P. Koikkalainen, "Fast deterministic self-organizing maps ", Proc. Int. Conf. Artificial Neural Networks, vol.2, pp.63-68, 1995 . 35, A. Ultsch, H. P. 
Siemon, "Kohonen's self organizing feature maps for exploratory data analysis", Proc. Int. Neural Network Conf., Dordrecht, The Netherlands, pp.305 -308, 1990. 36, M. A. Kraaijveld, J. Mao, A. K. Jain, "A nonlinear projection method based on Kohonen's topology preserving maps", IEEE Trans. Neural Networks, vol.6, pp.548559, May 1995. [Abstract] [PDF Full-Text (1368KB)] 37, J. Iivarinen, T. Kohonen, J. Kangas, S. Kaski, "Visualizing the clusters on the self-organizing map", Proc. Conf. Artificial Intell. Res. Finland, Helsinki, Finland, pp.122- 126, 1994. 38, X. Zhang, Y. Li, "Self-organizing map as a new method for clustering and data analysis", Proc. Int. Joint Conf. Neural Networks, pp.2448-2451, 1993. [Abstract] [PDF Full-Text (304KB)] 39, J. B. Kruskal, "Multidimensional scaling by optimizing goodness of fit to a nonmetric hypothesis", Psychometrika, vol.29, no. 1, pp.1-27, Mar. 1964. 40, J. B. Kruskal, "Nonmetric multidimensional scaling: A numerical method", Psychometrika, vol.29, no.2, pp.115 -129, June 1964. 41, J. W. Sammon, Jr., "A nonlinear mapping for data structure analysis", IEEE Trans. Comput., vol.C-18, pp. 401-409, May 1969. 42, P. Demartines, J. HéraultHerault, " Curvilinear component analysis: A selforganizing neural network for nonlinear mapping of data sets", IEEE Trans. Neural Networks, vol.8, pp.148-154, Jan. 1997. [Abstract] [PDF Full-Text (216KB)] 43, A. Varfis, "On the use of two traditional statistical techniques to improve the readibility of Kohonen Maps", Proc. NATO ASI Workshop Statistics Neural Networks, 1993. 44, S. Kaski, J. Venna, T. Kohonen, "Coloring that reveals high-dimensional structures in data", Proc. 6th Int. Conf. Neural Inform. Process., pp.729-734, 1999. [Abstract] [PDF Full-Text (316KB)] 45, F. Murtagh, "Interpreting the Kohonen self-organizing map using contiguityconstrained clustering", Patt. Recognit. Lett., vol.16, pp.399-408, 1995. 46, O. Simula, J. Vesanto, P. Vasara, R.-R. 
Helminen, "Industrial Applications of Neural Networks", CRC, Boca Raton, FL, pp.87-112, 1999. 47, J. Vaisey, A. Gersho, "Simulated annealing and codebook design", Proc. Int. Conf. Acoust., Speech, Signal Process., pp.1176-1179, 1988. 48, J. K. Flanagan, D. R. Morrell, R. L. Frost, C. J. Read, B. E. Nelson, "Vector quantization codebook generation using simulated annealing", Proc. Int. Conf. Acoust., Speech, Signal Process., vol.3, pp.1759-1762, 1989. 49, T. Graepel, M. Burger, K. Obermayer, "Phase transitions in stochastic self-organizing maps", Phys. Rev. E, vol.56, pp.3876-3890, 1997.

Redefining clustering for high-dimensional applications Aggarwal, C.C. Yu, P.S. IBM Thomas J. Watson Res. Center, Yorktown Heights, NY; This paper appears in: Knowledge and Data Engineering, IEEE Transactions on On page(s): 210-225 Volume: 14, Mar/Apr 2002 ISSN: 1041-4347 References Cited: 39 CODEN: ITKEEH INSPEC Accession Number: 7224458 Abstract: Clustering problems are well known in the database literature for their use in numerous applications, such as customer segmentation, classification, and trend analysis. High-dimensional data has always been a challenge for clustering algorithms because of the inherent sparsity of the points. Recent research results indicate that, in high-dimensional data, even the concept of proximity or clustering may not be meaningful. We introduce a very general concept of projected clustering which is able to construct clusters in arbitrarily aligned subspaces of lower dimensionality. The subspaces are specific to the clusters themselves. This definition is substantially more general and realistic than the currently available techniques which limit the method to only projections from the original set of attributes. 
The generalized projected clustering technique may also be viewed as a way of trying to redefine clustering for high-dimensional applications by searching for hidden subspaces with clusters which are created by inter-attribute correlations. We provide a new concept of using extended cluster feature vectors in order to make the algorithm scalable for very large databases. The running time and space requirements of the algorithm are adjustable and are likely to trade off with better accuracy. Index Terms: data mining; pattern clustering; very large databases