Grayson Myers

advertisement

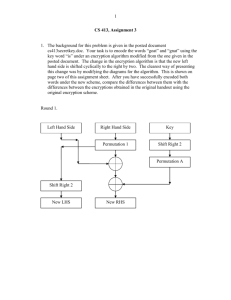

Grayson Myers CSEP 590 Final Paper Poking Holes in Knapsack Cryptosystems 1. The Knapsack Problem Given n items a1,…,an, each with an associated integer size, and a knapsack of some integer capacity S, the knapsack problem is to determine the largest number of items that can fit in the knapsack. This is an optimization problem which is known to be NP-hard. For the purposes of knapsack cryptosystems, a restriction is added that each item may only be included once, and the problem is stated as a decision problem: is there a subset of the items that exactly fills the knapsack (and if so, what is it?). In other words, given S, find binary-valued numbers x1,…,xn such that S = ∑ xi*ai If xi = 1, then ai is part of the solution, if xi = 0, it is not. This version of the problem is also known as the subset sum problem and is NP-complete. 2. Knapsack cryptosystems Like other public-key cryptosystems, knapsack cryptosystems use a trapdoor function to allow the intended recipient to use private information to solve an otherwise (hopefully) intractable problem. In the case of knapsacks, this function is used to transform a “hard” instance of the subset sum problem into an “easy” one. Thus, the public key in a knapsack system consists of the ais that form the hard instance. To encrypt an n-bit message to Bob, Alice computes S = ∑ xi*ai, where each xi is a bit in her message. To recover the message, an eavesdropper who knows only S and the ais would in theory have to solve the hard subset sum problem to recover the message. To decrypt, Bob uses his private information to transform the problem into an easy instance involving sum S’ and items a1’,…,an’ and solves that instead. 3. Merkle-Hellman knapsacks The first cryptosystem based on this idea was developed by Merkle and Hellman in 1978 [5], using a superincreasing sequence to implement the easy (private) knapsack instance. A superincreasing sequence y1,…,yn has the property that for all i, the sum y1,…,yi-1 < yi. A subset sum problem involving items with sizes corresponding to the yi’s can be solved in linear time. To determine if yn is in the sum, just compare it to S; if S ≥ yn, then yn must be in the subset because of the superincreasing property. If yn < S, it cannot be in the sum. This process can be repeated to find out if yn-1 is in the subset, using S – yn in place of S if yn was in the subset, and so forth until the entire subset is recovered. The Merkle-Hellman trapdoor uses modular multiplication to transform a superincreasing sequence of private weights a1’,…,an’ into a hard instance a1,…,an as follows: Bob chooses large relatively prime integers M and W, s.t. M ≥ ∑ ai’. Bob computes ai = W*ai’ mod M. Then to decrypt the message (the sum S) sent by Alice, Bob computes S’ = W-1*S mod M (W-1 is multiplicative inverse of W mod M) Bob solves the superincreasing knapsack S’ = ∑ xi*ai’ to recover Alice’s original xi values. To see that this works (that is, Bob gets back the correct xis) consider that S’ = W-1*S mod M = W-1*(∑ xi*ai mod M) replace S with elements in its sum = W-1*(∑ xi*(W*ai’) mod M) replace ai with the product that produced it = ∑ xi*ai’ mod M because W*W-1 = 1 = ∑ xi*ai’ because ∑ ai’ < M. At a minimum, Merkle and Hellman suggested using n=100 (100 items), M in the range (2201, 2202- 1), a1’ in [1, 2100], and ai’ in [(2i-1-1)*2100 +1, 2i-1*2100]. This describes the basic additive Merkle-Hellman system, their paper also suggests that an iterative version might be more secure. In the iterative case, S goes through a number of transformations, each with different M, W, and ai’sequence. Only the ai’ sequence in the final transformation must be superincreasing. Merkle and Hellman also suggested permuting the ais, so that for ai in the public key and aj’ in the private key, i is not necessarily equal to j. 4. Security of Merkle-Hellman knapsacks The Merkle-Hellman system was proposed just months after RSA. At the time, it was unclear which of the two would turn out be more secure. In their original paper, Merkle and Hellman state that “neither [RSA nor Merkle-Hellman] security has been adequately established, but when iterated, the trapdoor backpack appears less likely to possess a chink in its armor”. Apparently this view was not unique to Merkle and Hellman, as in 1979 Shamir called the Merkle-Hellman knapsack “the most popular [public-key cryptosystem]” [7]. This opinion eventually turned out to be wrong, of course. There are no knapsack cryptosystems in wide use today, while RSA has become ubiquitous. This is mainly due to insecurities discovered in knapsack cryptosystems. In trapdoor public-key cryptosystems generally, there are two main categories of attack, those that exploit weaknesses in the construction of the trapdoor, and those that attack the hard problem directly. In the case of knapsack cryptosystems, both types have proven successful. This paper will describe two attacks, one of each kind, against the basic (singly-iterated) Merkle-Hellman cryptosystem. The permutation of the ais will be ignored, because it turns out that applying the permutation doesn’t significantly increase the security of the system. 5. Shamir’s attack The first attack, due to Shamir[8], exploits a weakness in the trapdoor construction. It attempts to find integers M’ and W’ which yield a superincreasing sequence b1,…,bn, where bi = W’*ai mod M’ (the ais are the public key). Note that finding any such M’ and W’ allows the attacker to decrypt in linear time, as long as they are relatively prime and M’ is at least as large as the sum of the bis. They do not have to be equal to the M and W used in the original construction. Shamir’s attack proceeds in two stages. In the first stage, the ratio W’/M’ is approximated to a set of sub-intervals of [0,1) (note that W’ is only used in calculations mod M’, so if W’ is not less than M’, for the purposes of Merkle-Hellman, there exists an equivalent W’ that is). In the second phase, these intervals are refined such that any W’/M’ in the remaining intervals constitute a trapdoor pair. Shamir explains the algorithm graphically (Odlyzko provides a second completely algebraic explanation, but I found the graphical version more compelling). Consider the first value in the public sequence, a1. If we allow W to range over all values from 0 to M (M is the unknown modulus used in the original construction), then we get a sawtooth graph like this for the function W*a1 mod M: W*a1 mod M M W Shamir noticed that the corresponding private sequence value a1’ must be less than 2|M|-n, where |M| is the number of bits in the modulus, because the ai’s are superincreasing and their sum is < M. Compared to M, this is relatively small; for example, using the parameters suggested by Merkle and Hellman, M is 202 bits, but a1’ will only be 100 bits. Now, since the slope of the curve above is a1, with minima occurring at each M/a1 along the W axis, the unknown value W=W0 that was multiplied by a1’ to get a1 can be at most 2|M|-n/a1 ≈ 2-n away from the closest minima of the curve (which will be to its left). In other words, W0 is very close to some minima of the curve. This fact alone is not all that helpful, because there are too many minima to check each one. However, similar reasoning applies to the sawtooth curves generated for a2, a3, a4, and indeed all of the ais for which the corresponding ai’s have relatively small values compared to M (Shamir found that in practice analyzing three or four curves was usually enough, when n=100 and |M| = 202). Since W0 is a constant, what we want to find are places where the minima of all of these curves lie close together, as W0 is likely to lie in that vicinity. To do this given c < n curves, Shamir constructed a set of c inequalities with c integer-valued unknowns p1,…,pc, with each pi indicating the minima of the ai curve to be used (pi=1 is the first minima that occurs at M/ai, pi=2 is the second at 2*M/ai, and so on). He did this by calculating the maximum allowable distance between the a1 curve and the other ai curves that would still result in a superincreasing sequence of the ai’s. For example, as shown above, W0 is within a distance of ≈ 2-n to the right of the some minima of the a1 curve, and similarly, W0 is within a distance of ≈ 2-n+1 to the right of some minima of the a2 curve. So, these two desired minima must be within a distance of ≈ 2-n+1 of each other. Expressing this as an inequality using p1 and p2: -2-n+1 < p1*M/a1 – p2*M/a2 < 2-n+1 The other y inequalities are constructed similarly. Shamir then used Lenstra’s integer programming algorithm to find values of the pis in polynomial time. Actually, the algorithm runs in polynomial time only for a fixed number of variables (i.e., when c is a constant). The result is a set of clusters of minima of the ai curves along the W axis (there may be more than one cluster). W0, along with any other W-values that might generate the desired ais from a superincreasing sequence, should exist in the vicinity of these clusters. The second stage of Shamir’s algorithm considers each cluster separately. The main idea is to examine the interval [p1*M/a1,(p+1)*M/a1) between successive a1 minima, where p1 is one of the values obtained in the first part of the algorithm. Then treat the ai curves as linear equations in the interval. This is true between discontinuity points; there will be on the order of n of these in the interval, so the result is a set of about n linear equations for each ai curve, with each equation describing a segment between discontinuities. Using these equations, set up a system of linear inequalities expressing the size and superincreasing constraints on the ais. If this system of equations has a solution, it will be an interval containing only desired W’-values. One point glossed over in this discussion is that the attacker cannot find the sawtooth curves exactly as shown above, because in addition to W’, M is also unknown. However, since the above reasoning depends only on the slope of the ai curves, this problem can be easily solved by “dividing by” the unknown M, thereby replacing it with 1 in the graph above. This means that rather than finding W’ values, the algorithm actually finds W’/M values. However, since the final result is a set of sub-intervals of [0,1) containing only values that result in a superincreasing sequence, one can use a Diophantine approximation algorithm to recover a ratio W’/M’ in the interval. Once suitable W’ and M’ are known, generating the superincreasing sequence is easy. Recall that: ai = W*ai’ mod M, and W is invertible mod M, so ai’ = W-1*ai mod M A superincreasing sequence can be obtained from the W’ and M’ found using Shamir’s algorithm in exactly the same manner. Finally, note that Shamir’s algorithm runs in polynomial time. Lenstra’s integer programming algorithm runs in polynomial time for a fixed number of variables, and the linear equation solving, Diophantine approximation, and recovery of the superincreasing sequence can be done in polynomial time as well. To ensure that the number of clusters found in the first part of the algorithm doesn’t cause exponential growth, the algorithm halts if more than some constant k clusters are found (Shamir suggests k=100). This means that the algorithm will not break every knapsack, but this is not a big limitation since recovering even a small fraction of the private keys is enough to consider a cryptosystem broken. 6. Odlyzko and Lagarias’ attack Shamir’s attack did not spell immediate doom for the knapsack cryptosystems. Other knapsack cryptosystems were developed that were not susceptible to it, and the iterated Merkle-Hellman system remained unbroken. However, a few years later, Lagarias and Odlyzko found a way to attack certain instances of the subset sum problem directly in polynomial time [3]. This attack could be applied to a wide variety of knapsack cryptosystems, and rendered ineffective techniques like iteration, which attempt to make the private key recovery more difficult. Brickell [1] made a similar discovery, but as the Lagarias-Odlyzko attack is somewhat simpler, I will describe that here. The attack, called Algorithm SV (for short vector), first forms a basis for an integer lattice containing the public ais, and then applies a basis reduction algorithm in an attempt to recover the xis (the bits of the original message). To give some basic definitions, an integer lattice is a set L, containing all n-element vectors which can be formed using a linear combination of m n-element basis vectors. The basis vectors must be linearly independent. The first step in Algorithm SV is to construct the following matrix; the rows of this matrix form a basis for L. Each ai in the matrix is a public weight, and S is the target sum (the message). v1 = (1, 0, …, 0, -a1) v2 = (0, 1, …, 0, -a2) … vn = (0, 0, …, 1, -an) vn+1 = (0, 0, …, 0, S ) Note that these vectors are linearly independent, that is, none of them can be written as a linear combination of the others. Vectors in this lattice have n+1 elements, and are of the form: z1*v1 + z2*v2 + … + zn*vn + zn+1*vn+1 Of particular interest is the vector x in L whose first n coefficients are the unknown bits of the message, and whose n+1st coefficient is 1: x1*v1 + x2*v2 + … + xn*vn + 1* vn+1 The key insight about this vector is that it is very short (of length ≤ n, where length means squared Euclidean distance). Its first n elements will be either 1’s or 0’s, depending on the values of the xis. Its n+1st element will be 0, because its value will be all the ais which sum to S, subtracted from S. This is where the idea of basis reduction comes in. This paper will not go into the exact definition of a reduced basis, for our purposes it is sufficient to say that a reduced basis is generally made up of short vectors, and that a basis reduction algorithm takes as input a basis for L, and returns a reduced basis for L. The final steps of Algorithm SV are actually quite simple: Run the polynomial-time LLL basis reduction algorithm [4] (named after Lenstra, Lenstra, and Lovász, its inventors) on the basis given above. Check whether the reduced basis contains x (or x*c, for some constant c). If so, recover the xis and return success. If not, repeat the “reduce and check” process with S replaced by S – a1 in the original basis. If x has not been found, halt. Despite its simplicity (at least, when the basis reduction is treated as a black box), Algorithm SV solves a surprisingly high percentage of subset sum problems. With n=50 (the largest value Lagarias and Odlyzko reported trying), it solved 2 out 3 problems, with each solution taking about 14 minutes on a CRAY-1. Since the subset sum problem is NP-complete, it would be very surprising indeed if this algorithm were able to solve all subset sum problems. In fact, that is not the case, but its probability of success increases as the density of the subset sum decreases. Density here is defined as the ratio |x|/|S|, where |x| denotes the number of elements in the x vector, and |S| denotes the length of S in bits. In other words, the density is the number of subset elements divided by the number of bits in S. From the point of view of knapsack cryptosystems, density can be characterized as the ratio of the size of the unencrypted input to the size of the encrypted output. Densities greater than 1 cannot be used to transmit information, because they will not be uniquely decryptable. Lagarias and Odlyzko found that their method worked well on problem instances with density less than 0.65. Subsequent improvements to the algorithm have raised this bound even further [2]. The intuition as to why Algorithm SV works on low densities is that as the density decreases, the sizes of the ais and S increases, and thus the length of an “average” vector in L increases as well. This increases the likelihood that the basis reduction algorithm will find the atypically short x vector. What made the discovery of this attack particularly deadly for the singly-iterated Merkle-Hellman knapsacks is that it complements Shamir’s attack, which is effective against high-density subset sum problems. The intuition for this is that as the density increases, the size of M decreases. The result is that for desired W’ values, the ai minima corresponding to the smallest few values of the superincreasing ai’ sequence are squeezed closer and closer together along the W axis. This increases the likelihood that Shamir’s algorithm will be able to discover some W’ and M’. 7. Final remarks Although this paper only details one knapsack cryptosystem and two attacks against it, since Merkle and Hellman’s 1978 paper, there were dozens of knapsack cryptosystems proposed, and many more papers written on the subject. Nearly all are now considered broken, and there seems to be very little continuing research effort. The Chor-Rivest algorithm, which uses operations in a finite field to transform the private knapsack into the public one, survived the longest, but was apparently finally broken by Vaudenay in 1998 [9]. It is tempting to wonder if there is a lesson that can be learned from the failure of knapsack cryptosystems. If there is one, I think it is that worst-case complexity analyses (such as statements about NP-completeness) are at best insufficient and at worst misleading when applied to cryptography. Statements about average or best-case complexity (the least amount of work an attacker would have to do to break the system), while harder to come by, should be considered much more compelling arguments for security. 8. References [1] E. Brickell. “Solving Low Density Knapsacks”. Advances in Cryptology – CRYPTO ’84, Lecture Notes in Computer Science, vol 1460. Plenum, New York, 1984, pp. 342-358. [2] M. Coster, A. Joux, B. A. LaMacchia, A. M. Odlyzko, Claus-Peter Schnorr, Jacques Stern. “Improved Low-Density Subset Sum Algorithms”. Computational Complexity, vol. 2, 1992, pp. 111-128. [3] J. C. Lagarias, A. M. Odlyzko. “Solving Low-Density Subset Sum Problems”. Journal of the ACM, vol. 32, 1985, pp. 229-246. [4] A. K. Lenstra, H. W. Lenstra Jr., L. Lovász. “Factoring Polynomials with Rational Coefficients”. Mathematische Annualen, vol. 261, 1982, pp. 513-534. [5] R. C. Merkle, M. E. Hellman. “Hiding Information and Signatures in Trapdoor Knapsacks”. IEEE Transactions on Information Theory, vol. IT-24, 1978, pp. 525-530. [6] A. M. Odlyzko. “The Rise and Fall of Knapsack Cryptosystems”. Cryptology and Computational Number Theory, Proceedings of Symposia in Applied Mathematics, vol. 42. American Mathematics Society, Providence, RI, 1990, pp. 75-88. [7] A. Shamir. “On the Cryptocomplexity of Knapsack Systems”. Proceedings of the 11th ACM Symposium on Theory of Computing, 1979, pp. 339-340. [8] A. Shamir. “A Polynomial-time Algorithm for Breaking the Basic Merkle-Hellman Cryptosystem”. Proceedings of the IEEE Symposium on Foundations of Computer Science. IEEE, New York, 1982, pp. 145-152. [9] S. Vaudenay. “Cryptanalysis of the Chor-Rivest Cryptosystem”. Advances in Cryptology – CRYPTO ’98, Lecture Notes in Computer Science, vol. 1462. Springer, Berlin, 1998, pp. 243256.