IEEE


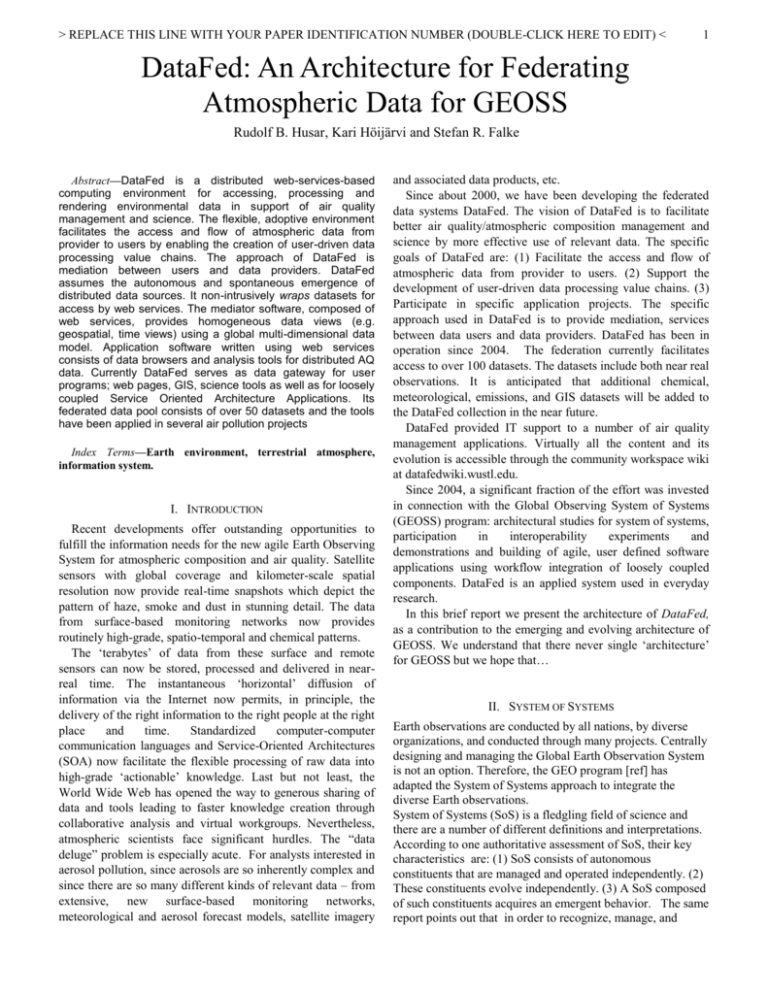

DataFed: An Architecture for Federating Atmospheric Data for GEOSS

Rudolf B. Husar, Kari Höijärvi and Stefan R. Falke

Abstract: DataFed is a distributed, web-services-based computing environment for accessing, processing and rendering environmental data in support of air quality management and science. The flexible, adaptive environment facilitates the access and flow of atmospheric data from providers to users by enabling the creation of user-driven data processing value chains. The approach of DataFed is mediation between users and data providers. DataFed assumes the autonomous and spontaneous emergence of distributed data sources. It non-intrusively wraps datasets for access by web services. The mediator software, composed of web services, provides homogeneous data views (e.g. geospatial, time views) using a global multi-dimensional data model. Application software written using web services consists of data browsers and analysis tools for distributed air quality (AQ) data. Currently DataFed serves as a data gateway for user programs (web pages, GIS, science tools) as well as for loosely coupled Service Oriented Architecture applications. Its federated data pool consists of over 50 datasets and the tools have been applied in several air pollution projects.

Index Terms: Earth environment, terrestrial atmosphere, information system.

I. INTRODUCTION

Recent developments offer outstanding opportunities to fulfill the information needs for the new agile Earth Observing System for atmospheric composition and air quality. Satellite sensors with global coverage and kilometer-scale spatial resolution now provide real-time snapshots which depict the pattern of haze, smoke and dust in stunning detail. Data from surface-based monitoring networks now routinely provide high-grade spatio-temporal and chemical patterns.
The ‘terabytes’ of data from these surface and remote sensors can now be stored, processed and delivered in near-real time. The instantaneous ‘horizontal’ diffusion of information via the Internet now permits, in principle, the delivery of the right information to the right people at the right place and time. Standardized computer-computer communication languages and Service-Oriented Architectures (SOA) now facilitate the flexible processing of raw data into high-grade ‘actionable’ knowledge. Last but not least, the World Wide Web has opened the way to generous sharing of data and tools, leading to faster knowledge creation through collaborative analysis and virtual workgroups.

Nevertheless, atmospheric scientists face significant hurdles. The “data deluge” problem is especially acute for analysts interested in aerosol pollution, since aerosols are inherently complex and since there are so many different kinds of relevant data: extensive new surface-based monitoring networks, meteorological and aerosol forecast models, satellite imagery and associated data products, etc.

Since about 2000, we have been developing the federated data system DataFed. The vision of DataFed is to facilitate better air quality/atmospheric composition management and science by more effective use of relevant data. The specific goals of DataFed are to: (1) Facilitate the access and flow of atmospheric data from providers to users. (2) Support the development of user-driven data processing value chains. (3) Participate in specific application projects. The specific approach used in DataFed is to provide mediation services between data users and data providers.

DataFed has been in operation since 2004. The federation currently facilitates access to over 100 datasets. The datasets include near-real-time observations. It is anticipated that additional chemical, meteorological, emissions, and GIS datasets will be added to the DataFed collection in the near future.
DataFed has provided IT support to a number of air quality management applications. Virtually all the content and its evolution are accessible through the community workspace wiki at datafedwiki.wustl.edu. Since 2004, a significant fraction of the effort has been invested in connection with the Global Earth Observation System of Systems (GEOSS) program: architectural studies for system of systems, participation in interoperability experiments and demonstrations, and building of agile, user-defined software applications using workflow integration of loosely coupled components. DataFed is an applied system used in everyday research. In this brief report we present the architecture of DataFed as a contribution to the emerging and evolving architecture of GEOSS. We understand that there will never be a single ‘architecture’ for GEOSS but we hope that…

II. SYSTEM OF SYSTEMS

Earth observations are conducted by all nations, by diverse organizations, and through many projects. Centrally designing and managing the Global Earth Observation System is not an option. Therefore, the GEO program [ref] has adopted the System of Systems approach to integrate the diverse Earth observations. System of Systems (SoS) is a fledgling field of science and there are a number of different definitions and interpretations. According to one authoritative assessment of SoS, their key characteristics are: (1) An SoS consists of autonomous constituents that are managed and operated independently. (2) These constituents evolve independently. (3) An SoS composed of such constituents acquires emergent behavior. The same report points out that in order to recognize, manage, and exploit these characteristics, additional features of SoS must be understood: (4) No stakeholder has complete insight into and understanding of the SoS. (5) Central control of an SoS is limited; distributed control is essential.
Finally, (6) Users must be involved throughout the life of the SoS. The SoS approach places a considerable burden on the information system (IS) that supports GEOSS. Fortunately, the Internet itself is an SoS with all of the above characteristics. Hence, the GEOSS IS could be patterned after and implemented on the Internet itself. Recent developments in network science (Barabási and Albert) have described the Internet as a network of nodes connected by links. The topology of the Internet can be described by a power-law pattern, which can be explained by the continuous addition of new nodes (information sources) and by a growth rate of each node's connections that is proportional to its current number of connections. Until we have a better information model for the GEOSS IS, it is prudent to follow the Internet model as a guide.

III. DATAFED ARCHITECTURE

In this section we describe the DataFed architecture following the guidelines of the Reference Model of Open Distributed Processing (RM-ODP). The information system is described from five different viewpoints: enterprise, information, computational, engineering and technology.

A. Enterprise View - Value Creation of Earth Observations

The enterprise viewpoint focuses on the purpose, scope and policies of the SoS. From the GEO perspective, the purpose of the system is to provide societal benefits by better informed decision-making through the use of Earth observations and models. When this enterprise architecture is applied to specific societal benefits, such as air quality, the components of the decision-making system can be expressed in more detail (Fig. 1). In that case, the observations and models are used to improve the level of understanding within the technical analysis community. Such technical knowledge is then transmitted to the regulatory analysts, who in turn transfer the relevant information to decision-making managers. These three components, represented as circles, are all human participants in the decision system.
Much of the communication between the human participants is through reports and verbal communication. In other words, at the top of the decision support system interoperability stack are humans, not machines.

Fig. 1 Schematic air quality information system: providers, users and data federation infrastructure.

From the enterprise perspective the purpose of the information system is to support the value chain that turns low-grade data and model outputs into high-grade actionable knowledge (ref) useful for decision-making purposes. Fig. 2 is a schematic representation of the value-adding IS components and the key value-adding processes. As shown in Fig. 2, the three major components of the IS value chain are providing uniform access, performing data processing and preparing information products for decisions as part of the decision support system (DSS). The value-adding processes within the value chain begin with organizing activities, which are generally performed by the data providers. The next process turns data into services for standardized access. Transformation of data to knowledge occurs through characterization and analysis processes, including filtering, aggregation and fusion operations. Finally, the IS needs to support preparation and reporting.

Fig. 2 Schematic air quality information system: providers, users and data federation infrastructure.

The SoS approach places new demands on governance since the responsibilities are also distributed. In the case of DataFed, the responsibility for providing data lies with the data providers. The responsibility for the wrappers and mediators lies with the DataFed community. Data discovery is the responsibility of the data/service registries, while the application programs are the responsibility of the end user. Formal mechanisms for governance (e.g. service contracts) for SoS are not yet developed.

B. Information View - Earth Observations

The information viewpoint focuses on the semantics of the information and information processing. The abstract data model used in DataFed is that of a multidimensional cube (Fig. 3). The dimensions represent the physical dimensions (x, y, z, t) of the Earth system expressed in latitude-longitude and date-time units. All the data queries can be expressed using this abstract data model. Typical queries against this model are slices across different dimensions of the data cube as shown in Fig. 3. This simple data model is consistent with the Open Geospatial Consortium (OGC) standard data access protocols, WMS and WCS.

Fig. 1 Schematic air quality information system: providers, users and data federation infrastructure.

C. Computational View - Service Oriented Interoperability

This viewpoint of the architecture describes the functional relationships between the distributed components and their interaction at the interfaces. In particular, this viewpoint visualizes the key difference between the classical client-server architecture and the new loosely coupled, networked architecture of SoS (Fig. ).

Fig. 3 Schematic air quality information system: providers, users and data federation infrastructure.

In the client-server architecture the individual servers are designed and maintained as autonomous systems, each delivering information in its own way. Users who need to access information from multiple servers carry the burden of finding the servers, re-packing the query results and performing the necessary homogenization procedures. In the DataFed architecture, users’ access to distributed heterogeneous data sources is performed through the addition of two extra layers of software: wrappers and mediators. The purpose of the wrapper layer is to compensate for heterogeneities in physical access and syntactic differences. The role of the mediators is to perform the semantic homogenization.
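The division of labor between the two layers can be illustrated with a minimal Python sketch. It is not DataFed code: the two source layouts, the field names, the unit table and the functions wrap_source_a, wrap_source_b and mediate are all hypothetical, chosen only to show wrappers absorbing syntactic differences while the mediator homogenizes semantics (here, units) and answers one query across sources.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Record:
    """One observation in the shared schema produced by every wrapper."""
    lat: float
    lon: float
    time: datetime
    value: float
    unit: str


def wrap_source_a(rows: Iterable[Dict]) -> List[Record]:
    # Hypothetical source A: dict rows, ISO timestamps, values in ug/m3.
    return [Record(r["latitude"], r["longitude"],
                   datetime.fromisoformat(r["timestamp"]), r["pm25"], "ug/m3")
            for r in rows]


def wrap_source_b(rows: Iterable[Tuple[float, float, str, float]]) -> List[Record]:
    # Hypothetical source B: (lon, lat, "YYYYMMDDHH", value) tuples in mg/m3.
    return [Record(lat, lon, datetime.strptime(stamp, "%Y%m%d%H"), conc, "mg/m3")
            for lon, lat, stamp, conc in rows]


# Assumed conversion factors to the common unit (ug/m3).
TO_UG_M3 = {"ug/m3": 1.0, "mg/m3": 1000.0}


def mediate(wrapped: List[List[Record]],
            lat_range: Tuple[float, float],
            lon_range: Tuple[float, float]) -> List[Record]:
    """Answer one spatial query over all wrapped sources in a common unit."""
    hits = []
    for records in wrapped:
        for r in records:
            if (lat_range[0] <= r.lat <= lat_range[1]
                    and lon_range[0] <= r.lon <= lon_range[1]):
                hits.append(Record(r.lat, r.lon, r.time,
                                   r.value * TO_UG_M3[r.unit], "ug/m3"))
    return sorted(hits, key=lambda r: r.time)
```

In this sketch a new data source only requires a new wrapper function; the mediator and every client query stay unchanged, which is the property the two-layer design is meant to provide.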
The combination of wrappers and mediators allows the formulation of user queries against the abstract data model described above. In DataFed such queries are analogous to view queries in relational data systems. In fact, Ullman (1997) argues that the main role of mediators is to allow such view-based queries (Wiederhold, 1992). The federation of heterogeneous data sources through mediators has been an active research area since its conception by Wiederhold (1992). It provides a flexible way of connecting diverse systems which may include legacy databases … The emergence of service orientation has provided an additional impetus and technologies for data federation and its mediators. Standardization of service interfaces now allows easy creation of mediators using workflow software.

DataFed is not a centrally planned and maintained data system but a facility to harness the emerging resources by powerful dynamic data integration technologies and through a collaborative federation philosophy. The key roles of the federation infrastructure are to (1) facilitate registration of the distributed data in a user-accessible catalog; (2) ensure data interoperability based on the physical dimensions of space and time; (3) provide a set of basic tools for data exploration and analysis. The federated datasets can be queried by simply specifying a latitude-longitude window for spatial views, a time range for time views, etc. This universal access is accomplished by ‘wrapping’ the heterogeneous data, a process that turns data access into a standardized web service, callable through well-defined Internet protocols. The result of this ‘wrapping’ process is an array of homogeneous, virtual datasets that can be queried by spatial and temporal attributes and processed into higher-grade data products.
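The two query shapes mentioned above, a latitude-longitude window at a fixed time and a time range at a fixed location, are both slices of the same cube. The following Python sketch shows this on a toy dense grid. The DataCube class, its method names and the regular-grid layout are assumptions made for illustration and do not describe the DataFed implementation.

```python
from datetime import datetime, timedelta


class DataCube:
    """Toy dense cube indexed as values[time][lat][lon] (hypothetical layout)."""

    def __init__(self, times, lats, lons, values):
        self.times, self.lats, self.lons, self.values = times, lats, lons, values

    def spatial_view(self, when, lat_min, lat_max, lon_min, lon_max):
        """Map slice: fix the time, keep grid cells inside the lat/lon window."""
        t = self.times.index(when)
        return {(lat, lon): self.values[t][i][j]
                for i, lat in enumerate(self.lats) if lat_min <= lat <= lat_max
                for j, lon in enumerate(self.lons) if lon_min <= lon <= lon_max}

    def time_view(self, lat, lon, t_start, t_end):
        """Time-series slice: fix the location, keep times inside the range."""
        i, j = self.lats.index(lat), self.lons.index(lon)
        return [(t, self.values[k][i][j])
                for k, t in enumerate(self.times) if t_start <= t <= t_end]


def demo_cube():
    """Build a 3 x 2 x 3 cube whose cell values encode their own indices."""
    t0 = datetime(2005, 1, 1)
    times = [t0 + timedelta(hours=h) for h in range(3)]
    lats, lons = [30.0, 40.0], [-100.0, -90.0, -80.0]
    values = [[[t * 100 + i * 10 + j for j in range(3)]
               for i in range(2)] for t in range(3)]
    return DataCube(times, lats, lons, values)
```

Because both views read from the same array, a client never needs to know how the provider originally stored the data; it only names the dimensions it wants to hold fixed and the ranges it wants to keep.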
The Service Oriented Architecture (SOA) of DataFed is used to build web applications by connecting the web service components (e.g. services for data access, transformation, fusion, rendering, etc.) in Lego-like assembly. The generic web tools created in this fashion include catalogs for data discovery, browsers for spatial-temporal exploration, multi-view consoles, animators, multi-layer overlays, etc. (Figure 2).

Fig 2. Main software programs of DataFed-FASTNET: Catalog for finding and selecting data, Viewer for exploration, and an array of Consoles for data analysis and presentation.

A good illustration of the federated approach is the real-time AIRNOW dataset described in a companion paper in this issue (Wayland and Dye, 2005). The AIRNOW data are collected from the States, aggregated by the federal EPA and used for informing the public (Figure 1) through the AIRNOW website. In addition, the hourly real-time O3 and PM2.5 data are also made accessible to DataFed, where they are translated on the fly into a uniform format. Through the DataFed web interface, any user can access and display the AIRNOW data as time series and spatial maps, perform spatial-temporal filtering and aggregation, generate spatial and temporal overlays with other data layers and incorporate these user-generated data views into their own web pages. As of early 2005, over 100 distributed air quality-relevant datasets have been ‘wrapped’ into the federated virtual database. About a dozen satellite and surface datasets are delivered within a day of the observations and two model outputs provide PM forecasts.

D. Engineering View - Component Types

This viewpoint focuses on the mechanisms required to support interaction between distributed components. It involves the identification of key components and their interactions through standard communication and data transfer protocols.
Mediator services allow the creation of homogeneous data views of the wrapped data in the federation (e.g. geospatial, time…). A data view is a user-specified representation of data accessible through DataFed. Data views consist of a stack of data layers, similar to the stack of spatial GIS data except that DataFed views can also represent temporal and other dimensional patterns. Each data layer is created by chaining a set of web services, typically consisting of a DataAccessService followed by a DataRenderService. When all the data layers are rendered, an Overlay service merges all the layers into a single rendered image. Subsequent to the data access-render-overlay, the MarginService adds image margins and physical scales to the view. Finally, the layer-specific AnnotationService can add titles and other user-provided labels to the view. Below is a brief description and illustration of data views. Further detail can be found elsewhere.

Figure 1. Generic data flow in DataFed services.

Views are defined by a 'view_state' XML file. Each view_state.xml contains the data and instructions to create a data view. Since the view creation is executed exclusively through web services, and web services are 'stateless', the data in the view_state.xml file represents the 'state' of the system, i.e. the input parameters for each of the web services used in the view. The view_state.xml file also contains instructions regarding the web service chaining. Thus, given a valid view_state.xml file, an interpreter can read that file and execute the set of web services that creates a view. All data views, i.e. geo-spatial (GIS), temporal (time series), trajectories, etc., are created in this manner. The concept and practical use of data views are illustrated below through specific examples. Suppose we have a single-layer view with a map data layer, a margin and a title annotation, encoded in the file TOMS_AI.
The Settings section contains parameters applicable to the entire view, such as image_width for the output image or lon_min for setting the zoom geo-rectangle. The Services section specifies the services used to generate the data layers. In this example, the combined MapImageAccessRender service accesses the map image and renders the boundaries. Normally there are more layers and services used in a view. The third section of each view state file is the ServiceFlow, which specifies the sequence and manner in which the individual services need to execute.

A data view program can be executed by either the SOAP or the HTTP Get protocol. These illustrations use the simpler and more transparent HTTP Get protocol in the form of a 'cgi' URL. In order to execute the view generation program, the user (or her software agent) makes a URL call in which the entry after the '?', view_state=TOMS_AI, is the only user-specified parameter. The resulting view is generated dynamically by the web service interpreter at the DataFed website. Since the view file TOMS_AI has default values for all the services, the interpreter is able to generate an image of the view. However, each of the default parameters can be modified by the user by explicitly changing the desired parameters. These parameter changes are concatenated to the URL using the standard '&' character for instruction separation. In a second example, the parameters for image width, top margin, minimum longitude, title text and location, and the margin background color were changed. The difference in the two map renderings is evident.

E. Technology View - Component Instances

This view identifies the specific technologies used in the system. In distributed systems such as DataFed, the data are maintained by their respective providers in their native environment. Users access the distributed data through the ‘wrappers’ and mediators provided as federation services.
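The URL convention described under the Engineering View, a view_state name followed by optional '&'-separated parameter overrides, can be sketched in a few lines of Python. The base address below is a placeholder and not the real DataFed endpoint; the function name view_url is hypothetical. Only view_state, image_width and lon_min are parameter names taken from the text.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit

# Placeholder address for illustration only; NOT the actual DataFed service URL.
BASE_URL = "http://example.org/view"


def view_url(view_state, **overrides):
    """Build an HTTP GET URL for a stored view plus optional overrides.

    With no overrides, the stored defaults in the view_state file apply.
    Each override is appended as an extra '&'-separated key=value pair.
    """
    params = [("view_state", view_state)] + sorted(overrides.items())
    return BASE_URL + "?" + urlencode(params)


def overrides_from_url(url):
    """Recover the parameters a URL would pass to the view interpreter."""
    return dict(parse_qsl(urlsplit(url).query))
```

A call such as view_url("TOMS_AI") requests the view with all defaults, while view_url("TOMS_AI", image_width=800, lon_min=-130) changes only the two named settings. Because the whole request is an ordinary URL, it can be bookmarked, embedded as an image source, or generated by another program.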
An additional federation service is caching, i.e. local storage or pre-calculation of frequently requested queries.

Numeric Data Caching. The above listed datasets are point monitoring data that are updated hourly or daily by their providers. The updates are generally individual files of spatial pattern containing the most recent measurements. This local storage is convenient for the incremental maintenance of the database but it is inefficient for data access, particularly for temporal data views. In DataFed the caching consists of densely packed numeric ‘data cubes’ suitable for fast and efficient queries for spatial and temporal views. The data cubes are updated hourly, daily or intermittently as dictated by the data availability and user need. The daily cache updates are monitored through the cache status console. The time series show the number of valid stations for each hour/day in the dataset. A drop in the number of stations indicates problems in data acquisition, transmission or caching.

Image Caching. The creation of some of the data views is time consuming. For example, spatial interpolation and contouring of monitoring data may take 20-30 seconds, which makes interactive data browsing impractical. For frequently used views, the data are pre-rendered and stored as images for fast retrieval and interactive browsing. The FASTNET browsing tools, including the Analysts Consoles and Animator, are set up to use the cached images. For a subset of views, the image caching is performed automatically following the update of the numeric data cubes. The image datasets are from distributed providers; the data are fetched from the provider and passed to the user on the fly.

Specific user applications for data access, filtering, aggregation and rendering are built through web service composition. Since data views are images, they can be embedded into web pages like any other image.
However, since the images are created dynamically, the content of the images can be controlled from the web page through a set of HTML controls connected to the image URL through JavaScript or other scripting languages. For instance, the date of the displayed data in the view can be set by standard text or dropdown boxes, and the value of the user selection is used to set the view's URL. Such web pages, containing the dynamic views and the associated controls, are called WebApps or MiniApps. By design, such WebApps can be designed and hosted on the user's server. The only relationship to DataFed is that the view images are produced by the DataFed system. Unfortunately, full instructions for the implementation of WebApps are not yet prepared. However, a number of WebApps have been prepared to illustrate the use of DataFed views in users' web pages. The JavaScript code of each WebApp is embedded in the source of each WebApp page.

Fig. 4. Typical web application program.

The DataFed 'middleware' aids the flow of aerosol data from the providers to the users and value-adders. Thus, DataFed neither produces nor consumes data for its own purposes; it passes data from peer to peer. The data use, i.e. the transformation of the raw data to 'knowledge', occurs in separate, autonomous projects. However, the evolution of DataFed is fueled by projects, and projects use its web resources to be more effective. (List of illustrative WebApps)

IV. INTEROPERABILITY DEMONSTRATIONS AND PILOT STUDIES

The DataFed federation project strives to be a 'socially well-behaving' data integration endeavor. Beyond coexistence, it strives to cooperate, co-evolve, and even merge with other data federations. DataFed is pursuing linkages to two major data federations, OGC and OPeNDAP. Both utilize standard web-based HTTP protocols for data sharing and are therefore suitable for easy integration with DataFed.

OGC Specifications
The Open Geospatial Consortium, Inc. (OGC) is a member-driven, not-for-profit international trade association that is leading the development of geoprocessing interoperability standards. OGC works with government, private industry, and academia to create open and extensible software application programming interfaces for geographic information systems (GIS) and other geospatial technologies. Adopted specifications are available for the public's use at no cost.

The Open-source Project for a Network Data Access Protocol, OPeNDAP, is a discipline-neutral protocol for requesting and providing data across the Web. The goal is to allow users to access data in the format required by the application software they already have. The protocol has its roots in the Distributed Oceanographic Data System (DODS), which successfully aided many researchers over the past decade. Ultimately, OPeNDAP is to support general machine-to-machine interoperability of distributed heterogeneous datasets.

GALEON - Geo-interface to Atmosphere, Land, Earth, Ocean netCDF
GALEON is an OGC Interoperability Experiment to support open access to atmospheric and oceanographic modeling and simulation outputs. This is an active and productive group working on the nuts and bolts of ES data/model interoperability.

ESIP AQ - Earth Science Information Partners, AQ Cluster
The ESIP:Air_Quality_Cluster is an activity within Earth Science Information Partners (ESIP). It connects air quality data consumers with the providers of those data. The AQ Cluster aims to (1) bring people and ideas together, (2) facilitate the flow of earth science data to air quality management, and (3) provide a forum for individual AQ projects.

ESIP WS - Earth Science Information Partners, Web Services Cluster
The ESIP:Web_Services Cluster is an activity within Earth Science Information Partners (ESIP). It explores the emerging WS technologies and facilitates the WS connectivity within ESIP and the NASA DSWG projects.
NASA DSWG - NASA Data Systems Work Group, Infusion/Web Services
The NASA Earth Science Data System Working Groups (DSWGs) have been formed to address technology infusion, reuse, standards, and metrics as the ESE data systems evolve. They are composed of Research, Education and Applications Solutions Network (REASoN) members and other interested users and providers. R. Husar has been serving as the facilitator of the Web Services subgroup.

OGC GSN - Open Geospatial Consortium, GEOSS Services Network
The GEOSS Services Network (GSN) is a persistent network of publicly accessible OpenGIS services for demonstration and research regarding interoperability arrangements in GEOSS. GSN is the basis for demonstrations in the GEOSS Workshop series. DataFed, along with NCDC and UNIDATA, actively participated in the Beijing workshop.

OGC WCS - Open Geospatial Consortium, Web Coverage Services
The DataFed group is active in adopting and applying the OGC WCS specification to air quality data interoperability. A specific goal is to include Point Coverages in WCS. Such data arise from surface-based monitoring networks.

V. APPLICATION AREAS

Traditionally, air quality analysis was a slow, deliberate investigative process occurring months or years after the monitoring data had been collected. Satellites, real-time pollution detection and the World Wide Web have changed all that. Analysts can now observe air pollution events as they unfold. They can ‘congregate’ through the Internet in ad hoc virtual work-groups to share their observations and collectively create the insights needed to elucidate the observed phenomena. Air quality analysis has become much more agile and responsive to the needs of air quality managers, the public and the scientific community.
In April 1998, for example, a group of analysts keenly followed and documented on the Web, in real time, the transcontinental transport and impact of Asian dust from the Gobi desert on the air quality over the Western US (Husar, et al., 2001, http://capita.wustl.edu/Asia-FarEast). Soon after, in May 1998, another well-documented incursion of Central American forest fire smoke caused record PM2.5 concentrations over much of the Eastern US (e.g. Peppler, et al., 2000). It is envisioned that such a community-supported global aerosol information network would be open to broad international participation, would complement and synergize with other monitoring programs and field campaigns, and would support science as well as air quality and disaster management. In the future a web-based communication, cooperation, and coordination system would be useful to monitor the global aerosol pattern for unusual or extreme aerosol events. The system would alert and inform the interested communities so that the detection and analysis of such events is not left to serendipity.

VI. SUMMARY

Data reside in their respective home environment where they can mature. ‘Uprooted’ data in centralized databases are not easily updated, maintained or enriched.
Abstract (universal) query/retrieval facilitates integration and comparison along the key dimensions (space, time, parameter, method).
The open data query based on Web Services promotes the building of further value chains: Data Viewers, Data Integration Programs, Automatic Report Generators, etc.
The data access through the Proxy server protects the data providers and the data users from security breaches and excessive detail.

A. Software for the User
Data Catalog for finding and browsing the metadata of registered datasets.
Dataset Viewer/Editor for browsing specific datasets, linked to the Catalog.
Data Views: geo-spatial, time, trajectory etc. views prepared by the user.
Consoles, collections of views on a web page for monitoring multiple datasets.
Mini-Apps, small web-programs using chained web services (e.g. CATT, PLUME).

B. Software for the Developer
Registration software for adding distributed datasets to the data federation.
Web services for executing data access, processing and rendering tasks.
Web service chaining facility for composing custom-designed data views.

C. DataFed Technologies and Architecture
Form-based, semi-automatic, third-party wrapping of distributed data.
Web services (based on web standards) for the execution of specific tasks.
Service Oriented Architecture for building loosely coupled application programs.

D. Issues
Reliability: Distributed computing issues: network reliability, bandwidth, etc.
Chaining: Orchestrating distributed web services to act as a single application.
Links: Linking users to providers and other federations (e.g. OGC, OPeNDAP).
Tech focus: Should it focus on data access, building web tools or both?
Domain focus: Is the focus on air quality appropriate? Other domains?
Sustainability: Can DataFed be self-sustaining, supported by its community?

In summary, a user can take existing views and place them in her own web page by calling a URL that defines the view. The user does not need to know the intricacies of the web service programming language, only the parameters that she wishes to modify in the view. This aspect of DataFed is similar to the procedure for retrieving maps from standard OGC (Open Geospatial Consortium) servers. The preparation of data views is accomplished with the DataFed view editor tool. Views can be simple or complex, as shown in the current list of user-defined views page. Each illustrates a somewhat different aspect of the data or the DataFed services. List of VIEWS (April 2004)

ACKNOWLEDGMENT

REFERENCES
[1] R. B. Husar et al., “Asian dust events of April 1998,” J. Geophys. Res. Atmos., vol. 106, pp. 18317-18330, 2001.

Rudolf B. Husar was born in Martonos, former Yugoslavia. He studied at the University of Zagreb, Croatia, and obtained the degree of Dipl. Ing. at the Technische Hochschule, West Berlin, Germany. He earned his PhD in Mechanical Engineering at the University of Minnesota, Minneapolis, in 1971.

The currently available space-borne and surface aerosol monitoring can enable virtual communities of scientists and regulatory bodies to detect and follow such major aerosol events, whether resulting from fires, volcanoes, pollution, or dust storms.
It has also been shown that ad hoc collaboration of scientists is a practical way to share observations and to collectively generate the explanatory knowledge about such unpredictable events. The experience from this event could also help in more effectively planning disaster mitigation efforts, such as an early detection, warning, and analysis system.

All the datasets are cataloged, accessed, and distributed through the Datafed.net infrastructure. In most cases these providers themselves are simply passing data through as part of the value-adding data processing chain. Similarly, Datafed.net is designed to pass the data to other applications, such as the FASTNET project, and other value-adding activities. For some datasets, such as ASOS_STI, SURF_MET, VIEWS_CHEM and ATAD, the data are accessed through special arrangements between DataFed and the provider. In other cases, e.g. NEXTRAD, NAAPS, TOMS_AI, and HMS_Fire, the data are accessed from the provider's website without special arrangements with the provider.

The job of the mediator is to provide an answer to a user query (Ullman, 1997).
In the database theory sense, a mediator is a view of the data found in one or more sources.
Heterogeneous sources are wrapped by translation software that maps the local language to the global language.
Mediators (web services) obtain data from wrappers or other mediators and process it …