TIME AND SPACE COMPLEXITY


Time Complexity

The time complexity of a problem is the total number of steps a solution takes to solve it, expressed as a function of the size of the problem.

A general ordering of common growth rates:

O(1) < O(log n) < O(n) < O(n log n) < O(n^c) < O(c^n) < O(n!), where c > 1 is some constant.

Space Complexity

Space complexity is the amount of computer memory required during the program execution, as a function of the input size.

The difference between space complexity and time complexity is that space can be reused.

Polynomial space is not affected by determinism or nondeterminism: using only a modestly larger amount of space, a deterministic machine can simulate a nondeterministic one, whereas the known deterministic simulations of nondeterministic time increase the time exponentially. By Savitch's theorem, a nondeterministic TM using O(n) space can be converted into a deterministic TM using only O(n^2) space.
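To make "memory as a function of the input size" concrete, here is a minimal sketch contrasting two ways of reversing an array. The function names `reverse_in_place` and `reversed_copy` are illustrative choices, not part of the original notes. Both take O(n) time, but the first reuses the input storage and needs only O(1) extra space, while the second allocates O(n) extra space.

```c
#include <stddef.h>
#include <stdlib.h>

/* Reverses a[0..n-1] in place: O(n) time, O(1) extra space
   (one temporary variable, whatever the value of n). */
void reverse_in_place(int *a, size_t n)
{
    if (n < 2)
        return;
    for (size_t i = 0, j = n - 1; i < j; i++, j--) {
        int t = a[i];
        a[i] = a[j];
        a[j] = t;
    }
}

/* Returns a newly allocated reversed copy: O(n) time, O(n) extra space.
   The caller must free() the result. Returns NULL if n == 0 or if the
   allocation fails. */
int *reversed_copy(const int *a, size_t n)
{
    if (n == 0)
        return NULL;
    int *out = malloc(n * sizeof *out);
    if (out == NULL)
        return NULL;
    for (size_t i = 0; i < n; i++)
        out[i] = a[n - 1 - i];
    return out;
}
```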

Complexity: why bother?

Estimation/Prediction

When you write or run a program, you need to be able to predict its requirements.

Usual requirements

- execution time

- memory space

Quantities to estimate

- execution time → time complexity

- memory space → space complexity

It is pointless to run a program that requires:

- 6 TB of RAM on a desktop machine;

- 10,000 years to run.

You do not want to wait for an hour:

- for the result of your query on Google;

- when you are checking your bank account online;

- when you are opening a picture file on Photoshop;

- etc.

It is important to write efficient algorithms

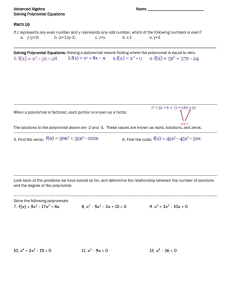

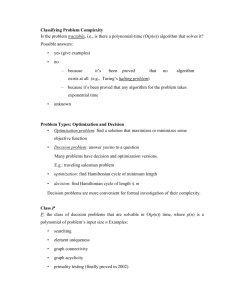

Complexity Classes

P - deterministic polynomial time

P-complete - hardest problems in P to solve on parallel computers

NP - nondeterministic polynomial time; YES answers checkable in polynomial time

Co-NP - complements of the problems in NP; NO answers checkable in polynomial time

NP-complete - hardest problems in NP

Co-NP-complete - hardest problems in Co-NP

NP-hard - at least as hard as NP-complete problems

NC - solvable efficiently on parallel computers

PSPACE - polynomial memory with unlimited time

PSPACE-complete - hardest problems in PSPACE

EXPTIME - exponential time

EXPSPACE - exponential memory with unlimited time

BQP - polynomial time on a quantum computer

Find the greatest common divisor (GCD) of two integers, m and n.

Program (in C):

    int gcd(int m, int n)
    /* precondition: m > 0 and n > 0. Let g = gcd(m,n). */
    {
        while (m > 0)
        {   /* invariant: gcd(m,n) = g */
            if (n > m)
            { int t = m; m = n; n = t; }   /* swap */
            /* m >= n > 0 */
            m -= n;
        }
        return n;
    }

At the start of each iteration of the loop, either n > m or m ≥ n.

(i) If m ≥ n, then m is replaced by m - n, which is smaller than the previous value of m and still non-negative.

(ii) If n > m, then m and n are exchanged, so that m > n, and the subtraction of case (i) is applied in the same iteration.

So at each iteration, max(m,n) either decreases or, when m = n, remains unchanged for one final iteration that sets m to 0.

This cannot go on forever because m and n are integers (this fact is important), and eventually a lower limit is reached, when m = 0 and n = g.

So the algorithm does terminate.

Good test values would include:

- special cases where m or n equals 1;

- m, or n, or both equal to small primes 2, 3, 5, …;

- products of two small primes such as p1×p2 and p3×p2;

- some larger values, but ones where you know the answers;

- swapped values, (x,y) and (y,x), because gcd(m,n) = gcd(n,m).

The objective in testing is to "exercise" all paths through the code, in different combinations.
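The test values suggested above can be collected into a small table-driven check. This is only a sketch: the helper name `gcd_failures` and the specific pairs are illustrative choices. The `gcd` function is repeated from the text so that the block is self-contained.

```c
#include <stddef.h>

/* Subtraction-based gcd from the text; precondition: m > 0 and n > 0. */
int gcd(int m, int n)
{
    while (m > 0) {
        if (n > m) { int t = m; m = n; n = t; }  /* swap so that m >= n */
        m -= n;
    }
    return n;
}

/* Hypothetical helper: runs every case in both argument orders and
   returns the number of failed checks (0 means all passed). */
int gcd_failures(void)
{
    static const struct { int m, n, expected; } cases[] = {
        { 1, 1, 1 }, { 1, 7, 1 },        /* m or n equals 1 */
        { 2, 3, 1 }, { 5, 5, 5 },        /* small primes */
        { 6, 15, 3 },                    /* 2*3 and 5*3 */
        { 35, 14, 7 },                   /* 5*7 and 2*7 */
        { 1071, 462, 21 },               /* larger values, known answer */
    };
    int failures = 0;
    for (size_t i = 0; i < sizeof cases / sizeof cases[0]; i++) {
        if (gcd(cases[i].m, cases[i].n) != cases[i].expected)
            failures++;
        /* swapped arguments must give the same result */
        if (gcd(cases[i].n, cases[i].m) != cases[i].expected)
            failures++;
    }
    return failures;
}
```

Calling `gcd_failures()` from a test driver and checking that it returns 0 exercises both the swap branch and the non-swap branch of the loop.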

We can also consider the best, average and worst cases.

Average vs. worst-case complexity

Definition (Worst-case complexity)

The worst-case complexity is the complexity of an algorithm when the input is the worst possible with respect to complexity.

Definition (Average complexity)

The average complexity is the complexity of an algorithm averaged over all the possible inputs (assuming a uniform distribution over the inputs).

We assume that the complexity of the algorithm is T(i) for an input i. The set of possible inputs of size n is denoted I_n. The worst-case complexity is then the maximum of T(i) over all i in I_n, and the average complexity is the sum of T(i) over all i in I_n divided by |I_n|.
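As a small illustration of the two definitions, the sketch below measures sequential search by counting comparisons. It assumes, purely for the example, that the input set consists of the n possible positions of a key that is present in an array of distinct values. The function names are hypothetical.

```c
#include <stddef.h>

/* T(i): number of comparisons a sequential search makes before it
   finds key in a[0..n-1] (n comparisons if the key is absent). */
size_t search_cost(const int *a, size_t n, int key)
{
    size_t comparisons = 0;
    for (size_t i = 0; i < n; i++) {
        comparisons++;
        if (a[i] == key)
            break;
    }
    return comparisons;
}

/* Worst case: the maximum cost over all keys present in the array. */
size_t worst_cost(const int *a, size_t n)
{
    size_t worst = 0;
    for (size_t i = 0; i < n; i++) {
        size_t c = search_cost(a, n, a[i]);
        if (c > worst)
            worst = c;
    }
    return worst;
}

/* Average case: the mean cost over all keys present in the array,
   each taken as equally likely. Returns 0.0 for an empty array. */
double average_cost(const int *a, size_t n)
{
    if (n == 0)
        return 0.0;
    size_t total = 0;
    for (size_t i = 0; i < n; i++)
        total += search_cost(a, n, a[i]);
    return (double)total / (double)n;
}
```

For n distinct values the worst case is n comparisons and the average is (n + 1) / 2, so both are linear in n even though the average is about half the worst case.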

Big-O Notation Analysis of Algorithms

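Big-O notation is a convenient way of describing the growth rate of a function and hence the time complexity of an algorithm.

Let n be the size of the input and f(n), g(n) be positive functions of n. Then f(n) = O(g(n)) if there exist constants c > 0 and n0 such that f(n) ≤ c·g(n) for all n ≥ n0.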

The time efficiency of almost all of the algorithms we have discussed can be characterized by

only a few growth rate functions:

I. O(1) - constant time

This means that the algorithm requires the same fixed number of steps regardless of the size of the task.

Examples (assuming a reasonable implementation of the task):

A. Push and Pop operations for a stack (containing n elements);

B. Insert and Remove operations for a queue.
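A minimal sketch of example A, assuming a fixed-capacity array-based stack (the type `Stack`, the constant `STACK_CAPACITY` and the function names are illustrative). Each operation touches one array slot and one counter, so its cost does not depend on how many elements the stack already holds.

```c
#include <stdbool.h>
#include <stddef.h>

#define STACK_CAPACITY 1024  /* arbitrary capacity chosen for the sketch */

typedef struct {
    int items[STACK_CAPACITY];
    size_t size;             /* number of elements currently stored */
} Stack;

/* Pushes value; returns false if the stack is full. O(1). */
bool stack_push(Stack *s, int value)
{
    if (s->size == STACK_CAPACITY)
        return false;
    s->items[s->size++] = value;
    return true;
}

/* Pops the top element into *out; returns false if the stack is empty. O(1). */
bool stack_pop(Stack *s, int *out)
{
    if (s->size == 0)
        return false;
    *out = s->items[--s->size];
    return true;
}
```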

II. O(n) - linear time

This means that the algorithm requires a number of steps proportional to the size of the task.

Examples (assuming a reasonable implementation of the task):

A. Traversal of a list (a linked list or an array) with n elements;

B. Finding the maximum or minimum element in a list, or sequential search in an unsorted

list of n elements;

C. Traversal of a tree with n nodes;

D. Calculating iteratively n-factorial; finding iteratively the nth Fibonacci number.
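Examples B and D can be sketched as single loops whose bodies run once per element or once per step. The function names are illustrative, and `fib_iterative` assumes the convention F(0) = 0, F(1) = 1.

```c
#include <stddef.h>

/* Returns the largest element of a[0..n-1]; precondition: n >= 1.
   The loop body runs n - 1 times, so the cost is O(n). */
int max_element(const int *a, size_t n)
{
    int best = a[0];
    for (size_t i = 1; i < n; i++) {
        if (a[i] > best)
            best = a[i];
    }
    return best;
}

/* Returns the nth Fibonacci number with F(0) = 0 and F(1) = 1.
   The loop body runs n times, so the cost is O(n).
   The result fits in 64 bits only for n <= 93. */
unsigned long long fib_iterative(unsigned n)
{
    unsigned long long a = 0, b = 1;   /* a = F(i), b = F(i + 1) */
    for (unsigned i = 0; i < n; i++) {
        unsigned long long t = a + b;
        a = b;
        b = t;
    }
    return a;
}
```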

III. O(n^2) - quadratic time

The number of operations is proportional to the size of the task squared.

Examples:

A. Some of the simpler sorting algorithms, for instance a selection sort of n elements;

B. Comparing two two-dimensional arrays of size n by n;

C. Finding duplicates in an unsorted list of n elements (implemented with two nested loops).
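Example C might look like the following sketch (the name `has_duplicate` is illustrative). In the worst case, when no duplicate exists, the inner comparison runs n(n - 1)/2 times, which is O(n^2).

```c
#include <stdbool.h>
#include <stddef.h>

/* Returns true if any value occurs more than once in a[0..n-1].
   Compares every pair (i, j) with i < j using two nested loops. */
bool has_duplicate(const int *a, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        for (size_t j = i + 1; j < n; j++) {
            if (a[i] == a[j])
                return true;
        }
    }
    return false;
}
```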

IV. O(log n) - logarithmic time

Examples:

A. Binary search in a sorted list of n elements;

B. Insert and Find operations for a balanced binary search tree with n nodes;

C. Insert and Remove operations for a heap with n nodes.
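Example A, binary search, can be sketched as below. The name `binary_search` and the convention of returning -1 when the key is absent are choices made for this sketch. Each iteration halves the remaining range, so at most about log2(n) + 1 iterations are needed.

```c
#include <stddef.h>

/* Searches the sorted array a[0..n-1] for key.
   Returns the index of a matching element, or -1 if key is absent. */
long binary_search(const int *a, size_t n, int key)
{
    size_t lo = 0, hi = n;              /* search the half-open range [lo, hi) */
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (a[mid] == key)
            return (long)mid;
        if (a[mid] < key)
            lo = mid + 1;               /* key can only be to the right of mid */
        else
            hi = mid;                   /* key can only be to the left of mid */
    }
    return -1;
}
```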

V. O(n log n) - "n log n " time

Examples:

A. More advanced sorting algorithms - quicksort (on average), mergesort
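A minimal top-down mergesort sketch follows (function names are illustrative, and this is one of several common formulations). The array is halved about log2(n) times, and each level of recursion does O(n) work in the merge step, which gives O(n log n) overall.

```c
#include <stdlib.h>
#include <string.h>

/* Sorts the half-open range a[lo..hi) using tmp as scratch space. */
static void merge_sort_rec(int *a, int *tmp, size_t lo, size_t hi)
{
    if (hi - lo < 2)
        return;                               /* 0 or 1 element: already sorted */
    size_t mid = lo + (hi - lo) / 2;
    merge_sort_rec(a, tmp, lo, mid);
    merge_sort_rec(a, tmp, mid, hi);

    /* Merge the two sorted halves into tmp, then copy back. */
    size_t i = lo, j = mid, k = lo;
    while (i < mid && j < hi)
        tmp[k++] = (a[j] < a[i]) ? a[j++] : a[i++];
    while (i < mid)
        tmp[k++] = a[i++];
    while (j < hi)
        tmp[k++] = a[j++];
    memcpy(a + lo, tmp + lo, (hi - lo) * sizeof *a);
}

/* Sorts a[0..n-1] in ascending order.
   Returns 0 on success, -1 if the scratch buffer cannot be allocated. */
int merge_sort(int *a, size_t n)
{
    if (n < 2)
        return 0;
    int *tmp = malloc(n * sizeof *tmp);
    if (tmp == NULL)
        return -1;
    merge_sort_rec(a, tmp, 0, n);
    free(tmp);
    return 0;
}
```

Note the trade-off with space complexity: this version uses O(n) extra memory for the scratch buffer.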

VI. O(a^n) (a > 1) - exponential time

Examples:

A. Recursive Fibonacci implementation

B. Towers of Hanoi

C. Generating all permutations of n symbols (there are n! of them, which grows even faster than a^n)
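To see the exponential growth in examples A and B directly, the sketch below counts the work done rather than timing it. The counters and function names are additions made for this illustration. The naive recursive Fibonacci makes 2·F(n+1) - 1 calls, and Towers of Hanoi makes 2^n - 1 moves.

```c
/* Naive recursive Fibonacci with F(0) = 0 and F(1) = 1.
   *calls is incremented once per invocation so the caller can
   observe how many calls were made in total. */
unsigned long long fib_recursive(unsigned n, unsigned long long *calls)
{
    (*calls)++;
    if (n < 2)
        return n;
    return fib_recursive(n - 1, calls) + fib_recursive(n - 2, calls);
}

/* Moves n disks from peg 'from' to peg 'to' using peg 'via',
   counting each single-disk move instead of printing it. */
static void hanoi(unsigned n, int from, int to, int via,
                  unsigned long long *moves)
{
    if (n == 0)
        return;
    hanoi(n - 1, from, via, to, moves);   /* clear the n - 1 smaller disks */
    (*moves)++;                           /* move disk n from 'from' to 'to' */
    hanoi(n - 1, via, to, from, moves);   /* put the smaller disks back on top */
}

/* Returns the number of moves needed for n disks (2^n - 1). */
unsigned long long hanoi_move_count(unsigned n)
{
    unsigned long long moves = 0;
    hanoi(n, 0, 2, 1, &moves);
    return moves;
}
```

Adding one disk doubles the number of moves (plus one), which is why even modest values of n become impractical.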

The best time in the above list is obviously constant time, and the worst is exponential time which, as we have seen, quickly overwhelms the fastest computers even for relatively small n.

Polynomial growth (linear, quadratic, cubic, etc.) is considered manageable as compared to

exponential growth.

Order of asymptotic behavior of the functions from the above list:

Using the "<" sign informally, we can say that

O(1) < O(log n) < O(n) < O(n log n) < O(n^2) < O(n^3) < O(a^n)

A word about O(log n) growth:

As we know from the Change of Base Theorem, for any a, b > 0 with a, b ≠ 1,

log_b n = log_a n / log_a b

Therefore,

log_a n = C log_b n

where C is a constant equal to log_a b.

Since functions that differ only by a constant factor have the same order of growth, O(log_2 n) is the same as O(log n).

Therefore, when we talk about logarithmic growth, the base of the logarithm is not important, and we

can say simply O(log n).
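As a worked instance of the constant factor, taking bases 2 and 10 (an arbitrary pair chosen for illustration):

```latex
\log_2 n \;=\; \log_2 10 \cdot \log_{10} n \;\approx\; 3.32\,\log_{10} n,
\qquad\text{e.g. } \log_2 1000 \approx 3.32 \times 3 \approx 9.97 .
```

The factor 3.32 does not depend on n, so it disappears inside the O(...) notation.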

A word about Big-O when a function is the sum of several terms:

If a function (which describes the order of growth of an algorithm) is a sum of several terms, its order of growth is determined by the fastest growing term. In particular, if we have a polynomial

p(n) = a_k n^k + a_{k-1} n^{k-1} + … + a_1 n + a_0

its growth is of the order n^k:

p(n) = O(n^k)

Example: