advertisement

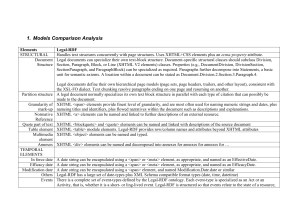

Conversational browser for accessing VoiceXML-based IVR services via multi-modal interactions on mobile devices

JIEUN PARK, JIEUN KIM, JUNSUK PARK, DONGWON HAN
Computer & Software Technology Lab.
Electronics and Telecommunications Research Institute
161 Kajong-Dong, Yusong-Gu, Taejon, 305-350
KOREA

Abstract: - Users can access VoiceXML-based IVR (Interactive Voice Response) systems from mobile devices such as smart phones at any time and in any place. Even though these devices have small screens, users have to interact with the services through the voice-only modality. This uni-modality causes some fundamental problems: 1) Users cannot know which service items can be selected before the TTS (Text-to-Speech) engine reads them. 2) Users must constantly take care not to forget the items they can select and the item they intend to select. 3) Users cannot immediately confirm whether their speech input is valid, so they always have to wait for a new question from the server in order to confirm it. Because of this inconvenience, users generally prefer to connect directly to a human operator. In this paper, we propose a new conversational browser that lets a user access existing VoiceXML-based IVR services via multi-modal interactions on a small screen. The conversational browser fetches voice-only web pages from web servers, converts them into multi-modal web pages by using a multi-modal markup language, and interprets the converted pages.

Key-Words: - VoiceXML, Multi-modal interaction, XHTML+Voice, WWW, Internet, Interactive voice response services, mobile devices

1 Introduction

VoiceXML allows Web developers to use their existing Java, XML, and Web development skills to design and implement IVR (Interactive Voice Response) services. They no longer have to learn a proprietary IVR programming language [1].
Many companies have rewritten their proprietary IVR systems in VoiceXML. In general, voice-only web services have simpler flows and more specific domains than existing visual web services, because they depend on the voice-only modality. Users can access voice-only web services from mobile devices such as smart phones at any time and in any place. Even though these devices have small screens, users have to interact with the services through the voice-only modality. This uni-modality causes some fundamental problems:

1) Users cannot know which service items can be selected before the TTS (Text-to-Speech) engine reads them.
2) Users must constantly take care not to forget the items they can select and the item they intend to select.
3) Users cannot immediately confirm whether their speech input is valid, so they always have to wait for a new question from the server and reply to it in order to confirm the input.

Because of this inconvenience, users generally prefer to connect directly to a human operator. In that case, the original purpose of IVR services, automation, may not be achieved.

In this paper, we propose a new conversational browser that lets a user access existing VoiceXML-based IVR services via multi-modal interactions on a small screen. Users can both see and hear the items they can select, and can confirm the result of speech input through the displayed text instead of beginning a new dialogue with the server for confirmation.

The paper is organized as follows. Section 2 describes the concept of multi-modal access to VoiceXML-based IVR services. Section 3 describes the conversational browser architecture and its overall execution flow. Sections 4 and 5 present related work and conclusions.

2 Multi-modal access to VoiceXML-based IVR services

2.1 VoiceXML-based IVR services

Fig. 1 illustrates an architectural model of VoiceXML-based IVR systems [1].
A conversation between a user and a system begins when a telephone call is initiated. Once a call is connected over the phone network, the VoiceXML Infrastructure acts as a “browser” and begins making a series of HTTP requests to a traditional Web server for VoiceXML, audio, grammar, and ECMAScript documents. The web server responds with these simple documents over HTTP.

[Fig. 1: The architecture model of VoiceXML-based IVR services. Components shown: End User, Phone Network, VoiceXML Infrastructure, IP Network (HTTP request / VoiceXML response), Web Servers, Backend Systems.]

Once the documents are retrieved, the actual VoiceXML “interpreter” within the VoiceXML Infrastructure executes the IVR application and engages in a conversation with the end user. All software and resources necessary to “execute” a particular IVR service, such as voice recognition, computer-generated text-to-speech, and ECMAScript execution, are embedded within the VoiceXML Infrastructure.

The following is a simple IVR service example [5] for ordering pizzas via a conversation between a human and a computer.

Computer: How many pizzas would you like?
Human: one
Computer: What size of pizza would you like? Say one of small, medium, or large.
Human: medium
Computer: Would you like extra cheese? Say one of yes or no.
Human: yes.
Computer: What vegetable toppings would you like? Say one of Olives, Mushrooms, Onions, or Peppers.
Human: Um…help.
Computer: What vegetable toppings would you like? Say one of Olives, Mushrooms, Onions, or Peppers.
Human: Mushrooms
Computer: What meat toppings would you like? Say one of Bacon, Chicken, Ham, Meatball, Sausage, or Pepperoni.
Human: Help.
Computer: Say one of Bacon, Chicken, Ham, Meatball, Sausage, or Pepperoni.
Human: Sausage
Computer: Thank you for your order.

Example 1. An IVR service example

In the above example, users always have to answer the questions in the order predefined by the service provider and remember which items they can select.
This voice-only interaction style is inefficient, because many users already access these services from mobile phones with small screens. The small screen lets a user both see and hear which items can be selected. It also lets the user select items in any order, as long as the selected item does not depend on the others. In the following section, we describe how to access the service of Example 1 via multi-modal user interactions.

2.2 Access to IVR services via multi-modal user interactions

Fig. 2 shows the same service as Example 1 via multi-modal user interactions. If the user clicks the textbox below the label “Quantity”, he hears “How many pizza….”, as in the first dialogue of Example 1. At this point, the user can reply via voice or text input. If the user says “one”, the textbox shows the character “1”. If the user clicks the label “Size”, he hears “What size of Pizza …”, as in the second dialogue of Example 1. At this point, the user can select one of the radio buttons or say one of “small”, “medium”, or “large”. If the user says “small”, the radio button “small 12” is selected. After answering all of these questions, the user clicks the button “Submit Pizza Order” to send the data to the server.

[Fig. 2: A multi-modal web page. Annotations: clicking the textbox plays “1. How many Pizza would you like?”; clicking the labels plays “2. What size of Pizza would you like?”, “3. What toppings would you like?”, “4. What vegetable toppings would ….”, and “5. What meat toppings would like….”]

By supplementing the voice modality with a visual modality, users can know in advance which items can be selected and can choose their preferred modality according to circumstances. Users also do not need to answer additional questions to validate their speech input, because they can see the recognition result immediately as displayed text.

There has been much previous research in the field of multi-modal browsers [6, 7, 8, 9]. This research focuses on adding another modality (mainly voice) to an existing visual browser. We think, however, that adding the voice modality to visual-only web applications has less effect than adding the visual modality to voice-only web applications, that is, to VoiceXML-based IVR services. For visual-only applications, whether the application supports the voice modality is not an indispensable issue. For voice-only applications, whether the application supports the visual modality is critical to users who are already accustomed to existing visual environments.

To describe multi-modality we use the XHTML+Voice (X+V) markup language [4], which was proposed to the W3C by IBM and Opera Software. X+V extends XHTML Basic with a subset of VoiceXML 2.0 [10], XML-Events, and a small extension module. In X+V, the modularized VoiceXML does not include the “non-local transfer” elements (“exit”, “goto”, “link”, “script”, and “submit”), the “menu” elements (“menu”, “choice”, “enumerate”, and “object”), the “root” elements (“vxml” and “meta”), or the “telephone” elements (“transfer” and “disconnect”). The small extension module includes the important “sync” and “cancel” elements. The “sync” element supports synchronization of data entered via either speech or visual input. In Fig. 2, a “Quantity” value entered via speech is displayed in the visual “textbox” element below the label “Quantity” by using a “sync” element. The “cancel” element allows a user to stop a running speech dialogue when he does not want voice interaction.

The structure of an XHTML+Voice application is shown in Fig. 3 [5].

[Fig. 3: The components of an XHTML+Voice application: Namespace Declaration, Visual Part (XHTML), Voice Part (VXML), and Processing Part (Event Type, Event Handler).]

A basic XHTML+Voice multi-modal application consists of a Namespace Declaration, a Visual Part, a Voice Part, and a Processing Part. The Namespace Declaration of a typical XHTML+Voice application is written in XHTML, with additional declarations for VoiceXML and XML-Events. The Visual Part is the XHTML code that displays the various form elements on the device’s screen, if one is available. This can be ordinary XHTML code and may include check boxes and other items found in a typical form. The Voice Part is the section of code that prompts the user for a desired field within a form. This VoiceXML code uses an external grammar to define the possible field choices. If there are many choices, or if a combination of choices is required, the external grammar can be used to handle the valid combinations. The Processing Part contains the code that performs the required instructions for each of the various events [5].

3 The Conversational Browser

3.1 Conceptual Model

The conversational browser transforms voice-only web pages into multi-modal web pages that include visual as well as voice elements, in order to support multi-modal user interactions. With our conversational browser, a mobile user with a small screen can access existing VoiceXML-based IVR applications via voice as well as visual interactions. Adding visual interaction to voice-only applications is more convenient for users than the reverse case of adding voice interaction to visual-only applications. Fig. 4 describes the conceptual model of our conversational browser.
[Fig. 4: The conceptual model of the conversational browser: converting VoiceXML into XHTML+Voice, with XHTML, VoiceXML, Event, and Namespace parts.]

The conversational browser fetches the VoiceXML pages that the user wants to access from web servers and analyzes which elements in those pages can be visualized. It then converts the original VoiceXML pages into XHTML+Voice pages with the same scenario. The conversion process is divided into four parts: a VoiceXML part, an XHTML part, an Event part, and a Namespace part.

In the VoiceXML part, the conversational browser transforms the elements of the original VoiceXML pages into the modularized VoiceXML elements allowed in XHTML+Voice. In the XHTML part, it adds new XHTML elements that visualize some of the VoiceXML elements. For example, a “prompt” element in VoiceXML gives the user information by speaking it through the TTS engine. This “prompt” element can be changed into a “label” element in XHTML+Voice and shown as a text string on the screen. The “field” element in VoiceXML is an input item to be gathered from the user. It can be changed into an “input” element in XHTML+Voice and shown as a text box on the screen. The Event part combines visual and voice elements so that inputs generated from different modalities are synchronized. The Namespace part makes variables defined in the original VoiceXML pages usable in the newly generated XHTML elements. The conversion produces XHTML+Voice pages that include voice as well as visual elements. Finally, the conversational browser executes the XHTML+Voice pages.

3.2 Architecture

Fig. 5 describes the architecture of the conversational browser. The conversational browser consists of three execution modules: a VoiceXML Parser, a VoiceXML-to-X+V Converter, and an XHTML+Voice Interpreter. The conversational browser also needs external systems for voice interaction, namely a text-to-speech (TTS) engine and a speech recognizer, and a JavaScript engine for executing scripts. On mobile devices, the TTS engine and the speech recognizer have to be located on other platforms.

[Fig. 5: The architecture of the conversational browser. Components shown: VoiceXML input; VoiceXML Parser; VoiceXML DOM Tree; VoiceXML-to-X+V Converter; Modularized VoiceXML DOM Tree; Created XHTML+Event DOM Tree; XHTML+Voice Interpreter (VoiceXML Form Interpreter, Sync, X+V Viewer, Event Manager); Text To Speech/Speech Recognizer; JavaScript Engine.]

3.2.1 VoiceXML Parser

The VoiceXML Parser generates a DOM tree by parsing an input VoiceXML page. The generated DOM tree is passed to the VoiceXML-to-X+V Converter, which transforms the voice-only application into a multi-modal application.

3.2.2 VoiceXML-to-X+V Converter

The VoiceXML-to-X+V Converter creates an XHTML+Event DOM tree by referencing the visualizable elements of the VoiceXML DOM tree, and deletes or edits some elements in the original VoiceXML DOM tree. Fig. 6 shows an outline of the converter’s execution flow. First, the converter creates a new XHTML+Event DOM tree that contains only a head element and a body element. The converter then applies the following steps until all elements in the VoiceXML DOM tree have been visited.

A “block” element contains executable content, and there are two cases: the block contains PCDATA, or it contains a “submit” element. For PCDATA, the original meaning is that the TTS engine reads the content aloud. The converter therefore adds <P> elements to the created DOM tree so that the content is shown as text. For “submit”, the meaning is to submit values to a specific server. The converter adds an <input> node to the created tree and deletes the submit node from the original VoiceXML tree. The submit node is deleted because the host language for multi-modal descriptions is XHTML, not VoiceXML, so the function of “submit” is defined in XHTML instead.
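The block-handling rule described above can be illustrated with a minimal Python sketch built on the standard xml.etree.ElementTree module. It is not the converter described in this paper. The function names new_xhtml_tree and convert_block are hypothetical, the input is assumed to carry no XML namespace, the button label "Submit" is an assumption, and returning the submit target URLs to the caller is also an assumption, since the text does not say how the target is attached to the XHTML form.

```python
import xml.etree.ElementTree as ET


def new_xhtml_tree():
    """Create the initial XHTML+Event tree: a root with only head and body."""
    html = ET.Element("html")
    ET.SubElement(html, "head")
    body = ET.SubElement(html, "body")
    return html, body


def convert_block(block, body):
    """Apply the two block rules to one VoiceXML <block> element.

    Returns the 'next' URLs of the removed <submit> elements (hypothetical
    design choice; the caller decides how to wire them into the XHTML form).
    """
    # Rule 1: character data that the TTS engine would read becomes a <p>.
    pieces = [block.text] + [child.tail for child in block]
    text = " ".join(p.strip() for p in pieces if p and p.strip())
    if text:
        ET.SubElement(body, "p").text = text

    # Rule 2: each <submit> becomes a visual submit button in the new tree
    # and is deleted from the VoiceXML tree.
    targets = []
    for submit in list(block.findall("submit")):
        ET.SubElement(body, "input", {"type": "submit", "value": "Submit"})
        targets.append(submit.get("next"))
        block.remove(submit)
    return targets


if __name__ == "__main__":
    form = ET.fromstring(
        '<form><block>Thank you for your order.'
        '<submit next="http://www.drink.example.com/drink2.asp"/></block></form>'
    )
    html, body = new_xhtml_tree()
    print(convert_block(form.find("block"), body))
    print(ET.tostring(html, encoding="unicode"))
```

The sketch mutates the VoiceXML tree in place, mirroring the description that the original tree is edited into the modularized tree while a separate XHTML+Event tree is built alongside it.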
A “field” element specifies an input item to be gathered from the user, so the converter has to visualize this item to allow multi-modal input. The converter adds a new form node to the generated DOM tree and adds a new “label” node that tells the user what input the application wants. The converter also adds a new “input” node to the created DOM tree for gathering data from the user and connects this visual element with the field element in VoiceXML. The connection between a visual element and a voice element is needed to synchronize both modalities. If the user replies by speaking, the recognition result has to be shown in the text field. In the reverse case, the visual input also has to be passed to the VoiceXML Form Interpreter.

For a “menu” element, the converter adds a new “label” node to the created DOM tree that explains what the menu means. For a “choice” element, the converter adds the necessary number of link nodes to the created DOM tree. For a “grammar” element, the converter adds a new “input” node to the created DOM tree so that the user can select the items he wants, and defines an event and its handler.

[Fig. 6: The VoiceXML-to-X+V converter’s execution flow. Flowchart nodes: Create new DOM tree; Node in VoiceXML?; Block? (pcdata?, submit?); Field?; Menu?; Prompt?; Choice?; Grammar. Actions: Add form node in X+V; Add label node in X+V (prompt to label); Add P node in X+V; Add input node in X+V; Add link node in X+V; Define Event & Handler; Delete Submit in VoiceXML.]

3.2.3 XHTML+Voice Interpreter

The XHTML+Voice (X+V) Interpreter consists of three parts: a VoiceXML Form Interpreter, an X+V Viewer, and an Event Manager. The VoiceXML Form Interpreter executes the modularized VoiceXML DOM tree and calls a TTS engine or a Speech Recognizer that is distributed in a networked environment. The X+V Viewer shows the visual items on the screen and passes the user’s input events to the Event Manager. The Event Manager calls the handler of the user’s input event (focus, click, etc.) and synchronizes data entered via either speech or visual input. The X+V Interpreter also calls the JavaScript Engine when the VoiceXML pages include scripts.

3.3 An Example

This section describes the processing steps of the conversational browser using a simple example. If a user accesses a VoiceXML-based service (Example 2) on his mobile device, he hears the system say “Would you like coffee, tea, milk or nothing?” and then answers with one of the items.

    <vxml xmlns… >
      <form>
        <field name="drink">
          <prompt> Would you like coffee, tea, milk, or nothing? </prompt>
          <grammar type="application/srgs+xml" root="r2" version="1.0">
            <rule id="r2" scope="public">
              <one-of>
                <item>coffee</item>
                <item>tea</item>
                <item>milk</item>
                <item>nothing</item>
              </one-of>
            </rule>
          </grammar>
        </field>
        <block>
          <submit next="http://www.drink.example.com/drink2.asp"/>
        </block>
      </form>
    </vxml>

Example 2. A simple VoiceXML file

The conversational browser processes this service as follows. First, the VoiceXML Parser generates a VoiceXML DOM tree by parsing Example 2, as shown in Fig. 7-a. The VoiceXML-to-X+V Converter then takes the DOM tree of Fig. 7-a as input, changes it into the modularized DOM tree of Fig. 7-b, and generates the new XHTML+Event DOM tree of Fig. 7-c. In this case, “field: drink” in Fig. 7-b is synchronized with the “input:radio” nodes in Fig. 7-c. The XHTML+Voice Interpreter executes the two DOM trees of Fig. 7-b and Fig. 7-c, producing the page shown in Fig. 8.

[Fig. 7: Converting VoiceXML into XHTML+Voice.]

[Fig. 8: XHTML+Voice web page. Annotation: Event: Click “Would you like…”]

If the user clicks the label “Would you…”, he hears the TTS engine say “Would you…”. At this point, the user can say or click whichever item he likes. If the user clicks the “Submit” button, the conversational browser sends the input value to the server. If the user clicks the “Cancel” button, the VoiceXML interpreter is stopped. The “Cancel” button is used when the user does not want the voice modality.

4 Related Works

Our research is related to two separate domains: automatic conversion of markup languages for web-based applications, and multi-modal web browsers.

Until recently, research on converting markup languages has mainly focused on HTML to VoiceXML. IBM developed a commercial product, WebSphere Transcoding Publisher, that includes an HTML-to-VoiceXML transcoder [11]. Frankie James proposed a framework for developing a set of guidelines for designing audio interfaces to HTML, called the Auditory HTML access system [12]. Stuart Goose et al. proposed an approach for converting HTML to VoxML, which is very similar to VoiceXML [3]. Gopal Gupta et al. proposed an approach for translating HTML to VoiceXML that is based on denotational semantics and logic programming [13]. This research considered only uni-modality, either visual-only or voice-only. The aim of these previous works is to reuse the abundant HTML web content through automatic conversion mechanisms.

Recent research on multi-modal web browsers is based on Speech Application Language Tags (SALT) [14] or XHTML+Voice [4]. SALT tags are added to an HTML document so that users with a special browser can interact with the Web using graphics and voice at the same time. In XHTML+Voice, existing VoiceXML tags are integrated into XHTML. In this paper, we use the XHTML+Voice markup language to describe multi-modal interactions, because our goal is to access existing VoiceXML-based IVR applications via multi-modal interactions.

5 Conclusions

Even though many people use mobile devices with small screens, they have always accessed VoiceXML-based IVR applications through the voice-only modality. With uni-modality, and particularly the voice-only modality, most users tend to bypass the IVR system and connect directly to a human operator. The reason is that existing IVR systems burden users with inconveniences such as taking care not to forget the items they can select, or repeatedly confirming whether their speech input is valid.

To resolve these problems on mobile devices, we proposed a new conversational browser that lets the user access existing VoiceXML-based IVR applications via multi-modal interactions. The conversational browser fetches existing VoiceXML-based IVR applications and converts them into multi-modal applications based on XHTML+Voice. With this conversational browser, users can choose which modality to use according to their circumstances, use the visual and voice modalities at the same time, and know in advance which items can be selected without waiting for the TTS engine to speak them.

Until recently, much research on multi-modality has focused on adding the voice modality to visual web applications. We think the effect of adding the voice modality is weaker than that of the reverse case, adding the visual modality to voice-only IVR applications.

References:

[1] Chetan Sharma, Jeff Kunins, VoiceXML: Strategies and Techniques for Effective Voice Application Development with VoiceXML2.0, John Wiley & Sons, Inc, 2002.
[2] Zhuyan Shao, Robert Capra and Manuel A. Perez-Quinones, “Transcoding HTML to VoiceXML Using Annotations,” Proceedings of ICTAI 2003.
[3] Goose S, Newman M, Schmidt C and Hue L, “Enhancing web accessibility via the Vox Portal and a web-hosted dynamic HTML to VoxML converter”, WWW9/Computer Networks, 33(1-6):583-592, 2000.
[4] Chris Cross, Jonny Axelsson, Gerald McCobb, T.V. Raman, and Les Wilson, “XHTML+Voice Profile 1.1”, http://www-3.ibm.com.
[5] IBM Pervasive Computing, “Developing Multimodal Applications using XHTML+Voice”, January 2003.
[6] X. Huang, A., et al. “MiPAD: a multimodal interaction prototype”, International Conference on Acoustics, Signal and Speech Processing, vol. 1, pp.7-11, May 2001.
[7] Georg Niklfeld, et al. “Multimodal Interface Architecture for Mobile Data Services”, Proceedings of TCMC2001 Workshop on Wearable Computing, Graz, 2001.
[8] Zouheir Trabelsi, et al. “Commerce and Businesses: A voice and ink XML multimodal architecture for mobile e-commerce systems”, Proceedings of the 2nd international workshop on Mobile commerce, September 2002.
[9] Alpana Tiwari, et al. “Conversational Multi-modal Browser: An Integrated Multi-modal Browser and Dialog Manager”, 2003 Symposium on Applications and the Internet, Jan. 2003, pp.27-31.
[10] Scott McGlashan, et al. “Voice Extensible Markup Language(VoiceXML) Version 2.0”, http://www.w3c.org/TR/2003.
[11] Nichelle Hopson, “WebSphere Transcoding Publisher:HTML-to-VoiceXML Transcoder”, http://www7b.boulder.ibm.com/wsdd/library/techarticles/0201_hopson/0201_hopson.html, January 2002.
[12] James F., “Presenting HTML Structure in Audio: User Satisfaction with Audio Hyper-text”, Proceedings of the International Conference on Auditory Display, pp. 97-103, November 1997.
[13] G. Gupta, O. El Khatib, M. F. Noamany, H. Guo, “Building the Tower of Babel: Converting XML Documents to VoiceXML for Accessibility”, Proceedings of the 7th International Conference on Computers helping people with special needs, pp. 267-272.
[14] Speech Application Tags 1.0 Spec., http://www.saltforum.org/devforum/spec/SALT.1.0.a.asp, July 2002.