
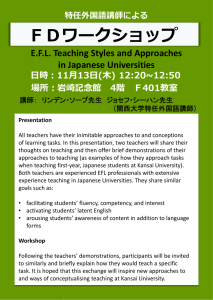


Vol. 6. No. 2 R-8 September 2002

A Focus on Language Test Development: Expanding the Language Proficiency Construct Across a Variety of Tests (Technical Report #21)
Thom Hudson & J. D. Brown (Eds.) (2001)
Honolulu: University of Hawai'i, Second Language Teaching & Curriculum Center.
Pp. xi + 230
ISBN 0-8248-2351-6 (paper)
US $25.00

Eight research studies highlighting alternative approaches to language assessment are offered in this text. Systematically illustrating the entire test development process, these studies underscore some of the difficulties in designing and implementing tests. One strength of the book is that it offers a close-up view of how some non-standard tests were developed. Another is that it provides enough detail for readers to reflect carefully on the design problems inherent in each test study. If you are looking for perfect model studies which all yield significant and meaningful results, this is not the book for you. However, if you are interested in innovative studies of alternative forms of language assessment, this book has much to offer.

1. Evaluating Nonverbal Behavior

After mentioning some of the ways nonverbal behavior affects communication, Nicholas Jungheim described a study assessing the correlation between oral proficiency in English and nonverbal ability. Nonverbal ability, in the context of this study, was operationalized as the extent to which the head movements, hand gestures, and gaze shifts of Japanese EFL students differed from those of a native-speaker EFL teacher population. Linguistic proficiency was measured by the TOEFL and oral English ability by the ETS SPEAK. The results suggest that nonverbal ability is a separate construct from language proficiency as measured by TOEFL and SPEAK: the correlations between the 3-point nonverbal ability scale used in this study and the language test scores were too low to be meaningful.

2. Pragmatic Picture Response Tests

Highlighting the problems with traditional discourse completion tasks, Sayoko Yamashita next described how a picture response test was developed to explore ways that apology strategies differ between (1) native English speakers speaking English (NSE), (2) native Japanese speaking Japanese (NSJ), (3) Americans speaking Japanese (JSL), and (4) Japanese speaking English (EFL). Yamashita found that expressions of dismay were used less frequently by NSEs than by the other groups. The NSE group also made offers of repair when apologizing more frequently than the other groups. Since perceptions of politeness partly depend on which apology act strategies are employed, these findings were meaningful. Yamashita concluded by mentioning ways picture response tests could be used to assess speaking, writing, listening, or cross-cultural proficiency skills.

3. Assessing Cross-cultural Pragmatic Performance

After noting some of the limitations of self-assessment tests in general, Thom Hudson detailed how a three-phase pragmatic performance assessment was developed. In the first phase, 25 Japanese ESL students rated how they thought they would respond to four roleplays using a 5-point scale of appropriacy. In the next phase, three native speakers rated the students' actual performance on the same scale. In the final phase, students viewed their performance on video and rated themselves again. Surprisingly, the three rating methods showed little variance. Hudson suggested the test population may have been too advanced and questioned whether self-assessment was the most appropriate way to evaluate pragmatic proficiency. The need for more research on both holistic and analytic self-assessment scales was emphasized at the end of this article.

4. Measuring L2 Vocabulary

After mentioning the pros and cons of the leading vocabulary assessment methods, Yoshimitsu Kudo outlined a pilot study to gauge the vocabulary size of Japanese EFL students.
A twenty-minute test consisting of 18 definition-matching style questions for five different vocabulary levels was administered. To reduce the task load and enhance speed, a translation format was selected. Kudo obtained a low reliability for the pilot test and suspected that English neologisms may have affected his data. Kudo then revised the test by filtering out English loan words, and the reliability coefficients rose for four of the five tests. Kudo concluded by cautioning vocabulary test developers to consider the impact of foreign loan words. English loan words now permeate many languages.

5. Assessing Collocational Knowledge

After providing a theoretical discussion of what collocations are, and how they differ from idioms and formulaic speech, William Bonk described a study to ascertain the relation between collocational knowledge and general language proficiency. He administered a 50-item collocation test and an abridged TOEFL to 98 ESL students at the University of Hawaii. Bonk found a correlation of .73 between collocational knowledge and general language proficiency and emphasized the need for further research on collocational knowledge.

6. Revising Cloze Tests

Next J. D. Brown, Amy Yamashiro, and Ethel Ogane described three approaches to cloze test development. To see how each method differed, they conducted a two-phase research study. In the first phase, cloze tests with different deletion patterns were administered to 193 Japanese university students. In the second phase, two revised versions of this test were created: one with an exact-answer scoring system and the other with a broader acceptable-answer scoring system. The researchers found the acceptable-answer scoring system superior in most respects to the exact-answer system, and concluded with some practical advice for cloze test design.

7. Task-based EAP Assessment

Highlighting some of the ongoing controversies regarding performance assessment, John Norris then detailed how an EAP test at the University of Hawaii was developed. Three diverse representatives of the university community were recruited to identify criteria for an EAP performance test. An analysis of their notes revealed significant variation in ideas concerning benchmark performance criteria and in overall detail. The need for team members to be able to negotiate the final test criteria effectively was underscored. Norris concluded by listing ways to help criteria identification teams work more effectively, and mentioned some topics for further research in performance assessment.

8. Criterion-referenced Tests

In the final chapter J. D. Brown outlined how he developed a set of criterion-referenced testing materials for a popular textbook series. After a large number of test items had been created and piloted, those that seemed problematic were revised or weeded out. A semester later, different revised forms of the test were administered. Items that did not discriminate well or lacked validity or reliability were again discarded. The final test was sensitive to the material covered in the text series, valid for the populations tested, and fairly dependable. Despite this, Brown cautioned against basing high-stakes educational decisions on any single test score.

This volume will appeal to two groups of people: those with some understanding of research design principles who wish to reflect critically on how those principles can be carried out in actual studies, and those with an interest in non-standard types of language assessment.