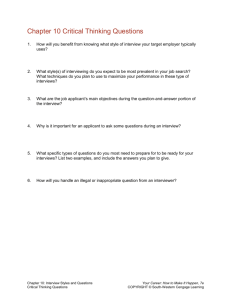

Chapter IV
