MSFD indicator structure and common elements for information flow


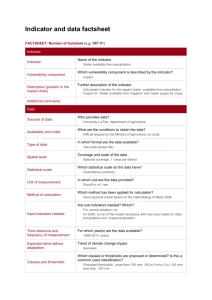

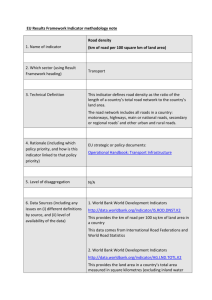

DIKE_11-2015-05

Marine Strategy Framework Directive (MSFD)
Common Implementation Strategy

11th meeting of the Working Group on Data, Information and Knowledge Exchange (WG DIKE) with Working Group on Good Environmental Status (WG GES)

0900-1700: 19 June 2015
DG Environment, Room C, Avenue de Beaulieu 5, B-1160 Brussels

Agenda item: 5
Document: DIKE_11-2015-05
Title: Indicator structure and common elements for information flow
Prepared by: ETC/ICM for EEA
Date prepared: 08/06/2015

Background: This document provides an overview of the indicator-based assessment structures in use, or under development, in two of the Regional Sea Conventions (RSCs), OSPAR and HELCOM, and at the European level (EEA). A short analysis demonstrates the commonality between these approaches and highlights the elements that may need further work before this information can be mapped to an agreed common set of indicator meta-information that could be exploited through WISE-Marine. Ultimately, this would help streamline the provision of information from Member States (MS) and RSCs to the MSFD and aid regional coherence, avoiding the need for parallel processes. This paper should provide steps towards convergence on the common elements of an indicator assessment, and provide guidance to MS/RSCs that are yet to formulate their approach.

WG DIKE is invited to:
a. Review and comment on the overall analysis and approach;
b. Review and agree the common schema as presented in Table 7.

1 Summary

It is expected that assessments developed in the context of the Regional Sea Conventions will be used in support of the 2018 MSFD Art. 8 assessments, including through the use of commonly agreed indicators. For the two RSCs developing their next assessments (HELCOM's HOLAS II and OSPAR's Intermediate Assessment 2017), the indicator assessment approach is seen as a direct contribution to MS needs in 2018.
An analysis of the two RSC approaches and the EEA approach to indicator assessment (Figure 1) suggests that there is an underlying common structure, and that it is possible to agree a set of core information that could be mapped between the regional and European approaches and used in the provision of information that feeds upwards from Member States to RSCs to the European level. A draft of a common schema, which builds on these existing approaches, is presented. This draft needs to be developed in agreement with WG DIKE; the result will be an MSFD indicator specification.

Figure 1 Indicator common categorization and number of information fields in each structure

There are, however, some differences between the approaches, and each of the structures could be modified to provide more explicit information or improved compatibility with MSFD information needs. If this approach is seen as acceptable, agreement would be needed on which of the common categories are required and which are optional, and on which specific fields within each category would be appropriate to obtain a common mapping of information.

2 Indicator assessments

For environmental assessments, indicators are a well-established method for applying a metric to the status and trends of specific pressures and states. This approach, in one form or another, has been used in the RSCs and at the European scale for the last two assessment rounds. The MSFD criteria also correlate to an indicator-type approach, and this is recognised by the RSCs in their planning for their next regional assessments, the so-called 'roof reports'. The accompanying document DIKE_11-2015-03 (indicator overview section) provides a summary of the status of the availability and planned use of these indicators across the entire MSFD area.
The breadth of subject matter of these indicators has already led to the formulation of agreed structures for the information that forms part of the indicator; this is equally true for the EEA, OSPAR and HELCOM. Information on developments within the Mediterranean and Black Sea is not yet known. Each of these organisations has elaborated templates of information elements; Table 1 summarizes their approach and status.

Table 1 Overview of existing indicator structures

| Organization | Development status | Presentation format | Structure |
|---|---|---|---|
| EEA | Currently used for core set indicators (CSI) | Online | Follows a strict meta-data template adapted from the Euro SDMX Metadata Structure (ESMS) |
| HELCOM | Currently used for some existing assessments (e.g. Eutrophication) and available for use for roof report | Online | Follows a document-based structure based on an agreed HELCOM set of information |
| OSPAR | In draft and awaiting contracting party agreement. Available for use in roof report | Planned to be online | Follows a document and meta-data-based structure, developed within OSPAR |
| UNEP/MAP | Not known | Not known | |
| BSC | Not known | Not known | |

3 Common elements to the indicators

Building on experience from the MSFD initial assessment reporting, and with a clear aim to reduce the overall burden of sharing information between MS, RSCs and the Commission/EEA, a structured and explicit approach is required to make information within RSC indicator structures available to, and usable by, the Commission/EEA in an overall European marine assessment. 'Explicit' here means providing clear references and information placeholders for key parts of an indicator structure, so that information and results are not buried within other elements and are clearly marked. Those unfamiliar with the regional setup can then easily identify the information needed to understand and correctly interpret the conditions for a particular indicator assessment.
Based on an analysis of the indicator specifications from OSPAR, HELCOM and EEA it has been possible to build a common categorization of the type of information that is used in the indicators (see Table 2). These categories represent a de facto understanding of what key pieces of information are needed to present a robust indicator assessment.

Table 2 Common categorization of indicator elements

| Category | Description |
|---|---|
| Access and use | Explicit information on access rights, usage rights related to the data products, indicator publication etc. i.e. data policy, copyright restrictions etc. |
| Assessment findings | Key messages, assessment results, trend analysis and conclusions presented primarily as text |
| Assessment methods | Methodologies, aggregation methods, indicator specifications, references to other relevant methods |
| Assessment purpose | Purpose of assessment, rationale of approach, targets and policy context |
| Contact and ownership | Contact details for assessment indicator, including authorship and organisational contact points |
| Data inputs and outputs | Data sources, assessment datasets, assessment results, snapshots etc. |
| Geographical scope | Assessment units, other geographical information, countries |
| Labelling and classification | Identification and classification systems, such as INSPIRE, DPSIR, MSFD criteria |
| Quality aspects | Explicit information content on quality of assessment, including data and methods. i.e. uncertainty, gaps in coverage etc. |
| Temporal scope | Time range of assessment, usually expressed as a year range |
| Version control | Publishing dates, references to previous indicator versions, URI's etc. |

Table 3 summarizes for each indicator structure the application of a common categorisation. The numbers in the matrix refer to the number of information fields from the indicator that fall within the category.
While the numbers do not measure the quality or completeness of information in each category, they indicate where emphasis is placed on explicit information content and where more elaboration could be needed. The colour coding is an aid to show where there are no relevant information fields (pink), one occurrence of a field (yellow) and more than one occurrence within a category (green).

Table 3 Categorization of the 3 indicator types according to information fields used (count)

| Category | EEA fields | HELCOM fields | OSPAR fields |
|---|---|---|---|
| Access and use | 0 | 0 | 1 |
| Assessment findings | 4 | 3 | 8 |
| Assessment methods | 5 | 7 | 1 |
| Assessment purpose | 6 | 7 | 4 |
| Contact and ownership | 2 | 2 | 2 |
| Data inputs and outputs | 8 | 3 | 2 |
| Geographical scope | 1 | 1 | 8 |
| Labelling and classification | 7 | 1 | 10 |
| Quality aspects | 3 | 6 | 1 |
| Temporal scope | 2 | 0 | 2 |
| Version control | 6 | 1 | 3 |
| Grand Total | 44 | 31 | 42 |

Each indicator structure is analysed in greater detail in section 4.

4 Indicator assessment structures described

4.1 EEA Core indicator [1]

The core indicators are presented as part of the 'Data and Maps' services on the EEA webpage. The format relies on a mixture of text, tabular information and maps to present the indicator. The structure was not developed specifically for the MSFD, nor for marine assessments. The content is well structured, partly because the elements are underpinned by and described within an XML specification standard, which also means that the indicator, or parts of it, can be output in different formats and configurations.

Figure 2 Screenshot of indicator presentation on EEA webpage

[1] An example can be found here http://www.eea.europa.eu/data-and-maps/indicators/chlorophyll-intransitional-coastal-and-2/assessment

Table 4 summarizes by information category how the EEA indicator is structured.
While the absolute number of fields in a category does not imply that the structure is weak or strong in that category, it does show where explicit references and placeholders have been thought useful or necessary.

Table 4 EEA Indicator information elements

| Category | Number of fields |
|---|---|
| Assessment Findings | 4 |
| Assessment methods | 5 |
| Assessment Purpose | 6 |
| Contact and Ownership | 2 |
| Data inputs and outputs | 8 |
| Geographical scope | 1 |
| Labelling and Classification | 7 |
| Quality Aspects | 3 |
| Temporal scope | 2 |
| Version control | 6 |
| Grand Total | 44 |

Notable features
- EEA makes an explicit reference to the indicator units, e.g. concentration expressed as microgramme per litre; this information would be very important for comparisons when looking across a range of indicators from different sources/regions.
- The indicator approach is well developed in terms of a technical platform, i.e. the underlying XML schema, and it is evident that the information fields have been refined over a number of iterations to provide the most relevant set of information to the widest audience.
- The labelling, classification and versioning fields are both numerous and well utilised; this reflects the wide range of indicator-type products available via the EEA.

Possible enhancements for MSFD
- There is no specific reference to geographical assessment units. However, the map products do depict the results by MSFD sub-regions, and the metadata structure has a field for 'contributing countries'.
- There is no explicit reference to an 'assessment dataset', which both OSPAR and HELCOM refer to; the EEA structure refers to the overall underlying dataset.
- With the exception of the DPSIR label type, there are no specific labels that link the indicator to a specific MSFD use, e.g. the MSFD criterion IDs.

4.2 HELCOM Core indicator [2]

The core indicators are presented thematically as part of the 'Baltic Sea Trends' section on the HELCOM webpage.
The format relies on a mixture of short texts, tabular information and dynamic/static maps to present the indicator. The recently updated structure, which is similar but not identical to the online version, has been developed specifically for the MSFD.

[2] An example can be found here http://helcom.fi/baltic-sea-trends/eutrophication/indicators/chlorophyll-a/

Figure 3 Screenshot of presentation of indicator on HELCOM webpage

Table 5 summarizes by information category how the HELCOM indicator is structured. While the absolute number of fields in a category does not imply that the structure is weak or strong in that category, it does show where explicit references and placeholders have been thought useful or necessary.

Table 5 HELCOM Indicator information elements

| Category | Number of fields |
|---|---|
| Assessment Findings | 3 |
| Assessment methods | 7 |
| Assessment Purpose | 7 |
| Contact and Ownership | 2 |
| Data inputs and outputs | 3 |
| Geographical scope | 1 |
| Labelling and Classification | 1 |
| Quality Aspects | 6 |
| Version control | 1 |
| Grand Total | 31 |

Notable features
- The indicator makes explicit references to both an assessment dataset and assessment data sources. For transparency and reproducibility it is preferable to have both of these pieces of information, as referring only to the overall data sources can make it more challenging to reproduce the exact dataset that was used in an assessment.
- The indicator structure has extensive and explicit assessment methods and quality aspects components. These make it easier both to understand any possible weaknesses and limitations of the indicator, and to compare it against other regional indicators.
- The HELCOM indicator structure makes explicit references to MSFD GES criteria.

Possible enhancements for MSFD
- There is no explicit placeholder for temporal scope, i.e. the year range of the assessment.
- There is no direct reference to which countries the indicator assessment applies to (i.e. a list of countries), although this can be inferred from the map of assessment units/data results.
- Although an authorship citation is provided, there is no explicit reference to the access and use rights to the assessment dataset, or map products.

4.3 OSPAR Common Indicator [3]

The common indicators structure is yet to be adopted by OSPAR, and the OSPAR website and information system (ODIMS) are currently under development to accommodate this feature. Based on the draft proposal, the indicators will be presented on the OSPAR website as part of the assessment and linked to ODIMS. The format relies on a mixture of text (some of which is tailored specifically for web presentation), tabular information and maps to present the indicator. The structure was not developed specifically for the MSFD but does address it. The content is well structured, and reference is made to known meta-data terminology such as INSPIRE keywords. It is anticipated that the structure presented in this report will be modified by OSPAR, partly to address their own internal review of information, but also in response to feedback from this analysis.

Table 6 summarizes by information category how the OSPAR indicator is structured. While the absolute number of fields in a category does not imply that the structure is weak or strong in that category, it does show where explicit references and placeholders have been thought useful or necessary.
[3] No online representation is available yet; the information is built on a document to the OSPAR Coordination Group (CoG), 6-7 May 2015, "Conclusions of ICG MAQ(1), (2) 2015 and intersessional work", document reference 0306.

Table 6 OSPAR Indicator information elements

| Category | Number of fields |
|---|---|
| Access and Use | 1 |
| Assessment Findings | 8 |
| Assessment methods | 1 |
| Assessment Purpose | 4 |
| Contact and Ownership | 2 |
| Data inputs and outputs | 2 |
| Geographical scope | 8 |
| Labelling and Classification | 10 |
| Temporal scope | 2 |
| Version control | 3 |
| Quality Aspects | 1 |
| Grand Total | 42 |

Notable features
- The OSPAR structure explicitly states data and indicator access and use rights. This is an important pre-condition for a common sharing platform, as there is explicit agreement on what can be used and on any limitations on use.
- Although the terminology differs, OSPAR makes an explicit reference to both an assessment dataset (a snapshot) and assessment data sources.
- The indicator structure includes specific labelling for MSFD criteria.

Possible enhancements for MSFD
- A clearer reference to the data product relating to an assessment result would be useful.
- The field 'linkage' would benefit from a more structured approach. As a collection of links, most of which will be intrinsic to interpreting the assessment, it may be too ambiguous and lead to information not being translated correctly into a common set of information fields.
- The structure would be improved by more explicit references to quality aspects of the assessment, monitoring and data aggregation methods.

5 A common indicator schema

Based on the information presented for the three indicator assessment structures examined, a first draft of a common schema is presented in Table 7.
As guidance for discussion and agreement in WG DIKE, an indication is given as to whether each field should be a required or an optional element, in order to ensure an adequate provision of information to the MSFD assessment and WISE-Marine.

Table 7 MSFD indicator schema draft outline

x = more than one field present in existing indicator structure
o = one field present in existing indicator
n = no explicit fields in existing indicator

| Category | Field | Description | Content type | EEA | HELCOM | OSPAR | Recommendation |
|---|---|---|---|---|---|---|---|
| Access and Use | | Explicit information on access rights, usage rights related to the data products, indicator publication etc. i.e. data policy, copyright restrictions etc. | | n | n | o | |
| | Conditions applying to access and use | i.e. Copyright, data policy | text or URL | | | | Required |
| Assessment Findings | | Key messages, assessment results (text and graphic form), trend analysis and conclusions presented primarily as text | | x | x | x | |
| | Key assessment | Longer description of assessment results by assessment units | text | | | | Required |
| | Key messages | Short descriptions of trends | text | | | | Required |
| | Results and Status | Textual description of assessment results, could include graphics | text and figures | | | | Required |
| | Trend | Textual description of assessment trend, could include graphics | text and figures | | | | Optional |
| Assessment methods | | Methodologies, aggregation methods, indicator specifications, references to other relevant methods | | x | x | o | |
| | Indicator Definition | Short description of indicator aimed at general audience | text | | | | Required |
| | Methodology for indicator calculation | Text and tabular information on the process of aggregation and selection etc | text | | | | Required |
| | Methodology for monitoring | Short textual description of monitoring requirements/method | text | | | | Optional |
| | Indicator units | Units used for indicator | text | | | | Required |
| | GES - concept and target setting method | Text describing concept used and target method | text | | | | Optional |
| Assessment Purpose | | Purpose of assessment, rationale of approach, targets and policy context | | x | x | x | |
| | Indicator purpose | Justification for indicator selection and relevance | text | | | | Required |
| | Policy relevance | Text relating indicator to policy | text | | | | Optional |
| | Relevant publications (policy, scientific etc) | Citable URLs to policy documents related to indicator | text | | | | Optional |
| | Policy Targets | Description of policy target | text | | | | Optional |
| Contact and Ownership | | Contact details for assessment indicator, including authorship and organisational contact points | | x | x | x | |
| | Authors | List of authors | text | | | | Optional |
| | Citation | Full citation | text | | | | Required |
| | Point of contact | Organisational contact | text | | | | Required |
| Data inputs and outputs | | Data sources, assessment datasets, assessment results (tabular and dataset), snapshots etc. | | x | x | x | |
| | Data sources | Underlying datasets | text and/or URL | | | | Required |
| | Assessment dataset | Snapshot dataset that was derived from underlying data | URL | | | | Required |
| | Assessment result | Summary results dataset/table/figure | File or web service | | | | Required |
| | Assessment result - map | GIS version of assessment result i.e. Shape file or WFS | File or web service | | | | Optional |
| Geographical scope | | Assessment units, other geographical information, countries | | o | o | x | |
| | Assessment unit | Nested assessment unit (if available) | text | | | | Optional |
| | Countries | Countries that the indicator covers | text | | | | Required |
| | Other geographical unit | Alternate source of geographical reference for indicator i.e. ICES areas | text or URL | | | | Optional |
| Labelling and Classification | | Identification and classification systems, such as INSPIRE, DPSIR, MSFD criteria | | x | o | x | |
| | DPSIR | Assessment framework linkage | text | | | | Optional |
| | MSFD criteria | Criteria coding as listed in Annex III tables 1 and 2 | text | | | | Required |
| | Indicator title | Full title of indicator as published | text | | | | Required |
| | INSPIRE topics | Keyword topics | text | | | | Required |
| Quality Aspects | | Explicit information content on quality of assessment, including data and methods. i.e. Uncertainty, gaps in coverage etc. | | x | x | o | |
| | Data confidence | Adequateness of spatial and temporal coverage, quality of data collection etc. | text | | | | Required |
| | Indicator methodology confidence | Knowledge gaps and uncertainty in methods/knowledge | text | | | | Required |
| | GES - confidence | Confidence of target, descriptive text | text | | | | Optional |
| Temporal scope | | Time range of assessment, usually expressed as a year range | | x | n | x | |
| | Temporal Coverage | Assessment period expressed as year start - year end | date range | | | | Required |
| Version control | | Publishing dates, references to previous indicator versions, URI's etc. | | x | o | x | |
| | Last modified date | Date of last modification | date time | | | | Optional |
| | Published date | Publish date of indicator | date time | | | | Required |
| | Unique reference | Citable reference unique to resource i.e. URI, DOI | text or URL | | | | Optional |
| | Version linkage | Link to other versions of assessment | URL | | | | Optional |

Sum Required fields: 21
Sum Optional fields: 15

At present the common fields are not mapped directly to existing information fields in the OSPAR, HELCOM and EEA structures; this needs to be completed together with these organisations.
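
The field-level mapping described here could take a form like the following minimal Python sketch. The source field names are taken from Annexes 1 to 3 and the target names from Table 7, but the pairings themselves are illustrative assumptions only, since no mapping has yet been agreed; `FIELD_MAP` and `to_common` are hypothetical names.

```python
from collections import defaultdict

# Hypothetical mapping: (organisation, source field) -> common schema field.
# The pairings are illustrative assumptions, not an agreed mapping.
FIELD_MAP = {
    ("EEA", "Temporal Coverage"): "Temporal Coverage",
    ("EEA", "Units"): "Indicator units",
    ("HELCOM", "Cite this indicator"): "Citation",
    ("HELCOM", "Data source: assessment dataset"): "Assessment dataset",
    ("OSPAR", "Data Snapshot"): "Assessment dataset",
    ("OSPAR", "Start date"): "Temporal Coverage",
    ("OSPAR", "End date"): "Temporal Coverage",
}


def to_common(organisation, record):
    """Translate one organisation's indicator record into common fields.

    Several source fields may feed one common field, so values are
    collected in lists. Source fields without a mapping are returned
    separately so that nothing is silently dropped.
    """
    common = defaultdict(list)
    unmapped = {}
    for field, value in record.items():
        target = FIELD_MAP.get((organisation, field))
        if target is None:
            unmapped[field] = value
        else:
            common[target].append(value)
    return dict(common), unmapped


# Placeholder record; the values are invented for illustration.
ospar_record = {"Start date": "2009", "End date": "2014", "Linkage": "see guideline"}
common, unmapped = to_common("OSPAR", ospar_record)
print(common)    # {'Temporal Coverage': ['2009', '2014']}
print(unmapped)  # {'Linkage': 'see guideline'}
```

Returning the unmapped fields alongside the translated ones makes visible which parts of an existing structure have no counterpart yet in the common schema.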
WG DIKE would need to validate this and decide on whether each suggested category/field is required/optional for a common structure. This could be carried out by written comments to the table, or through TG DATA if necessary. This mapping would then be used as the basis for translating between the RSC/Member state structures and the common schema for reporting. This would mean that the existing indicator structures would be directly related to the MSFD common approach without a major restructure of the existing indicator structures.

6 Annex 1: EEA Core indicator specification

| Category | Field | Usage |
|---|---|---|
| Assessment Findings | Key Assessment | longer description of assessment results by regional sea |
| | Key messages | Short descriptions of trends |
| | Results Data product Chart: Title | Title of Chart |
| | Results Data product Map: Title | Title of map |
| Assessment methods | Indicator Definition | short description of indicator |
| | Methodology for gap filling | Text describing the method for filling missing values in dataset |
| | Methodology for indicator calculation | Text and tabular information on the process of aggregation and selection etc |
| | Methodology references | Citable URLs to regional reports and scientific papers on methodology |
| | Units | units used for indicator |
| Assessment Purpose | Justification for indicator selection | Scientific justification of use of indicator |
| | Key policy question | what the indicator is addressing from a management perspective |
| | Policy context | background text on policy setting |
| | Policy documents | Citable URLs to policy documents related to indicator |
| | Policy Targets | Description of policy target |
| | Scientific references | Citable URLs to various types of regional reports |
| Contact and Ownership | EEA Contact Info | EEA named resource |
| | Ownership | Organisational owner |
| Data inputs and outputs | Data sources | Citable URL to underlying dataset for overall assessment |
| | Results Data product Chart: Data source | Citation reference to dataset |
| | Results Data product Chart: Image | Image (interactive in this case) |
| | Results Data product Chart: Links | Further functions etc |
| | Results Data product Chart: Table | table of trends |
| | Results Data product Map: Data source | Citation reference to dataset |
| | Results Data product Map: Downloads | Further images, information for map indicator product |
| | Results Data product Map: Image | Image (map and legend) of indicator |
| Geographical scope | Geographic Coverage | Countries covered by the indicator |
| Labelling and Classification | DPSIR | Category of DPSIR type |
| | Indicator Codes | EEA coding of indicators |
| | Indicator specification | URL to full indicator template |
| | Indicator title | Short descriptive title of indicator |
| | Tags | more detailed keywords for filtering/discovery |
| | Topics | filtering/discovery of key topic areas |
| | Typology | Nature of content type i.e. descriptive indicator |
| Quality Aspects | Datasets uncertainty | short descriptive text |
| | Methodology uncertainty | short descriptive text |
| | Rationale uncertainty | short descriptive text |
| Temporal scope | Frequency of updates | Indication of how often assessment indicator is run |
| | Temporal Coverage | Year range |
| Version control | Create date | Creation date of instance of indicator |
| | Dates | Aggregation of Create, Publish and Last modified date |
| | Last modified date | Last modification to indicator instance |
| | Published date | Publication date |
| | URI latest version | Link to latest version of assessment |
| | URI version instance | Link to this version of assessment |

7 Annex 2: HELCOM Core indicator specification

| Category | Field | Usage |
|---|---|---|
| Assessment Findings | Key message | Short descriptions of trends |
| | Status | Textual description of assessment results including graphics and tables |
| | trend | Textual description of long term trend including graphics |
| Assessment methods | Assessment Protocol | Text and tabular information on the process of aggregation and selection etc including assessment units |
| | Data description | short textual description on selection of data |
| | Good Environmental State concept | trend or target |
| | Good Environmental State target setting method | data or model |
| | Monitoring methodology | short textual description of monitoring requirements/method |
| | Current monitoring | stations on map, which countries are monitoring |
| | Arrangements for updating indicator | short text on indicator arrangements (no dates) |
| Assessment Purpose | Anthropogenic Pressures | Text description linking indicator to DPSIR pressure element |
| | Relevance of the core indicator | short justification of use of indicator |
| | Policy relevance of the core indicator | summary background text on policy setting (tabulated) |
| | Policy relevance | more detailed information on legislation background |
| | the role of XX in the ecosystem | Scientific relevance of the indicator |
| | Additional relevant publications | (blank) |
| | Publications used in indicator | Links to various related reports (assessment, targets etc) |
| Contact and Ownership | Authors | Textual citation of all authors of indicator |
| | Cite this indicator | Textual citation of indicator, including URL reference |
| Data inputs and outputs | Map (Viewer): Results product | GIS application |
| | Data source: assessment dataset | usually excel snapshot |
| | Data source: underlying data | Text description and URL's describing underlying data sources |
| Labelling and Classification | Full title of Indicator | Short descriptive title of indicator |
| Quality Aspects | Confidence of indicator status | spatial/temporal coverage using high/moderate/low |
| | Good Environmental State confidence of target | high/moderate/low |
| | Description of optimal monitoring | short description of deficiencies in monitoring |
| | Results and confidence | text and maps in detail for expert audience |
| | Confidence | high/moderate/low |
| | data quality assurance routines | (blank) |
| Version control | Archive | Link to previous versions of assessment |
| Geographical scope | Map (image): Results product - including assessment units | Image (map and legend) of indicator |

8 Annex 3: OSPAR Common indicator specification (DRAFT to be adopted)

| Category | Field | Description |
|---|---|---|
| Access and Use | Conditions applying to access and use | access rights and usage |
| Assessment Findings | Conclusion (brief) | Analysis of trend results and conclusion |
| | Conclusion (extended) - only for online version | (blank) |
| | Figures (other) | tabular list of figures |
| | Key message | short textual summary of main trends |
| | Resource abstract | textual summary (abstract) |
| | Results | Description of indicator results |
| | Results (extended) - only for online version | (blank) |
| | Results (figures) | tabular list of figures |
| Assessment methods | Linkage | multiple linkages to guidelines, decisions, methods etc |
| Assessment Purpose | Background (brief) | combination of relevance of indicator and relation to pressures |
| | Background (extended) - only for online version | (blank) |
| | Background (figures) | tabular list of figures |
| | Purpose | Relevance text |
| Contact and Ownership | Email | (blank) |
| | Point of contact | Lead organisation AND/OR individual |
| Data inputs and outputs | Data Snapshot | assessment dataset |
| | Data Source | underlying data sources |
| Geographical scope | Contributing countries | ISO country list of assessment countries |
| | Countries (2) | contributing countries |
| | E Lon | Assessment bounding box |
| | Indirect spatial reference | Assessment units |
| | N Lat | Assessment bounding box |
| | S Lat | Assessment bounding box |
| | Subtitle 3 | Textual and graphic representation of assessment area |
| | W Lon | Assessment bounding box |
| Labelling and Classification | Assessment type | OSPAR orientated list |
| | Component | related to MSFD descriptors OR thematic assessment |
| | Sheet reference | identification ID |
| | Subtitle 1 | detailed title |
| | Subtitle 2 | MSFD title |
| | Thematic Strategies | OSPAR theme |
| | Title | Indicator assessment title |
| | Topic category | INSPIRE related topics |
| Temporal scope | End date | Assessment temporal range (end date) |
| | Start date | Assessment temporal range (start date) |
| Version control | Date of publication | (blank) |
| | Lineage | previous assessment version |
| | Metadata date | Date of creation of metadata record |
| Quality Aspects | Knowledge gaps - only for online version | quality assessment / gaps in knowledge |

9 Annex 4: Analysis and mapping in detail (Excel workbook)

Microsoft Excel Worksheet
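
As an illustration of how the required/optional split in the draft schema of Table 7 might be applied in practice, the sketch below checks an indicator record for missing required fields. The field names are the 21 fields read as "Required" in Table 7; `REQUIRED_FIELDS`, `missing_required` and the sample record are hypothetical and not part of any agreed specification.

```python
# Required fields per category, as read from the draft in Table 7
# (21 in total). The split is a proposal still to be agreed by WG DIKE.
REQUIRED_FIELDS = {
    "Access and Use": ["Conditions applying to access and use"],
    "Assessment Findings": ["Key assessment", "Key messages", "Results and Status"],
    "Assessment methods": [
        "Indicator Definition",
        "Methodology for indicator calculation",
        "Indicator units",
    ],
    "Assessment Purpose": ["Indicator purpose"],
    "Contact and Ownership": ["Citation", "Point of contact"],
    "Data inputs and outputs": ["Data sources", "Assessment dataset", "Assessment result"],
    "Geographical scope": ["Countries"],
    "Labelling and Classification": ["MSFD criteria", "Indicator title", "INSPIRE topics"],
    "Quality Aspects": ["Data confidence", "Indicator methodology confidence"],
    "Temporal scope": ["Temporal Coverage"],
    "Version control": ["Published date"],
}


def missing_required(record):
    """Return {category: [missing fields]} for a record keyed by field name.

    A field counts as missing when it is absent or holds an empty value.
    """
    gaps = {}
    for category, fields in REQUIRED_FIELDS.items():
        absent = [name for name in fields if not record.get(name)]
        if absent:
            gaps[category] = absent
    return gaps


# Hypothetical, partially filled record; all values are placeholders.
record = {
    "Indicator title": "Example indicator",
    "Countries": "DK, SE",
    "Published date": "2015-06-08",
}
gaps = missing_required(record)
print(gaps["Temporal scope"])  # ['Temporal Coverage']
```

A check of this kind would flag, for example, a structure that has no explicit placeholder for the year range of the assessment.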
