Parallelization of Binary Search Trees

1 Problem Description

In this assignment, you will parallelize primitives that occur while inserting and deleting

numbers in a binary search tree (called BST in the rest of this document). Further, you will

learn ways in which you can avoid deadlocks. Please refer to Chapter 12, Binary Search

Trees, in the book “Introduction to Algorithms” by Cormen, Leiserson, Rivest, and Stein.

The basic code is given in the file btree.c. You will need one of the two header files

x86_funcs.h or aix_funcs.h depending on which machine you use.

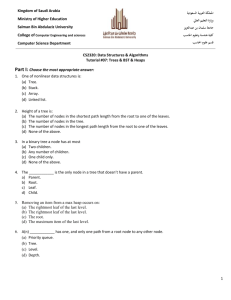

Fig. 1: Example of a binary search tree.

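In a BST, each node has a maximum of two children, one left and one right. If y and z are

the left and right children of a node x, then the following property always holds:

Value (y) < Value (x) < Value (z)

More generally, every value in the left subtree of x is smaller than Value (x), and every value in the right subtree is larger.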

This property is fundamental to a BST. In our case, each node holds an integer value.
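
The ordering property can be checked mechanically, which is handy when you argue that your parallel version is correct. The following is a minimal sketch, not part of the provided code: the node layout `bst_node` and the function names are assumptions made for illustration, and the actual node_t may differ. It checks the property over whole subtrees, not just between a node and its direct children.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>

/* Hypothetical node layout; the node_t in the provided code may differ. */
typedef struct bst_node {
    int value;
    struct bst_node *left;
    struct bst_node *right;
} bst_node;

/* Returns 1 if every value in the subtree lies strictly between lo and hi.
   The bounds are long long so that INT_MIN and INT_MAX are legal values. */
static int in_range(const bst_node *n, long long lo, long long hi)
{
    assert(lo < hi);
    if (n == NULL)
        return 1;
    if (n->value <= lo || n->value >= hi)
        return 0;
    return in_range(n->left, lo, n->value) &&
           in_range(n->right, n->value, hi);
}

/* Returns 1 if the tree rooted at root satisfies the BST property. */
int is_bst(const bst_node *root)
{
    return in_range(root, (long long)INT_MIN - 1, (long long)INT_MAX + 1);
}
```

Calling a check like this after the insertion loop and again after the deletion loop is a cheap way to catch a broken tree early.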

Function node_insert() takes a node whose value is already set and inserts it into the tree.

If the value is already present in the tree, the tree is left unchanged. Function

node_delete() takes an integer as its argument, searches for it in the tree, and, if found, deletes

the node containing the value. Initially, the main() function allocates an array of nodes using

the create_node_array() function. We also create two other arrays. We pre-generate the

numbers to be inserted into the tree or deleted from the tree by calling the rand()

function for each element of these arrays. These operations are done outside the

parallel loop because neither malloc() nor rand() is parallel. Each iteration of the

insertion loop takes a number from data_array_1, stores it in the next available node from

array_1, and inserts the node into the tree by calling node_insert(). Similarly, each

iteration of the deletion loop takes a number from data_array_2 and calls node_delete(),

which searches the tree for a node containing the number and deletes it if found.

Note that the first for loop only does insertions and the second for loop does only

deletions. The two for loops execute one after the other. However, each for loop is parallelized

using OpenMP directives.

Further note that sequential execution and parallel execution may lead to different trees at

the end of the execution. This is not a correctness problem as long as serializability is

maintained: “a parallel execution of a group of operations or primitives is serializable

if there is some sequential execution of the operations or primitives that produces

an identical result”. Because the tasks modify the tree, the provided code shows a correct (but

inefficient) solution that uses an OpenMP coarse-grain lock enclosing the entire body

of the insertion and deletion functions. This is similar to the coarse-grain locking approach

discussed in Chapter 5, Section 5.2.1 of the textbook.
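
For orientation, the coarse-grain idea can be sketched as follows. This is an illustrative sketch under assumptions, not the provided code: the node layout `cg_node`, the global `cg_root`, and all function names are hypothetical. The whole insertion body runs inside one named OpenMP critical section, so the result is correct but the insertions themselves execute one at a time.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical node layout and root pointer, for illustration only. */
typedef struct cg_node {
    int value;
    struct cg_node *left, *right;
} cg_node;

cg_node *cg_root = NULL;

/* Plain sequential insert; a duplicate value leaves the tree unchanged. */
static void cg_insert_unlocked(cg_node *n)
{
    cg_node **slot = &cg_root;
    while (*slot != NULL) {
        if (n->value == (*slot)->value)
            return;
        slot = (n->value < (*slot)->value) ? &(*slot)->left : &(*slot)->right;
    }
    *slot = n;
}

/* Coarse-grain version: the entire insertion runs under one lock. */
void cg_insert(cg_node *n)
{
    assert(n != NULL);
    #pragma omp critical(cg_tree_lock)
    cg_insert_unlocked(n);
}

/* Parallel insertion loop over pre-generated data. */
void cg_insert_all(cg_node *nodes, const int *data, int count)
{
    #pragma omp parallel for
    for (int i = 0; i < count; i++) {
        nodes[i].value = data[i];
        nodes[i].left = nodes[i].right = NULL;
        cg_insert(&nodes[i]);
    }
}

/* Counts the nodes reachable from n. */
int cg_count(const cg_node *n)
{
    return n ? 1 + cg_count(n->left) + cg_count(n->right) : 0;
}
```

Whatever order the threads reach the critical section in, the final tree equals the tree produced by some sequential order of the same insertions, which is exactly the serializability requirement above.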

2 Assignment

2.1. Global Lock Approach. You will be provided with sequential code for a binary

search tree, called bst.c. Your first assignment is to implement a global lock

approach (example in Section 5.2.2), in which an insertion/deletion operation

is broken down into two logical steps: (1) the traversal to find where

to insert a node or where the node to be deleted is located, and (2) modification

of the binary search tree. The first (traversal) step can be performed in parallel

in this approach. Only when a thread needs to modify the tree (after completing

its traversal) does it enter a critical section, modify the tree, and exit

the critical section. Of course, prior to modifying the tree, the thread must

check that the parts of the tree structure that it relies on for correctness,

and that it read prior to entering the critical section, have not been changed by

other threads between the time it read them and the time it successfully

entered the critical section. The correctness of your implementation greatly

depends on how you perform the check.
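
To make the two steps concrete, here is a minimal sketch of the pattern for insertion only. It is not a complete solution and rests on stated assumptions: the node layout `gl_node`, the global `gl_root`, and the function names are hypothetical, and no thread deletes nodes while insertions run (which matches the insertion loop). Under that assumption, the only thing the traversal relies on is that the child slot it found is still empty, so that is what is re-checked inside the critical section. Deletion needs a stronger check.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical node layout and root pointer, for illustration only. */
typedef struct gl_node {
    int value;
    struct gl_node *left, *right;
} gl_node;

gl_node *gl_root = NULL;

/* Atomic read of a child pointer, used by the unlocked traversal. */
static gl_node *gl_load(gl_node **slot)
{
    gl_node *p;
    #pragma omp atomic read seq_cst
    p = *slot;
    return p;
}

/* Returns 1 if n was inserted, 0 if its value was already present. */
int gl_insert(gl_node *n)
{
    assert(n != NULL);
    gl_node **slot = &gl_root;
    for (;;) {
        /* Step 1: traverse without holding the lock. */
        gl_node *cur;
        while ((cur = gl_load(slot)) != NULL) {
            if (n->value == cur->value)
                return 0;
            slot = (n->value < cur->value) ? &cur->left : &cur->right;
        }
        /* Step 2: take the global lock, re-check the slot, then modify. */
        int done = 0;
        #pragma omp critical(gl_tree_lock)
        {
            if (*slot == NULL) {
                #pragma omp atomic write seq_cst
                *slot = n;
                done = 1;
            }
        }
        if (done)
            return 1;
        /* Another thread filled the slot first: resume the traversal there. */
    }
}

/* Parallel insertion loop; returns how many nodes were actually inserted. */
int gl_insert_all(gl_node *nodes, const int *data, int count)
{
    int inserted = 0;
    #pragma omp parallel for reduction(+:inserted)
    for (int i = 0; i < count; i++) {
        nodes[i].value = data[i];
        nodes[i].left = nodes[i].right = NULL;
        inserted += gl_insert(&nodes[i]);
    }
    return inserted;
}
```

When the re-check fails, the sketch does not restart from the root. It continues from the slot it already reached, which is safe only because nodes are never removed during the insertion phase.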

2.2. Fine-Grain Lock Approach. Your second assignment is to implement the

Fine-Grain Lock approach (example in Section 5.2.3). For this, you need

to associate a lock variable with each node. To simplify the problem, do not

use separate read and write locks; use write locks for everything,

including where read locks would suffice. With this fine-grain

locking, different threads can execute completely in parallel as long as they

modify different parts of the tree. Only when two threads conflict on a

node will they execute sequentially. The correctness of your implementation

greatly depends on which nodes you lock for a given operation, the order of

their locking, and how you check for a race in which the tree changed between

the time you read it and the time you entered the critical section. See the end of this

document for some implementation suggestions.

Perform and report the following for each of the two assignments:

Describe how you parallelize the insertion and deletion of nodes, and argue why you

think your implementation is correct.

State how close to optimal you expect the performance to be, in terms of overheads and

concurrency.

Run the program and report the performance results (execution time) that you obtain

by running the program with one thread, two threads, four threads, and eight threads. For each

number of threads, try the following values of NUM_ELEMENTS:

1000, 10000, 100000, and 1000000.

To minimize the effects of other programs running on the computer at the same

time as yours, run 3-4 iterations of each experiment and report the best

time you get.
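
One possible way to collect the best time is sketched below. The helper names `now_seconds`, `best_time`, and `dummy_experiment` are hypothetical and not part of the provided code. The sketch uses omp_get_wtime() when compiled with OpenMP and falls back to the CPU time reported by clock() otherwise.

```c
#include <assert.h>
#include <time.h>

#ifdef _OPENMP
#include <omp.h>
/* Wall-clock seconds from the OpenMP runtime. */
static double now_seconds(void) { return omp_get_wtime(); }
#else
/* Serial fallback: CPU time in seconds. */
static double now_seconds(void) { return (double)clock() / CLOCKS_PER_SEC; }
#endif

/* Runs the experiment `runs` times and returns the smallest elapsed time. */
double best_time(void (*experiment)(void), int runs)
{
    assert(runs > 0);
    double best = -1.0;
    for (int r = 0; r < runs; r++) {
        double start = now_seconds();
        experiment();
        double elapsed = now_seconds() - start;
        if (best < 0.0 || elapsed < best)
            best = elapsed;
    }
    return best;
}

/* Hypothetical stand-in for one full insertion-plus-deletion run. */
int experiment_calls = 0;
void dummy_experiment(void) { experiment_calls++; }
```

Each run should start from an empty tree and the same pre-generated data, otherwise the later runs measure a different workload.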

Your report should contain the following:

o Two figures, one for the global lock approach and the second for the fine-grain

lock approach. NUM_THREADS should vary along the x-axis and the

execution time should vary along the y-axis.

o Each figure consists of four different lines, where each line corresponds to a

different number of elements (as mentioned above). Each line consists of

four points that represent one thread, two threads, four threads, and eight

threads.

o A detailed discussion following the figures that explains the differences

among the four lines and any other observations you can point out, e.g.,

diminishing returns.

o A comparison between the fine-grain lock approach and the global lock

approach in terms of overhead and execution time.

Implementation suggestions

When implementing the fine-grain lock approach, you must be careful about the order in which you lock the various

nodes so as to prevent deadlocks. For example, think of what happens when two threads

try to delete a node and one of its children. It is quite possible (especially with

node_delete()) that thread 1 will want to lock nodes A, B, C, D (in that order) and thread 2

will want to lock nodes B, A, C, D (in that order). If you naively try to lock the nodes in the

order in which your code encounters them, you may run into a deadlock: thread 1 holds A and

waits for B while thread 2 holds B and waits for A. Here are two

suggestions on how to overcome this problem.

Sort the nodes on the basis of the value stored in the nodes.

Sort the nodes on the basis of their addresses.

In either case, you will need to create an array of pointers to the nodes that need to be locked

and sort it. You may find the function qsort(), which can be accessed by

including stdlib.h, useful. qsort() takes a comparison function as its fourth

argument. You may find the following function useful for the purpose.

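/* Orders an array of node_t* by node address (needs stdint.h for uintptr_t). */
int compare (const void* x, const void* y)
{
    /* qsort() passes pointers to the array elements, which are node_t*. */
    uintptr_t xx = (uintptr_t)*(node_t* const*)x;
    uintptr_t yy = (uintptr_t)*(node_t* const*)y;
    int ret_val = 0;

    if (xx > yy)
        ret_val = 1;
    else if (xx < yy)
        ret_val = -1;

    return ret_val;
}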
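
The sorting suggestion can be sketched end to end as follows. This is a sketch under assumptions, not a prescribed design: the node layout `fg_node`, the `NODE_LOCK` macros, and the helpers `lock_nodes()` and `unlock_nodes()` are hypothetical names. It sorts the nodes by address, drops NULL entries and duplicates (locking the same OpenMP lock twice from one thread would deadlock), and then acquires the locks in ascending order. Without OpenMP, the locks degrade to plain flags so that the sketch still compiles.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#ifdef _OPENMP
#include <omp.h>
typedef omp_lock_t node_lock_t;
#define NODE_LOCK_INIT(l) omp_init_lock(l)
#define NODE_LOCK(l)      omp_set_lock(l)
#define NODE_UNLOCK(l)    omp_unset_lock(l)
#else
typedef int node_lock_t;              /* serial fallback: a plain flag */
#define NODE_LOCK_INIT(l) (*(l) = 0)
#define NODE_LOCK(l)      (*(l) = 1)
#define NODE_UNLOCK(l)    (*(l) = 0)
#endif

/* Hypothetical node layout with one lock per node. */
typedef struct fg_node {
    int value;
    struct fg_node *left, *right;
    node_lock_t lock;
} fg_node;

/* qsort() comparator: orders fg_node* elements by address. */
static int by_address(const void *x, const void *y)
{
    uintptr_t a = (uintptr_t)*(fg_node *const *)x;
    uintptr_t b = (uintptr_t)*(fg_node *const *)y;
    return (a > b) - (a < b);
}

/* Sorts the set, removes NULLs and duplicates, and locks the remaining
   nodes in ascending address order. Returns the number of nodes locked. */
size_t lock_nodes(fg_node **set, size_t count)
{
    assert(set != NULL || count == 0);
    size_t kept = 0;
    qsort(set, count, sizeof *set, by_address);
    for (size_t i = 0; i < count; i++) {
        if (set[i] == NULL || (kept > 0 && set[kept - 1] == set[i]))
            continue;
        set[kept++] = set[i];
    }
    for (size_t i = 0; i < kept; i++)
        NODE_LOCK(&set[i]->lock);
    return kept;
}

/* Releases the first `kept` locks in reverse order. */
void unlock_nodes(fg_node **set, size_t kept)
{
    while (kept > 0)
        NODE_UNLOCK(&set[--kept]->lock);
}
```

Because every thread acquires its locks in the same global order, no cycle of waiting threads can form. The nodes were chosen before they were locked, so after lock_nodes() returns a thread still has to re-check that the tree around those nodes has not changed.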

What to hand in:

1- An output file that contains the binary tree printed out using the function

“do_inorder_print()” provided in bst.c.

2- Source code of the global lock approach and the fine-grain lock approach. (Do not print

the source code on paper.)

3- A report file.

4- All files should be put in one zipped folder named groupID_BST.zip.

5- You should submit it electronically (emails are not accepted).