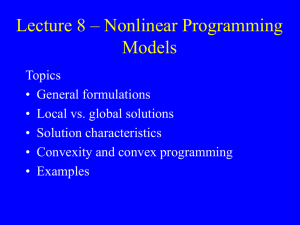

Nonlinear Programming: Concepts & Examples

advertisement

Nonlinear Programming

min (max) f (x)

subject to

(1a)

hi(x) = 0,

i = 1,...,p

(1b)

gi(x) ≤ 0,

i = 1,...,m

(1c)

where

x = (x1, ..., xn)

and

f, h1, ..., hp, g1, ..., gm

are continuous functions

Feasible Solution: Any point x satisfying (1b) – (1c).

Optimal Solution: A feasible –x such that f(–x) ≤ f(x) for all x

feasible.

Box Size Problem

Let x, y and z be respectively the length, width and height

of a box.

Problem: Select the box dimensions to minimize the

surface area subject to a constraint on volume.

minimize f(x,y,z) = 2xy + 2xz + 2yz

subject to xyz = V

x ≥ 0, y ≥ 0, z ≥ 0

Reliability Problem

Let ri = probability that component i will not fail

ci = cost of component i

wi = weight of component i

xi = number of redundant (parallel) components i

Reliability of component i: Ri = 1 – (1 – ri)

(1+xi)

The reliability of a series system is the product of the

component type reliabilities.

Problem: Find the optimal number of redundant

components of each type, subject to constraints on

maximum total cost, C, and weight, W.

n

Maximize

[1 – (1 – ri)

(1+xi)

i=1

subject to

n

c ix i ≤ C

i=1

n

wixi ≤ W

i=1

xi ≥ 0, i = 1,…,n

]

Example 1

min f(x1, x2) = (x1 – 3)2 + (x2 – 2)2

subject to

2

g1(x1, x2) = x1 – x2 – 3 ≤ 0

g2(x1, x2) = x2 – 1 ≤ 0

g3(x1, x2) = x1 ≥ 0

Isovalue Contour: (x1 – 3)2 + (x2 – 2)2 = c

(circle with radius c and center at (3, 2))

Geometric Solution for Example 1

g3

(3, 2)

Optimal

solution

(2, 1)

g2

•

•

Isovalue

contours

x1

g1

Feasible

region

x2

Example 2

min f(x1, x2) = |x1 – 2| + |x2 – 2|

subject to

2

2

h1(x1, x2) = x1 + x2 – 1 = 0

2

g1(x1, x2) = x1 – x2 ≥ 0

4

x2

f= 2

3

(2, 2)

•

2

Optimum

1

–2

•

1

–1

Isovalue

contours

f= 1

2

3

4

–1

h1= 0

–2

g1 •

0

5

x1

Convex Functions

Let x = (x1,...,xn). A function f(x) is convex if and only if

f (x1 + (1 – )x2) ≤ f (x1) + (1 – )f (x2)

for all such that 0 ≤ ≤ 1.

A function f(x) is strictly convex if the inequality is strict

except when = 0 or 1.

A function f (x) is concave if and only if

f (x1 + (1 – )x2) ≥ f (x1) + (1 – )f (x2).

If f (x) is convex then –f (x) is concave.

f(x)

f(x1 ) +(1- )f(x 2)

f( x + (1- )x 2 )

1

x1

x 1+ (1- )x 2

x2

x

Convex Sets

A feasible region, C, is a convex set if any point on

a straight line connecting any two feasible points,

x1, x2 C, is also feasible.

Mathematically, this property is expressed as

x = x1 + (1–)x2 C

for all such that 0 ≤ ≤ 1.

When g(x) is convex, the set

{x Rn : g(x) ≤ b}

defines a convex region.

g(x)

b

0

x1

x2

x

Convex vs. Nonconvex Programming

minimize f(x)

subject to gi(x) ≤ bi,

i = 1,…,m

When f(x) is convex and each gi(x) is convex,

any local optimum is a global optimum.

When either f(x) is nonconvex or any gi(x) is

nonconvex, it is possible that a local optimum

may not be a global optimum.

Most nonlinear programming algorithms are

search techniques that find local optima.

Properties of Linear Programs

(a) The set of feasible solutions {x n : Ax = b, x ≥ 0} is

convex.

(b) The set of all n-tuples (x1,...,xn) which yields a specified

value of the objective function is a hyperplane.

(c) A local extremum is also a global extremum.

(d) If the optimal value of the objective function is finite, at

least one extreme point in the feasible region will be

optimal.

Difficulties with Nonlinearities

When nonlinearities are present in the model, none of the above

properties are likely to hold.

Illustration of Local Optima

max f(x1, x2) = 25(x1 – 2)2 + (x2 – 2)2

subject to

x1 + x2 ≥ 2

–x1 + x2 ≤ 2

x1 + x2 ≤ 6

x1 – 3x2 ≤ 2

x1, x2 ≥ 0

x2

(2, 4)

•

4

f = 100

3

Feasible

region

f= 4

2

•

f = 226

• (5, 1)

1

•

0

1

2

3

4

5

6

x

1

More Local Extrema

Determining Convexity

Single Dimension

• f(x) is convex if and only if

d2f(x)

≥ 0 for all x.

dx2

• A function f(x) is concave if and only if

d2f(x)

≤ 0 for all x.

dx2

Separable Functions

• A function f(x1, x2, . . . , xn) is separable if

f(x1, x2, . . . , xn) = f1(x1) + f2(x2) + • • • + fn(xn)

• The separable function f(x1, x2, . . . , xn) is convex

(concave) if and only if each term is convex (concave).

A linear function is both convex and concave.

If the function f(x1, x2, . . . , xn) has some terms convex

and some terms concave, the function is neither convex or

concave.

Examples

a. f(x) = 4x2 – 20x + 10

b. f(x) = – 4x2 – 20x

c. f(x) = 8x3 +15x2 + 9x + 6

d. f(x) = 10x1 + 5x2 – 3x3 – 4x4

e. f(x) = 10(x1)2 + 5(x2)2 – 3x3 – 4x4

f. f(x) = 10(x1)2 – 5(x2)2 – 3x3 – 4x4

g. f(x) = – 10(x1)2 – 5(x2)2 – 3x3 – 4x4

Multiple Dimensions

To determine convexity in multiple dimensions we define the

following:

• The gradient of f(x), denoted by f(x), is the n-dimensional

column vector formed by the first partial derivatives of f(x)

with respect to each individual element of x.

f(x)

f(x) =

xj

• The Hessian matrix, H(x), associated with f(x) is the n n

symmetric matrix of second partial derivatives of f(x) with

respect to the elements of x.

2f(x)

H(x) =

x

x

i j

Examples

h. f(x)= (x1)2 + (x2)2

i. f(x) = 2(x1)2 + (x2)2 + 3(x3)2 + x1x2 + 2x1x3 + 3x2x3

Quadratic Forms

1

f(x) = k + cTx + 2 xTQx

f(x) = 3x1x2 + (x1)2 + 3(x2)2

Q=H=

2 3

3 6

(When written in polynomial form the element qii is twice

the value of the coefficient of (xi)2, and the element qij and

qji are both equal to the coefficient of xixj.)

j. f(x) = 2(x1)2 + 4(x2)2

k. f(x) = – 2(x1)2 + 4x1x2 – 4(x2)2 + 4x1 + 4x2 + 10

l. f(x) = – 0.5(x1)2 – 2x1x2 – 2(x2)2

Determining Convexity or Concavity from Hessian

Convex

• f(x) is convex (strictly convex) if its associated Hessian

matrix is positive semi-definite (definite) for all x.

• H(x) is positive semi-definite if and only if

xTHx ≥ 0 for all x.

• H(x) is positive definite if and only if

xTHx > 0 for every x ≠ 0.

Concave

• f(x) is concave (strictly concave) if its associated Hessian

matrix is negative semi-definite (definite) for all x.

• H(x) is negative semi-definite if and only if

xTHx ≤ 0 for all x.

• H(x) is negative definite if and only if

xTHx < 0 for every x ≠ 0.

Neither Convex nor Concave

• f(x) is neither convex nor concave if its associated Hessian

matrix, H(x), is indefinite for some point x.

• H(x) is indefinite

if and only if xTHx > 0 for some x,

and (x– )THx– < 0 for some other x– .

Both Convex and Concave

• f(x) is both convex and concave only if it is linear.

Testing for Definiteness with Determinants

The ith leading principal determinant of H is the determinant of

the matrix formed by taking the intersection of the first i rows

and the first i columns. Let Hi be the value of this determinant.

Then

H1 = h11

H2 =

h11 h12

h21 h22

and so on until Hn is defined.

H is positive definite if and only if:

Hi > 0, i = 1,... ,n.

H is negative definite if and only if:

H1 < 0, H2 > 0, H3 < 0, H4 > 0, …

H is indefinite if the leading principal determinants are all

nonzero and neither of the foregoing conditions are true.

Examples

f(x) = (x1)2 + (x2)2

2 0

H(x) = 0 2

f(x) = 3x1x2 + (x1)2 + 3(x2)2

H=

2 3

3 6

f(x) = 2(x1)2 + (x2)2 + 3(x3)2 + x1x2 + 2x1x3 + 3x2x3

4 1 2

H= 1 2 3

2 3 6

f(x) = – 2(x1)2 + 4x1x2 – 4(x2)2 + 4x1 + 4x2 + 10

f(x) = – 0.5(x1)2 – 2x1x2 – 2(x2)2

When does a Constraint Define a Convex Region?

• gi(x) ≤ bi defines a convex region if gi(x) is a convex

function.

• gi(x) ≥ bi defines a convex region if gi(x) is a concave

function.

• gi(x) = bi defines a convex region only if gi(x) is a linear

function.

When all the constraints individually define convex regions,

they collectively define a convex region.

When is a Local Optimum a Global Optimum?

minimize f(x)

maximize f(x)

subject to

subject to

gi(x) {≤, ≥, =} bi,

gi(x) {≤, ≥, =} bi,

i = 1, …,m

i = 1, …,m

When f(x) is convex and

the set of constraints

defines a convex region.

When f(x) is concave and

the set of constraints

defines a convex region.

• Otherwise, there is no guarantee that a specific local optimum

is a global optimum.

Problem Categories

1. Unconstrained Problems (classical optimization):

min{f(x) : x Rn}

2. Nonnegative Variables:

min{f(x) : x ≥ 0}

3. Equality Constraints (classical optimization):

min{f(x) : hi(x) = 0, i = 1, . . . , p}

4. Inequality Constraints:

min{f(x) : hi(x) = 0, i = 1, . . . , p; gi(x) ≤ 0, i = 1, . . . , m}

Optimality Conditions

Unconstrained Problems: min{f(x) : x Rn}

Necessary Conditions: Let f : n 1 be twice continuously

differentiable throughout a neighborhood of x*. If f has a

relative minimum at x*, then it necessarily follows that

(i) f(x*) = 0

(ii) The Hessian F(x*) is positive semidefinite.

Sufficient Condition: The Hessian F(x*) is positive definite.

Example

Find the relative extreme values of

3

2

f(x) = 3x1 + x2 – 9x1 + 4x2

2

Solution: f(x) = [9x1 – 9 2x2 + 4] = [0 0]

implies that x1 = 1 and x2 = – 2.

Checking x = (1, – 2), we have

F(1, – 2) =

18

0

0

2

2

2

positive definite since vTF(1, – 2)v = 18v1 + v2 > 0

when v ≠ 0. Thus (1, – 2) gives a strict relative minimum.

Next consider x = (–1, –2) with Hessian

F(– 1, – 2) =

– 18 0

0 2

2

2

Now we have vTF(– 1, – 2)v = – 18v1 + v2 which may equal 0

when v ≠ 0. Thus the sufficient condition for (– 1, – 2) to be

either a relative minimum or maximum is not satisfied.

Optimality Conditions

Nonnegative Variables: min{f(x) : x ≥ 0}

Necessary Conditions (relative minimum of f(x) at x*):

f(x*)

= 0,

xj

if x*j > 0

f(x*)

≥ 0,

xj

if x*j = 0

Alternatively:

f(x*) ≥ 0, f(x*)x* = 0, x* ≥ 0

Example

2

2

2

Minimize f(x) = 3x1 + x2 + x3 – 2x1x2 – 2x1x3 – 2x1

subject to x1 ≥ 0, x2 ≥ 0, x3 ≥ 0

Solution: Necessary conditions for a relative minimum:

f

= 6x1 – 2x2 – 2x3 – 2

x1

(1)

0 ≤

(2)

0 = x1

(3)

0 ≤

(4)

0 = x2

(5)

0 ≤

(6)

f

0 = x3

= x3(2x3 – 2x1)

x3

(7)

x1 ≥ 0, x2 ≥ 0, x3 ≥ 0

f

= x1(6x1 – 2x2 – 2x3 – 2)

x1

f

= 2x2 – 2x1

x2

f

= x2(2x2 – 2x1)

x2

f

= 2x3 – 2x1

x3

From (4), either x2 = 0 or x1 = x2.

When x2 = 0, (3) and (7) x1 = 0.

From (6), x3 = 0.

But this contradicts (1) so x2 ≠ 0 and x1 = x2.

Example (Continued)

Condition (6) either x3 = 0 or x1 = x3.

If x3 = 0, then (4), (5) and (7) x1 = x2 = x3 = 0.

But this situation has been ruled out.

Thus, x1 = x2 = x3 and from (2) either x1 = 0 or x1 = 1.

Since x1 ≠ 0, the only possible relative minimum of f occurs

when x1 = x2 = x3 = 1.

Must evaluate Hessian at x* = (1, 1, 1):

F(1, 1, 1) =

det(F) = 8, det

6 –2 –2

–2 2 0

–2 0 2

6 –2

–2 2

= 8, det(6) = 6

Determinants of all principal minors are positive definite so f is

strictly convex and has a relative minimum at x*.

Also, convexity implies that f(x*) = 1 is a global minimum.

Optimality Conditions

Equality Constraints: min{f(x) : hi(x) = 0, i = 1, . . . , p}

Jacobian matrix at x*:

h(x*)

=

x

h1(x*)

x

1•

•

•

hp(x*)

x

1

h1(x*)

• • •

xn

hp(x*)

xn

•

•

•

• • •

Lagrangian: L(x, ) f(x) + Th(x),

Necessary Conditions (relative minimum of f at x*):

If f and each component hi of h are twice continuously

differentiable throughout a neighborhood of x*, and if the

Jacobian matrix has full row rank p, then there exists a * p

such that

L(x*, *)

= f(x*) + Th(x*) = 0

x

L(x*, *)

= h(x*) = 0

Example

min (max) f(x1, x2) = 2x1x2

2

2

subject to x1 + x2 = 1

2

2

Solution: Let L(x1, x2, ) = 2x1x2 + (x1 + x2 – 1).

L

= 2x2 + 2x1 = 0

x1

(1)

L

= 2x1 + 2x2 = 0

x2

(2)

L

2

2

= x1 + x2 – 1 = 0

(3)

Three equations in three unknowns:

Solving (1) for we get = – x2/x1 as long as x1 ≠ 0.

2

2

Substituting into (2) gives x1 = x2 .

2

From (3), we get 2x1 = 1.

x1 = 2/2, x2 = 2/2 and = +1 or –1

depending on the values of x1 and x2.

Example (continued)

Four possible solutions for (x1, x2, ):

( 2/2,

2/2, – 1)

(– 2/2, – 2/2, – 1)

( 2/2, – 2/2, 1)

(– 2/2,

2/2, – 1)

By inspection:

(i) minimum value of f = –1 at both

x = (– 2/2, 2/2) and ( 2/2, – 2/2).

(ii) maximum value of f = 1 at both

x = ( 2/2, 2/2) and (– 2/2, – 2/2).

Second Order Optimality Conditions

Tangent Plane at x*: T = {y : h(x*)Ty = 0}

Second Order Necessary Conditions: Suppose that x* is a local

minimum of f(x) subject to h(x) = 0 and the Jacobian has rank p.

Then there exists a vector p such that

f(x*) + Th(x*) = 0

and the Hessian of the Lagrangian

L(x*) F(x*) + TH(x*)

is positive semidefinite on T; that is

yTL(x*)y ≥ 0 for all y T

Second Order Sufficient Conditions (for unique local minimum):

L(x*) is positive definite on T.

Example (continued)

Hessian: L(x*) =

0

2

2 0

2

+

(independent of x*)

0

0 2

Tangent Plane: T = {(y1, y2) : x*1 y1 + x*2y2 = 0}

At either minimizing point, = 1 so L =

T = {(y1, y2) : y1 = y2},

yTLy

=

2

2

2

(y1, y2)

2

2

,

2

2 y1

2

= 8y1 ≥ 0

2 y2

Thus second order necessary and sufficient conditions for a

relative minimum are satisfied.

At either maximizing point, = – 1, L =

–2

2

2

–2 ,

2

T = {(y1, y2) : y1 = –y2}, yTLy = –8y1 ≤ 0

L is negative definite on T relative maximum.

Optimality Conditions

Inequality Constraints:

min{f(x) : hi(x) = 0, i = 1, . . . , p; gi(x) ≤ 0, i = 1, . . . , m}

Regular Point: Let x* be a point satisfying the constraints h(x*)

= 0, g(x*) ≤ 0 and let I be the set of indices i for which gi(x*) =

0. Then x* is said to be a regular point of these constraints if

the gradient vectors hi(x*) (1 ≤ i ≤ p), gi(x*) (i I) are

linearly independent.

Necessary Optimality Conditions (Karush-Kuhn-Tucker

Conditions): Let x* be a relative minimum and suppose that x*

is a regular point for the constraints. Then there exists a vector

p and a vector m such that

f(x*) + Th(x*) + Tg(x*) = 0

Tg(x*) = 0

≥ 0

h(x*) = 0, g(x*) ≤ 0

Second Order Optimality Conditions

Necessary Conditions: Suppose that the functions f, h, g C2

and that x* is a regular point of the constraints. If x* is a

relative minimum, then there exists a vector p and a

vector m, ≥ 0 such that KKT Conditions hold and

L(x*) F(x*) + TH(x*) + TG(x*)

is positive semidefinite on the tangent subspace of the active

constraints at x*.

Sufficient Conditions: Hessian matrix

L(x*) = F(x*) + TH(x*) + TG(x*)

is positive definite on the subspace:

T' = {{y : h(x*)y = 0, gi(x*)y = 0 for all i I'}

where I' = {i : gi(x*) = 0, i > 0}.

Example

2

2

1 2

1

min f = 2x1 + x2 + 5 x3 + x1x3 – x1x2 + x1 – 2 x3

subject to

h1 = x1 + x2

= 3

2

2

g1 = x1 + x2

– x3 ≤ 0

g2 = x1 + x2 + 2x3 ≤ 16

g3 = x1

g4 =

≥ 0

x2

≥ 0

g5 =

x3 ≥ 0

Stationarity Conditions:

4x1 + x3 – x2 + 1

2x2 – x1

2

1

5 x3 + x1 – 2

–

1

3 0

0

–

+

1

1 1

0

0

4 1

0

–

+

2x1

1 2x2

–1

0

5 0

1

=

+

0

0

0

1

2 1

2

Complementarity Conditions:

2

2

1 x1 + x2 – x3 = 0

2 x1 + x2 + 2x3 – 16 = 0

3 x1 = 4 x2 = 5 x3 = 0

i ≥ 0, i = 1, . . . , 5

• The 5 complementarity equalities imply 25 = 32 combinations

may have to be examined (most of these are infeasible).

• Assume that only g2 is active so i = 0 for all i ≠ 2.

h1 = x1 + x2 = 3 means x3 =

13

2.

The third stationarity condition then yields

2 13

5 2

1

+ x1 – 2 + 22 = 0 2 < 0 which is not feasible.

• Now consider the case where only g1 is active.

One possible solution is –x = (1, 2, 5).

5

The third stationarity condition gives 1 = 2, and either the

first or second stationarity conditions gives 1 = –13.

Thus –x with corresponding and satisfies the first order

necessary conditions for a relative minimum.

Check second order conditions –– examine Hessian:

4 –1 1

2 0 0

9 –1 1

5

L(–x) = –1 2 0 + 2 0 2 0 = –1 7 0

1 0 2/5

0 0 0

1 0 2/5

Matrix L(–x) is positive definite on R3 so it must be positive

definite on the required tangent subspace.

Therefore, –x = (1, 2, 5) is a unique local minimum.